Balancing Data Integrity and Motion Artifact Removal in 2025: Strategies for Reliable Clinical Research

Abstract

This article addresses the critical challenge of balancing comprehensive data retention policies with effective motion artifact removal in clinical research and drug development. Written for researchers and scientists, it covers the foundational principles of data governance and the pervasive impact of motion artifacts on EEG, ECG, and MRI data. It gives a methodological overview of state-of-the-art removal techniques, from deep learning models such as Motion-Net and reference-based algorithms such as iCanClean to traditional signal processing. It also provides practical troubleshooting guidance for optimizing data workflows and a comparative analysis of validation frameworks to ensure both data integrity and regulatory compliance. The guide aims to equip professionals with the knowledge to enhance data quality, accelerate research timelines, and maintain audit-ready data pipelines.

The Dual Imperative: Understanding Data Retention Rules and Motion Artifact Sources

Data retention involves creating policies for the persistent management of data and records to meet legal and business archival requirements. These policies determine how long data is kept, the rules for archiving, and the secure means of storage, access, and eventual disposal [1].

In a research context, particularly when balancing data retention with motion artifact removal, you must keep all original, unaltered source data for the entire mandated retention period, even after artifacts have been removed or corrected. Processed datasets must be linked to their raw origins to ensure research integrity and regulatory compliance.
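One way to make the link between processed data and its raw origin verifiable is to store a small provenance record next to each processed file. The sketch below is illustrative only: the function names, the record fields, and the placeholder byte strings are assumptions, not part of any regulation or specific tool. It uses SHA-256 digests from Python's standard library so that any later change to the raw data can be detected.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_of_bytes(data: bytes) -> str:
    """Return the hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def make_provenance_record(raw: bytes, processed: bytes, method: str, params: dict) -> dict:
    """Build a record tying a processed dataset to its unaltered raw source."""
    return {
        "raw_sha256": sha256_of_bytes(raw),
        "processed_sha256": sha256_of_bytes(processed),
        "method": method,            # e.g. the artifact removal technique used
        "params": params,            # settings needed to reproduce the processing
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }


def raw_is_unaltered(raw: bytes, record: dict) -> bool:
    """Check that the retained raw data still matches the recorded digest."""
    return sha256_of_bytes(raw) == record["raw_sha256"]


# Placeholder contents standing in for real files (hypothetical data).
raw_bytes = b"raw EEG samples (placeholder)"
processed_bytes = b"artifact-corrected EEG samples (placeholder)"

record = make_provenance_record(raw_bytes, processed_bytes, "ICA", {"n_components": 20})
print(json.dumps(record, indent=2))
print("raw data unaltered:", raw_is_unaltered(raw_bytes, record))
```

In practice the digests would be computed from the files themselves, and the record would live in a validated system rather than a loose JSON file.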

Understanding MCP in Data Contexts

The term "MCP" can refer to different concepts. In the context of AI and data systems, it stands for the Model Context Protocol, a protocol that allows AI applications to connect to external data sources and tools [2]. From a Microsoft compliance perspective, "MCP" is often used informally to refer to Microsoft Purview Compliance, a suite for data governance and lifecycle management [3].

For scientific data management, this article uses "MCP" in a further sense, a Master Control Program for your data: a central set of controlled procedures that govern how data is created, modified, stored, and deleted throughout its lifecycle to ensure authenticity and integrity.

Core Regulatory Requirements

Your data retention strategy must comply with several overlapping regulations. The table below summarizes the key requirements.

| Regulation | Core Principle | Typical Retention Requirements | Key Considerations for Research Data |
|---|---|---|---|
| GDPR [4] [5] | Storage Limitation: Data kept no longer than necessary. | Period must be justified and proportionate to the purpose. | Raw human subject data must be anonymized or deleted after the purpose expires; requires clear legal basis for processing. |
| HIPAA [6] | Security and Privacy of Protected Health Information (PHI). | Patient authorizations and privacy notices: 6 years (minimum). | Applies to any research involving patient health data; requires strict access controls and audit trails. |
| 21 CFR Part 11 [7] [8] | Electronic records must be trustworthy, reliable, and equivalent to paper. | Follows underlying predicate rules (e.g., GLP, GCP). Often 2+ years for clinical data, 7+ years for manufacturing [8]. | Requires system validation, secure audit trails, and electronic signatures that are legally binding. |

GDPR Principles in Detail

Article 5 of the GDPR requires that personal data be [4]:

- Processed lawfully, fairly, and transparently.

- Collected for specified and legitimate purposes (purpose limitation).

- Adequate, relevant, and limited to what is necessary (data minimization).

- Kept in an identifiable form for no longer than necessary (storage limitation).

- Processed in a manner that ensures appropriate security.

21 CFR Part 11 Technical Controls

For systems handling electronic records, key controls include [7]:

- System Validation: Ensuring accuracy, reliability, and consistent intended performance.

- Audit Trails: Secure, computer-generated, time-stamped audit trails to record operator actions. These must be retained for the same period as the electronic records themselves.

- Authority Checks: Ensuring only authorized individuals can use the system, access data, or sign records.

- Protection of Records: Ensuring accurate and ready retrieval throughout the entire retention period [8].
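To make the audit trail control concrete, the following is a minimal sketch of an append-only, time-stamped log in which each entry includes a hash of the previous one, so that later tampering is detectable. The `AuditTrail` class, its field names, and the example user and record identifiers are all hypothetical. Hash chaining is one possible design, not something 21 CFR Part 11 prescribes, and a real system would also need validation, access control, and durable storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log in which each entry commits to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _hash(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        payload = {k: v for k, v in entry.items() if k != "entry_hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, user: str, action: str, record_id: str) -> dict:
        """Append a time-stamped entry for a create, modify, or delete action."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        entry = {
            "timestamp_utc": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "record_id": record_id,
            "prev_hash": prev_hash,
        }
        entry["entry_hash"] = self._hash(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = "0" * 64
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != self._hash(entry):
                return False
            prev_hash = entry["entry_hash"]
        return True


trail = AuditTrail()
trail.record("analyst_01", "create", "eeg_sub-01_raw")
trail.record("analyst_01", "modify", "eeg_sub-01_clean")
print("audit trail intact:", trail.verify())
```

Because every entry depends on the one before it, editing or deleting an earlier entry causes `verify()` to fail for the rest of the chain.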

The Scientist's Toolkit: Essential Research Reagent Solutions

The following tools and protocols are essential for maintaining compliant data management in a research environment.

| Item / Solution | Function in Compliant Data Management |
|---|---|
| Validated Electronic Lab Notebook (ELN) | A system compliant with 21 CFR Part 11 for creating, storing, and retrieving electronic records with immutable audit trails. |
| Data Archiving & Backup System | Ensures accurate and ready retrieval of all raw and processed data throughout the retention period, as required by 21 CFR 11.10(c) [8]. |
| De-identification/Anonymization Tool | Enables compliance with GDPR's data minimization principle by removing personal identifiers from research data when full data is not necessary [5]. |
| Access Control Protocol | Procedures to limit system access to authorized individuals only, a key requirement of 21 CFR 11.10(d) [7]. |
| Data Disposal & Sanitization Tool | Securely and permanently deletes data that has reached the end of its retention period, complying with GDPR's storage limitation principle [5]. |

Data Retention Workflow for Research

Frequently Asked Questions (FAQs)

Q1: After we remove motion artifacts from a dataset, can we delete the original raw data to save space? A: No. Regulatory standards like 21 CFR Part 11 and scientific integrity require you to retain the original, raw source data for the entire mandated retention period. The processed data (with artifacts removed) must be linked back to this original data to provide a complete and verifiable record of your research activities.

Q2: Our research involves personal data from individuals in the EU. How does GDPR's "storage limitation" affect us? A: GDPR requires that you store personal data for no longer than is necessary for the purposes for which it was collected [5]. You must define and justify a specific retention period for your research data. Once the purpose is fulfilled (e.g., the study is concluded and published), you must either anonymize the data (so it is no longer "personal") or securely delete it.

Q3: What is the single most important technical control for 21 CFR Part 11 compliance? A: While multiple controls are critical, the implementation of secure, computer-generated, time-stamped audit trails is fundamental [7]. This trail must automatically record the date, time, and user for any action that creates, modifies, or deletes an electronic record, and it must be retained for the same period as the record itself.

Q4: How do we handle the end of a data retention period? A: You must have a documented procedure for secure disposal. This involves:

- Review: Confirm the data has reached the end of its retention period and is not needed for any ongoing legal or regulatory action.

- Destruction: Permanently and securely delete the data so it cannot be recovered.

- Documentation: Record the disposal action (what, when, how) to demonstrate compliance with your policy [9].
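The review step above can be expressed as a small decision function. This is a sketch under stated assumptions: the function names and return strings are hypothetical, the retention period is counted in whole calendar years from a start date that your own policy must define, and the 6-year figure in the example is only an illustration.

```python
from datetime import date


def retention_end(start: date, years: int) -> date:
    """Return the date on which a retention period of whole years ends."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        # 29 February has no counterpart in a non-leap year; fall back to 28 February.
        return start.replace(year=start.year + years, day=28)


def disposal_decision(start: date, years: int, today: date, on_hold: bool) -> str:
    """Apply the review step: check for holds first, then the retention period."""
    if on_hold:
        return "retain: legal or regulatory hold"
    if today < retention_end(start, years):
        return "retain: retention period not yet ended"
    return "eligible for documented secure disposal"


# Hypothetical record whose retention clock started on 15 January 2018.
print(disposal_decision(date(2018, 1, 15), 6, date(2025, 1, 1), on_hold=False))
print(disposal_decision(date(2018, 1, 15), 6, date(2025, 1, 1), on_hold=True))
```

The function only decides eligibility. Destruction and documentation still have to be carried out and recorded as separate, auditable actions.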

FAQs: Data Management and Fragmentation

Q1: What are data silos and data fragmentation, and how do they differ? A: Data silos are isolated collections of data accessible only to one group or department, hindering organization-wide access and collaboration [10] [11]. Data fragmentation is the broader problem where this data is scattered across different systems, applications, and storage locations, making it difficult to manage, analyze, and integrate effectively [12]. Fragmentation can be both physical (data scattered across different locations or storage devices) and logical (data duplicated or divided across different applications, leading to different versions of the same data) [12].

Q2: What are the primary causes of data silos in a research organization? A: Data silos often arise from a combination of technical and organizational factors:

- Organizational Structure: Silos frequently mirror company department charts, created when business units or product groups manage data independently [10].

- Decentralized Systems: Different teams using unintegrated data management systems or independently developing their own approaches to data collection [10] [11].

- Company Culture: A lack of communication between teams, "turf wars" where departments hoard data, and a lack of a unified vision for data collection and utilization [12] [11].

- Legacy Systems: Older systems that are incapable of interacting with modern tools, creating islands of incompatible data [12].

- Rapid Technology Adoption: Adopting new applications and technologies without a plan for how the data will integrate with existing systems [12].

Q3: How does fragmented data directly impact the quality and cost of research? A: The impacts are significant and multifaceted:

- Flawed Decisions: Inconsistent or incomplete data leads to unreliable conclusions and poor strategic choices [13].

- Operational Inefficiency: Knowledge workers can spend up to 30% of their time just searching for information, drastically slowing down research progress [13].

- Increased Costs: Maintaining multiple, separate data systems requires more resources for storage, maintenance, and management. In fields like healthcare, data fragmentation can cost tens to hundreds of billions of dollars annually [12].

- Data Integrity Issues: Fragmentation leads to discrepancies, duplication, and data decay (outdated information), undermining the validity of research findings [11].

Q4: What is a "digital nervous system" and why is it important for modern AI-driven research? A: A "digital nervous system" is a foundational data framework that acts as a reusable backbone for all AI solutions and data streams within an organization [14]. Unlike legacy data management systems, it is not just an IT project but a business enabler that ensures data can be easily integrated, reconciled, and adapted. For AI research, which evolves in months, not years, this system is critical. It prevents each new AI project from creating a new level of data fragmentation, thereby ensuring data interoperability, auditability, and long-term viability of intelligent solutions [14].

Q5: What strategies can we implement to break down data silos and prevent fragmentation? A: Solving data fragmentation requires a combined technical and cultural approach:

- Centralize Data Storage: Use scalable solutions like data warehouses (for structured data), data lakes (for raw, unstructured data), or a data lakehouse (combining the benefits of both) to create a single source of truth [10] [12].

- Enforce Data Governance: Establish clear policies for data access, quality, and usage. Define roles and responsibilities for data ownership and management [12].

- Shift to Organizational Data Ownership: Treat data as a shared organizational asset rather than a department-specific resource. This encourages collaboration and a unified view [13].

- Use ETL Tools: Implement Extract, Transform, Load (ETL) processes to standardize and move data from existing silos into a centralized location [10].

- Implement Robust Data Management Plans: Create formal documents outlining how data will be handled during and after a research project to ensure consistency and compliance [15].

Troubleshooting Guides

Guide 1: Addressing Data Silos and Improving Integration

Problem: Researchers cannot access or integrate data from other departments, leading to incomplete analyses and duplicated efforts.

Solution: Follow a structured approach to identify and break down silos.

Step 1: Identify and Audit

Step 2: Develop a Technical Consolidation Plan

Step 3: Establish Governance and Culture

Guide 2: Balancing Motion Artifact Removal with Data Retention in fMRI

Problem: Overly aggressive motion artifact removal in fMRI data leads to the exclusion of large amounts of data, reducing sample size and statistical power.

Solution: Employ data-driven scrubbing methods that selectively remove only severely contaminated data volumes.

Background: Motion artifacts cause deviations in fMRI timeseries, and their removal ("scrubbing") is essential for analysis accuracy [16]. However, traditional motion scrubbing (based on head-motion parameters) often has high rates of censoring, leading to unnecessary data loss and the exclusion of many subjects [16].

Recommended Methodology: Data-Driven Projection Scrubbing

This method uses a statistical outlier detection framework to identify and flag only those volumes displaying abnormal patterns [16].

Workflow: Data-Driven fMRI Scrubbing

- Step 1: Dimensionality Reduction. Use a method like Independent Component Analysis (ICA) to project the high-dimensional fMRI data into a lower-dimensional space. This helps isolate underlying sources of variation, including artifacts [16].

- Step 2: Statistical Outlier Detection. Within this reduced space, apply a statistical framework (like projection scrubbing) to identify individual timepoints (volumes) that are multivariate outliers. These volumes are characterized by abnormal patterns of high variance or influence [16].

- Step 3: Flag and Remove. Only the volumes identified as severe outliers are flagged ("scrubbed") and removed from subsequent analysis. This contrasts with motion scrubbing, which may remove all data points exceeding a rigid motion threshold [16].
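The three steps above can be sketched with NumPy alone. The example below is a simplified stand-in and not the published projection scrubbing implementation: it uses an SVD-based projection in place of ICA, scores each volume with a leverage-style measure, and flags volumes whose score exceeds a multiple of the median. The function name, the number of components, the cutoff multiple, and the simulated data are all assumptions.

```python
import numpy as np


def flag_outlier_volumes(data: np.ndarray, n_components: int = 5, cutoff: float = 3.0) -> np.ndarray:
    """Flag volumes with unusually high influence in a low-dimensional projection.

    data has shape (volumes, voxels). Returns a boolean mask over volumes.
    """
    # Step 1: dimensionality reduction (SVD here, as a simple substitute for ICA).
    centered = data - np.median(data, axis=0)
    u, _, _ = np.linalg.svd(centered, full_matrices=False)
    scores = u[:, :n_components]              # orthonormal temporal components

    # Step 2: outlier score per volume (sum of squared component scores).
    leverage = np.sum(scores ** 2, axis=1)

    # Step 3: flag only volumes far above the typical score.
    return leverage > cutoff * np.median(leverage)


rng = np.random.default_rng(0)
timeseries = rng.standard_normal((200, 500))   # simulated 200 volumes x 500 voxels
timeseries[[50, 120]] += 8.0                   # two simulated contaminated volumes

flags = flag_outlier_volumes(timeseries)
print("flagged volumes:", np.flatnonzero(flags))
print("volumes retained:", int((~flags).sum()), "of", len(flags))
```

On pure noise, a simple cutoff like this can also flag a few clean volumes, which is one reason published methods select components and thresholds more carefully.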

Comparison of Scrubbing Methods

| Feature | Motion Scrubbing | Data-Driven Scrubbing (e.g., Projection Scrubbing) |
|---|---|---|
| Basis | Derived from subject head-motion parameters [16] | Based on observed noise in the processed fMRI timeseries [16] |
| Data Loss | High rates of volume and entire subject exclusion [16] | Dramatically increases sample size by avoiding unnecessary censoring [16] |
| Key Advantage | Simple to compute | More valid and reliable functional connectivity on average; only flags volumes with abnormal patterns [16] |
| Main Drawback | Can exclude usable data; requires an arbitrary threshold | Requires computational resources and statistical expertise |

Guide 3: Implementing a Clinical Data Management System (CDMS) for Drug Development

Problem: Clinical trial data is collected in disparate formats, leading to errors, delays, and compliance risks in regulatory submissions.

Solution: Implement a CDMS following established best practices and standards.

Essential Research Reagents & Tools for Clinical Data Management

| Item | Function |
|---|---|
| Clinical Data Management System (CDMS) | 21 CFR Part 11-compliant software (e.g., Oracle Clinical, Rave) to electronically store, capture, and protect clinical trial data [17] [15]. |
| Electronic Data Capture (EDC) System | Enables direct entry of clinical trial data at the study site, reducing errors from paper-based collection [15]. |
| CDISC Standards | Standardized data formats (e.g., SDTM, ADaM) for regulatory submissions, improving data quality and consistency [17] [15]. |
| MedDRA (Medical Dictionary) | A medical coding dictionary used to classify Adverse Events (AEs) for consistent review and analysis [17]. |
| Data Management Plan (DMP) | A formal document describing how data will be handled during and after the clinical trial to ensure quality and compliance [17]. |

Workflow: Clinical Data Management Lifecycle

- Step 1: Protocol & System Setup. Develop the clinical trial protocol and design the data collection tools (Case Report Forms - CRFs) and database [15].

- Step 2: Data Collection & Entry. Systematically collect and enter data according to the protocol, often using an EDC system [15].

- Step 3: Data Validation & Cleaning. Perform rigorous checks to identify errors, inconsistencies, or missing data. Issue queries to clinical sites to resolve issues [17] [15].

- Step 4: Database Lock. Once data cleaning is complete, the database is locked ("frozen") to prevent any further changes, ensuring data stability for analysis [17] [15].

- Step 5: Analysis & Reporting. Analyze the locked data and compile reports for regulatory submission [15].

Frequently Asked Questions

1. What are the most common motion artifacts encountered in EEG, ECG, and MRI? Motion artifacts are a pervasive challenge in biomedical signal acquisition. In EEG, the most common motion artifacts include cable movement, electrode popping (from abrupt impedance changes), and head movements that displace electrodes relative to the scalp [18] [19]. For ECG recorded inside an MRI scanner, the primary artifact is the gradient artifact (nGA(t)), which is induced by time-varying magnetic gradients according to Faraday's Law of Induction [20]. In fMRI, head motion is the dominant source, causing spin history effects and disrupting the magnetic field homogeneity, which can severely compromise the analysis of resting-state networks [21] [22].

2. How can I differentiate between physiological and non-physiological motion artifacts? Distinguishing between these artifact types is crucial for selecting the correct removal strategy.

- Physiological Artifacts originate from the patient's body. In EEG, this includes ballistocardiogram (BCG) artifact from scalp pulse and cardiac-related head motion, ocular artifacts from eye blinks, and muscle artifacts from jaw clenching or head movement [23] [18] [22]. These artifacts often have a biological rhythm and can be correlated with reference signals like ECG or EOG.

- Non-Physiological (External) Artifacts arise from outside the body. Examples are the MRI gradient artifact, 60-Hz power line interference, artifacts from infusion pumps, and cable movement [20] [18]. These are typically more abrupt and have characteristics tied to the external equipment.

3. What are the best practices for minimizing motion artifacts during data acquisition?

- EEG/ECG in MRI: Use fiber-optic transmission lines, proper analog low-pass filters to suppress high-frequency RF pulses, and keep electrodes close together near the magnet isocenter to minimize conductive loop areas [20] [19].

- General Setup: Ensure low electrode-scalp impedance, secure all cables to prevent sway, and use padding or bite bars in the MRI to physically restrict head motion [18] [22].

- Hardware Solutions: For wearable EEG, systems with active electrodes and in-ear designs can offer better mechanical stability and reduce motion artifacts [19].

4. My data is already contaminated. What are the most effective post-processing methods for artifact removal? The optimal method depends on the signal and artifact type.

- For EEG (General): Blind Source Separation (BSS), particularly Independent Component Analysis (ICA), is a state-of-the-art and commonly used algorithm for separating neural activity from artifacts like EMG and EOG [23].

- For EEG in fMRI: Average Artifact Subtraction (AAS) for gradient and BCG artifacts, followed by ICA informed by head movement trajectories estimated from the fMRI images to remove residual physiological artifacts, is highly effective [24] [22].

- For ECG in MRI: Adaptive filtering, such as the Least Mean Squares (LMS) algorithm, using the scanner's gradient signals as references, is successful in removing gradient-induced artifacts while preserving ECG morphology for arrhythmia detection [20].

- For fMRI: RETROICOR is a standard method for removing physiological noise from cardiac and respiratory cycles. Additionally, regressing out signals from white matter and cerebrospinal fluid (CSF) and incorporating head motion parameters as regressors are key steps [21].
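The adaptive filtering idea mentioned for ECG in MRI can be illustrated with a basic Least Mean Squares noise canceller. This is a sketch under simplifying assumptions, not the method of [20]: a single 50 Hz sinusoid stands in for the scanner's gradient reference, a slow sinusoid stands in for the cardiac signal, and the function name, tap count, and step size are illustrative choices.

```python
import numpy as np


def lms_cancel(primary, reference, n_taps=8, mu=0.01):
    """Remove the part of `primary` that can be predicted from `reference`."""
    primary = np.asarray(primary, dtype=float)
    reference = np.asarray(reference, dtype=float)
    weights = np.zeros(n_taps)
    cleaned = primary.copy()          # the first n_taps - 1 samples pass through unfiltered
    for n in range(n_taps - 1, len(primary)):
        window = reference[n - n_taps + 1:n + 1][::-1]   # most recent sample first
        error = primary[n] - weights @ window            # signal minus artifact estimate
        weights += 2.0 * mu * error * window             # LMS weight update
        cleaned[n] = error
    return cleaned


fs = 1000                                    # assumed sampling rate in Hz
t = np.arange(5 * fs) / fs
cardiac = np.sin(2 * np.pi * 1.2 * t)        # stand-in for the signal of interest
reference = np.sin(2 * np.pi * 50.0 * t)     # stand-in for a gradient reference
artifact = 5.0 * np.sin(2 * np.pi * 50.0 * t + 0.7)
contaminated = cardiac + artifact

cleaned = lms_cancel(contaminated, reference)

half = len(t) // 2                           # evaluate after the filter has adapted
rms_before = np.sqrt(np.mean(artifact[half:] ** 2))
rms_after = np.sqrt(np.mean((cleaned[half:] - cardiac[half:]) ** 2))
print(f"artifact RMS before: {rms_before:.2f}, residual RMS after: {rms_after:.2f}")
```

The step size `mu` trades adaptation speed against stability and residual noise, so it should be tuned and validated on real recordings rather than copied from this example.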

5. How do I balance the removal of motion artifacts with the preservation of underlying biological signals? This is a central challenge in artifact removal research. Overly aggressive filtering can distort or remove the signal of interest.

- Reference Signals: Using dedicated reference channels (e.g., EOG, ECG, accelerometers) helps target only the artifactual components [23] [20].

- Data-Driven Approaches: Methods like ICA allow for the visual inspection and selective rejection of components identified as artifact, preserving neural components [23] [24].

- Validation: Always compare the data before and after processing. For event-related potentials (ERPs) in EEG, ensure that known components (like the P300) are not attenuated. In fMRI, check that the global signal characteristics remain physiologically plausible after noise regression [21].

Troubleshooting Guides

Guide 1: Identifying and Categorizing Common Motion Artifacts

Use this guide to diagnose artifacts in your recorded data.

| Signal | Artifact Name | Type | Key Characteristics | Visual Cue in Raw Signal |
|---|---|---|---|---|
| EEG | Cable Movement | Non-Physiological | High-amplitude, irregular, low-frequency drifts [18] | Large, slow baseline wanders |
| EEG | Electrode Pop | Non-Physiological | Abrupt, high-amplitude transient localized to a single electrode [18] | Sudden vertical spike in one channel |
| EEG | Muscle (EMG) | Physiological | High-frequency, irregular, "spiky" activity [23] [18] | High-frequency "hairy" baseline |
| EEG | Ballistocardiogram (BCG) | Physiological | Pulse-synchronous, rhythmic, ~1 Hz, global across scalp [24] [22] | Repetitive pattern synchronized with heartbeat |
| ECG (in MRI) | Gradient (nGA(t)) | Non-Physiological | Overwhelming amplitude, synchronized with MRI sequence repetition [20] | Signal is completely obscured by large, repeating pattern |
| fMRI | Head Motion | Physiological | Abrupt signal changes, spin history effects, correlated with motion parameters [21] | "Jumpy" time-series; correlations at brain edges |

Guide 2: Quantitative Comparison of Artifact Removal Methods

This table summarizes the performance of different methods as reported in the literature, aiding in method selection.

| Method | Best For | Key Performance Metrics | Advantages | Limitations |
|---|---|---|---|---|
| Regression | Ocular artifacts in EEG [23] | N/A | Simple, computationally inexpensive | Requires reference channels; bidirectional contamination can cause signal loss [23] |
| ICA / BSS | Muscle, ocular, & BCG artifacts in EEG [23] [24] | N/A | Does not require reference channels; can separate multiple sources | Computationally intensive; requires manual component inspection [23] |
| AAS | Gradient & BCG artifacts in EEG-fMRI [24] [22] | N/A | Standard, well-validated method | Assumes artifact is stationary; leaves residuals [22] |
| Motion-Net (CNN) | Motion artifacts in mobile EEG [25] | 86% artifact reduction; 20 dB SNR improvement [25] | Subject-specific; effective on real-world data | Requires a separate model to be trained for each subject [25] |
| Adaptive LMS Filter | ECG during real-time MRI [20] | 38 dB improvement in peak QRS to artifact noise [20] | Operates in real-time; adapts to changing conditions | Requires reference gradient signals from scanner [20] |
| RETROICOR | Cardiac/Respiratory noise in fMRI [21] | Significantly explains additional BOLD variance [21] | Highly effective for periodic physiological noise | Requires cardiac and respiratory recordings [21] |

Guide 3: Step-by-Step Experimental Protocol for EEG-fMRI Artifact Removal

This protocol outlines a robust pipeline for processing EEG data contaminated with fMRI-related artifacts [24] [22].

Step 1: Preprocessing. Resample the EEG data to a high sampling rate (e.g., 5 kHz) if necessary. Synchronize the EEG and fMRI clocks to ensure accurate timing of the gradient artifact template.

Step 2: Remove Gradient Artifact (GA). Apply the Average Artifact Subtraction (AAS) method. Create a template of the GA by averaging the artifact over many repetitions, aligned to the fMRI volume triggers. Subtract this template from the raw EEG data [22].

Step 3: Remove Ballistocardiogram (BCG) Artifact. Apply the AAS method again, but this time using the ECG or pulse oximeter signal to create a time-locked template of the BCG artifact. Subtract this template from the GA-corrected data [24].

Step 4: Remove Residual Physiological Artifacts. Use Independent Component Analysis (ICA) on the GA- and BCG-corrected data. Decompose the data into independent components. Manually or automatically identify and remove components corresponding to ocular, muscle, and residual motion artifacts. Innovative Step: Incorporate the head movement trajectories estimated from the fMRI images to help identify motion-related artifact components more accurately [24].

Step 5: Reconstruct and Verify. Reconstruct the clean EEG signal by projecting the remaining components back to the channel space. Visually inspect the final data to ensure artifact removal and signal preservation.
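The template subtraction at the heart of Steps 2 and 3 can be sketched in a few lines of NumPy. This is a deliberately simplified, single-channel illustration with simulated data. It uses one global template, whereas practical pipelines typically use sliding-window averages, and the function name, epoch length, and signal amplitudes are assumptions.

```python
import numpy as np


def average_artifact_subtraction(signal, onsets, epoch_len):
    """Subtract the mean artifact epoch, time-locked to `onsets`, from a 1-D signal."""
    signal = np.asarray(signal, dtype=float)
    # Keep only onsets whose full epoch fits inside the recording.
    onsets = [int(o) for o in onsets if o + epoch_len <= len(signal)]
    epochs = np.stack([signal[o:o + epoch_len] for o in onsets])
    template = epochs.mean(axis=0)            # averaging suppresses non-time-locked activity
    cleaned = signal.copy()
    for o in onsets:
        cleaned[o:o + epoch_len] -= template
    return cleaned, template


rng = np.random.default_rng(0)
epoch_len, n_epochs = 100, 50
neural = rng.standard_normal(epoch_len * n_epochs)          # simulated signal of interest
artifact_shape = 50.0 * np.sin(2 * np.pi * 3 * np.arange(epoch_len) / epoch_len)
recorded = neural + np.tile(artifact_shape, n_epochs)       # identical artifact every epoch
onsets = np.arange(0, len(recorded), epoch_len)             # e.g. volume trigger positions

cleaned, template = average_artifact_subtraction(recorded, onsets, epoch_len)
print("std of recorded signal:", round(float(np.std(recorded)), 2))
print("std of residual error: ", round(float(np.std(cleaned - neural)), 2))
```

The same function covers the BCG step if the onsets come from detected heartbeats instead of volume triggers, although real cardiac artifacts vary from beat to beat, which is why residuals remain and ICA follows in Step 4.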

The following workflow diagram illustrates this multi-stage process:

Guide 4: The Scientist's Toolkit - Key Research Reagents & Materials

Essential materials and tools for designing experiments robust to motion artifacts.

| Item Name | Function/Purpose | Key Consideration |
|---|---|---|
| Active Electrode Systems | Amplifies signal at the source, reducing cable motion artifacts and environmental interference [19]. | Ideal for mobile EEG (mo-EEG) and high-motion environments. |
| Carbon Fiber Motion Loops | Placed on the head to measure motion inside the MRI bore, providing reference signals for artifact removal [24]. | Essential for advanced motion correction in EEG-fMRI. |
| Electrooculogram (EOG) Electrodes | Placed near eyes to record eye movements and blinks, providing a reference for regression-based removal of ocular artifacts [23]. | Crucial for isolating neural activity in frontal EEG channels. |
| Pulse Oximeter / Electrocardiogram (ECG) | Records cardiac signal, essential for identifying and removing pulse and BCG artifacts in EEG and fMRI [21] [22]. | A core component for physiological noise modeling. |
| Respiratory Belt | Monitors breathing patterns, providing the respiratory phase for RETROICOR-based noise correction in fMRI [21]. | Needed for comprehensive physiological noise correction. |
| Visibility Graph (VG) Features | A signal transformation method that provides structural information to deep learning models, improving artifact removal on smaller datasets [25]. | An emerging software "tool" for enhancing machine learning performance. |

The relationships between these tools, the artifacts they measure, and the correction methods they enable are shown below:

Technical Support Center

Troubleshooting Guides

Guide 1: Troubleshooting Motion Artifacts in Neuroimaging Data (fNIRS/fMRI)

Problem: My fNIRS or fMRI data shows unexpected spikes or shifts, suggesting potential motion artifact corruption. How can I confirm and address this?

Explanation: Motion artifacts are a predominant source of noise in neuroimaging, caused by head movements that disrupt the signal. In fMRI, this systematically alters functional connectivity (FC), decreasing long-distance and increasing short-range connectivity [26]. In fNIRS, motion causes peaks or shifts in time-series data due to changes in optode-scalp coupling [27] [28].

Solution Steps:

- Visual Inspection & Impact Scoring: Begin with a visual check of your time-series data for sudden, large-amplitude deflections. For fMRI, calculate a Motion Impact Score using methods like SHAMAN (Split Half Analysis of Motion Associated Networks) to quantify how much motion is skewing your brain-behavior relationships [26].

- Apply Algorithmic Correction: Choose a denoising method appropriate for your data.

- For fNIRS: Consider a Deep Learning Autoencoder (DAE), which has been shown to outperform conventional methods by automatically learning noise features without manual parameter tuning [27]. Other methods include spline interpolation, wavelet filtering, and correlation-based signal improvement (CBSI) [27].

- For fMRI: After standard denoising pipelines, consider additional motion censoring (removing high-motion frames). Censoring frames whose framewise displacement (FD) exceeds 0.2 mm, so that only frames with FD < 0.2 mm are retained, can significantly reduce motion-driven overestimation of effects [26].

- Validate with Metrics: After correction, calculate quality metrics to ensure signal integrity.
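For the fMRI censoring step, framewise displacement is commonly computed as the sum of absolute frame-to-frame changes in the six rigid-body motion parameters, with rotations converted to millimetres on a sphere of 50 mm radius. The sketch below assumes a column order of three translations in mm followed by three rotations in radians. That order and the unit conventions differ between software packages, so check them before reuse. The function name and the example motion values are hypothetical.

```python
import numpy as np


def framewise_displacement(motion, radius_mm=50.0):
    """Compute FD per frame from a (frames x 6) array of realignment parameters.

    Assumed column order: x, y, z translations (mm), then three rotations (radians).
    """
    params = np.asarray(motion, dtype=float).copy()
    params[:, 3:] *= radius_mm                    # rotation -> arc length in mm
    fd = np.abs(np.diff(params, axis=0)).sum(axis=1)
    return np.concatenate([[0.0], fd])            # the first frame has no predecessor


# Hypothetical motion trace for five frames.
motion = np.zeros((5, 6))
motion[1:, 3] = 0.002     # small rotation starting at frame 1 (0.002 rad * 50 mm = 0.1 mm)
motion[3:, 0] = 0.3       # 0.3 mm translation starting at frame 3

fd = framewise_displacement(motion)
keep = fd < 0.2           # retain only frames below the 0.2 mm threshold
print("FD (mm):", np.round(fd, 3))
print("frames kept:", np.flatnonzero(keep))
```

Reporting how many frames each participant loses at the chosen threshold helps show whether censoring is removing data unevenly across groups.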

Guide 2: Troubleshooting AI Model Performance Degradation from Medical Imaging Artifacts

Problem: My AI model for automated medical image segmentation performs well on clean data but fails on clinical images with motion artifacts.

Explanation: Diagnostic AI models are often trained on high-quality, artifact-free data. When deployed in clinical settings, motion artifacts cause a performance drop because the model encounters data different from its training set [29]. This is critical as motion artifacts affect up to a third of clinical MRI sequences [29].

Solution Steps:

- Assess Artifact Severity: First, grade the artifact severity in your test data. A common method is a qualitative scale: None, Mild, Moderate, Severe [29].

- Retrain with Data Augmentation: Incorporate data augmentation during training to improve model robustness.

- MRI-Specific Augmentations: Augment your training set with artificially introduced motion artifacts that emulate real-world MR image degradation [29].

- Standard Augmentations: Also apply standard nnU-Net or other framework-specific augmentations. Research shows that while MRI-specific augmentations help, general-purpose augmentations are highly effective [29].

- Benchmark Performance: Evaluate your retrained model on the artifact-corrupted test set. Track key metrics like Dice Similarity Coefficient (DSC) for segmentation accuracy and Mean Absolute Deviation (MAD) for quantification tasks across different artifact severity levels [29].
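As a rough illustration of the MRI-specific augmentation idea, rigid in-plane motion can be imitated by applying random translations to a subset of k-space lines, since a translation in image space corresponds to a linear phase ramp in k-space. This sketch is not the augmentation used in [29]. The function name, the fraction of corrupted lines, the maximum shift, and the square phantom are assumptions chosen for simplicity.

```python
import numpy as np


def add_motion_artifact(image, max_shift_px=3.0, corrupt_fraction=0.3, seed=None):
    """Return a copy of a 2-D image with simulated motion ghosting."""
    rng = np.random.default_rng(seed)
    kspace = np.fft.fft2(image)
    n_rows, n_cols = image.shape

    # Choose which k-space lines were "acquired" while the subject had moved.
    n_bad = int(corrupt_fraction * n_rows)
    bad_rows = rng.choice(n_rows, size=n_bad, replace=False)
    shifts = rng.uniform(-max_shift_px, max_shift_px, size=n_bad)

    col_freqs = np.fft.fftfreq(n_cols)            # spatial frequency in cycles per pixel
    for row, shift in zip(bad_rows, shifts):
        # Fourier shift theorem: translating by `shift` pixels multiplies
        # the line by a linear phase ramp.
        kspace[row, :] *= np.exp(-2j * np.pi * col_freqs * shift)

    return np.abs(np.fft.ifft2(kspace))


phantom = np.zeros((64, 64))
phantom[20:44, 20:44] = 1.0                       # simple square test object

corrupted = add_motion_artifact(phantom, seed=0)
unchanged = add_motion_artifact(phantom, corrupt_fraction=0.0, seed=0)
print("mean absolute change:", float(np.mean(np.abs(corrupted - phantom))))
```

Varying `max_shift_px` and `corrupt_fraction` gives a crude way to generate training examples at several severity levels, which should then be compared against real artifact-corrupted images.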

Frequently Asked Questions (FAQs)

FAQ 1: What are the most common sources of motion artifacts in brain imaging?

- fNIRS: Head movements (nodding, shaking), facial muscle movements (eyebrow raises, jaw movements during talking/eating), and body movements that cause head displacement or device inertia [28]. The root cause is imperfect contact between sensors (optodes) and the scalp [27] [28].

- fMRI: Any in-scanner head motion, including involuntary sub-millimeter movements. This is a major challenge in populations where movement is more common, such as children or individuals with certain neurological disorders [26].

FAQ 2: My data is contaminated with severe motion artifacts. Should I remove the entire dataset? The decision balances data retention and artifact removal. While discarding data is sometimes necessary, it can introduce bias by systematically excluding participants who move more (e.g., certain patient groups) [26]. The preferred methodology is to apply advanced artifact removal techniques (e.g., DAE for fNIRS, censoring with FD < 0.2 mm for fMRI) to salvage the data [27] [26]. The goal is to preserve data integrity without compromising the study's population representativeness.

FAQ 3: How can I prevent motion artifacts during data acquisition? Proactive strategies include:

- Patient Preparation: Clearly explaining the importance of staying still to participants.

- Physical Stabilization: Using comfortable but secure head restraints (e.g., vacuum pads, foam padding) [28].

- Hardware Solutions: Using accelerometers or inertial measurement units (IMUs) to measure motion in real-time, which can be used for post-processing correction [28].

- Sequence Innovation: Using motion-robust MRI sequences like radial sampling (PROPELLER/BLADE) [29].

FAQ 4: What are the key metrics for evaluating artifact removal success? Metrics depend on the data type and goal:

- Noise Suppression: Signal-to-Noise Ratio (SNR), Signal-to-Artifact Ratio (SAR) [30].

- Signal Fidelity: Root Mean Square Error (RMSE), Normalized Mean Square Error (NMSE), Correlation Coefficient (CC) with a ground truth signal [30].

- Task Performance: For AI models, Dice Similarity Coefficient (DSC) for segmentation, and Mean Absolute Deviation (MAD) for measurement tasks [29].
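As a rough illustration of the signal-level metrics listed above, the sketch below computes RMSE, NMSE, SNR, and the correlation coefficient against a known ground-truth signal. Definitions vary between papers; here NMSE is assumed to be normalized by the energy of the clean signal, and SNR treats the residual error as the noise term. The toy signals are synthetic and purely illustrative.

```python
import math


def _error_energy(clean, estimate):
    return sum((c - e) ** 2 for c, e in zip(clean, estimate))


def rmse(clean, estimate):
    return math.sqrt(_error_energy(clean, estimate) / len(clean))


def nmse(clean, estimate):
    # Assumed normalization: error energy divided by clean-signal energy.
    return _error_energy(clean, estimate) / sum(c ** 2 for c in clean)


def snr_db(clean, estimate):
    err = _error_energy(clean, estimate)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(sum(c ** 2 for c in clean) / err)


def correlation(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Toy example: a sine wave with a short step artifact, before and after cleaning.
clean = [math.sin(2 * math.pi * i / 20) for i in range(100)]
noisy = [c + (0.8 if 40 <= i < 45 else 0.0) for i, c in enumerate(clean)]
denoised = [c + (0.1 if 40 <= i < 45 else 0.0) for i, c in enumerate(clean)]

for name, signal in (("noisy", noisy), ("denoised", denoised)):
    print(name, round(rmse(clean, signal), 4), round(nmse(clean, signal), 4),
          round(snr_db(clean, signal), 2), round(correlation(clean, signal), 4))
```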

Table 1: Performance of Artifact Removal Methods in fNIRS

| Method | Key Principle | Key Performance Metrics | Computational Efficiency |

|---|---|---|---|

| Denoising Autoencoder (DAE) [27] | Deep learning model to automatically learn and remove noise features. | Outperformed conventional methods in lowering residual motion artifacts and decreasing Mean Squared Error [27]. | High (after training) [27] |

| Spline Interpolation [27] | Models artifact shape using cubic spline interpolation. | Performance highly dependent on the accuracy of the initial noise detection step [27]. | Medium |

| Wavelet Filtering [27] | Identifies outliers in wavelet coefficients as artifacts. | Requires tuning of the probability threshold (alpha) [27]. | Medium |

| Accelerometer-Based (ABAMAR) [28] | Uses accelerometer data for active noise cancellation or artifact rejection. | Enables real-time artifact rejection; improves feasibility of use in mobile settings [28]. | Varies |

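Table 2: Effect of Augmentation Strategy on Segmentation Quality and Torsion Measurement by Artifact Severity [29]

| Artifact Severity | Augmentation Strategy | Segmentation Quality (DSC) - Proximal Femur | Femoral Torsion Measurement MAD (°) |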

|---|---|---|---|

| Severe | No Augmentation (Baseline) | 0.58 ± 0.22 | 20.6 ± 23.5 |

| Severe | Default nnU-Net Augmentations | 0.72 ± 0.22 | 7.0 ± 13.0 |

| Severe | Default + MRI-Specific Augmentations | 0.79 ± 0.14 | 5.7 ± 9.5 |

| All Levels | Default + MRI-Specific Augmentations | Maintained higher DSC and lower MAD across all severity levels [29]. | N/A |

Experimental Protocols

Aim: To remove motion artifacts from fNIRS data using a deep learning model that is free from strict assumptions and manual parameter tuning.

Methodology:

- Data Simulation: Generate a large synthetic fNIRS dataset to facilitate deep learning training. The simulated noisy signal (\(F'(t)\)) is a composite of:

- A clean hemodynamic response function (\(F(t)\)) modeled by gamma functions.

- Motion artifacts (\(\Phi_{MA}(t)\)), including spike noise (modeled by a Laplace distribution) and shift noise (DC changes).

- Resting-state fNIRS background (\(\Phi_{rs}(t)\)), simulated using an autoregressive (AR) model.

- Parameters for all components are derived from experimental data distributions [27].

- Network Design: Implement a DAE with a nine-layer stacked convolutional neural network architecture, followed by max-pooling layers [27].

- Training: Train the network using a dedicated loss function designed to effectively separate the clean signal from the motion artifacts [27].

- Validation: Benchmark the DAE's performance against conventional methods (e.g., spline, wavelet) on both synthetic and open-access experimental fNIRS datasets using metrics like Mean Squared Error (MSE) and qualitative residual analysis [27].

Aim: To systematically study how motion artifacts and data augmentation strategies affect an AI model's accuracy in segmenting lower limbs and quantifying their alignment.

Methodology:

- Test Set Acquisition:

- Acquire axial T2-weighted MR images of the hips, knees, and ankles from healthy participants.

- For each participant, acquire five image series: one at rest and four during induced motions (foot motion and gluteal contraction at high and low frequencies) [29].

- Artifact Grading: Have two clinical radiologists independently grade each image stack for motion artifact severity using a standardized scale (None, Mild, Moderate, Severe), reaching a consensus for each stack [29].

- AI Model Training: Train three versions of an nnU-Net-based AI model for bone segmentation with different augmentation strategies:

- Baseline: No data augmentation.

- Default: Standard nnU-Net augmentations (e.g., rotations, scaling, elastic deformations).

- MRI-Specific: Default augmentations plus simulated MR artifacts [29].

- Performance Evaluation:

- Segmentation Quality: Calculate the Dice Similarity Coefficient (DSC) between AI and manual segmentations.

- Measurement Accuracy: Compare AI-derived torsional angles to manual measurements using Mean Absolute Deviation (MAD), Intraclass Correlation Coefficient (ICC), and Pearson's correlation (r) [29].

- Analyze performance stratified by the radiologists' artifact severity grades [29].
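The two headline metrics of this protocol can be sketched in a few lines. The example below computes the Dice Similarity Coefficient for binary masks and the Mean Absolute Deviation between AI-derived and manual measurements, then averages DSC per artifact grade. The masks, angles, and grades are made-up illustrative values, not data from [29].

```python
from collections import defaultdict


def dice_coefficient(pred, truth):
    """DSC for two binary masks given as flat sequences of 0/1."""
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    return 1.0 if total == 0 else 2.0 * intersection / total


def mean_absolute_deviation(ai_values, manual_values):
    """Mean of |AI - manual| over paired measurements."""
    return sum(abs(a - m) for a, m in zip(ai_values, manual_values)) / len(ai_values)


def mean_dsc_by_grade(cases):
    """cases: iterable of (grade, predicted_mask, manual_mask)."""
    grouped = defaultdict(list)
    for grade, pred, truth in cases:
        grouped[grade].append(dice_coefficient(pred, truth))
    return {grade: sum(values) / len(values) for grade, values in grouped.items()}


# Hypothetical flattened masks and torsion angles (degrees).
cases = [
    ("None", [1, 1, 1, 0], [1, 1, 1, 0]),
    ("Severe", [1, 1, 0, 0], [1, 0, 0, 0]),
    ("Severe", [0, 1, 1, 0], [0, 1, 1, 1]),
]
print(mean_dsc_by_grade(cases))
print(mean_absolute_deviation([10.0, 20.0], [12.0, 17.0]))
```

Stratifying by the consensus grade, rather than pooling all stacks, is what makes a drop in performance at the "Severe" level visible.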

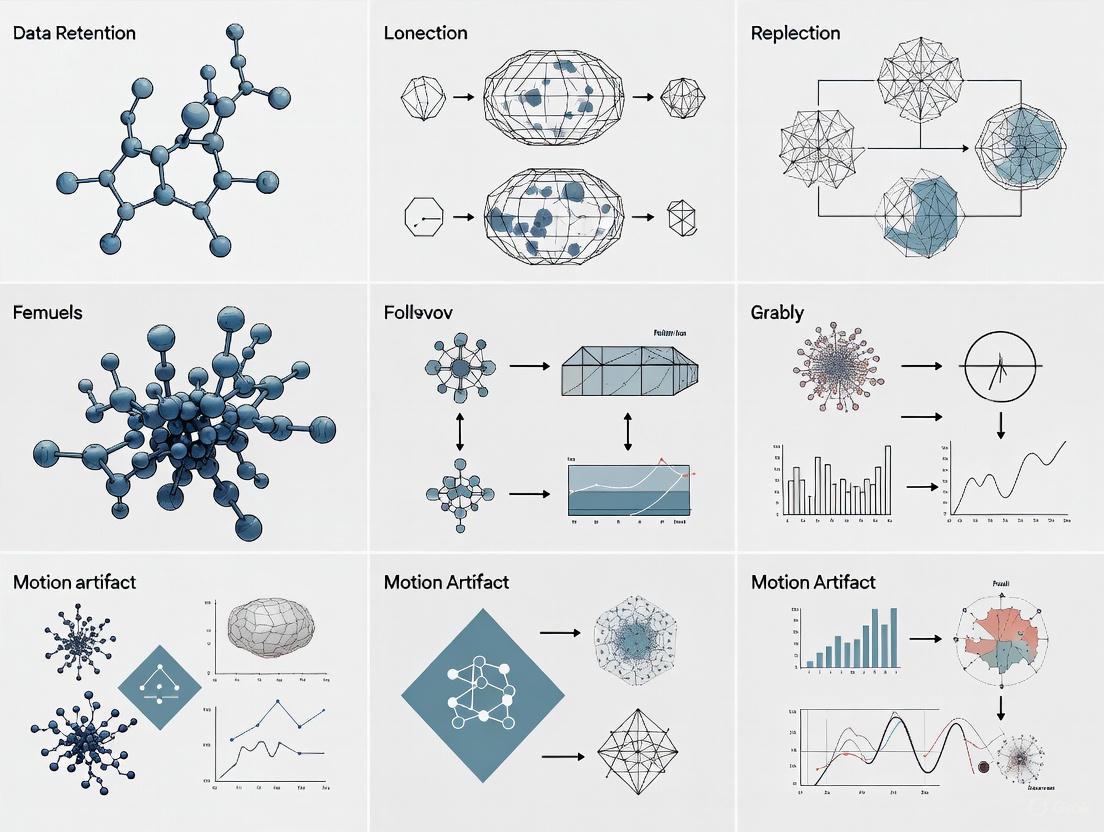

Workflow and Signaling Diagrams

Diagram 1: fNIRS DAE Training & Application

Diagram 2: Artifact Severity vs. AI Performance

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Artifact Management Research

| Item | Function in Research |

|---|---|

| Denoising Autoencoder (DAE) [27] | A deep learning architecture used for automatically removing motion artifacts from fNIRS and other biosignals without manual parameter tuning. |

| nnU-Net Framework [29] | A self-configuring framework for biomedical image segmentation, used as a base for training and evaluating AI model robustness with different augmentation strategies. |

| Accelerometer / IMU [28] | Auxiliary hardware used to measure head motion quantitatively. The signal serves as a reference for motion artifact removal algorithms in fNIRS. |

| Motion Impact Score (SHAMAN) [26] | A computational method for assigning a trait-specific score quantifying how much residual motion artifact is affecting functional connectivity-behavior relationships in fMRI. |

| Data Augmentation Pipelines [29] | A set of techniques to artificially expand training datasets by applying transformations like rotations, scaling, and simulated MR artifacts to improve AI model generalizability. |

| Standardized Artifact Severity Scale [29] | A qualitative grading system (e.g., None, Mild, Moderate, Severe) used by radiologists to consistently classify the degree of motion corruption in medical images. |

Troubleshooting Guides

Troubleshooting Scenario 1: Handling Low-Quality Data with Suspected Motion Artifacts

User Question: "My dataset has unexpected signal noise and missing data points, potentially from patient motion during collection. How should I proceed with artifact removal without violating our data retention policy?"

Diagnosis Guide:

- Check Data Acquisition Logs: Review device timestamps and operator notes for documented collection interruptions.

- Visualize Raw Signal Patterns: Use spectral analysis to identify high-frequency noise patterns characteristic of motion artifacts.

- Compare with Predefined Quality Thresholds: Measure signal-to-noise ratio (SNR) against your study's pre-established minimum thresholds.

Resolution Steps:

- Document Artifact Justification: Create an audit trail entry detailing:

- Specific data segments affected

- Technical evidence of artifact presence (e.g., SNR measurements)

- Timestamp of detection

- Apply Approved Filtering Methods: Use only validated algorithms from your institution's approved toolset (e.g., wavelet denoising for physiological signals).

- Retain Raw Data Version: Preserve the original dataset per retention policy requirements before any processing.

- Version Control: Create a new, clearly labeled version of the dataset indicating "post-artifact removal" with processing parameters documented.

Verification:

- Processed data maintains structural integrity with metadata preserved.

- Audit log updated with artifact removal justification and methodology.

- Raw data version remains accessible and unaltered in designated repository.
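One way to make the "raw data remains unaltered" check verifiable is to record a checksum of the raw file in the audit entry and recompute it later. The sketch below is a minimal illustration using only the Python standard library; the field names, file names, and the JSON-lines audit log format are assumptions, not a mandated schema.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def log_artifact_removal(raw, processed, audit_log, segments, evidence, method, params):
    """Append one audit entry linking the processed file to its raw origin."""
    entry = {
        "detected_at": datetime.now(timezone.utc).isoformat(),
        "raw_file": raw.name,
        "raw_sha256": sha256_of(raw),
        "processed_file": processed.name,
        "processed_sha256": sha256_of(processed),
        "affected_segments": segments,
        "evidence": evidence,
        "method": method,
        "parameters": params,
    }
    with audit_log.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry) + "\n")
    return entry


def raw_is_unaltered(raw: Path, entry: dict) -> bool:
    return sha256_of(raw) == entry["raw_sha256"]


# Demonstration in a temporary directory with hypothetical file names.
with tempfile.TemporaryDirectory() as tmp:
    raw = Path(tmp) / "sub-01_raw.csv"
    processed = Path(tmp) / "sub-01_post-artifact-removal_v1.csv"
    raw.write_text("0.1,0.2,9.9,0.3\n", encoding="utf-8")
    processed.write_text("0.1,0.2,0.25,0.3\n", encoding="utf-8")
    entry = log_artifact_removal(
        raw, processed, Path(tmp) / "audit.jsonl",
        segments=[[2, 3]], evidence={"snr_db": 3.1},
        method="wavelet denoising", params={"alpha": 0.1},
    )
    intact = raw_is_unaltered(raw, entry)
    raw.write_text("tampered\n", encoding="utf-8")  # simulate an unwanted change
    tamper_detected = not raw_is_unaltered(raw, entry)
print(intact, tamper_detected)
```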

Troubleshooting Scenario 2: Data Processing Pipeline Failures During Artifact Removal

User Question: "Our automated artifact removal script is failing during batch processing, but we can't identify which files are causing the problem."

Diagnosis Guide:

- Review Error Logs: Identify specific error codes and failure points in the processing pipeline.

- Check Input Data Consistency: Verify all files adhere to expected format, schema, and completeness requirements.

- Test with Subset: Run the process on a small, known-valid subset to isolate the issue.

Resolution Steps:

- Implement Data Validation Checkpoint: Add a pre-processing verification step that checks for:

- File format compliance

- Required metadata fields

- Signal value ranges within expected parameters

- Create Exception Handling: Modify scripts to:

- Flag problematic files without stopping entire batch process

- Generate detailed error reports for each failed file

- Route failures to a quarantine directory for manual review

- Maintain Processing Logs: Document all processing attempts, including successful files and failures with timestamps.

Verification:

- Batch processing completes with comprehensive success/failure report.

- Failed files are isolated with specific error descriptions.

- No data loss occurs during the failure handling process.
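The validation checkpoint and exception handling described above might look like the following sketch. It assumes CSV inputs with hypothetical `time` and `signal` columns and a hypothetical plausible value range; `process` stands in for whatever artifact removal routine the pipeline actually calls. Failed files are copied to quarantine rather than moved, a design choice made here so that the original input set is never reduced.

```python
import csv
import shutil
import tempfile
from pathlib import Path

REQUIRED_COLUMNS = {"time", "signal"}  # hypothetical schema
VALUE_RANGE = (-500.0, 500.0)          # hypothetical plausible range


def validate(path: Path) -> None:
    """Raise ValueError describing the first problem found in one file."""
    if path.suffix.lower() != ".csv":
        raise ValueError("unexpected file format")
    with path.open(newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle)
        missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
        if missing:
            raise ValueError(f"missing columns: {sorted(missing)}")
        low, high = VALUE_RANGE
        for line_no, row in enumerate(reader, start=2):
            value = float(row["signal"])
            if not low <= value <= high:
                raise ValueError(f"line {line_no}: signal {value} out of range")


def run_batch(input_dir: Path, quarantine_dir: Path, process) -> dict:
    """Process every file; a failure is recorded and does not stop the batch."""
    quarantine_dir.mkdir(parents=True, exist_ok=True)
    report = {"ok": [], "failed": {}}
    for path in sorted(p for p in input_dir.iterdir() if p.is_file()):
        try:
            validate(path)
            process(path)
            report["ok"].append(path.name)
        except Exception as exc:
            shutil.copy2(path, quarantine_dir / path.name)
            report["failed"][path.name] = f"{type(exc).__name__}: {exc}"
    return report


with tempfile.TemporaryDirectory() as tmp:
    inbox, quarantine = Path(tmp) / "inbox", Path(tmp) / "quarantine"
    inbox.mkdir()
    (inbox / "good.csv").write_text("time,signal\n0,1.5\n1,2.0\n", encoding="utf-8")
    (inbox / "bad_range.csv").write_text("time,signal\n0,1.5\n1,9999\n", encoding="utf-8")
    (inbox / "no_signal.csv").write_text("time,value\n0,1.5\n", encoding="utf-8")
    report = run_batch(inbox, quarantine, process=lambda path: None)
    quarantined = sorted(p.name for p in quarantine.iterdir())
    originals_kept = len(list(inbox.iterdir()))
print(report)
```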

Troubleshooting Scenario 3: Regulatory Compliance Concerns with Data Modification

User Question: "Our ethics committee is questioning how we reconcile data modification during artifact removal with requirements for data integrity and provenance."

Diagnosis Guide:

- Review Consent Language: Verify that informed consent documents allow for data processing methods being employed.

- Audit Trail Analysis: Check completeness of documentation for all data transformation steps.

- Policy Alignment Check: Compare your artifact removal procedures against institutional data governance policies.

Resolution Steps:

- Implement Comprehensive Provenance Tracking:

- Document exact parameters used for all filtering algorithms

- Maintain both raw and processed data versions with clear lineage

- Record software versions and computational environment details

- Create Processing Justification Documentation:

- Reference scientific literature supporting chosen artifact removal methods

- Provide quantitative evidence of data quality improvement

- Document how processed data better addresses research questions

- Develop Validation Protocols:

- Perform sensitivity analyses showing results are not artifacts of processing

- Implement positive controls to verify processing efficacy

- Conduct blinded reviews of processed vs. unprocessed data

Verification:

- Complete audit trail from raw data through all processing steps to analytical results.

- Clear documentation demonstrating that processing enhances signal validity rather than distorting findings.

- Ethics committee approval of documented methodology.

Frequently Asked Questions

Q: How should we document artifact removal to satisfy both scientific rigor and regulatory data retention policies?

A: Implement a standardized artifact removal documentation protocol that includes:

- Pre-processing data quality metrics

- Exact parameters and algorithms used for artifact removal

- Post-processing quality validation results

- Clear linkage between raw and processed data versions

This approach aligns with FDA 2025 guidance emphasizing transparent documentation for AI/ML-based data processing [31].

Q: What are the minimum metadata requirements when removing artifacts from datasets governed by data lifecycle policies?

A: Your metadata should comprehensively capture:

- Artifact Identification: How and why specific data segments were flagged as artifacts

- Processing Methodology: Specific algorithms, parameters, and software versions used

- Personnel & Timing: Who performed the removal and when

- Validation Evidence: Quantitative metrics demonstrating improved data quality without substantive alteration

This metadata should be preserved throughout the data lifecycle per FDAAA 801 requirements [32].

Q: How do we handle artifact removal in multi-center studies where different sites use different collection equipment?

A: Standardize the artifact definition and removal process through:

- Cross-site Protocol Alignment: Establish unified quality thresholds and artifact definitions

- Equipment-Specific Validation: Validate that artifact removal methods perform consistently across different platforms

- Centralized Processing: Where possible, perform artifact removal using standardized methods at a central processing site

- Comparative Analysis: Document and account for any site-specific processing effects in your analysis

Q: What's the appropriate retention period for raw data after artifacts have been removed and analysis completed?

A: Retention periods should follow:

- Regulatory Minimums: FDAAA 801 and similar regulations often specify minimum retention periods (typically several years after study completion) [32]

- Scientific Best Practice: Retain raw data for at least as long as processed data and research results

- Publication Requirements: Many journals require raw data availability for several years post-publication

- Institutional Policy: Always comply with your institution's specific data governance framework, which may exceed regulatory minimums

Q: Can automated artifact removal tools be used in FDA-regulated research?

A: Yes, with appropriate validation as outlined in FDA 2025 AI/ML guidance [31]. Key requirements include:

- Establishing tool credibility for your specific context of use

- Documenting performance characteristics and limitations

- Validating against manual expert review as a reference standard

- Implementing version control and change management procedures

- Maintaining comprehensive audit trails of all automated processing

Experimental Protocols

Table 1: Experimental Protocols for Artifact Removal Validation

| Protocol Name | Purpose | Methodology | Key Metrics | Data Lifecycle Considerations |

|---|---|---|---|---|

| Signal Quality Assessment | Quantify baseline data quality before processing | Calculate signal-to-noise ratio (SNR), amplitude range analysis, missing data quantification | SNR > 6 dB, missing data < 5%, amplitude within expected physiological range | Results documented in metadata; triggers artifact removal protocol when thresholds not met |

| Motion Artifact Removal Validation | Validate efficacy of motion artifact removal algorithms | Apply wavelet denoising + bandpass filtering; compare with expert-annotated gold standard | Reduction in high-frequency power (> 20 Hz); preservation of physiological signal characteristics | Raw and processed versions stored with clear lineage; processing parameters archived |

| Data Provenance Documentation | Maintain complete audit trail of all data transformations | Automated logging of processing steps, parameters, and software versions using standardized metadata schema | Completeness of provenance documentation; ability to recreate processing exactly | Integrated with institutional data repository; retained for duration of data lifecycle |

| Impact Analysis on Study Outcomes | Assess whether artifact removal meaningfully alters study conclusions | Sensitivity analysis comparing results with/without processing; statistical tests for significant differences | Consistency of primary outcomes; effect size changes < 15% | Documentation supports regulatory submissions; demonstrates processing doesn't introduce bias |
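The impact-analysis row can be illustrated with a small sensitivity check. The sketch below assumes the effect size of interest is Cohen's d between two groups and that "change" means the relative difference between d computed on raw and on processed data; both choices, and all numbers, are illustrative assumptions rather than a prescribed method.

```python
import math
import statistics


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = (
        (na - 1) * statistics.variance(group_a) + (nb - 1) * statistics.variance(group_b)
    ) / (na + nb - 2)
    return (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(pooled_var)


def relative_change(d_raw, d_processed):
    return abs(d_processed - d_raw) / abs(d_raw)


# Hypothetical outcome values before and after artifact removal.
treated_raw, control_raw = [5, 6, 7, 8, 9], [3, 4, 5, 6, 7]
treated_proc, control_proc = [5, 6, 7, 8, 9], [3.2, 4, 5, 6, 6.8]

d_raw = cohens_d(treated_raw, control_raw)
d_proc = cohens_d(treated_proc, control_proc)
change = relative_change(d_raw, d_proc)
within_tolerance = change < 0.15  # threshold taken from the table above
print(round(d_raw, 3), round(d_proc, 3), round(change, 3), within_tolerance)
```

A result outside the tolerance does not by itself mean the processing is wrong; it signals that the conclusion depends on the processing choice and needs to be reported as such.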

Research Reagent Solutions

Table 2: Essential Research Materials for Artifact Removal Research

| Item Name | Function | Application Context | Implementation Considerations |

|---|---|---|---|

| Qualitative Data Analysis Software (NVivo) | Organize, code, and analyze qualitative research data [33] | Thematic analysis of interview transcripts regarding patient-reported outcomes | Color-coding available for visual analysis; supports collaboration cloud for team-based analysis [34] [35] |

| Data Quality Assessment Toolkit | Quantitative metrics for signal quality evaluation | Automated quality screening during data acquisition and preprocessing | Must be validated for specific data types and integrated with data lifecycle management platform |

| Wavelet Denoising Algorithms | Multi-resolution analysis for noise removal without signal distortion | Motion artifact removal in physiological signals (EEG, ECG, accelerometry) | Parameter optimization required for specific applications; validation against known signals essential |

| Provenance Tracking Framework | Comprehensive audit trail for all data transformations | Required for regulated research environments and publication transparency | Should automatically capture processing parameters, software versions, and operator information |

| Statistical Validation Package | Sensitivity analysis for processing impact assessment | Quantifying effects of artifact removal on study outcomes | Includes appropriate multiple comparison corrections; power analysis for detecting meaningful differences |

Workflow Visualization

Diagram 1: Artifact Removal within Data Lifecycle

Diagram 2: Artifact Removal Decision Framework

Modern Removal Techniques and Their Integration into Research Data Pipelines

FAQ: Technical Troubleshooting for Deep Learning Signal Reconstruction

Q1: My CNN model for removing motion artifacts from fNIRS signals is not converging. What could be the issue?

A: Non-convergence can often stem from problems with your input data or model configuration. Focus on these key areas:

- Data Verification: First, ensure your input data and artifact simulations are correct. For fNIRS, a common synthesis method involves creating noisy signals by combining a clean hemodynamic response (HRF), simulated motion artifacts (spikes and shifts), and resting-state fNIRS generated via an autoregressive (AR) model [27]. If the simulated artifacts do not resemble real noise, the model cannot learn effectively.

- Loss Function: The choice of loss function is critical. Standard losses like Mean Squared Error (MSE) might not be sufficient. Designing a dedicated loss function that specifically penalizes the presence of artifact characteristics can guide the training process more effectively and help the model converge [27].

- Model Depth and Capacity: Verify that your model has sufficient depth (number of layers) and filters to capture the complex, non-linear relationships in the signal. CNNs are favored in this domain precisely for their ability to handle non-linear and non-stationary signal properties [36].

Q2: When should I choose a U-Net architecture over a standard 1D-CNN for my signal reconstruction task?

A: The choice depends on the goal of your project and the nature of the signal corruption.

- Use a 1D-CNN or Denoising Autoencoder (DAE) when the primary task is cleanup or denoising—for instance, removing motion-induced spikes and shifts from a 1D fNIRS or EEG signal while preserving the underlying physiological waveform. These architectures are efficient at learning to filter out noise from the input signal [27].

- Use a U-Net when the task requires precise, pixel-wise (or sample-wise) localization in addition to removal. This is especially powerful in scenarios like biomedical image segmentation or when the artifact causes complex, structured distortions. The U-Net's defining feature is its contracting and expanding path with skip connections. The encoder captures context, while the decoder enables precise localization by combining this context with high-resolution features from the skip connections, preventing information loss during downsampling [37].

Q3: How can I evaluate my model's performance beyond standard metrics like Mean Squared Error (MSE)?

A: Relying solely on MSE can be misleading, as a low MSE does not guarantee that the signal's physiological features are preserved. You should employ a combination of metrics to evaluate both noise suppression and signal fidelity [38].

- For Noise Suppression:

- Signal-to-Noise Ratio (SNR): Measures the level of the desired signal relative to the background noise. An increase indicates better denoising.

- Peak-to-Peak Ratio (PPR): Useful for evaluating the preservation of signal amplitude.

- Contrast-to-Noise Ratio (CNR): Assesses the ability to distinguish a signal feature from the background.

- For Signal Distortion:

- Pearson's Correlation Coefficient (PCC): Quantifies how well the shape of the reconstructed signal matches the clean, ground-truth signal.

- Delta (Signal Deviation): Measures the absolute difference between the original and processed signals [38].

The table below summarizes a broader set of evaluation metrics for your experiments.

| Metric Category | Metric Name | Description | Application Focus |

|---|---|---|---|

| Noise Suppression | Signal-to-Noise Ratio (SNR) | Level of desired signal relative to background noise. | General denoising quality [38] |

| | Peak-to-Peak Ratio (PPR) | Ratio between the maximum and minimum amplitudes of a signal. | Preservation of signal amplitude [38] |

| | Contrast-to-Noise Ratio (CNR) | Ability to distinguish a signal feature from the background. | Feature detectability [27] |

| Signal Fidelity | Pearson's Correlation (PCC) | Measures the linear correlation between original and processed signals. | Shape preservation [38] |

| | Delta (Signal Deviation) | Measures the absolute difference between signals. | Overall accuracy [38] |

| Computational | Processing Time/Throughput | Time required to process a given length of signal data. | Real-time application feasibility [36] |

Q4: What is a "skip connection" in a U-Net and why is it important for signal reconstruction?

A: A skip connection is a direct pathway that forwards the feature maps from a layer in the contracting (encoder) path to the corresponding layer in the expanding (decoder) path.

- Function: They serve as a highway for high-resolution, local details (like the exact position of an artifact) that might be lost during the downsampling process in the encoder.

- Importance: Without skip connections, the decoder would have to reconstruct fine-grained details using only the highly processed, low-resolution data from the bottom of the network. This is very difficult and can lead to blurry or inaccurate reconstructions. By providing these high-resolution features, skip connections allow the U-Net to generate more precise and detailed outputs, which is crucial for accurate signal reconstruction and segmentation [37].

The following diagram illustrates the flow of data in a U-Net, highlighting how skip connections bridge the encoder and decoder.

Q5: From a data management perspective, how long should I retain the raw and processed signal data from my experiments?

A: Establishing a clear Data Retention Policy is a critical part of responsible research, balancing the need for reproducibility with storage costs and privacy regulations. Adopt a risk-based approach and consider these factors [39]:

- Purpose and Regulatory Requirements: Retain data for as long as it serves a legitimate research purpose. Be aware of specific legal or funding body mandates that dictate minimum retention periods (e.g., for clinical trials). The GDPR principle of "storage limitation" requires that data be kept no longer than necessary [39].

- Data Categorization: Implement different retention windows for different data types.

- Raw Data: Keep permanently or for a long period (e.g., 5-10 years) to ensure the reproducibility of your results, as it is the ground truth of your experiment.

- Processed/Denoised Data: These can often have a shorter retention period, as they can be re-generated from the raw data if the processing pipeline is preserved.

- Intermediate Training Data (e.g., synthetic fNIRS): This data, often generated for training deep learning models [27], can typically be deleted once the model is finalized and validated, as it can be re-simulated.

- Automated Lifecycle Policies: Use automated scripts or cloud storage lifecycle policies to automatically archive or delete data once its retention period expires. This reduces human error and guarantees consistent compliance with your policy [39] [40].
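A lifecycle policy of this kind can be prototyped as a dry run before anything is archived or deleted. In the sketch below the category names and retention windows are placeholders that must be replaced by the values in your own policy; raw data is deliberately marked as never expiring automatically.

```python
from datetime import date, timedelta

# Hypothetical retention windows in days; None means "never expire automatically".
RETENTION_DAYS = {
    "raw": None,
    "processed": 5 * 365,
    "synthetic_training": 365,
}


def lifecycle_action(category: str, created: date, today: date) -> str:
    """Return 'retain' or 'expire' for one dataset; performs no deletion."""
    limit = RETENTION_DAYS[category]
    if limit is None:
        return "retain"
    return "expire" if today - created > timedelta(days=limit) else "retain"


def dry_run(inventory, today):
    """inventory: iterable of (name, category, created_date)."""
    return {name: lifecycle_action(category, created, today)
            for name, category, created in inventory}


inventory = [
    ("sub-01_raw.snirf", "raw", date(2015, 3, 1)),
    ("sub-01_denoised.snirf", "processed", date(2024, 6, 1)),
    ("synthetic_batch_007.npz", "synthetic_training", date(2023, 1, 15)),
]
plan = dry_run(inventory, today=date(2025, 6, 1))
print(plan)
```

Reviewing the dry-run plan before executing it keeps a human decision in the loop for any irreversible disposal step.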

The Scientist's Toolkit: Research Reagents & Essential Materials

The following table details key components and their functions used in developing and testing deep learning models for signal reconstruction, as featured in the cited research.

| Item Name | Function/Description | Application Context |

|---|---|---|

| Convolutional Neural Network (CNN) | An artificial neural network designed to process data with a grid-like topology (e.g., 1D signals, 2D images). It uses convolutional layers to automatically extract hierarchical features [36]. | Base architecture for many signal denoising tasks; effective for capturing temporal dependencies in EEG and fNIRS [36]. |

| U-Net Architecture | A specific CNN architecture with a symmetric encoder-decoder structure and skip connections. It captures context and enables precise localization [37]. | Biomedical image segmentation and detailed signal reconstruction where preserving spatial/temporal structure is vital [41] [37]. |

| Denoising Autoencoder (DAE) | A type of neural network trained to reconstruct a clean input from a corrupted version. It learns a robust representation of the data [27]. | Removing motion artifacts and noise from fNIRS and other signals in an end-to-end manner [27]. |

| Synthetic fNIRS Dataset | Computer-generated data that mimics the properties of real fNIRS signals, created by combining simulated hemodynamic responses, motion artifacts, and resting-state noise [27]. | Provides large volumes of labeled data (clean & noisy pairs) for robust training of deep learning models where real-world data is limited [27]. |

| Quantitative Evaluation Metrics (SNR, PCC, etc.) | A standardized set of numerical measures to objectively quantify the performance of a reconstruction algorithm in terms of noise removal and signal preservation [38]. | Essential for benchmarking different models (e.g., CNN vs. U-Net vs. DAE) and demonstrating improvement over existing methods [38] [27]. |

| Motion Artifact Simulation Model | A computational model (e.g., using Laplace distributions for spikes) that generates realistic noise patterns to corrupt clean signals for training [27]. | Creates the "noisy" part of the input data for supervised learning, allowing models to learn the mapping from corrupted to clean signals [27]. |

Experimental Protocol: Training a Denoising Autoencoder (DAE) for fNIRS Motion Artifact Removal

This protocol outlines the methodology, based on current research, for training a deep learning model to remove motion artifacts from functional Near-Infrared Spectroscopy (fNIRS) data [27].

1. Objective: To train a DAE model that takes a motion-artifact-corrupted fNIRS signal as input and outputs a cleaned, motion-artifact-free signal.

2. Data Preparation and Synthesis:

- Clean Hemodynamic Response (HRF) Simulation: Generate the clean signal component, \(F(t)\), using a standard double-gamma function to model the brain's blood oxygenation response.

- Motion Artifact Simulation: Create the noise component, \(\Phi_{MA}(t)\), by simulating two common artifact types:

- Spike Artifacts: Model using a Laplace distribution: \(f(t) = A \cdot \exp(-|t - t_0| / b)\), where \(A\) is amplitude, \(b\) is a scale parameter, and \(t_0\) is the time at which the spike is centered.

- Shift Artifacts: Model as a sudden, sustained positive or negative baseline shift.

- Resting-State fNIRS Simulation: Generate the background physiological noise, \(\Phi_{rs}(t)\), using a 5th-order autoregressive (AR) model. The parameters for the AR model are obtained by fitting to experimental resting-state data.

- Final Synthetic Data: Create the training dataset by combining these components: Noisy HRF = Clean HRF + Motion Artifacts + Resting-State fNIRS [27]. This provides a large, scalable set of paired data (noisy input, clean target) for supervised learning.

3. Model Architecture (DAE):

- The model should consist of multiple stacked convolutional layers.

- Use convolutional layers with ReLU activation functions to extract features from the input signal.

- Incorporate max-pooling layers to reduce dimensionality and capture broader features.

- Use upsampling layers (or transposed convolutions) to reconstruct the signal to its original length.

- The final output layer should use a linear activation function for regression.

4. Training Configuration:

- Loss Function: Use a dedicated loss function, such as Mean Squared Error (MSE) combined with a term that penalizes the difference in correlation between the oxy- and deoxy-hemoglobin signals, to better guide the training.

- Optimizer: Use the Adam optimizer for efficient learning.

- Validation: Split the synthetic data into training and validation sets (e.g., 80/20) to monitor for overfitting.

5. Evaluation:

- After training, evaluate the model on a held-out test set of synthetic data.

- Finally, validate the model's performance on a real, experimental fNIRS dataset that was not used during training to assess its generalizability [27].
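The data synthesis in step 2 can be sketched with the standard library alone. This is an illustrative toy generator, not the simulation code of [27]: the double-gamma shape parameters (6 and 16, undershoot ratio 1/6) are commonly used defaults assumed here, the AR(5) coefficients are arbitrary stable values rather than fits to experimental data, and every amplitude and timing range is hypothetical.

```python
import math
import random


def double_gamma_hrf(t, peak=6.0, undershoot=16.0, ratio=1.0 / 6.0):
    """Difference of two gamma densities (unit scale); zero before onset."""
    if t <= 0:
        return 0.0
    g1 = t ** (peak - 1) * math.exp(-t) / math.gamma(peak)
    g2 = t ** (undershoot - 1) * math.exp(-t) / math.gamma(undershoot)
    return g1 - ratio * g2


def simulate_noisy_hrf(n_samples=300, fs=10.0, onset_s=5.0, seed=0):
    """Return (t, clean, noisy) where noisy = clean + spike + shift + AR(5) noise."""
    rng = random.Random(seed)
    t = [i / fs for i in range(n_samples)]
    clean = [double_gamma_hrf(ti - onset_s) for ti in t]

    # Spike artifact: A * exp(-|t - t0| / b).
    amp = rng.choice([-1.0, 1.0]) * rng.uniform(0.5, 1.5)
    t0, b = rng.uniform(10.0, 20.0), 0.3
    spike = [amp * math.exp(-abs(ti - t0) / b) for ti in t]

    # Shift artifact: sudden, sustained baseline change.
    shift_t0, shift_size = rng.uniform(15.0, 25.0), rng.uniform(-0.5, 0.5)
    shift = [shift_size if ti >= shift_t0 else 0.0 for ti in t]

    # Resting-state background: AR(5) with placeholder coefficients.
    coeffs = [0.5, -0.2, 0.1, -0.05, 0.02]
    rest = []
    for n in range(n_samples):
        past = sum(a * rest[n - k - 1] for k, a in enumerate(coeffs) if n - k - 1 >= 0)
        rest.append(past + rng.gauss(0.0, 0.02))

    noisy = [c + s + h + r for c, s, h, r in zip(clean, spike, shift, rest)]
    return t, clean, noisy


t, clean, noisy = simulate_noisy_hrf()
peak_time = t[max(range(len(clean)), key=clean.__getitem__)]
print(len(noisy), peak_time)
```

Each call with a different `seed` yields a new (noisy input, clean target) pair, which is what makes the synthetic set scalable for supervised training.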

The workflow for this experimental protocol is summarized in the following diagram.

Electroencephalography (EEG) is the only brain imaging method that is both lightweight and temporally precise enough to assess electrocortical dynamics during human locomotion and other real-world activities [42] [43]. A significant barrier in mobile brain-body imaging (MoBI) is the contamination of EEG signals by motion artifacts, which originate from head movement, electrode displacement, and cable sway [42] [25]. These artifacts can severely reduce data quality and impede the identification of genuine brain activity. Among the various solutions developed, two advanced signal processing approaches stand out: iCanClean with pseudo-reference noise signals and Artifact Subspace Reconstruction (ASR). This technical support center article provides a detailed comparison, troubleshooting guide, and experimental protocols for these methods, framed within the critical research context of balancing aggressive artifact removal with the preservation of underlying neural signals.

Method Comparison & Quantitative Performance

The following table summarizes the core characteristics and documented performance of iCanClean and ASR based on recent studies.

Table 1: Comparison of iCanClean and Artifact Subspace Reconstruction

| Feature | iCanClean | Artifact Subspace Reconstruction (ASR) |

|---|---|---|

| Core Principle | Uses Canonical Correlation Analysis (CCA) to identify and subtract noise subspaces correlated with reference or pseudo-reference noise signals [44] [42]. | Uses sliding-window Principal Component Analysis (PCA) to identify and remove high-variance components exceeding a threshold from calibration data [42] [45]. |

| Noise Signal Requirement | Works with physical reference signals (e.g., dual-layer electrodes) or generates its own "pseudo-reference" signals from the raw EEG [44] [46]. | Requires a segment of clean EEG data for calibration [42]. |

| Primary Artifacts Addressed | Motion, muscle, eye, and line-noise artifacts [44]. | Motion, eye, and muscle artifacts [42] [47]. |

| Key Performance Findings | In a phantom head study, improved Data Quality Score from 15.7% to 55.9% in a combined artifact condition, outperforming ASR, Auto-CCA, and Adaptive Filtering [44]. | An optimal parameter (k) between 20-30 balances non-brain signal removal and brain activity retention [47]. |

| | During running, enabled identification of the expected P300 ERP congruency effect [42] [43]. | During running, produced ERP components similar to a standing task, but the P300 effect was less clear than with iCanClean [42]. |

| | Improved ICA dipolarity more effectively than ASR in human running data [42]. | Improved ICA decomposition quality and removed more eye/muscle components than brain components [47]. |

| Computational Profile | Suitable for real-time implementation [44]. | Suitable for real-time and online applications [42] [47]. |

Detailed Methodologies & Experimental Protocols

Implementing iCanClean

The iCanClean algorithm is designed to remove latent noise components from data signals like EEG. Its effectiveness has been validated on both phantom and human data [44].

Workflow Overview: The following diagram illustrates the core signal processing workflow of the iCanClean algorithm when using pseudo-reference signals.

Experimental Protocol for Human Locomotion (e.g., Running):

- Data Acquisition: Record high-density EEG (e.g., 100+ channels) at a sampling frequency of at least 500 Hz during the dynamic task (e.g., overground running) and a static control task [44] [42].

- Software Setup: Install the iCanClean plugin for EEGLAB from the official repository [46].

- Parameter Configuration:

  - Noise Signal Source: Select the option to generate pseudo-reference noise signals. This is crucial when dedicated noise sensors (e.g., dual-layer electrodes) are not available.

  - R² Threshold: Set the correlation threshold to 0.65. This value has been shown in human locomotion data to produce the most dipolar brain independent components [42].

  - Sliding Window: Set the window length to 4 seconds for analysis, as validated in running studies [42].

- Execution: Run the iCanClean algorithm on the continuous raw EEG data from the dynamic task.

- Validation & Analysis:

  - Perform Independent Component Analysis (ICA) on the cleaned data.

  - Use ICLabel to classify components and assess the number of brain components.

  - Calculate the dipolarity of the resulting independent components; a higher number of dipolar brain components indicates better cleaning [42].

  - For event-related potential (ERP) studies, extract epochs and compare the morphology and expected effects (e.g., P300 congruency effect) to the static condition [42] [43].
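
The role of the R² threshold and the pseudo-reference in the protocol above can be illustrated with a minimal NumPy sketch of CCA-based noise removal on a single 4-second window. This is not the iCanClean plugin's code: the function names `lowpass_fft` and `cca_clean_window` are hypothetical, the crude FFT low-pass is only a stand-in for the filter used to build a pseudo-reference, and the data are synthetic.

```python
import numpy as np

def lowpass_fft(x, fs, cutoff_hz):
    """Crude low-pass (illustrative only): zero all FFT bins above cutoff_hz."""
    spec = np.fft.rfft(x, axis=0)
    freqs = np.fft.rfftfreq(x.shape[0], d=1.0 / fs)
    spec[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spec, n=x.shape[0], axis=0)

def cca_clean_window(eeg, ref, r2_threshold=0.65):
    """Remove from `eeg` the subspace strongly correlated with `ref`.

    eeg: (n_samples, n_channels), ref: (n_samples, n_refs).
    Returns the cleaned (mean-centered) window and the squared
    canonical correlations.
    """
    x = eeg - eeg.mean(axis=0)
    y = ref - ref.mean(axis=0)
    qx, _ = np.linalg.qr(x)
    qy, _ = np.linalg.qr(y)
    # Singular values of Qx^T Qy are the canonical correlations.
    _, s, vt = np.linalg.svd(qx.T @ qy, full_matrices=False)
    r2 = np.clip(s, 0.0, 1.0) ** 2
    # Orthonormal noise time courses whose R^2 exceeds the threshold.
    noise = qy @ vt.T[:, r2 > r2_threshold]
    # Least-squares removal of those time courses from every channel.
    cleaned = x - noise @ (noise.T @ x)
    return cleaned, r2

# Synthetic 4 s window: 8 channels of white "brain" activity plus one slow artifact.
fs, n_ch = 250, 8
rng = np.random.default_rng(0)
t = np.arange(4 * fs) / fs
brain = rng.normal(size=(t.size, n_ch))
artifact = 5.0 * np.sin(2 * np.pi * 1.5 * t)[:, None] * np.linspace(0.5, 1.5, n_ch)
eeg = brain + artifact

pseudo_ref = lowpass_fft(eeg, fs, cutoff_hz=3.0)  # pseudo-reference built from the EEG itself
cleaned, r2 = cca_clean_window(eeg, pseudo_ref)
```

Raising `r2_threshold` removes fewer components and is therefore more conservative; lowering it removes more. A real pipeline would repeat this over sliding windows of the continuous recording.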

Implementing Artifact Subspace Reconstruction (ASR)

ASR is an automatic, component-based method for removing transient or large-amplitude artifacts. Its performance is highly dependent on the quality of calibration data and the chosen threshold parameter [45] [47].

Workflow Overview: The diagram below outlines the key steps in the Artifact Subspace Reconstruction process, highlighting the critical calibration phase.

Experimental Protocol for Human Locomotion:

- Data Acquisition: Include a segment of clean data during the recording session, such as a few minutes of resting-state EEG while the participant is seated or standing quietly. This will serve as the calibration data [42].

- Parameter Configuration:

  - Calibration Data: Manually select a high-quality, artifact-free segment of data for calibration. Newer variants of ASR (like ASRDBSCAN and ASRGEV) can automatically find better calibration data in non-stationary recordings [45].

  - Threshold (k): Set the standard deviation cutoff parameter. A value between 20 and 30 is generally recommended as a starting point, balancing artifact removal and brain signal preservation [47]. Lower values of k clean more aggressively. For high-motion scenarios like running, a more aggressive threshold (e.g., k=10) may be necessary, at the cost of a higher risk of "over-cleaning" [42].

- Execution: Run the ASR algorithm on the continuous data, using the selected calibration segment.

- Validation & Analysis:

  - Perform ICA on the ASR-cleaned data.

  - Compare the number and dipolarity of brain independent components against a baseline (e.g., data cleaned only with a high-pass filter) [42].

  - Examine the power spectrum at the gait frequency and its harmonics; effective cleaning should significantly reduce power at these frequencies [42].
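
The gait-frequency check in the last validation step can be sketched as follows. This is a minimal illustration under stated assumptions: the helper names `harmonic_power` and `reduction_db` are hypothetical, a plain periodogram stands in for whatever spectral estimator your toolbox uses, and the "cleaned" signal is synthetic rather than real ASR output.

```python
import numpy as np

def harmonic_power(signal, fs, f0, n_harmonics=3, half_width_hz=0.25):
    """Sum periodogram power in narrow bands around f0, 2*f0, ..., n_harmonics*f0."""
    x = signal - np.mean(signal)
    spec = np.abs(np.fft.rfft(x)) ** 2 / len(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    total = 0.0
    for h in range(1, n_harmonics + 1):
        band = np.abs(freqs - h * f0) <= half_width_hz
        total += spec[band].sum()
    return total

def reduction_db(raw, cleaned, fs, f0, **kwargs):
    """Reduction of gait-harmonic power after cleaning, in decibels."""
    ratio = harmonic_power(raw, fs, f0, **kwargs) / harmonic_power(cleaned, fs, f0, **kwargs)
    return 10.0 * np.log10(ratio)

# Synthetic single-channel example with a 2.5 Hz step frequency (assumed value).
fs, step_hz = 500, 2.5
rng = np.random.default_rng(0)
t = np.arange(20 * fs) / fs
neural = rng.normal(size=t.size)
gait_artifact = 10.0 * np.sin(2 * np.pi * step_hz * t) + 5.0 * np.sin(2 * np.pi * 2 * step_hz * t)
raw = neural + gait_artifact
cleaned_demo = neural + 0.1 * gait_artifact  # stand-in for the output of a cleaning method

gait_reduction = reduction_db(raw, cleaned_demo, fs, step_hz)
```

In practice the step frequency would be estimated per participant (for example from accelerometer or footswitch data) and the metric averaged across channels.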

Troubleshooting Guides & FAQs

FAQ 1: Why does my ICA look worse after cleaning with ASR?

This is a common sign of "over-cleaning," where the algorithm is too aggressive and starts to remove brain activity.

- Potential Cause: The ASR threshold parameter (k) is set too low.

- Solution: Increase the k value to make the algorithm less aggressive. Start with a value of 20-30 as recommended in the literature [47] and adjust upwards if necessary. For very dynamic tasks, a higher k might be required [42].

- Advanced Solution: Use an improved ASR algorithm like ASRDBSCAN or ASRGEV, which are specifically designed to handle non-stationary data and better identify clean calibration periods, thus preserving more brain activity [45].
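
To see why a low k leads to over-cleaning, the sketch below implements a heavily simplified, ASR-style rejection rule in NumPy. It is not the EEGLAB implementation: the function names `fit_thresholds` and `clean_window` are hypothetical, flagged components are simply projected out rather than reconstructed, and both the calibration data and the test window are synthetic.

```python
import numpy as np

def fit_thresholds(calib, k, win=250):
    """calib: (n_channels, n_samples) clean data. Returns (eigenvectors, per-component limits)."""
    calib = calib - calib.mean(axis=1, keepdims=True)
    cov = calib @ calib.T / calib.shape[1]
    _, vecs = np.linalg.eigh(cov)
    comps = vecs.T @ calib  # principal component time courses
    n_win = comps.shape[1] // win
    segments = comps[:, :n_win * win].reshape(len(comps), n_win, win)
    rms = np.sqrt((segments ** 2).mean(axis=2))
    # Limit = mean RMS + k standard deviations of the windowed RMS.
    return vecs, rms.mean(axis=1) + k * rms.std(axis=1)

def clean_window(window, vecs, thresholds):
    """Project out window components whose RMS exceeds the calibration-derived limit."""
    window = window - window.mean(axis=1, keepdims=True)
    cov = window @ window.T / window.shape[1]
    evals, w = np.linalg.eigh(cov)
    # Map the calibration limits onto each window component's direction.
    limits = np.sqrt(((thresholds[:, None] * (vecs.T @ w)) ** 2).sum(axis=0))
    bad = np.sqrt(np.maximum(evals, 0.0)) > limits
    keep = w[:, ~bad]
    return keep @ (keep.T @ window), int(bad.sum())

rng = np.random.default_rng(1)
fs, n_ch = 250, 4
calib = rng.normal(size=(n_ch, 60 * fs))          # stand-in for clean resting EEG
t = np.arange(4 * fs) / fs
window = rng.normal(size=(n_ch, t.size))
window[0] += 10.0 * np.sin(2 * np.pi * 2.5 * t)   # large motion-like artifact
window[1] += 1.6 * np.sin(2 * np.pi * 10.0 * t)   # modest increase that could be genuine activity

flagged = {}
for k in (20, 5):
    vecs, thr = fit_thresholds(calib, k)
    cleaned_win, flagged[k] = clean_window(window, vecs, thr)
```

With the larger k only the high-amplitude component should exceed its limit, while the smaller k also rejects the modest amplitude increase, which is the over-cleaning behaviour described above.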

FAQ 2: Should I use physical noise sensors or pseudo-reference signals with iCanClean?

The choice depends on your experimental setup and the quality of results required.

- Physical Noise Sensors (Dual-Layer Electrodes): These are the gold standard. They are mechanically coupled to the EEG electrodes but only record environmental and motion noise, providing a pristine noise reference. Use them whenever possible for optimal performance [44] [42].

- Pseudo-References: This is a fallback option for standard EEG systems without dedicated noise sensors. The algorithm creates its own reference by applying a filter (e.g., a notch filter below 3 Hz) to the raw EEG to isolate likely noise. While highly effective, it may not perform as well as having a true physical noise reference [44] [42].

FAQ 3: How do I choose between iCanClean and ASR for my motion artifact research?

The decision involves considering the principles and practicalities of each method.

- Choose iCanClean if:

  - You have access to dual-layer EEG hardware or need to work without clean calibration data.

  - Your primary goal is to recover high-fidelity ERPs from high-motion data, as it has shown superior performance in preserving components like the P300 during running [42] [43].

  - You want a method that has consistently outperformed others in controlled phantom tests with known ground-truth signals [44].

- Choose ASR if:

  - You are working with a standard EEG system and can record a clean calibration segment.

  - You need a well-established, online-capable method that is integrated into popular toolboxes like EEGLAB.

  - You are willing to fine-tune the k parameter and potentially use newer variants (ASRDBSCAN/ASRGEV) for best results on intense motor tasks [45].

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Materials and Tools for Mobile EEG Artifact Research

| Item | Function in Research |

|---|---|

| High-Density EEG System (100+ channels) | Provides sufficient spatial information for effective blind source separation techniques like ICA and for localizing cortical sources [44]. |

| Dual-Layer or Active Electrodes | Specialized electrodes with a separate noise-sensing layer. They provide the optimal physical reference noise signal for methods like iCanClean, dramatically improving motion artifact removal [44] [42]. |

| Robotic Motion Platform & Electrical Head Phantom | A controlled setup for generating ground-truth data. It allows for precise introduction of motion and other artifacts while the true "brain" signals are known, enabling rigorous algorithm validation [44]. |

| EEGLAB Software Environment | An interactive MATLAB toolbox for processing continuous and event-related EEG data. It serves as a common platform for integrating and running various artifact removal plugins, including ASR and iCanClean [46]. |

| ICLabel Classifier | An EEGLAB plugin that automates the classification of independent components into categories (brain, muscle, eye, heart, line noise, channel noise, other). It is essential for quantitatively evaluating the outcome of cleaning procedures [42]. |

| Inertial Measurement Units (IMUs) | Sensors (accelerometers, gyroscopes) attached to the head. They can provide reference signals for motion artifacts, though traditional Adaptive Filtering with these signals may require nonlinear extensions for optimal results [44]. |

Frequently Asked Questions (FAQs)

Q1: What is the core difference between "default" and "MRI-specific" data augmentation?

A1: Default augmentations are general-purpose image transformations used broadly in computer vision. MRI-specific augmentations are designed to replicate the unique artifacts and corruptions found in real-world clinical MRI scans, such as motion artifacts, thereby making models more robust to these specific failure modes [29].

Q2: My AI model performs well on high-quality MRI scans but fails on artifact-corrupted data. How can data augmentation help?

A2: Training a model solely on clean data leads to overfitting and poor generalization to real-world clinical images. By incorporating augmentations that simulate MRI artifacts (e.g., motion ghosting) into your training set, you force the model to learn features that are invariant to these distortions. This improves its robustness and accuracy when it encounters corrupted data during clinical use [48] [29].

Q3: Is it always necessary to develop complex, MRI-specific augmentations?

A3: Not necessarily. Recent research indicates that while MRI-specific augmentations are beneficial, standard default augmentations can account for a large share of the robustness gain. One study found that MRI-specific augmentations offered only a minimal additional benefit over comprehensive default strategies for a segmentation task. Establish a strong baseline with default methods before investing in more complex, domain-specific ones [29].

Q4: How do data augmentation strategies relate to broader data management, such as image retention policies?

A4: Effective data augmentation can artificially expand the value and utility of existing datasets. In a context where healthcare organizations face significant logistical and financial pressures regarding the long-term storage of medical images (with retention periods varying from 6 months to 30 years), robust augmentation techniques can help maximize the informational yield from retained data. This creates a balance between the costs of data retention and the need for large, diverse datasets to build reliable AI models [49] [50].

Troubleshooting Guides

Problem: Model Performance Degrades Severely on Motion-Corrupted Scans

Symptoms: High accuracy on clean validation images but significant drops in metrics like Dice Score (DSC) or Peak Signal-to-Noise Ratio (PSNR) when inference is run on scans with patient motion artifacts [48] [29].

Solution: Implement a combined augmentation strategy during training.

| Step | Action | Description |

|---|---|---|

| 1 | Apply Default Augmentations | Integrate a standard set of spatial and pixel-level transformations. These are often provided by deep learning frameworks or toolkits like nnU-Net [29]. |

| 2 | Add MRI-Specific Motion Augmentation | Simulate k-space corruption to generate realistic motion artifacts. This can involve using pseudo-random sampling orders and applying random motion tracks to simulate patient movement during the scan [48]. |

| 3 | Train and Validate | Train the model on the augmented dataset. Crucially, validate its performance on a separate test set that includes real or realistically simulated motion-corrupted images with varying severity levels [29]. |
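
Step 2 in the table above can be sketched with a simple k-space corruption routine. This is an illustrative assumption of how such an augmentation might look, not the method from the cited study: the function name `simulate_motion` and its parameters are hypothetical, only rigid in-plane translations are modelled, and the motion track is piecewise constant.

```python
import numpy as np

def simulate_motion(image, n_events=3, max_shift_px=4.0, rng=None):
    """Corrupt a 2D image with translations applied to blocks of k-space lines."""
    rng = np.random.default_rng() if rng is None else rng
    ny, nx = image.shape
    kspace = np.fft.fft2(image)
    ky = np.fft.fftfreq(ny)[:, None]  # cycles per pixel along rows
    kx = np.fft.fftfreq(nx)[None, :]  # cycles per pixel along columns
    # Pseudo-random acquisition order of the phase-encode lines (k-space rows).
    order = rng.permutation(ny)
    # Piecewise-constant motion track: the position changes at a few random times.
    change_points = np.sort(rng.choice(np.arange(1, ny), size=n_events, replace=False))
    shifts = np.zeros((ny, 2))
    current = np.zeros(2)  # the first block is acquired at the reference position
    start = 0
    for cp in list(change_points) + [ny]:
        shifts[order[start:cp]] = current
        current = rng.uniform(-max_shift_px, max_shift_px, size=2)
        start = cp
    # Fourier shift theorem: a translation (dy, dx) is a linear phase ramp in k-space.
    phase = np.exp(-2j * np.pi * (ky * shifts[:, :1] + kx * shifts[:, 1:]))
    return np.abs(np.fft.ifft2(kspace * phase))

# Toy phantom: a bright square on a dark background.
phantom = np.zeros((64, 64))
phantom[20:44, 24:40] = 1.0
corrupted = simulate_motion(phantom, n_events=3, rng=np.random.default_rng(0))
```

Varying `n_events` and `max_shift_px` gives a rough handle on artifact severity, which is useful when building test sets with mild, moderate, and severe corruption.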

Problem: Overfitting on a Small Medical Dataset

Symptoms: The model's training loss continues to decrease while validation loss stagnates or begins to increase, indicating the model is memorizing the training data rather than learning to generalize.

Solution: Systematically apply and evaluate a suite of data augmentation techniques.

| Step | Action | Description |

|---|---|---|

| 1 | Start with Basic Augmentations | Begin with simple geometric transformations. Studies have shown that even single techniques like random rotation can significantly boost performance, achieving AUCs up to 0.85 in classification tasks [51]. |

| 2 | Explore Deep Generative Models | For a more extensive data expansion, consider deep generative models like Generative Adversarial Networks (GANs) or Diffusion Models (DMs). These can generate highly realistic and diverse synthetic medical images that conform to the true data distribution, though they require more computational resources [52]. |

| 3 | Evaluate Rigorously | Always test the model trained on augmented data on a completely held-out test set. Use domain-specific metrics, such as Dice Similarity Coefficient (DSC) for segmentation or AUC for classification, to confirm genuine improvement [29] [51]. |
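
For the evaluation step, the Dice Similarity Coefficient for a pair of binary masks is 2|A ∩ B| / (|A| + |B|). A minimal NumPy sketch follows; the function name `dice_coefficient` and the convention of returning 1.0 for two empty masks are assumptions made for this example.

```python
import numpy as np

def dice_coefficient(pred, target):
    """Dice Similarity Coefficient between two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        # Both masks empty: treated as perfect agreement (a convention, not a rule).
        return 1.0
    intersection = np.logical_and(pred, target).sum()
    return float(2.0 * intersection / denom)

# Two 4-pixel masks that share one of their two foreground pixels.
example_dice = dice_coefficient([1, 1, 0, 0], [0, 1, 1, 0])
```

Report the score separately for clean and motion-corrupted test images, so that a gain on one subset cannot mask a loss on the other.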

Table 1: Impact of Data Augmentation on Model Performance under Motion Artifacts [29]

| Anatomical Region | Artifact Severity | Dice Score (Baseline) | Dice Score (Default Aug) | Dice Score (MRI-Specific Aug) |

|---|---|---|---|---|

| Proximal Femur | Severe | 0.58 ± 0.22 | 0.72 ± 0.22 | 0.79 ± 0.14 |

| Proximal Femur | Moderate | Data Not Provided | Data Not Provided | Data Not Provided |

| Proximal Femur | Mild | Data Not Provided | Data Not Provided | Data Not Provided |

Table 2: Performance of Different Augmentation Techniques in Prostate Cancer Classification [51]

| Augmentation Method | AUC (Shallow CNN) | AUC (Deep CNN) |

|---|---|---|

| None (Baseline) | Data Not Provided | Data Not Provided |

| Random Rotation | 0.85 | Data Not Provided |