Optimizing Denoising Pipelines for Task-Based fMRI: A Framework for Enhanced Signal Detection and Biomarker Development

Abstract

Task-based functional magnetic resonance imaging (tb-fMRI) is a powerful tool for probing brain function and individual differences in cognition. However, its signals are inherently noisy, contaminated by motion, physiological artifacts, and scanner noise, which can obscure true neural activity and limit the development of reliable biomarkers. This article provides a comprehensive guide for researchers and drug development professionals on optimizing denoising pipelines for tb-fMRI. We explore the foundational sources of noise and their impact on data quality, detail current methodological approaches from conventional preprocessing to advanced deep learning applications, present frameworks for pipeline optimization and troubleshooting, and finally outline rigorous validation and comparative techniques to ensure pipeline efficacy for both individual-level analysis and large-scale population studies.

Understanding the Noise: Foundational Challenges in Task-Based fMRI Data Quality

Troubleshooting Guide: Identifying and Correcting Common fMRI Artifacts

This guide addresses frequent challenges researchers face regarding noise and confounds in task-based fMRI, providing targeted solutions for optimizing denoising pipelines.

FAQ: Motion Artifacts

Q: What are the most effective strategies for mitigating head motion artifacts, especially in challenging populations?

Head motion is the largest source of error in fMRI studies, causing image misalignment and signal disruptions. Effective mitigation requires a multi-layered approach [1]:

- Prospective Methods: Subject immobilization with padding and straps is essential. Proper coaching and training prior to imaging significantly reduce motion.

- Retrospective Correction: This standard method aligns all functional volumes to a chosen reference volume by applying rigid-body transformations (three translations and three rotations) to minimize a cost function like the mean-squared difference [1].

- Advanced Denoising: Incorporate motion parameters as regressors in your general linear model (GLM). Techniques like ICA-AROMA are specifically designed to identify and remove motion-related components from the data [2] [3].

Q: How can I identify subjects or runs with excessive motion in my dataset?

Most fMRI analysis packages produce line plots of the translation and rotation parameters for each volume, allowing for visual inspection of abrupt changes [1]. Additionally, quality control metrics like Framewise Displacement (FD) can be calculated to quantify volume-to-volume changes in head position. Individual volumes whose FD exceeds a threshold (e.g., 0.2-0.5 mm) are often flagged for censoring (scrubbing), and runs with a high mean FD or too many censored volumes are candidates for exclusion.
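
As a minimal sketch of how FD can be computed, the snippet below sums the absolute volume-to-volume changes in the six realignment parameters, converting rotations to millimetres as arc length on a sphere. The 50 mm radius, the rotation unit (radians), the column order, and the 0.4 mm censoring threshold are assumptions made for illustration; check what your realignment tool actually outputs.

```python
import numpy as np

def framewise_displacement(motion, radius_mm=50.0):
    """Compute FD from a (T, 6) array of realignment parameters.

    Assumed column order: 3 translations in mm, then 3 rotations in radians.
    """
    motion = np.asarray(motion, dtype=float)
    # Absolute change in each parameter between consecutive volumes
    deltas = np.abs(np.diff(motion, axis=0))
    # Convert rotational change to mm of arc length on a sphere of radius_mm
    deltas[:, 3:] *= radius_mm
    fd = deltas.sum(axis=1)
    # The first volume has no predecessor, so its FD is set to 0
    return np.concatenate([[0.0], fd])

# Toy run: a 0.3 mm shift at volume 2 and a 0.01 rad rotation at volume 4
motion = np.zeros((5, 6))
motion[2:, 0] = 0.3
motion[4, 3] = 0.01
fd = framewise_displacement(motion)

# Flag volumes above an illustrative threshold for censoring
censor_mask = fd > 0.4
```

The resulting boolean mask can be turned into one spike regressor per flagged volume, or the run-level mean of `fd` can be compared against an exclusion criterion.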

FAQ: Physiological Artifacts

Q: How do cardiac and respiratory cycles confound the BOLD signal, and how can I correct for them?

Cardiac and respiratory processes introduce high-frequency fluctuations and spurious correlations that can obscure true neural activity [4]. These physiological artifacts manifest as rhythmic signal changes independent of the task.

- RETROICOR (Retrospective Image Correction): This method leverages concurrently recorded physiological data (pulse oximeter and respiratory belt) to model and remove cardiac and respiratory noise components from the fMRI time series [4]. It has been shown to augment the temporal signal-to-noise ratio (tSNR) [4].

- Data-Driven Methods: For studies without physiological recordings, component-based noise correction (CompCor) identifies noise sources from regions without BOLD signal, such as white matter and cerebrospinal fluid (CSF), and regresses them out [3].
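
To make the RETROICOR idea concrete, the sketch below builds a low-order Fourier basis (sine and cosine terms) from the cardiac and respiratory phase at each volume and regresses it out of a voxel time series. It assumes the phases have already been derived from the pulse oximeter and respiratory belt recordings; that step is not shown, and the phases, expansion order, and signal used here are synthetic placeholders.

```python
import numpy as np

def retroicor_regressors(phase, order=2):
    """Fourier expansion of a physiological phase (radians) sampled per volume."""
    phase = np.asarray(phase, dtype=float)
    cols = []
    for m in range(1, order + 1):
        cols.append(np.cos(m * phase))
        cols.append(np.sin(m * phase))
    return np.column_stack(cols)  # shape (T, 2 * order)

def regress_out(ts, regressors):
    """Remove the fitted nuisance contribution while keeping the mean."""
    X = np.column_stack([np.ones(len(ts)), regressors])
    beta, *_ = np.linalg.lstsq(X, ts, rcond=None)
    return ts - X[:, 1:] @ beta[1:]

# Synthetic example: phases are random here purely for illustration
rng = np.random.default_rng(0)
n_vols = 200
cardiac_phase = rng.uniform(0, 2 * np.pi, n_vols)
resp_phase = rng.uniform(0, 2 * np.pi, n_vols)
physio_noise = 2.0 * np.cos(cardiac_phase) + 1.0 * np.sin(2 * resp_phase)
ts = 100.0 + physio_noise + rng.normal(0, 0.1, n_vols)

X = np.hstack([retroicor_regressors(cardiac_phase),
               retroicor_regressors(resp_phase)])
cleaned = regress_out(ts, X)
```

In a task-fMRI analysis these regressors would normally enter the GLM alongside the task regressors rather than being removed in a separate step, so that shared variance is handled in a single model.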

Q: Does the order of applying physiological noise correction matter in a multi-echo fMRI pipeline?

A 2025 study evaluated this directly and found that the difference is minimal: applying RETROICOR to individual echoes (RTC_ind) and applying it to the composite multi-echo data (RTC_comp) both enhanced signal quality. The choice of acquisition parameters (e.g., multiband acceleration factor and flip angle) had a more notable impact on data quality than the correction order [4].

FAQ: Scanner-Related Artifacts

Q: What are the main scanner-related artifacts, and how do they affect my data?

Scanner-related artifacts arise from the hardware and physics of MRI acquisition [1]:

- Magnetic Field Inhomogeneity: Causes geometric distortions and signal loss, especially in regions near air-tissue interfaces (e.g., orbitofrontal cortex, temporal lobes).

- Acoustic Scanner Noise: The loud gradients can induce participant stress and motion, and may even elicit neural responses that confound the task-based BOLD signal [5].

- Ghosting and Eddy Currents: Result from imperfections in gradient performance and can manifest as duplicate images or intensity distortions [5].

Q: What advanced hardware solutions are emerging to combat these artifacts at the source?

Next-generation scanner hardware is being designed to fundamentally address these limitations. Key innovations include [6]:

- Ultra-High Performance Gradient Coils: New "head-only" asymmetric gradient coils achieve much higher slew rates and amplitudes, enabling shorter echo times (TE) and echo spacing. This reduces T2* signal decay, geometric distortion, and blurring.

- High-Density Receiver Coil Arrays: 64-channel and 96-channel receiver arrays provide a better signal-to-noise ratio (SNR) in the cerebral cortex compared to standard 32-channel arrays.

- Silent Acquisition Sequences: Novel sequences like SORDINO maintain a constant gradient amplitude while continuously changing gradient direction. This makes the acquisition virtually silent and highly resistant to motion and susceptibility artifacts, which is particularly beneficial for awake animal studies or sensitive human populations [5].

Quantitative Data on Denoising Efficacy

The following tables summarize empirical findings from recent studies on the impact of acquisition parameters and denoising techniques.

Table 1: Impact of Acquisition Parameters on Data Quality and RETROICOR Efficacy [4]

| Multiband Factor | Flip Angle | tSNR Improvement with RETROICOR | Key Findings |
|---|---|---|---|
| 4 & 6 | 45° | High | Most notable improvement in data quality; optimal balance. |
| 4 & 6 | 20° | Moderate | Benefits observed, but lower flip angle reduces signal. |
| 8 | 20° | Low | Highest acceleration degraded data quality; limited correction efficacy. |

Table 2: Comparison of Common Denoising Pipelines for Task-fMRI [3]

| Denoising Technique | Underlying Principle | Performance in Task-fMRI |
|---|---|---|
| FIX | Classifies and removes noise components from ICA using a trained classifier. | Optimal performance for noxious heat and auditory stimuli; best balance of noise removal and signal conservation. |
| ICA-AROMA | Identifies and removes motion-related components based on specific criteria. | Removes noise but may remove more signal of interest compared to FIX. |
| CompCor (aCompCor/tCompCor) | Regresses out noise from WM/CSF or high-variance areas. | Conserved less signal of interest compared to ICA-based methods. |

Experimental Protocols for Key Studies

Protocol 1: Evaluating RETROICOR in Multi-Echo fMRI

This protocol is adapted from a 2025 study evaluating physiological noise correction [4].

- Participants: 50 healthy adults (23 women, 27 men), aged 19-41.

- Scanner: Siemens Prisma 3T with a 64-channel head-neck coil.

- Data Acquisition:

  - Seven multi-echo EPI fMRI runs with varying parameters (see Table 1).

  - Key constant parameters: FOV = 192 mm, TEs = 17.00, 34.64, 52.28 ms.

  - Concurrent physiological monitoring: cardiac and respiratory signals.

- Processing & Analysis:

  - Two RETROICOR implementations tested: RTC_ind (on individual echoes) and RTC_comp (on composite data).

  - Primary Metrics: Temporal signal-to-noise ratio (tSNR), signal fluctuation sensitivity (SFS), and variance of residuals.
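
Of the metrics listed, tSNR is the simplest to compute: the temporal mean of each voxel divided by its temporal standard deviation. The sketch below illustrates that definition on synthetic data; it is not the cited study's code, and the array layout (time on the last axis) is an assumption.

```python
import numpy as np

def tsnr(data):
    """Temporal SNR: mean over time divided by standard deviation over time.

    data: array with time on the last axis, e.g. (X, Y, Z, T) or (voxels, T).
    Voxels with zero temporal variance are assigned a tSNR of 0.
    """
    data = np.asarray(data, dtype=float)
    mean = data.mean(axis=-1)
    std = data.std(axis=-1, ddof=1)
    return np.divide(mean, std, out=np.zeros_like(mean), where=std > 0)

# Synthetic voxels: baseline 1000 with noise SD 10, so tSNR is close to 100
rng = np.random.default_rng(1)
voxels = 1000.0 + rng.normal(0, 10, size=(4, 300))
tsnr_map = tsnr(voxels)
```

Comparing `tsnr_map` before and after a denoising step, voxel by voxel or averaged within a grey-matter mask, gives a quick view of how much temporal noise the step removed.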

Protocol 2: Benchmarking a Novel Silent fMRI Sequence (SORDINO)

This protocol is based on a 2025 preprint introducing a transformative fMRI technique [5].

- Benchmarking: SORDINO was compared against conventional GRE-EPI and ZTE on a 9.4T preclinical system.

- Key Sequence Innovation: Maintains constant total gradient amplitude while continuously changing gradient direction.

- Measured Advantages:

  - Acoustic Noise: Ultra-low slew rate (0.21 T/m/s vs. EPI's 1263 T/m/s), making it virtually silent.

  - Artifact Resistance: Inherently less susceptible to motion, ghosting, and susceptibility artifacts.

  - Sensitivity: Demonstrated robust sensitivity for mapping brain-wide resting-state connectivity in awake, behaving mice.

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Solutions for fMRI Noise Mitigation

| Item | Function / Relevance | Example/Note |
|---|---|---|
| RETROICOR | Algorithm for correcting physiological noise from cardiac and respiratory cycles. | Requires peripheral pulse oximeter and respiratory belt data [4]. |
| ICA-based Denoising (FIX/ICA-AROMA) | Software tools to automatically identify and remove motion and other artifacts from ICA components. | FIX showed optimal balance for task-fMRI; requires classifier training [3]. |
| CompCor | Data-driven method to estimate and regress noise from regions without BOLD signal. | Useful when physiological recordings are unavailable [3]. |
| High-Performance Gradient Coil | Scanner hardware that enables faster encoding, reducing distortion and signal blurring. | e.g., "Impulse" head-only coil (200 mT/m, 900 T/m/s slew rate) [6]. |
| High-Channel Count Receiver Coil | RF coil array that increases signal-to-noise ratio, particularly in the cerebral cortex. | 64-channel and 96-channel arrays provide ~30% higher cortical SNR vs. 32-channel [6]. |
| Silent fMRI Sequence | An acquisition sequence designed to operate with minimal acoustic noise. | e.g., SORDINO sequence for silent, motion-resilient imaging [5]. |

Impact of Noise on Signal Detection and Brain-Behaviour Correlations

Troubleshooting Guide: Frequently Asked Questions

How does measurement noise in behavioral data affect brain-behavior prediction models?

Low test-retest reliability in your behavioral measurements (phenotypes) systematically reduces out-of-sample prediction accuracy when linking brain imaging data to behavior. This occurs because measurement noise lowers the upper bound of identifiable effect sizes.

Evidence: A 2024 study demonstrated that every 0.2 drop in phenotypic reliability reduced prediction accuracy (R²) by approximately 25%. When reliability reached 0.5-0.6—common for many behavioral assessments—prediction accuracy halved. This reliability-accuracy relationship was consistent across large datasets including the UK Biobank and Human Connectome Project [7].

Troubleshooting Steps:

- Estimate Reliability: Calculate test-retest reliability (e.g., via Intraclass Correlation Coefficient (ICC)) for your behavioral measures before conducting brain-behavior analyses.

- Select Measures: Prioritize behavioral measures with high reliability (ICC > 0.8 is excellent, ICC > 0.6 is good) [7].

- Interpret Results Cautiously: Recognize that low prediction accuracy may stem from unreliable behavioral measures rather than a true absence of brain-behavior relationships [7].
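
For the reliability estimate in the first step, one common choice is ICC(2,1): a two-way random-effects, absolute-agreement, single-measurement ICC. The sketch below computes it from a subjects-by-sessions score matrix using the standard ANOVA mean squares. Whether ICC(2,1) or another ICC form is appropriate depends on your design, so treat the choice here as an assumption; the scores are made up.

```python
import numpy as np

def icc_2_1(scores):
    """ICC(2,1) from an (n_subjects, k_sessions) matrix of scores."""
    Y = np.asarray(scores, dtype=float)
    n, k = Y.shape
    grand = Y.mean()
    # Sums of squares for subjects (rows), sessions (columns), and residual
    ss_rows = k * np.sum((Y.mean(axis=1) - grand) ** 2)
    ss_cols = n * np.sum((Y.mean(axis=0) - grand) ** 2)
    ss_err = np.sum((Y - grand) ** 2) - ss_rows - ss_cols
    msr = ss_rows / (n - 1)              # between-subject mean square
    msc = ss_cols / (k - 1)              # between-session mean square
    mse = ss_err / ((n - 1) * (k - 1))   # residual mean square
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)

# Hypothetical behavioral scores: 5 participants tested in 2 sessions
behavior = np.array([
    [12.0, 13.0],
    [18.0, 17.0],
    [25.0, 27.0],
    [31.0, 30.0],
    [40.0, 38.0],
])
reliability = icc_2_1(behavior)
```

Because ICC(2,1) penalizes systematic session effects as well as random error, a measure with a practice effect between sessions will score lower here than under a consistency-type ICC.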

What is the optimal denoising technique for task-based fMRI data?

The optimal technique depends on your specific research context, particularly the nature of your task. However, evidence suggests that ICA-based methods, particularly FIX, often provide a superior balance between noise removal and signal conservation.

Evidence: A 2023 comparison of denoising techniques for task-based fMRI during noxious heat and non-noxious auditory stimulation found that FIX optimally conserved signals of interest while removing noise. It outperformed CompCor-based methods and ICA-AROMA in conserving signal, especially for tasks that may induce global physiological changes [8].

Performance Comparison of Common Denoising Techniques:

| Technique | Type | Key Principle | Best For | Considerations |
|---|---|---|---|---|
| FIX [9] [8] | ICA-based | Classifier identifies & removes noise components from ICA decomposition. | Task-fMRI; datasets with physiological noise; protocols similar to HCP. | Requires training a classifier on your specific dataset for optimal results. |
| ICA-AROMA [8] [10] | ICA-based | Uses pre-defined spatial and temporal features to identify motion-related noise. | Resting-state and task-fMRI; quick implementation without training. | Less customizable than FIX; may be less effective for non-motion noise. |
| CompCor (aCompCor/tCompCor) [8] [11] | CompCor-based | Derives noise regressors from Principal Component Analysis (PCA) of signals in noise regions (e.g., white matter, CSF). | General-purpose denoising. | May remove less noise than ICA-based methods in some task contexts [8]. |
| GLMdenoise [12] | Data-driven | Automatically derives noise regressors via PCA from voxels unrelated to the task paradigm. | Event-related designs with multiple runs/conditions. | Data-intensive; requires multiple runs for cross-validation. |
| DeepCor [11] | Deep Learning | Uses contrastive autoencoders to disentangle and remove noise from single-participant data. | Enhancing BOLD signal response; a modern alternative to existing methods. | Newer method; outperformed CompCor by 215% in face-stimulus response [11]. |

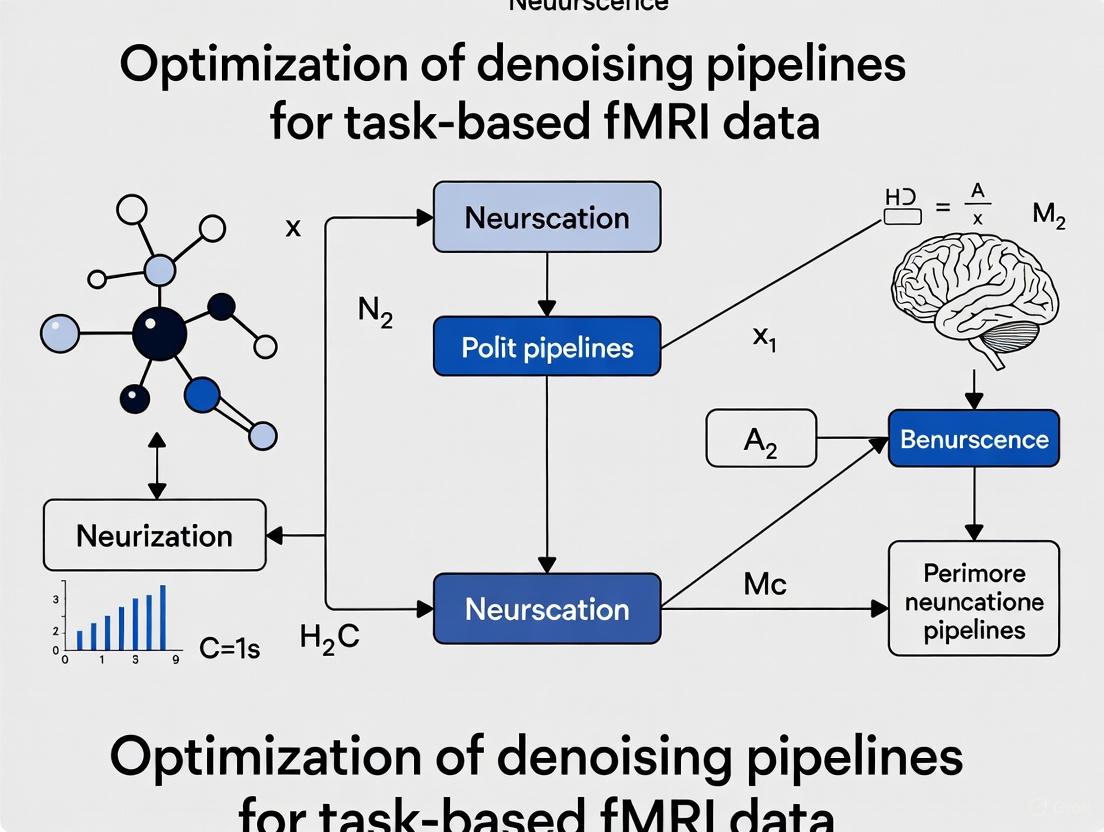

Decision Workflow: The diagram below outlines a protocol for selecting a denoising strategy.

How do noise correlations in neural populations impact behavioral readout?

The impact of noise correlations (trial-to-trial co-variations in neural activity) is more complex than previously thought. While they typically reduce the amount of sensory information encoded by a neural population, they can paradoxically enhance the accuracy of behavioral choices by improving information consistency.

Evidence: Research on mouse posterior parietal cortex during perceptual discrimination tasks found that both across-neuron and across-time noise correlations were higher during correct trials than incorrect trials, even though these same correlations limited the total encoded stimulus information. This is because behavioral choices depend not only on the total information but also on its consistency across neurons and time. Correlations enhance this consistency, facilitating better readout by downstream circuits [13].

Troubleshooting Implications:

- When analyzing population coding, do not assume that reducing all noise correlations will improve behavioral decoding.

- Consider analytical approaches that account for or leverage the structure of noise correlations to improve readout accuracy.

Does the optimal denoising strategy change for clinical populations with brain lesions?

Yes, the nature of the brain disease significantly influences the optimal denoising strategy. What works for healthy controls or one patient group may not be optimal for another.

Evidence: A 2025 study found that for patients with non-lesional encephalopathic conditions, pipelines incorporating ICA-AROMA were most effective. In contrast, for patients with lesional conditions (e.g., glioma, meningioma), pipelines incorporating anatomical Component Correction (CC) yielded the best results, even at comparable levels of head motion [10].

Troubleshooting Steps:

- Identify Your Cohort: Classify your data as from healthy controls, lesional patients, or non-lesional patients.

- Tailor Your Pipeline: For lesional data, prioritize CompCor-based denoising. For non-lesional data with motion, prioritize ICA-AROMA.

- Validate Effectiveness: Use quality control metrics like QC-FC correlations and RSN identifiability to confirm the chosen pipeline's performance on your specific data [10].
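
As an illustration of the QC-FC check, the sketch below correlates each functional connectivity edge with subject-level mean framewise displacement across subjects. After effective denoising, the distribution of these correlations should sit close to zero. The data are synthetic, and the motion contamination is injected artificially to show the contrast; variable names are hypothetical.

```python
import numpy as np

def qc_fc(fc_edges, mean_fd):
    """Pearson correlation between each edge and mean FD across subjects.

    fc_edges: (n_subjects, n_edges) connectivity values
    mean_fd:  (n_subjects,) mean framewise displacement per subject
    """
    fc = np.asarray(fc_edges, dtype=float)
    fd = np.asarray(mean_fd, dtype=float)
    fc_c = fc - fc.mean(axis=0)
    fd_c = fd - fd.mean()
    denom = np.sqrt((fc_c ** 2).sum(axis=0) * (fd_c ** 2).sum())
    return (fd_c @ fc_c) / denom

rng = np.random.default_rng(2)
n_subjects, n_edges = 60, 500
mean_fd = rng.uniform(0.05, 0.5, n_subjects)

# "Clean" edges are unrelated to motion; "contaminated" edges scale with FD
clean_fc = rng.normal(0, 1, (n_subjects, n_edges))
contaminated_fc = clean_fc + 8.0 * mean_fd[:, None]

r_clean = qc_fc(clean_fc, mean_fd)
r_contaminated = qc_fc(contaminated_fc, mean_fd)

# Summary often reported per pipeline: median absolute QC-FC correlation
summary = {
    "clean": float(np.median(np.abs(r_clean))),
    "contaminated": float(np.median(np.abs(r_contaminated))),
}
```

Running the same summary on the outputs of each candidate pipeline gives a like-for-like comparison of residual motion dependence.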

The Scientist's Toolkit: Research Reagent Solutions

This table lists key software tools and methods for denoising fMRI data, which form the essential "reagents" for a robust analysis pipeline.

| Tool/Method | Primary Function | Key Features | Application Context |
|---|---|---|---|
| FIX [9] [8] | Automated ICA-based noise removal | Uses a classifier to label noise components; high accuracy when trained on specific data. | HCP-style data; task-fMRI with physiological noise; high-quality resting-state. |
| ICA-AROMA [8] [10] | Automated removal of motion artifacts from ICA | No training required; uses pre-defined features to identify motion components. | Resting-state and task-fMRI; quick setup; data with significant head motion. |
| CompCor [8] [11] | Noise regression from physiological compartments | Derives noise regressors from PCA of WM and CSF signals (aCompCor) or high-variance voxels (tCompCor). | General-purpose denoising; when physiological recordings are unavailable. |
| GLMdenoise [12] | Data-driven denoising within GLM framework | Automatically derives noise regressors from "unmodeled" voxels; optimizes via cross-validation. | Event-related designs with multiple runs; studies with many conditions. |
| DeepCor [11] | Denoising using deep generative models | Disentangles noise from signal using contrastive autoencoders; applicable to single subjects. | Enhancing BOLD signal response; a modern alternative to existing methods. |
| FSL MELODIC [9] | ICA decomposition of fMRI data | Performs single-subject ICA to decompose data into independent components for manual or automated cleaning. | Foundational step for ICA-based denoising (e.g., FIX). |

Frequently Asked Questions (FAQs)

Q1: Why is the choice of a specific preprocessing pipeline so critical for fMRI results?

The choice of preprocessing pipeline is critical because different pipelines can lead to vastly different conclusions from the same dataset. This extensive flexibility in analysis workflows can substantially elevate the rate of false-positive findings. One systematic evaluation revealed that inappropriate pipeline choices can produce results that are not only misleading but systematically so, with the majority of pipelines failing at least one key criterion for reliability and validity [14]. Standardizing preprocessing across studies helps eliminate between-study differences caused solely by data-processing choices, ensuring that reported results reflect the true effect of the study design rather than analytical variability [15].

Q2: What are the key advantages of using fMRIPrep for preprocessing?

fMRIPrep offers several key advantages:

- Robustness: It automatically adapts preprocessing steps based on the input dataset, providing high-quality results independently of scanner make, scanning parameters, or the presence of additional correction scans [16] [17].

- Ease of Use: By relying on the Brain Imaging Data Structure (BIDS), it reduces manual parameter input to a minimum, allowing for fully automatic execution [16] [15].

- Transparency: fMRIPrep follows a "glass box" philosophy, providing visual reports for each subject that detail the accuracy of critical processing steps. This helps researchers understand the process and decide which subjects to include in group-level analysis [16].

- Reproducibility: As a standardized tool, it addresses reproducibility concerns inherent in the established, often ad-hoc, protocols for fMRI preprocessing [15].

Q3: I am using fMRIPrep. Why might my subsequent analysis in another software package (like C-PAC) fail?

This is a common integration challenge. If your analysis tool cannot find necessary files output by fMRIPrep, such as the desc-confounds_timeseries file, check the following:

- Data Configuration: Ensure your `data_config` file correctly points to the fMRIPrep output directory. The `derivatives_dir` field should specify the path containing the subject subdirectories [18].

- Pipeline Configuration: Use a preconfigured pipeline file designed for fMRIPrep ingress (e.g., `pipeline_config_fmriprep-ingress.yml`). These files have the necessary settings, such as enabling `outdir_ingress`, to properly pull in fMRIPrep outputs and turn off redundant preprocessing steps [18].

Q4: What are the current limitations of fMRIPrep that I should be aware of?

Researchers should be mindful of several limitations:

- Data Type: fMRIPrep is designed for BOLD fMRI and does not preprocess non-BOLD fMRI data (e.g., arterial spin labeling) [15].

- Species: It currently does not support nonhuman species, though extensions for primates and rodents are being explored [15].

- Anatomical Abnormalities: Data from individuals with gross structural abnormalities or acquisitions with a very narrow field of view should be used with caution, as spatial normalization and co-registration may be suboptimal [15].

- Scope: fMRIPrep performs minimal preprocessing and does not include analysis-tailored steps like spatial-temporal filtering. Its outputs are designed to be fed into specialized downstream analysis tools [15].

Troubleshooting Guides

Issue: High Motion Artifacts in Resting-State Data

Problem: Resting-state fMRI data is notoriously susceptible to motion artifacts, where even small movements can introduce spurious correlations that threaten the validity of your results [19].

Solution:

- Preprocessing Mitigation: Utilize the noise components extracted by fMRIPrep. The confounds table (`*_desc-confounds_timeseries.tsv`) includes multiple motion-related regressors. Incorporate these into your statistical model to regress out motion effects.

- Component-Based Correction: Use a method like `CompCor` (a component-based noise correction method), which is integrated into fMRIPrep and helps remove noise from physiological sources [15] [19].

- Advanced Denoising: Consider modern deep learning-based denoising tools like `DeepCor` for challenging cases. One study found that DeepCor enhanced BOLD signal responses to stimuli, outperforming CompCor by 215% in a face-stimulus task [11].

- Quality Control: Always consult the visual report generated by fMRIPrep for each subject to assess the extent of motion and the effectiveness of correction.
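
A minimal sketch of the first two steps is shown below: read an fMRIPrep-style confounds table with pandas and select the six rigid-body motion parameters plus the first few anatomical CompCor components as nuisance regressors. The column names follow fMRIPrep's usual naming (`trans_x`, `rot_x`, `a_comp_cor_00`, and so on), but verify them against your own output, since available columns vary with fMRIPrep version and options. The table here is generated in memory as a stand-in for a real file, and the number of components kept is an arbitrary choice.

```python
import io
import numpy as np
import pandas as pd

MOTION_COLS = ["trans_x", "trans_y", "trans_z", "rot_x", "rot_y", "rot_z"]

def select_confounds(confounds, n_compcor=5):
    """Pick motion parameters and the first n anatomical CompCor components."""
    compcor_cols = [c for c in confounds.columns
                    if c.startswith("a_comp_cor_")][:n_compcor]
    selected = confounds[MOTION_COLS + compcor_cols]
    # Some confound columns have no value for the first volume; fill with 0
    return selected.fillna(0.0)

# Build a toy TSV in memory as a stand-in for *_desc-confounds_timeseries.tsv
rng = np.random.default_rng(3)
n_vols = 50
toy = pd.DataFrame(
    rng.normal(size=(n_vols, 8)),
    columns=MOTION_COLS + ["a_comp_cor_00", "a_comp_cor_01"],
)
toy["framewise_displacement"] = np.abs(rng.normal(0.1, 0.05, n_vols))
toy.loc[0, "framewise_displacement"] = np.nan  # undefined for first volume
buffer = io.StringIO()
toy.to_csv(buffer, sep="\t", index=False, na_rep="n/a")
buffer.seek(0)

# Missing values are written as "n/a" in the TSV
confounds = pd.read_csv(buffer, sep="\t", na_values="n/a")
nuisance = select_confounds(confounds, n_compcor=2)
```

The resulting `nuisance` table can be passed as additional columns of the first-level design matrix in whichever GLM software you use.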

Issue: Choosing a Pipeline for Functional Connectomics

Problem: Constructing functional brain networks from preprocessed data involves many choices (e.g., parcellation, connectivity definition, global signal regression), leading to a "combinatorial explosion" of possible pipelines. An uninformed choice can yield misleading and unreliable results [14].

Solution: Follow a systematic, criteria-based selection.

- Define Your Criteria: A suitable pipeline should:

  - Minimize spurious differences: Show high test-retest reliability across short and long-term delays [14].

  - Maximize biological sensitivity: Be sensitive to individual differences and experimental effects of interest [14].

  - Be generalizable: Perform well across datasets with different acquisition parameters and preprocessing methods [14].

- Consult Systematic Evaluations: Refer to large-scale studies that have evaluated pipelines against these criteria. One such study evaluated 768 pipelines and identified a subset that consistently satisfied all criteria [14].

- Use an Optimal Pipeline: The table below summarizes key steps and some of the optimal choices identified in a recent systematic evaluation for network construction [14].

Table 1: Optimal Pipeline Choices for Functional Connectomics

| Pipeline Step | Description | Optimal Choices (Example) |
|---|---|---|
| Global Signal Regression (GSR) | Controversial step to remove global signal | Pipelines identified for both with and without GSR [14]. |
| Brain Parcellation | Definition of network nodes | Anatomical landmarks; functional characteristics; multimodal features [14]. |
| Number of Nodes | Granularity of the parcellation | ~100, 200, or 300-400 regions [14]. |
| Edge Definition | How to quantify connectivity between nodes | Pearson correlation or Mutual Information [14]. |
| Edge Filtering | How to sparsify the network | Data-driven methods like Efficiency Cost Optimisation (ECO) [14]. |
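
To illustrate the edge-definition and edge-filtering steps, the sketch below builds a Pearson correlation matrix from parcellated time series and then keeps only the strongest edges at a chosen density. The simple proportional threshold is a stand-in for illustration only; it is not an implementation of ECO or of any specific method from the cited evaluation, and the density value and data are arbitrary.

```python
import numpy as np

def pearson_connectivity(timeseries):
    """Node-by-node Pearson correlation from a (T, n_nodes) array."""
    fc = np.corrcoef(timeseries, rowvar=False)
    np.fill_diagonal(fc, 0.0)  # ignore self-connections
    return fc

def threshold_by_density(fc, density):
    """Keep the strongest edges (by absolute weight) at the given density."""
    n = fc.shape[0]
    iu = np.triu_indices(n, k=1)
    weights = np.abs(fc[iu])
    n_keep = int(round(density * weights.size))
    keep = np.argsort(weights)[::-1][:n_keep]
    adj = np.zeros_like(fc)
    adj[iu[0][keep], iu[1][keep]] = fc[iu][keep]
    return adj + adj.T  # restore symmetry

# Synthetic parcellated data: 200 volumes, 10 regions
rng = np.random.default_rng(4)
timeseries = rng.normal(size=(200, 10))
fc = pearson_connectivity(timeseries)
sparse_fc = threshold_by_density(fc, density=0.2)
```

Binarizing `sparse_fc` with `sparse_fc != 0` gives the unweighted variant of the same network.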

Issue: Reconciling Conflicting Connectivity Findings (e.g., in ASD)

Problem: In conditions like Autism Spectrum Disorder (ASD), literature often reports conflicting findings of both hyper-connectivity and hypo-connectivity, making it difficult to draw clear conclusions [20].

Solution: Employ advanced network comparison techniques that can capture complex, mesoscopic-scale patterns.

- Move Beyond Single Features: Avoid relying on isolated network features. Instead, use methods that capture the network's organization as a whole [14] [20].

- Use Contrast Subgraphs: Leverage algorithms that extract "contrast subgraphs"—subnetworks that are maximally different in connectivity between two groups. This approach can identify specific sets of regions that are simultaneously hyper-connected in one group and hypo-connected in the other, reconciling conflicting reports within a single framework [20].

- Validate Statistically: Ensure the robustness of identified subgraphs using techniques like bootstrapping and Frequent Itemset Mining to establish statistical significance [20].

Experimental Protocols & Methodologies

Protocol 1: Standardized Preprocessing with fMRIPrep

This protocol outlines how to integrate fMRIPrep into a task-based fMRI investigation workflow [15].

Materials/Input:

- Dataset: fMRI data organized in BIDS format [15].

- Software: fMRIPrep container (Docker/Singularity) [15].

- Computing Environment: High-performance computing (HPC) cluster, cloud, or powerful personal computer [15].

Method:

- Data Validation: Use the BIDS Validator to ensure your dataset is compliant [15].

- Quality Assessment (Preprocessing): Run MRIQC on the raw data to assess initial quality and help specify clear exclusion criteria [15].

- Execute fMRIPrep: Run the fMRIPrep pipeline. It will automatically:

  - Perform motion correction, field unwarping, normalization, and bias field correction.

  - Combine tools from FSL, ANTs, FreeSurfer, and AFNI.

  - Generate a visual report for each subject.

- Quality Assurance (Postprocessing): Meticulously review the fMRIPrep visual reports to identify any outliers or processing inaccuracies [16] [15].

- Statistical Analysis: Feed the preprocessed data (now in BIDS-Derivatives format) into your preferred analysis software (e.g., FSL, SPM) for first and second-level modeling [15].

Protocol 2: Systematic Pipeline Evaluation for Network Analysis

This protocol is derived from a systematic framework for evaluating end-to-end pipelines for constructing functional brain networks from resting-state fMRI [14].

Materials/Input:

- Preprocessed fMRI Data: Data that has undergone initial preprocessing (e.g., via fMRIPrep).

- Test-Retest Datasets: Multiple independent datasets with repeated scans from the same individuals across different time intervals (e.g., minutes, weeks, months) [14].

- Evaluation Framework: A set of criteria and metrics for comparison (e.g., Portrait Divergence for topological dissimilarity) [14].

Method:

- Define Pipeline Dimensions: Systematically combine choices across key steps:

  - With/without Global Signal Regression.

  - Multiple brain parcellation schemes and node numbers.

  - Edge definitions (Pearson correlation, Mutual Information).

  - Multiple edge-filtering approaches and use of binary/weighted networks.

- Evaluate Test-Retest Reliability: For each pipeline, calculate the topological similarity (using a measure like Portrait Divergence) between networks from the same individual across repeated scans. Pipelines that minimize spurious discrepancies are preferred [14].

- Evaluate Biological Sensitivity: Test each pipeline's sensitivity to meaningful variables like inter-subject differences and experimental effects (e.g., response to pharmacological intervention) [14].

- Identify Optimal Pipelines: Select pipelines that consistently satisfy all criteria (high reliability and high sensitivity) across the different test-retest datasets [14].
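
The first step of this method, systematically combining choices across pipeline dimensions, can be sketched with a Cartesian product. The option lists below are illustrative placeholders and are deliberately smaller than the set evaluated in the cited study, so the resulting count does not match the 768 pipelines reported there.

```python
from itertools import product

# Hypothetical option lists for each pipeline dimension
dimensions = {
    "gsr": [True, False],
    "parcellation": ["anatomical", "functional", "multimodal"],
    "n_nodes": [100, 200, 300],
    "edge_definition": ["pearson", "mutual_information"],
    "edge_filter": ["density_threshold", "eco"],
    "edge_weights": ["binary", "weighted"],
}

# Every combination of options defines one candidate pipeline
pipelines = [
    dict(zip(dimensions, combo))
    for combo in product(*dimensions.values())
]

n_pipelines = len(pipelines)  # 2 * 3 * 3 * 2 * 2 * 2 combinations
first_pipeline = pipelines[0]
```

Each dictionary in `pipelines` can then be passed to a network-construction routine, with reliability and sensitivity scores recorded per entry for the later selection steps.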

The Scientist's Toolkit

Table 2: Essential Tools for fMRI Preprocessing and Denoising Analysis

| Tool / Resource | Function / Purpose | Application in Context |
|---|---|---|
| fMRIPrep | A robust, automated pipeline for minimal preprocessing of fMRI data. | Standardizes the initial preprocessing steps (motion correction, normalization, etc.), providing a consistent foundation for all subsequent analyses [16] [15]. |
| BIDS (Brain Imaging Data Structure) | A standard for organizing and describing neuroimaging datasets. | Enables fMRIPrep and other tools to automatically understand dataset structure and metadata, facilitating reproducibility and automated processing [15]. |
| DeepCor | A deep learning-based denoising method using contrastive autoencoders. | Advanced denoising for single-participant data; shown to enhance BOLD signal response to stimuli, outperforming other methods in specific tasks [11]. |
| CompCor | A component-based noise correction method for BOLD fMRI. | A widely used strategy for denoising by removing principal components from noise-prone regions (e.g., white matter, CSF) [11] [19]. |
| Contrast Subgraph Analysis | A network comparison technique to find maximally different subgraphs between groups. | Helps reconcile conflicting connectivity findings (e.g., hyper-/hypo-connectivity in ASD) by identifying nuanced, mesoscopic-scale patterns [20]. |
| Nipype | A Python-based workflow engine for integrating neuroimaging software. | The foundation of fMRIPrep, allowing it to combine tools from FSL, ANTs, FreeSurfer, and AFNI into a single, cohesive pipeline [16] [15]. |

Troubleshooting Guide: Frequently Asked Questions

Q1: My fMRI results are inconsistent across repeated scans. Which specific metrics should I use to diagnose reproducibility issues, and what are the benchmark values I should aim for?

Reproducibility can be broken down into several measurable components. To diagnose issues, you should calculate the following key metrics:

- Test-Retest Reliability: Use the Intra-class Correlation Coefficient (ICC) to measure the consistency of measurements from the same subject across different scanning sessions. ICC values are interpreted as follows: poor (< 0.4), fair (0.4 - 0.59), good (0.6 - 0.74), and excellent (≥ 0.75) [21]. For example, in graph metrics of fMRI networks, clustering coefficient and global efficiency have shown high ICC scores (0.86 and 0.83, respectively), while degree has been reported to have a low ICC (0.29) [21].

- Replicability: This measures whether a finding can be reproduced in an entirely independent dataset. It is often quantified as the proportion of significant findings from one study that are successfully detected in another [22]. One study on R-fMRI metrics found replicability for between-subject sex differences to be below 0.3, highlighting that even moderately reliable indices can replicate poorly in new datasets [22].

Q2: I am using a denoising pipeline, but my model's power to predict individual traits or task states is still low. How can I accurately assess if my pipeline is harming my signal?

Low predictive power can stem from the pipeline removing meaningful biological signal. To assess this, evaluate the following:

- Benchmark against Test-Retest Reliability: A powerful validation is to compare your model's prediction accuracy against the test-retest reliability of the task-fMRI measure itself. State-of-the-art models using resting-state fMRI to predict task-evoked activity have achieved prediction accuracy on par with the test-retest reliability of repeated task-fMRI scans [23] [24]. If your model's accuracy is significantly lower, your pipeline may be overly aggressive.

- Evaluate Discriminability Separately: Use decoding accuracy (e.g., from a linear support vector machine) to measure how well brain patterns can distinguish between different task states or groups [25] [26]. If discriminability is low, check if your feature selection method is appropriate. Note that discrimination-based feature selection (DFS) often provides better distinguishing power, while reliability-based feature selection (RFS) yields more stable features across different samples [26].

Q3: My sample size is limited. How does this directly impact the reliability and validity of my findings, and is there a quantitative guideline?

Small sample sizes are a major threat to reproducibility and validity in fMRI research. The impact is quantifiable:

- Positive Predictive Value (PPV): This is the likelihood that a significant finding reflects a true effect. One study demonstrated that with small sample sizes (e.g., below 80 subjects, or 40 per group), the PPV for between-subject sex differences in R-fMRI can be very low (< 26%) [22]. This means most of your significant results are likely false positives.

- Sensitivity (Power): The ability to detect a true effect. The same study found that with small sample sizes (< 80), sensitivity plummeted to less than 2% [22].

- Recommendation: The research indicates that at least 80 subjects (40 per group) are the minimum needed to achieve reasonable PPV and sensitivity for group comparisons [22]. Furthermore, for reliable estimation of functional connectivity gradients, longer time series (at least 20 minutes) are preferable [27].
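The dependence of PPV on power can be illustrated with the standard relation PPV = (power × prior) / (power × prior + alpha × (1 − prior)). The sketch below is only an illustration: the prior probability of a true effect and the alpha level are assumed values, and [22] estimated PPV empirically rather than with this formula.

```python
def positive_predictive_value(power, alpha, prior):
    """Share of significant findings that are true effects."""
    true_pos = power * prior            # true effects that reach significance
    false_pos = alpha * (1.0 - prior)   # null effects that reach significance
    return true_pos / (true_pos + false_pos)

# Assumed values for illustration only (not taken from [22]).
ALPHA = 0.05
PRIOR = 0.10

low_power_ppv = positive_predictive_value(power=0.02, alpha=ALPHA, prior=PRIOR)
high_power_ppv = positive_predictive_value(power=0.80, alpha=ALPHA, prior=PRIOR)
print(f"PPV at 2% power:  {low_power_ppv:.3f}")
print(f"PPV at 80% power: {high_power_ppv:.3f}")
```

Under these assumptions, raising power is what moves PPV; alpha and the prior are held fixed.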

Quantitative Data on Evaluation Metrics

The tables below consolidate key quantitative findings from the literature to serve as benchmarks for your own experiments.

Table 1: Benchmark Values for Reproducibility of Common fMRI Metrics

| Metric / Approach | Reproducibility Measure | Reported Value | Context & Notes |

|---|---|---|---|

| Graph Metrics [21] | Intra-class Correlation (ICC) | Clustering Coefficient: 0.86; Global Efficiency: 0.83; Path Length: 0.79; Local Efficiency: 0.75; Degree: 0.29 | Calculated on fMRI data from healthy older adults; degree showed low reproducibility. |

| R-fMRI Indices [22] | Test-Retest Reliability (ICC) | ~0.68 (e.g., for ALFF in sex differences) | Measured for supra-threshold voxels using permutation test with TFCE. |

| R-fMRI Indices [22] | Replicability | ~0.25 (for ALFF in between-subject sex differences); ~0.49 (for ALFF in within-subject conditions) | Replicability measures performance in totally independent datasets. |

| Connectivity Estimates [28] | Test-Retest Reproducibility | Structural Connectivity (SC): CV = 2.7%; Functional Connectivity (FC): CV = 5.1% | Lower Coefficient of Variation (CV) indicates higher reproducibility. SC was most reproducible. |

Table 2: Impact of Experimental Parameters on Metric Performance

| Parameter | Impact on Metrics | Recommendation |

|---|---|---|

| Sample Size [22] | Small samples (n < 80) drastically reduce Sensitivity (Power) to < 2% and Positive Predictive Value (PPV) to < 26%. | Use a sample size of at least 80 subjects (40 per group) for group comparisons to ensure PPV > 50% and sufficient power. |

| Feature Selection [26] | DFS: Better classification accuracy for distinguishing brain states. RFS: Higher stability across different subsets of subjects/features. | Choose DFS for maximum discriminability. Choose RFS for higher feature stability and robustness. |

| Time-Series Length [27] | Longer data improves the reliability of functional connectivity gradients. | Acquire at least 20 minutes of resting-state fMRI data per subject for more reliable connectivity gradient estimates. |

Detailed Experimental Protocols

To ensure the reliability of your own findings, you can implement these established experimental methodologies.

Protocol 1: Assessing Test-Retest Reliability with Intra-class Correlation (ICC)

- Data Acquisition: Collect repeated fMRI datasets from the same participants. This typically involves two scanning sessions, which can be on the same day (with a short break) or days/weeks apart, depending on the research question [22] [21].

- Preprocessing & Analysis: Run your denoising pipeline and calculate the fMRI metric of interest (e.g., ALFF, ReHo, graph metrics, functional connectivity) for each session.

- ICC Calculation: Use a statistical software package (e.g., R, SPSS) to compute the ICC. The ICC for absolute agreement is often appropriate for test-retest reliability. The formula models the ratio of between-subject variability to the total variability (which includes within-subject variability) [22] [21].

- Interpretation: Compare your calculated ICC values to established benchmarks (see Table 1) and qualitative thresholds (poor, fair, good, excellent).
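The ICC calculation step above can be sketched in plain Python. This is a minimal illustration of one common absolute-agreement form, ICC(2,1) (two-way random effects, single measurement), computed from the mean squares of a subjects × sessions table. The function name and the example values are hypothetical; an established statistics package is preferable for real analyses.

```python
def icc_absolute_agreement(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    scores: list of rows, one row per subject, one column per session.
    """
    n = len(scores)        # number of subjects
    k = len(scores[0])     # number of sessions
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Partition the total sum of squares into subject, session, and residual parts.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)                 # between-subject mean square
    ms_cols = ss_cols / (k - 1)                 # between-session mean square
    ms_error = ss_error / ((n - 1) * (k - 1))   # residual mean square

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )

# Hypothetical metric values for 5 subjects scanned in 2 sessions.
example = [[0.42, 0.45], [0.61, 0.58], [0.35, 0.40], [0.70, 0.66], [0.52, 0.55]]
print(f"ICC(2,1) = {icc_absolute_agreement(example):.2f}")
```

Because the session mean square enters the denominator, a systematic shift between sessions lowers this ICC, which is the behaviour wanted for absolute agreement.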

Protocol 2: Evaluating Discriminability via Multi-Voxel Pattern Analysis (MVPA)

- Stimulus Presentation: Conduct an fMRI experiment where participants are presented with stimuli from different categories (e.g., "old people" vs. "young people" faces) in a randomized, event-related design [25].

- Preprocessing & Feature Extraction: Apply your denoising pipeline. Then, for each trial, extract the brain activity pattern (a vector of fMRI signal values) from a pre-defined set of voxels. Informative voxels can be selected using a pattern search algorithm or a whole-brain approach.

- Classifier Training & Testing: Use a machine learning classifier, such as a Linear Support Vector Machine (SVM), to learn the mapping between brain patterns and stimulus categories. Employ a cross-validation scheme (e.g., 10-fold) to train the classifier on a subset of data and test its accuracy on the held-out data [25] [26].

- Metric Calculation: The decoding accuracy (percentage of correctly classified trials in the test set) is the primary measure of discriminability. Accuracy significantly above chance level (e.g., 50% for two categories) indicates that the brain patterns contain information that distinguishes the conditions.
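To make "significantly above chance" concrete, the sketch below computes a one-sided binomial p-value for a decoding accuracy. It is a simplified check under an assumption of independent test trials, which cross-validated predictions only approximate. Permutation testing is a common alternative. The trial counts are hypothetical.

```python
from math import comb

def binomial_p_value(n_correct, n_trials, chance=0.5):
    """One-sided probability of >= n_correct hits if the classifier guesses at chance."""
    return sum(
        comb(n_trials, k) * chance ** k * (1.0 - chance) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )

# Hypothetical result: 65 of 100 held-out trials classified correctly.
accuracy = 65 / 100
p_value = binomial_p_value(65, 100)
print(f"accuracy = {accuracy:.2f}, one-sided p = {p_value:.4f}")
```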

Visualization of Relationships and Workflows

The following diagram illustrates the logical relationship between key concepts, experimental parameters, and the evaluation metrics discussed in this guide.

Figure 1. Logical framework connecting denoising pipeline optimization to core evaluation metrics, their primary measures, and key influencing factors. Dashed lines indicate cross-cutting influences.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools and Resources for fMRI Denoising and Evaluation

| Tool / Resource | Function / Role | Example Use Case |

|---|---|---|

| Threshold-Free Cluster Enhancement (TFCE) [22] | A strict multiple comparison correction method that enhances cluster-like structures without setting an arbitrary cluster-forming threshold. | Found to provide the best balance between controlling family-wise error rate and maintaining test-retest reliability/replicability [22]. |

| Dual Regression [23] [29] | A technique used to extract subject-specific temporal and spatial features from functional data based on a set of group-level spatial maps. | Used in functional connectivity analysis (e.g., with ICA) and as a basis for predicting individual task-evoked activity from resting-state fMRI [29]. |

| Stochastic Probabilistic Functional Modes (sPROFUMO) [23] [24] | A method for extracting more informative "functional modes" (spatial maps) from resting-state fMRI data than older approaches. | Used as features in models to predict individual task-fMRI activity, outperforming the dual-regression approach [23] [24]. |

| Permutation Test with TFCE [22] | A non-parametric statistical testing method that combines permutation testing with TFCE for robust inference. | Recommended for achieving high test-retest reliability and replicability while controlling for false positives in R-fMRI studies [22]. |

| Linear Support Vector Machine (SVM) [25] [26] | A simple yet powerful classifier for multi-voxel pattern analysis (MVPA). | Used to decode cognitive states or categories from fMRI brain patterns, providing a measure of discriminability [25]. |

From Conventional to AI-Driven Denoising: A Toolkit for Modern fMRI Analysis

Frequently Asked Questions (FAQs)

Q1: What is the primary goal of these preprocessing steps? The primary goal is to remove non-neuronal noise from the fMRI data to improve the detection of true BOLD signals related to brain activity. This involves correcting for head motion, removing slow signal drifts, and regressing out noise from physiological processes, which collectively enhance the validity and reliability of functional connectivity estimates and brain-behavior associations [30] [2] [1].

Q2: Should I perform slice-timing correction before or after motion correction? The optimal order is not universally agreed upon. Slice-timing correction can be performed either before or after motion correction, and the best choice may depend on factors like the expected degree of head motion in your dataset and the slice acquisition order [1].

Q3: Is global signal regression (GSR) recommended for denoising? GSR is a controversial step. Some studies indicate that pipelines combining ICA-FIX with GSR can offer a reasonable trade-off between mitigating motion artifacts and preserving behavioral prediction performance. However, the efficacy of GSR and other denoising methods can vary across datasets, and no single pipeline universally excels [2] [31].

Q4: What is a major pitfall in nuisance regression and how can it be avoided? A major pitfall is ignoring the temporal autocorrelation in the fMRI noise, which can invalidate statistical inference. Pre-whitening should be applied during nuisance regression to account for this autocorrelation and achieve valid statistical results [30].

Q5: Can I denoise data after aggregating it into brain regions to save time? Yes, for certain analyses. Recent evidence suggests that region-level denoising can be computationally efficient and, when using Mean aggregation, yields functional connectivity results with individual specificity and predictive capacity equal to or better than traditional voxel-level denoising [32].

Troubleshooting Common Problems

Motion Correction Failures

- Problem: Poor realignment of functional volumes, often due to excessive or abrupt head motion.

- Solutions:

- Prevention: Properly immobilize the head using padding and straps during scanning. Coach and train the subject to remain still [1].

- Inspection: Always visually inspect the motion parameter plots (translation and rotation) generated by your preprocessing software to identify sudden, large displacements [1].

- Post-hoc Correction: For volumes with abrupt motion, consider using "scrubbing" (volume censoring) to remove these time points from analysis [2] [33].

- Note: Rigid-body motion correction cannot fully compensate for non-linear effects like changes in magnetic field homogeneity caused by head movement [1].
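The inspection and scrubbing steps above are often driven by framewise displacement (FD). The sketch below uses a commonly used definition: the sum of absolute volume-to-volume changes in the six realignment parameters, with rotations converted to millimetres on a sphere of assumed radius 50 mm. The 0.5 mm threshold, the parameter ordering, and the units are assumptions to adapt to your software.

```python
def framewise_displacement(motion, head_radius_mm=50.0):
    """FD per volume from six realignment parameters.

    motion: list of [tx, ty, tz, rx, ry, rz] per volume, translations in mm and
    rotations in radians (an assumption; check your software's units).
    """
    fd = [0.0]  # the first volume has no predecessor
    for prev, curr in zip(motion, motion[1:]):
        trans = sum(abs(c - p) for c, p in zip(curr[:3], prev[:3]))
        # Convert rotations to arc length on a sphere of the given radius.
        rot = sum(abs(c - p) * head_radius_mm for c, p in zip(curr[3:], prev[3:]))
        fd.append(trans + rot)
    return fd

def censor_mask(fd, threshold_mm=0.5):
    """True for volumes to keep, False for volumes flagged for scrubbing."""
    return [value <= threshold_mm for value in fd]

# Hypothetical realignment output for four volumes.
motion = [
    [0.00, 0.00, 0.00, 0.000, 0.000, 0.000],
    [0.05, 0.00, 0.02, 0.001, 0.000, 0.000],
    [0.60, 0.10, 0.02, 0.001, 0.004, 0.000],
    [0.62, 0.10, 0.03, 0.001, 0.004, 0.000],
]
fd = framewise_displacement(motion)
keep = censor_mask(fd)
print([round(v, 3) for v in fd], keep)
```

In this toy example only the third volume, where the head jumps by more than half a millimetre, is flagged for censoring.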

Incomplete Detrending and Residual Drift

- Problem: Slow signal drifts remain in the data, leading to a colored noise structure that complicates statistical inference and reduces detection power [34].

- Solutions:

- Method Selection: Employ robust detrending methods such as high-pass filtering with a discrete cosine transform (DCT) basis set or polynomial regressors [1] [34].

- Model Flexibility: Consider exploratory drift models that can adaptively capture slow, varying trends in the data more effectively than standard models [34].

- Pipeline Integration: Remember that temporal filtering can be incorporated directly into the General Linear Model (GLM) by including the filter terms as confound regressors, so that the degrees of freedom they consume are accounted for [30].
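DCT-based detrending, as described above, can be sketched as follows. The code builds low-frequency DCT-II regressors for a given cutoff period and removes their contribution from a time series. The rule for the number of regressors follows one widely used convention and is an assumption here, as are the 128 s cutoff and the simulated signal.

```python
import math

def dct_drift_basis(n_vols, tr, cutoff_s=128.0):
    """Low-frequency DCT-II regressors for the given cutoff (constant term excluded)."""
    n_basis = int(2.0 * n_vols * tr / cutoff_s) + 1  # this count includes the constant term
    return [
        [math.cos(math.pi * (2 * t + 1) * k / (2 * n_vols)) for t in range(n_vols)]
        for k in range(1, n_basis)
    ]

def remove_drift(signal, basis):
    """Subtract the mean and the projection onto each (mutually orthogonal) DCT regressor."""
    mean = sum(signal) / len(signal)
    cleaned = [s - mean for s in signal]
    for reg in basis:
        beta = sum(c * r for c, r in zip(cleaned, reg)) / sum(r * r for r in reg)
        cleaned = [c - beta * r for c, r in zip(cleaned, reg)]
    return cleaned

# Hypothetical run: 200 volumes, TR = 2 s, slow linear drift plus a 40 s oscillation.
n_vols, tr = 200, 2.0
task = [math.sin(2 * math.pi * t * tr / 40.0) for t in range(n_vols)]
drift = [0.01 * t for t in range(n_vols)]
raw = [a + b for a, b in zip(task, drift)]
cleaned = remove_drift(raw, dct_drift_basis(n_vols, tr))
```

The same regressors can instead be appended to the GLM design matrix, which keeps the filtering and the model fit in a single step.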

Inefficient or Ineffective Nuisance Regression

- Problem: Nuisance regression fails to adequately remove noise, or the choice of pipeline leads to spurious results or loss of biological signal.

- Solutions:

- Pre-whitening: As highlighted in Q4 of the FAQs, always use pre-whitening to account for the colored noise structure in fMRI data during nuisance regression [30].

- Temporal Shifting: For some physiological regressors (e.g., cardiac, respiratory), applying a temporal shift may be warranted. However, the optimal shift should be carefully validated, as it may not be reliably estimated from resting-state data alone [30].

- Pipeline Validation: Systematically evaluate your chosen denoising pipeline against criteria like motion reduction, test-retest reliability, and sensitivity to individual differences or behavioral predictions [2] [31]. Don't assume a pipeline works; validate it for your data.

Experimental Protocols & Methodologies

Protocol: Evaluating Denoising Pipeline Efficacy

This protocol is adapted from large-scale benchmarking studies [2] [31].

- Data Selection: Use a dataset with repeated scans (test-retest) from the same individuals to assess reliability. Include data with associated behavioral measures to assess predictive validity.

- Pipeline Construction: Define multiple preprocessing pipelines that vary in their use of key steps (e.g., with/without GSR, different nuisance regressors, scrubbing thresholds).

- Quality Metrics Calculation: For each pipeline, compute the following metrics on the processed data:

- Motion Contamination: Use quality control metrics like framewise displacement (FD) to quantify residual motion artifacts.

- Test-Retest Reliability: Measure the intra-class correlation (ICC) of functional connectivity networks or other derived measures across repeated scans.

- Behavioral Prediction: Use a model (e.g., kernel ridge regression) to predict behavioral variables from functional connectivity and assess cross-validated prediction performance.

- Pipeline Comparison: Identify pipelines that successfully minimize motion artifacts while maximizing reliability and behavioral prediction accuracy.

Protocol: Implementing Pre-whitening in Nuisance Regression

This protocol addresses a key recommendation from the literature [30].

- Model Specification: Fit a noise model (e.g., an AR(1) model) to the residuals of your fMRI data to estimate the temporal autocorrelation structure.

- Whitening Matrix: Construct a whitening matrix based on the estimated autocorrelation parameters.

- Data Transformation: Apply the whitening matrix to both the fMRI data and the design matrix (containing your task regressors and nuisance regressors).

- Model Refitting: Perform the nuisance regression (and task regression) on the pre-whitened data and design matrix. This ensures valid statistical inference.
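The four steps above can be sketched for the simplest case: an intercept plus one regressor, with AR(1) noise. This is a minimal illustration using a Cochrane-Orcutt style transform on simulated data. The function names, the block design, and the noise parameters are all hypothetical, and dedicated fMRI packages use more elaborate autocorrelation models.

```python
import random

def ols(x, y):
    """Least-squares fit of y = b0 * x[0] + b1 * x[1] via the 2x2 normal equations."""
    a, b = x
    saa = sum(u * u for u in a)
    sab = sum(u * v for u, v in zip(a, b))
    sbb = sum(v * v for v in b)
    say = sum(u * w for u, w in zip(a, y))
    sby = sum(v * w for v, w in zip(b, y))
    det = saa * sbb - sab * sab
    b0 = (sbb * say - sab * sby) / det
    b1 = (saa * sby - sab * say) / det
    resid = [w - b0 * u - b1 * v for u, v, w in zip(a, b, y)]
    return (b0, b1), resid

def lag1_autocorr(r):
    """Lag-1 autocorrelation of a zero-mean residual series."""
    return sum(p * q for p, q in zip(r, r[1:])) / sum(p * p for p in r)

def whiten(series, rho):
    """AR(1) whitening filter: s[t] - rho * s[t-1], dropping the first sample."""
    return [curr - rho * prev for prev, curr in zip(series, series[1:])]

# Simulated data (hypothetical): block design with AR(1) noise, true rho = 0.5.
random.seed(0)
n = 2000
intercept = [1.0] * n
task = [1.0 if (t // 20) % 2 else 0.0 for t in range(n)]
noise = [0.0]
for _ in range(n - 1):
    noise.append(0.5 * noise[-1] + random.gauss(0.0, 1.0))
y = [2.0 + 1.5 * x + e for x, e in zip(task, noise)]

# Step 1: fit by OLS and estimate the autocorrelation of the residuals.
_, resid = ols((intercept, task), y)
rho_hat = lag1_autocorr(resid)

# Steps 2-3: apply the same whitening filter to the data and to every design column.
y_w = whiten(y, rho_hat)
design_w = (whiten(intercept, rho_hat), whiten(task, rho_hat))

# Step 4: refit on the whitened data and design.
(b0_w, beta_task), resid_w = ols(design_w, y_w)
print(f"rho_hat = {rho_hat:.2f}, task beta = {beta_task:.2f}, "
      f"residual lag-1 after whitening = {lag1_autocorr(resid_w):.2f}")
```

The key point is that nuisance and task regressors pass through the same filter as the data. Whitening only the data would bias the fit.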

Table 1: Comparison of Denoising Pipeline Performance on Behavioral Prediction

| Pipeline Feature | Effect on Motion Reduction | Effect on Behavioral Prediction | Key Findings |

|---|---|---|---|

| Global Signal Regression (GSR) | Can help reduce motion artifacts [2] | Variable; can be part of a reasonable trade-off pipeline [2] | No pipeline, including those with GSR, universally excels across different cohorts [2]. |

| ICA-based cleanup (e.g., ICA-FIX) | Effective for artifact removal [2] | Shows reasonable performance when combined with GSR [2] | Modest inter-pipeline variations in predictive performance [2]. |

| Region-level vs. Voxel-level Denoising | --- | Generally equal or better prediction performance for region-level [32] | Using Mean aggregation with region-level denoising offers equal performance with reduced computational resources [32]. |

Table 2: Impact of Preprocessing Choices on Network Topology Reliability

| Processing Choice | Impact on Test-Retest Reliability | Impact on Individual Specificity | Recommendation |

|---|---|---|---|

| Global Signal Regression (GSR) | Systematic variability in reliability [31] | Affects sensitivity to individual differences [31] | Optimal pipelines exist with and without GSR; choice should be intentional and validated [31]. |

| Parcellation Granularity | --- | Generally improves with more regions [32] | Increasing the number of brain regions (100, 400, 1000) generally improves individual fingerprinting [32]. |

| Aggregation Method for Regions | --- | Mean and 1st Eigenvariate (EV) perform differently [32] | Use Mean aggregation for stable results; EV can reduce individual specificity with voxel-level denoising [32]. |

Workflow and Signaling Pathways

Figure 1: Conventional fMRI Preprocessing Pipeline. This workflow shows the standard sequence of steps. Temporal Detrending and Nuisance Regression are highlighted as the core denoising steps central to optimizing a pipeline for task-based fMRI research.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software Tools for fMRI Preprocessing

| Tool Name | Function / Purpose | Key Features / Notes |

|---|---|---|

| fMRIPrep | Automated, robust preprocessing of fMRI data. | Integrates many steps from Fig. 1; promotes reproducibility and standardization [2] [33]. |

| RABIES | Standardized preprocessing and QC for rodent fMRI data. | Addresses the need for reproducible practices in the translational rodent imaging community [33]. |

| Independent Component Analysis (ICA) | Data-driven method to identify and remove artifact components (e.g., via FIX). | Effective for identifying motion, cardiac, and other noise sources not fully captured by model-based regression [2]. |

| Portrait Divergence (PDiv) | Information-theoretic measure to compare whole-network topology. | Used to evaluate pipeline reliability by measuring dissimilarity between networks from repeated scans [31]. |

In task-based fMRI research, the Blood Oxygenation Level Dependent (BOLD) signal is contaminated by various noise sources, including head motion, physiological processes (e.g., respiration, cardiac pulsation), and scanner artifacts. These confounds significantly reduce the contrast-to-noise ratio (CNR) and can lead to both false positives and false negatives in activation maps. Independent Component Analysis-based cleanup (ICA-FIX) and Global Signal Regression (GSR) represent two powerful but philosophically distinct approaches to denoising fMRI data. ICA-FIX is a data-driven method that selectively removes structured noise components identified by a trained classifier, while GSR is a more global approach that regresses out the average signal across the entire brain. Understanding the strengths, limitations, and optimal application of each method is crucial for building robust denoising pipelines, especially in clinical and pharmacological research where signal integrity is paramount [35] [36] [37].

Frequently Asked Questions (FAQs)

What is the fundamental difference between ICA-FIX and GSR?

ICA-FIX and GSR operate on different principles and remove different types of variance from your data:

- ICA-FIX is a selective, data-driven approach. It uses Independent Component Analysis (ICA) to decompose the 4D fMRI data into spatially independent components. A trained classifier then automatically identifies and labels these components as either "signal" or "noise." Finally, only the variance associated with the noise components is regressed out of the original data. This method aims to remove specific structured artifacts (e.g., motion, physiology) while preserving global neural signals [9] [38].

- GSR is a non-selective, model-based approach. It calculates the global signal as the average timecourse across all voxels in the brain (or a mask like the grey matter) and regresses this signal out of every voxel's timecourse. This effectively removes all variance that is shared globally across the brain, including both global artifacts and any globally synchronized neural activity [35] [36].
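The GSR operation described above is small enough to sketch directly. The toy example below uses hypothetical three-voxel data: it computes the global signal as the mean across voxels and regresses it, with an intercept, out of each voxel.

```python
def regress_out(y, g):
    """Residuals of y after regressing on g plus an intercept."""
    n = len(y)
    my, mg = sum(y) / n, sum(g) / n
    beta = sum((a - my) * (b - mg) for a, b in zip(y, g)) / sum((b - mg) ** 2 for b in g)
    return [(a - my) - beta * (b - mg) for a, b in zip(y, g)]

def global_signal_regression(voxels):
    """voxels: list of time courses, one list per voxel. Returns (cleaned, global_signal)."""
    n_vox = len(voxels)
    global_signal = [sum(col) / n_vox for col in zip(*voxels)]
    return [regress_out(v, global_signal) for v in voxels], global_signal

# Hypothetical toy data: three voxels sharing a common fluctuation.
voxels = [
    [1.0, 2.0, 3.0, 2.0, 1.0, 2.5],
    [1.2, 2.1, 2.7, 2.2, 0.9, 2.0],
    [0.8, 1.7, 3.1, 1.6, 1.3, 2.4],
]
cleaned, gs = global_signal_regression(voxels)
# At every time point the cleaned voxels sum to (numerically) zero.
print([round(sum(col), 10) for col in zip(*cleaned)])
```

Because the cleaned time courses sum to zero at each time point, the covariances between voxels must on average be negative. This is the mathematical origin of the negative correlations that GSR introduces.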

Table: Core Conceptual Differences Between ICA-FIX and GSR

| Feature | ICA-FIX | Global Signal Regression (GSR) |

|---|---|---|

| Primary Goal | Selective removal of specific, structured noise | Removal of all globally shared variance |

| Action | Regresses out noise components identified by a classifier | Regresses out the mean whole-brain signal |

| Effect on Neural Signal | Aims to preserve global and semi-global neural signals | Also removes globally distributed neural information |

| Effect on Correlations | Maintains the native distribution of correlations | Introduces a shift in the correlation distribution, creating negative values |

Should I use GSR on my task-based fMRI data if I have already cleaned it with ICA-FIX?

This is a subject of ongoing debate, but recent large-scale systematic evidence suggests that GSR can provide additional benefits even after ICA-FIX cleanup, particularly for studies focused on behavior and individual differences.

A study of Human Connectome Project (HCP) data, which are preprocessed with ICA-FIX, found that applying GSR afterward increased the behavioral variance explained by whole-brain functional connectivity by an average of 40% across 58 behavioral measures. Furthermore, behavioral prediction accuracies improved by 12% after GSR. This indicates that GSR can remove residual global noise that ICA-FIX misses, thereby strengthening brain-behavior associations [35].

However, this decision should be guided by your research question. GSR remains controversial because it also removes global neural signals potentially related to arousal and vigilance [36]. You should justify your choice based on whether your hypothesis is better tested by examining:

- Total fMRI fluctuation (without GSR), or

- fMRI fluctuations relative to the global signal (with GSR) [35].

I am processing data that deviates from the HCP protocol. Can I still use FIX?

Yes, but you will likely need to train a study-specific classifier. The FIX classifier works "out-of-the-box" for data that closely matches the HCP in terms of study population, imaging sequence, and processing steps. However, for most applications that deviate from these protocols, FIX must be trained on a set of hand-labelled components from your own data. This involves:

- Manually classifying the ICA components from a subset of your subjects (e.g., 10-20 subjects) as "signal" or "noise."

- Using these hand-labelled examples to train a new FIX model.

- Applying this custom-trained model to the rest of your dataset for automated cleaning [9].

GSR is known to introduce negative correlations. Are these correlations meaningful?

The interpretation of negative correlations after GSR is a major point of contention. The core issue is that these negative values are, in part, a mathematical consequence of the regression process. When the global mean is removed, the correlation structure is necessarily recentered, forcing some connections to become negative [35] [36].

While some studies treat these anti-correlations as biologically meaningful (e.g., reflecting inhibitory interactions or competing neural networks), others caution against this interpretation. The current prevailing view is that the sign of the correlations after GSR is difficult to interpret directly. The utility of GSR should therefore be evaluated based on its effectiveness for a specific goal, such as improving the association between functional connectivity and behavior, rather than on the interpretation of negative correlations per se [35] [36].

Are there alternatives that can remove global noise without the biases of GSR?

Yes, emerging methods like temporal ICA (tICA) show promise. While spatial ICA (sICA), used in FIX, is mathematically unable to separate global noise from global neural signal, temporal ICA (tICA) is designed to do exactly that. tICA decomposes the data into temporally independent components, which can then be classified as global noise or global signal.

Studies have shown that tICA can selectively remove global structured noise (e.g., from physiology) without inducing the network-specific negative biases characteristic of GSR. This positions tICA as a potential "best of both worlds" solution, offering the selectivity of an ICA-based approach for tackling the global noise problem [39].

Troubleshooting Guides

Problem: Poor Performance of ICA-FIX After Training

Possible Causes and Solutions:

- Cause 1: Inadequate or Poor-Quality Training Data.

- Solution: Ensure your hand-labelled training set is of high quality and sufficient size. The training subjects should be representative of your entire dataset in terms of data quality and noise characteristics. Manually label components for more subjects to improve classifier training [9].

- Cause 2: Incorrect Registration.

- Solution: FIX uses registration to standard space for feature extraction. While the registrations do not need to be perfect, they must be approximately accurate. Visually inspect your registrations (e.g., func to highres, and highres to standard space) to ensure there are no major failures. Using BBR (Boundary-Based Registration) for functional-to-structural registration is recommended for improved accuracy [9].

- Cause 3: Mismatch Between Preprocessing Steps.

Problem: GSR Obscures a Group Difference or Experimental Effect

Possible Causes and Solutions:

- Cause: The global signal itself differs between groups or conditions. For example, if one group (e.g., a clinical population) has a systematically higher or more variable global signal than the control group, GSR can remove this genuine difference and thus obscure true effects or even create spurious ones [35] [36].

- Solution 1: Compare results with and without GSR. It is considered a best practice to run your analyses both ways and report any discrepancies. If an effect is only present with GSR or only present without GSR, this requires careful interpretation and should not be overstated [31].

- Solution 2: Consider alternative methods. If GSR is problematic for your specific experimental contrast, investigate other denoising strategies. As noted in the FAQs, temporal ICA (tICA) is a promising alternative for global noise removal [39]. Additionally, ensure you are using a comprehensive set of nuisance regressors (e.g., motion parameters, white matter and CSF signals) before considering GSR.

Problem: General Weak or Unreliable Task Activation

Possible Cause: Sub-optimal Preprocessing Pipeline. The choice of preprocessing steps significantly impacts the quality of activation maps, and a "one-size-fits-all" pipeline may be sub-optimal [41] [37].

- Solution: Use a data-driven framework to optimize your pipeline. The NPAIRS (Nonparametric Prediction, Activation, Influence, and Reproducibility reSampling) framework can be used to select preprocessing steps that maximize prediction accuracy (P) and spatial reproducibility (R) for your specific data [41] [37].

- Motion Correction: Even with small motion (<1 voxel), the interaction between motion parameter regression (MPR) and other steps can be significant. Test different MPR strategies (e.g., 6, 12, or 24 parameters) [41].

- Physiological Noise Correction (PNC): The effectiveness of PNC (e.g., RETROICOR, CompCor) can depend on the cohort and task. Optimization can determine if and which PNC method is most beneficial [37].

- Temporal Filtering: The choice of high-pass filter cutoff can interact with other parameters. Optimization helps find the filter that best preserves the task-related signal while removing drift [41].
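The 6-, 12-, and 24-parameter motion regression strategies mentioned above can be generated from the same realignment output. The sketch below uses one common variant: 12 parameters add backward temporal differences, and 24 add the squares of those 12. Other definitions of the 24-parameter set exist, so treat this choice as an assumption.

```python
def expand_motion_parameters(motion, n_params=24):
    """Expand six realignment parameters into a 6-, 12-, or 24-column regressor set.

    12 = the 6 parameters plus their backward temporal differences;
    24 = those 12 plus the square of each (one common variant; others exist).
    """
    if n_params not in (6, 12, 24):
        raise ValueError("n_params must be 6, 12, or 24")
    rows = []
    for t, curr in enumerate(motion):
        row = list(curr)
        if n_params >= 12:
            prev = motion[t - 1] if t > 0 else curr  # zero difference at the first volume
            row += [c - p for c, p in zip(curr, prev)]
        if n_params == 24:
            row += [value ** 2 for value in row]
        rows.append(row)
    return rows

# Hypothetical realignment parameters for three volumes.
motion = [
    [0.00, 0.00, 0.00, 0.000, 0.000, 0.000],
    [0.10, 0.00, 0.05, 0.001, 0.000, 0.002],
    [0.12, 0.03, 0.05, 0.001, 0.001, 0.002],
]
for n_params in (6, 12, 24):
    regressors = expand_motion_parameters(motion, n_params)
    print(n_params, len(regressors), len(regressors[0]))
```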

Table: Systematic Evaluation of Denoising Pipelines for Network-Based Findings [31]

| Evaluation Criterion | Why It Matters | How ICA-FIX and GSR Are Assessed |

|---|---|---|

| Test-Retest Reliability | Ensures network topology is stable across repeated scans of the same individual. | Pipelines are evaluated using the "Portrait Divergence" (PDiv) measure to minimize spurious differences. |

| Sensitivity to Individual Differences | The pipeline must be able to detect meaningful variation between people. | Assessed by the ability to distinguish individuals based on their functional connectome. |

| Sensitivity to Experimental Effects | The pipeline must detect changes due to an intervention (e.g., pharmacology). | Tested using a propofol anaesthesia dataset to see if pipelines capture known drug-induced changes. |

| Generalizability | Findings should hold across different datasets and acquisition parameters. | Validated on an independent HCP dataset, which uses ICA-FIX and has different resolution. |

Experimental Protocols and Workflows

Protocol 1: Implementing Single-Subject ICA and FIX

This protocol outlines the steps for setting up and running a single-subject ICA as a prerequisite for FIX cleaning [9] [40].

Detailed Methodology:

- Data Preparation: Gather brain-extracted structural T1 images and the 4D functional resting-state or task-based fMRI data.

- Create a Template Design File: Use the FSL FEAT GUI to create a template analysis file (ssica_template.fsf).

- In the Data tab, select your 4D functional data and set the correct TR.

- In the Pre-stats tab, turn off spatial smoothing (set to 0 mm) and set high-pass filtering to a conservative cutoff (e.g., 100s for resting-state). Disable any preprocessing steps you have already applied.

- In the Registration tab, configure the functional-to-structural and structural-to-standard space registration. Using BBR is recommended.

- In the Stats tab, select "MELODIC ICA data exploration."

- Save this file as your template.

- Generate Scan-Specific Design Files: Use a script (Bash or Python) to loop over all subjects and sessions, replacing placeholders in the template file with specific file paths and identifiers.

- Run Single-Subject ICA: Execute the FEAT analysis for each generated design file. This can be done in parallel for speed.

- Train and Apply FIX:

- Manually classify components for a representative subset of your runs to create a training set.

- Use the fix -t command to train a new model on your hand-labelled data.

- Apply the trained classifier to the entire dataset: fix -c classifies the components of each run, and fix -a then removes the noise components to generate filtered_func_data_clean.nii.gz.
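The design-file generation step in this protocol can be scripted in a few lines of Python. The sketch below is an assumption-laden illustration: the placeholder tokens (FUNC_PATH, OUT_DIR), the output file naming, and the example paths are hypothetical and must match whatever you wrote into your own ssica_template.fsf.

```python
from pathlib import Path

def render_design(template_text, replacements):
    """Return the template with every placeholder token replaced by its value."""
    rendered = template_text
    for token, value in replacements.items():
        rendered = rendered.replace(token, str(value))
    return rendered

def write_design_files(template_path, scans, out_dir):
    """Write one .fsf file per scan; `scans` maps a scan label to its replacement dict."""
    template_text = Path(template_path).read_text()
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for label, replacements in scans.items():
        target = out_dir / f"ssica_{label}.fsf"
        target.write_text(render_design(template_text, replacements))
        written.append(target)
    return written

# Hypothetical placeholder tokens and paths; adapt them to your own template and layout.
template_snippet = 'set feat_files(1) "FUNC_PATH"\nset fmri(outputdir) "OUT_DIR"\n'
rendered = render_design(
    template_snippet,
    {"FUNC_PATH": "/data/sub-01/func/bold", "OUT_DIR": "/data/sub-01/ssica"},
)
print(rendered)
```

Each generated file can then be passed to FEAT, one run per design file.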

The following workflow diagram illustrates the key stages of this protocol:

Protocol 2: Systematic Pipeline Optimization with NPAIRS

For task-based fMRI, especially in cohorts like older adults or clinical populations where noise confounds are elevated, adaptively optimizing the preprocessing pipeline can significantly improve reliability and sensitivity [41] [37].

Detailed Methodology:

- Define a Set of Preprocessing Options: Identify key steps to test, such as:

- Motion Parameter Regression (MPR): 6, 12, or 24 parameters.

- Physiological Noise Correction (PNC): None, RETROICOR, aCompCor.

- Temporal Detrending: Different high-pass filter cutoffs.

- Generate Multiple Pipelines: Systematically combine all options, creating a large set of possible preprocessing pipelines.

- Apply NPAIRS Framework: For each pipeline and task run, use split-half resampling to compute:

- Prediction Accuracy (P): How well a model trained on one data split predicts the experimental condition in the other split.

- Spatial Reproducibility (R): The similarity of activation maps between the two splits.

- Select Optimal Pipeline: Choose the pipeline that provides the best joint optimization of (P, R) for a given subject and task run. This can be done to find a single optimal pipeline for a group or individually for each subject.
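The bookkeeping around this protocol can be sketched as follows: enumerating the pipeline grid, measuring split-half reproducibility as a correlation between activation maps, and selecting by distance from the ideal point (P, R) = (1, 1), which is one common selection criterion. The high-pass cutoffs, the result values, and the function names are hypothetical. The model fitting that produces P and the activation maps is not shown.

```python
from itertools import product
from math import sqrt

MPR_OPTIONS = (6, 12, 24)
PNC_OPTIONS = ("none", "RETROICOR", "aCompCor")
HIGHPASS_CUTOFFS_S = (64, 128, 256)  # hypothetical cutoffs, in seconds

def build_pipelines():
    """Every combination of the preprocessing options, as a list of dicts."""
    return [
        {"mpr": mpr, "pnc": pnc, "highpass_s": hp}
        for mpr, pnc, hp in product(MPR_OPTIONS, PNC_OPTIONS, HIGHPASS_CUTOFFS_S)
    ]

def pearson(a, b):
    """Pearson correlation between two equally long activation maps (flattened to lists)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))

def select_pipeline(results):
    """Pick the pipeline closest to the ideal point (P, R) = (1, 1).

    results: list of (pipeline, prediction_accuracy, reproducibility) tuples.
    """
    return min(results, key=lambda r: sqrt((1 - r[1]) ** 2 + (1 - r[2]) ** 2))[0]

pipelines = build_pipelines()
print(len(pipelines), "candidate pipelines")

# Hypothetical (P, R) values for two of the candidates.
best = select_pipeline([(pipelines[0], 0.62, 0.35), (pipelines[1], 0.70, 0.48)])
print(best)
```

The grid grows multiplicatively with each added option, so the set of steps to vary is usually kept small.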

The Scientist's Toolkit: Research Reagent Solutions

Table: Essential Software and Methodological "Reagents" for fMRI Denoising

| Item Name | Function/Brief Explanation | Example Use Case |
|---|---|---|
| FSL (FMRIB Software Library) | A comprehensive library of MRI analysis tools, including MELODIC for ICA and FIX for automated component classification. | The primary software suite for implementing the ICA-FIX pipeline as described in [9] and [40]. |
| MELODIC | The tool within FSL that performs Independent Component Analysis (ICA) decomposition of 4D fMRI data. | Used for the initial single-subject ICA to decompose data into spatial maps and timecourses for manual inspection or FIX training [40]. |
| FIX (FMRIB's ICA-based Xnoiseifier) | A machine learning classifier that automatically labels ICA components as "signal" or "noise" based on a set of spatial and temporal features. | Automated, high-throughput cleaning of fMRI datasets after being trained on a hand-labelled subset [9] [38]. |
| NPAIRS Framework | A data-driven, cross-validation framework that optimizes preprocessing pipelines based on prediction accuracy and spatial reproducibility, avoiding the need for a "ground truth." | Identifying the most effective combination of preprocessing steps (e.g., MPR, PNC) for a specific task or cohort [41] [37]. |
| Global Signal Regressor | A nuisance regressor calculated as the mean timecourse across all brain voxels. Used in a General Linear Model (GLM) to remove globally shared variance. | Applied as a final denoising step to remove residual global noise, potentially strengthening brain-behavior associations [35] [36]. |
| Temporal ICA (tICA) | An emerging alternative to spatial ICA that separates data into temporally independent components, capable of segregating global neural signal from global noise. | A potential selective alternative to GSR for removing global physiological noise without inducing widespread negative correlations [39]. |
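To make the global signal regressor entry concrete, here is a minimal NumPy sketch. It assumes the data have already been masked and reshaped to a time-by-voxel array, and it fits the regressor on its own with an intercept; in practice the global signal would usually be one column among other nuisance regressors in the GLM.

```python
import numpy as np


def regress_global_signal(data):
    """Remove the global mean timecourse from every voxel by least squares.

    data: array of shape (T, V), time points by in-brain voxels (assumed layout).
    Returns the residuals, which also have each voxel's temporal mean removed
    because the design includes an intercept column.
    """
    data = np.asarray(data, dtype=float)
    global_signal = data.mean(axis=1)  # mean across voxels at each time point
    design = np.column_stack([np.ones(data.shape[0]), global_signal])
    beta, *_ = np.linalg.lstsq(design, data, rcond=None)
    return data - design @ beta
```

By construction the cleaned data have a global mean timecourse of zero, which is the mathematical reason GSR shifts the distribution of correlations toward negative values.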

Frequently Asked Questions (FAQs)

Q1: What are the main advantages of using deep learning for task-based fMRI denoising compared to traditional methods?

Deep learning (DL) models offer several advantages for denoising task-based fMRI data. Traditional methods such as CompCor or ICA-AROMA often require explicit noise modeling or manual component classification, whereas DL approaches such as the deep neural network (DNN) described in [42] [43] are data-driven and can be optimized for each subject without expert intervention. They do not assume a specific parametric noise model and can adapt to varying hemodynamic response functions (HRFs) across different brain regions [43]. Furthermore, methods like DeepCor use deep generative models to disentangle and remove noise, which substantially enhances the BOLD signal; for instance, DeepCor was shown to outperform CompCor by 215% in enhancing responses to face stimuli [11].

Q2: My reconstructed images from fMRI data lack semantic accuracy. How can I improve this?

The lack of semantic accuracy is a common challenge, often indicating an over-reliance on low-level visual features. To improve semantic fidelity, integrate multimodal semantic information into your reconstruction pipeline. One effective approach is to combine visual reconstruction with semantic reconstruction modules [44]. You can use automatic image captioning models like BLIP to generate text descriptions for your training images, extract semantic features from these captions, and then train a decoder to map brain activity to these semantic features [44]. Subsequently, use a generative model like a Latent Diffusion Model (LDM), conditioned on both the initial visual reconstruction and the decoded semantic features, to produce the final, semantically accurate image [44]. This methodology has been shown to significantly improve quantitative metrics like CLIP score, which evaluates semantic content [44].

Q3: What is the role of diffusion models in fMRI-based image reconstruction, and are they superior to GANs?

Diffusion Models (DMs) and Latent Diffusion Models (LDMs) are state-of-the-art generative models that have recently been applied to fMRI reconstruction with strong results [44] [45]. They consist of a forward process that gradually adds noise to the data and a learned reverse process that removes it step by step, so that high-quality, coherent images can be generated starting from noise [44]. Compared to Generative Adversarial Networks (GANs), DMs offer greater architectural flexibility and generate high-quality samples while overcoming significant optimization challenges posed by GANs [44]. Models like the "Brain-Diffuser" leverage the image-generation capabilities of frameworks like Versatile Diffusion, often conditioned on multimodal features, to reconstruct complex natural scenes with better qualitative and quantitative performance than previous GAN-based approaches [45].
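The forward (noising) process mentioned above can be illustrated in a few lines. This sketch assumes a DDPM-style formulation with a linear variance schedule; the schedule endpoints and step count are common illustrative defaults, not values taken from [44] or [45].

```python
import numpy as np


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances; the endpoints are illustrative assumptions."""
    return np.linspace(beta_start, beta_end, num_steps)


def forward_diffuse(x0, t, betas, rng):
    """Sample x_t directly from x_0 using the closed form
    x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise.
    """
    alpha_bar = np.cumprod(1.0 - betas)  # cumulative fraction of signal retained
    noise = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return xt, noise
```

The reverse process is a neural network trained to predict `noise` from `xt` and `t`; in a latent diffusion model, `x0` is an autoencoder latent rather than a pixel image.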

Q4: How do I choose between a real-valued and a complex-valued CNN for MRI denoising?

The choice depends on whether you are working with magnitude images only or have access to the raw complex-valued MRI data. If you are using only magnitude images, a real-valued CNN may be sufficient. However, the raw MRI data is complex-valued, containing information in both the real and imaginary parts (or magnitude and phase). Complex-valued CNNs are specifically designed to process this complex data directly [46]. They offer several advantages, including easier optimization, faster learning, richer representational capacity, and, crucially, better preservation of phase information [46]. For tasks where phase is important or when dealing with spatially varying noise from parallel imaging, a complex-valued CNN like the non‑blind ℂDnCNN is likely to yield superior denoising performance [46].
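To show what "processing complex data directly" means at the level of a single filter, the sketch below writes one complex-valued 1D convolution in terms of four real-valued convolutions. It is a toy illustration of the arithmetic only, not the ℂDnCNN architecture from [46].

```python
import numpy as np


def complex_conv1d(signal, kernel):
    """Complex convolution built from real-valued convolutions.

    Uses (a + ib) * (c + id) = (a*c - b*d) + i(a*d + b*c), where * is convolution.
    The real and imaginary channels share the same kernel pair, which couples
    them and lets the filter act on phase as well as magnitude.
    """
    a, b = np.real(signal), np.imag(signal)
    c, d = np.real(kernel), np.imag(kernel)
    real = np.convolve(a, c, mode="same") - np.convolve(b, d, mode="same")
    imag = np.convolve(a, d, mode="same") + np.convolve(b, c, mode="same")
    return real + 1j * imag
```

A real-valued CNN applied to magnitude images sees only `np.abs(signal)`, so any information carried by the phase is discarded before the network runs.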

Troubleshooting Common Experimental Issues

Problem: Poor Quality Reconstructions with Low Structural Similarity

- Symptoms: Reconstructed images are blurry, lack fine details, and score poorly on low-level metrics like the Structural Similarity Index Measure (SSIM).

- Possible Causes & Solutions:

| Cause | Solution |
|---|---|
| Insufficient Low-level Information | The reconstruction model may be overly focused on semantic content at the expense of layout and texture. Incorporate a dedicated low-level reconstruction stage. For example, use a Very Deep Variational Autoencoder (VDVAE) to generate an initial image that captures the overall layout and shape, which can then be refined by a subsequent model [45]. |
| Weak Mapping from fMRI to Visual Features | The decoder mapping brain activity to image features may be underperforming. Ensure your model decodes visual features from fMRI data using a trained decoder and employs a powerful generator (like DGN or VDVAE). Iteratively optimize the generated image to minimize the error between its features (extracted by a network like VGG19) and the features decoded from the brain data [44]. |
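For a quick check of the SSIM symptom described above, the following sketch computes a simplified, single-window ("global") SSIM between a reconstruction and its target. Standard SSIM averages this statistic over local sliding windows, so the values will differ from library implementations; the constants 0.01 and 0.03 are the conventional defaults.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """Simplified SSIM computed over the whole image as one window.

    x, y: grayscale images of equal shape with values in [0, data_range].
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

A reconstruction that captures semantics but not layout typically has low covariance with the target, which pulls this score down even when the image looks plausible.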

Problem: Ineffective Denoising Leading to Low SNR in Activation Maps

- Symptoms: Task-based fMRI analysis reveals weak, noisy, or unreliable activation maps; statistical power is low.

- Possible Causes & Solutions:

| Cause | Solution |
|---|---|
| Failure to Model Temporal Autocorrelation | fMRI data is a time series with strong temporal dependencies. Standard denoising methods may not capture this effectively. Implement a deep learning model that incorporates layers designed for sequential data, such as Long Short-Term Memory (LSTM) layers, which can use information from previous time points to characterize temporal autocorrelation and better separate signal from noise [42] [43]. |
| Inadequate Generalization of Denoising Model | The denoising model may not adapt well to your specific dataset. Use a robust, data-driven DNN that is trained at the subject level. Such models optimize their parameters by leveraging the task design matrix to maximize the correlation difference between signals in gray matter (where task-related responses are expected) and signals in white matter/CSF (primarily noise), ensuring the model learns to extract task-relevant signals effectively [42] [43]. |

Experimental Protocols for Key Methodologies

Protocol 1: Implementing a DNN for Task-fMRI Denoising

This protocol is based on the robust DNN architecture described in [42] [43].

- Input Data Preparation: Use minimally preprocessed fMRI data. The input dimension is N × T × 1, where N is the number of voxels and T is the length of the time series. Each voxel is treated as a sample.

- Network Architecture:

- Temporal Convolutional Layer: Applies 1-dimensional convolutional filters as an adaptive temporal filter.

- LSTM Layer: Processes the output of the convolutional layer to characterize temporal autocorrelation in the fMRI time series.

- Time-Distributed Fully-Connected Layer: Weights the multiple outputs from the LSTM layer. The number of nodes (K) can be adjusted (e.g., 4 nodes to adapt to varying HRFs).

- Selection Layer: A non-conventional layer that selects the single output time series (from the K possibilities) for each voxel that has the maximal correlation with the task design matrix.

- Model Training: Train the model separately for each subject. The parameters are optimized by maximizing the correlation difference between the denoised signals in gray matter voxels and those in white matter or CSF voxels, thereby enhancing task-related signals.
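The selection layer and the training objective are the least conventional parts of this protocol, so a NumPy sketch of both may help. This is an illustration under simplifying assumptions, not the implementation from [42] [43]: the task design is reduced to a single regressor, the K candidate outputs per voxel are taken as given, and the objective is evaluated rather than optimized.

```python
import numpy as np


def corr_with_task(timeseries, task):
    """Pearson correlation of each time series (last axis = time) with the task regressor."""
    ts_c = timeseries - timeseries.mean(axis=-1, keepdims=True)
    task_c = task - task.mean()
    denom = np.linalg.norm(ts_c, axis=-1) * np.linalg.norm(task_c)
    return (ts_c @ task_c) / np.maximum(denom, 1e-12)


def selection_layer(candidates, task):
    """For each voxel, keep the candidate output most correlated with the task.

    candidates: (N, K, T) array, K candidate time series per voxel.
    Returns the selected (N, T) time series and the chosen index per voxel.
    """
    r = corr_with_task(candidates, task)           # shape (N, K)
    best = np.argmax(r, axis=1)                    # shape (N,)
    selected = candidates[np.arange(len(best)), best]
    return selected, best


def correlation_difference(denoised, task, gm_mask):
    """Objective to maximize: mean task correlation in gray matter minus that
    in the remaining (white matter / CSF) voxels."""
    r = corr_with_task(denoised, task)
    return float(r[gm_mask].mean() - r[~gm_mask].mean())
```

In a full model, the negative of `correlation_difference` would serve as the loss, with gradients flowing back through the selected output into the fully-connected, LSTM, and convolutional layers.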

The workflow for this denoising process is illustrated below.

Protocol 2: Two-Stage Semantic Image Reconstruction from fMRI

This protocol outlines the method combining visual and semantic information for high-fidelity reconstruction [44] [45].

Stage 1: Low-Level Visual Reconstruction

- fMRI Feature Decoding: Train a decoder to map fMRI signals to visual features.

- Image Generation: Use a Deep Generator Network (DGN) or a VDVAE to generate an initial image from the decoded features.

- Iterative Optimization: Optimize the generated image iteratively by minimizing the error between visual features extracted from it (using VGG19) and the features decoded from the brain data. The output is a "low-level" reconstructed image.

Stage 2: Semantic-Guided Refinement

- Caption Generation & Semantic Decoding: Use a model like BLIP to generate multiple captions for each training image. Train a separate decoder to map fMRI signals to semantic features extracted from these captions.

- Multimodal Fusion and Generation: Use a Latent Diffusion Model (LDM) for the final reconstruction. The initial image from Stage 1 serves as the visual input for the LDM's image-to-image pipeline, while the semantic features decoded from the fMRI are provided as conditional input. This guides the LDM to produce an image that is both visually coherent and semantically accurate.
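Both stages rely on a decoder that maps fMRI patterns to a feature space (visual features in Stage 1, caption-derived semantic features in Stage 2). The source does not specify the decoder type here, so the sketch below assumes a closed-form ridge regression, a common choice for this kind of mapping; the regularization strength `alpha` is a hypothetical default that would normally be tuned by cross-validation.

```python
import numpy as np


def fit_ridge_decoder(fmri, features, alpha=1.0):
    """Fit a linear map from fMRI patterns to target features.

    fmri: (n_trials, n_voxels); features: (n_trials, n_features).
    Solves W = (X^T X + alpha * I)^{-1} X^T Y on mean-centered data.
    """
    x_mean = fmri.mean(axis=0)
    y_mean = features.mean(axis=0)
    xc = fmri - x_mean
    yc = features - y_mean
    gram = xc.T @ xc + alpha * np.eye(xc.shape[1])
    weights = np.linalg.solve(gram, xc.T @ yc)
    return weights, x_mean, y_mean


def decode(fmri, weights, x_mean, y_mean):
    """Predict features for new fMRI patterns with a fitted decoder."""
    return (fmri - x_mean) @ weights + y_mean
```

The same two functions can be fitted twice, once against visual features and once against semantic features, and the decoded outputs then passed to the generator and the LDM respectively.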

The following diagram visualizes this two-stage pipeline.

Performance Comparison of Denoising and Reconstruction Models

The tables below summarize the quantitative performance of various state-of-the-art models, providing benchmarks for expected outcomes.

Table 1: Quantitative Performance of Image Reconstruction Models

| Model | Dataset | Key Metric | Reported Score | Key Innovation |
|---|---|---|---|---|
| Shen et al. (Improved) [44] | Kamitani Lab (ImageNet) | SSIM | 0.328 | Combines visual reconstruction with semantic information from BLIP captions and LDM. |
| Shen et al. (Improved) [44] | Kamitani Lab (ImageNet) | CLIP Score | 0.815 | |
| Brain-Diffuser [45] | NSD (COCO) | - | State-of-the-art | Two-stage model using VDVAE for low-level info and Versatile Diffusion with multimodal CLIP features. |
| GAN-based Methods (e.g., IC-GAN) [45] | NSD (COCO) | - | Outperformed | Focus on semantics using Instance-Conditioned GAN. |

Table 2: Denoising Model Performance on Task-Based fMRI

| Model | Data Type | Key Improvement | Application / Validation |
|---|---|---|---|
| DNN with LSTM [42] [43] | Working Memory, Episodic Memory fMRI | Improved activation detection, adapts to varying HRFs, reduces physiological noise. | Simulated data, HCP cohort. Generates more homogeneous task-response maps. |
| DeepCor [11] | fMRI with face stimuli | Enhanced BOLD signal response by 215% compared to CompCor. | Applied to single-participant data. Outperforms others on simulated and real data. |
| Non-blind ℂDnCNN [46] | Low-field MRI data | Superior NRMSE, PSNR, SSIM; preserves phase; handles parallel imaging noise. | Validated on simulated and in vivo low-field data. |

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Resources for fMRI Denoising and Reconstruction Pipelines

| Resource | Type | Primary Function | Example Use Case |
|---|---|---|---|
| Natural Scenes Dataset (NSD) [45] | Dataset | Large-scale 7T fMRI benchmark with complex scene images (from COCO). | Training and benchmarking reconstruction models for natural scenes. |
| Kamitani Lab Dataset [44] | Dataset | fMRI data from subjects viewing ImageNet images. | Training and benchmarking reconstruction models for object-centric images. |
| BLIP (Bootstrapping Language-Image Pre-training) [44] | Software Model | Generates descriptive captions for images to provide semantic context. | Extracting textual descriptions for training semantic decoders in reconstruction. |
| Latent Diffusion Model (LDM) [44] [45] | Software Model | Generative model that produces high-quality images from noise in a latent space. | Final stage of image reconstruction, conditioned on visual and semantic features. |
| CLIP (Contrastive Language-Image Pre-training) [45] | Software Model | Provides multimodal (vision & text) feature representations. | Conditioning generative models; evaluating semantic accuracy of reconstructions (CLIP score). |