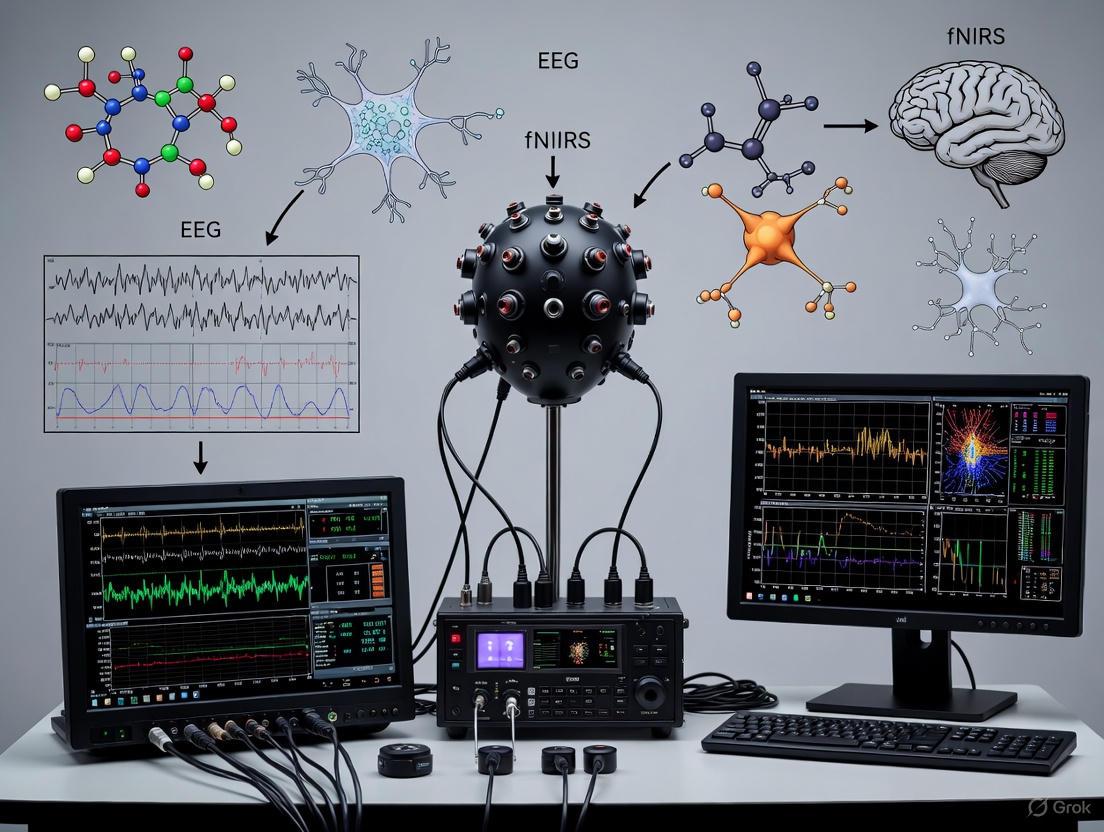

Synergizing EEG and fNIRS: A Comprehensive Guide to Hybrid Brain-Computer Interface Systems for Biomedical Research

Abstract

This article provides a detailed exploration of simultaneous EEG-fNIRS setups for brain-computer interface (BCI) applications, tailored for researchers, scientists, and drug development professionals. It covers the foundational principles of both modalities, highlighting their complementary nature—EEG's millisecond-scale temporal resolution for electrical activity and fNIRS's superior spatial resolution for hemodynamic responses. The content delves into practical methodological aspects of system integration, data acquisition, and signal processing, alongside advanced fusion strategies and analysis techniques. Furthermore, it addresses common troubleshooting challenges, optimization methods for enhanced performance, and a comparative validation of the hybrid system's efficacy against unimodal approaches through case studies and performance metrics. The article concludes by synthesizing key takeaways and outlining future directions for the technology in clinical and biomedical research applications.

Understanding the Core Principles: Why EEG and fNIRS are a Powerful Combination for BCI

In brain-computer interface (BCI) research, the quest for a more comprehensive understanding of brain activity has driven the adoption of multimodal neuroimaging. Electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS) have emerged as a particularly powerful combination, integrating distinct yet complementary information about neuroelectrical and neurovascular activities [1] [2]. EEG measures the brain's rapid electrical activity resulting from the summation of post-synaptic potentials of pyramidal neurons, providing millisecond-level temporal resolution but limited spatial precision [3] [4]. In contrast, fNIRS utilizes near-infrared light to monitor hemodynamic responses linked to neural metabolism, offering superior spatial localization but slower temporal resolution [1] [5]. This inherent complementarity enables researchers to capture a more complete picture of brain dynamics, making simultaneous EEG-fNIRS particularly valuable for BCI systems aimed at decoding diverse cognitive states and intents [6] [7].

Fundamental Signal Origins and Complementarity

Electroencephalography (EEG) Signal Origins

EEG captures electrical potentials generated primarily by the synchronized postsynaptic activity of cortical pyramidal neurons. When large populations of these neurons are active in synchrony, the resulting current flows create electrical fields measurable at the scalp surface [3]. The signal has excellent temporal resolution (milliseconds) but limited spatial resolution, because the skull, meninges, and other tissues between the cortex and the electrodes blur the electrical fields [2] [4]. This physical property makes EEG ideal for tracking rapid neural dynamics but makes precise localization of neural sources difficult.

Functional Near-Infrared Spectroscopy (fNIRS) Signal Origins

fNIRS relies on neurovascular coupling, the tight relationship between neural activity and subsequent hemodynamic changes. It employs near-infrared light (650-950 nm wavelengths) to measure concentration changes in oxygenated hemoglobin (HbO) and deoxygenated hemoglobin (HbR) in the cerebral cortex [1] [5]. Active brain regions experience increased oxygen demand, triggering a compensatory hemodynamic response that alters the relative concentrations of HbO and HbR – a phenomenon fNIRS detects through differential light absorption properties of these chromophores [1] [8]. This optical measurement provides good spatial resolution but is inherently limited by the slow nature of the hemodynamic response (typically peaking 4-6 seconds after neural activation) [3].
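The delayed peak of the hemodynamic response can be illustrated with a simple impulse-response model. The sketch below uses a single-gamma function, which is an assumption made here for illustration and is not taken from the cited sources; the `shape` and `scale` parameters are hypothetical values chosen so that the modelled peak falls in the 4-6 second window described above.

```python
import math

def gamma_hrf(t, shape=6.0, scale=1.0):
    """Single-gamma impulse response; its maximum lies at (shape - 1) * scale seconds."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (scale ** shape * math.gamma(shape))

def peak_time(dt=0.01, duration=30.0, **kwargs):
    """Locate the peak of the modelled response on a regular time grid."""
    times = [i * dt for i in range(int(duration / dt) + 1)]
    values = [gamma_hrf(t, **kwargs) for t in times]
    return times[values.index(max(values))]

hrf_peak_s = peak_time()
print(f"Modelled hemodynamic response peaks at {hrf_peak_s:.2f} s")
```

Convolving a task boxcar with such a function gives a rough expectation of when HbO changes should become visible after stimulus onset.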

Complementary Characteristics for BCI

The orthogonal nature of EEG and fNIRS signals creates powerful synergies for BCI applications. Their complementary properties span temporal and spatial domains, sensitivity to artifacts, and the types of brain activity they best capture [2]. The following table summarizes these complementary characteristics:

Table 1: Complementary Characteristics of EEG and fNIRS for BCI Applications

| Feature | EEG | fNIRS |
|---|---|---|
| Temporal Resolution | Millisecond precision [3] | Slower (0.1-0.5 Hz), peaks 4-6s post-stimulus [3] |
| Spatial Resolution | Limited (several cm) due to volume conduction [2] [3] | Better (<1 cm) for cortical mapping [3] |
| Signal Origin | Electrical activity (post-synaptic potentials) [3] | Hemodynamic response (HbO/HbR concentration changes) [1] |
| Artifact Sensitivity | Sensitive to electrical noise, muscle artifacts, eye movements [4] | Sensitive to scalp blood flow, motion artifacts, ambient light [1] |
| Measured Process | Direct neural electrical activity [9] | Metabolic demand following neural activity [9] |
| Ideal BCI Tasks | Rapid changes (P300, SSVEP, motor imagery onset) [5] [9] | Sustained cognitive states (mental arithmetic, workload, vigilance) [5] [9] |

The signaling pathways illustrating the relationship between neural activity and the measurable signals for each modality can be visualized as follows:

Diagram 1: Signal Origin Pathways

Quantitative Performance in BCI Applications

Research has consistently demonstrated that combining EEG and fNIRS yields superior BCI performance compared to either modality alone. The integration enhances classification accuracy across various paradigms, particularly for motor imagery and cognitive tasks.

Table 2: BCI Classification Performance of Unimodal vs. Multimodal Approaches

| Modality | Task | Key Features/Methods | Reported Performance | Citation |
|---|---|---|---|---|
| EEG-only | Motor Imagery (MI) | Event-related desynchronization | Lower accuracy compared to hybrid | [4] |
| fNIRS-only | Mental Arithmetic (MA) | HbO/HbR mean, slope, variance | Lower accuracy compared to hybrid | [4] |
| EEG-fNIRS Hybrid | MI & MA | Multi-domain features + multi-level progressive learning | 96.74% (MI), 98.42% (MA) accuracy | [6] [4] |
| EEG-fNIRS Hybrid | MI | Non-linear features + ensemble learning | 95.48% accuracy, 97.67% F1-score | [7] |
| EEG-fNIRS Hybrid | Mental Stress | Decision-level fusion (SVM probability combining) | +7.76% vs. EEG, +10.57% vs. fNIRS | [4] |

Experimental Protocols for Simultaneous EEG-fNIRS

Protocol 1: Motor Imagery and Mental Arithmetic Paradigm

This protocol adapts well-established tasks that elicit robust responses in both modalities, suitable for evaluating hybrid BCI performance [6] [4].

Materials and Setup:

- Simultaneous EEG-fNIRS system with synchronized data acquisition

- 64-channel EEG cap integrated with fNIRS optodes

- fNIRS source-detector pairs over motor and prefrontal cortices

- Visual stimulus presentation system

- Trigger synchronization interface (LSL or hardware triggers)

Procedure:

- Participant Preparation: Fit integrated EEG-fNIRS cap ensuring proper electrode impedance (<10 kΩ for EEG) and optical contact quality for fNIRS [3] [9].

- Baseline Recording: Acquire 5 minutes of resting-state data with eyes open for later signal normalization.

- Task Block Structure: Implement alternating task and rest blocks:

- Trial Marking: Implement event markers for both brief EEG events (stimulus onset) and extended fNIRS blocks (task period start/end) [3].

- Data Acquisition: Record continuous EEG (≥500 Hz sampling) and fNIRS (10-50 Hz sampling) with synchronized triggers.
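Because the two streams are sampled at very different rates, shared trigger times have to be converted to sample indices separately for each modality before epochs are cut. The following is a minimal sketch of that step, assuming the triggers are already expressed in seconds on a common clock; the `epoch` helper, the channel counts, the epoch windows, and the synthetic data are all hypothetical.

```python
import numpy as np

def epoch(data, fs, onsets_s, tmin, tmax):
    """Cut (channels, samples) data into (trials, channels, samples) epochs."""
    n = int(round((tmax - tmin) * fs))
    starts = [int(round((t + tmin) * fs)) for t in onsets_s]
    # Drop epochs that would run past the start or end of the recording.
    keep = [s for s in starts if s >= 0 and s + n <= data.shape[1]]
    return np.stack([data[:, s:s + n] for s in keep])

rng = np.random.default_rng(0)
eeg_fs, nirs_fs = 500.0, 10.0                        # sampling rates in Hz
eeg = rng.standard_normal((64, int(120 * eeg_fs)))   # synthetic 2-minute EEG recording
nirs = rng.standard_normal((36, int(120 * nirs_fs))) # synthetic 2-minute fNIRS recording
onsets_s = [20.0, 45.0, 70.0]                        # shared trigger times in seconds

# Short window for fast EEG events, long window for the slow hemodynamic response.
eeg_epochs = epoch(eeg, eeg_fs, onsets_s, tmin=-0.5, tmax=4.0)
nirs_epochs = epoch(nirs, nirs_fs, onsets_s, tmin=-2.0, tmax=15.0)
print(eeg_epochs.shape, nirs_epochs.shape)
```

Using one list of onsets for both calls keeps the trial order identical across modalities, which later fusion steps rely on.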

Data Analysis:

- EEG Processing: Bandpass filtering (0.5-40 Hz), artifact removal (ICA), time-locked epoch extraction, and feature extraction (power spectral densities, ERD/ERS) [4].

- fNIRS Processing: Conversion of optical density to HbO/HbR concentrations, bandpass filtering (0.01-0.2 Hz), motion artifact correction, and feature extraction (mean, slope, variance) [6] [5].

- Multimodal Fusion: Apply feature-level fusion (concatenating EEG and fNIRS features) or decision-level fusion (combining classifier outputs) [6] [7].
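The feature extraction and concatenation steps above can be sketched as follows. This is an illustrative outline rather than the pipeline of the cited studies: the function names, the 8-30 Hz band, the epoch dimensions, and the random data are assumptions, and the EEG feature is a plain FFT-based log band power.

```python
import numpy as np

def nirs_features(epochs, fs):
    """Mean, least-squares slope, and variance per channel.
    epochs: (trials, channels, samples) -> (trials, 3 * channels)."""
    t = np.arange(epochs.shape[-1]) / fs
    t_c = t - t.mean()
    mean = epochs.mean(axis=-1)
    var = epochs.var(axis=-1)
    slope = (epochs - mean[..., None]) @ t_c / (t_c @ t_c)
    return np.concatenate([mean, slope, var], axis=1)

def eeg_band_power(epochs, fs, band=(8.0, 30.0)):
    """Log of the mean spectral power inside `band`, per trial and channel."""
    freqs = np.fft.rfftfreq(epochs.shape[-1], d=1.0 / fs)
    psd = np.abs(np.fft.rfft(epochs, axis=-1)) ** 2
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return np.log(psd[..., mask].mean(axis=-1))

def zscore(x):
    """Standardize each feature column so neither modality dominates by scale."""
    return (x - x.mean(axis=0)) / (x.std(axis=0) + 1e-12)

rng = np.random.default_rng(0)
eeg_epochs = rng.standard_normal((40, 8, 1000))   # 40 trials, 8 channels, 2 s at 500 Hz
nirs_epochs = rng.standard_normal((40, 4, 100))   # 40 trials, 4 channels, 10 s at 10 Hz

# Feature-level fusion: one row per trial, EEG and fNIRS features side by side.
fused = np.hstack([zscore(eeg_band_power(eeg_epochs, 500.0)),
                   zscore(nirs_features(nirs_epochs, 10.0))])
print(fused.shape)
```

In a real analysis the standardization statistics would be estimated on training trials only and then applied to held-out trials, to avoid leaking information into the evaluation.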

The experimental workflow for a typical simultaneous recording session proceeds through distinct phases:

Diagram 2: Experimental Workflow

Protocol 2: Resting-State Functional Connectivity with Vigilance Monitoring

This protocol examines the relationship between electrophysiological and hemodynamic signals during resting states, particularly valuable for clinical populations.

Materials and Setup:

- High-density EEG-fNIRS systems (whole-head coverage)

- Polysomnography-capable amplifier for EEG vigilance monitoring

- Multiple short-distance fNIRS channels for superficial signal regression [10]

- Comfortable reclining chair with support for various body positions

Procedure:

- System Setup: Configure high-density montage (≥64 EEG channels, ≥64 fNIRS channels) with emphasis on frontal and default mode network regions.

- Position Variation: Acquire data in multiple body positions (supine, sitting, standing) to assess posture effects on global signals [10].

- Extended Recording: Collect 45 minutes of resting-state data with simultaneous EEG-fNIRS for vigilance fluctuation analysis.

- Vigilance Assessment: Continuously monitor EEG for vigilance shifts using standardized rating scales (e.g., VIGALL).

- Control Task: Include auditory oddball paradigm to assess evoked responses across body positions.

Data Analysis:

- Global Signal Analysis: Calculate fNIRS global signal as average across all channels, examine frequency content (0.01-0.1 Hz) [10].

- Vigilance Correlation: Compute correlation between EEG vigilance measures and fNIRS global signal amplitude.

- Functional Connectivity: Assess RSFC using correlation-based approaches between brain regions.
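The three analysis steps above can be sketched with a few lines of NumPy. The data here are synthetic, the `vigilance` series is a hypothetical EEG-derived index already resampled to the fNIRS rate, and regressing out the global signal is shown only as one possible option; the same `regress_out` helper could equally take a short-distance channel as its regressor.

```python
import numpy as np

def global_signal(hbo):
    """Average HbO time course across all channels. hbo: (channels, samples)."""
    return hbo.mean(axis=0)

def regress_out(hbo, regressor):
    """Remove each channel's least-squares projection onto the regressor."""
    r = regressor - regressor.mean()
    centered = hbo - hbo.mean(axis=1, keepdims=True)
    beta = centered @ r / (r @ r)
    return centered - np.outer(beta, r)

def connectivity(hbo):
    """Correlation-based functional connectivity matrix (channels x channels)."""
    return np.corrcoef(hbo)

rng = np.random.default_rng(0)
n_ch, n_s = 16, 3000
shared = rng.standard_normal(n_s)                      # synthetic systemic component
hbo = rng.standard_normal((n_ch, n_s)) + 2.0 * shared  # channels share the component
vigilance = 0.5 * shared + rng.standard_normal(n_s)    # hypothetical vigilance index

gs = global_signal(hbo)
r_vig = np.corrcoef(vigilance, gs)[0, 1]               # vigilance vs. global signal
fc_raw = connectivity(hbo)
fc_clean = connectivity(regress_out(hbo, gs))
print(f"vigilance-global correlation: {r_vig:.2f}")
```

Comparing `fc_raw` with `fc_clean` shows how strongly a shared systemic component can inflate channel-to-channel correlations.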

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful simultaneous EEG-fNIRS experimentation requires careful selection of equipment and materials that address the unique challenges of multimodal integration.

Table 3: Essential Materials for Simultaneous EEG-fNIRS Research

| Item | Specification/Type | Function/Purpose | Implementation Notes |
|---|---|---|---|
| Integrated Cap System | Customizable cap with 128-160 slits, black fabric | Hosts both EEG electrodes and fNIRS optodes, prevents light leakage | actiCAP with 128 slits recommended; dark fabric reduces optical reflection [3] |
| EEG Electrodes | Active electrode systems (e.g., g.SCARABEO) | Measures electrical potentials with high signal quality | Active electrodes reduce preparation time to ~10 minutes for 32 channels [9] |
| fNIRS Optodes | Laser diode or LED sources with sensitive detectors | Measures hemodynamic responses via light absorption | Source-detector distance of 20-30 mm standard; multiple wavelengths (e.g., 760, 850 nm) [1] [9] |
| Synchronization Interface | LSL protocol or shared hardware triggers | Ensures temporal alignment of EEG and fNIRS data streams | Critical for event-related analysis; LSL enables software synchronization [3] |
| Amplifier System | Hybrid EEG-fNIRS amplifiers (e.g., g.Nautilus with g.SENSOR fNIRS) | Simultaneously acquires both signal types with minimal interference | Integrated systems simplify setup and improve synchronization precision [9] |
| Montage Design Software | MATLAB-based tools (e.g., ArrayDesigner) | Plans optimal placement of competing sensors | Determines trade-offs between EEG and fNIRS coverage in target regions [3] |

Integration Methodologies and Fusion Approaches

EEG and fNIRS data can be integrated at several levels, each with distinct advantages for BCI applications. The three primary fusion strategies are:

Data-Level Fusion: Direct combination of raw or minimally processed signals, though this approach is computationally intensive and less commonly used due to the fundamentally different nature of EEG and fNIRS signals [4].

Feature-Level Fusion: This dominant approach involves extracting relevant features from each modality then combining them into a unified feature vector for classification. Methods range from simple concatenation to advanced techniques like multi-domain feature extraction with optimization algorithms [6]. Recent advances include multi-level progressive learning frameworks that achieve >96% accuracy in both motor imagery and mental arithmetic tasks [6] [4].

Decision-Level Fusion: Separate classification of each modality followed by combination of decisions through voting schemes, weighted averaging, or meta-classifiers. This approach provides robustness against modality-specific artifacts and has demonstrated significant improvements (+7-10%) over unimodal approaches in mental stress detection [4].
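As a concrete illustration of decision-level fusion, the sketch below combines class probabilities from two independently trained classifiers by weighted averaging. The probability values and the weight `w_eeg` are made up for the example; in practice the weight would be tuned on validation data, and the cited studies may use different combination rules.

```python
import numpy as np

def fuse_probabilities(p_eeg, p_nirs, w_eeg=0.5):
    """Weighted average of per-trial class probabilities from two classifiers."""
    p = w_eeg * np.asarray(p_eeg) + (1.0 - w_eeg) * np.asarray(p_nirs)
    return p / p.sum(axis=1, keepdims=True)

# Hypothetical classifier outputs: rows are trials, columns are classes.
p_eeg = np.array([[0.70, 0.30], [0.45, 0.55], [0.20, 0.80]])
p_nirs = np.array([[0.60, 0.40], [0.80, 0.20], [0.35, 0.65]])

fused = fuse_probabilities(p_eeg, p_nirs, w_eeg=0.6)
labels = fused.argmax(axis=1)
print(labels)
```

In the second trial the two modalities disagree, and the more confident fNIRS output decides the fused label even though EEG carries the larger weight. This is the kind of robustness to a weak or artifact-contaminated modality that decision-level fusion is meant to provide.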

The relationship between these fusion approaches and their respective advantages can guide selection based on specific BCI requirements:

Diagram 3: Multimodal Fusion Approaches

EEG and fNIRS provide fundamentally distinct yet highly complementary windows into brain function, with electrical and hemodynamic signals offering temporally and spatially orthogonal information. Their successful integration in simultaneous setups requires careful attention to experimental design, hardware integration, and data fusion methodologies. The protocols and frameworks presented here provide a foundation for leveraging this multimodal approach in BCI research, enabling more robust and comprehensive decoding of brain states and intents. As hardware integration advances and analysis techniques grow more sophisticated, simultaneous EEG-fNIRS is poised to become an indispensable tool in neuroscience and neuroengineering.

The development of brain-computer interfaces (BCIs) necessitates neuroimaging techniques that can accurately decode neural activity with high precision in both time and space. Electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS) have emerged as leading non-invasive modalities, each with distinct resolution profiles that present researchers with a fundamental trade-off. EEG measures the brain's electrical activity via electrodes placed on the scalp, offering millisecond-level temporal resolution but limited spatial accuracy due to the dispersion of electrical signals as they pass through the skull and scalp [11]. In contrast, fNIRS monitors cerebral hemodynamic responses by measuring changes in oxygenated and deoxygenated hemoglobin using near-infrared light, providing superior spatial resolution for surface cortical areas but constrained by a slower temporal response on the scale of seconds [11] [12].

This application note examines the temporal versus spatial resolution trade-offs between EEG and fNIRS within the context of simultaneous setup for BCI research. The complementary nature of these modalities enables a hybrid approach that mitigates their individual limitations through strategic integration [13]. We provide a comprehensive analysis of their comparative strengths, methodological protocols for simultaneous implementation, and visualization of integrated signaling pathways to guide researchers in optimizing BCI system design.

Technical Comparison of EEG and fNIRS

Fundamental Principles and Signal Characteristics

Electroencephalography (EEG) captures postsynaptic potentials generated primarily by pyramidal cells in the cerebral cortex. When tens of thousands of these neurons fire synchronously, with dendritic trunks oriented parallel to each other and perpendicular to the cortical surface, their electrical signals summate sufficiently to be detected by scalp electrodes [12]. These signals represent large-scale neural oscillatory activity divided into characteristic frequency bands: theta (4-7 Hz), alpha (8-14 Hz), beta (15-25 Hz), and gamma (>25 Hz) [12].
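To make the frequency bands concrete, the following sketch estimates the power in the theta, alpha, and beta ranges listed above for a synthetic signal. It is a simple windowed-FFT estimate under assumed parameters (500 Hz sampling, a 4 s segment, a test signal dominated by a 10 Hz component); the open-ended gamma band is left out because it has no upper limit in the text.

```python
import numpy as np

# Band limits in Hz, taken from the ranges given in the text.
BANDS = {"theta": (4, 7), "alpha": (8, 14), "beta": (15, 25)}

def band_powers(signal, fs):
    """Sum of Hann-windowed FFT power inside each band."""
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(signal * np.hanning(signal.size))) ** 2
    return {name: power[(freqs >= lo) & (freqs <= hi)].sum()
            for name, (lo, hi) in BANDS.items()}

fs = 500.0
t = np.arange(0, 4.0, 1.0 / fs)
# Synthetic "EEG": strong 10 Hz (alpha) plus weaker 20 Hz (beta) oscillation.
signal = np.sin(2 * np.pi * 10 * t) + 0.2 * np.sin(2 * np.pi * 20 * t)

powers = band_powers(signal, fs)
dominant = max(powers, key=powers.get)
print(dominant)
```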

Functional Near-Infrared Spectroscopy (fNIRS) employs near-infrared light (600-1000 nm wavelength) to measure hemodynamic responses coupled with neural activity. Light at different wavelengths is introduced into the scalp via optical sources, and the attenuated light that diffusely reflects back to detectors is used to compute concentration changes in oxygenated hemoglobin (HbO) and deoxygenated hemoglobin (HbR) based on the Modified Beer-Lambert Law [12] [5]. These hemodynamic changes serve as indirect markers of brain activity through the mechanism of neurovascular coupling [12].
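The Modified Beer-Lambert Law relates the change in optical density at each wavelength to the concentration changes through ΔOD(λ) = [ε_HbO(λ)·ΔHbO + ε_HbR(λ)·ΔHbR] · d · DPF(λ), so two wavelengths give a 2×2 linear system. The sketch below solves that system. The extinction coefficients are approximate illustrative values and the differential pathlength factors are placeholders; both are assumptions and should be replaced with tabulated values for the actual wavelengths and population.

```python
import numpy as np

# Rows: 760 nm, 850 nm. Columns: HbO, HbR. Units: 1/(mM*cm).
# Approximate, illustrative values only (assumption): HbR absorbs more
# strongly at 760 nm, HbO more strongly at 850 nm.
EXT = np.array([[1.35, 3.57],
                [2.44, 1.59]])

def optical_density(intensity):
    """Change in optical density relative to each channel's mean intensity."""
    return -np.log(intensity / intensity.mean(axis=-1, keepdims=True))

def mbll(delta_od, distance_cm=3.0, dpf=(6.0, 6.0)):
    """Solve the Modified Beer-Lambert Law for one source-detector pair.
    delta_od: (2, samples) at 760/850 nm -> (2, samples) of [dHbO, dHbR] in mM."""
    path = distance_cm * np.asarray(dpf)[:, None]   # effective path length per wavelength
    return np.linalg.solve(EXT, delta_od / path)

# Round trip: simulate optical density changes from known concentrations.
true_conc = np.array([[0.001, 0.002],       # dHbO at two time points (mM)
                      [-0.0003, -0.0005]])  # dHbR at two time points (mM)
delta_od = (EXT @ true_conc) * (3.0 * 6.0)
recovered = mbll(delta_od)
print(np.round(recovered, 6))
```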

Comparative Analysis of Resolution Characteristics

Table 1: Quantitative Comparison of EEG and fNIRS Resolution Profiles

| Parameter | EEG | fNIRS |
|---|---|---|
| Temporal Resolution | Milliseconds [11] | Seconds (typically 2-6 second delay) [11] [14] |
| Spatial Resolution | Centimeter-level [11] | Moderate (better than EEG, limited to cortex) [11] |
| Depth of Measurement | Cortical surface [11] | Outer cortex (approximately 1-2.5 cm deep) [11] |
| Signal Source | Postsynaptic potentials in cortical neurons [11] | Hemodynamic response (blood oxygenation changes) [11] |
| Neurovascular Coupling | Direct neural electrical activity [12] | Indirect metabolic-hemodynamic response [12] |
| Movement Artifact Sensitivity | High susceptibility [11] [15] | Relatively robust tolerance [11] [15] |

The fundamental trade-off between temporal and spatial resolution emerges from the different physiological phenomena each modality captures. EEG provides a direct view of neural dynamics with exceptional temporal fidelity, making it ideal for tracking rapid cognitive processes like stimulus perception and decision onset [11]. However, its spatial resolution is limited due to the blurring effect of the skull and scalp on electrical fields [11] [12].

Conversely, fNIRS offers superior spatial localization for surface cortical areas, particularly the prefrontal region, but is constrained by the inherent delay of the hemodynamic response, which typically peaks 4-6 seconds after neural activation [11] [15]. This temporal lag presents challenges for real-time BCI applications requiring immediate feedback [15].

Neurophysiological Basis for Integration

The Neurovascular Coupling Mechanism

The theoretical foundation for integrating EEG and fNIRS lies in the physiological phenomenon of neurovascular coupling - the intimate relationship between neural electrical activity and subsequent hemodynamic responses [12]. When neurons within a specific brain region activate, they trigger a complex cascade of metabolic and vascular events that increase local cerebral blood flow to deliver oxygen and nutrients, resulting in measurable fluctuations in hemoglobin concentrations [12].

This coupling forms the basis for connecting EEG's direct measurement of electrical activity with fNIRS's indirect measurement of hemodynamic changes, providing complementary information about the same underlying neural events [12]. Importantly, impairments in neurovascular coupling have been associated with various neurological conditions, including Alzheimer's disease and stroke, making simultaneous measurement valuable for both basic research and clinical applications [12].

Signaling Pathway in Simultaneous EEG-fNIRS

The following diagram illustrates the integrated signaling pathway from neural activity to measured signals in simultaneous EEG-fNIRS experimentation:

Signaling Pathway from Neural Activity to Measured Signals

This pathway visualization illustrates the parallel processes by which underlying neural activity generates measurable EEG and fNIRS signals. The direct electrical pathway (blue) enables millisecond temporal resolution, while the indirect hemodynamic pathway (red) provides superior spatial localization at the cost of slower response time [12].

Experimental Protocols for Simultaneous EEG-fNIRS

System Setup and Sensor Placement

Equipment Configuration:

- Utilize integrated EEG-fNIRS systems or synchronized separate systems with trigger synchronization [11]

- For EEG: Employ systems with 16+ channels, sampling rate ≥500 Hz (e.g., BrainAmp DC EEG system) [15]

- For fNIRS: Implement continuous-wave systems with dual-wavelength sources (e.g., 760 nm and 850 nm) and photodetectors [12] [15]

- Ensure emitter-detector distance of 30 mm for optimal sensitivity to cerebral tissues [16]

Sensor Placement Protocol:

- Use the international 10-20 or 10-5 system for consistent positioning [11] [16]

- For motor imagery tasks: Focus on C3, C4 positions with coverage of prefrontal cortex [15]

- For cognitive tasks: Emphasize prefrontal cortex coverage [5]

- Implement high-density EEG caps with pre-defined fNIRS-compatible openings to avoid physical interference [11]

- Use optode holders that avoid electrode contact points to prevent signal contamination [11]

Data Acquisition and Preprocessing Workflow

Table 2: Research Reagent Solutions for EEG-fNIRS Experimentation

| Category | Specific Solution/Equipment | Function/Purpose |
|---|---|---|
| fNIRS Hardware | NIRScout System (NIRx) [16] | Continuous-wave fNIRS data acquisition |
| EEG Hardware | BrainAmp DC EEG System (Brain Products) [15] | High-quality EEG signal acquisition |
| Sensor Integration | Integrated EEG-fNIRS Caps [11] | Compatible sensor placement minimizing interference |
| Synchronization | TTL Pulse Systems/Parallel Port Triggers [11] | Temporal alignment of EEG and fNIRS data streams |
| fNIRS Processing | Modified Beer-Lambert Law [12] [5] | Conversion of optical density to hemoglobin concentration changes |
| Artifact Removal | Motion Correction Algorithms [11] | Reduction of movement artifacts in both modalities |
| Advanced Analysis | Joint Independent Component Analysis (jICA) [11] | Multimodal data fusion and feature extraction |

The experimental workflow for simultaneous acquisition involves:

Simultaneous EEG-fNIRS Experimental Workflow

Data Acquisition Protocol:

- Record EEG signals at a sampling rate of 250-500 Hz or higher [15]

- Record fNIRS signals at 10-12.5 Hz sampling rate [14] [16]

- Implement synchronized task paradigms with randomized trial sequences

- For motor execution/imagery: Use block designs with 20s rest, 5s task periods [15]

- Include sufficient inter-trial intervals to allow hemodynamic response return to baseline

Preprocessing Guidelines:

- EEG Processing: Apply bandpass filtering (0.5-40 Hz), remove ocular and motion artifacts using independent component analysis (ICA) [11]

- fNIRS Processing: Convert raw intensity signals to optical density, then to HbO/HbR concentrations using Modified Beer-Lambert Law [12] [5]. Apply bandpass filtering (0.01-0.2 Hz) to remove physiological noise [5]

- Temporal Alignment: Address inherent fNIRS hemodynamic delay (typically 2-6 seconds) through temporal correction algorithms [14]
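One simple way to approach the temporal alignment step is to estimate the lag at which the fNIRS signal best follows an EEG-derived time course and then shift the two series accordingly. The sketch below does this by cross-correlation on synthetic data. It assumes a positive, purely delayed relationship between a hypothetical EEG band-power envelope and HbO, both already resampled to the fNIRS rate; real hemodynamic responses are smoothed as well as delayed, so this is a rough illustration and not the correction algorithm of [14].

```python
import numpy as np

def estimate_lag_s(eeg_env, hbo, fs, max_lag_s=10.0):
    """Lag in seconds at which HbO correlates best with the earlier EEG envelope."""
    x = (eeg_env - eeg_env.mean()) / eeg_env.std()
    y = (hbo - hbo.mean()) / hbo.std()
    n = x.size
    lags = np.arange(0, int(max_lag_s * fs) + 1)
    # Correlate EEG at time t with HbO at time t + lag, for each candidate lag.
    corr = [np.dot(x[:n - k], y[k:]) / (n - k) for k in lags]
    return lags[int(np.argmax(corr))] / fs

fs = 10.0                                    # common sampling rate in Hz
rng = np.random.default_rng(1)
eeg_env = rng.standard_normal(3000)          # hypothetical EEG band-power envelope
hbo = np.roll(eeg_env, 50) + 0.5 * rng.standard_normal(3000)  # delayed by 5 s, plus noise

lag_s = estimate_lag_s(eeg_env, hbo, fs)
shift = int(lag_s * fs)
aligned_eeg = eeg_env[:eeg_env.size - shift]  # EEG samples that have a delayed partner
aligned_hbo = hbo[shift:]                     # HbO shifted back by the estimated lag
print(lag_s)
```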

Application in Brain-Computer Interface Research

Task-Specific Implementation Protocols

Motor Imagery BCI Protocol:

- Task Design: Implement left vs. right hand motor imagery trials (25 each, randomized)

- Visual Cueing: Present directional arrows (5s duration) following rest period (20s) [15]

- Sensor Placement: Focus on C3, C4 positions over motor cortex with additional prefrontal coverage [15]

- Feature Extraction:

- Classification: Utilize support vector machines (SVM) with early temporal features for rapid BCI response [15]
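The task design and cueing steps of this protocol can be sketched as a randomized trial schedule. The helper below uses the trial counts and durations stated above as defaults; the function name, dictionary keys, and fixed random seed are illustrative choices.

```python
import random

def build_schedule(n_per_class=25, rest_s=20.0, task_s=5.0, seed=0):
    """Randomized left/right motor imagery trials, each a rest period followed by a cue."""
    labels = ["left"] * n_per_class + ["right"] * n_per_class
    random.Random(seed).shuffle(labels)      # reproducible randomization
    schedule, t = [], 0.0
    for label in labels:
        cue_onset = t + rest_s               # the cue follows the rest period
        schedule.append({
            "label": label,
            "rest_onset_s": t,
            "cue_onset_s": cue_onset,
            "cue_offset_s": cue_onset + task_s,
        })
        t = cue_onset + task_s
    return schedule

schedule = build_schedule()
total_min = schedule[-1]["cue_offset_s"] / 60.0
print(f"{len(schedule)} trials, {total_min:.1f} minutes")
```

The cue onset and offset times can be sent as event markers to both recording systems, so that brief EEG events and extended fNIRS blocks are referenced to the same schedule.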

Mental Workload/Cognitive BCI Protocol:

- Task Design: Implement n-back tasks (0-back vs. 2-back) or mental arithmetic vs. baseline [16]

- Sensor Placement: Emphasize prefrontal cortex coverage [5]

- Feature Extraction:

- Classification: Apply linear discriminant analysis (LDA) or deep learning approaches [5] [17]

Advanced Integration Methodologies

Spatial-Temporal Alignment Network (STA-Net): Advanced deep learning approaches address the inherent temporal misalignment between EEG and fNIRS signals. The STA-Net architecture includes:

- fNIRS-guided Spatial Alignment (FGSA): Uses fNIRS spatial information to identify sensitive brain regions and weight corresponding EEG channels [14]

- EEG-guided Temporal Alignment (EGTA): Generates temporally aligned fNIRS signals using cross-attention mechanisms to compensate for hemodynamic delay [14]

- This approach has demonstrated classification accuracies of 69.65% for motor imagery, 85.14% for mental arithmetic, and 79.03% for word generation tasks [14]

Decision-Level Fusion with Uncertainty Modeling:

- Implement Dirichlet distribution parameter estimation to model uncertainty in unimodal decisions [17]

- Apply Dempster-Shafer Theory for two-layer reasoning to fuse evidence from both modalities [17]

- This approach has achieved 83.26% accuracy in motor imagery classification, outperforming single-modality implementations [17]
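The core idea of evidence-based fusion can be illustrated with Dempster's rule of combination applied to two unimodal "opinions", each made up of per-class belief masses and one uncertainty mass derived from Dirichlet parameters. The sketch below is a generic textbook-style illustration and not the two-layer reasoning scheme of [17]; the evidence values are invented, and the mapping from evidence to belief (Dirichlet parameter = evidence + 1) is a common convention assumed here.

```python
def opinion_from_evidence(evidence):
    """Map non-negative class evidence to belief masses and an uncertainty mass.
    Dirichlet parameters are alpha_k = evidence_k + 1 (assumed convention)."""
    k = len(evidence)
    strength = sum(evidence) + k             # sum of Dirichlet parameters
    return [e / strength for e in evidence], k / strength

def dempster_combine(b1, u1, b2, u2):
    """Dempster's rule for masses on single classes plus the whole frame."""
    conflict = sum(b1[i] * b2[j]
                   for i in range(len(b1)) for j in range(len(b2)) if i != j)
    scale = 1.0 / (1.0 - conflict)           # renormalize after discarding conflict
    belief = [scale * (b1[i] * b2[i] + b1[i] * u2 + b2[i] * u1)
              for i in range(len(b1))]
    return belief, scale * u1 * u2

# Hypothetical evidence for (left, right) from each unimodal network.
b_eeg, u_eeg = opinion_from_evidence([8.0, 2.0])
b_nirs, u_nirs = opinion_from_evidence([3.0, 1.0])

belief, uncertainty = dempster_combine(b_eeg, u_eeg, b_nirs, u_nirs)
print([round(b, 3) for b in belief], round(uncertainty, 3))
```

When both modalities support the same class, the fused uncertainty falls below that of either modality alone, which is the behavior that motivates modeling uncertainty before fusing decisions.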

The integration of EEG and fNIRS technologies presents a powerful approach to overcoming the fundamental trade-off between temporal and spatial resolution in non-invasive brain imaging. Through strategic multimodal fusion, researchers can leverage EEG's millisecond-level temporal resolution alongside fNIRS's superior spatial localization capabilities, enabling more robust and accurate BCIs. The experimental protocols and methodological considerations outlined in this application note provide a framework for optimizing simultaneous EEG-fNIRS setups, with particular relevance for motor imagery and cognitive task classification in BCI research. As integration methodologies continue to advance, particularly through deep learning approaches that explicitly address spatial-temporal alignment challenges, hybrid EEG-fNIRS systems are poised to significantly enhance the performance and practical applicability of next-generation brain-computer interfaces.

The integration of Electroencephalography (EEG) and functional Near-Infrared Spectroscopy (fNIRS) represents a transformative approach in brain-computer interface (BCI) research, offering a more comprehensive view of brain function than either modality could provide alone [18]. This synergistic framework capitalizes on the complementary strengths of both techniques: EEG provides excellent temporal resolution on the millisecond scale, capturing rapid neural electrical activity, while fNIRS offers valuable spatial information regarding brain activation by localizing hemodynamic responses associated with neuronal activity [18] [2]. Simultaneous EEG-fNIRS recordings bridge a critical gap in neuroimaging, enabling researchers to correlate the fast dynamics of electrophysiological activity with the slower hemodynamic changes that reflect metabolic demands of neural processing [19].

The fundamental synergy stems from measuring different aspects of brain function: EEG records electrical potentials generated by synchronized neuronal firing, while fNIRS measures concentration changes in oxygenated (HbO) and deoxygenated hemoglobin (HbR) in the blood, which serve as proxies for neural metabolic activity [20]. This complementary relationship makes the combined approach particularly valuable for investigating complex cognitive processes, developing more robust BCIs, and advancing clinical applications in neurology and psychiatry [2] [21].

Fundamental Principles and Complementary Nature

Technical Basis of EEG and fNIRS

Electroencephalography (EEG) detects electrical activity generated by the synchronized firing of neuronal populations beneath the scalp. With a temporal resolution in the millisecond range, EEG excels at tracking the rapid dynamics of brain activity but suffers from limited spatial resolution due to signal attenuation and smearing as electrical potentials pass through various tissues before reaching scalp electrodes [2] [19].

Functional Near-Infrared Spectroscopy (fNIRS) employs near-infrared light to measure cortical brain activity by detecting hemodynamic responses associated with neuronal activity. Light in the 700-900 nm range is shone through the scalp, and detectors measure backscattered light, allowing calculation of concentration changes in oxygenated and deoxygenated hemoglobin based on differential absorption characteristics [20]. fNIRS localizes activity better than EEG, although its sensitivity is confined to roughly the outer 2 cm of tissue beneath the scalp, and its temporal resolution is limited by the inherent speed of the hemodynamic response, which typically unfolds over seconds [19].

The Synergistic Relationship

The complementary characteristics of EEG and fNIRS create a powerful synergistic relationship for studying brain function, as illustrated in the following diagram:

This synergy enables researchers to investigate the relationship between electrical brain activity and subsequent metabolic responses, providing a more complete picture of neurovascular coupling—the fundamental process linking neural activity to cerebral blood flow changes [2]. The combined approach is particularly advantageous for BCI applications, where understanding both the timing and spatial distribution of brain activity is essential for accurate classification of user intent [18] [17].

Applications in Brain-Computer Interface Research

Motor Imagery and Execution

Motor imagery (MI) represents one of the most widely investigated applications for hybrid EEG-fNIRS BCI systems. Research has demonstrated that combining temporal EEG patterns with spatial fNIRS activation profiles significantly improves classification accuracy of imagined movements. A recent study utilizing deep learning and evidence theory for EEG-fNIRS signal integration achieved an average accuracy of 83.26% for MI classification, representing a 3.78% improvement over state-of-the-art unimodal methods [17]. This enhanced performance stems from the complementary information provided by each modality: EEG captures event-related desynchronization/synchronization during motor imagery, while fNIRS detects hemodynamic changes in the motor cortex [18].

Cognitive Monitoring and Semantic Decoding

Hybrid EEG-fNIRS systems show considerable promise for decoding semantic information during cognitive tasks. Recent research has successfully differentiated between semantic categories (animals vs. tools) during silent naming and sensory-based imagery tasks using simultaneous EEG-fNIRS recordings [19]. The experimental paradigm included:

- Silent naming: Participants silently named displayed objects

- Visual imagery: Mental visualization of objects

- Auditory imagery: Imagination of sounds associated with objects

- Tactile imagery: Imagination of touching objects

This approach to semantic neural decoding could enable more intuitive BCIs that communicate conceptual meaning directly, bypassing the character-by-character spelling used in current systems [19].

Clinical and Diagnostic Applications

The integration of EEG and fNIRS has demonstrated significant potential across various clinical domains, leveraging their complementary strengths for improved diagnosis and monitoring:

Table: Clinical Applications of EEG-fNIRS Integration

| Clinical Domain | Application | Benefits of EEG-fNIRS Integration |
|---|---|---|
| Disorders of Consciousness | Detecting neural signatures of cognitive processes | Combines EEG's sensitivity to transient changes with fNIRS's spatial localization of active regions [21] [22] |
| Stroke Rehabilitation | Motor function recovery training | Provides comprehensive feedback on both electrical and hemodynamic responses during therapy [22] [23] |
| ADHD | Training inhibitory control and working memory | Enables monitoring of both rapid neural oscillations and sustained prefrontal activation [2] [22] |
| Epilepsy | Seizure focus localization and monitoring | Correlates ictal electrical discharges with localized hemodynamic changes [2] |
| Anesthesia Monitoring | Depth of anesthesia assessment | Combines EEG-based anesthetic depth indicators with fNIRS cerebral oxygenation monitoring [2] |

Experimental Protocols and Methodologies

Protocol 1: Semantic Category Decoding

This protocol outlines the methodology for differentiating between semantic categories (animals vs. tools) using simultaneous EEG-fNIRS recordings during various mental imagery tasks [19].

Participant Preparation and Equipment

- Participants: Recruit right-handed native speakers (for language-dependent tasks) with normal or corrected-to-normal vision. Sample size: 12 participants for combined EEG-fNIRS recording.

- EEG System: 64-channel system with sampling rate ≥1000 Hz, electrode impedances maintained below 5 kΩ.

- fNIRS System: Continuous-wave system with laser sources, avalanche photodiode detectors, sampling rate ≥62.5 Hz, measuring HbO and HbR concentration changes.

- Synchronization: Use photoelectric marking or hardware synchronization to align EEG and fNIRS data streams with sub-second precision.

Stimuli and Task Design

- Stimulus Set: 18 animals and 18 tools selected for recognizability and suitability across mental tasks. Images converted to grayscale, cropped to 400×400 pixels, and presented against white background.

- Task Conditions:

  - Silent Naming: Participants silently name the displayed object in their mind.

  - Visual Imagery: Participants visualize the object in their mind.

  - Auditory Imagery: Participants imagine sounds associated with the object.

  - Tactile Imagery: Participants imagine the feeling of touching the object.

- Trial Structure: Each trial consists of (1) stimulus presentation (2-3 seconds), (2) a mental task period (3-5 seconds), and (3) a rest interval (at least 5 seconds). Task order is randomized across blocks.

Data Acquisition Procedure

- Apply EEG cap and fNIRS probes according to manufacturer specifications, ensuring proper contact and signal quality.

- Position fNIRS sources and detectors to cover frontal, temporal, and parietal regions based on the hypothesized neural correlates of semantic processing.

- Conduct a brief practice session to familiarize participants with tasks.

- Record simultaneous baseline activity during rest condition.

- Present stimuli in randomized order across task conditions, with adequate rest periods between blocks to minimize fatigue.

- Monitor data quality throughout acquisition, checking for artifacts and signal integrity.

[Figure: experimental workflow for the semantic category decoding protocol]

Protocol 2: Conflict Processing Using Stroop Task

This protocol details the simultaneous EEG-fNIRS recording during Stroop task performance, a classic paradigm for investigating conflict monitoring and processing [24].

Experimental Setup

- Participants: 21 healthy volunteers with normal color vision, no history of neurological or psychiatric disorders.

- EEG Configuration: 34-channel recording according to 10-20 system, sampling rate 1000 Hz, band-pass filter 0.05-100 Hz, 50 Hz notch filter.

- fNIRS Configuration: 20-channel system (4 sources, 16 detectors) covering frontal and parietal lobes, sampling rate 100 Hz, measuring Δ[HbO] and Δ[Hb] at 785 nm and 850 nm wavelengths.

- Stimuli: Chinese color-word matching task with neutral and incongruent conditions.

Task Procedure

- Neutral Stimuli: Upper character is a non-color word presented in colored ink; lower character is a color word presented in white.

- Incongruent Stimuli: Upper character is a color word presented in a different colored ink.

- Task: Participants judge whether the color of the upper character matches the meaning of the lower character.

- Response: Left mouse button for match, right button for non-match.

- Block Design: 4 blocks (neutral, neutral, incongruent, incongruent), each with 16 trials.

- Trial Structure: Stimulus presentation (2 seconds) followed by inter-trial interval (5 seconds).

- Timing: 30-second rest periods before first block and after last block.
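The timing parameters above fix the minimum recording length, which is useful when budgeting storage and checking that both data streams have the expected number of samples. The sketch below is a simple worked calculation; it assumes no additional rest between blocks, because the protocol as summarized here does not specify one, and the function name is hypothetical.

```python
def stroop_session_duration_s(n_blocks=4, trials_per_block=16,
                              stimulus_s=2.0, iti_s=5.0,
                              pre_rest_s=30.0, post_rest_s=30.0):
    """Minimum session length in seconds, assuming no inter-block rest."""
    task_s = n_blocks * trials_per_block * (stimulus_s + iti_s)
    return pre_rest_s + task_s + post_rest_s


duration_s = stroop_session_duration_s()

# Expected sample counts at the sampling rates listed in the setup.
eeg_samples = int(duration_s * 1000)   # EEG at 1000 Hz
fnirs_samples = int(duration_s * 100)  # fNIRS at 100 Hz
print(duration_s, eeg_samples, fnirs_samples)
```

A large mismatch between these expected counts and the recorded file lengths is a quick indicator of dropped samples or a missed start trigger.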

Data Quality Assurance

- Maintain EEG electrode impedances below 5 kΩ throughout recording.

- Verify fNIRS signal quality by checking raw intensity levels before formal experiment.

- Use photoelectric marking system to synchronize stimulus presentation with data acquisition.

- Monitor for movement artifacts and provide reminders to minimize motion.

Data Processing and Fusion Approaches

The integration of EEG and fNIRS data can be accomplished at multiple levels, each with distinct advantages:

Table: Data Fusion Approaches for EEG-fNIRS Integration

| Fusion Level | Description | Methods | Applications |

|---|---|---|---|

| Hardware Level | Physical integration of EEG electrodes and fNIRS optodes | Customized helmets, 3D-printed mounts, elastic caps with integrated components [2] | All simultaneous recording paradigms |

| Data Level | Direct combination of raw or preprocessed signals | Common average referencing, joint filtering, tensor-based fusion [18] | Signal quality enhancement, artifact removal |

| Feature Level | Concatenation or transformation of extracted features | STFT for EEG time-frequency images + fNIRS spectral entropy [18] | Motor imagery classification, cognitive state monitoring |

| Decision Level | Fusion of classification outcomes from separate models | Dempster-Shafer theory, weighted voting, Bayesian fusion [17] | Semantic decoding, conflict processing, clinical diagnosis |
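To make the decision-level row concrete, the following minimal sketch fuses the class probabilities of two separately trained unimodal classifiers by weighted voting. The weight `w_eeg` and the example probabilities are hypothetical; in practice the weight would be tuned on validation data.

```python
def fuse_weighted(p_eeg, p_fnirs, w_eeg=0.5):
    """Weighted average of per-class probabilities from two classifiers."""
    if len(p_eeg) != len(p_fnirs):
        raise ValueError("both classifiers must score the same classes")
    if not 0.0 <= w_eeg <= 1.0:
        raise ValueError("w_eeg must lie in [0, 1]")
    fused = [w_eeg * a + (1.0 - w_eeg) * b for a, b in zip(p_eeg, p_fnirs)]
    total = sum(fused)
    return [p / total for p in fused]  # renormalize to sum to 1


def predict(probs):
    """Index of the most probable class."""
    return max(range(len(probs)), key=probs.__getitem__)


# Hypothetical outputs: the EEG model favors class 0, the fNIRS model class 1.
p_eeg = [0.6, 0.4]
p_fnirs = [0.2, 0.8]
print(predict(fuse_weighted(p_eeg, p_fnirs, w_eeg=0.5)))
print(predict(fuse_weighted(p_eeg, p_fnirs, w_eeg=0.9)))
```

Weighted voting ignores how uncertain each classifier is on a given trial, which is the gap that evidence-theoretic fusion aims to close.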

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful implementation of simultaneous EEG-fNIRS experiments requires careful selection of equipment and materials. The following table details key components and their functions:

Table: Essential Materials for Simultaneous EEG-fNIRS Research

| Category | Item | Specifications | Function/Purpose |

|---|---|---|---|

| EEG System | Amplifier and Electrodes | 34+ channels, sampling rate ≥1000 Hz, impedance <5 kΩ [24] | Records electrical brain activity with high temporal resolution |

| fNIRS System | Sources and Detectors | 2 wavelengths (785nm, 850nm), sampling rate ≥62.5 Hz [20] | Measures hemodynamic responses via light absorption |

| Integration Hardware | Custom Helmet | 3D-printed or thermoplastic custom fit [2] | Maintains precise, stable positioning of both modalities |

| Synchronization | Photoelectric Marker | Light-sensitive trigger device | Ensures temporal alignment of EEG and fNIRS data |

| Software | Analysis Package | MATLAB, Python, NIRS-SPM, Homer2 | Preprocessing, feature extraction, data fusion |

| Stimulus Presentation | Display Software | PsychToolbox, Presentation, E-Prime | Controls experimental paradigm timing |

| Quality Assurance | Impedance Checker | Electrode tester <5 kΩ | Verifies EEG signal quality at recording start |

Advanced Data Analysis and Fusion Methodologies

Multimodal DenseNet Fusion (MDNF) Model

Recent advances in deep learning have yielded sophisticated frameworks for integrating EEG and fNIRS data. The Multimodal DenseNet Fusion (MDNF) model transforms EEG data into two-dimensional time-frequency images using the Short-Time Fourier Transform (STFT), then applies transfer learning to extract discriminative features, which are integrated with fNIRS-derived spectral entropy features [18]. This architecture bridges the gap between the temporal richness of EEG and the spatial specificity of fNIRS, and it is reported to deliver superior classification accuracy across various cognitive and motor imagery tasks [18].

The MDNF implementation involves:

- EEG Transformation: Convert raw EEG signals to 2D spectrogram images via STFT

- Feature Extraction: Utilize pre-trained DenseNet for automated feature learning from EEG spectrograms

- fNIRS Processing: Compute spectral entropy features from hemodynamic signals

- Feature Fusion: Integrate EEG and fNIRS features in a unified representation space

- Classification: Employ fully connected layers for task-specific prediction
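The fNIRS processing step can be illustrated with a small spectral entropy calculation. This is a sketch under stated assumptions rather than the MDNF authors' implementation: it uses a direct DFT from the standard library, removes the mean before the transform, and normalizes the entropy to the range 0 to 1. All names and test signals are hypothetical.

```python
import cmath
import math
import random


def power_spectrum(signal):
    """One-sided power spectrum via a direct DFT (O(n^2), fine for short epochs)."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # drop DC so it does not dominate
    spectrum = []
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        spectrum.append(abs(coeff) ** 2)
    return spectrum


def spectral_entropy(signal, normalize=True):
    """Shannon entropy of the normalized power spectrum."""
    psd = power_spectrum(signal)
    total = sum(psd)
    if total == 0:
        return 0.0
    probs = [p / total for p in psd if p > 0]
    entropy = -sum(p * math.log2(p) for p in probs)
    if normalize and len(psd) > 1:
        entropy /= math.log2(len(psd))
    return entropy


# Toy signals: a single-frequency oscillation versus broadband noise.
n = 64
sine = [math.sin(2 * math.pi * 4 * t / n) for t in range(n)]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(n)]
print(spectral_entropy(sine), spectral_entropy(noise))
```

A signal whose power is concentrated in one frequency bin gives an entropy near zero, while broadband activity gives a value closer to one.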

Evidence Theory for Decision Fusion

An innovative end-to-end signal fusion method combines deep learning with Dempster-Shafer Theory (DST) for motor imagery classification [17]. This approach includes:

- EEG Pathway: Spatiotemporal feature extraction using dual-scale temporal convolution and depthwise separable convolution, enhanced with hybrid attention mechanisms

- fNIRS Pathway: Spatial convolution across channels to explore regional activation differences, combined with parallel temporal convolution and gated recurrent units (GRU)

- Decision Fusion: Uncertainty quantification via Dirichlet distribution parameter estimation, followed by two-layer reasoning using DST to fuse evidence from both modalities

This methodology has demonstrated state-of-the-art performance, achieving 83.26% accuracy in MI classification tasks [17].
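The decision-fusion step can be sketched with the reduced form of Dempster's rule that is often paired with Dirichlet-based uncertainty estimates: each modality supplies non-negative evidence per class, the evidence is converted to belief masses plus one uncertainty mass, and the two opinions are combined. This is an illustrative single-layer sketch under those assumptions; it does not reproduce the two-layer reasoning or the networks of [17], and the evidence values are hypothetical.

```python
def opinion_from_evidence(evidence):
    """Map per-class evidence to (belief masses, uncertainty mass).

    Uses Dirichlet parameters alpha_k = e_k + 1 with strength S = sum(alpha).
    """
    k = len(evidence)
    strength = sum(evidence) + k
    beliefs = [e / strength for e in evidence]
    uncertainty = k / strength
    return beliefs, uncertainty


def dempster_combine(op1, op2):
    """Combine two opinions defined over the same classes."""
    b1, u1 = op1
    b2, u2 = op2
    # Conflict: mass assigned by the two sources to different classes.
    conflict = sum(b1[i] * b2[j]
                   for i in range(len(b1))
                   for j in range(len(b2)) if i != j)
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    scale = 1.0 / (1.0 - conflict)
    beliefs = [scale * (b1[k] * b2[k] + b1[k] * u2 + b2[k] * u1)
               for k in range(len(b1))]
    uncertainty = scale * u1 * u2
    return beliefs, uncertainty


# Hypothetical evidence: EEG is confident in class 0, fNIRS is undecided.
eeg_opinion = opinion_from_evidence([8.0, 2.0])
fnirs_opinion = opinion_from_evidence([1.0, 1.0])
fused_beliefs, fused_uncertainty = dempster_combine(eeg_opinion, fnirs_opinion)
print(fused_beliefs, fused_uncertainty)
```

In this example the undecided fNIRS opinion carries a large uncertainty mass, so the fused result stays close to the EEG opinion instead of being averaged toward chance.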

Implementation Considerations and Technical Challenges

Hardware Integration and Signal Quality

Successful simultaneous EEG-fNIRS recording requires addressing several technical challenges:

- Probe Placement: Strategic selection of EEG channels based on neuroanatomical locations and their associations with cognitive and motor functions is essential [18]. Similarly, fNIRS optode placement must cover regions of interest while minimizing interference with EEG electrodes.

- Cross-Modal Artifacts: Physiological artifacts (e.g., cardiac pulsation, respiration) affect both modalities differently and require specialized filtering approaches.

- Synchronization: Precise temporal alignment of EEG and fNIRS data streams is critical. Hardware synchronization provides the most accurate alignment, while software methods offer more flexibility [2].

- Comfort and Stability: Extended recordings require comfortable, stable probe placement to minimize motion artifacts. Customized helmets using 3D printing or thermoplastic materials can improve fit and stability [2].

Data Processing Pipelines

Effective data processing requires modality-specific approaches:

- EEG Processing: Filtering (0.5-50 Hz bandpass, 50/60 Hz notch), artifact removal (ocular, cardiac, motion), segmentation, and feature extraction (time-domain, frequency-domain, time-frequency representations)

- fNIRS Processing: Conversion of raw light intensity to optical density, motion artifact correction, bandpass filtering (0.01-0.5 Hz) to remove physiological noise, conversion to hemoglobin concentration changes via modified Beer-Lambert law

- Multimodal Integration: Temporal alignment, common referencing, and application of fusion algorithms at appropriate levels (data, feature, or decision fusion)
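The fNIRS conversion step in the list above amounts to solving a two-by-two linear system per channel: the optical density change at each wavelength is a weighted sum of the HbO and HbR concentration changes. The sketch below shows that inversion. The extinction coefficients and differential pathlength factor are placeholders chosen only so the example runs (they are not tabulated values), and the logarithm base must match the convention of whichever coefficient table is actually used.

```python
import math

# PLACEHOLDER extinction coefficients (eps_HbO, eps_HbR) in arbitrary units.
# They only mimic the qualitative pattern (HbR dominates near 760 nm,
# HbO dominates near 850 nm); substitute tabulated values in real use.
EXT_760 = (0.6, 1.5)
EXT_850 = (1.1, 0.8)


def optical_density_change(intensity, baseline_intensity):
    """Change in optical density relative to a baseline intensity."""
    return -math.log10(intensity / baseline_intensity)


def forward_delta_od(d_hbo, d_hbr, ext, distance_cm, dpf):
    """Modified Beer-Lambert forward model for one wavelength."""
    return (ext[0] * d_hbo + ext[1] * d_hbr) * distance_cm * dpf


def mbll(delta_od_1, delta_od_2, ext_1, ext_2, distance_cm, dpf_1, dpf_2):
    """Invert the two-wavelength system with Cramer's rule."""
    a11, a12 = ext_1[0] * distance_cm * dpf_1, ext_1[1] * distance_cm * dpf_1
    a21, a22 = ext_2[0] * distance_cm * dpf_2, ext_2[1] * distance_cm * dpf_2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("wavelengths do not separate HbO from HbR")
    d_hbo = (delta_od_1 * a22 - a12 * delta_od_2) / det
    d_hbr = (a11 * delta_od_2 - a21 * delta_od_1) / det
    return d_hbo, d_hbr


# Round trip with hypothetical concentration changes, 3 cm separation, DPF 6.
od_760 = forward_delta_od(1.0e-3, -0.3e-3, EXT_760, 3.0, 6.0)
od_850 = forward_delta_od(1.0e-3, -0.3e-3, EXT_850, 3.0, 6.0)
print(mbll(od_760, od_850, EXT_760, EXT_850, 3.0, 6.0, 6.0))
```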

[Figure: data processing workflow for simultaneous EEG-fNIRS recordings]

Historical Development and Commercial Evolution of Hybrid EEG-fNIRS Systems

The integration of electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS) represents a paradigm shift in non-invasive neuroimaging, creating a powerful hybrid modality for brain-computer interface (BCI) research. This fusion addresses fundamental limitations inherent in each standalone technique: EEG provides excellent temporal resolution but suffers from poor spatial localization, while fNIRS offers superior spatial resolution but slower temporal response [2]. The complementary nature of these modalities has catalyzed their integration, enabling unprecedented insights into brain function across diverse clinical and research applications.

The evolution of hybrid EEG-fNIRS systems has progressed from preliminary proof-of-concept studies to sophisticated, commercially viable platforms. This journey has been marked by significant interdisciplinary collaboration between engineers, neuroscientists, and clinicians. Modern systems now demonstrate robust synchronization capabilities, user-friendly interfaces, and expanding applications in both laboratory and real-world settings [25]. The commercial landscape has similarly evolved, with increasing numbers of manufacturers offering integrated solutions that cater to the growing demand for multimodal brain imaging in research and therapeutic applications.

Historical Development and Technological Evolution

Early Foundations and Conceptual Framework

The historical development of hybrid EEG-fNIRS systems emerged from the recognized need to overcome limitations of unimodal brain imaging approaches. Initial research in the early 2000s focused on establishing the technical feasibility of simultaneous acquisition, addressing fundamental challenges related to hardware integration and signal synchronization [25]. These pioneering studies demonstrated that electrophysiological (EEG) and hemodynamic (fNIRS) measurements could be successfully co-registered, providing complementary information about brain activity.

The conceptual framework for hybridization was formally articulated by Pfurtscheller et al. (2010), who established four essential criteria for genuine hybrid systems: (1) direct acquisition of brain activity, (2) utilization of multiple brain signal acquisition modalities, (3) real-time signal processing for communication between brain and computer, and (4) provision of feedback outcomes [25]. This framework distinguished true hybrid BCIs from simple multi-modal recording setups and established standards for the field's development.

Hardware Integration Milestones

The evolution of hardware integration has progressed through several distinct phases, each addressing critical technical challenges:

Initial Solutions (Pre-2010): Early systems utilized separate EEG and fNIRS equipment with post-hoc synchronization, resulting in temporal alignment limitations. Researchers often adapted existing EEG caps by manually creating openings for fNIRS optodes, leading to suboptimal contact pressure and positioning consistency [2].

Dedicated Hybrid Caps (2010-2017): The development of purpose-built integration caps represented a significant advancement. Initial commercial offerings used elastic fabrics with predefined openings for both electrode and optode placement, improving reproducibility but still facing challenges with maintaining consistent optode-scalp contact pressure across different head shapes [2].

Advanced Customization (2017-Present): Recent innovations incorporate 3D printing and thermoplastic materials to create subject-specific helmets that optimize probe placement and contact pressure. These customized solutions enhance signal quality but at increased cost and complexity [2]. Modern commercial systems now offer integrated caps with optimized layouts for specific applications, such as motor imagery or prefrontal cortex monitoring.

Synchronization Techniques Evolution

The progression of synchronization methods has been crucial for effective data fusion:

Software-based Synchronization: Early approaches used software triggers and shared clock systems between separate devices, achieving synchronization at the tens of milliseconds level, sufficient for fNIRS but suboptimal for high-temporal-resolution EEG analysis [2].

Hardware-level Integration: Advanced systems implemented unified processors that simultaneously handle EEG and fNIRS input/output, achieving microsecond-level synchronization precision. This integration enables truly simultaneous data acquisition essential for investigating neurovascular coupling dynamics [2].

Modern Commercial Systems: Contemporary commercial platforms incorporate dedicated synchronization modules with hardware triggers and shared analog-digital converters, ensuring temporal alignment sufficient for analyzing complex inter-modal relationships during cognitive tasks [26].

Table 1: Evolution of Hybrid EEG-fNIRS System Capabilities

| Time Period | Primary Integration Method | Synchronization Precision | Key Commercial Developments |

|---|---|---|---|

| 2005-2010 | Modified EEG caps with fNIRS openings | 50-100 ms (software triggers) | First research prototypes; Custom solutions |

| 2011-2017 | Dedicated hybrid caps (elastic fabric) | 10-50 ms (improved triggers) | Early commercial systems (NIRx, Artinis with EEG partners) |

| 2018-2023 | 3D-printed custom interfaces | 1-5 ms (hardware synchronization) | Integrated commercial platforms (g.tec hybrid systems) |

| 2024-Present | Subject-specific optimized layouts | <1 ms (unified processors) | Commercial systems with real-time analysis capabilities |

Technical Specifications and Performance Metrics

Comparative Modal Characteristics

The fundamental rationale for EEG-fNIRS integration stems from their complementary characteristics across multiple dimensions of measurement. EEG records electrical potentials generated by synchronized neuronal activity with millisecond temporal resolution, ideal for capturing rapid neural dynamics during cognitive tasks or in response to stimuli [2]. However, the electrical signals are attenuated and distorted by passage through cerebrospinal fluid, skull, and scalp, resulting in limited spatial resolution of approximately 2-3 cm under optimal conditions [19].

Conversely, fNIRS measures hemodynamic responses associated with neural activity through light absorption changes in oxygenated and deoxygenated hemoglobin. While slower in temporal response (typically 2-10 Hz sampling versus 256-1000 Hz for EEG), fNIRS provides superior spatial localization (5-10 mm resolution) and direct measurement of regional brain activation [26]. It is also less susceptible than EEG to movement artifacts and electromagnetic interference, which makes it suitable for more naturalistic environments [2].

The neurovascular coupling relationship—the biological link between neural activity and subsequent hemodynamic response—forms the physiological basis for correlating EEG and fNIRS signals. This relationship exhibits complex temporal dynamics that vary across brain regions and cognitive states, with hemodynamic responses typically lagging 4-8 seconds behind electrical activity [27].
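One simple way to examine this lag in recorded data is to slide an EEG-derived time course (for example, a band-power envelope resampled to the fNIRS rate) against the HbO signal and keep the shift with the highest correlation. The sketch below assumes both series share one sampling rate and equal length; the names and the synthetic signals are hypothetical, and a real hemodynamic response is smoothed as well as delayed, so the result is only a bulk lag estimate.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def estimate_lag_s(neural, hemo, fs_hz, max_lag_s):
    """Return (lag in seconds, correlation) for the best non-negative shift."""
    max_shift = int(round(max_lag_s * fs_hz))
    best_shift, best_r = 0, -2.0
    for shift in range(max_shift + 1):
        x = neural[:len(neural) - shift]  # earlier neural samples
        y = hemo[shift:]                  # later hemodynamic samples
        r = pearson(x, y)
        if r > best_r:
            best_shift, best_r = shift, r
    return best_shift / fs_hz, best_r


# Synthetic check: a Gaussian burst and a copy delayed by 6 s at 10 Hz.
fs = 10.0
n = 600
neural = [math.exp(-0.5 * ((i - 200) / 20.0) ** 2) for i in range(n)]
delay = 60
hemo = [0.0] * delay + neural[:n - delay]
lag_s, r = estimate_lag_s(neural, hemo, fs, max_lag_s=10.0)
print(lag_s, r)
```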

Table 2: Performance Comparison of Neuroimaging Modalities

| Parameter | EEG | fNIRS | Hybrid EEG-fNIRS | fMRI |

|---|---|---|---|---|

| Temporal Resolution | Millisecond level | ~2-10 Hz | Millisecond (EEG) + ~2-10 Hz (fNIRS) | 0.5-2 Hz |

| Spatial Resolution | 2-3 cm | 5-10 mm | 5-10 mm (fNIRS-guided) | 1-3 mm |

| Portability | High | High | Moderate-high | Low |

| Cost | Low-moderate | Moderate | Moderate | High |

| Artifact Resistance | Low (electrical interference) | Moderate (movement) | Moderate (complementary) | High |

| Measurement Depth | Cortical surface | Superficial cortex (2-3 cm) | Superficial cortex | Whole brain |

Commercial System Evolution

The commercial landscape for hybrid EEG-fNIRS systems has expanded significantly, with several manufacturers now offering integrated solutions:

Early Commercialization (2010-2017): Initial commercial offerings focused on compatibility between existing EEG and fNIRS systems from the same manufacturer or partners. These systems required significant technical expertise to operate and synchronize effectively [25].

Current Integrated Systems (2018-Present): Modern commercial systems feature unified hardware platforms with simplified user interfaces. Examples include g.tec's hybrid systems with integrated amplifiers and NirScan's combined acquisition units [26]. These systems typically incorporate 32-64 EEG channels alongside 16-64 fNIRS channels, with sampling rates up to 1000 Hz for EEG and 10-50 Hz for fNIRS.

Performance Metrics: Contemporary systems achieve classification accuracies of 80-95% for various BCI tasks, a 5-15% improvement over unimodal approaches [18] [7]. Standardized streaming protocols such as Lab Streaming Layer have facilitated integration of equipment from different manufacturers, increasing flexibility for researchers.

Experimental Protocols and Methodologies

Protocol 1: Motor Imagery for BCI Applications

Motor imagery (MI) protocols represent one of the most established applications for hybrid EEG-fNIRS systems, particularly in rehabilitation and assistive technology development.

Participant Preparation and Setup

Participant Selection: Recruit right-handed participants (to minimize hemispheric dominance variability) with normal or corrected-to-normal vision. For clinical studies, include both healthy controls and patient populations (e.g., intracerebral hemorrhage patients) with appropriate consent procedures [26].

Equipment Setup: Use a customized hybrid cap with 32 EEG electrodes positioned according to the international 10-20 system and 32 fNIRS sources with 30 detectors creating 90 measurement channels through source-detector pairing at 3 cm separation distances [26]. Ensure proper scalp coupling through hair parting and application of appropriate conductive (EEG) and optical (fNIRS) gels.

Signal Quality Verification: Check EEG impedance levels (<10 kΩ) and fNIRS scalp coupling index (SCI > 0.7) before beginning experimental tasks. Reject channels failing quality thresholds from subsequent analysis [26].
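The quality thresholds above can be applied programmatically. The scalp coupling index is commonly computed as the correlation between the two wavelength signals of one channel after band-pass filtering to the cardiac band (roughly 0.5-2.5 Hz is a common choice). The sketch below assumes that filtering has already been done and only shows the correlation and thresholding; the channel names, helper names, and synthetic signals are hypothetical.

```python
import math
import random


def scalp_coupling_index(wl1, wl2):
    """Pearson correlation between two cardiac-band-filtered wavelength signals."""
    n = len(wl1)
    m1, m2 = sum(wl1) / n, sum(wl2) / n
    cov = sum((a - m1) * (b - m2) for a, b in zip(wl1, wl2))
    v1 = sum((a - m1) ** 2 for a in wl1)
    v2 = sum((b - m2) ** 2 for b in wl2)
    if v1 == 0 or v2 == 0:
        return 0.0
    return cov / math.sqrt(v1 * v2)


def keep_fnirs_channels(sci_by_channel, threshold=0.7):
    """Names of channels whose SCI exceeds the threshold."""
    return sorted(name for name, sci in sci_by_channel.items() if sci > threshold)


def eeg_impedance_ok(impedance_kohm, limit_kohm=10.0):
    """True when the electrode impedance is below the acceptance limit."""
    return impedance_kohm < limit_kohm


# Synthetic 10 s epoch at 10 Hz with a 1.2 Hz cardiac oscillation.
rng = random.Random(1)
t = [i / 10.0 for i in range(100)]
cardiac = [math.sin(2 * math.pi * 1.2 * x) for x in t]
coupled = [0.8 * c + rng.gauss(0.0, 0.05) for c in cardiac]  # good contact
uncoupled = [rng.gauss(0.0, 1.0) for _ in t]                 # poor contact

sci = {
    "S1-D1": scalp_coupling_index(cardiac, coupled),
    "S1-D2": scalp_coupling_index(cardiac, uncoupled),
}
print(keep_fnirs_channels(sci))
```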

Experimental Paradigm

Baseline Recording: Acquire 1-minute eyes-closed followed by 1-minute eyes-open baseline measurements, demarcated by auditory cues (200 ms beep) [26].

Task Structure: Implement a trial-based design with the following sequence:

- Visual Cue (2 s): Display a directional arrow (left/right) indicating the required motor imagery.

- Execution Phase (10 s): Present a central fixation cross following an auditory cue (200 ms beep). Participants perform kinesthetic motor imagery of grasping with the indicated hand at approximately 1 imagined grasp per second.

- Inter-trial Rest (15 s): Blank screen for rest and return to baseline [26].

Session Structure: Conduct multiple sessions with at least 30 trials each (15 left-hand and 15 right-hand MI trials), with intersession breaks adjusted based on participant fatigue.
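The paradigm above can be turned into an explicit event schedule before it is programmed into the stimulus software. The sketch below assumes a balanced split between left-hand and right-hand trials and a fixed 2 s cue, 10 s imagery, and 15 s rest per trial; the function and field names are hypothetical.

```python
import random

CUE_S, IMAGERY_S, REST_S = 2.0, 10.0, 15.0


def build_mi_schedule(n_trials=30, seed=0):
    """Randomized, class-balanced trial list with onset times in seconds."""
    if n_trials % 2:
        raise ValueError("n_trials must be even for a balanced design")
    labels = ["left"] * (n_trials // 2) + ["right"] * (n_trials // 2)
    random.Random(seed).shuffle(labels)  # fixed seed keeps the order reproducible
    schedule, t = [], 0.0
    for label in labels:
        schedule.append({
            "label": label,
            "cue_onset_s": t,                           # arrow appears
            "imagery_onset_s": t + CUE_S,               # beep, fixation cross
            "rest_onset_s": t + CUE_S + IMAGERY_S,      # blank screen
        })
        t += CUE_S + IMAGERY_S + REST_S
    return schedule


schedule = build_mi_schedule()
session_s = schedule[-1]["rest_onset_s"] + REST_S
print(len(schedule), session_s)
```

With these durations a 30-trial session lasts 810 s, or 13.5 minutes, excluding the baseline recordings.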

Data Acquisition Parameters

- EEG Settings: Sampling rate 256-1000 Hz, bandpass filter 0.5-45 Hz, recording reference at Cz or average reference.

- fNIRS Settings: Sampling rate 10-12.5 Hz, wavelengths 760 nm and 850 nm to measure oxygenated (HbO) and deoxygenated hemoglobin (HbR) concentration changes.

- Synchronization: Use event markers from stimulus presentation software (e.g., E-Prime) transmitted simultaneously to both recording systems [26].
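When the same event markers are recorded by both systems, their timestamps can be used to map one device clock onto the other, correcting both a constant offset and slow drift. The following sketch fits a straight line by least squares; it is an illustrative approach with hypothetical names and values, not a description of any vendor's synchronization module.

```python
def fit_clock_map(t_src, t_dst):
    """Least-squares fit of t_dst = slope * t_src + offset from shared markers."""
    n = len(t_src)
    if n < 2 or n != len(t_dst):
        raise ValueError("need at least two paired marker timestamps")
    mx, my = sum(t_src) / n, sum(t_dst) / n
    sxx = sum((x - mx) ** 2 for x in t_src)
    if sxx == 0:
        raise ValueError("marker timestamps must not all be identical")
    slope = sum((x - mx) * (y - my) for x, y in zip(t_src, t_dst)) / sxx
    offset = my - slope * mx
    return slope, offset


def to_dst_time(t, slope, offset):
    """Convert a source-clock timestamp to the destination clock."""
    return slope * t + offset


# Hypothetical markers: the fNIRS clock starts 2.5 s later and runs 0.01% fast.
eeg_marker_s = [0.0, 27.0, 54.0, 81.0]
fnirs_marker_s = [1.0001 * t + 2.5 for t in eeg_marker_s]
slope, offset = fit_clock_map(eeg_marker_s, fnirs_marker_s)
print(slope, offset, to_dst_time(40.0, slope, offset))
```

A slope that differs noticeably from 1 indicates clock drift, which matters most in long sessions where a fixed offset correction would gradually lose accuracy.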

Protocol 2: Semantic Neural Decoding

Semantic decoding protocols investigate the neural representation of conceptual knowledge, with applications to advanced communication BCIs.

Stimuli and Task Design

Stimulus Selection: Utilize images representing distinct semantic categories (e.g., 18 animals and 18 tools) selected for suitability across multiple mental tasks. Convert images to grayscale, crop to 400×400 pixels, and present against white background [19].

Mental Tasks: Implement four distinct mental tasks in randomized order across blocks:

- Silent Naming: Participants silently name the displayed object in their native language.

- Visual Imagery: Participants visualize the object in their minds.

- Auditory Imagery: Participants imagine sounds associated with the object.

- Tactile Imagery: Participants imagine the feeling of touching the object [19].

Trial Structure: Each trial consists of stimulus presentation (3-5 s) followed by the mental task period (3-5 s), with inter-trial intervals of 10-15 s.

Data Collection Parameters

- EEG Configuration: 30 electrodes positioned according to the international 10-5 system, sampling rate 1000 Hz (downsampled to 200 Hz) [19].

- fNIRS Configuration: 36 channels (14 sources, 16 detectors) with 30 mm inter-optode distance, following the 10-20 system, sampling rate 12.5 Hz (downsampled to 10 Hz), wavelengths 760 nm and 850 nm [19].

- Participant Instructions: Emphasize minimizing physical movements (eye movements, facial expressions, head/body motions) during mental tasks to reduce artifacts.

Data Processing and Analysis Framework

A standardized processing pipeline ensures reproducible analysis of hybrid EEG-fNIRS data:

Preprocessing Steps

EEG Preprocessing: Apply bandpass filtering (0.5-45 Hz), remove line noise, re-reference to average reference, detect and reject artifacts using independent component analysis (ICA), particularly for ocular and muscle artifacts.

fNIRS Preprocessing: Convert raw intensity to optical density, apply bandpass filtering (0.01-0.2 Hz for task-related signals), detect motion artifacts using moving standard deviation or peak-to-peak amplitude thresholds, correct using wavelet-based or PCA-based methods [28]. For systemic physiological noise removal, employ short-separation regression (if available) or principal component filtering [28].
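The moving-standard-deviation criterion mentioned above can be sketched in a few lines. The version below flags samples whose local standard deviation exceeds a multiple of the median local standard deviation; the 1 s window and the factor of 5 are hypothetical settings that would need tuning for a given dataset, and the correction step (wavelet or PCA based) is not shown.

```python
import math
from statistics import median, pstdev


def moving_std(signal, window):
    """Centered moving standard deviation (shorter windows at the edges)."""
    if window < 1:
        raise ValueError("window must be at least one sample")
    half = window // 2
    return [pstdev(signal[max(0, i - half): i + half + 1])
            for i in range(len(signal))]


def flag_motion(signal, fs_hz, window_s=1.0, k=5.0):
    """Indices where the moving std exceeds k times its median."""
    window = max(1, int(round(window_s * fs_hz)))
    stds = moving_std(signal, window)
    threshold = k * median(stds)
    return [i for i, s in enumerate(stds) if s > threshold]


# Synthetic optical density trace at 10 Hz: a slow oscillation
# with an abrupt half-second jump standing in for a motion artifact.
fs = 10.0
trace = [0.01 * math.sin(2 * math.pi * 0.1 * i / fs) for i in range(300)]
for i in range(150, 155):
    trace[i] += 1.0
flagged = flag_motion(trace, fs)
print(flagged[0], flagged[-1])
```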

Temporal Alignment: Precisely align EEG and fNIRS data using synchronization triggers, accounting for inherent hemodynamic delay (typically 4-8 seconds) in fNIRS responses relative to EEG.

Feature Extraction and Fusion

EEG Features: Extract time-frequency features using wavelet transform or short-time Fourier transform, particularly in motor imagery paradigms focusing on sensorimotor rhythms (8-30 Hz). For cognitive tasks, extract event-related potentials (ERPs) or power in specific frequency bands.

fNIRS Features: Calculate oxygenated (HbO) and deoxygenated (HbR) hemoglobin concentration changes using modified Beer-Lambert law. Extract features including mean, peak, slope, and area under the curve during task periods.

Feature Fusion: Implement either early fusion (concatenating features before classification) or late fusion (combining classifier decisions) approaches. For motor imagery, combining EEG band power with fNIRS HbO concentrations typically yields optimal results [18].
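As a minimal sketch of the fNIRS feature set and early fusion described above, the functions below compute the mean, peak, least-squares slope, and trapezoidal area under the curve of one HbO epoch and concatenate them with EEG features. The names and numbers are hypothetical, and in practice each feature would usually be standardized across trials before concatenation so that neither modality dominates by scale.

```python
def fnirs_features(hbo, fs_hz):
    """Return [mean, peak, slope, area under curve] for one HbO epoch."""
    n = len(hbo)
    if n < 2:
        raise ValueError("epoch needs at least two samples")
    dt = 1.0 / fs_hz
    times = [i * dt for i in range(n)]
    mean = sum(hbo) / n
    peak = max(hbo)
    t_mean = sum(times) / n
    # Least-squares slope of concentration change against time.
    slope = (sum((t - t_mean) * (y - mean) for t, y in zip(times, hbo))
             / sum((t - t_mean) ** 2 for t in times))
    # Trapezoidal integration over the epoch.
    auc = sum((hbo[i] + hbo[i + 1]) * 0.5 * dt for i in range(n - 1))
    return [mean, peak, slope, auc]


def early_fusion(eeg_features, fnirs_feature_list):
    """Concatenate per-trial feature vectors from both modalities."""
    return list(eeg_features) + list(fnirs_feature_list)


# Toy epoch: HbO rising linearly by 0.5 units over 1 s, sampled at 10 Hz.
hbo_epoch = [0.5 * i / 10 for i in range(11)]
features = fnirs_features(hbo_epoch, fs_hz=10.0)
fused_vector = early_fusion([0.8, 0.3], features)  # hypothetical band powers
print(fused_vector)
```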

Classification Approaches

Traditional Machine Learning: Utilize support vector machines (SVM), linear discriminant analysis (LDA), or random forests with carefully selected feature combinations.

Deep Learning Architectures: Implement Multimodal DenseNet Fusion (MDNF) models that transform EEG data into 2D time-frequency representations using the short-time Fourier transform and combine them with fNIRS spectral entropy features [18].

Ensemble Methods: Apply stacking ensemble learning combining multiple classifiers (Naïve Bayes, SVM, Random Forest, k-NN) with genetic algorithm-based feature selection [7].

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Essential Components for Hybrid EEG-fNIRS Research

| Component Category | Specific Items | Function/Purpose | Technical Specifications |

|---|---|---|---|

| Acquisition Hardware | Hybrid EEG-fNIRS cap | Provides structural foundation for electrode/optode placement | 32-64 EEG channels, 16-64 fNIRS channels, international 10-20 system compliance |

| | EEG amplifier | Records electrical brain activity | 24-64 channels, sampling rate ≥256 Hz, input impedance >100 MΩ |

| | fNIRS system | Measures hemodynamic responses | 2+ wavelengths (760, 850 nm), sampling rate ≥10 Hz, source-detector separation 30 mm |

| | Synchronization module | Ensures temporal alignment of multimodal data | Hardware triggers, shared clock, <1 ms precision |

| Disposable Supplies | EEG electrolyte gel | Ensures electrical conductivity between scalp and electrodes | Chloride-based, low impedance, non-irritating |

| | fNIRS optical gels | Improves light coupling between optodes and scalp | High refractive index matching, non-toxic |

| | Abrasive prep gel | Gentle scalp exfoliation to reduce impedance | Mild abrasive particles in electrolyte solution |

| | Disposable electrode disks | Single-use EEG electrodes | Ag/AgCl composition, adhesive backing |

| Stimulus Presentation | Presentation software | Controls experimental paradigm timing | Precision timing, trigger output, E-Prime, PsychoPy, or Presentation |

| | Display monitor | Visual stimulus presentation | High refresh rate (≥120 Hz), minimal latency |

| | Response collection device | Records participant responses | Button boxes, keyboards, or specialized input devices |

| Data Analysis Tools | Signal processing software | Preprocessing and analysis of multimodal data | MATLAB with EEGLAB, NIRS-KIT, Homer2, MNE-Python |

| | Classification libraries | Machine learning implementation | scikit-learn, TensorFlow, PyTorch with custom hybrid BCI extensions |

| Calibration & Quality Assurance | Impedance checker | Verifies EEG electrode-scalp contact | <10 kΩ threshold for acceptable connections |

| | Optical power meter | Validates fNIRS source output | Measures intensity at optode tips |

| | Phantom test objects | System validation and calibration | Tissue-simulating materials with known optical properties |

Commercial Applications and Future Directions

The commercial evolution of hybrid EEG-fNIRS systems has expanded beyond research laboratories into various practical applications. Current commercial systems demonstrate robust performance in clinical neurodiagnostics, neurorehabilitation, and consumer neuroscience applications.

Emerging Commercial Applications

Clinical Rehabilitation: Hybrid systems show particular promise in stroke rehabilitation, with systems specifically validated for intracerebral hemorrhage patients demonstrating the ability to track motor recovery through combined electrophysiological and hemodynamic monitoring [26]. Commercial systems are increasingly incorporating patient-specific adaptation algorithms to accommodate pathological neurovascular coupling.

Assistive Communication: The development of semantic decoding BCIs using hybrid systems offers potential for more natural communication interfaces, moving beyond character-by-character spelling to direct concept communication [19]. Commercial entities are exploring these approaches for locked-in syndrome and other severe communication impairments.

Neuromarketing and Consumer Research: The commercial sector has adopted hybrid systems for evaluating consumer responses to products and advertisements, leveraging the comprehensive brain activity assessment provided by combined electrical and hemodynamic monitoring.

Future Commercial Directions

The future commercial evolution of hybrid EEG-fNIRS systems will likely focus on several key areas:

Miniaturization and Wearability: Next-generation systems are transitioning toward more compact, wearable designs suitable for real-world monitoring outside laboratory environments. This includes developments in wireless technology, battery life optimization, and ergonomic design.

Real-Time Processing Integration: Commercial systems are increasingly incorporating real-time analysis capabilities, enabling immediate feedback for neurorehabilitation and BCI applications. Advanced processors and optimized algorithms allow complex multimodal fusion with minimal latency.

Standardization and Interoperability: Industry-wide standards for data formats, synchronization protocols, and interface specifications are emerging to facilitate multi-site studies and technology transfer between research and clinical applications.

AI-Enhanced Analytics: Commercial systems are beginning to integrate artificial intelligence and machine learning directly into acquisition platforms, providing automated interpretation and reducing the expertise required for system operation and data analysis.

The continued commercial evolution of hybrid EEG-fNIRS systems promises to further bridge the gap between laboratory research and practical applications, ultimately expanding access to sophisticated brain monitoring technologies across diverse fields including clinical medicine, neuroscience research, and human-computer interaction.

Building and Implementing a Hybrid EEG-fNIRS BCI: From Hardware Setup to Data Fusion

This document provides detailed application notes and protocols for the design and implementation of a unified helmet-based acquisition system for simultaneous electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS). The integration of these two non-invasive neuroimaging modalities leverages their complementary strengths to advance brain-computer interface (BCI) research. EEG provides millisecond-level temporal resolution of electrical brain activity, while fNIRS offers superior spatial localization for hemodynamic responses, enabling the development of more robust and accurate hybrid BCI systems [29] [30]. The helmet form factor is critical for ensuring consistent sensor placement, user comfort, and mobility, which are essential for conducting valid and reproducible experiments in both clinical and laboratory settings [26].

System Architecture and Integration Principles

The core of a unified EEG-fNIRS system is a co-located, modular sensor platform integrated into a single helmet. The design must facilitate simultaneous data acquisition from both modalities with precise temporal synchronization.

Key Integration Principles:

- Co-location of Sensors: EEG electrodes and fNIRS optodes must be positioned on the scalp to cover the same cortical regions of interest, particularly the motor cortex for motor imagery (MI) paradigms [26] [30]. This allows for the direct correlation of electrical and hemodynamic activity from the same brain area.

- Temporal Synchronization: A common hardware trigger must initialize both the EEG amplifier and the fNIRS system to ensure data streams are aligned with millisecond precision. This is often achieved via a synchronization pulse from the stimulus presentation software (e.g., E-Prime) sent to both acquisition devices [26].

- Form Factor and Ergonomics: The helmet should be designed for a secure and comfortable fit to minimize motion artifacts. Considerations include total weight, center of gravity, and the use of customizable, padded liners to accommodate different head sizes and shapes [31].
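The temporal synchronization principle can be illustrated with a minimal sketch. It assumes both devices start recording on the same hardware trigger (so time zero is shared) and uses the nominal rates from Table 1 (256 Hz for EEG, about 11 Hz for fNIRS). The function name `marker_to_sample` and the event times are hypothetical and chosen only for illustration.

```python
EEG_FS = 256.0    # EEG sampling rate in Hz (nominal, from Table 1)
FNIRS_FS = 11.0   # fNIRS sampling rate in Hz (nominal, from Table 1)


def marker_to_sample(event_time_s, sampling_rate_hz):
    """Return the index of the sample nearest to an event time.

    event_time_s is measured in seconds from the shared start trigger.
    """
    return int(round(event_time_s * sampling_rate_hz))


# Hypothetical event times (seconds after the shared start trigger).
event_times_s = [5.0, 32.0, 59.0]

eeg_idx = [marker_to_sample(t, EEG_FS) for t in event_times_s]
fnirs_idx = [marker_to_sample(t, FNIRS_FS) for t in event_times_s]

# Worst-case rounding error is half a sample period in each stream.
eeg_max_error_ms = 1000.0 / (2.0 * EEG_FS)
fnirs_max_error_ms = 1000.0 / (2.0 * FNIRS_FS)
```

Under these assumptions, the marker position in the EEG stream is accurate to about 2 ms, while the position in the fNIRS stream is limited by its much longer sample period (roughly 45 ms at 11 Hz). This is usually acceptable because the hemodynamic response evolves over seconds.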

Table 1: Core System Components and Specifications

| Component | Key Specifications | Integration Role |
|---|---|---|
| EEG Amplifier [26] | ≥ 32 channels; Sampling Rate: ≥ 256 Hz; Input Referenced | Captures electrical potentials from the scalp with high temporal resolution. |
| fNIRS System [26] | Continuous-wave; Sampling Rate: ~11 Hz; Lasers & Photodetectors | Measures hemodynamic changes (HbO/HbR) via near-infrared light. |
| Hybrid EEG-fNIRS Cap [26] | Integrated 32-electrode & 62-optode (32 sources, 30 detectors) layout. | Provides fixed, co-located geometry for sensors; ensures coverage of target cortices. |
| Stimulus Presentation Software [26] | e.g., E-Prime; Capable of sending event markers. | Presents experimental paradigm and outputs synchronization pulses for data alignment. |

The logical data acquisition and synchronization workflow is outlined below.

The Researcher's Toolkit: Essential Materials and Reagents

A successful experimental setup requires specific materials for sensor integration, signal quality assurance, and participant safety.

Table 2: Essential Research Reagents and Materials

| Item | Function / Purpose | Application Notes |
|---|---|---|
| Conductive EEG Gel (e.g., NeuroPrep Gel) [30] | Reduces impedance between the scalp and EEG electrodes by filling irregularities. | Applied via blunt-tipped syringe. Essential for high-fidelity signal acquisition but requires post-session cleaning. |
| Ten20 Paste [30] | An alternative conductive medium for securing EEG electrodes and maintaining low impedance. | Offers stable connectivity for longer recording sessions. |
| Abrasive Skin Prep Gel | Gently exfoliates the scalp skin at electrode sites to remove dead skin cells and oils. | Significantly lowers initial skin impedance, improving signal quality. |
| Isopropyl Alcohol (70%) | Cleanses the scalp and hair before EEG setup and removes conductive gel post-session. | Ensures a clean interface for sensors and maintains hygiene. |
| Blunt-Tipped Syringes | Precise application of conductive gel onto individual EEG electrodes within the cap. | Prevents gel bridging between adjacent electrodes, which can cause signal shorts. |
| fNIRS Optode Holders | Securely positions optical sources and detectors on the scalp at a fixed distance (typically 3 cm). | Integrated into the hybrid cap design to maintain optimal source-detector separation for penetration depth. |
| Disposable ECG Electrodes | Can be used as ground or reference electrodes for the EEG system. | Placed on bony landmarks (e.g., mastoid). |

Experimental Protocol: A Standard Motor Imagery Paradigm

The following protocol details a classic left-hand/right-hand motor imagery task, a common paradigm in BCI research for developing motor restoration applications [26] [30].

4.1. Participant Preparation and Setup

- Informed Consent: Obtain written informed consent under a protocol approved by an institutional ethics committee (e.g., TJ-IRB202412123 [26]).

- Helmet Fitting: Measure the participant's head circumference and select the appropriate hybrid cap size (e.g., Model M for 54-58 cm [26]). Securely fit the cap, ensuring the Cz electrode is aligned with the vertex of the head.

- EEG Preparation: For each electrode, part the hair and abrade the skin with preparatory gel. Fill the electrodes with conductive gel using a blunt-tipped syringe until impedances are stabilized below 10 kΩ.

- fNIRS Setup: Verify that all fNIRS optodes are in firm contact with the scalp. Check signal quality on the fNIRS acquisition software.

- Synchronization Check: Initiate a test recording to verify that event markers from the stimulus software are correctly recorded in both the EEG and fNIRS data streams.

4.2. Experimental Procedure

The participant is seated comfortably in a chair approximately 1 meter from the monitor. The session structure is as follows:

A single trial within the block follows a structured timeline:

- Visual Cue (2 seconds): A directional arrow (pointing left or right) is displayed on the screen, instructing the participant which hand to imagine moving.

- Execution/MI Task (10 seconds): The screen displays a fixation cross. Following an auditory cue (a 200 ms beep), the participant performs kinesthetic motor imagery. They should imagine grasping with the cued hand at a rate of one grasp per second, without executing any physical movement [26].

- Inter-Trial Interval (15 seconds): A blank screen is shown, allowing the participant to rest and neural signals to return to baseline.
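The trial timeline above can be turned into a list of event-marker times. The sketch below is a minimal illustration: the phase durations come from the protocol (2 s cue, 10 s task, 15 s rest), while the function name `build_schedule`, the marker labels, and the number of trials are hypothetical, since the number of trials per block is not specified here.

```python
CUE_S = 2.0    # visual cue duration (seconds)
TASK_S = 10.0  # motor imagery task duration (seconds)
REST_S = 15.0  # inter-trial interval (seconds)


def build_schedule(n_trials, start_s=0.0):
    """Return a list of (time_s, label, trial_index) event markers.

    Each trial contributes three markers: cue onset, task onset,
    and rest onset (the start of the inter-trial interval).
    """
    events = []
    t = start_s
    for trial in range(n_trials):
        events.append((t, "cue_onset", trial))
        events.append((t + CUE_S, "task_onset", trial))
        events.append((t + CUE_S + TASK_S, "rest_onset", trial))
        t += CUE_S + TASK_S + REST_S  # one full trial lasts 27 s
    return events


# Example: marker times for a hypothetical block of three trials.
schedule = build_schedule(3)
```

With these durations each trial occupies 27 seconds, so a block of N trials lasts 27 × N seconds. This helps when estimating session length and participant fatigue.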

4.3. Data Acquisition Parameters

Adhere to the following settings for consistent data quality:

- EEG Sampling Rate: 256 Hz or higher [26] [30].

- fNIRS Sampling Rate: ~11 Hz [26].

- fNIRS Wavelengths: Typically two wavelengths (e.g., 730 nm and 850 nm) to calculate concentration changes in oxygenated (HbO) and deoxygenated hemoglobin (HbR).

- Event Markers: Record the precise onset of the visual cue, execution phase, and end of trial in both data streams.

Data Processing and Analysis Workflow

Post-experiment, the synchronized data undergoes a multi-stage processing pipeline to extract meaningful features for BCI classification.

5.1. Pre-processing Steps

Table 3: Data Pre-processing Protocols

| Modality | Processing Step | Protocol Details & Parameters |
|---|---|---|
| EEG | Bandpass Filtering | Apply a 0.5-40 Hz filter to isolate relevant neural oscillations (e.g., Mu/Beta rhythms). |
| EEG | Artifact Removal | Use algorithms like Independent Component Analysis (ICA) to identify and remove components associated with eye blinks, eye movements, and muscle activity. |
| EEG | Epoching | Segment data into trials time-locked to the onset of the motor imagery cue (e.g., -2 to 15 seconds). |
| fNIRS | Optical Density Conversion | Convert raw light intensity signals to optical density. |
| fNIRS | Hemodynamic Response Calculation | Use the Modified Beer-Lambert Law to convert optical densities into concentration changes of HbO and HbR. |
| fNIRS | Bandpass Filtering | Apply a 0.01-0.2 Hz filter to remove physiological noise (e.g., cardiac cycle ~1 Hz, respiration ~0.3 Hz). |
| fNIRS | Epoching | Segment HbO/HbR data into trials aligned with the MI cue. |
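The two fNIRS conversion steps in Table 3 can be sketched with NumPy as follows. This is a simplified illustration, not a validated pipeline. The extinction coefficients in `EXT` are illustrative placeholders for 730 nm and 850 nm and should be replaced with published tabulated values. The 3 cm source-detector separation matches the optode holder spacing listed earlier, while the single differential pathlength factor (`dpf=6.0`) is an assumption; in practice the factor depends on wavelength and participant age.

```python
import numpy as np

# Illustrative molar extinction coefficients in 1/(M*cm), base-10 convention.
# Rows: wavelengths (730 nm, 850 nm); columns: (HbO, HbR).
# Placeholder values only; substitute published tabulated coefficients.
EXT = np.array([[390.0, 1102.0],
                [1058.0, 691.0]])


def intensity_to_delta_od(intensity, baseline_samples):
    """Convert raw intensity to change in optical density.

    intensity: array of shape (n_samples, 2), one column per wavelength.
    The mean of the first `baseline_samples` rows serves as the reference.
    """
    i0 = intensity[:baseline_samples].mean(axis=0)
    return -np.log10(intensity / i0)


def modified_beer_lambert(delta_od, separation_cm=3.0, dpf=6.0):
    """Solve the Modified Beer-Lambert Law for HbO and HbR changes.

    delta_od: array of shape (n_samples, 2).
    Returns an array of shape (n_samples, 2) with columns (HbO, HbR) in mol/L.
    """
    path_cm = separation_cm * dpf  # effective optical path length
    conc = np.linalg.solve(EXT * path_cm, delta_od.T)
    return conc.T
```

A typical call would be `modified_beer_lambert(intensity_to_delta_od(raw, baseline_samples=110))`, where `raw` is a hypothetical two-wavelength recording for one channel and 110 samples correspond to about 10 seconds at 11 Hz.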

5.2. Feature Extraction and Fusion

- EEG Features: Calculate the power spectral density in specific frequency bands (e.g., Mu 8-12 Hz, Beta 13-30 Hz) over the sensorimotor cortex during the task execution period [30].

- fNIRS Features: Extract the mean, slope, or peak value of the HbO signal during the task period, as HbO typically shows a more pronounced response during cortical activation [30].

- Data Fusion: Concatenate the selected EEG and fNIRS features into a single, high-dimensional feature vector for each trial. This hybrid feature set leverages the temporal precision of EEG and the spatial specificity of fNIRS, which has been shown to enhance classification accuracy by 5-10% compared to unimodal systems [26] [30].
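The feature extraction and concatenation steps above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the method used in the cited studies: band power is estimated with a plain periodogram, the fNIRS features are the mean and linear slope of HbO, and all function names are hypothetical.

```python
import numpy as np


def band_power(x, fs, f_lo, f_hi):
    """Mean periodogram power of a 1-D signal within [f_lo, f_hi] Hz."""
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * x.size)
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    return psd[mask].mean()


def eeg_features(epoch, fs):
    """Mu (8-12 Hz) and beta (13-30 Hz) power for each EEG channel.

    epoch: array of shape (n_channels, n_samples).
    """
    feats = []
    for ch in epoch:
        feats.append(band_power(ch, fs, 8.0, 12.0))
        feats.append(band_power(ch, fs, 13.0, 30.0))
    return np.array(feats)


def fnirs_features(hbo_epoch, fs):
    """Mean and linear slope of HbO for each fNIRS channel.

    hbo_epoch: array of shape (n_channels, n_samples).
    """
    t = np.arange(hbo_epoch.shape[1]) / fs
    feats = []
    for ch in hbo_epoch:
        slope = np.polyfit(t, ch, 1)[0]  # first coefficient is the slope
        feats.extend([ch.mean(), slope])
    return np.array(feats)


def fuse_features(eeg_epoch, eeg_fs, hbo_epoch, fnirs_fs):
    """Concatenate EEG and fNIRS features into one vector per trial."""
    return np.concatenate([eeg_features(eeg_epoch, eeg_fs),
                           fnirs_features(hbo_epoch, fnirs_fs)])
```

Because EEG power and hemoglobin concentration changes differ by many orders of magnitude, the fused vectors would normally be standardized per feature across the training trials before being passed to a classifier.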

Step-by-Step Guide to Simultaneous Data Acquisition and Signal Pre-processing

Simultaneous Electroencephalography (EEG) and functional Near-Infrared Spectroscopy (fNIRS) offer a powerful, multimodal approach for non-invasive brain imaging. By combining EEG's millisecond-level temporal resolution with fNIRS's superior spatial localization and hemodynamic monitoring, researchers can obtain a comprehensive view of brain activity [19] [30] [32]. This integrated methodology is particularly valuable for developing robust Brain-Computer Interfaces (BCIs), as it helps overcome the limitations inherent in using either modality alone, such as EEG's susceptibility to electrical noise and motion artifacts, and fNIRS's inherent physiological delay [30]. This protocol provides a detailed guide for the simultaneous acquisition and pre-processing of EEG and fNIRS data, framed within BCI research applications.

Hardware Setup and Data Acquisition

Equipment Configuration

The initial phase involves the physical integration of EEG and fNIRS systems. Careful configuration is essential to minimize interference and ensure temporal synchronization.

- Synchronization: A hardware trigger signal must be established between the EEG amplifier and the fNIRS system. This trigger, typically sent at the onset of each experimental trial or block, is crucial for aligning the two data streams during subsequent analysis. The fNIRS system's computer can often generate this signal via a parallel port or a dedicated digital I/O card.

- Electrode and Optode Placement: Position EEG electrodes and fNIRS optodes on the scalp according to the international 10-10 or 10-20 systems. Use custom caps that integrate holders for both to ensure stable positioning and minimize cross-talk. Prioritize the brain regions of interest for the specific BCI paradigm (e.g., motor cortex for motor imagery, prefrontal cortex for cognitive tasks). The distance between fNIRS source and detector optodes should generally be 3-4 cm to achieve a cortical penetration depth of approximately 2 cm [19] [30].

- Impedance and Signal Quality Check: For EEG, ensure that electrode-scalp impedances are reduced to below 10 kΩ, often requiring skin preparation and the application of conductive gel or paste [30]. For fNIRS, verify that optode-scalp coupling is sufficient by checking signal intensity levels and rejecting channels with poor light transmission.
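The quality checks described above can be expressed as two simple screening functions. The sketch below is illustrative only. The 10 kΩ limit comes from the text, whereas the intensity bounds passed to `flag_poor_optical_channels` are hypothetical and device specific, so the appropriate values must be taken from the fNIRS manufacturer's guidance. The channel names and readings are made up for the example.

```python
IMPEDANCE_LIMIT_KOHM = 10.0  # EEG impedances should stay below this value


def flag_high_impedance(impedances_kohm):
    """Return the names of electrodes whose impedance is not below the limit."""
    return sorted(name for name, z in impedances_kohm.items()
                  if z >= IMPEDANCE_LIMIT_KOHM)


def flag_poor_optical_channels(mean_intensity, low, high):
    """Return fNIRS channels whose mean intensity is outside [low, high].

    Values below `low` suggest poor light transmission (weak coupling);
    values above `high` suggest detector saturation.
    """
    return sorted(name for name, v in mean_intensity.items()
                  if v < low or v > high)


# Hypothetical readings, for illustration only.
bad_electrodes = flag_high_impedance({"C3": 4.2, "C4": 12.5, "Cz": 9.9})
bad_channels = flag_poor_optical_channels(
    {"S1-D1": 0.80, "S1-D2": 0.02, "S2-D1": 4.90}, low=0.05, high=4.5)
```

Electrodes returned by the first function would be re-prepared before recording starts, and channels returned by the second would be re-seated or excluded from analysis.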

Experimental Protocol for Data Acquisition

A standardized experimental protocol is critical for collecting high-quality, reproducible data. The following workflow outlines a typical session for a semantic decoding or mental imagery BCI paradigm, based on established research [19].

Table 1: Key Research Reagent Solutions and Materials

| Item | Function & Specification |
|---|---|
| EEG Amplifier System | Records electrical brain activity from the scalp. Requires high sampling rate (≥500 Hz) and synchronization input. |
| fNIRS System | Measures hemodynamic responses (HbO/HbR) using near-infrared light. Must support external triggering. |
| Integrated EEG/fNIRS Cap | Head cap with pre-configured layouts holding both EEG electrodes and fNIRS optodes for co-localized measurement. |
| EEG Electrodes & Gel | Ag/AgCl electrodes with conductive gel or paste are used to maintain signal fidelity and reduce impedance. |
| fNIRS Optodes | Sources emit NIR light; detectors measure light intensity after tissue penetration. |
| Stimulus Presentation Software | Software (e.g., PsychoPy, E-Prime) to present cues and send synchronization triggers. |

Signal Pre-processing Workflow

Raw, simultaneously acquired data requires modality-specific pre-processing to remove artifacts and noise before integrated analysis.

EEG Signal Pre-processing

EEG signals are weak and susceptible to various artifacts. The goal is to isolate neural activity from noise.

Core Steps:

- Downsampling: Reduce the sampling rate to decrease data volume and computational load while retaining sufficient information for analysis [32].

- Filtering: Apply a band-pass filter (e.g., 0.5-45 Hz) to remove slow drifts and high-frequency noise, and a notch filter at 50 or 60 Hz to suppress line noise [32].

- Bad Channel Removal: Identify and interpolate channels with consistently poor signal quality.

- Artifact Removal: Use advanced algorithms like Independent Component Analysis (ICA) to separate and remove artifacts stemming from eye blinks, eye movements, and muscle activity [32].

- Epoching: Segment the continuous data into trials time-locked to the event triggers (e.g., from -1 s pre-stimulus to +5 s post-stimulus).

- Baseline Correction: Remove the mean signal from the pre-stimulus period from each epoch.
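The last two steps, epoching and baseline correction, can be sketched with NumPy as shown below. This is a minimal illustration that assumes the continuous data have already been filtered and cleaned, and that event positions are given as sample indices. The function name `epoch_and_baseline` is hypothetical, and the default window (-1 s to +5 s) follows the example in the list above.

```python
import numpy as np


def epoch_and_baseline(data, fs, event_samples, tmin=-1.0, tmax=5.0):
    """Cut epochs around events and subtract the pre-stimulus mean.

    data: array of shape (n_channels, n_samples).
    event_samples: iterable of event positions (sample indices).
    Returns an array of shape (n_epochs, n_channels, n_times).
    """
    if tmin >= 0:
        raise ValueError("tmin must be negative to provide a baseline period")
    start_off = int(round(tmin * fs))  # negative offset before the event
    stop_off = int(round(tmax * fs))
    n_baseline = -start_off            # number of pre-stimulus samples

    epochs = []
    for ev in event_samples:
        start, stop = ev + start_off, ev + stop_off
        if start < 0 or stop > data.shape[1]:
            continue  # skip events too close to the recording edges
        segment = data[:, start:stop].astype(float)
        baseline = segment[:, :n_baseline].mean(axis=1, keepdims=True)
        epochs.append(segment - baseline)

    if not epochs:
        raise ValueError("no complete epochs could be extracted")
    return np.stack(epochs)
```

Events whose window would extend beyond the recording are skipped rather than padded, which keeps every epoch the same length at the cost of losing trials near the start or end of the session.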

fNIRS Signal Pre-processing