Validating Virtual Reality Biomarkers for Mental Disorders: A New Frontier in Objective Diagnosis and Drug Development

Abstract

This article explores the transformative potential of virtual reality (VR)-derived biomarkers in creating objective, biologically-grounded diagnostic tools for mental disorders. Aimed at researchers, scientists, and drug development professionals, it synthesizes current evidence on the development, application, and validation of these digital biomarkers. The scope spans from foundational principles and methodological frameworks for data capture to the troubleshooting of implementation barriers and the critical validation of VR biomarkers against established standards like neuroimaging and clinical outcomes. By examining multimodal integration, AI-powered analytics, and the pathway to clinical adoption, this resource provides a comprehensive roadmap for leveraging VR to enhance precision in mental health diagnostics and therapeutic development.

The New Science of VR Biomarkers: Defining Digital Phenotypes for Mental Health

Virtual reality (VR) biomarkers are emerging as a transformative tool in mental health research and drug development, offering objective, quantifiable measures that address key limitations of traditional subjective assessments. By capturing rich behavioral, physiological, and neurophysiological data within standardized, immersive environments, VR biomarkers provide detailed insight into cognitive and emotional processes. This guide compares the performance of various VR biomarker paradigms against traditional methods, detailing experimental protocols, key findings, and essential research tools. The integration of VR with multimodal sensing and machine learning is establishing a new standard for validating digital biomarkers in mental disorders research, enabling more precise and translational outcomes for clinical trials and therapeutic development.

The Case for Objectivity: VR Biomarkers vs. Traditional Checklists

Traditional diagnostic approaches for mental disorders, such as symptom checklists and clinical interviews, are limited by their reliance on self-report, susceptibility to memory bias, and inherent subjectivity [1] [2]. These methods are unable to capture the fine-grained, real-time behavioral and physiological correlates of mental states. In contrast, VR biomarkers provide a novel pathway to objective assessment by creating controlled, ecologically valid environments where researchers can continuously and unobtrusively measure a user's responses.

Key Performance Advantages of VR Biomarkers:

- Objectivity & Quantification: VR biomarkers translate subjective experiences into quantifiable data points, such as hand movement speed, scanpath length, and physiological arousal, reducing reliance on introspection and clinician interpretation [3].

- Ecological Validity: VR environments can simulate complex, real-world scenarios (e.g., a food-ordering kiosk, a social interaction) that are more reflective of daily challenges than a questionnaire, thereby capturing more authentic behavioral signatures [1] [3].

- High-Density Data Capture: VR platforms enable the synchronous collection of multimodal data streams, including performance metrics, eye-tracking, electroencephalography (EEG), and heart rate variability (HRV), providing a holistic view of a participant's state [1].

- Enhanced Sensitivity for Early Detection: Studies have demonstrated that VR-derived biomarkers can detect subtle deficits associated with conditions like Mild Cognitive Impairment (MCI) with high specificity, often before they are apparent on traditional tests [3].

Comparative Performance Data: VR Biomarkers in Action

The following tables summarize quantitative data from key studies, comparing the performance of VR-based assessments against traditional methods and highlighting the diagnostic accuracy achieved through multimodal integration.

Table 1: Comparative Diagnostic Accuracy of VR vs. Traditional Biomarkers

| Condition Assessed | VR Biomarkers & Method | Traditional Method | Key Performance Metrics | Reference |
|---|---|---|---|---|
| Mild Cognitive Impairment (MCI) | Virtual kiosk test (hand movement, eye movement, errors, time) | Neuropsychological Tests (SNSB-C) & MRI | VR only: 87.5% sensitivity, 90% specificity; MRI only: 90.9% sensitivity, 71.4% specificity; VR + MRI (multimodal): 94.4% accuracy, 100% sensitivity, 90.9% specificity | [3] [4] |
| Adolescent Major Depressive Disorder (MDD) | VR emotional task with EEG, eye-tracking, & HRV | Clinical Diagnosis (DSM-5) & Self-Report Scales | SVM model classification: 81.7% accuracy, 0.921 AUC; key biomarkers: EEG theta/beta ratio, saccade count, fixation duration, HRV LF/HF ratio | [1] |
| Immersion & Task Difficulty | EEG during VR jigsaw puzzles (idle, easy, hard) | Post-Session Self-Report Questionnaires | Machine learning classification: 86-97% accuracy for differentiating states (e.g., easy vs. hard) | [5] |
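The table reports several threshold-dependent metrics. The minimal Python sketch below shows their standard definitions. The counts are hypothetical and are not taken from the cited studies. Balanced accuracy is the mean of sensitivity and specificity, and it is less affected than raw accuracy by unequal group sizes such as 22 controls vs. 32 patients.

```python
def diagnostic_metrics(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Standard binary-classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)              # share of patients correctly flagged
    specificity = tn / (tn + fp)              # share of controls correctly cleared
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    balanced_accuracy = (sensitivity + specificity) / 2
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
        "balanced_accuracy": balanced_accuracy,
    }


# Hypothetical counts for illustration only (not from the cited studies).
metrics = diagnostic_metrics(tp=8, fn=2, tn=9, fp=1)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

AUC works differently. It summarizes performance across all decision thresholds, so it cannot be recovered from a single confusion matrix like the one above.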

Table 2: Core Digital Phenotyping Features for Mental Health Monitoring [2]

| Device Category | Core Features (High Coverage & Importance) | Promising Additional Features |
|---|---|---|
| Actiwatch | Accelerometer, General Activity | Sleep (underused but important) |
| Smart Bands | Heart Rate, Steps, Sleep, Phone Usage | GPS, Electrodermal Activity (EDA), Skin Temperature |
| Smartwatches | Sleep, Heart Rate | Steps, Accelerometer (widely used but less decisive) |

Experimental Protocols for Key VR Biomarker Paradigms

VR Kiosk Test for Mild Cognitive Impairment (MCI)

This protocol is designed to detect subtle deficits in instrumental activities of daily living (IADLs), which are early indicators of MCI [3].

Objective: To classify participants as healthy controls or as having MCI based on behavioral biomarkers collected during a simulated daily task.

Participants: Older adults diagnosed according to a gold-standard neuropsychological test battery. The reference study enrolled 54 participants: 22 healthy controls and 32 with MCI [3].

VR Apparatus: A head-mounted display (HMD) running a custom virtual environment that simulates a food-ordering kiosk.

Procedure:

- The participant is immersed in the VR environment and given the task of ordering a specific food item using the virtual kiosk interface.

- The system automatically and continuously records four primary VR-derived biomarkers:

  - Hand Movement Speed: Velocity and fluency of hand controllers while navigating the interface.

  - Scanpath Length: Total distance of eye-gaze movement during task completion.

  - Time to Completion: Total time taken to successfully complete the order.

  - Number of Errors: Instances of incorrect selections or task deviations.

Data Analysis: The collected biomarkers are used to train a machine learning model, such as a Support Vector Machine (SVM), to classify participants. Performance is validated against the clinical diagnosis [3].
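The four kiosk biomarkers can be derived from logged controller and gaze samples. The sketch below is a minimal illustration built on two assumptions:

- Hand speed is total controller path length divided by task duration.
- Scanpath length is the summed distance between consecutive gaze points.

The cited study's exact operational definitions are not given here, and the `Sample` type and `kiosk_biomarkers` function are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    t: float       # timestamp in seconds
    hand: tuple    # (x, y, z) controller position in metres
    gaze: tuple    # (x, y) gaze point on the kiosk screen, arbitrary units


def path_length(points: list) -> float:
    """Sum of Euclidean distances between consecutive points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def kiosk_biomarkers(samples: list, n_errors: int) -> dict:
    """Hypothetical operationalization of the four kiosk biomarkers."""
    duration = samples[-1].t - samples[0].t
    return {
        "hand_speed": path_length([s.hand for s in samples]) / duration,  # m/s
        "scanpath_length": path_length([s.gaze for s in samples]),
        "time_to_completion": duration,                                   # s
        "errors": float(n_errors),
    }


samples = [
    Sample(0.0, (0.0, 0.0, 0.0), (0.0, 0.0)),
    Sample(1.0, (0.3, 0.4, 0.0), (3.0, 4.0)),
    Sample(2.0, (0.3, 0.4, 0.0), (3.0, 0.0)),
]
biomarkers = kiosk_biomarkers(samples, n_errors=1)
print(biomarkers)
```

Real pipelines often compute scanpath length over detected fixations rather than raw gaze samples, and they would filter tracking noise first.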

Multimodal Framework for Adolescent Depression

This protocol uses a VR-based emotional task to elicit physiological responses indicative of Major Depressive Disorder (MDD) in adolescents [1].

Objective: To differentiate adolescents with MDD from healthy controls using synchronized EEG, eye-tracking, and HRV data.

Participants: Case-control design. The reference study enrolled 51 adolescents with first-episode MDD and 64 healthy controls [1].

Apparatus:

- VR Environment: A 10-minute immersive scenario, such as a "magical forest," featuring an interactive AI agent that conducts a structured dialogue about personal worries and hopes.

- Sensors: A BIOPAC MP160 system or equivalent for EEG and ECG (for HRV), and a portable telemetric ophthalmoscope for eye-tracking. Data streams are synchronized using a platform such as LabStreamingLayer (LSL) [1].

Procedure:

- Participants engage in the 10-minute VR emotional task, interacting with the AI agent.

- The following data are collected simultaneously in real time:

  - EEG: Spectral power in different frequency bands (e.g., theta, beta).

  - Eye-Tracking: Saccade count, fixation duration, and pupillary response.

  - HRV: Derived from ECG, specifically the Low Frequency/High Frequency (LF/HF) ratio.

Data Analysis: Statistical analyses (e.g., ANCOVA) identify significant group differences in physiological metrics. A machine learning model (e.g., SVM) is then trained on these features to classify MDD status [1].
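The EEG theta/beta ratio is a ratio of spectral band powers. The minimal sketch below demonstrates it on a synthetic signal and makes two assumptions:

- The spectrum comes from a Hann-windowed periodogram.
- The bands are 4-8 Hz (theta) and 13-30 Hz (beta). These are common conventions, not the study's documented settings.

Welch's method or multitaper estimates are the usual choices for real, noisy recordings.

```python
import numpy as np


def band_power(signal: np.ndarray, fs: float, low: float, high: float) -> float:
    """Power of `signal` in [low, high) Hz from a Hann-windowed periodogram."""
    x = signal - signal.mean()                       # remove DC offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= low) & (freqs < high)
    return float(spectrum[mask].sum())


def theta_beta_ratio(signal: np.ndarray, fs: float) -> float:
    # Assumed band edges: theta 4-8 Hz, beta 13-30 Hz.
    return band_power(signal, fs, 4.0, 8.0) / band_power(signal, fs, 13.0, 30.0)


fs = 250.0                                # hypothetical sampling rate (Hz)
t = np.arange(0, 10, 1 / fs)              # 10 s synthetic epoch
eeg = 2.0 * np.sin(2 * np.pi * 6 * t) + 1.0 * np.sin(2 * np.pi * 20 * t)
tbr = theta_beta_ratio(eeg, fs)
print(f"theta/beta ratio: {tbr:.2f}")
```

In this synthetic signal the theta component has twice the amplitude of the beta component. Because power scales with amplitude squared, the ratio comes out close to 4.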

EEG Biomarkers of Immersion and Task Difficulty

This protocol investigates the use of EEG to objectively measure cognitive immersion and engagement in VR, moving beyond subjective questionnaires [5].

Objective: To classify a user's state (idle, easy task, hard task) in VR based on EEG signals.

Participants: Healthy adults without neurological conditions (e.g., 14 participants) [5].

VR Task: Participants complete a VR jigsaw puzzle with varying levels of difficulty, often manipulated by the number of puzzle pieces.

EEG Recording: EEG data are continuously recorded from multiple channels (e.g., 3 or 9 central channels). Recording covers a baseline state (idle) and the easy and hard puzzle conditions.

Data Analysis & Machine Learning:

- Feature Extraction: Temporal, frequency-domain (power in delta, theta, alpha, beta bands), and non-linear features are extracted from the EEG signals.

- Model Training & Validation: Multiple machine learning algorithms are trained and validated to classify the EEG data according to the three states. Examples include Stochastic Gradient Descent (SGD), Support Vector Classifier (SVC), and Random Forest (RF).

- Results: The high classification accuracy (86-97%) suggests that EEG features are promising biomarkers of immersion level [5].
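The source reports 86-97% accuracy for SGD, SVC, and Random Forest classifiers. This summary does not state the validation scheme. With only 14 participants, epochs from the same person tend to be correlated, so a common safeguard is leave-one-subject-out cross-validation.

The minimal sketch below shows that split on toy feature vectors. It uses a nearest-centroid classifier, a deliberately simple stand-in for the classifiers named above.

```python
import math
from collections import defaultdict


def fit_centroids(X, y):
    """Mean feature vector for each class label."""
    groups = defaultdict(list)
    for features, label in zip(X, y):
        groups[label].append(features)
    return {label: [sum(col) / len(col) for col in zip(*rows)]
            for label, rows in groups.items()}


def predict(centroids, features):
    """Assign the label of the closest class centroid (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


def leave_one_subject_out_accuracy(X, y, subjects):
    """Hold out every epoch of one participant at a time and train on the rest."""
    correct = 0
    for held_out in sorted(set(subjects)):
        train = [i for i, s in enumerate(subjects) if s != held_out]
        test = [i for i, s in enumerate(subjects) if s == held_out]
        centroids = fit_centroids([X[i] for i in train], [y[i] for i in train])
        correct += sum(predict(centroids, X[i]) == y[i] for i in test)
    return correct / len(X)


# Toy, hypothetical band-power features [theta, alpha] for three participants.
subjects = ["s1"] * 3 + ["s2"] * 3 + ["s3"] * 3
y = ["idle", "easy", "hard"] * 3
X = [[1.0, 5.0], [2.0, 4.0], [3.0, 3.0],
     [1.1, 5.1], [2.1, 4.1], [3.1, 3.1],
     [0.9, 4.9], [1.9, 3.9], [2.9, 2.9]]
print(leave_one_subject_out_accuracy(X, y, subjects))
```

The toy data are trivially separable, so the printed accuracy is 1.0. The point of the example is the participant-level split, not the score.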

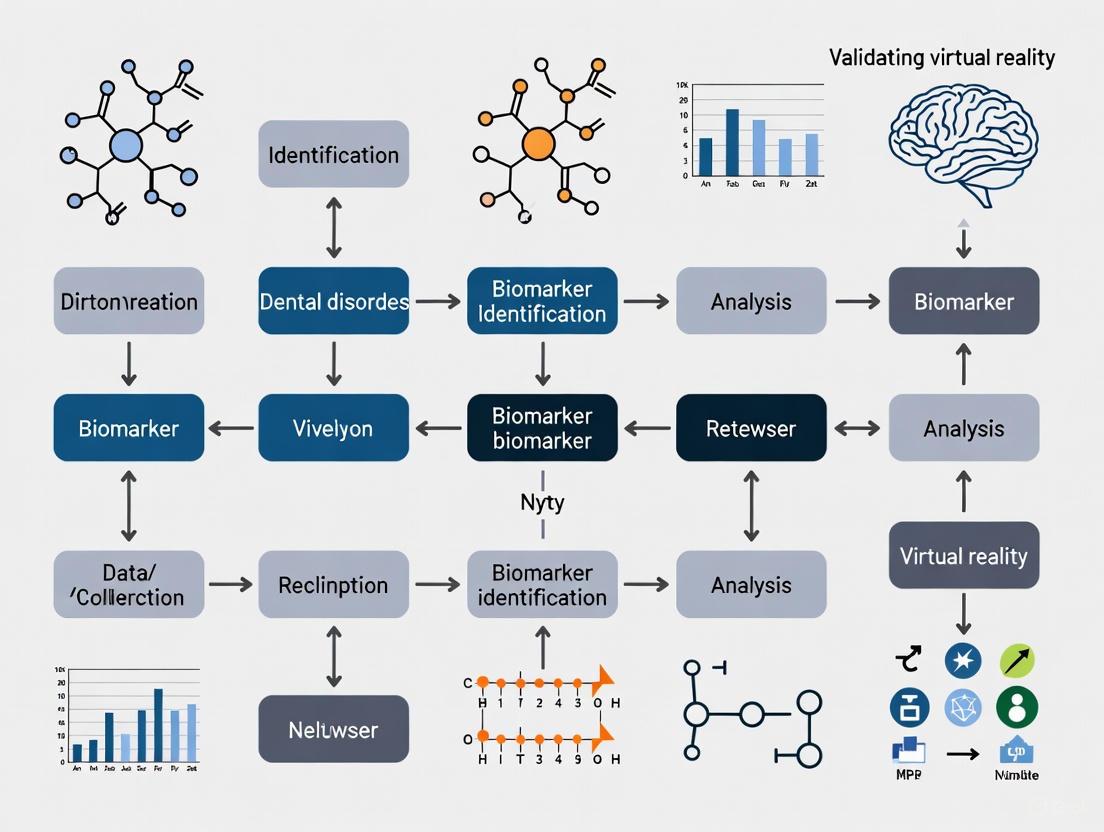

Visualizing the Workflow: From Data Collection to Biomarker Validation

The following diagram illustrates the standard experimental and analytical pipeline for developing and validating VR biomarkers.

Diagram Title: VR Biomarker Development Pipeline
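Pipelines like this one usually standardize features between feature extraction and model training. The minimal sketch below assumes z-scoring; the source does not specify a normalization step. It fits the means and standard deviations on training participants only and then applies them unchanged to held-out participants, so test data cannot leak into the model.

```python
import statistics


def fit_scaler(rows):
    """Per-feature (mean, standard deviation) computed from training rows only."""
    # `or 1.0` avoids dividing by zero for a constant feature.
    return [(statistics.fmean(col), statistics.pstdev(col) or 1.0) for col in zip(*rows)]


def transform(scaler, rows):
    """Z-score each row with the stored training statistics."""
    return [[(v - mu) / sd for v, (mu, sd) in zip(row, scaler)] for row in rows]


# Hypothetical feature rows: [time_to_completion_s, hand_speed_m_per_s]
train = [[10.0, 0.5], [14.0, 0.7], [12.0, 0.6]]
test = [[13.0, 0.9]]
scaler = fit_scaler(train)          # test rows never influence these statistics
z_test = transform(scaler, test)
print(z_test)
```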

The Scientist's Toolkit: Essential Research Reagents & Materials

Building a rigorous VR biomarker research program requires a suite of specialized hardware and software solutions. The following table details key components and their functions in typical experimental setups.

Table 3: Essential Research Toolkit for VR Biomarker Studies

| Tool Category | Specific Examples | Research Function & Application |
|---|---|---|
| VR Hardware | Head-Mounted Displays (HMDs) with integrated eye-tracking | Presents immersive environments; tracks gaze, pupillometry, and blink data for assessing attention and cognitive load [1]. |
| Physiological Data Acquisition Systems | BIOPAC MP160 system, portable EEG systems, See A8 ophthalmoscope | Records high-fidelity, synchronized physiological data (EEG, ECG, EDA) and eye movements as objective correlates of mental state [1]. |
| Data Synchronization Software | LabStreamingLayer (LSL) | Precisely time-aligns data streams from different sensors (EEG, ET, HRV) with events in the VR environment, which is critical for multimodal analysis [1]. |
| VR Development Platforms | Unity, Unreal Engine, A-Frame framework | Enables the creation of custom, ecologically valid virtual scenarios and tasks tailored to specific research questions (e.g., virtual kiosk, forest environment) [1] [3]. |
| Machine Learning Libraries | Scikit-learn (for SVM, Random Forest), TensorFlow, PyTorch | Used for feature selection, model training, and classification to identify biomarker patterns and build diagnostic or predictive models [5] [1] [3]. |
| Clinical Assessment Tools | Seoul Neuropsychological Screening Battery (SNSB), CES-D | Provides gold-standard clinical phenotyping for participant grouping and validation of VR biomarker findings against established metrics [1] [3]. |
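LSL attaches a timestamp to every sample and provides clock-offset information, so streams from different devices can be placed on a common time base. That shared clock is what makes it possible to cut sensor data around VR task events.

The sketch below does not use the LSL (pylsl) API. It assumes the streams have already been recorded and mapped to one shared clock, and it shows how one stream can be cut into windows around event markers. The event names and heart-rate values are hypothetical.

```python
from bisect import bisect_left


def epoch_stream(timestamps, values, events, pre=0.0, post=1.0):
    """Cut one stream into windows around event markers on a shared clock.

    `timestamps` must be sorted ascending. Each window keeps samples with
    event_time - pre <= t < event_time + post.
    """
    windows = []
    for label, event_time in events:
        start = bisect_left(timestamps, event_time - pre)
        stop = bisect_left(timestamps, event_time + post)
        windows.append((label, values[start:stop]))
    return windows


# Hypothetical heart-rate samples (s, bpm) and VR task markers on the same clock.
hr_times = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
hr_values = [70, 71, 75, 78, 76, 72, 70]
markers = [("agent_greeting", 1.0), ("relaxation_start", 2.0)]
epochs = epoch_stream(hr_times, hr_values, markers)
print(epochs)
```

Binary search keeps each lookup logarithmic in the stream length, which matters for high-rate EEG streams.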

The evidence demonstrates that VR biomarkers represent a significant advancement over subjective symptom checklists, providing the objectivity, ecological validity, and multivariate data required for modern mental health research and drug development. The integration of VR with multimodal sensing and machine learning creates a powerful platform for identifying robust digital signatures of disorders like MCI and depression with high accuracy. As the field matures, standardizing experimental protocols and research toolkits will be crucial for validating these biomarkers. That work will also be needed to turn them into tools that reliably assess therapeutic efficacy in clinical trials, ultimately accelerating the development of new treatments.

Virtual Reality (VR) has evolved from an expensive novelty into a robust tool for clinical research and intervention [6]. Its ability to create controlled, immersive, and reproducible environments is particularly valuable for psychiatry and neuroscience, offering new avenues for therapy and the development of objective digital biomarkers for mental disorders [7] [3]. This guide compares the application of VR against traditional methods across key domains, supported by experimental data and detailed methodologies.

Comparative Efficacy of VR-Based Interventions

VR's therapeutic application spans multiple mental health conditions, primarily leveraging its capacity for controlled exposure and skill training. The table below summarizes its performance compared to traditional methods.

Table 1: Comparison of VR-Based Therapies vs. Traditional Methods

| Condition/Therapy | Key Finding | Comparative Outcome | Source Study Details |
|---|---|---|---|
| Social Anxiety Disorder (SAD) & Agoraphobia | No significant difference in symptom reduction between VR-CBT and traditional in-vivo CBT at post-treatment and 1-year follow-up. | Both groups showed significant improvements; VR-CBT offered a feasible, flexible alternative without compromising efficacy [8]. | Design: RCT, 177 participants; VR intervention: 14 weekly group sessions using HMDs with 360° videos of anxiogenic situations (e.g., public speaking, crowded buses) [8]. |
| Psychosis Stigma in Professionals | Both VR and control groups showed improved attitudes and reduced stigma; no change in empathy. | VR intervention (simulating hallucinations) was not superior to control VR in outcomes, but had higher user satisfaction [9]. | Design: RCT, 180 mental health professionals; VR intervention: single ≤7-minute session using a smartphone-based HMD to simulate auditory and visual hallucinations in a home environment [9]. |
| Specific Phobias & PTSD | Effective as a medium for exposure therapy, especially when in-vivo exposure is impractical, dangerous, or costly [6] [10]. | Provides a safe, confidential, and controllable alternative to in-vivo or imaginal exposure, potentially improving patient access and adherence [10]. | Protocol: Virtual Reality Exposure Therapy (VRET) allows therapists to precisely control and tailor exposure stimuli based on a patient's fear hierarchy [6] [10]. |
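Tailoring exposure to a fear hierarchy can be expressed as a small amount of state logic. The sketch below is hypothetical and is not a clinical protocol. It orders VR scenes by the patient's anticipated distress on a 0-100 Subjective Units of Distress (SUDS) scale. It advances one step only when in-session distress has fallen by at least half from its peak, an assumed criterion chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ExposureStep:
    scene: str           # hypothetical VR scene identifier
    expected_suds: int   # anticipated distress on a 0-100 SUDS scale


def build_hierarchy(steps):
    """Order exposure steps from least to most feared."""
    return sorted(steps, key=lambda step: step.expected_suds)


def next_step_index(current, peak_suds, end_suds, n_steps, drop_fraction=0.5):
    """Advance one rung if distress fell by `drop_fraction` of its peak
    during the session (an assumed criterion); otherwise repeat the step."""
    habituated = end_suds <= peak_suds * (1 - drop_fraction)
    return min(current + 1, n_steps - 1) if habituated else current


hierarchy = build_hierarchy([
    ExposureStep("glass_elevator_floor_10", 70),
    ExposureStep("balcony_floor_2", 30),
    ExposureStep("rooftop_edge", 90),
])
print([step.scene for step in hierarchy])
print(next_step_index(0, peak_suds=60, end_suds=25, n_steps=len(hierarchy)))
print(next_step_index(1, peak_suds=80, end_suds=60, n_steps=len(hierarchy)))
```

In practice the therapist, not a fixed rule, decides when to move up the hierarchy. The code only shows how VR makes each step reproducible.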

VR in Biomarker Discovery and Cognitive Assessment

Beyond therapy, VR is proving instrumental in the objective assessment of cognitive and functional deficits, generating digital biomarkers that correlate with neurobiological changes.

Table 2: VR-Generated Biomarkers for Objective Assessment

| Assessment Target | VR-Derived Biomarkers | Performance vs. Traditional Methods | Source Study Details |
|---|---|---|---|
| Mild Cognitive Impairment (MCI) | Hand movement speed, scanpath length, time to completion, number of errors on a virtual kiosk test [3]. | SVM model using VR biomarkers alone achieved 90% specificity and 87.5% sensitivity in classifying MCI. A multimodal model combining VR and MRI biomarkers achieved 94.4% accuracy [3]. | Design: Validation study, 54 participants; VR task: virtual kiosk test for food ordering; Integration: VR biomarkers (high specificity) were combined with MRI biomarkers (high sensitivity) in a multimodal learning model for superior detection [3]. |
| Vestibular Dysfunction (post-mTBI) | Gaze stability, balance, and cognitive-motor integration metrics during military-relevant VR tasks. | Aims to correlate functional performance in VR with neurophysiological changes via rs-fMRI to support return-to-duty decisions [11]. | Design: Pilot study protocol (Praxis); VR intervention: 4-week rehabilitation using VR and wearable sensors to deliver multisensory exercises. Outcome measures include functional performance and neuroimaging biomarkers [11]. |
| Mood Disorders | Data from wearables and smartphones: physical activity, sleep patterns, geolocation, voice analytics [12]. | Digital biomarkers offer continuous, longitudinal, and objective metrics, in contrast to intermittent self-reported clinical scales [12]. | Methodology: Passive and active data collection via consumer devices. Machine learning models analyze complex datasets to identify patterns related to symptom severity [12]. |

Detailed Experimental Protocols

To ensure reproducibility and critical evaluation, here are the methodologies from key cited experiments.

Protocol: VR Simulation of Psychotic Symptoms to Reduce Stigma [9]

- Objective: To evaluate the effectiveness of a VR intervention on improving attitudes, empathy, and reducing stigma toward people with psychotic disorders.

- Study Design: Randomized Controlled Trial (RCT).

- Participants: 180 mental health care professionals (allied health staff, nurses, physicians).

- Intervention Group: Viewed a 7-minute VR scenario depicting a home environment with simulated auditory hallucinations (e.g., negative voices, laughing, crying) and visual distortions (e.g., floating words "die").

- Control Group: Viewed the same VR home environment without any hallucinations or distortions.

- Hardware: Smartphone device inserted into a VR headset equivalent to Google Cardboard.

- Outcome Measures: Standardized scales for attitudes, stigma, and empathy, administered at baseline, post-intervention, and 1-month follow-up.

Protocol: Integrating VR-Derived and MRI Biomarkers for MCI Detection [3]

- Objective: To integrate VR-derived and MRI biomarkers to enhance the early detection of Mild Cognitive Impairment (MCI).

- Study Design: Validation study.

- Participants: 54 adults (22 healthy controls, 32 with MCI).

- VR Biomarker Collection: Participants completed a "virtual kiosk test," where they performed a food-ordering task in a VR environment. Four biomarkers were extracted:

  - Hand movement speed

  - Scanpath length (eye movement)

  - Time to completion

  - Number of errors

- MRI Biomarker Collection: T1-weighted MRI scans were performed to collect 22 structural biomarkers from memory-associated brain regions.

- Data Integration: A Support Vector Machine (SVM) model was trained using the significant biomarkers from both modalities to classify participants as healthy or having MCI.
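The MCI study trained its SVM on the significant biomarkers from both modalities. The minimal sketch below illustrates that idea with made-up values, under three assumptions:

- Features are fused at the feature level (early fusion) by concatenation.
- Significance is screened with Welch's t statistic.
- The cutoff is a hypothetical |t| > 2.

In a real analysis this screening should run inside each training fold, because selecting features on the full dataset before cross-validation inflates accuracy estimates.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic for two independent samples with unequal variances."""
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    return (statistics.fmean(a) - statistics.fmean(b)) / se


def fuse(vr_rows, mri_rows):
    """Feature-level fusion: concatenate each participant's VR and MRI features."""
    return [vr + mri for vr, mri in zip(vr_rows, mri_rows)]


def select_features(rows, labels, t_cutoff=2.0):
    """Indices of features whose |t| between groups exceeds a hypothetical cutoff."""
    keep = []
    for j in range(len(rows[0])):
        patients = [r[j] for r, lab in zip(rows, labels) if lab == 1]
        controls = [r[j] for r, lab in zip(rows, labels) if lab == 0]
        if abs(welch_t(patients, controls)) > t_cutoff:
            keep.append(j)
    return keep


# Made-up values for six participants (0 = control, 1 = MCI).
names = ["vr_hand_speed", "mri_region_a", "mri_region_b"]
vr = [[0.30], [0.32], [0.29], [0.20], [0.21], [0.19]]
mri = [[4.2, 1.5], [4.1, 1.7], [4.3, 1.6], [3.7, 1.6], [3.8, 1.5], [3.6, 1.7]]
labels = [0, 0, 0, 1, 1, 1]
X = fuse(vr, mri)
selected = select_features(X, labels)
print([names[j] for j in selected])
```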

The workflow for this multimodal approach is outlined below.

The Scientist's Toolkit: Essential Research Reagents and Materials

This table details key solutions and technologies used in VR mental health research.

Table 3: Key Research Reagent Solutions in VR Mental Health Research

| Item/Technology | Function in Research | Specific Examples & Notes |
|---|---|---|
| Head-Mounted Display (HMD) | Creates an immersive virtual environment by occluding the outside world and displaying 3D computer-generated imagery [6]. | Ranges from high-end tethered devices (e.g., Oculus Rift) to cost-effective mobile solutions (e.g., Google Cardboard) [6] [9]. |
| VR Development Engine | Software platform used to create and render interactive, realistic virtual environments for therapy or assessment. | Unreal Engine [9] is used to create controlled scenarios with high visual fidelity. |
| Biometric Sensors | Capture objective physiological and behavioral data during VR sessions to quantify user response. | Eye-tracking within HMDs, hand motion controllers, and wearable devices (e.g., Actigraph, smart patches) to measure gait, heart rate, and electrodermal activity [11] [12] [3]. |
| Virtual Reality Exposure Therapy (VRET) Software | Provides pre-designed or customizable virtual environments for conducting exposure therapy. | Environments are tailored to specific phobias (e.g., heights, flying) or PTSD triggers, allowing graded exposure [6] [10]. |
| Data Analytics & Machine Learning Platform | Processes and analyzes the complex multimodal data (behavioral, physiological, neuroimaging) to identify digital biomarkers. | Used to build classification models (e.g., Support Vector Machines) that distinguish between clinical groups and healthy controls [12] [3]. |

Synthesis and Future Directions

The evidence confirms that VR is a validated medium for delivering therapeutic interventions, particularly exposure therapy, with efficacy comparable to traditional methods [8]. Its greater promise for research and drug development may lie in its capacity to generate objective, quantifiable digital biomarkers of functional impairment and cognitive decline [3]. The integration of VR with other data modalities like MRI, wearable sensors, and machine learning analytics is creating a new paradigm for validating biomarkers and assessing treatment efficacy in mental health [11] [12] [3]. Future work should focus on standardizing protocols, conducting large-scale studies, and integrating AI to further personalize and enhance interventions [10] [7].

Virtual reality (VR) has emerged as a powerful tool in mental disorders research, offering unprecedented opportunities for the development of objective biomarkers. By creating controlled, yet ecologically valid environments, VR enables the precise measurement of behavioral domains that are directly relevant to psychiatric pathology. This guide provides a comparative analysis of three key behavioral domains—eye-tracking, movement kinematics, and task performance—that are measured using VR technologies, with supporting experimental data and their validation status for mental disorders research.

Comparative Analysis of Key VR-Measured Behavioral Domains

The table below summarizes the core characteristics, measurement approaches, and evidence base for the three primary behavioral domains measurable via VR.

Table 1: Comparative Overview of Key VR-Measured Behavioral Domains

| Behavioral Domain | Key Measured Parameters | Primary VR Capabilities Utilized | Disorders with Strongest Evidence | Example Reported Result |
|---|---|---|---|---|
| Eye-Tracking | Fixations, saccades, smooth pursuit, scanpath length, pupillometry [13] [14] | Head-mounted display with integrated eye trackers, video oculography (VOG) [14] | Psychosis [15], ADHD [13] | AUC 0.92 (ADHD) [13]; 65% balanced accuracy (psychosis) [15] |
| Movement Kinematics | Hand movement speed, controller trajectory, navigation path efficiency, motor activity level [16] [4] | Motion controllers, hand tracking, positional tracking | MCI [4], ADHD [16] | 90% specificity (MCI) [4] |
| Task Performance | Errors (omission/commission), time to completion, tasks correctly performed, irrelevant actions [16] [13] [4] | Performance metrics within simulated functional tasks | ADHD [16] [13], MCI [4] | Higher % of irrelevant actions in ADHD [16] |

Detailed Experimental Protocols and Evidence

Eye-Tracking Biomarkers

Protocol: Smooth Pursuit Eye Movements (SPEM) for Psychosis

- Objective: To identify sensorimotor biomarkers for psychotic disorders using smooth pursuit eye movements [15].

- Methodology: Participants track a small moving object on a screen while eye movements are recorded. Key parameters include mean eye velocity, initial eye acceleration, and initiation latency [15].

- Analysis: Machine-learning models (multivariate pattern analysis) are trained on SPEM parameters to distinguish psychosis probands from healthy controls [15].

- Validation: External validation across multiple independent samples (B-SNIP, PARDIP, FOR2107) shows that classification generalizes beyond the training data. Balanced accuracies are modest, however, ranging from 58% to 66% [15].
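The SPEM parameters named above (initiation latency, initial eye acceleration, mean eye velocity) can be computed from an eye-velocity trace. The sketch below is hypothetical and rests on three assumptions:

- Velocity has already been derived from filtered position data.
- A simple speed threshold marks pursuit initiation.
- The parameter values are illustrative.

It does not reproduce the cited studies' preprocessing or their multivariate classifier.

```python
def spem_parameters(t, eye_vel, target_onset, speed_threshold=2.0, accel_window=0.05):
    """Hypothetical SPEM features from uniformly sampled eye velocity (deg/s).

    latency:              time from target onset until eye speed first exceeds
                          `speed_threshold` (deg/s)
    initial_acceleration: velocity change over `accel_window` seconds after
                          that initiation point (deg/s^2)
    mean_velocity:        mean eye velocity from initiation to trial end (deg/s)
    """
    dt = t[1] - t[0]                                   # assumes uniform sampling
    after_onset = [i for i, ti in enumerate(t) if ti >= target_onset]
    start = next(i for i in after_onset if abs(eye_vel[i]) > speed_threshold)
    end = min(start + round(accel_window / dt), len(t) - 1)
    pursuit = eye_vel[start:]
    return {
        "latency": t[start] - target_onset,
        "initial_acceleration": (eye_vel[end] - eye_vel[start]) / (t[end] - t[start]),
        "mean_velocity": sum(pursuit) / len(pursuit),
    }


# Synthetic trial at a hypothetical 100 Hz: the eye rests for 150 ms, then
# accelerates at 100 deg/s^2 until it reaches a steady 10 deg/s pursuit.
t = [i / 100 for i in range(60)]
vel = [0.0 if i < 15 else float(i - 15) if i < 25 else 10.0 for i in range(60)]
params = spem_parameters(t, vel, target_onset=0.0)
print(params)
```

On this synthetic trial the threshold-crossing latency is 0.18 s, although the eye starts moving at 0.15 s. Threshold methods lag the true movement onset, which is why fitting-based onset estimates are sometimes preferred.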

Protocol: Naturalistic Eye Tracking in ADHD (EPELI Task)

- Objective: To quantify attention and executive function deficits in children with ADHD using a lifelike VR task [13].

- Methodology: Participants perform a prospective memory game in VR (EPELI) with 13 scenarios of everyday chores, using a head-mounted display with a 90 Hz eye tracker [13].

- Analysis: Eye movement patterns are analyzed throughout the task, with a support vector machine classifier trained on this data [13].

- Validation: The classifier demonstrated excellent discrimination with 0.92 area under the curve (AUC), significantly outperforming traditional task performance measures [13].
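The EPELI summary does not say how eye-movement features were extracted from the 90 Hz gaze stream. One widely used approach is velocity-threshold identification (I-VT). It labels movement faster than a threshold as saccadic and treats the remaining periods as fixations. It yields saccade counts and fixation durations, the same measures reported as MDD biomarkers earlier.

The sketch below assumes gaze angles in degrees and a 30 deg/s threshold, a common default rather than the study's setting.

```python
import math


def ivt(times, x_deg, y_deg, velocity_threshold=30.0):
    """Velocity-threshold identification (I-VT) of saccades and fixations.

    Each inter-sample interval is saccadic if angular speed exceeds
    `velocity_threshold` (deg/s); runs of non-saccadic intervals are fixations.
    Returns (saccade_count, mean_fixation_duration_s).
    """
    saccadic = []
    for i in range(1, len(times)):
        dist = math.hypot(x_deg[i] - x_deg[i - 1], y_deg[i] - y_deg[i - 1])
        saccadic.append(dist / (times[i] - times[i - 1]) > velocity_threshold)

    saccade_count, fixation_durations = 0, []
    run_start = None                          # start time of the current fixation
    for i, is_saccade in enumerate(saccadic):
        if is_saccade:
            if i == 0 or not saccadic[i - 1]:
                saccade_count += 1            # a new saccade begins
            if run_start is not None:
                fixation_durations.append(times[i] - run_start)
                run_start = None
        elif run_start is None:
            run_start = times[i]
    if run_start is not None:
        fixation_durations.append(times[-1] - run_start)
    mean_fix = sum(fixation_durations) / len(fixation_durations) if fixation_durations else 0.0
    return saccade_count, mean_fix


# Synthetic 90 Hz trace: three fixations separated by two rapid 5-degree shifts.
times = [i / 90 for i in range(32)]
x = [0.0] * 10 + [2.5] + [5.0] * 10 + [7.5] + [10.0] * 10
y = [0.0] * 32
print(ivt(times, x, y))
```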

Movement Kinematics Biomarkers

Protocol: Virtual Kiosk Test for Mild Cognitive Impairment (MCI)

- Objective: To capture behaviors associated with subtle deficits in instrumental activities of daily living for early MCI detection [4].

- Methodology: Participants interact with a virtual food-ordering kiosk while movement kinematics are tracked. Key parameters include hand movement speed and controller movement [4].

- Analysis: Comparison of kinematic biomarkers between healthy controls and MCI patients, with a support vector machine model achieving 90% specificity using VR-derived biomarkers alone [4].

- Integration: A multimodal approach combining VR-derived and MRI biomarkers achieved superior classification (94.4% accuracy, 100% sensitivity, 90.9% specificity) [4].

Task Performance Biomarkers

Protocol: Executive Performance in Everyday Living (EPELI) for ADHD

- Objective: To assess attention and executive function deficits in ecologically valid conditions that resemble situations where ADHD symptoms are manifested [16] [13].

- Methodology: Participants perform everyday chores in a virtual environment, with tasks including morning routines or returning from school, comprising 4-6 subtasks each [13].

- Performance Metrics: Percentage of irrelevant actions, navigation path length, number of correctly performed tasks, and amount of controller movement [16] [13].

- Findings: Children with ADHD showed higher percentages of irrelevant actions, longer navigation paths, more excessive actions, fewer correctly performed tasks, and greater controller movement compared to typically developing controls [16] [13].
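The EPELI performance metrics reduce to simple summaries of the session log. The summary does not say how actions were classified as irrelevant, so the action coding (`is_relevant`), the floor-plane position samples and the function name in the sketch below are all hypothetical. Total controller movement could be computed with the same path-length sum over controller positions.

```python
import math


def task_performance(action_log, floor_path, completed_tasks, total_tasks):
    """Summarize a session log into EPELI-style performance metrics.

    action_log: list of (action_name, is_relevant) tuples (hypothetical coding)
    floor_path: list of (x, z) positions in metres sampled during navigation
    """
    irrelevant = sum(1 for _, relevant in action_log if not relevant)
    path_length = sum(math.dist(a, b) for a, b in zip(floor_path, floor_path[1:]))
    return {
        "pct_irrelevant_actions": 100.0 * irrelevant / len(action_log),
        "navigation_path_m": path_length,
        "pct_tasks_correct": 100.0 * completed_tasks / total_tasks,
    }


log = [("pick_toothbrush", True), ("open_fridge", False),
       ("brush_teeth", True), ("play_guitar", False)]
path = [(0.0, 0.0), (3.0, 0.0), (3.0, 4.0)]
summary = task_performance(log, path, completed_tasks=3, total_tasks=4)
print(summary)
```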

Experimental Workflow

The following diagram illustrates the integrated workflow from VR data acquisition to clinical biomarker validation, highlighting how multiple behavioral domains contribute to diagnostic insights.

VR Biomarker Development Workflow

The Scientist's Toolkit: Essential Research Reagents

Table 2: Key Research Solutions for VR Biomarker Studies

| Tool Category | Specific Examples | Research Function | Key Characteristics |
|---|---|---|---|
| VR Hardware with Integrated Eye Tracking | Tobii, Pupil Labs, Varjo, Fove [14] | Provides primary data acquisition for eye movement parameters | Uses Video Oculography (VOG); cameras mounted in HMD track eye orientation [14] |
| Behavioral Assessment Platforms | Nesplora Aquarium, EPELI, Virtual Kiosk Test [16] [13] [4] | Delivers standardized functional tasks in ecologically valid environments | Measures specific cognitive domains (attention, executive function, IADL) [16] [13] [4] |
| Motion Tracking Systems | VR controllers, hand tracking algorithms, positional tracking [16] [4] | Captures movement kinematics and motor activity | Quantifies hand movement speed, navigation efficiency, motor control [16] [4] |
| Machine Learning Frameworks | Support Vector Machines (SVM), Multivariate Pattern Analysis [13] [15] [4] | Analyzes complex multimodal data for classification | Identifies patterns distinguishing clinical groups from controls [13] [15] [4] |

VR-based measurement of eye-tracking, movement kinematics, and task performance represents a paradigm shift in mental disorders research, offering objective, quantifiable biomarkers with strong ecological validity. Among single domains, eye-tracking currently shows the strongest reported classification performance, most clearly for ADHD (AUC 0.92). Externally validated results in psychosis are more modest, with balanced accuracies of 58-66%. Multimodal approaches, such as combining VR-derived and MRI biomarkers in MCI, have outperformed single-modality models. The field continues to evolve toward more sophisticated analytical approaches and standardized protocols that will further validate these digital biomarkers for both research and clinical applications.

The validation of virtual reality (VR) biomarkers for mental disorders research represents a paradigm shift in neuroscience and psychiatric diagnostics. Traditional diagnostic approaches often rely on subjective reports and clinical interviews, which lack biological grounding and are susceptible to observer bias [1]. VR technology, integrated with multimodal physiological sensing, offers a promising pathway for more objective diagnostics by creating standardized, immersive environments that can elicit ecologically valid neurophysiological responses [1] [17]. This guide systematically compares the performance of various VR-based neurophysiological assessment methodologies, providing researchers with experimental data and protocols for establishing validated biomarkers for mental health conditions. The core advantage of VR lies in its ability to seamlessly collect behavioral and physiological metrics—such as body movement, gaze patterns, and biosignals—without disrupting user engagement, thereby fostering deeper cognitive, social, and physical involvement that enhances the reliability of psychological assessments [1]. By synchronously capturing data within controlled yet naturalistic virtual environments, researchers can identify robust biomarkers of psychiatric conditions, transcending the limitations of traditional methods and potentially transforming early identification and intervention strategies for mental health [1].

Comparative Analysis of VR-Based Neurophysiological Biomarkers

Table 1: Comparative Performance of Neurophysiological Modalities in VR-Based Assessment

| Physiological Modality | Key Biomarkers Identified | Association with Mental Health Conditions | Supported by Experimental Evidence |
|---|---|---|---|
| EEG (Electroencephalography) | Higher theta/beta ratio [1]; Elevated beta/alpha ratio indicating high arousal [18]; Increased beta wave activity [18] | Associated with depression severity [1]; Differentiates emotional states in VR vs. real environments [18] | Case-control study with 115 adolescents [1]; Controlled comparison study of VR vs. real spaces [18] |
| HRV (Heart Rate Variability) | Elevated LF/HF ratio [1]; Transient increase in parasympathetic activity (pNN50) [18] | Significantly associated with depression severity [1]; Differentiates autonomic responses in VR environments [18] | Case-control study with 115 adolescents [1]; Pilot study on emotional equivalence [18] |
| Eye-Tracking (ET) | Reduced saccade counts; Longer fixation durations [1] | Robust biomarker for Major Depressive Disorder (MDD) in adolescents [1] | Case-control study with 115 adolescents [1] |
| Multimodal Integration (EEG+ET+HRV) | Combined biomarker profile; Machine learning classification features [1] | Achieved 81.7% classification accuracy for MDD with AUC of 0.921 [1] | SVM model trained on multimodal features from 115 participants [1] |

Table 2: Quantitative Experimental Results from Key VR Neurophysiology Studies

| Study Reference | Participant Population | VR Intervention/Task | Key Quantitative Findings | Statistical Significance |
|---|---|---|---|---|
| Wu et al. (2025) [1] | 51 MDD adolescents, 64 healthy controls | 10-minute VR-based emotional task with AI agent interaction | MDD group showed: EEG theta/beta ratio ↑, saccade counts ↓, fixation duration ↑, HRV LF/HF ratio ↑; SVM classification accuracy: 81.7% (AUC 0.921) | All group differences: p < 0.05 [1] |
| Emotional Equivalence Study (2025) [18] | Not specified | Comparison of identical spaces in VR vs. real world | Real-world: associated with comfort/preference; VR: evoked higher arousal impressions; EEG: elevated beta/alpha ratios in VR | Physiological measures showed consistent differences [18] |
| Cognitive Performance Study (2023) [19] | 41 older adults (mean age 62.8) | 4 Enhance VR games assessing memory, attention, flexibility | VR environments demonstrated high tolerance and usability; No significant correlation with traditional pen-and-paper tests | Hardware well-tolerated even by VR-naive participants [19] |

Detailed Experimental Protocols and Methodologies

VR-Based Emotional Task for Adolescent Depression Screening

The study by Wu et al. (2025) developed a comprehensive protocol for assessing major depressive disorder (MDD) in adolescents using VR-integrated multimodal sensing [1]. The experimental design involved:

- Participant Recruitment: 51 adolescents diagnosed with first-episode MDD according to DSM-5 criteria and 64 healthy controls recruited through a school-based screening program [1].

- VR Environment: A custom-developed immersive scenario using the A-Frame framework, featuring a magical forest lakeside panoramic background with an AI agent named "Xuyu" for interactive dialogue [1].

- Task Structure: A 10-minute structured interaction consisting of: (1) Introduction (1 minute), (2) Immersive Relaxation (5 minutes), (3) Supportive Interaction (3 minutes), and (4) Conclusion (1 minute) [1].

- Physiological Monitoring: Real-time data collection using the BIOPAC MP160 system for EEG, ECG, and ocular motility, with a See A8 portable telemetric ophthalmoscope for eye-tracking [1].

- Data Synchronization: LabStreamingLayer (LSL) technology for aligning multimodal physiological data with VR task events [1].
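Once all streams share one clock, for example LSL timestamps after clock-offset correction, each physiological sample can be tagged with the task phase in which it occurred. The sketch below encodes the 1/5/3/1-minute structure described above. It assumes the task-onset time is taken from a VR event marker. It works on plain timestamps and does not call the pylsl API. The function names are hypothetical.

```python
from bisect import bisect_right

# Phase durations in seconds, from the 10-minute task structure described above.
PHASES = [
    ("Introduction", 60),
    ("Immersive Relaxation", 300),
    ("Supportive Interaction", 180),
    ("Conclusion", 60),
]


def phase_ends(phases):
    """Cumulative end time (s) of each phase, relative to task onset."""
    ends, t = [], 0
    for _, duration in phases:
        t += duration
        ends.append(t)
    return ends


def label_samples(timestamps, task_onset, phases=PHASES):
    """Map each sample timestamp (shared clock, seconds) to a phase name or None."""
    ends = phase_ends(phases)
    labels = []
    for ts in timestamps:
        rel = ts - task_onset
        if rel < 0 or rel >= ends[-1]:
            labels.append(None)  # sample falls outside the task window
        else:
            # Phase intervals are [start, end); bisect_right picks the phase containing rel.
            labels.append(phases[bisect_right(ends, rel)][0])
    return labels


print(label_samples([1000.0, 1070.5, 1400.0, 1599.0, 1600.0], task_onset=1000.0))
```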

This protocol successfully identified robust physiological biomarkers, including significantly higher EEG theta/beta ratios, reduced saccade counts, longer fixation durations, and elevated HRV LF/HF ratios in adolescents with MDD compared to healthy controls [1].
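The EEG theta/beta ratio is the spectral power in the theta band divided by the power in the beta band. The sketch below uses a naive discrete Fourier transform so it stays dependency-free. It assumes conventional band limits of 4-8 Hz for theta and 13-30 Hz for beta, which vary between studies, and it runs on a synthetic single-channel signal. Real pipelines usually apply artifact rejection and a windowed estimator such as Welch's method (for example, `scipy.signal.welch`).

```python
import cmath
import math


def band_power(signal, fs, lo, hi):
    """Sum of periodogram power over DFT bins with frequency in [lo, hi) Hz.

    Uses a naive O(N^2) DFT for clarity; only non-negative frequencies are included.
    """
    n = len(signal)
    power = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if lo <= freq < hi:
            x_k = sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            power += abs(x_k) ** 2
    return power


def theta_beta_ratio(signal, fs, theta=(4.0, 8.0), beta=(13.0, 30.0)):
    """Theta-band power divided by beta-band power (band limits are assumptions)."""
    return band_power(signal, fs, *theta) / band_power(signal, fs, *beta)


# Synthetic 2-s signal at 128 Hz: a 6 Hz (theta) sine plus a weaker 20 Hz (beta) sine.
fs, n = 128, 256
sig = [math.sin(2 * math.pi * 6 * t / fs) + 0.5 * math.sin(2 * math.pi * 20 * t / fs)
       for t in range(n)]
print(round(theta_beta_ratio(sig, fs), 3))
```

Both test frequencies complete a whole number of cycles in the window, so no spectral leakage occurs. The ratio therefore equals the squared amplitude ratio, (1 / 0.5)^2.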

Emotional Equivalence Protocol: VR vs. Real Environments

A critical methodological approach for validating VR biomarkers involves direct comparison with real-world environments [18]. The pilot investigation into emotional equivalence employed:

- Experimental Design: Construction of identically designed VR and real spaces to enable direct comparison of emotional responses [18].

- Assessment Methods: Combination of subjective evaluations (Semantic Differential method) and physiological indices (EEG and HRV) [18].

- Baseline Estimation: Implementation of two specialized methods for determining physiological baselines: the Pre-Stimulus Averaging Method and the Resting-State Prediction Method to account for habituation effects during VR viewing [20].

- Controlled Conditions: Careful matching of visual stimuli between VR and real conditions while controlling for non-visual environmental factors such as sound and scent [18].
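The two baseline methods are only named here. The sketch below reads the Pre-Stimulus Averaging Method the way its name suggests: average a physiological index over a window just before stimulus onset, then subtract that average from every sample. This is an assumption about the method, and the 30-second window is hypothetical. The Resting-State Prediction Method is not sketched because its details are not given here.

```python
from statistics import mean


def baseline_correct(values, times, stim_onset, window=30.0):
    """Subtract the mean of samples in [stim_onset - window, stim_onset).

    values: a physiological index (e.g., beta/alpha ratio) sampled at `times` (s).
    The pre-stimulus window length is a hypothetical parameter.
    """
    baseline = [v for v, t in zip(values, times) if stim_onset - window <= t < stim_onset]
    if not baseline:
        raise ValueError("no samples in the pre-stimulus window")
    reference = mean(baseline)
    return [v - reference for v in values]


# Hypothetical index sampled every 10 s; VR viewing starts at t = 30 s.
print(baseline_correct([1.0, 1.2, 1.1, 1.5, 1.6, 1.4], [0, 10, 20, 30, 40, 50], stim_onset=30))
```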

This protocol revealed that real-world environments were associated with impressions of comfort and preference, whereas VR environments evoked impressions characterized by heightened arousal, with elevated beta wave activity and increased beta/alpha ratios observed in the VR condition [18].

Diagram 1: Experimental workflow for VR-real world emotional equivalence studies

Cognitive Assessment Protocols in Virtual Environments

VR-based cognitive assessment represents another significant application in mental health research. The Enhance VR study protocol demonstrates this approach [19]:

- Participant Profile: 41 older adults (mean age 62.8 years) without neurodegenerative or psychiatric disorders [19].

- Hardware: Meta Quest (Oculus VR) standalone headset with two controllers for interaction [19].

- VR Cognitive Tasks: Four specific gamified exercises based on validated neuropsychological principles:

- Magic Deck: Inspired by Paired Associates Learning test for memory assessment [19].

- Memory Wall: Motivated by Visual Pattern Test for short-term memory evaluation [19].

- Pizza Builder: Inspired by divided attention assessments requiring simultaneous task management [19].

- React: Based on Wisconsin Card Sorting Task and Stroop test for cognitive flexibility [19].

- Comparison Methodology: Random assignment to either traditional neuropsychological testing or VR assessment sessions on different days to compare assessment methodologies [19].

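This protocol demonstrated that VR-based cognitive assessment was very well tolerated, intuitive, and accessible even to participants with no prior VR experience, which supports the feasibility of VR environments for neuropsychological evaluation [19]. However, VR scores did not correlate significantly with traditional pen-and-paper tests, so their convergent validity against conventional measures remains to be established [19].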

Signaling Pathways and Neurophysiological Logical Framework

The relationship between VR stimulation, physiological responses, and clinical applications follows a logical pathway that can be mapped to validate VR biomarkers for mental health research.

Diagram 2: Neurophysiological pathways linking VR stimulation to clinical applications

The logical framework demonstrates how controlled VR stimuli elicit responses across both central and autonomic nervous systems, generating measurable biomarkers that can be leveraged for various clinical applications in mental health research [1] [18] [21]. The EEG theta/beta ratio has been specifically associated with depression severity, while HRV LF/HF ratios reflect autonomic dysregulation linked to psychiatric conditions [1]. Eye-tracking metrics provide behavioral indicators of attentional patterns characteristic of mental disorders [1].
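Saccade counts and fixation durations are typically derived by classifying raw gaze samples into events. One widely used approach is velocity-threshold identification (I-VT), sketched below. Several details are assumptions rather than the pipeline used in [1]:

- gaze is given as horizontal and vertical angles in degrees;
- angular velocity is approximated with a Euclidean step, which is reasonable for small angles;
- the threshold is 30°/s, a common default.

Production pipelines typically also filter noise and impose a minimum fixation duration.

```python
import math


def ivt_events(x_deg, y_deg, fs, velocity_threshold=30.0):
    """Velocity-threshold (I-VT) event detection on gaze angles in degrees.

    Returns (saccade_count, fixation_durations_s). A saccade is a run of
    inter-sample intervals whose angular velocity exceeds the threshold;
    every other run of intervals is treated as a fixation.
    """
    flags = []
    for i in range(1, len(x_deg)):
        step = math.hypot(x_deg[i] - x_deg[i - 1], y_deg[i] - y_deg[i - 1])
        flags.append(step * fs > velocity_threshold)  # velocity in deg/s above threshold?

    saccade_count, fixation_durations, run = 0, [], 0
    prev_saccade = False
    for is_saccade in flags:
        if is_saccade:
            if not prev_saccade:
                saccade_count += 1  # rising edge: a new saccade begins
            if run:
                fixation_durations.append(run / fs)  # close the preceding fixation
                run = 0
        else:
            run += 1  # extend the current fixation by one sample interval
        prev_saccade = is_saccade
    if run:
        fixation_durations.append(run / fs)
    return saccade_count, fixation_durations


# Synthetic 100 Hz trace: fixate at 0°, jump to 10° over two samples, fixate again.
x = [0.0] * 10 + [5.0] + [10.0] * 10
y = [0.0] * len(x)
print(ivt_events(x, y, fs=100))
```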

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 3: Essential Research Tools for VR Neurophysiology Studies

| Tool Category | Specific Products/Technologies | Key Functions | Research Applications |
|---|---|---|---|
| VR Hardware Platforms | Meta Quest (Oculus VR) [19]; HTC Vive [17]; Head-Mounted Displays (HMDs) [21] | Create immersive virtual environments; Enable user interaction through controllers | Cognitive assessment [19]; Mindfulness interventions [21]; Emotional task delivery [1] |
| Physiological Data Acquisition Systems | BIOPAC MP160 system [1]; Portable telemetric ophthalmoscope (See A8) [1] | Record EEG, ECG, ocular motility; Capture eye-tracking data | Multimodal sensing during VR tasks [1]; Real-time biosignal collection [1] |
| VR Development Frameworks | A-Frame framework [1]; Virtools Dev software [17] | Develop custom VR environments; Create interactive 3D scenarios | Building controlled experimental paradigms [1]; Designing ecologically valid scenarios [17] |
| Data Synchronization Solutions | LabStreamingLayer (LSL) [1] | Align multimodal physiological data with VR events; Ensure temporal precision | Multimodal data integration [1]; Time-locked analysis of responses [1] |
| Analysis & Machine Learning Tools | Support Vector Machine (SVM) [1]; Statistical analysis packages (R, Python) | Classify MDD status based on features; Identify significant biomarker differences | Developing diagnostic models [1]; Analyzing physiological patterns [1] |

The integration of VR with multimodal physiological monitoring represents a transformative approach in mental health research, offering objective biomarkers that complement traditional subjective assessments. Experimental evidence demonstrates that VR-based paradigms can successfully identify robust neurophysiological signatures of mental health conditions, with EEG, HRV, and eye-tracking metrics showing consistent differentiation between clinical populations and healthy controls [1]. The strong classification performance of machine learning models applied to these multimodal features (81.7% accuracy for MDD with AUC of 0.921) underscores the clinical potential of this approach [1].
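Accuracy and AUC summarize different things. Accuracy depends on a single decision threshold. AUC is threshold-free: it equals the probability that a randomly chosen patient receives a higher classifier score than a randomly chosen control. The sketch below assumes each participant has a binary label (1 = MDD) and a real-valued score. For an SVM, that score could be the output of scikit-learn's `SVC.decision_function`, with threshold 0 as the decision boundary. The labels and scores are hypothetical. In a real study, both metrics should be computed on held-out cross-validation folds.

```python
def accuracy(labels, scores, threshold=0.0):
    """Fraction of participants whose thresholded score matches their label."""
    preds = [1 if s >= threshold else 0 for s in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def roc_auc(labels, scores):
    """AUC via the Mann-Whitney formulation: P(score_pos > score_neg), ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical decision-function scores for 3 patients (1) and 3 controls (0).
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.4, -0.2, 0.1, -0.5, -0.8]
print(accuracy(labels, scores), roc_auc(labels, scores))
```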

Future research directions should address several key challenges, including the need for standardized VR protocols specifically tailored for mental health assessment [1], refinement of baseline estimation methods for physiological data [20], and larger-scale validation studies across diverse populations. Additionally, further investigation is needed to establish the emotional equivalence between VR and real-world environments, as current research indicates measurable differences in arousal states and physiological responses [18]. As the field advances, the translation of these VR biomarker platforms into wearable or mobile systems promises to enhance the scalability and accessibility of objective mental health screening, potentially revolutionizing early detection and intervention strategies for psychiatric disorders [1].

The rising global prevalence of Alzheimer's disease underscores the critical need for accessible early screening of its prodromal stage, mild cognitive impairment (MCI). Virtual Kiosk Tests (VKTs) represent an emerging class of digital biomarkers that leverage immersive virtual reality (VR) to assess instrumental activities of daily living (IADL). This guide compares the performance of VKTs against traditional screening tools and other biomarker-based approaches, synthesizing experimental data relevant to validating VR-based biomarkers for mental disorders research. Evidence indicates that VKTs achieve high diagnostic accuracy, offer superior ecological validity, and integrate effectively into multimodal screening frameworks, making them a compelling tool for researchers and drug development professionals.

Mild cognitive impairment (MCI), particularly the amnestic subtype (aMCI), is a transitional stage between healthy aging and Alzheimer's disease (AD), with approximately 80% of individuals eventually progressing to AD [22]. Early detection is paramount, as it represents a critical window for interventions that may slow progression or even restore cognitive function [23] [3]. Traditional screening tools face significant limitations:

- Brief Cognitive Tests: Tools like the Montreal Cognitive Assessment (MoCA) are common but can lack the sensitivity to detect subtle, early deficits [24] [25].

- Conventional Biomarkers: Neuroimaging (MRI, PET) and cerebrospinal fluid analysis, while valuable, are often expensive, invasive, and lack feasibility for widespread, repeated screening [23] [25].

- Neuropsychological Batteries: Although comprehensive, these are time-consuming and can suffer from issues like ceiling effects and poor ecological validity, meaning they poorly reflect real-world cognitive challenges [3] [24].

Virtual Kiosk Tests address these gaps by using immersive VR to simulate a common IADL—ordering food at a self-service kiosk. This approach captures ecologically valid behavioral data in a standardized, controlled environment [23].

Virtual Kiosk Test: Protocol & Measured Biomarkers

Core Experimental Protocol

The VKT methodology is standardized to ensure reproducibility and reliable data collection across participants. The following workflow outlines the key stages of a typical VKT experiment, from participant preparation to data analysis.

Participant Preparation: Participants are typically recruited from memory clinics and diagnosed according to established criteria (e.g., Petersen criteria) by experienced neurologists [23] [22]. Key inclusion criteria often include being over 50 years old and having normal sensory perception [23].

VR Setup: Participants sit on a chair for safety and use a head-mounted display (e.g., HTC Vive Pro Eye) and a hand controller. Eye movements are tracked via sensors in the HMD, and hand movements are tracked using base stations [23] [22].

Task Execution: Participants are instructed to memorize a complex order (e.g., "Order a shrimp burger, cheese sticks, and a Coca-Cola using a credit card with password 6289") [22]. They then perform the ordering task in the virtual environment, which involves multiple steps such as selecting food items, choosing a payment method, and entering a PIN [23] [22].

Key Digital Biomarkers Captured

The VKT generates quantitative, objective metrics across several behavioral domains:

1. Hand Movement Kinematics

- Hand Movement Speed: Slower average speed is indicative of MCI [23].

- 3D Trajectory Length: Longer, less efficient movement paths are associated with cognitive impairment [24].

2. Eye Movement Metrics

- Proportion of Fixation Duration: Lower percentage of time fixated on target menu items suggests attentional deficits [23].

- Scanpath Length: Longer total gaze distance indicates less efficient visual search strategies [22].

3. Task Performance Metrics

- Time to Completion: Longer total time to complete the task [23].

- Number of Errors: Higher error counts (e.g., incorrect selections) [23].

- Hesitation Latency: Delays in initiating actions, reflecting executive dysfunction [24].
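Several of the kinematic and gaze metrics above reduce to simple geometry on logged samples. The sketch below makes these assumptions about the logs:

- hand-controller positions are (x, y, z) tuples with timestamps in seconds;
- gaze points are 2D coordinates on the menu plane;
- each gaze sample records the ID of the object hit by the gaze ray.

All function names and item IDs are hypothetical. The fixation measure is a simplified, sample-based approximation that assumes uniform sampling; it is not necessarily the definition used in [23].

```python
import math


def path_length(points):
    """Total Euclidean length of a polyline of (x, y) or (x, y, z) samples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def mean_speed(points, timestamps):
    """Path length divided by elapsed time (distance units per second)."""
    return path_length(points) / (timestamps[-1] - timestamps[0])


def fixation_proportion(gaze_hits, target_ids):
    """Fraction of (uniformly sampled) gaze samples landing on target menu items."""
    return sum(hit in target_ids for hit in gaze_hits) / len(gaze_hits)


# Hypothetical logs.
hand = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]  # 3D trajectory
hand_t = [0.0, 1.0, 2.0]
gaze_xy = [(0.0, 0.0), (0.3, 0.4), (0.3, 1.4)]                 # scanpath on the menu plane
gaze_hits = ["burger", "menu_bg", "burger", "cola", None]

print(path_length(hand), mean_speed(hand, hand_t))     # trajectory length, mean speed
print(path_length(gaze_xy))                             # scanpath length
print(fixation_proportion(gaze_hits, {"burger", "cola"}))
```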

Performance Comparison: VKTs vs. Alternative Modalities

The following tables synthesize quantitative data from recent studies, comparing the diagnostic performance of VKTs against other screening methods and biomarkers.

Table 1: Comparative Diagnostic Accuracy for MCI Detection

| Screening Method | Reported Accuracy | Reported Sensitivity | Reported Specificity | Key Strengths | Key Limitations |
|---|---|---|---|---|---|
| Virtual Kiosk Test (VKT) | 93.3% [23] | 100% [23] [3] | 90.9% [3] | High ecological validity, cost-effective, short test duration (5-15 mins) [23] | Requires VR equipment, potential for cybersickness |
| VKT + EEG-SSVEP | 98.38% [22] | - | - | Provides linked behavioral & neurological insight [22] | Complex setup, requires specialized EEG equipment & expertise |
| VKT + MRI Biomarkers | 94.4% [3] | 100% [3] | 90.9% [3] | Multimodal validation, links behavior to structural brain changes [3] | High cost of MRI, less accessible for routine screening |
| VR Stroop Test (VRST) | AUC: 0.981 [24] | - | - | Excellent discriminant power, high construct validity [24] | Assesses specific cognitive domain (executive function) |
| MoCA (Traditional Tool) | AUC: 0.962 [24] | Lower than VR [25] | Lower than VR [25] | Widespread use, fast administration [25] | Lower sensitivity for early MCI, lacks ecological validity [24] [25] |
| MRI Biomarkers (Unimodal) | - | 90.9% [3] | 71.4% [3] | High sensitivity, quantifies brain structure [3] | Low specificity, high cost, unsuitable for frequent monitoring [3] |
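The accuracy, sensitivity, and specificity columns all come from the same 2x2 confusion matrix. The sketch below uses hypothetical counts, not data from the cited studies. Accuracy is a prevalence-weighted mix of sensitivity and specificity, so it also depends on the ratio of MCI patients to controls in each sample.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity, and accuracy from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)               # MCI cases correctly flagged
    specificity = tn / (tn + fp)               # healthy controls correctly cleared
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


# Hypothetical screening result: 10 MCI patients, 20 healthy controls.
print(diagnostic_metrics(tp=9, fn=1, tn=16, fp=4))
```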

Table 2: Statistical Significance of Key VKT Biomarkers (MCI vs. Healthy Controls)

| Digital Biomarker | Statistical Result | P-Value | Cognitive Domain Assessed |
|---|---|---|---|
| Hand Movement Speed | t(49) = 3.45 | P = .004 [23] | Psychomotor speed, executive function |
| Proportion of Fixation Duration | t(49) = 2.69 | P = .04 [23] | Attention, visual search efficiency |
| Time to Completion | t(49) = -3.44 | P = .004 [23] | Processing speed, task efficiency |
| Number of Errors | t(49) = -3.77 | P = .001 [23] | Memory, executive function |
| 3D Hand Trajectory Length | Highest AUC = 0.981 [24] | P < .001 (implied) | Motor planning, executive control |
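The t statistics in Table 2 are reported with 49 degrees of freedom. This is consistent with a pooled-variance (Student) independent-samples t-test on 51 participants in total, since df = n1 + n2 - 2. That reading is an inference from the reported df, not a description of the analysis in [23]. The sketch computes only the statistic. A two-sided p-value needs the t distribution, for example via `scipy.stats.ttest_ind(a, b, equal_var=True)`.

```python
import math
from statistics import mean, variance


def student_t(group_a, group_b):
    """Pooled-variance independent-samples t statistic and its degrees of freedom."""
    n1, n2 = len(group_a), len(group_b)
    # Pooled estimate of the common variance (sample variances use n - 1).
    pooled = ((n1 - 1) * variance(group_a) + (n2 - 1) * variance(group_b)) / (n1 + n2 - 2)
    t = (mean(group_a) - mean(group_b)) / math.sqrt(pooled * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


# Hypothetical task-completion times (s): controls vs. MCI.
print(student_t([1, 2, 3], [4, 5, 6]))
```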

A meta-analysis of 29 studies on VR for MCI detection found that VR-based assessments have a pooled sensitivity of 0.883 and specificity of 0.887, confirming the robust performance of this approach across various implementations [25].
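Diagnostic-accuracy meta-analyses commonly pool sensitivity and specificity jointly with a bivariate random-effects model. The sketch below is a deliberate simplification for illustration. It shows univariate, fixed-effect, inverse-variance pooling of one proportion (here, sensitivity) on the logit scale. It applies a continuity correction of 0.5 to every study, which is one of several conventions. The counts are hypothetical, and the sketch does not reproduce the pooled estimates reported in [25].

```python
import math


def pooled_logit_proportion(events, totals, cc=0.5):
    """Fixed-effect inverse-variance pooling of proportions on the logit scale.

    events[i] / totals[i] is study i's sensitivity, i.e. TP / (TP + FN).
    A continuity correction `cc` is added to both cells to avoid log(0).
    """
    num = den = 0.0
    for e, n in zip(events, totals):
        a, b = e + cc, (n - e) + cc          # corrected TP and FN counts
        logit = math.log(a / b)
        weight = 1.0 / (1.0 / a + 1.0 / b)   # inverse of the approximate logit variance
        num += weight * logit
        den += weight
    return 1.0 / (1.0 + math.exp(-num / den))  # back-transform to a proportion


# Hypothetical studies: TP counts and numbers of MCI cases.
print(round(pooled_logit_proportion([18, 27, 40], [20, 30, 46]), 3))
```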

The Scientist's Toolkit: Essential Research Reagents & Materials

Implementing a Virtual Kiosk Test in a research setting requires specific hardware and software components. The following table details the essential solutions and their functions.

Table 3: Key Research Reagents and Solutions for VKT Implementation

| Item Name / Category | Example Model / Software | Primary Function in Protocol |
|---|---|---|
| Head-Mounted Display (HMD) | HTC Vive Pro Eye [23] [22] | Presents the virtual environment; integrated eye-tracking enables collection of gaze metrics. |
| Hand Motion Controller | HTC Vive Controller [22] [24] | Tracks hand movement kinematics (speed, trajectory) during task interaction. |
| Position Tracking System | HTC Vive Base Stations [23] [22] | Provides precise spatial tracking of the HMD and controller within the physical space. |
| Software & Game Engine | Unity [24] | Platform for developing, rendering, and running the interactive virtual kiosk environment. |
| Data Processing & Analysis | Custom Python/MATLAB scripts; SVM classifiers [23] [3] | For processing time-series data, extracting features, and building classification models. |
| Performance Validation | Seoul Neuropsychological Screening Battery (SNSB-C) [23] [3] | Gold-standard neuropsychological test used for participant diagnosis and correlational validation. |

Integration in a Multimodal Biomarker Framework

VKTs are most powerful when integrated into a broader biomarker strategy. Research shows that VKT performance correlates with both neurological and neuropsychological measures, establishing its construct validity.

- Correlation with Brain Structure: A multimodal study found a significant correlation between impaired VKT performance and brain atrophy in memory-related regions like the hippocampus and entorhinal cortex, measured via MRI [3]. This links behavioral deficits observed in VR to their underlying neurological substrates.

- Correlation with Neural Function: Integrating VKT with EEG-SSVEP has demonstrated a relationship between behavioral impairments and a compromised dorsal stream of the visual pathway, which governs behavioral responses to visual stimuli [22].

- Correlation with Cognitive Domains: VKT features (e.g., time to completion, errors) show significant correlations with standardized tests of executive function, attention, and memory [23] [24].

The following diagram illustrates the convergent validity of the VKT and its position within a multimodal assessment framework.

Discussion and Future Directions

Virtual Kiosk Tests demonstrate a compelling balance of high diagnostic accuracy, ecological validity, and practical feasibility for early MCI screening. The synthesized data shows that VKTs consistently outperform traditional brief cognitive screens and can serve as a specific, cost-effective tool for population-level screening prior to more invasive and expensive confirmatory biomarker tests [3] [25].

For researchers and drug development professionals, VKTs offer a reliable digital biomarker for enriching clinical trial cohorts with early MCI patients and for providing sensitive, objective outcome measures to track intervention effects. Future work should focus on standardizing protocols across sites, validating VKTs in more diverse populations, and further exploring their predictive value for conversion from MCI to Alzheimer's dementia. The integration of VKTs with other digital data streams, such as passive smartphone monitoring, presents a promising avenue for continuous, real-world cognitive assessment.

Building and Deploying VR Biomarker Assays: From Lab to Clinical Trial

Designing Ecologically Valid VR Environments for Specific Disorders

Virtual Reality (VR) has emerged as a transformative tool in mental health research and treatment development, particularly for its potential to create controlled yet ecologically valid assessment and intervention environments. Ecological validity refers to the extent to which laboratory findings generalize to real-world settings, encompassing both verisimilitude (the degree to which test demands resemble everyday life demands) and veridicality (the empirical relationship between test performance and real-world functioning) [26]. For researchers and pharmaceutical developers targeting specific disorders, designing VR environments with strong ecological validity is crucial for generating clinically meaningful biomarkers and treatment outcomes that translate beyond laboratory settings.

The tension between experimental control and real-world relevance has long challenged clinical neuroscience [26]. Traditional paper-and-pencil neuropsychological tests often assess cognitive constructs without clear connections to daily functioning [26]. VR technology offers a resolution to this dilemma by enabling precise stimulus control within simulations that closely mimic real-world challenges faced by people with mental disorders [27] [26]. This capacity for creating standardized yet ecologically relevant environments makes VR particularly valuable for developing digital biomarkers that can sensitively measure treatment effects in clinical trials.

Theoretical Framework: Core Principles of Ecologically Valid VR Design

Key Dimensions of Ecological Validity

| Dimension | Definition | Research Application | Clinical Relevance |
|---|---|---|---|
| Verisimilitude | Similarity between task demands in VR and everyday life [26] | Designing supermarket shopping tasks for ADHD assessment [27] | Predicts real-world functional capacity |
| Veridicality | Empirical relationship between VR performance and real-world functioning [26] | Correlating VR attention measures with academic performance [28] | Validates biomarkers for treatment outcome prediction |
| Personal Relevance | Match between VR scenario and patient-specific challenges | Customizing social scenarios for social anxiety disorder | Enhances engagement and treatment generalization |
| Dynamic Complexity | Incorporation of multi-sensory, unpredictable elements | Adding distractors to continuous performance tests [28] | Captures real-world cognitive challenges |

Critical Technical Elements for Enhancing Ecological Validity

Several technical and design elements collectively contribute to the ecological validity of VR environments for mental health applications:

Immersion Level: Higher immersion through head-mounted displays (HMDs) enhances the feeling of presence, though both immersive and non-immersive systems have applications depending on the target disorder and assessment goals [27]. HMDs were perceived as more immersive than cylindrical room-scale VR in audio-visual research, though both showed ecological validity for perceptual parameters [29].

Naturalistic Interaction: Interfaces that allow users to employ their own body movements (rather than keyboards or joysticks) facilitate more comparable performance between gamers and non-gamers, making assessments more applicable to broader populations [27].

Contextual Embedding: Placing cognitive tasks within emotionally engaging narratives or familiar real-world contexts enhances affective experience and social interactions, making responses more representative of real-world behavior [26].

Multi-sensory Integration: Incorporating visual, auditory, and even haptic cues that mirror real-world experiences strengthens the illusion of reality and elicits more naturalistic responses [29].

Disorder-Specific VR Environment Design: Applications and Evidence

Psychotic Disorders: Simulating Subjective Experiences

VR interventions for psychotic disorders have primarily focused on fostering empathy and reducing stigma among healthcare professionals, while also showing promise for assessment and rehabilitation.

A randomized controlled trial with 180 mental health professionals demonstrated that a VR intervention simulating auditory hallucinations (e.g., hearing voices saying "die" and "poison") and visual hallucinations (e.g., floating items, shadow-like figures) in a home environment significantly improved attitudes and reduced stigma toward people with psychotic disorders [9]. The intervention, delivered via head-mounted display and lasting approximately 7 minutes, presented increasing frequency of negative auditory content corresponding with visual hallucinations, culminating in suicidal ideation voices [9].

Experimental Protocol: The VR environment was built in Unreal Engine and was based on a systematic review of effective stigma-reduction elements [9]. Clinical input and peer specialist feedback ensured authentic representation of psychotic experiences. The control group experienced the same virtual home environment without hallucination simulations.

ADHD and Attention Disorders: Environmental Distraction Modulation

VR continuous performance tests (CPTs) have addressed ecological validity limitations of traditional attention assessments by incorporating real-world distractors. The "Pay Attention!" program exemplifies this approach with four key design innovations [28]:

- Multiple Real-World Scenarios: Four familiar environments (room, library, outdoors, café) instead of artificial laboratory settings

- Graduated Difficulty Levels: Four distinct difficulty levels varying in distraction intensity, stimulus complexity, and inter-stimulus intervals

- Home-Based Assessment: Deployment in naturalistic home settings rather than clinical laboratories

- Extended Assessment Period: Multiple testing sessions over time to capture intra-individual variability

Experimental Protocol: A feasibility study with 20 Korean adults implemented 12 blocks of testing over two weeks. Each block presented CPT tasks within the different environmental contexts with varying distraction levels. Performance metrics (commission errors, omission errors, reaction time variability) were tracked alongside psychological assessments and EEG measurements [28].
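Commission errors, omission errors, and reaction-time variability can all be scored from per-trial logs. The sketch below assumes a go/no-go style CPT in which participants should respond only to targets. It also assumes a hypothetical trial format of `(is_target, responded, rt_seconds)`. The actual scoring rules of "Pay Attention!" are not described here, so this is an illustration of the general metrics only.

```python
from statistics import mean, stdev


def score_cpt(trials):
    """Score a go/no-go style CPT.

    trials: list of (is_target, responded, rt_seconds_or_None).
    Commission error = response to a non-target; omission error = missed target.
    Reaction-time statistics use correct target responses (needs at least two hits).
    """
    commissions = sum(1 for target, resp, _ in trials if not target and resp)
    omissions = sum(1 for target, resp, _ in trials if target and not resp)
    hit_rts = [rt for target, resp, rt in trials if target and resp]
    return {
        "commission_errors": commissions,
        "omission_errors": omissions,
        "mean_rt": mean(hit_rts),
        "rt_sd": stdev(hit_rts),  # intra-individual reaction-time variability
    }


# Hypothetical block of six trials.
block = [(True, True, 0.40), (True, True, 0.50), (True, False, None),
         (False, True, 0.30), (False, False, None), (True, True, 0.60)]
print(score_cpt(block))
```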

The results showed that commission errors rose specifically at the "very high" difficulty level, which featured complex stimuli and increased distraction. A significant correlation between overall distraction level and CPT accuracy supports the ecological relevance of the environmental manipulations [28].

Acquired Brain Injury: Activities of Daily Living Simulation

A systematic review of 70 studies on VR for acquired brain injury (ABI) revealed diverse ecological environments targeting real-world functioning [27]. The most common simulations included:

- 12 different kitchen environments for meal preparation tasks

- 11 supermarket scenarios for shopping and navigation challenges

- 10 shopping malls for complex wayfinding and purchasing

- 16 street environments for crossing safety and navigation

- 11 city contexts for large-scale spatial orientation

- 10 other everyday life scenarios for various daily activities

These environments primarily assessed and rehabilitated cognitive functions within the context of activities of daily living (ADLs), addressing the critical need for functional relevance in neurorehabilitation [27]. The ecological approach moves beyond construct-driven assessment to function-led evaluation that directly predicts real-world capabilities.

Quantitative Outcomes: Comparative Efficacy of VR Interventions

Performance Comparison Across Disorders and Environments

| Disorder | VR Environment Type | Key Outcome Measures | Effect Size/Performance | Comparison Condition |
|---|---|---|---|---|
| Psychotic Disorders | Home environment with hallucination simulations [9] | Attitudes, Stigma, Empathy | Significant improvements in attitudes and stigma (p<0.05) | VR control without hallucinations |
| ADHD | "Pay Attention!" with multi-level distractions [28] | Commission Errors, Omission Errors | Significantly higher commission errors at highest difficulty | Traditional CPT without ecological distractors |
| Acquired Brain Injury | Kitchen, supermarket, street scenarios [27] | Functional Independence Measures | Modest evidence for functional improvement | Traditional occupational therapy |
| Medical Education | Various anatomical and procedural trainers [30] | Examination Pass Rates | OR=1.85 (95% CI: 1.32-2.58) | Traditional education methods |
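An odds ratio and its 95% confidence interval can be computed from a single 2x2 table with Woolf's log method, as sketched below. In the education example, "exposed" would be VR training and "outcome" would be passing the examination. The cell counts are hypothetical. If the table's OR is a pooled meta-analytic estimate, it additionally combines such per-study estimates across studies, which is not shown here.

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio with a Woolf (log-scale) confidence interval for a 2x2 table.

    a = exposed with outcome, b = exposed without outcome,
    c = unexposed with outcome, d = unexposed without outcome. All cells must be > 0.
    """
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # SE of log(OR)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper


# Hypothetical counts: 40/50 passed with VR training vs. 20/40 with traditional teaching.
print(odds_ratio_ci(40, 10, 20, 20))
```

The interval is symmetric around the OR on the log scale, not on the raw scale, which is why reported CIs such as 1.32-2.58 sit asymmetrically around the point estimate.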

Ecological Validity Metrics Across VR Systems

| VR System Type | Perceptual Validity | Psychological Restoration | Physiological Response | Realism Rating |
|---|---|---|---|---|
| Head-Mounted Display (HMD) | High ecological validity [29] | Moderate accuracy vs. real-world [29] | Valid for EEG change metrics [29] | Higher immersion [29] |
| Cylindrical Room-Scale VR | High ecological validity [29] | Slightly better accuracy than HMD [29] | More accurate for EEG time-domain features [29] | Lower immersion [29] |
| Computer Screen VR | Moderate ecological validity [27] | Not systematically assessed | Limited physiological engagement | Lower presence [27] |
| CAVE Systems | Limited research available [29] | Limited research available | Limited research available | Limited research available |

Methodological Considerations for VR Biomarker Development

Experimental Design Workflow for VR Environment Validation

Research Reagent Solutions: Essential Materials for VR Experimentation

| Research Tool | Function | Example Application | Technical Specifications |
|---|---|---|---|
| Head-Mounted Displays (HMDs) | Create immersive visual experience | Hallucination simulation for psychosis [9] | Varying levels of immersion and field of view |
| Game Engines (Unreal Engine) | Develop interactive 3D environments | Creating home environment for psychosis simulation [9] | Real-time rendering capabilities |
| Physiological Monitors (EEG, HR) | Objective arousal and cognitive load measurement | Attention monitoring during VR CPT [28] | Synchronization with VR presentation |
| Virtual Environment Libraries | Standardized scenario repositories | Kitchen, supermarket, street scenarios for ABI [27] | Customization capacity for specific disorders |
| Cybersickness Assessment Tools | Measure VR-induced discomfort | Essential for ABI populations with higher susceptibility [27] | Multiple symptom dimensions |

The development of ecologically valid VR environments for specific disorders represents a promising pathway for creating clinically meaningful digital biomarkers in mental health research and pharmaceutical development. Current evidence demonstrates that VR can effectively bridge the gap between laboratory control and real-world relevance across multiple disorders, including psychotic disorders, ADHD, and acquired brain injury.

Key design principles emerging from the research include:

- Disorder-specific environmental relevance matching particular functional challenges

- Graduated difficulty and distraction levels to avoid ceiling and floor effects

- Multi-sensory integration enhancing realism and engagement

- Naturalistic interaction modalities reducing technological barriers

Future research should address current limitations, including standardization of outcome measures, development of normative profiles across different populations, and systematic assessment of cybersickness particularly in vulnerable clinical groups [27]. Additionally, further validation studies comparing VR measures with real-world functioning across different disorders will strengthen the ecological validity of these approaches.

For pharmaceutical researchers, VR environments offer the potential for sensitive, ecologically relevant biomarkers that can detect subtle treatment effects and predict real-world functional outcomes. The continued refinement of these virtual environments holds significant promise for enhancing the validity and clinical utility of mental health intervention research.

The quest for objective biomarkers in mental health research is increasingly turning to immersive technologies like virtual reality (VR) combined with sophisticated multimodal data fusion. This approach integrates diverse neurophysiological and behavioral data streams—eye-tracking, electroencephalography (EEG), heart rate variability (HRV), and motion data—to capture the complex dynamics of brain function and behavior that underlie psychiatric disorders [31]. In the era of big data, where vast amounts of information are generated at unprecedented rates, innovative data-driven fusion methods are essential for integrating diverse perspectives to extract meaningful insights and achieve a more comprehensive understanding of complex psychiatric conditions [32]. Traditional separate analysis of each data modality may only reveal partial insights or miss important correlations between different data types, whereas multimodal fusion enables researchers to uncover hidden patterns and relationships that would otherwise remain undetected [32].

The validation of VR biomarkers represents a paradigm shift from subjective symptom reporting toward biologically grounded, objective diagnostic tools. This is particularly important in conditions such as major depressive disorder (MDD), where current diagnostic approaches rely predominantly on symptom checklists and clinical interviews that lack biological grounding and are susceptible to subjectivity [31]. Empirical research indicates that over 50% of depression cases are either misdiagnosed or overlooked, significantly compromising treatment effectiveness [31]. Multimodal fusion approaches allow researchers to incorporate multiple factors, including genetics, environment, cognition, and treatment outcomes, across various brain disorders, potentially uncovering subtle abnormalities or biomarkers that could inform targeted treatments and personalized medical interventions [32].

Experimental Approaches in Multimodal Data Collection

VR-Integrated Experimental Protocols

Recent studies have developed protocols for collecting multimodal data within controlled yet ecologically valid VR environments. One case-control study on adolescent depression screening used a 10-minute VR-based emotional task in which participants held interactive dialogues with an AI agent named "Xuyu" while physiological data were collected in real time [31]. The VR environment depicted a panoramic magical forest by a lakeside, providing a standardized yet immersive context for emotional exploration. During the session, participants discussed their personal worries, distress, and hopes for the future, which provided behavioral context for the simultaneously acquired physiological measurements [31].

Another approach examined visuomotor integration using a complex aircraft identification scenario. This protocol simultaneously recorded EEG (34 electrodes), functional near-infrared spectroscopy (fNIRS; 44 channels covering frontal and parietal cortex), eye movements, and manual joystick responses [33]. The experiment consisted of six blocks, each containing both easy tasks (fixed target positions) and hard tasks (random target locations), so that cognitive load and attentional processes could be compared across difficulty levels. Recording implicit behavior (eye movements) alongside explicit motor responses made it possible to examine how cognitive processes unfold over time [33].
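Protocols like this one record streams at very different sampling rates, so a common first step is to place them on a shared clock before fusion. The sketch below is a minimal illustration, assuming each stream is a 1-D array with its own timestamps in seconds; the function name `align_streams` and the sampling rates in the example are hypothetical, not taken from [33]. For high-rate signals such as EEG, a real pipeline would normally low-pass filter or summarize the signal into features before resampling to a low common rate, to avoid aliasing.

```python
import numpy as np

def align_streams(streams, fs_out, t_start, t_stop):
    """Resample several (timestamps, values) streams onto one shared clock.

    streams maps a stream name to a (timestamps_s, values) pair of equal-length
    1-D arrays with strictly increasing timestamps.
    """
    n = int(round((t_stop - t_start) * fs_out))
    t_common = t_start + np.arange(n) / fs_out
    # Linear interpolation onto the shared grid; np.interp holds edge values
    # constant outside each stream's recorded range.
    aligned = {name: np.interp(t_common, ts, vals)
               for name, (ts, vals) in streams.items()}
    return t_common, aligned


# Hypothetical rates for one EEG channel, one fNIRS channel and horizontal gaze.
rng = np.random.default_rng(0)
t_eeg = np.arange(0, 10, 1 / 500)
t_fnirs = np.arange(0, 10, 1 / 10)
t_gaze = np.arange(0, 10, 1 / 120)
streams = {
    "eeg": (t_eeg, rng.standard_normal(t_eeg.size)),
    "fnirs": (t_fnirs, np.sin(2 * np.pi * 0.1 * t_fnirs)),
    "gaze_x": (t_gaze, 2.0 * t_gaze),  # a linear ramp, easy to check
}
t_common, aligned = align_streams(streams, fs_out=50, t_start=0.0, t_stop=9.9)
```

Interpolation is the simplest choice; discrete events such as joystick presses are usually better kept as time-stamped markers than resampled.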

Cognitive Load Assessment Protocols

Research on cognitive load measurement has developed specialized reading protocols to examine how different types of cognitive load manifest in physiological signals. One study with 102 non-native English speakers investigated how background music (BGM) affects reading comprehension and cognitive processes [34]. Participants read English passages either with self-selected preferred BGM or in silence while researchers collected eye movement data, electrodermal activity (EDA), heart rate (HR), and heart rate variability (HRV). The study employed the triarchic model of cognitive load, examining:

- Extraneous load: Created by adding background music

- Intrinsic load: Manipulated through text complexity

- Germane load: Reflected by comprehension accuracy [34]

This approach allowed researchers to identify which physiological signals were most sensitive to different types of cognitive load, providing a framework for non-intrusive cognitive state monitoring during complex tasks.
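The study itself used multimodal learning analytics [34]. As a much simpler stand-in for the question "which signal is most sensitive to a manipulation", the sketch below ranks signals by a within-subject effect size (Cohen's d_z) between two conditions, such as reading with background music versus in silence. The function names, signal names, and toy numbers are hypothetical and are not data from the study.

```python
import numpy as np

def paired_cohens_dz(cond_a, cond_b):
    """Within-subject effect size d_z = mean(diff) / SD(diff)."""
    diff = np.asarray(cond_a, dtype=float) - np.asarray(cond_b, dtype=float)
    return diff.mean() / diff.std(ddof=1)

def rank_signal_sensitivity(cond_a, cond_b):
    """Rank signals by |d_z| between two conditions.

    cond_a, cond_b: dicts mapping a signal name to per-participant values,
    with participants in the same order in both dicts.
    """
    effects = {name: paired_cohens_dz(cond_a[name], cond_b[name]) for name in cond_a}
    return sorted(effects.items(), key=lambda item: abs(item[1]), reverse=True)


# Toy values for four hypothetical participants (not data from the study).
silence = {"mean_fixation_ms": [210, 220, 230, 240], "scl_uS": [5.0, 6.0, 7.0, 8.0]}
bgm = {"mean_fixation_ms": [230, 238, 252, 258], "scl_uS": [5.1, 5.9, 7.2, 7.9]}
ranking = rank_signal_sensitivity(bgm, silence)
print(ranking)  # mean_fixation_ms ranks first
```

A paired effect size fits the design because each participant reads under both conditions; with many signals, any significance testing would also need correction for multiple comparisons.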

Data Fusion Methodologies and Analytical Frameworks

Machine Learning Approaches for Diagnostic Classification

Machine learning frameworks have shown promising results in classifying psychiatric conditions from multimodal data. In the adolescent depression study, researchers trained a support vector machine (SVM) to classify MDD status from selected EEG, eye-tracking, and HRV features [31]. The model achieved 81.7% classification accuracy with an area under the receiver operating characteristic curve (AUC) of 0.921 and was reported to significantly outperform traditional diagnostic approaches [31]. The physiological features that contributed most to classification included:

- EEG metrics: Higher theta/beta ratios in adolescents with MDD

- Eye-tracking measures: Reduced saccade counts and longer fixation durations

- HRV parameters: Elevated LF/HF ratios indicating autonomic nervous system dysregulation [31]

The theta/beta and LF/HF ratios both showed significant associations with depression severity, suggesting their potential as quantitative biomarkers for tracking symptom progression and treatment response.
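A classification pipeline of this kind can be sketched with scikit-learn, assuming one row of features per participant. Everything below is illustrative: the four feature columns mirror the features listed above, but the values are synthetic, and the kernel, `C`, and cross-validation scheme are assumptions rather than the settings used in [31], so the resulting accuracy and AUC are not comparable to the reported figures.

```python
import numpy as np
from sklearn.metrics import accuracy_score, roc_auc_score
from sklearn.model_selection import StratifiedKFold, cross_val_predict
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic participants; the four hypothetical feature columns are
# theta/beta ratio, saccade count, mean fixation duration (ms), LF/HF ratio.
rng = np.random.default_rng(42)
n = 60
controls = rng.normal([2.0, 40, 250, 1.5], [0.4, 8, 40, 0.4], size=(n, 4))
patients = rng.normal([2.6, 32, 290, 2.1], [0.4, 8, 40, 0.4], size=(n, 4))
X = np.vstack([controls, patients])
y = np.repeat([0, 1], n)  # 0 = control, 1 = MDD

# Scaler and SVM live in one pipeline, so scaling is refit on each training
# fold and the held-out fold never influences preprocessing.
model = make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0))
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_predict(model, X, y, cv=cv, method="decision_function")

y_pred = (scores > 0).astype(int)  # positive margin means class 1
acc = accuracy_score(y, y_pred)
auc = roc_auc_score(y, scores)
print(f"accuracy={acc:.3f}, AUC={auc:.3f}")
```

AUC is computed from the continuous SVM margins rather than hard labels. If features are selected from a larger pool, as in [31], the selection step should also sit inside the cross-validation loop to avoid optimistic estimates.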

Deep Graph Learning for Treatment Prediction

For treatment prediction, deep learning approaches have emerged that can model the complex relationships between multimodal brain networks. One study analyzed resting-state fMRI and EEG connectivity data from 265 patients in the EMBARC study (130 treated with sertraline and 135 with placebo) [35]. The researchers developed a deep learning framework based on graph neural networks (GNNs) that integrates data-augmented connectivity and cross-modality correlations, with the aim of predicting individual symptom changes from multimodal brain network signatures [35].

The model achieved promising prediction performance, with R² values of 0.24 for sertraline and 0.20 for placebo, and showed potential for transferring predictions using EEG data alone. Critical brain regions for predicting sertraline response included the inferior temporal gyrus (fMRI) and posterior cingulate cortex (EEG), whereas the precuneus (fMRI) and supplementary motor area (EEG) were particularly important for predicting placebo response [35]. This approach illustrates how fusing complementary neuroimaging modalities can uncover clinically meaningful biomarkers for predicting treatment outcomes.
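To make the GNN idea concrete, the sketch below shows a generic two-layer graph convolutional network (GCN) forward pass in NumPy that maps one participant's connectivity graph to a scalar prediction, plus the R² metric used to report performance. This is a textbook GCN, not the architecture from [35]. The weights are random and untrained, regions are nodes whose features are their connectivity profiles, and edge weights are absolute correlations. All of these are assumptions made for illustration, and the last one keeps node degrees positive.

```python
import numpy as np

def normalized_adjacency(A):
    """GCN propagation matrix D^-1/2 (A + I) D^-1/2 (self-loops added)."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_predict(A, H, W1, W2, w_out):
    """Two graph-convolution layers, mean-pool readout, linear regression head."""
    P = normalized_adjacency(A)
    H1 = np.maximum(P @ H @ W1, 0.0)   # layer 1 + ReLU
    H2 = np.maximum(P @ H1 @ W2, 0.0)  # layer 2 + ReLU
    embedding = H2.mean(axis=0)        # one vector per participant graph
    return embedding @ w_out           # scalar, e.g. predicted symptom change

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return 1.0 - ss_res / ss_tot


rng = np.random.default_rng(1)
n_regions = 10
signals = rng.standard_normal((200, n_regions))  # hypothetical regional time series
A = np.abs(np.corrcoef(signals, rowvar=False))   # absolute correlation as edge weight
np.fill_diagonal(A, 0.0)
H = A.copy()                                     # node features: connectivity profiles
W1 = 0.1 * rng.standard_normal((n_regions, 16))  # untrained, random weights
W2 = 0.1 * rng.standard_normal((16, 8))
w_out = rng.standard_normal(8)
pred = gcn_predict(A, H, W1, W2, w_out)
```

In practice the weights would be trained by gradient descent on many participants' graphs. Read through `r_squared`, an R² of 0.24 means the predictions account for roughly a quarter of the variance in symptom change around its mean.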

Table 1: Performance Comparison of Multimodal Fusion Approaches

| Study Objective | Data Modalities | Fusion Method | Key Performance Metrics |
|---|---|---|---|
| Adolescent MDD Screening [31] | EEG, Eye-tracking, HRV | Support Vector Machine (SVM) | 81.7% classification accuracy, AUC: 0.921 |
| Antidepressant Treatment Prediction [35] | fMRI, EEG | Graph Neural Networks (GNN) | R² = 0.24 (sertraline), R² = 0.20 (placebo) |
| Cognitive Load Assessment [34] | Eye-tracking, EDA, HR, HRV | Multimodal Learning Analytics | Eye movements predicted all three load types; HR/HRV predicted extraneous and germane load |

Multimodal Fusion Techniques

Several technical approaches have been developed for fusing multimodal data, each with distinct advantages and applications. Joint Independent Component Analysis (jICA) analyzes multiple datasets together by concatenating them along one dimension; it assumes that two or more features share the same mixing matrix and seeks joint components that are maximally independent [32]. Multimodal Canonical Correlation Analysis (mCCA) explores inter-subject relationships by identifying maximally correlated components across modalities, and mCCA + jICA combines the two approaches to leverage their complementary strengths [32]. Emerging deep learning approaches handle high-dimensional raw data directly, extracting individual variation by integrating multi-level dimensionality reduction with subject-level reconstruction [32].
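The jICA model can be sketched in a few lines, assuming two feature matrices measured on the same subjects. In this sketch, scikit-learn's `FastICA` serves as the ICA engine, each modality is scaled to unit overall standard deviation so that neither dominates, and the data are synthetic. These choices are assumptions for illustration and are not the specific implementation described in [32]. The key step is the concatenation along the feature axis, which forces both modalities to share a single subject-by-component mixing matrix.

```python
import numpy as np
from sklearn.decomposition import FastICA

def joint_ica(X1, X2, n_components, random_state=0):
    """Joint ICA sketch for two modalities measured on the same subjects.

    X1: (n_subjects, f1) and X2: (n_subjects, f2) feature matrices.
    Model: [X1 X2] = A @ S, where the mixing matrix A (n_subjects x k) is shared
    by both modalities and the joint sources S (k x (f1 + f2)) are maximally
    independent along the concatenated feature axis.
    """
    # Scale each modality to unit overall SD so neither dominates (assumption).
    X = np.hstack([X1 / X1.std(), X2 / X2.std()])
    ica = FastICA(n_components=n_components, whiten="unit-variance",
                  max_iter=1000, random_state=random_state)
    # Features act as ICA samples and subjects as the observed mixtures.
    S = ica.fit_transform(X.T).T  # (k, f1 + f2)
    A = ica.mixing_               # (n_subjects, k): per-subject loadings
    f1 = X1.shape[1]
    return A, S[:, :f1], S[:, f1:]


# Synthetic example: two sparse (Laplace) joint sources mixed across 30 subjects.
rng = np.random.default_rng(0)
n_subjects, f1, f2, k = 30, 400, 200, 2
S_true = rng.laplace(size=(k, f1 + f2))
A_true = rng.standard_normal((n_subjects, k))
X = A_true @ S_true + 0.05 * rng.standard_normal((n_subjects, f1 + f2))
A_est, S1, S2 = joint_ica(X[:, :f1], X[:, f1:], n_components=k)
```

Each column of `A_est` gives one loading per subject that applies to both modalities at once, which is what allows group differences in those loadings to be read as linked changes across modalities. As with any ICA, components are recovered only up to sign, scale, and order.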

Key Physiological Biomarkers Across Modalities

EEG-Derived Biomarkers

Electroencephalography records brain electrical activity with millisecond temporal resolution, making it particularly valuable for capturing dynamic neural processes, and distinctive EEG patterns have been associated with psychiatric conditions. In adolescent depression, EEG theta/beta ratios were significantly higher in participants with MDD than in healthy controls [31]. This metric is thought to reflect an imbalance between cortical inhibition and arousal, potentially indicating regulatory deficits in depression. For antidepressant treatment prediction, one study identified the posterior cingulate cortex as a critical region in the EEG connectivity patterns that predicted sertraline response [35]. The temporal resolution of EEG also enables the capture of event-related potentials (ERPs) that index specific cognitive processes such as attention, working memory, and error monitoring, which are frequently impaired across psychiatric disorders.
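A theta/beta ratio is typically derived from a power spectral density estimate of each channel. The sketch below assumes SciPy's Welch estimator with 2-second windows and conventional band edges of 4 to 8 Hz (theta) and 13 to 30 Hz (beta); the band limits, window length, and the function names are assumptions, since the exact definitions used in [31] are not given here. The synthetic channel combines a 6 Hz sine of amplitude 2 with a 20 Hz sine of amplitude 1, so the theta power (2² / 2 = 2) is four times the beta power (1² / 2 = 0.5).

```python
import numpy as np
from scipy.signal import welch

def band_power(freqs, psd, lo, hi):
    """Integrate a PSD over [lo, hi) Hz with a rectangle rule."""
    mask = (freqs >= lo) & (freqs < hi)
    df = freqs[1] - freqs[0]
    return psd[mask].sum() * df

def theta_beta_ratio(eeg, fs, theta=(4.0, 8.0), beta=(13.0, 30.0)):
    """Theta/beta power ratio for one EEG channel (band edges are assumptions)."""
    freqs, psd = welch(eeg, fs=fs, nperseg=int(2 * fs))  # 2-s windows, 0.5 Hz bins
    return band_power(freqs, psd, *theta) / band_power(freqs, psd, *beta)


fs = 256
t = np.arange(0, 20, 1 / fs)
# Synthetic channel: 6 Hz (theta) at amplitude 2 plus 20 Hz (beta) at amplitude 1.
eeg = 2.0 * np.sin(2 * np.pi * 6 * t) + 1.0 * np.sin(2 * np.pi * 20 * t)
ratio = theta_beta_ratio(eeg, fs)
print(round(ratio, 2))  # 4.0
```

On real recordings, the ratio is usually computed on artifact-cleaned data and averaged over selected electrodes or epochs.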

Eye-Tracking Biomarkers

Eye movement patterns offer a window into cognitive processes including attention, engagement, and information processing, and several candidate oculometric biomarkers for psychiatric conditions have been identified. Adolescents with MDD show reduced saccade counts and longer fixation durations than healthy controls, potentially reflecting altered attentional allocation and processing speed [31]. In cognitive load assessment during reading tasks, measures such as fixation duration, saccade amplitude, and regression count were predictive of all three types of cognitive load (extraneous, intrinsic, and germane) [34]. These eye movement patterns can indicate difficulties in lexical processing and post-lexical semantic integration, providing non-invasive markers of cognitive effort and processing efficiency.
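Saccade counts and fixation durations are commonly extracted with a velocity-threshold (I-VT) algorithm: gaze samples moving faster than a threshold are labeled saccadic, and the remaining runs are fixations. The minimal sketch below assumes gaze already expressed in degrees of visual angle and a 30 deg/s threshold, a frequently used value that is nonetheless an assumption here. The function name `ivt_segment` is hypothetical, and the example uses idealized instantaneous gaze jumps rather than recorded data.

```python
import numpy as np

def ivt_segment(x_deg, y_deg, fs, velocity_threshold=30.0):
    """I-VT: label samples faster than the threshold (deg/s) as saccadic.

    Returns the number of saccades and the durations (s) of the fixation runs.
    """
    speed = np.hypot(np.gradient(x_deg), np.gradient(y_deg)) * fs  # deg/s
    is_saccade = speed > velocity_threshold
    # Indices where the label flips mark the boundaries between runs.
    bounds = np.flatnonzero(np.diff(is_saccade.astype(int))) + 1
    starts = np.concatenate(([0], bounds))
    ends = np.concatenate((bounds, [is_saccade.size]))
    saccade_count = int(sum(is_saccade[s] for s in starts))
    fixation_durations = np.array(
        [(e - s) / fs for s, e in zip(starts, ends) if not is_saccade[s]])
    return saccade_count, fixation_durations


# Idealized gaze at 120 Hz: three 0.5-s fixations separated by two jumps.
fs = 120
x = np.concatenate([np.zeros(60), np.full(60, 10.0), np.full(60, -5.0)])
y = np.zeros_like(x)
n_saccades, fixation_durations = ivt_segment(x, y, fs)
```

Production pipelines usually smooth the gaze signal, handle blinks and track loss, and discard fixations shorter than a minimum duration before computing summary measures.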

HRV and Autonomic Nervous System Biomarkers

Heart rate variability offers insight into autonomic nervous system regulation, which is frequently disrupted in psychiatric disorders. Adolescents with MDD showed elevated LF/HF ratios [31], a pattern commonly interpreted as sympathetic dominance with reduced parasympathetic modulation. These HRV-derived metrics were also significantly associated with depression severity scores [31]. In cognitive load research, HR and HRV measures were sensitive to extraneous and germane cognitive load during reading tasks, providing objective physiological indices of cognitive resource allocation [34]. Additionally, electrodermal activity (EDA), particularly the skin conductance response (SCR), captures phasic sympathetic activation that tracks emotionally salient stimuli and cognitively demanding moments [34].
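The LF/HF ratio is a frequency-domain HRV measure computed from the sequence of RR intervals. The sketch below assumes the conventional short-term bands (LF 0.04 to 0.15 Hz, HF 0.15 to 0.40 Hz), linear interpolation of the beat-by-beat tachogram onto an even 4 Hz grid, and a Welch spectral estimate; these processing choices and the helper `synthetic_rr` are assumptions and do not describe the pipeline used in [31]. The synthetic RR series are modulated at 0.1 Hz and 0.25 Hz, so the ratio should be well above 1 when the LF modulation dominates and well below 1 when the HF modulation dominates.

```python
import numpy as np
from scipy.signal import welch

def lf_hf_ratio(rr_s, fs_resample=4.0, lf=(0.04, 0.15), hf=(0.15, 0.40)):
    """LF/HF ratio from successive RR intervals given in seconds."""
    rr_s = np.asarray(rr_s, dtype=float)
    beat_times = np.cumsum(rr_s)
    # The tachogram is unevenly sampled (one value per beat), so interpolate
    # it onto an even grid before spectral estimation.
    n = int((beat_times[-1] - beat_times[0]) * fs_resample)
    t_even = beat_times[0] + np.arange(n) / fs_resample
    rr_even = np.interp(t_even, beat_times, rr_s)
    freqs, psd = welch(rr_even, fs=fs_resample, nperseg=min(256, n), detrend="linear")
    df = freqs[1] - freqs[0]

    def band_power(lo, hi):
        mask = (freqs >= lo) & (freqs < hi)
        return psd[mask].sum() * df

    return band_power(*lf) / band_power(*hf)


def synthetic_rr(duration_s, lf_amp, hf_amp, mean_rr=0.8):
    """Hypothetical RR series modulated at 0.1 Hz (LF) and 0.25 Hz (HF)."""
    t, rr = 0.0, []
    while t < duration_s:
        r = (mean_rr + lf_amp * np.sin(2 * np.pi * 0.1 * t)
             + hf_amp * np.sin(2 * np.pi * 0.25 * t))
        rr.append(r)
        t += r
    return np.array(rr)


lf_dominant = lf_hf_ratio(synthetic_rr(300, lf_amp=0.04, hf_amp=0.02))
hf_dominant = lf_hf_ratio(synthetic_rr(300, lf_amp=0.01, hf_amp=0.04))
```

Real RR series first need ectopic-beat and artifact correction. Because the ratio depends on band definitions, resampling rate, and spectral method, these settings should be fixed and reported when it is used as a biomarker.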

Table 2: Key Physiological Biomarkers Identified Through Multimodal Fusion

| Modality | Biomarker | Association with Mental States | Clinical/Research Utility |
|---|---|---|---|
| EEG | Theta/Beta Ratio [31] | Elevated in adolescent MDD | Potential indicator of cortical regulation imbalance |
| EEG | Posterior Cingulate Cortex Connectivity [35] | Predictive of sertraline response | Treatment prediction biomarker |
| Eye-Tracking | Saccade Count [31] | Reduced in adolescent MDD | Attentional allocation marker |
| Eye-Tracking | Fixation Duration [31] [34] | Prolonged in MDD; sensitive to cognitive load | Processing speed and effort indicator |
| HRV | LF/HF Ratio [31] | Elevated in adolescent MDD | Autonomic nervous system dysregulation marker |
| HRV | Heart Rate Variability [34] | Predictive of extraneous and germane cognitive load | Cognitive resource allocation index |

The Scientist's Toolkit: Essential Research Reagents and Solutions

Implementing multimodal fusion research requires specialized equipment and analytical tools. The table below lists the main equipment, software, and analytical methods used in the featured studies:

Table 3: Essential Research Reagents and Solutions for Multimodal Studies

| Tool/Reagent | Specification/Model | Primary Function | Example Use Case |
|---|---|---|---|
| VR Development Framework | A-Frame framework [31] | Creates immersive web-based VR environments | Developing magical forest scenario for depression assessment |
| Physiological Data Acquisition | BIOPAC MP160 system [31] | Synchronized recording of EEG, ECG, and other physiological signals | Collecting multimodal data during VR emotional tasks |
| Portable Ophthalmoscope | See A8 telemetric ophthalmoscope [31] | Records ocular motility and eye movement data | Tracking gaze patterns during VR tasks |
| EEG Recording Systems | 34-electrode whole-brain EEG [33] | Captures electrical brain activity with millisecond resolution | Monitoring neural dynamics during visuomotor tasks |
| fNIRS Systems | 44-channel fNIRS covering frontal and parietal cortex [33] | Measures hemodynamic responses using near-infrared light | Assessing brain activation during cognitive tasks |
| Eye-Tracking Integration | Integrated with VR headset [31] | Records gaze patterns and pupillary responses within immersive environments | Monitoring attentional allocation during VR scenarios |
| Machine Learning Framework | Support Vector Machine (SVM) [31] | Classifies physiological patterns associated with clinical conditions | Differentiating MDD patients from healthy controls |
| Deep Learning Architecture | Graph Neural Networks (GNN) [35] | Models complex relationships in brain network data | Predicting antidepressant treatment outcomes |

Comparative Performance of Fusion Approaches