Validating Virtual Reality: Establishing Concurrent Validity for the VR Multiple Errands Test in Assessing Real-World Functioning

This article examines the concurrent validity of the Virtual Reality Multiple Errands Test (VR MET) as an ecologically valid tool for assessing real-world executive functioning.


Abstract

This article examines the concurrent validity of the Virtual Reality Multiple Errands Test (VR MET) as an ecologically valid tool for assessing real-world executive functioning. Aimed at researchers and drug development professionals, it explores the foundational theory behind VR-based assessment, methodologies for implementation and validation, strategies for optimizing technical and psychometric properties, and comparative evidence against traditional measures. The synthesis of current research underscores the VR MET's potential to bridge the gap between clinic-based cognitive scores and functional capacity, offering significant implications for endpoint measurement in clinical trials and cognitive rehabilitation.

The Ecological Validity Gap: Why Traditional Assessments Fail to Predict Real-World Functioning

Neuropsychological assessment is a cornerstone of diagnosing and treating neurological disorders, with the primary goals of detecting neurological dysfunction, characterizing cognitive strengths and weaknesses, and guiding treatment planning [1]. These assessments are crucial for conditions including mild cognitive impairment (MCI), dementia, traumatic brain injury (TBI), stroke, Parkinson's disease, multiple sclerosis, epilepsy, and attention deficit hyperactivity disorder (ADHD) [2]. However, the very tools that constitute the "gold standard" in cognitive assessment harbor significant limitations that impact their diagnostic accuracy, clinical utility, and practical application. Traditional paper-and-pencil neuropsychological tests, while well-validated and psychometrically robust, lack similarity to real-world tasks and fail to adequately simulate the complexity of everyday activities [3]. This fundamental disconnect creates a critical gap between what these tests measure in a clinical setting and how patients actually function in their daily lives. As the field of neuropsychology evolves beyond mere lesion localization to in-depth characterization of brain-behavior relationships, the limitations of traditional assessments become increasingly consequential for researchers, clinicians, and drug development professionals seeking to demonstrate the real-world efficacy of cognitive interventions.

Fundamental Limitations of Traditional Neuropsychological Assessment

The Ecological Validity Problem

Ecological validity refers to the "functional and predictive relationship between the person's performance on a set of neuropsychological tests and the person's behavior in a variety of real world settings" [4]. This concept comprises two key components: representativeness (how well a test mirrors real-world demands) and generalizability (how well test performance predicts everyday functioning) [4]. Traditional assessments suffer from poor ecological validity because they take a "construct-led" approach that isolates single cognitive processes in abstract measures, resulting in poor alignment with real-world functioning [4]. The consequence is measurable: traditional executive function tests account for only 18% to 20% of the variance in everyday executive ability [4]. Roughly 80% of the variance in everyday executive functioning is therefore left unexplained by conventional tests, a substantial validity gap for researchers and clinicians.

Practical and Methodological Constraints

Beyond ecological validity concerns, traditional neuropsychological assessments face significant practical limitations that affect their implementation and interpretation:

Extended Administration Times: Complete neuropsychological evaluations typically require 6 to 8 hours over one or more sessions, creating substantial burden for patients, particularly older adults or those with cognitive impairments [2].

Prolonged Wait Times: Patients referred for neuropsychological testing face average wait times of 5 to 10 months for adults and 12 months or longer for children, potentially allowing conditions like MCI to progress to more advanced stages before assessment and intervention [2].

Evaluator Bias: Traditional methodologies relying on questionnaires and guided exercises are influenced by the professional conducting the assessment, whose expectations, beliefs, or prior experiences may unconsciously influence test interpretation and scoring [5].

Cultural and Accessibility Limitations: Neuropsychological tests may not be equally applicable to patients from different cultural and linguistic backgrounds, with factors including language, reading level, and test familiarity potentially affecting performance independent of actual cognitive ability [2].

Table 1: Key Limitations of Traditional Neuropsychological Assessment

| Limitation Category | Specific Challenge | Impact on Clinical/Research Utility |
|---|---|---|
| Ecological Validity | Poor representation of real-world demands | Limited generalizability to daily functioning |
| Ecological Validity | Task impurity problem | Scores reflect multiple cognitive processes beyond targeted EF |
| Methodological Issues | Artificial testing environment | Fails to capture performance in context-rich settings |
| Methodological Issues | Lack of multi-dimensional assessment | Cannot integrate affect, physiological state, context |
| Practical Constraints | Extended administration time (6-8 hours) | Patient fatigue, limited clinical throughput |
| Practical Constraints | Long wait times (5-10 months) | Delayed diagnosis and treatment initiation |
| Psychometric Concerns | Limited sensitivity to subtle deficits | Ineffective for detecting early or prodromal decline |
| Psychometric Concerns | Cultural/test bias | Reduced accessibility and accuracy across diverse populations |

Virtual Reality as a Paradigm Shift in Neuropsychological Assessment

Theoretical Foundations and Advantages

Virtual reality represents a fundamental shift in neuropsychological assessment methodology by addressing the core limitations of traditional approaches. VR enables the creation of controlled, standardized environments that simulate real-world contexts while maintaining experimental control [3] [5]. The theoretical foundation of VR assessment rests on its capacity to create "functionally relevant, systematically controllable, multisensory, interactive 3D stimulus environments" that mimic ecologically relevant challenges found in everyday life [6]. This approach offers several distinct advantages:

Enhanced Ecological Validity: VR environments can simulate complex, functionally relevant scenarios (e.g., a virtual kitchen, classroom, or shopping environment) that closely mirror real-world cognitive demands [3] [5].

Reduced Evaluator Bias: VR systems automatically record objective performance data without requiring examiner interpretation, standardizing administration and scoring across patients and clinics [5].

Multi-Dimensional Assessment: VR enables the simultaneous capture of cognitive performance, behavioral responses, and physiological metrics within ecologically valid contexts [6] [4].

Increased Engagement: Immersive VR environments demonstrate potential to enhance participant engagement through gamification and realistic scenarios, potentially yielding more accurate representations of cognitive abilities [4].

Concurrent Validity of VR-Based Neuropsychological Assessment

A critical question for researchers and clinicians is whether VR-based assessments demonstrate adequate concurrent validity with established traditional measures. A 2024 meta-analysis investigating the concurrent validity between VR-based assessments and traditional neuropsychological assessments of executive function revealed statistically significant correlations across all subcomponents, including cognitive flexibility, attention, and inhibition [3]. The results supported VR-based assessments as a valid alternative to traditional methods for evaluating executive function, with sensitivity analyses confirming the robustness of these findings even when lower-quality studies were excluded [3].

Table 2: Evidence for Concurrent Validity Between VR and Traditional Neuropsychological Assessments

| Cognitive Domain | VR Assessment | Traditional Comparison | Validation Outcome |
|---|---|---|---|
| Overall Executive Function | Multiple VR paradigms | Traditional paper-and-pencil tests | Significant correlations supported concurrent validity [3] |
| Attention Processes | Virtual Classroom continuous performance task | Traditional attention measures | Systematic improvements across age span in normative sample (n=837) [6] |
| Visual Attention | vCAT in immersive VR classroom | Traditional attention tests | Normative data showing expected developmental patterns [6] |
| Multiple EF Components | Various immersive VR paradigms | Gold-standard traditional tasks | Common validation against traditional tasks, though reporting inconsistencies noted [4] |

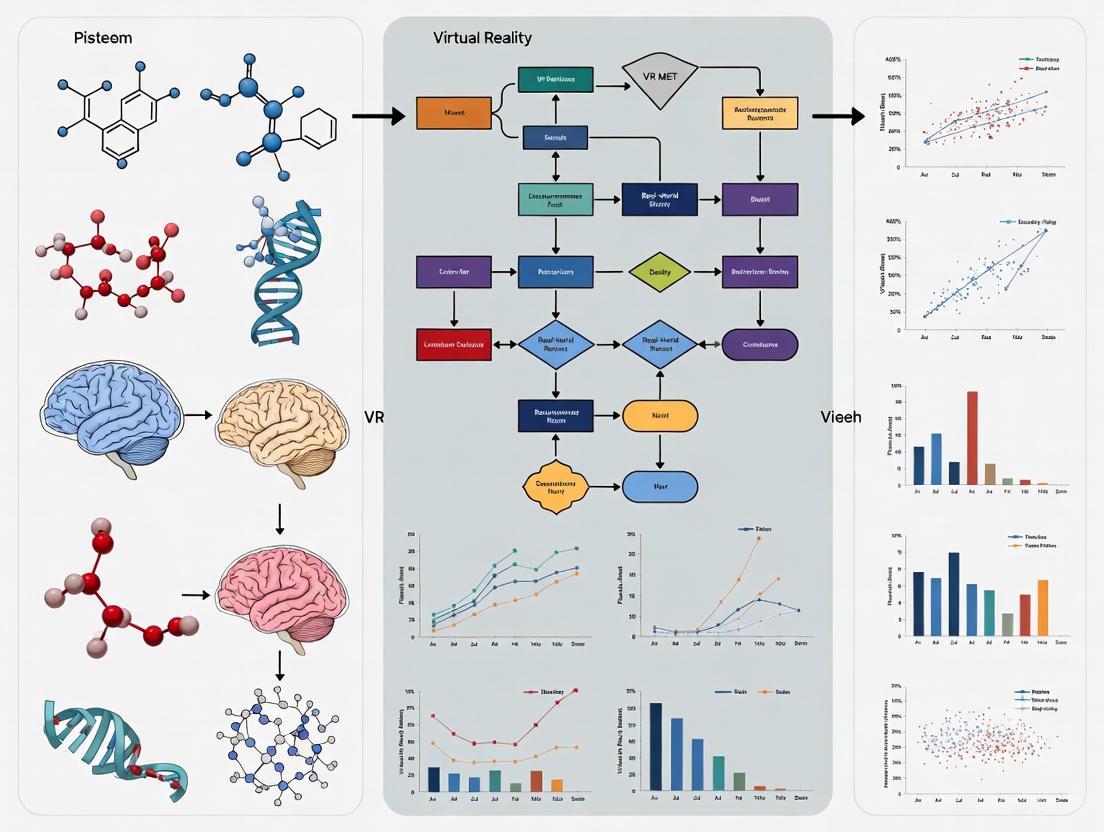

The following diagram illustrates the conceptual relationship between traditional assessment limitations and VR-based solutions within the validation framework:

Conceptual Framework: From Traditional Limitations to VR Validation

Experimental Evidence and Validation Protocols

Systematic Review and Meta-Analysis Methodology

The most comprehensive evidence for VR assessment validity comes from systematic reviews and meta-analyses. A 2024 meta-analysis investigating concurrent validity between VR-based and traditional executive function assessments followed PRISMA guidelines, identifying 1605 articles through searches of PubMed, Web of Science, and ScienceDirect from 2013-2023 [3]. After duplicate removal and screening, nine articles fully met the inclusion criteria for quantitative synthesis [3]. The analysis employed Comprehensive Meta-Analysis Software Version 3, transforming Pearson's r values into Fisher's z values to account for sample size, with heterogeneity evaluated using I² and random-effects models applied when heterogeneity was high (I² > 50%) [3]. Sensitivity analyses confirmed robustness after excluding lower-quality studies, supporting the conclusion that VR-based assessments demonstrate significant correlations with traditional measures across executive function subcomponents [3].
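The transformation step described above can be sketched in a few lines. This is a minimal illustration, not the procedure implemented in Comprehensive Meta-Analysis: it pools correlations on the Fisher z scale with fixed-effect inverse-variance weights (weight n - 3, since the sampling variance of z is approximately 1/(n - 3)) and back-transforms the result. The function names and the (r, n) pairs are hypothetical and are not taken from the meta-analysis [3].

```python
import math

def fisher_z(r):
    """Fisher's z transform of a Pearson correlation (z = atanh(r))."""
    return math.atanh(r)

def pool_correlations(studies):
    """Fixed-effect inverse-variance pooling of (r, n) pairs on the z scale.

    Returns the pooled correlation and an approximate 95% confidence interval,
    both back-transformed to the r scale.
    """
    zs = [fisher_z(r) for r, _ in studies]
    ws = [n - 3 for _, n in studies]  # weight = 1 / var(z), with var(z) ~ 1 / (n - 3)
    z_bar = sum(w * z for w, z in zip(ws, zs)) / sum(ws)
    se = 1 / math.sqrt(sum(ws))
    lo, hi = z_bar - 1.96 * se, z_bar + 1.96 * se
    return math.tanh(z_bar), (math.tanh(lo), math.tanh(hi))

# Hypothetical (r, n) pairs for illustration only.
studies = [(0.30, 40), (0.45, 60), (0.25, 35)]
pooled_r, ci = pool_correlations(studies)
print(f"pooled r = {pooled_r:.2f}, 95% CI = ({ci[0]:.2f}, {ci[1]:.2f})")
```

Working on the z scale keeps the sampling variance nearly independent of the true correlation, which is why the weights depend only on sample size.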

Normative Data Collection in VR Environments

Substantial research has established normative performance data in VR environments, demonstrating expected developmental patterns and psychometric properties. One study established normative data for visual attention using a Virtual Classroom Assessment Tracker (vCAT) with a large sample (n=837) of neurotypical children aged 6-13 [6]. Participants completed a 13-minute continuous performance test of visual attention within an immersive VR classroom environment delivered via head-mounted display [6]. The assessment measured core metrics including errors of omission (proxy for inattentiveness), errors of commission (proxy for impulsivity), accuracy, reaction time, reaction time variability, d-prime (signal-to-noise differentiation), and global head movement (measurements of hyperactivity and distractibility) [6]. Results showed systematic improvements across age spans on most metrics and identified sex differences on key variables, supporting VR as a viable methodology for capturing attention processes under ecologically relevant conditions [6].
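As a rough illustration of how the behavioral metrics listed above might be derived from trial-level data, the sketch below computes omission and commission errors, accuracy, reaction time statistics, and d-prime. The trial format, the function name, and the log-linear correction for extreme rates are assumptions made for this example; they do not describe the vCAT's actual scoring, and head-movement measures are omitted.

```python
from statistics import NormalDist, mean, pstdev

def cpt_metrics(trials):
    """Summarize a continuous performance test.

    trials: list of (is_target, responded, rt_ms) tuples; rt_ms is None
    when no response was made. This layout is a hypothetical example.
    """
    targets = [t for t in trials if t[0]]
    nontargets = [t for t in trials if not t[0]]
    hits = [t for t in targets if t[1]]
    false_alarms = [t for t in nontargets if t[1]]

    # Log-linear correction (an assumption) keeps rates away from 0 and 1,
    # so the inverse normal CDF stays finite.
    hit_rate = (len(hits) + 0.5) / (len(targets) + 1)
    fa_rate = (len(false_alarms) + 0.5) / (len(nontargets) + 1)
    z = NormalDist().inv_cdf

    rts = [t[2] for t in hits]
    correct = len(hits) + len(nontargets) - len(false_alarms)
    return {
        "omission_errors": len(targets) - len(hits),   # missed targets
        "commission_errors": len(false_alarms),        # responses to non-targets
        "accuracy": correct / len(trials),
        "mean_rt_ms": mean(rts) if rts else None,
        "rt_sd_ms": pstdev(rts) if rts else None,      # reaction time variability
        "d_prime": z(hit_rate) - z(fa_rate),
    }

# Hypothetical trials for illustration only.
trials = [
    (True, True, 420), (True, True, 480), (True, False, None),
    (False, False, None), (False, True, 390), (False, False, None),
]
metrics = cpt_metrics(trials)
```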

The following workflow illustrates a typical experimental protocol for validating VR-based neuropsychological assessments:

VR Assessment Validation Workflow

The Scientist's Toolkit: Research Reagent Solutions for VR Neuropsychological Assessment

Table 3: Essential Research Tools for VR Neuropsychological Assessment

| Tool Category | Specific Examples | Research Function | Validation Evidence |
|---|---|---|---|
| VR Hardware Platforms | Oculus Rift, HTC Vive HMDs | Deliver immersive environments with head tracking | Most studies used commercial HMDs; 63% of solutions were immersive [7] [4] |
| Assessment Software | Nesplora Attention Kids Aula, Virtual Classroom (vCAT) | Administer cognitive tasks in ecologically valid contexts | Continuous performance tests in VR classroom show systematic age-related improvements [5] [6] |
| Cognitive Task Paradigms | CAVIR (Cognition Assessment in Virtual Reality) | Assess daily life cognitive functions in virtual scenarios | VR kitchen scenario validated against TMT-B, CANTAB, fluency tests [3] |
| Data Capture Systems | Head movement tracking, response time recording | Quantify behavioral responses beyond traditional metrics | Head movement tracking provides hyperactivity measures in ADHD assessment [6] |
| Validation Instruments | Traditional EF tests (TMT, SCWT, WCST) | Establish concurrent validity with gold standards | Significant correlations between VR and traditional measures across EF subcomponents [3] |

The limitations of traditional neuropsychological tests present significant challenges for researchers, clinicians, and drug development professionals. The poor ecological validity, limited sensitivity to subtle deficits, practical constraints, and methodological issues inherent in traditional assessment approaches compromise their utility for detecting early cognitive decline and measuring real-world functional outcomes. Virtual reality-based assessment methodologies offer a promising paradigm shift by creating standardized, engaging, and ecologically valid environments that capture multi-dimensional aspects of cognitive functioning. Evidence from meta-analyses and systematic reviews supports the concurrent validity of VR-based assessments with traditional measures, particularly for executive function evaluation. For the research community, including those in pharmaceutical development, VR assessment platforms provide enhanced sensitivity to detect subtle cognitive changes and stronger predictive validity for real-world functioning—critical factors for demonstrating treatment efficacy in clinical trials. As the field advances, further validation studies, standardized administration protocols, and comprehensive normative data will be essential to fully establish VR as a complementary approach that addresses the fundamental limitations of traditional neuropsychological tests.

The field of cognitive assessment is undergoing a fundamental transformation, moving from traditional paper-and-pencil tests toward ecologically valid virtual reality (VR) environments. This shift addresses a critical limitation in neuropsychological evaluation: the gap between controlled testing environments and real-world cognitive functioning. Ecological validity refers to how well assessment results predict or correlate with performance in everyday life, a domain where traditional methods often fall short. While standardized paper-and-pencil tests have established reliability in controlled settings, they frequently lack similarity to real-world tasks and fail to adequately simulate the complexity of daily activities [3].

The emergence of VR-based assessment represents a convergence of technological advancement and psychological science. VR allows subjects to engage in real-world activities implemented in virtual environments, enabling natural movement recognition and facilitating immersion in scenarios that closely mimic daily challenges [3]. This technological evolution enables researchers and clinicians to bridge the laboratory-to-life gap, offering controlled environments that ensure safety while allowing for objective, automatic measurement and management of responses to ecologically relevant activities [3]. The fundamental thesis driving this transition is that VR-based assessments demonstrate strong concurrent validity with traditional measures while offering superior predictive value for real-world functioning.

Theoretical Foundations: From Laboratory to Life

The Limitations of Traditional Assessment

Traditional neuropsychological assessments have primarily relied on paper-and-pencil instruments administered in controlled clinical settings. These include well-established measures such as the Trail Making Test (TMT), Stroop Color-Word Test (SCWT), Wisconsin Card Sorting Test (WCST), and comprehensive batteries like the Delis-Kaplan Executive Function System (D-KEFS) and Cambridge Neuropsychological Test Automated Battery (CANTAB) [3]. While these tools have demonstrated utility in detecting cognitive impairment, their ecological limitations are increasingly recognized. They often lack similarity to real-world tasks and fail to adequately simulate the complexity of everyday activities, resulting in limited generalizability to daily functioning [3].

The fundamental issue lies in the artificial nature of traditional testing environments. Paper-and-pencil tests typically deconstruct cognitive functions into isolated components, removing the rich contextual cues, multisensory integration, and motor components inherent in real-world activities. This decomposition, while useful for identifying specific deficits, often fails to capture how these cognitive processes interact in naturalistic settings where multiple demands occur simultaneously.

Defining Ecological Validity in Neuropsychological Assessment

Ecological validity in neuropsychological assessment encompasses two distinct dimensions: verisimilitude (the degree to which test items resemble real-world tasks) and veridicality (the empirical demonstration that test performance predicts real-world functioning). Traditional assessments often sacrifice verisimilitude for standardization and reliability. VR-based assessments aim to balance these competing demands by creating standardized environments that maintain both verisimilitude and veridicality through carefully designed virtual scenarios that mimic real-world challenges while maintaining experimental control.

Executive functions, increasingly defined as separable yet interrelated components involved in goal-directed thinking and behavior, are particularly suited to ecological assessment [3]. The three key subcomponents—working memory, inhibition, and cognitive flexibility—operate in concert during daily activities, making them difficult to assess comprehensively through traditional methods that often target these components in isolation [3].

Virtual Reality Assessment: Methodological Foundations

Technological Infrastructure and Implementation

VR-based cognitive assessment leverages immersive technology to create controlled yet ecologically rich environments. The technical infrastructure typically includes:

- Head-Mounted Displays (HMDs): Provide stereoscopic visuals and head-tracking capabilities to create a sense of presence in the virtual environment.

- Motion Tracking Systems: Capture natural body movements and interactions with virtual objects.

- Spatial Audio Systems: Deliver realistic soundscapes that enhance situational awareness.

- Response Recording Systems: Automatically capture performance metrics with millisecond precision.

These technological components work in concert to create immersive scenarios that engage multiple sensory modalities while maintaining strict experimental control. The virtual environments can be precisely standardized across administrations while allowing for adaptive difficulty and complex scenario development that would be impractical or unsafe in the real world.

Key VR Assessment Platforms and Their Applications

Several VR assessment platforms have emerged with demonstrated validity in clinical research:

- Cognition Assessment in Virtual Reality (CAVIR): An immersive VR test of daily-life cognitive functions in an interactive VR kitchen scenario that has shown significant correlations with traditional neuropsychological tests and real-world functioning [3] [8].

- Virtual Reality Everyday Assessment Lab (VR-EAL): A comprehensive assessment battery conducted in a virtual apartment environment that evaluates multiple cognitive domains simultaneously.

- NeuroVR Assessment Platform: A customizable platform allowing researchers to develop domain-specific virtual environments for targeted cognitive assessment.

These platforms represent a new generation of assessment tools that preserve the psychometric rigor of traditional tests while incorporating ecological relevance through immersive scenario-based evaluation.

Comparative Analysis: Quantitative Evidence

Concurrent Validity Between VR and Traditional Assessment

A 2024 meta-analysis investigating the concurrent validity between VR-based assessments and traditional neuropsychological assessments revealed statistically significant correlations across all executive function subcomponents [3]. The analysis, which included nine studies meeting strict inclusion criteria, demonstrated that VR-based assessments show consistent relationships with established paper-and-pencil measures, supporting their validity as cognitive assessment tools.

Table 1: Concurrent Validity Between VR-Based and Traditional Neuropsychological Assessments

| Executive Function Subcomponent | Reported Association | Statistical Significance | Number of Studies |
|---|---|---|---|
| Overall Executive Function | Significant correlation | p < 0.05 | 9 |
| Cognitive Flexibility | Significant correlation | p < 0.05 | 4 |
| Attention | Significant correlation | p < 0.05 | 3 |
| Inhibition | Significant correlation | p < 0.05 | 3 |

The meta-analysis employed Comprehensive Meta-Analysis Software (CMA) Version 3, with Pearson's r values transformed into Fisher's z for analysis. Heterogeneity was evaluated using I², with random-effects models applied when heterogeneity was high (I² > 50%). Sensitivity analyses confirmed the robustness of the findings, even when lower-quality studies were excluded [3].
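The model-selection rule described above (random effects when I² exceeds 50%) can be sketched as follows. This is an illustrative implementation using Cochran's Q and the DerSimonian-Laird estimate of between-study variance, which is one common choice; the source does not state which estimator the software applied. Function names and the example inputs are hypothetical.

```python
def heterogeneity(effects, variances):
    """Return Cochran's Q, I^2 (in percent), and DerSimonian-Laird tau^2."""
    w = [1 / v for v in variances]
    sw = sum(w)
    mean_fixed = sum(wi * y for wi, y in zip(w, effects)) / sw
    q = sum(wi * (y - mean_fixed) ** 2 for wi, y in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    return q, i2, tau2

def pooled_effect(effects, variances, i2_threshold=50.0):
    """Pool effects, switching to random-effects weights when I^2 is high."""
    _, i2, tau2 = heterogeneity(effects, variances)
    use_random = i2 > i2_threshold
    extra = tau2 if use_random else 0.0  # between-study variance added to each study
    w = [1 / (v + extra) for v in variances]
    estimate = sum(wi * y for wi, y in zip(w, effects)) / sum(w)
    return estimate, i2, "random" if use_random else "fixed"

# Hypothetical Fisher z effects and variances for illustration only.
estimate, i2, model = pooled_effect([0.1, 0.5, 0.9], [0.01, 0.01, 0.01])
print(f"I^2 = {i2:.1f}%, model = {model}, pooled z = {estimate:.2f}")
```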

Ecological Validity and Real-World Functioning

The critical advantage of VR-based assessment emerges in its relationship to real-world functioning. Research with the CAVIR platform demonstrates its value in predicting daily-life functional capacity in clinical populations.

Table 2: Ecological Validity of CAVIR vs. Traditional Measures in Mood and Psychosis Spectrum Disorders

| Assessment Method | Correlation with ADL Process Ability | Statistical Significance | Sensitivity to Cognitive Impairment |
|---|---|---|---|
| CAVIR (VR Kitchen Scenario) | r(45) = 0.40 | p < 0.01 | Sensitive to impairment; able to differentiate employment capacity |
| Traditional Neuropsychological Tests | Not significant | p ≥ 0.09 | Limited sensitivity to real-world functioning |
| Interviewer-Rated Functional Capacity | Not significant | p ≥ 0.09 | Limited association with actual ADL performance |
| Subjective Cognition Reports | Not significant | p ≥ 0.09 | Poor correlation with objective ADL ability |

A study published in the Journal of Affective Disorders (2025) involving 70 patients with mood or psychosis spectrum disorders and 70 healthy controls found that better CAVIR performance showed a weak to moderate association with better Activities of Daily Living (ADL) process ability in patients (r(45) = 0.40, p < 0.01), even after adjusting for sex and age [8]. In contrast, traditional neuropsychological performance, interviewer- and performance-based functional capacity, and subjective cognition were not significantly associated with ADL process ability [8].
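The reported statistic can be sanity-checked with the standard conversion from a correlation to a t value, t = r·sqrt(df) / sqrt(1 - r²). The sketch below takes the reported r and degrees of freedom at face value; because the published estimate was adjusted for sex and age, the study's exact test may differ. The critical value is an approximate figure from standard t tables, not from the study.

```python
import math

def t_from_r(r, df):
    """Convert a correlation with df degrees of freedom to a t statistic."""
    return r * math.sqrt(df) / math.sqrt(1 - r ** 2)

t_value = t_from_r(0.40, 45)

# Approximate two-tailed critical t for alpha = .01 with 45 df (standard tables).
T_CRIT_ALPHA_01_DF45 = 2.690

print(f"t(45) = {t_value:.2f}; exceeds critical value: {t_value > T_CRIT_ALPHA_01_DF45}")
```

A t value of about 2.93 on 45 degrees of freedom exceeds the tabled threshold, which is consistent with the reported p < 0.01.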

Experimental Protocols and Methodologies

Protocol 1: CAVIR Assessment for Executive Functions

The Cognition Assessment in Virtual Reality (CAVIR) test represents a methodological advancement in ecological assessment with the following experimental protocol:

- Environment: Immersive VR kitchen scenario with interactive elements including cabinets, appliances, and cooking utensils.

- Participants: Symptomatically stable patients with mood or psychosis spectrum disorders and matched healthy controls.

- Procedure: Participants complete a series of goal-directed tasks in the virtual kitchen, such as following a recipe, managing multiple cooking tasks simultaneously, and responding to unexpected events.

- Primary Metrics: Task completion time, errors (omission and commission), efficiency of movement, sequencing accuracy, and compliance with safety procedures.

- Comparison Measures: Standard neuropsychological tests including Trail Making Test B (TMT-B), CANTAB tasks, and verbal fluency tests.

- Functional Outcome Measures: Assessment of Motor and Process Skills (AMPS) to evaluate real-world activities of daily living.

- Statistical Analysis: Spearman's correlations between CAVIR performance, neuropsychological test scores, and ADL ability; group comparisons between patients and controls; sensitivity and specificity analyses for detecting cognitive impairment [8].
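The statistical analysis step in this protocol can be sketched with two small helpers: a rank-based (Spearman) correlation and a sensitivity/specificity calculation at a score cutoff. This is a minimal illustration under stated assumptions; the function names, the cutoff rule (scores below the cutoff are flagged as impaired), and the example data are hypothetical and do not reproduce the analysis in [8].

```python
import math

def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result

def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

def sensitivity_specificity(scores, impaired, cutoff):
    """Flag scores below cutoff as impaired and compare with reference labels.

    Assumes both impaired and unimpaired cases are present.
    """
    tp = sum(1 for s, imp in zip(scores, impaired) if imp and s < cutoff)
    fn = sum(1 for s, imp in zip(scores, impaired) if imp and s >= cutoff)
    tn = sum(1 for s, imp in zip(scores, impaired) if not imp and s >= cutoff)
    fp = sum(1 for s, imp in zip(scores, impaired) if not imp and s < cutoff)
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical VR scores and ADL scores for illustration only.
rho = spearman([1, 2, 3, 4], [1, 4, 9, 16])
sens, spec = sensitivity_specificity([10, 16, 20, 14], [True, True, False, False], cutoff=15)
```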

This protocol demonstrates how VR assessment captures the multidimensional nature of real-world cognitive challenges while maintaining standardized administration and objective scoring.

Protocol 2: VR-Based Assessment of Learning and Knowledge Transfer

Research on VR learning environments for specialized training domains reveals important methodological considerations for assessment:

- Design Framework: "Design for learning" approach examining interrelationships between human factors, pedagogy, and VR learning affordances.

- Comparison Condition: Traditional textbook-based learning covering identical factual and conceptual knowledge.

- Assessment Metrics: Pre/post-intervention knowledge tests, intrinsic motivation scales, situational interest questionnaires, self-efficacy measures.

- VR-Specific Measures: Presence, agency, cognitive load, embodiment, user experience (pragmatic and hedonic qualities).

- Participant Monitoring: Semi-structured interviews and observation notes to capture qualitative aspects of the learning experience.

- Analysis Approach: Quantitative analysis of knowledge test results and questionnaire data supported by qualitative data from interviews [9].

This comprehensive assessment approach reveals that while textbook-based learning may more effectively transfer factual and conceptual knowledge, VR environments generate higher levels of intrinsic motivation and situational interest—affective factors crucial for long-term engagement and skill application [9].

Visualizing the Assessment Paradigm Shift

Figure 1: Conceptual Framework of Ecological Validity in Assessment Approaches

Table 3: Research Reagent Solutions for VR-Based Cognitive Assessment

| Tool/Platform | Primary Function | Research Application | Key Features |
|---|---|---|---|
| CAVIR | Cognition assessment in virtual reality kitchen | Evaluating daily-life cognitive skills in clinical populations | Correlates with neuropsychological performance and ADL ability [8] |
| Immersive HMDs | Visual and auditory immersion | Creating presence in virtual environments | Head tracking, stereoscopic display, integrated audio |
| Motion Tracking Systems | Capturing movement and interaction | Quantifying naturalistic behavior in virtual spaces | Position tracking, gesture recognition, controller input |
| Comprehensive Meta-Analysis Software | Statistical analysis of effect sizes | Synthesizing validity evidence across studies | Effect size calculation, heterogeneity analysis, bias detection [3] |
| QUADAS-2 Checklist | Quality assessment of diagnostic accuracy studies | Evaluating methodological rigor of validation studies | Risk of bias assessment, applicability concerns [3] |

Implications for Research and Clinical Practice

Advancing Clinical Trials and Drug Development

The enhanced ecological validity of VR-based assessment has significant implications for clinical trials in neuropsychiatric disorders and cognitive-enhancing interventions. By providing more sensitive and functionally relevant outcome measures, VR assessment can:

- Detect Subtle Treatment Effects: Capture cognitive improvements that translate to real-world functioning, potentially revealing efficacy that traditional measures might miss.

- Reduce Sample Size Requirements: Through increased sensitivity to clinically meaningful changes, VR assessment may enable smaller, more efficient trial designs.

- Demonstrate Functional Benefits: Provide evidence of treatment effects on daily-life activities, supporting value propositions for new therapeutic agents.

- Facilitate Personalized Medicine: Identify specific cognitive profiles that predict treatment response based on performance in ecologically relevant scenarios.
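The sample-size point can be made concrete with the usual normal-approximation formula for a two-arm comparison of means, n per group = 2(z₁₋α/₂ + z₁₋β)² / d². The sketch below is illustrative only: the effect sizes are hypothetical, and the source does not quantify how much more sensitive a VR endpoint would be than a traditional one.

```python
import math
from statistics import NormalDist

def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate participants per arm to detect a standardized effect d.

    Uses the normal approximation for a two-sided, two-sample comparison.
    """
    z = NormalDist().inv_cdf
    n = 2 * (z(1 - alpha / 2) + z(power)) ** 2 / d ** 2
    return math.ceil(n)

# Hypothetical standardized effects: a less sensitive vs. a more sensitive endpoint.
for d in (0.4, 0.5):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

Under these assumptions, an endpoint that raises the detectable standardized effect from 0.4 to 0.5 lowers the requirement from roughly 99 to 63 participants per arm.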

Future Directions and Methodological Considerations

As VR-based assessment evolves, several key areas require further development:

- Standardization Across Platforms: Establishment of common metrics and administration protocols to enable cross-study comparisons.

- Cultural Adaptation: Development of culturally relevant virtual environments for global research applications.

- Longitudinal Validation: Studies examining the predictive validity of VR assessment for long-term functional outcomes.

- Integration with Biomarkers: Combining VR performance data with neurophysiological and neuroimaging measures for multimodal assessment.

- Accessibility and Implementation: Addressing practical barriers to widespread adoption in research and clinical settings.

The methodological rigor demonstrated in recent studies—including systematic literature searches, strict inclusion criteria, quality assessment using QUADAS-2, and comprehensive statistical analysis—provides a template for future validation studies [3].

The transition from paper-and-pencil assessment to virtual environments represents a paradigm shift in cognitive evaluation, driven by the imperative for greater ecological validity. Substantial evidence now supports the concurrent validity of VR-based assessments with traditional neuropsychological measures, while demonstrating superior relationships with real-world functioning [3] [8]. As research methodologies continue to evolve and technology becomes more sophisticated and accessible, VR-based assessment is poised to transform how researchers and clinicians evaluate cognitive function, ultimately bridging the critical gap between laboratory measurement and everyday life performance.

For researchers in clinical trials and drug development, these advanced assessment tools offer the potential to more effectively capture the functional impact of interventions, demonstrating treatment effects that matter to patients' daily lives. The integration of ecological validity with methodological rigor positions VR assessment as an essential component of next-generation cognitive evaluation in both research and clinical practice.

Executive functions (EFs) are higher-order cognitive abilities essential for managing goal-directed tasks across various aspects of daily life. The accurate assessment of these functions is critical in both clinical and research settings, as impairments can significantly undermine academic performance, reduce the ability to carry out independent activities of daily living, and negatively affect disease management [3]. Traditional neuropsychological assessments have primarily relied on paper-and-pencil tests conducted in controlled laboratory environments. However, these methods lack similarity to real-world tasks and fail to adequately simulate the complexity of everyday activities, resulting in low ecological validity and limited generalizability to real-life functioning [3].

The Multiple Errands Test (MET) represents a significant advancement in addressing these limitations by assessing executive functions within realistic daily living contexts. This assessment approach aligns with the growing recognition that executive functions comprise separable yet interrelated components—including working memory, inhibition, and cognitive flexibility—that work together to support complex cognitive tasks [3]. With the emergence of virtual reality (VR) technologies, researchers have developed VR-based versions of the MET that further enhance its utility by providing standardized, controlled environments that simulate real-world demands while maintaining experimental rigor.

Core Executive Function Constructs Measured by the MET

Cognitive Flexibility and Task Switching

Cognitive flexibility, a core executive function component, refers to the mental ability to switch between thinking about different concepts or to simultaneously think about multiple concepts. The MET effectively evaluates this construct by requiring participants to adapt to changing task demands, shift between sub-tasks efficiently, and modify strategies in response to environmental feedback. Within the MET framework, cognitive flexibility is operationalized through tasks that necessitate rapid behavioral adjustments and mental set shifting, mirroring the cognitive demands encountered in daily life situations where individuals must juggle multiple competing tasks [3].

The MET's approach to assessing cognitive flexibility demonstrates superior ecological validity compared to traditional measures like the Wisconsin Card Sorting Test (WCST) or Trail Making Test (TMT). By embedding cognitive flexibility demands within realistic task scenarios, the MET captures not only the efficiency of cognitive switching but also the application of this ability in contexts that closely resemble real-world challenges [3].

Planning and Organization

Planning and organization represent fundamental executive processes that enable individuals to develop and implement effective strategies for achieving goals. The MET comprehensively assesses these abilities by requiring participants to formulate multi-step plans, organize task sequences logically, and execute activities in a structured manner. The test environment, whether physical or virtual, presents participants with multiple tasks that must be completed within specific constraints, thereby demanding sophisticated planning abilities that traditional discrete tasks cannot capture [10].

In MET protocols, planning capacity is measured through metrics such as the logical sequencing of tasks, efficiency of route planning when physical navigation is required, and the effective allocation of resources including time. These measurements provide insights into an individual's ability to manage complex, multi-component tasks similar to those encountered in instrumental activities of daily living such as meal preparation, medication management, and financial organization [10].

Working Memory and Task Monitoring

Working memory, the system responsible for temporarily storing and manipulating information, is critically engaged throughout MET performance. Participants must retain task instructions, monitor completed and pending tasks, and keep track of evolving rules and constraints while executing multiple errands. This continuous demand on working memory resources mirrors the cognitive load experienced in real-world scenarios where individuals must maintain and manipulate information while engaging in goal-directed behavior [3].

The MET's assessment of working memory differs significantly from traditional laboratory tasks like digit span or n-back tests by placing working memory demands within the context of functionally relevant activities. This approach provides valuable information about how working memory capacities translate to performance in everyday situations, offering enhanced predictive validity for real-world functioning [3].

Inhibitory Control and Rule Adherence

Inhibitory control, the ability to suppress dominant or automatic responses when necessary, is systematically evaluated through the MET's structured rule systems. Participants must resist instinctive approaches to task completion, adhere to specified restrictions, and inhibit prepotent responses that would violate test constraints. This component assesses the integrity of frontally-mediated inhibitory mechanisms that are crucial for appropriate social and functional behavior across daily contexts [3].

Rule violations and error types during MET administration provide rich qualitative data about the nature of inhibitory deficits, distinguishing between impulsive responding, perseverative behavior, and difficulties with rule maintenance. This nuanced assessment surpasses the capabilities of traditional inhibition measures such as the Stroop Color-Word Test, which evaluates inhibition in a more decontextualized manner [3].

Virtual Reality MET: Paradigm Advancement and Concurrent Validity

Technological Implementation and Ecological Verisimilitude

Virtual reality platforms for administering the MET represent a significant methodological advancement that preserves the ecological validity of the original assessment while enhancing standardization and measurement precision. These systems create immersive virtual environments that simulate real-world settings such as kitchens, supermarkets, and community spaces, allowing for the assessment of executive functions within contexts that closely mirror daily challenges [3] [10].

The CAVIR (Cognition Assessment in Virtual Reality) system exemplifies this approach, presenting participants with an interactive VR kitchen scenario that requires the execution of multi-step tasks similar to those in traditional MET protocols. These VR environments maintain high levels of verisimilitude—the degree to which cognitive demands mirror those encountered in naturalistic environments—while enabling precise automated measurement of performance metrics [3] [10].

Psychometric Properties and Validation Evidence

Recent meta-analytic evidence supports the concurrent validity of VR-based assessments of executive function, including VR adaptations of the MET paradigm. A comprehensive meta-analysis investigating the relationship between VR-based assessments and traditional neuropsychological measures revealed statistically significant correlations across all executive function subcomponents, including cognitive flexibility, attention, and inhibition [3].

Table 1: Concurrent Validity Coefficients Between VR-Based and Traditional EF Measures

| EF Component | Effect Size Correlation | Statistical Significance | Number of Studies |

|---|---|---|---|

| Overall Executive Function | Moderate to Large | p < 0.001 | 9 |

| Cognitive Flexibility | Significant | p < 0.05 | Multiple |

| Attention | Significant | p < 0.05 | Multiple |

| Inhibition | Significant | p < 0.05 | Multiple |

Sensitivity analyses confirmed the robustness of these findings, with effect sizes remaining significant even when lower-quality studies were excluded from analysis. Of 1605 initially identified articles, 9 studies fully met the inclusion criteria and were included in the meta-analysis [3].

Additional validation research using specific VR systems further supports their psychometric properties. The CAVIRE-2 system, which assesses six cognitive domains through 13 virtual scenarios, demonstrated moderate concurrent validity with the Montreal Cognitive Assessment (MoCA) and good test-retest reliability with an Intraclass Correlation Coefficient of 0.89 [10]. The system also showed strong discriminative ability for identifying cognitive impairment, with an area under the curve (AUC) of 0.88, sensitivity of 88.9%, and specificity of 70.5% at the optimal cut-off score [10].

Table 2: Psychometric Properties of VR-Based Cognitive Assessment Systems

| Psychometric Property | Measure/Result | Comparison Instrument |

|---|---|---|

| Concurrent Validity | Moderate correlation | MoCA |

| Test-Retest Reliability | ICC = 0.89 | Test-retest interval |

| Internal Consistency | Cronbach's α = 0.87 | Item analysis |

| Discriminative Ability | AUC = 0.88 | Cognitively normal vs. impaired |

| Sensitivity | 88.9% | At optimal cut-off |

| Specificity | 70.5% | At optimal cut-off |
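The discriminative statistics reported above (AUC, sensitivity, and specificity at an optimal cut-off) can be illustrated with a short sketch. It is not the CAVIRE-2 analysis: the function name `roc_summary`, the convention that lower scores indicate impairment, and the use of Youden's J to pick the cut-off are all assumptions made for illustration, and the scores in the usage lines are made-up values.

```python
def roc_summary(scores, impaired):
    """Return (AUC, best cut-off info) for a score where LOWER means worse.

    scores:   list of numeric test scores (hypothetical data)
    impaired: list of booleans, True for participants with known impairment
    A participant is classified as impaired when score <= cutoff.
    """
    pos = [s for s, y in zip(scores, impaired) if y]
    neg = [s for s, y in zip(scores, impaired) if not y]

    # AUC via the Mann-Whitney statistic: probability that a random impaired
    # participant scores lower than a random unimpaired one (ties count 0.5).
    wins = sum((p < n) + 0.5 * (p == n) for p in pos for n in neg)
    auc = wins / (len(pos) * len(neg))

    # Try every observed score as a cut-off and keep the largest Youden's J
    # (sensitivity + specificity - 1). This selection rule is an assumption.
    best = None
    for cut in sorted(set(scores)):
        sens = sum(p <= cut for p in pos) / len(pos)
        spec = sum(n > cut for n in neg) / len(neg)
        j = sens + spec - 1
        if best is None or j > best["youden_j"]:
            best = {"cutoff": cut, "sensitivity": sens,
                    "specificity": spec, "youden_j": j}
    return auc, best


# Hypothetical scores: three impaired and three unimpaired participants.
auc, best = roc_summary([10, 15, 20, 12, 18, 25],
                        [True, True, True, False, False, False])
```

Other cut-off rules (for example, fixing a minimum sensitivity) would give different operating points, so a validation report should state which rule was used.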

Comparative Experimental Protocols and Methodologies

VR-MET Implementation Protocols

The implementation of MET paradigms within virtual reality follows standardized protocols that balance ecological validity with experimental control. Typical VR-MET sessions involve:

Environment Setup: Participants don VR headsets and controllers, with systems calibrated to ensure optimal tracking and immersion.

Instruction Phase: Clear task instructions are provided, often including practice trials to familiarize participants with the VR interface.

Task Execution: Participants complete a series of errands or tasks within the virtual environment, such as purchasing specific items in a virtual store while adhering to rules and constraints.

Performance Monitoring: The system automatically records multiple performance metrics, including completion time, errors, rule violations, and efficiency measures.

Post-Test Assessment: Participants may complete traditional neuropsychological tests or provide subjective feedback about their VR experience [3] [10].
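The performance-monitoring step can be pictured as a time-stamped event log from which summary metrics are derived. The sketch below is a hypothetical illustration only: the `Event` class, the event kinds, and `summarize_session` are invented names, not part of any VR-MET system described in the cited studies.

```python
from dataclasses import dataclass


@dataclass
class Event:
    t: float          # seconds since the session started
    kind: str         # "task_done", "error", or "rule_violation"
    detail: str = ""  # free-text label, e.g. the errand or rule involved


def summarize_session(events, n_tasks):
    """Derive summary metrics from a time-stamped event log."""
    done = [e for e in events if e.kind == "task_done"]
    completed = len({e.detail for e in done})
    # Completion time is only defined when every errand was finished.
    finished = bool(done) and completed == n_tasks
    return {
        "tasks_completed": completed,
        "completion_time_s": max(e.t for e in done) if finished else None,
        "errors": sum(e.kind == "error" for e in events),
        "rule_violations": sum(e.kind == "rule_violation" for e in events),
    }


# Hypothetical log for a two-errand session.
log = [
    Event(12.0, "task_done", "buy bread"),
    Event(30.5, "rule_violation", "entered shop without buying"),
    Event(41.0, "error", "picked wrong item"),
    Event(58.2, "task_done", "buy milk"),
]
summary = summarize_session(log, n_tasks=2)
```

Keeping the raw log, rather than only the totals, leaves room to compute additional measures later without re-testing participants.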

The CAVIRE-2 system exemplifies this approach with its 14 discrete scenes, including one starting tutorial session and 13 virtual scenes simulating both basic and instrumental activities of daily living in familiar settings. This comprehensive assessment can be completed in approximately 10 minutes, demonstrating the efficiency of well-designed VR assessment platforms [10].

Validation Study Designs

Validation research for VR-based MET assessments typically employs cross-sectional designs comparing performance between well-characterized clinical and control groups. Key methodological elements include:

Participant Recruitment: Studies typically include both healthy participants and individuals with known executive function deficits (e.g., mild cognitive impairment, ADHD, Parkinson's disease).

Counterbalanced Administration: Traditional and VR-based assessments are administered in counterbalanced order to control for practice effects and fatigue.

Blinded Assessment: Researchers administering traditional assessments are often blinded to VR performance results, and vice versa.

Comprehensive Statistical Analysis: Analyses include correlation analyses between assessment modalities, group comparison analyses, receiver operating characteristic (ROC) analyses for diagnostic accuracy, and reliability analyses [3] [10].
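Counterbalanced administration can be sketched as a reproducible random assignment of participants to one of two test orders. This is a minimal illustration under assumed conventions: the function name, the order labels, and the seeding scheme are not taken from the cited studies.

```python
import random


def counterbalance(participant_ids, seed=0):
    """Assign each participant to 'VR-first' or 'traditional-first'.

    Participants are shuffled with a fixed seed (so the allocation can be
    reproduced) and then alternated between the two orders, which keeps the
    group sizes within one participant of each other.
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    orders = ("VR-first", "traditional-first")
    return {pid: orders[i % 2] for i, pid in enumerate(ids)}


# Hypothetical sample of ten participants.
allocation = counterbalance(range(1, 11), seed=42)
```

In studies with clinical and control groups, the same procedure would typically be run separately within each group so that test order is balanced in both.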

This methodological rigor ensures that validity evidence meets established standards for neuropsychological assessment tools and supports the use of VR-based MET implementations in both research and clinical applications.

Visualizing the VR-MET Assessment Framework

The following diagram illustrates the conceptual framework and experimental workflow of VR-based Multiple Errands Test assessment:

VR MET Assessment Framework - This diagram illustrates the core executive function constructs measured by the Multiple Errands Test, the assessment environments, performance metrics, and validity evidence supporting VR implementations.

The Researcher's Toolkit: Essential Methodological Components

Table 3: Research Reagent Solutions for VR MET Implementation

| Tool/Component | Function/Application | Implementation Example |

|---|---|---|

| Immersive VR Headset | Creates controlled virtual environments for assessment | Head-mounted displays with motion tracking capabilities |

| VR Controllers | Enables natural interaction with virtual objects | Motion-tracked handheld devices with input buttons |

| Virtual Environment Software | Presents realistic scenarios for EF assessment | Custom-designed virtual kitchens, supermarkets, or community spaces |

| Automated Scoring Algorithms | Objectively quantifies performance metrics | Software that records completion time, errors, and efficiency measures |

| Traditional Neuropsychological Tests | Provides validation criteria for concurrent validity | Trail Making Test, Stroop Test, Wisconsin Card Sorting Test |

| Data Recording Systems | Captures comprehensive performance data | Integrated systems that log user interactions, timing, and errors |

The Multiple Errands Test represents a significant advancement in the ecological assessment of executive functions, with virtual reality implementations offering enhanced standardization, precision, and practical utility. Substantial evidence supports the concurrent validity of VR-based MET assessments with traditional executive function measures, while simultaneously addressing the ecological limitations of conventional neuropsychological tests [3] [10].

For researchers and drug development professionals, VR-based MET protocols provide sensitive tools for detecting executive function deficits and monitoring intervention effects within contexts that closely mirror real-world functional demands. The continuing refinement of these assessment technologies promises to further bridge the gap between laboratory-based cognitive assessment and the complex cognitive demands of daily life, offering enhanced predictive validity for functional outcomes across clinical populations.

Executive functions are higher-order cognitive processes essential for managing the complex, multi-task demands of everyday life. Traditional neuropsychological assessments, while valuable, often lack ecological validity, meaning they fail to adequately simulate the complexity of real-world activities and have limited generalizability to daily functioning [3]. The Multiple Errands Test (MET) was developed precisely to address this gap. It is a performance-based assessment designed to evaluate how deficits in executive functions manifest during everyday activities by having participants complete a series of real-world tasks under a set of specific rules [11] [12]. Originally developed by Shallice and Burgess in 1991, the MET was born from the observation that some patients with frontal lobe lesions performed well on standardized tests yet experienced significant difficulties in their daily lives [11] [12]. The test was theoretically grounded in Norman and Shallice's Supervisory Attentional System (SAS) model, which describes the cognitive system responsible for monitoring plans and actions in novel, non-routine situations [12]. By creating a complex, low-structure environment, the MET provides a window into a person's ability to plan, organize, and manage competing demands in a way that closely mirrors real-life challenges.

The Evolution of the MET: From Shopping Precinct to Standardized Versions

The original MET, administered in a pedestrian shopping precinct, required participants to complete eight written tasks. These included six simple errands (e.g., purchasing specific items), one time-dependent task, and one more demanding task involving obtaining and writing down four pieces of information [11]. Performance was evaluated based on the number and type of errors, such as rule breaks, inefficiencies, interpretation failures, and task failures [11]. The success and clinical utility of the original MET led to the development of numerous adaptations to suit different environments and populations. However, the need for site-specific modifications made it difficult to establish standardized psychometric properties and compare results across studies [12]. This drove efforts to create more uniform versions.

Table: Key Versions of the Multiple Errands Test

| Version Name | Environment | Key Features & Modifications | Primary Population |

|---|---|---|---|

| Original MET [11] | Shopping Precinct | 8 tasks; 6 simple errands, 1 time-based task, 1 complex 4-subtask activity. | Acquired Brain Injury (ABI) |

| MET-Hospital Version (MET-HV) [11] | Hospital Grounds | 12 subtasks; more concrete rules, simpler tasks. | Wider range of participants, including ABI |

| MET-Simplified Version (MET-SV) [11] | Small Shopping Plaza | 12 tasks; more explicit rules, simplified demands, space for recording information. | Neurologically impaired adults |

| Baycrest MET (BMET) [11] | Hospital/Research Center | 12 items, 8 rules; standardized scoring and manualized administration. | Acquired Brain Injury |

| Big-Store MET [12] | Large Department Store | Standardized for use in large chain stores without site-specific modifications. | Community-dwelling adults (ABI and healthy) |

| Virtual MET (VMET) [11] | Virtual Reality Environment | Video-capture virtual supermarket; safe, controlled, and objective measurement. | Patients with motor or mobility impairments |

| MET-Home [12] | Home Environment | First version usable across different sites without adaptation. | Stroke, ABI |

| Paper MET [13] | Clinical Setting (Imagined) | Simplified paper version using a map of an imaginary city; low cost and highly applicable. | Schizophrenia, Bipolar Disorder, Autism |

The core principle unifying these versions is the requirement to complete multiple simple tasks (often purchasing items, collecting information, and meeting at a specified time) while adhering to a set of rules, such as spending as little money as possible, not entering a shop without buying something, and not using aids other than a watch [11] [14]. The proliferation of these versions underscores the clinical value of the MET while also highlighting the historical challenge of achieving standardization.

The Shift to Virtual Reality: The Virtual MET

The transition of the MET into virtual environments represents a significant advancement in ecological assessment. The Virtual MET (VMET) was developed within a functional video-capture virtual supermarket, maintaining the same number of tasks as the hospital version but replacing the meeting task with checking the contents of a shopping cart at a particular time [11]. This shift to VR offers several key advantages. It provides a safe and controlled environment where patients can be assessed without the risks associated with community ambulation. It also allows for highly standardized administration across different clinics, overcoming the site-specific limitations of physical versions. Furthermore, VR enables the precise and objective measurement of behavior, including metrics like navigation paths and time on task, which can be difficult to capture reliably in a real-world setting [11] [3]. A critical benefit is that the VMET can be used with individuals who have motor impairments that would preclude them from the extensive ambulation required by physical versions of the test [14].

Concurrent Validity: Examining the Relationship Between VR MET and Real-World Functioning

For any new assessment tool to be adopted, it must demonstrate strong psychometric properties, particularly concurrent validity—the degree to which a new test correlates with an established one when administered at the same time [3]. A 2024 meta-analysis systematically investigated this by analyzing the correlation between VR-based assessments of executive function and traditional neuropsychological tests [3]. The analysis focused on subcomponents of executive function, revealing statistically significant correlations between VR-based assessments and traditional measures across all subcomponents, including cognitive flexibility, attention, and inhibition [3]. The robustness of these findings was confirmed through sensitivity analyses. This supports the use of VR-based assessments, including the VMET, as a valid alternative to traditional methods for evaluating executive function [3].

Table: Concurrent Validity of VR-Based Executive Function Assessments vs. Traditional Tests [3]

| Executive Function Subcomponent | Correlation with Traditional Measures | Key Findings from Meta-Analysis |

|---|---|---|

| Overall Executive Function | Statistically Significant | Significant correlations support VR as a valid assessment tool. |

| Cognitive Flexibility | Statistically Significant | |

| Attention | Statistically Significant | Results were robust in sensitivity analyses, even when lower-quality studies were excluded. |

| Inhibition | Statistically Significant | |

Specific studies on MET versions further reinforce this validity. For instance, the Big-Store MET demonstrated moderate to large effect sizes (d = 0.48-1.06) in distinguishing between adults with acquired brain injury and healthy controls, providing evidence for its known-group validity [15]. Furthermore, performance on the Paper MET, a simplified version, has shown strong associations with essential psychosocial outcomes, including quality of life, well-being, and self-esteem, in large cohorts of patients with schizophrenia, bipolar disorder, and autism spectrum disorder [13]. This demonstrates that the MET, even in non-VR forms, captures deficits that are meaningfully linked to real-world community living.
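For readers less familiar with the effect sizes quoted above, Cohen's d expresses the difference between two group means in units of their pooled standard deviation. The sketch below uses the common pooled-variance form; whether the cited study used exactly this variant is an assumption, and the scores are made-up values.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample variance."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * variance(group_a)
                  + (nb - 1) * variance(group_b)) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


# Hypothetical error counts for a clinical group and a control group.
d = cohens_d([2, 4, 6], [1, 3, 5])
```

By the usual rule of thumb, values near 0.5 are described as moderate and values near 0.8 or above as large, which matches the "moderate to large" wording used for the 0.48-1.06 range.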

Experimental Protocols and Methodologies

To illustrate the research underpinning the MET's development and validation, here are the methodologies from two key studies.

Study 1: Development of the Big-Store MET [12]

- Aim: To develop a standardized community version of the MET for use in large department stores without site-specific modifications and establish its feasibility and reliability.

- Phase 1 - Content Validity: Scientists and clinicians with expertise in the MET (n=4) reviewed the proposed Big-Store version and evaluated its consistency with previously published versions.

- Phase 2 - Feasibility and Reliability:

  - Participants: A convenience sample of 14 community-dwelling adults who self-reported as healthy.

  - Procedure: Participants were assessed by two trained raters simultaneously administering the Big-Store MET.

  - Data Analysis: Feasibility was assessed based on completion time (within 30 minutes), score variability, and participant acceptability. Inter-rater reliability was calculated using Intraclass Correlation Coefficients (ICCs).

- Outcome: The study found the Big-Store MET to be feasible and demonstrated excellent inter-rater reliability (ICCs = 0.92–0.99) for most performance scores.
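The inter-rater reliability analysis can be illustrated with a small ICC computation. The source does not state which ICC form was used, so the sketch below assumes ICC(2,1) in the Shrout and Fleiss scheme (two-way random effects, absolute agreement, single rater). The function name and the ratings are hypothetical.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: list of rows, one row per participant, one column per rater.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(r) / k for r in ratings]
    col_means = [sum(r[j] for r in ratings) / n for j in range(k)]

    # Partition the total sum of squares into participants, raters, residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for r in ratings for x in r)
    ss_err = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)              # between-participant mean square
    msc = ss_cols / (k - 1)              # between-rater mean square
    mse = ss_err / ((n - 1) * (k - 1))   # residual mean square
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# Hypothetical error counts from two raters scoring the same five sessions.
icc = icc_2_1([[3, 3], [5, 6], [2, 2], [8, 7], [4, 4]])
```

Because this form penalizes systematic differences between raters as well as random disagreement, it is a conservative choice when scores from different raters are meant to be interchangeable.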

Study 2: Meta-Analysis of VR-Based and Traditional Executive Function Assessments [3]

- Aim: To investigate the concurrent validity between VR-based assessments and traditional neuropsychological assessments of executive function.

- Literature Search: Conducted in February 2024 across PubMed, Web of Science, and ScienceDirect databases from 2013 to 2023, identifying 1605 articles.

- Study Selection: Following PRISMA guidelines, nine articles met the full inclusion criteria (e.g., used VR to assess executive function, provided correlation data with traditional tests).

- Data Analysis: Pearson's r values were transformed into Fisher's z for analysis. Heterogeneity was assessed using I², with a random-effects model applied due to high heterogeneity. Effect sizes for overall executive function and subcomponents (cognitive flexibility, attention, inhibition) were analyzed.

- Quality Assessment: The quality of included studies was assessed independently by two reviewers using the QUADAS-2 checklist.
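The data-analysis steps in the meta-analysis (Fisher's z transformation, I² heterogeneity, and random-effects pooling) can be sketched as follows. The source does not name the between-study variance estimator, so the DerSimonian-Laird estimator used here is an assumption, as are the function name and the example correlations and sample sizes.

```python
import math


def pool_correlations(rs, ns):
    """Pool Pearson r values with a random-effects model on Fisher's z scale."""
    # Fisher's z transform; its sampling variance is 1 / (n - 3).
    zs = [math.atanh(r) for r in rs]
    vs = [1.0 / (n - 3) for n in ns]

    # Fixed-effect weights and Cochran's Q statistic.
    w = [1.0 / v for v in vs]
    z_fixed = sum(wi * zi for wi, zi in zip(w, zs)) / sum(w)
    q = sum(wi * (zi - z_fixed) ** 2 for wi, zi in zip(w, zs))
    df = len(rs) - 1

    # I^2: percentage of total variability attributed to heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    # Between-study variance tau^2 (DerSimonian-Laird; assumed estimator).
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights, pooled estimate, and 95% confidence interval.
    w_re = [1.0 / (v + tau2) for v in vs]
    z_re = sum(wi * zi for wi, zi in zip(w_re, zs)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    lo, hi = z_re - 1.96 * se, z_re + 1.96 * se

    # Back-transform from z to the correlation scale.
    return {"r": math.tanh(z_re), "ci": (math.tanh(lo), math.tanh(hi)),
            "i2": i2, "tau2": tau2}


# Hypothetical study-level correlations and sample sizes.
pooled = pool_correlations([0.35, 0.52, 0.61, 0.28], [40, 55, 32, 80])
```

When the studies agree closely, tau² shrinks to zero and the result coincides with a fixed-effect analysis. With high heterogeneity, the random-effects weights become more equal across studies and the confidence interval widens.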

Figure 1: Conceptual Framework of MET and Ecological Validity. This diagram illustrates the theoretical basis of the MET. It is designed to capture executive dysfunction as it manifests in a naturalistic, multi-task context. Performance on the MET's core components (task completion, rule adherence, and strategy use) is theorized to be a better predictor of real-world functioning than traditional neuropsychological (NP) tests and has been empirically linked to key psychosocial outcomes [12] [13].

The Scientist's Toolkit: Essential Research Reagents and Materials

Table: Key Materials and Tools for MET Research

| Tool / Material | Function in MET Research | Example from Search Results |

|---|---|---|

| Real-World Testing Environment | Provides the novel, unpredictable context necessary to observe real-world executive function. | Shopping precinct, hospital grounds, large department store [11] [12]. |

| Virtual Reality System & Software | Creates a safe, controlled, and standardized environment for administering the VMET; enables precise data capture. | GestureTek IREX system for VMET; Meta Horizon Studio for VR environment creation [11] [16]. |

| Standardized Instruction & Scoring Sheets | Ensures consistent administration and reliable recording of errors (inefficiencies, rule breaks, task failures). | Used across all versions (e.g., BMET manual, MET-HV scoring sheet) [11] [12]. |

| Traditional Neuropsychological Tests | Serves as the "gold standard" for establishing the concurrent validity of new MET versions. | Trail Making Test (TMT), Stroop Color-Word Test (SCWT), Delis-Kaplan Executive Function System (D-KEFS) [3] [14]. |

| Psychosocial Outcome Measures | Links MET performance to meaningful, real-world quality of life and community participation. | Quality of Life scales, Well-being scales, Self-Esteem measures [13]. |

Figure 2: MET Validation Workflow for New Versions. This flowchart outlines the standard methodological pathway for developing and validating new versions of the Multiple Errands Test, as exemplified by studies on the Big-Store MET and VMET [12]. The process begins with development, followed by establishing content validity through expert review, then progresses through stages of feasibility, reliability, and multiple types of validity testing before the version is ready for full application.

The Multiple Errands Test has evolved significantly from its origins as a specialized tool for assessing patients with frontal lobe lesions into a family of standardized assessments with strong ecological validity. The core concept (evaluating executive function through performance in multi-task, rule-bound, real-world scenarios) has proven robust across physical, hospital, home, and virtual environments. The transition to Virtual Reality marks a particularly promising development, offering enhanced standardization, safety, and objective measurement while maintaining the ecological validity that defines the test. Recent meta-analytic evidence supports the concurrent validity of VR-based assessments with traditional measures, strengthening the case for their role in a comprehensive cognitive assessment battery. For researchers and clinicians, the MET provides a valuable tool for understanding the real-world impact of executive dysfunction and for designing targeted rehabilitation strategies that improve functional outcomes and quality of life.

Building a Valid Instrument: Methodologies for Developing and Implementing the VR MET

Key Design Principles for a Psychometrically Sound VR MET

The pursuit of ecological validity in neuropsychological assessment has catalyzed the development of the Virtual Reality Multiple Errands Test (VR MET) and related VR-based assessment tools. These tools aim to bridge the gap between sterile clinical environments and the complexity of real-world functioning. This guide explores the key design principles for developing a psychometrically sound VR MET, framed within the critical context of establishing concurrent validity with real-world tasks. We synthesize current research and validation protocols to provide researchers and drug development professionals with an evidence-based framework for creating and evaluating VR assessments that can reliably predict patient functioning in everyday life.

Traditional neuropsychological assessments, while well-normed, often lack similarity to real-world tasks and fail to adequately simulate the complexity of everyday activities [3]. This results in low ecological validity and limited generalizability of findings to a patient's daily life. Virtual Reality (VR) technology presents a paradigm shift, allowing subjects to engage in real-world activities implemented in virtual environments [3]. A VR MET leverages this capability to create controlled, immersive simulations that can objectively and automatically measure responses to functionally relevant activities [3].

The core thesis driving VR MET development is that these tools must demonstrate strong concurrent validity—the extent to which a new test correlates with an established one when both are administered simultaneously [3]. For a VR MET, this means its outcomes should correlate significantly with both traditional neuropsychological measures and, crucially, with metrics of real-world functioning. Research has confirmed statistically significant correlations between VR-based assessments and traditional measures across multiple cognitive subcomponents, supporting their use as a valid alternative for evaluating executive function [3].

Core Design Principles for a Psychometrically Sound VR MET

Creating a VR MET that is both engaging and scientifically rigorous requires adherence to several foundational design principles.

Prioritize Psychological Fidelity Over Visual Realism

A common misconception is that high-fidelity graphics are the primary determinant of a successful simulation. Evidence suggests that psychological fidelity—the accurate representation of the perceptual and cognitive features of the real task—is far more critical for effective transfer of learning to the real world [17]. A simulation must capture the fundamental cognitive demands (e.g., planning, inhibition, cognitive flexibility) of the real-world task it aims to assess, even if the visual realism is simplified.

Ensure Ergonomic and Biomechanical Fidelity

The simulation must elicit realistic motor movements. In a VR driving assessment, for instance, this might mean incorporating a steering wheel and pedals rather than relying on handheld controllers [18]. In a rehabilitation context, it requires that movements in the virtual environment accurately reflect the user's real-world kinematics, as demonstrated in a shoulder rehabilitation study that used a gold-standard motion capture system to validate a custom VR application [19].

Build on a Foundation of Ecological Validity

The scenarios and tasks within the VR MET must be relevant to the everyday challenges faced by the target population. This is the core advantage of VR: the ability to immerse users in realistic scenarios, such as a virtual kitchen to assess daily life cognitive functions [3] or a road traffic environment to evaluate driving skills [18]. One study found that 81.25% of participants perceived their VR driving scenarios as realistic, confirming the potential for high ecological validity [18].

Integrate Robust, Multi-Modal Data Collection

A key advantage of a VR MET is the capacity to collect rich, objective data beyond simple task accuracy. This includes performance metrics (e.g., errors, time to completion), kinematic data (e.g., movement speed, coordination), and physiological responses, all captured in real-time [18]. This multi-faceted data provides a more comprehensive picture of a user's abilities than traditional pen-and-paper tests.

Design for User-Centered Acceptability

The system must be usable and acceptable to the target population. This involves minimizing VR-related side effects like simulator sickness and ensuring high usability scores. Positive user experiences foster engagement and reduce dropout rates. For example, a VR driving assessment was recommended for future use by 97.5% of participants, highlighting high acceptability [18].

Validation Methodologies: Establishing Concurrent Validity

A VR MET is only as valuable as its validated relationship with real-world functioning. The following experimental protocols and metrics are essential for establishing this link.

Core Validation Protocols

The table below summarizes the key methodologies used to validate a VR MET.

Table 1: Key Experimental Protocols for VR MET Validation

| Validation Method | Description | Key Outcome Measures | Example from Literature |
|---|---|---|---|
| Concurrent Validity Analysis | Administering the VR MET and established traditional measures simultaneously to the same participants. | Correlation coefficients (e.g., Pearson's r) between VR tasks and traditional neuropsychological tests. | A meta-analysis found significant correlations between VR-based and traditional assessments of executive function [3]. |
| Expert-Novice Paradigm | Comparing performance on the VR MET between known experts and novices in the target skill. | Significant performance differences between groups, supporting the tool's construct validity. | This method is proposed as a test of a simulation's construct validity [17]. |
| Crossover Comparison with Gold-Standard Equipment | Comparing data from the VR MET with data from gold-standard laboratory equipment. | Agreement metrics, mean absolute error, and statistical comparisons of kinematic or physiological data. | A shoulder rehab VR app was validated against a stereophotogrammetric motion capture system [19]. |
| Psychometric Comparison with Traditional Tests | Comparing scores from a VR-based psychometric test with those from traditional, standardized tests. | Correlation of scores on constructs like peripheral vision, reaction time, and motor accuracy. | A VR driver assessment showed strong correlations between its tests and critical driving skills [18]. |
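The concurrent validity analysis in Table 1 comes down to correlating paired scores from the same participants. The following is a minimal Python sketch of that calculation. The data, the variable names, and the helper `pearson_r` are hypothetical and are not taken from any of the cited studies.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length lists of paired scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired lists with at least two scores each")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: one entry per participant, both measures taken in the same session
vr_met_errors = [2, 5, 3, 8, 6, 1, 7, 4]
traditional_test_seconds = [61, 95, 70, 130, 102, 55, 118, 84]

r = pearson_r(vr_met_errors, traditional_test_seconds)
print(f"Pearson r between VR MET errors and traditional test time: {r:.2f}")
```

In a real validation study the coefficient would be reported with a confidence interval and a sample size justification. With ordinal or skewed scores, a rank-based coefficient such as Spearman's rho may be the more appropriate choice.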

Establishing Fidelity and Validity: A Workflow

The following diagram illustrates the logical workflow and key relationships in designing and validating a VR MET, based on established frameworks [17].

Quantitative Evidence for Validity

The growing body of evidence supporting VR assessments is summarized in the table below, which compiles key findings from recent studies.

Table 2: Summary of Quantitative Validation Evidence for VR-Based Assessments

| Domain / Study Focus | VR Assessment Used | Comparison / Validation Method | Key Quantitative Finding |
|---|---|---|---|
| Executive Function [3] | Various VR assessments of cognitive flexibility, attention, and inhibition | Meta-analysis of correlations with traditional paper-and-pencil tests | Statistically significant correlations were found across all executive function subcomponents. |
| Driver Assessment [18] | Custom VR platform for peripheral vision, reaction time, and precision | User surveys on realism and effectiveness | 81.25% of participants perceived scenarios as realistic; 85% agreed the system effectively measured critical driving skills. |
| Medical Education (OSCE) [20] | VR-based Objective Structured Clinical Examination (OSCE) station | Comparison with identical in-person OSCE station | The VR OSCE was rated on par with the in-person station for workload, fairness, and realism. |
| Shoulder Rehabilitation [19] | Custom VR app for post-operative shoulder exercises | Kinematic comparison with stereophotogrammetric system (gold standard) | Results for flexion and abduction showed low total mean absolute error values. |
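The shoulder rehabilitation row reports agreement with a gold-standard system as mean absolute error (MAE). As an illustration of how that metric is computed, here is a short Python sketch. The angle values below are invented for the example and do not reproduce the data in [19].

```python
def mean_absolute_error(vr_values, reference_values):
    """Average absolute difference between VR-derived and reference measurements."""
    if len(vr_values) != len(reference_values) or not vr_values:
        raise ValueError("need two non-empty lists of equal length")
    total = sum(abs(v - ref) for v, ref in zip(vr_values, reference_values))
    return total / len(vr_values)

# Hypothetical shoulder flexion angles (degrees) sampled at the same time points
vr_flexion_deg = [30.5, 61.2, 89.0, 121.4, 148.7]
mocap_flexion_deg = [29.0, 60.0, 90.5, 120.0, 150.0]

mae = mean_absolute_error(vr_flexion_deg, mocap_flexion_deg)
print(f"MAE for flexion: {mae:.2f} degrees")
```

MAE keeps the units of the measurement, which makes it easy to judge against a clinically acceptable error margin. It does not show whether the VR system over- or underestimates systematically, so it is often paired with a bias estimate.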

The Scientist's Toolkit: Essential Research Reagents & Materials

The following table details key hardware, software, and methodological "reagents" essential for conducting rigorous VR MET research and development.

Table 3: Essential Research Reagent Solutions for VR MET Development

| Item / Solution | Function in VR MET Research | Specific Examples |
|---|---|---|
| Standalone VR Headset | Provides an untethered, immersive virtual environment for the user. Serves as the primary display and tracking system for the head and hands. | Oculus Quest 2 [21] [19] [18] |
| Game Engines | Software framework used to design, develop, and render the interactive 3D environments and logic of the VR MET. | Unity3D [19] [18] |
| Indirect Calorimetry System | Gold-standard equipment for measuring energy expenditure (oxygen consumption) to objectively quantify the physical intensity of VR exergaming protocols. | Cortex METAMAX 3B [22] |
| Motion Capture System | Gold-standard for validating the kinematic and biomechanical fidelity of movements performed within the VR MET. Provides high-accuracy spatial data. | Qualisys system with reflective markers [19] |
| Validated Questionnaires (Psychometrics) | To measure user experience, perceived exertion, usability, technology acceptance, and simulator sickness, which are critical for assessing feasibility and acceptability. | System Usability Scale (SUS), Simulator Sickness Questionnaire (SSQ), Technology Acceptance Model (TAM) [20], Raw NASA TLX [20] |
| Heart Rate Monitor | An objective physiological measure of exertion and affective state during VR MET activities. | Polar V800 [22] |
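Of the questionnaires listed in Table 3, the System Usability Scale has a simple, widely published scoring rule: ten items rated 1 to 5, with odd-numbered items scored as the response minus 1, even-numbered items as 5 minus the response, and the sum multiplied by 2.5 to give a 0 to 100 score. The Python sketch below applies that rule. The participant responses are hypothetical, and the cited studies may have processed their questionnaire data differently.

```python
def sus_score(responses):
    """Score one participant's System Usability Scale responses (10 items, each 1-5)."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly 10 responses, each an integer from 1 to 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: higher agreement is better
            total += response - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: lower agreement is better
            total += 5 - response
    return total * 2.5

# Hypothetical responses from one participant after a VR MET session
participant = [4, 2, 5, 1, 4, 2, 4, 1, 5, 2]
print(f"SUS score: {sus_score(participant)}")
```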

The development of a psychometrically sound VR MET is a multifaceted endeavor that extends beyond technical programming to rigorous scientific validation. The core principles outlined—psychological fidelity, ecological validity, and robust data collection—provide a roadmap for creating tools that can truly capture the complexities of real-world functioning. The experimental protocols and validation workflows offer a template for researchers to systematically demonstrate the concurrent validity of their systems.

Future progress in this field will likely involve standardizing these validation protocols across different VR MET applications, from cognitive assessment in neurology trials to functional capacity evaluation in rehabilitation. Furthermore, as VR technology becomes more sophisticated and accessible, the integration of biometric sensing and artificial intelligence for adaptive task delivery will create even more powerful and personalized assessment tools. For drug development professionals, a validated VR MET offers the potential for highly sensitive, functionally relevant endpoints in clinical trials, ultimately providing a clearer picture of a therapeutic's impact on a patient's daily life.

In both neuroscience and pharmaceutical development, accurately measuring functional cognition—the ability to perform everyday tasks—is crucial for evaluating cognitive health and treatment efficacy. Traditional neuropsychological tests often suffer from low ecological validity, meaning performance on these tests does not robustly predict real-world functioning [23]. Regulatory authorities like the Food and Drug Administration (FDA) have consequently mandated the demonstration of functional improvements alongside cognitive gains for drug approval in conditions like Alzheimer's disease and schizophrenia [23] [24].

Virtual Reality (VR) has emerged as a powerful solution, enabling the creation of standardized, immersive simulations of daily activities. These assessments measure cognitive domains such as memory, attention, and executive function within engaging, real-world contexts, thereby offering superior predictive power for functional outcomes [23]. This guide focuses on VStore, a supermarket shopping task, and discusses the conceptual framework for CAVIR, a kitchen-based assessment.

VStore: A Deep Dive into the Supermarket Assessment

VStore is a novel, fully immersive VR shopping task designed to simultaneously assess traditional cognitive domains and functional capacity [23] [25]. It was developed to address the limitations of standard cognitive batteries, which are often time-consuming, burdensome for patients, and poor at predicting real-world skills [24]. By embedding cognitive tasks within an ecologically valid minimarket environment, VStore creates a direct proxy for everyday functioning.

Experimental Protocol and Methodology

The validation and feasibility studies for VStore followed a rigorous experimental protocol, detailed in the table below.

Table 1: Key Experimental Protocols for VStore Validation

| Study Aspect | Protocol Details |
|---|---|
| Participant Cohorts | • Healthy Volunteers: Aged 20-79 years (n=142 across studies) [23] • Clinical Cohort: Patients with psychosis (n=210 total across three studies) [24] |
| Equipment & Setup | • Head-Mounted Display (HMD): Fully immersive VR headset [24]. • Task Environment: A maze-like minimarket to engage spatial navigation [23]. |
| Primary VStore Outcomes | 1. Verbal recall of 12 grocery items [23] [24] 2. Time to collect all items [23] [24] 3. Time to select items on a self-checkout machine [23] [24] 4. Time to make the payment [23] 5. Time to order a hot drink [23] 6. Total task completion time [23] [24] |
| Validation Measures | • Construct Validity: Compared against the Cogstate computerized cognitive battery (measuring attention, processing speed, working memory, etc.) [23]. • Feasibility/Acceptability: Measured via completion rates and adverse effects questionnaires [24]. |

Diagram 1: VStore Experimental Workflow

Key Quantitative Findings and Validation Data

VStore has been validated in multiple studies. The table below summarizes its key performance metrics against established standards and its ability to differentiate populations.

Table 2: VStore Performance and Validation Data

| Validation Metric | Result | Significance / Interpretation |
|---|---|---|
| Construct Validity | Performance was best predicted by Cogstate tasks measuring attention, working memory, and paired associate learning, plus age and tech familiarity, which together explained 47% of the variance (R² = 0.47) [23]. | Confirms VStore engages intended cognitive domains and aligns with standard measures. |
| Sensitivity to Age | A ridge regression model using VStore outcomes predicted age (MSE 185.80) and was 87% sensitive and 91.7% specific in classifying age cohorts (AUC = 94.6%) [23]. | Demonstrates high sensitivity to age-related cognitive decline. |
| Feasibility & Acceptability | Exceptionally high completion rate (99.95%) across 210 participants. No VR-induced adverse effects reported [24]. | Tool is well-tolerated and practical for healthy and clinical populations. |
| Clinical Utility | Showed a clear difference in performance between patients with psychosis and matched healthy controls [24]. | Has potential for discriminating impaired from unimpaired cognition. |
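The sensitivity, specificity, and AUC figures in the table above are standard classification metrics. To make their definitions concrete, the Python sketch below computes all three from a small set of invented labels and model scores. The 0.5 decision threshold and the data are assumptions for illustration and are unrelated to the VStore results.

```python
def sensitivity_specificity(labels, predictions):
    """Return (sensitivity, specificity) for binary labels and predictions (1 = positive)."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

def auc(labels, scores):
    """AUC as the probability that a random positive case outscores a random
    negative case, counting ties as one half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))

# Hypothetical data: 1 = older cohort, 0 = younger cohort
cohort = [1, 1, 1, 1, 0, 0, 0, 0]
model_score = [0.9, 0.8, 0.4, 0.7, 0.3, 0.6, 0.2, 0.1]
predicted = [1 if s >= 0.5 else 0 for s in model_score]  # assumed threshold of 0.5

sens, spec = sensitivity_specificity(cohort, predicted)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}, AUC={auc(cohort, model_score):.4f}")
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC summarizes discrimination across all thresholds. This is why validation reports typically give both.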

CAVIR: Extending the Framework to the Kitchen

Although no specific experimental data for a "CAVIR" assessment are presented here, the conceptual framework for a kitchen-based VR functional assessment is a logical and valuable extension of the principles established by VStore. The kitchen environment presents a rich domain for assessing more complex instrumental activities of daily living (IADLs), such as meal preparation, which involves planning, sequencing, and safety awareness.

The diagram below illustrates how the validated framework from VStore can be adapted to create a kitchen-based assessment.

Diagram 2: From Supermarket to Kitchen VR Assessment Framework

The Researcher's Toolkit for VR Functional Assessments

Implementing VR assessments like VStore requires specific hardware, software, and methodological considerations. The following table details the essential "research reagents" and their functions.

Table 3: Essential Research Reagents and Tools for VR Functional Assessment

| Tool Category | Specific Example | Function in Research Context |
|---|---|---|
| VR Hardware | Meta Quest 3/3S, HTC Vive Pro 2 [26] [27] [28] | Provides the immersive display and tracking. Standalone headsets (Quest) offer ease of use, while PC-tethered (Vive) offer high fidelity. |
| Validation Software | Cogstate Computerized Cognitive Battery [23] | An established computerized tool used to test the construct validity of the novel VR task. |
| Primary Outcome Metrics | VStore: Time-based metrics and verbal recall scores [23] [24] | Serve as the primary dependent variables, quantifying functional cognition. |
| Tolerability Questionnaire | VR-induced adverse effects survey (e.g., for cybersickness) [24] | Ensures participant safety and acceptability, critical for clinical trials. |
| Data Analysis Plan | Ridge Regression & ROC Analysis [23] | Statistical methods to validate the tool against age and standard measures, and determine its classificatory accuracy. |
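The data analysis plan in Table 3 names ridge regression, which is ordinary least squares with an added penalty on the size of the coefficients. The sketch below is a small standard-library Python illustration of that idea, not the analysis pipeline used in [23]. The two predictors, the outcome values, the penalty `alpha=10.0`, and the function names are all hypothetical.

```python
def ridge_fit(X, y, alpha):
    """Fit ridge regression and return (intercept, coefficients).

    Solves (Xc^T Xc + alpha * I) beta = Xc^T yc on mean-centred data,
    so the intercept itself is not penalised.
    """
    n, p = len(X), len(X[0])
    col_means = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - col_means[j] for j in range(p)] for row in X]
    yc = [v - y_mean for v in y]

    # Augmented matrix [Xc^T Xc + alpha * I | Xc^T yc]
    A = []
    for j in range(p):
        row = [sum(Xc[i][j] * Xc[i][k] for i in range(n)) for k in range(p)]
        row[j] += alpha
        row.append(sum(Xc[i][j] * yc[i] for i in range(n)))
        A.append(row)

    # Gauss-Jordan elimination with partial pivoting
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        pivot_value = A[col][col]
        if pivot_value == 0:
            raise ValueError("singular system; increase alpha or drop a predictor")
        A[col] = [v / pivot_value for v in A[col]]
        for r in range(p):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]

    beta = [A[j][p] for j in range(p)]
    intercept = y_mean - sum(b * m for b, m in zip(beta, col_means))
    return intercept, beta

def predict(intercept, beta, row):
    """Predicted outcome for one participant's predictor values."""
    return intercept + sum(b * v for b, v in zip(beta, row))

# Hypothetical data: two unitless task outcomes per participant, outcome = age
X = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 5]]
ages = [28, 27, 38, 37, 45]

ols_intercept, ols_beta = ridge_fit(X, ages, alpha=0.0)        # no penalty
ridge_intercept, ridge_beta = ridge_fit(X, ages, alpha=10.0)   # assumed penalty
print("unpenalised coefficients:", [round(b, 3) for b in ols_beta])
print("ridge coefficients:", [round(b, 3) for b in ridge_beta])
```

The penalty shrinks the coefficients toward zero, which stabilizes estimates when task outcomes are correlated with one another, as completion times within a single task tend to be. In practice the penalty strength is usually chosen by cross-validation instead of being fixed in advance.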

VStore stands as a rigorously validated prototype for VR-based functional cognition assessment. Its strong concurrent validity with gold-standard cognitive measures, high sensitivity to age-related decline, and exceptional feasibility in clinical populations make it a promising tool for both research and clinical trials [23] [24]. The natural progression of this work involves developing and validating assessments in other critical domains of daily life, such as the kitchen.

The future of cognitive assessment in medicine and drug development lies in tools that can objectively and ecologically measure whether a patient can successfully navigate the complexities of everyday life. VR functional assessments like VStore are paving the way for a new generation of endpoints that are not only statistically significant but also clinically meaningful.

Virtual Reality (VR) is rapidly transforming the assessment of cognitive functions, moving beyond traditional neuropsychological tests by offering enhanced ecological validity, that is, the degree to which test performance predicts real-world behavior [4]. For researchers and drug development professionals, a critical step in validating these tools is establishing concurrent validity: the extent to which a new test correlates with an established one administered at the same time [3].

The Virtual Multiple Errands Test (VMET) and similar shopping tasks exemplify this approach. They are immersive adaptations of the classic Multiple Errands Test (MET), which measures executive functions in real-world settings like shopping centers [29] [23]. By replicating these complex environments in VR, researchers can maintain experimental control and safety while capturing cognitive processes that are more directly applicable to patients' daily lives [29] [4]. This guide provides a structured checklist for evaluating how well VR-based assessments map to real-world skills, supported by direct experimental comparisons and quantitative data.

Comparative Data: VR vs. Traditional Assessments and Real-World Performance

The following tables summarize key quantitative findings from validation studies, highlighting the relationship between VR task performance, traditional cognitive tests, and real-world functioning.