A Researcher's Guide to Validating VideoFreeze Settings for Robust and Reproducible Rodent Behavior Data


Abstract

This article provides a comprehensive framework for researchers and scientists in biomedical and drug development to establish, optimize, and validate settings for the VideoFreeze software, a key tool for automated assessment of Pavlovian conditioned freezing. Covering foundational principles, methodological setup, advanced troubleshooting, and rigorous statistical validation, the guide emphasizes best practices to ensure data accuracy, enhance reproducibility, and support high-quality preclinical research in studies of learning, memory, and pathological fear.

Understanding VideoFreeze: Principles of Automated Freezing Detection and Its Role in Preclinical Research

Freezing behavior, defined as the complete cessation of movement except for respiration, represents a cornerstone measure of conditioned fear in behavioral neuroscience. Its quantification has evolved from purely manual scoring by trained observers to sophisticated, automated software systems. However, this transition introduces critical challenges, including parameter calibration and context-dependent validity, which can significantly impact the reliability of fear memory assessment. This Application Note provides a comprehensive framework for defining and quantifying freezing, detailing protocols for both manual and automated scoring, and presenting validation data to guide researchers in optimizing VideoFreeze software settings for robust and reproducible results. The content is framed within the essential context of software validation, underscoring the necessity of aligning automated measurements with ethological definitions to accurately capture subtle behavioral phenotypes in rodent models.

Freezing behavior is a species-specific defense reaction (SSDR) that is a quintessential readout of associative fear memory in rodents. Its definition, originating from ethological observations, is the "absence of movement of the body and whiskers with the exception of respiratory motion" [1]. Within the Predatory Imminence Continuum theory, freezing is characterized as a post-encounter defensive behavior, manifesting when a threat has been detected but physical contact has not yet occurred. This state maps onto the emotional state of fear, distinct from anxiety (pre-encounter) or panic (circa-strike) [2]. The accurate measurement of this behavior is thus not merely a technical task but is fundamental to interpreting the neural and psychological mechanisms of learning and memory.

The move toward automated behavior assessment, while offering gains in objectivity and throughput, is fraught with the challenge of ensuring that software scores faithfully reflect this ethological definition. Studies consistently highlight that automated measurements can diverge from manual scores, particularly when assessing subtle behavioral effects, as is common in generalization research or when studying transgenic animals with mild phenotypic deficits [1]. Furthermore, an over-reliance on freezing as the sole metric can lead to the misclassification of animals that express fear through alternative behaviors, such as reduced locomotion or "darting" [3]. Consequently, validating automated systems like VideoFreeze is not a simple one-time calibration but an ongoing process that requires a deep understanding of the behavior itself, the software's parameters, and the specific experimental context. This document establishes the protocols and validation data necessary to achieve this alignment, ensuring that modern neuroscience applications remain grounded in ethological definitions.

Defining the Behavior: Ethology and Measurement Fundamentals

Core Definition and Behavioral Topography

The operational definition of freezing is the complete absence of visible movement, except for movements necessitated by respiration. This definition requires the human observer or software algorithm to distinguish immobility from other low-movement activities such as resting, grooming, or eating. Key anatomical points of reference include the torso, limbs, and head, which must be motionless. In manual scoring, trained observers use a button-press or similar method to mark the onset and offset of freezing epochs based on this visual criterion [4].

Beyond Freezing: The Behavioral Repertoire of Fear

While freezing is the dominant fear response, it is not the only one. A comprehensive behavioral assessment acknowledges and accounts for other defensive behaviors, which is crucial for avoiding the misclassification of "resilient-to-freezing" phenotypes [3].

- Reduced Locomotion: Hypolocomotion is a well-established measure of anxiety in novel environments and is increasingly recognized as a complementary measure of fear. A dual-measure approach, combining freezing time and locomotor activity, provides a more comprehensive and accurate assessment of the fear response, capturing animals that may not meet strict freezing thresholds [3].

- Active Fear Responses: Behaviors such as "darting"—a rapid, explosive movement—have been identified, particularly in female rats, as an active fear coping strategy. Relying solely on freezing would misclassify these animals as non-responsive [3].

- Scanning and Risk-Assessment: Behaviors like lateral head scanning are considered investigatory and are linked to information gathering about environmental features, reflecting a different defensive state [5].

The following table summarizes key behaviors and their significance in fear conditioning paradigms.

Table 1: Behavioral Repertoire in Rodent Fear Conditioning

| Behavior | Definition | Proposed Emotional State | Measurement Modality |
|---|---|---|---|
| Freezing | Cessation of all movement except for respiration. | Fear (Post-encounter defense) | Manual scoring; Video analysis (pixel change) [1] [2] |
| Reduced Locomotion | Decreased total distance traveled or average velocity. | Fear/Anxiety | Automated tracking (video or infrared beams) [3] [2] |
| Darting | A sudden, high-velocity movement. | Active fear response | Video tracking [3] |
| Head Scanning | Lateral movement of the head, often while stationary. | Investigatory/Risk-assessment | Pose estimation software [5] |

Automated Quantification: Principles and Validation of VideoFreeze

Core Operational Principle

VideoFreeze (Med Associates, Inc.) and similar software (e.g., Phobos, EthoVision) operate on a common principle: quantifying movement by analyzing the difference between consecutive video frames. Implementations differ in detail; Phobos, for example, converts frames to binary (black and white) images and counts the non-overlapping pixels between consecutive frames [4]. When this count, often termed the motion index, falls below a predefined freezing threshold for a minimum duration (the minimum freeze duration), the epoch is scored as freezing [4].
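The binarized frame-differencing idea (convert frames to black and white, then count pixels that differ between consecutive frames) can be sketched in a few lines. This is a minimal illustration assuming frames arrive as 2-D lists of 8-bit grayscale values; the binarization cutoff of 128 and the function names are hypothetical, and this is not the source code of VideoFreeze or Phobos.

```python
# Minimal sketch of binarized frame differencing (illustrative only).
# Assumption: frames are 2-D lists of grayscale values in 0-255; the
# cutoff of 128 is a hypothetical choice, not a published default.

def binarize(frame, cutoff=128):
    """Convert a grayscale frame to a black/white (0/1) frame."""
    return [[1 if px >= cutoff else 0 for px in row] for row in frame]

def changed_pixels(prev_frame, next_frame, cutoff=128):
    """Count pixels whose binary value differs between two frames."""
    a, b = binarize(prev_frame, cutoff), binarize(next_frame, cutoff)
    return sum(
        pa != pb
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )

def motion_index_trace(frames, cutoff=128):
    """One motion-index value per consecutive frame pair."""
    return [changed_pixels(f0, f1, cutoff) for f0, f1 in zip(frames, frames[1:])]

if __name__ == "__main__":
    still = [[10, 200], [10, 200]]
    moved = [[200, 200], [10, 10]]  # two pixels flip between black and white
    print(motion_index_trace([still, still, moved]))  # [0, 2]
```

A trace of this kind, one value per frame transition, is what the freezing threshold and minimum freeze duration are then applied to.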

Critical Software Parameters and Optimization Caveats

The accuracy of VideoFreeze is highly dependent on two key parameters, and their optimization is a non-trivial process.

- Freezing Threshold: This motion index value separates movement from immobility; frames whose motion index falls below it are candidates for freezing. A threshold that is too high lets small, non-freezing movements (e.g., tail twitches) pass as immobility, inflating freezing scores. A threshold that is too low lets the small pixel changes caused by respiratory motion during genuine freezing register as movement, leading to underestimation [1].

- Minimum Freeze Duration: This parameter specifies how long the motion index must remain below the threshold for the interval to count as a freeze, typically 1-2 seconds (30-60 frames at 30 frames per second). This prevents brief pauses in movement from being scored as freezing bouts [1] [4].
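The interaction between the two parameters can be sketched as follows, assuming a per-frame motion-index trace is already available. The strict `<` comparison and the function names are assumptions for illustration; the defaults (threshold 18, 30 frames) are the published mouse values [1], not a recommendation for any particular setup.

```python
# Sketch: apply a freezing threshold and a minimum freeze duration to a
# motion-index trace. Names and the strict "<" comparison are assumptions.

def freeze_mask(motion_index, threshold, min_frames):
    """Flag frames inside runs of at least `min_frames` sub-threshold values."""
    values = list(motion_index)
    mask = [False] * len(values)
    run_start = None
    # A trailing +inf sentinel guarantees the final run is closed and checked.
    for i, value in enumerate(values + [float("inf")]):
        if value < threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if i - run_start >= min_frames:
                mask[run_start:i] = [True] * (i - run_start)
            run_start = None
    return mask

def percent_freezing(motion_index, threshold=18, min_frames=30):
    """Percentage of frames that fall inside qualifying freezing bouts."""
    mask = freeze_mask(motion_index, threshold, min_frames)
    return 100.0 * sum(mask) / len(mask) if mask else 0.0

if __name__ == "__main__":
    # 40 still frames, 20 moving, a 10-frame pause, then 30 moving.
    trace = [5] * 40 + [100] * 20 + [5] * 10 + [100] * 30
    print(percent_freezing(trace))                 # 40.0
    print(percent_freezing(trace, min_frames=10))  # 50.0
```

With a 30-frame minimum, the 10-frame pause is discarded; lowering the minimum to 10 frames lets it count, which is how an overly short minimum freeze duration inflates freezing scores.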

A significant caveat is that optimal parameters are not universal. They can vary with animal species (rat vs. mouse), strain, context configuration, lighting, camera placement, and even the type of chamber inserts used. One study reported a stark divergence between software and manual scores in one context but not another, despite using identical software settings and a previously validated threshold (motion threshold 50 for rats). Subsequent adjustments to camera white balance failed to resolve this discrepancy, highlighting the complex and context-specific nature of parameter optimization [1].

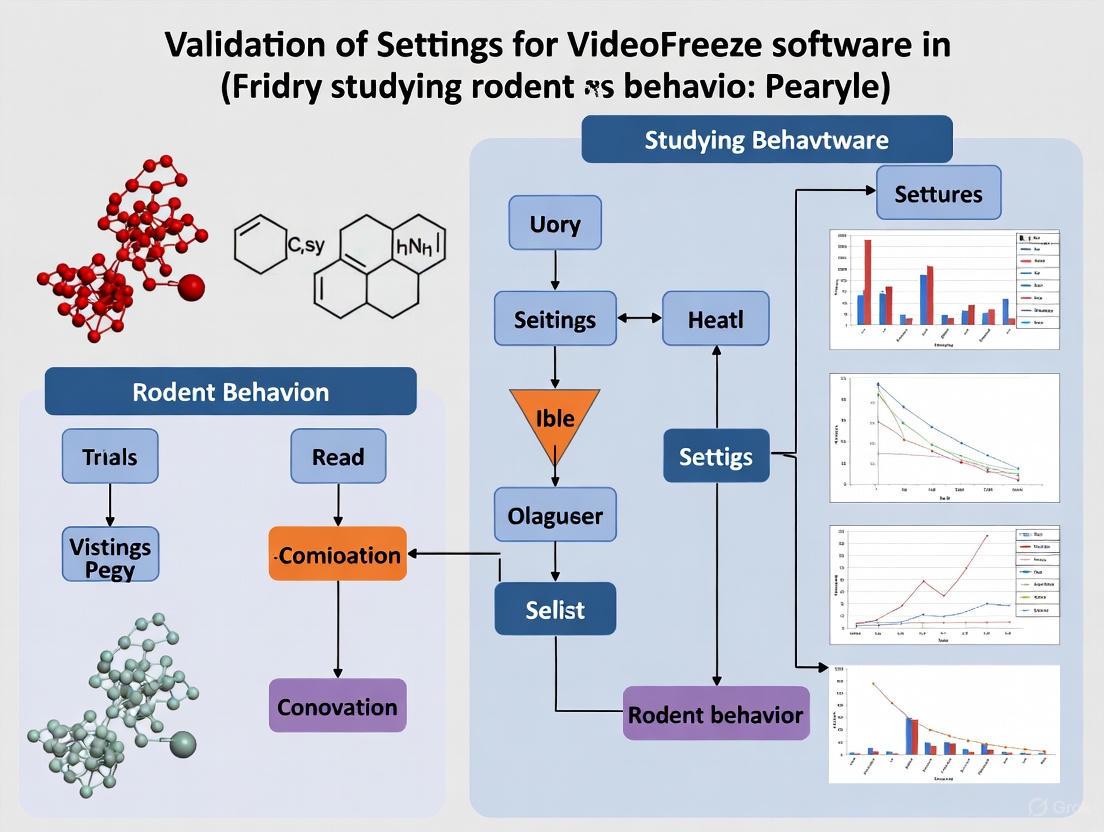

The following diagram illustrates the core workflow and validation challenge for automated freezing analysis.

Quantitative Validation Data

To guide initial parameter selection, the following table consolidates published parameters from various studies. These should serve as a starting point for rigorous in-house validation.

Table 2: Published Software Parameters for Freezing Detection

| Software | Species | Freezing Threshold | Minimum Freeze Duration | Correlation with Manual Scoring (r) | Context Notes |
|---|---|---|---|---|---|
| VideoFreeze | Mouse | Motion Index: 18 | 1 second (30 frames) | High [1] | Validated by Anagnostaras et al., 2010 [1] |
| VideoFreeze | Rat | Motion Index: 50 | 1 second (30 frames) | Variable by context [1] | Used by Zelikowsky et al., 2012; context-dependent divergence reported [1] |
| Phobos | Mouse/Rat | Auto-calibrated (e.g., ~500 pixels default) | Auto-calibrated (e.g., 0-2 s range) | >0.9 [4] | Self-calibrating software; uses brief manual scoring to set parameters [4] |

Detailed Experimental Protocols

Protocol 1: Manual Scoring of Freezing Behavior

This protocol establishes the gold-standard method against which automated systems are validated.

1. Materials and Reagents:

- Video Recordings: Videos of sufficient resolution (e.g., a minimum of 384x288 pixels), recorded from a top-down or otherwise consistent angle [4].

- Scoring Software: A program that allows for continuous event logging (e.g., EthoVision, ANY-maze, or a simple timer with event markers).

- Blinding: The scorer must be blind to the experimental group assignment of each subject.

2. Procedure:

   1. Familiarization: The scorer reviews the operational definition of freezing and practices on training videos not included in the study.
   2. Scoring Session: Play the video. Press and hold a designated key at the precise onset of a freezing epoch, defined as the moment all movement (excluding respiration) ceases.
   3. Epoch Termination: Release the key at the first sign of any non-respiratory movement, marking the offset of the freezing epoch.
   4. Data Export: The software calculates total freezing time and/or bout duration. Percentage freezing is calculated as (total freezing time / total session time) * 100.

3. Analysis and Interpretation:

   - Calculate inter-rater reliability between two or more independent observers, using Cohen's kappa for epoch-by-epoch freezing/non-freezing judgments or an intraclass correlation coefficient (ICC) for percent-freezing scores. A kappa value above 0.6 is generally considered substantial agreement [1].
   - The average of multiple observers' scores is often used as the final manual score for validation purposes.
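Cohen's kappa corrects raw percent agreement for the agreement expected by chance from each rater's label frequencies. A minimal sketch, assuming each observer's scoring has already been reduced to one freezing (1) / non-freezing (0) label per epoch; the function name is illustrative. Established statistics packages provide equivalent functions.

```python
# Sketch: Cohen's kappa for two observers' per-epoch labels.
# Assumption: labels are lists such as [1, 0, 1, ...] of equal length.

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical labels of the same epochs."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of epochs")
    n = len(rater_a)
    # Observed agreement: fraction of epochs given the same label.
    p_obs = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    labels = set(rater_a) | set(rater_b)
    p_exp = sum((rater_a.count(c) / n) * (rater_b.count(c) / n) for c in labels)
    if p_exp == 1.0:
        raise ValueError("kappa is undefined when both raters use one identical label")
    return (p_obs - p_exp) / (1 - p_exp)

if __name__ == "__main__":
    observer_1 = [1, 1, 1, 0, 0, 0, 1, 0]
    observer_2 = [1, 1, 0, 0, 0, 0, 1, 1]
    print(cohens_kappa(observer_1, observer_2))  # 0.5
```

Here the observers agree on 75% of epochs, but because chance agreement is 50%, kappa is only 0.5, below the 0.6 benchmark.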

Protocol 2: Validating and Optimizing VideoFreeze Settings

This protocol describes a systematic approach to calibrating VideoFreeze software for a specific experimental setup.

1. Materials and Reagents:

- Med Associates Video Fear Conditioning System with VideoFreeze software.

- A calibration set of video recordings (n=10-20) that represent the full range of freezing behavior (low, medium, high) expected in your experiments.

- The manually scored data for the calibration video set (from Protocol 1).

2. Procedure:

   1. Initial Parameter Selection: Enter a published baseline parameter set (e.g., from Table 2) into VideoFreeze.
   2. Automated Batch Analysis: Run the calibration video set through VideoFreeze using the initial parameters.
   3. Data Comparison: Export the software-generated freezing scores and compare them with the manual scores for each video and for defined time bins within each video (e.g., 20-second epochs) [4].
   4. Parameter Iteration: Systematically vary the Freezing Threshold in small steps around the baseline (e.g., steps of 2 motion-index units for VideoFreeze) and the Minimum Freeze Duration in steps of 0.25-0.5 seconds. Re-analyze the videos with each new parameter combination.
   5. Optimal Parameter Selection: Identify the parameter set that produces the highest correlation (Pearson's r) with manual scores, with a linear-fit slope closest to 1 and an intercept closest to 0. This ensures not just correlation but also agreement in absolute values [4].
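The selection step can be sketched as a small search over exported results. This sketch assumes automated percent-freezing scores have already been exported for every parameter combination and stored in a dictionary keyed by `(threshold, min_frames)`; the dictionary layout, the function names, and especially the equal-weight penalty that combines r, slope, and intercept into one number are hypothetical choices, not a published rule.

```python
# Sketch: choose the parameter set whose automated scores best match
# manual scores. The penalty weighting is a hypothetical choice.
from math import sqrt

def linear_fit(x, y):
    """Least-squares fit y = slope * x + intercept, plus Pearson's r."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary to estimate a fit")
    slope = sxy / sxx
    return sxy / sqrt(sxx * syy), slope, my - slope * mx

def select_parameters(manual, automated_by_params):
    """Return the key whose automated scores best fit the manual scores."""
    best, best_penalty = None, float("inf")
    for params, automated in automated_by_params.items():
        r, slope, intercept = linear_fit(manual, automated)
        # Hypothetical penalty: distance from r = 1, slope = 1, intercept = 0
        # (intercept scaled from percentage points to a 0-1 range).
        penalty = (1 - r) + abs(slope - 1) + abs(intercept) / 100
        if penalty < best_penalty:
            best, best_penalty = params, penalty
    return best

if __name__ == "__main__":
    manual = [10, 30, 50, 70, 90]
    sweep = {
        (18, 30): [12, 31, 49, 71, 88],
        (30, 30): [30, 48, 66, 84, 100],
        (10, 30): [2, 15, 30, 45, 60],
    }
    print(select_parameters(manual, sweep))  # (18, 30)
```

Scoring all three criteria together, rather than r alone, matters because a setting can correlate perfectly with manual scores while systematically over- or under-counting freezing.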

3. Analysis and Interpretation:

   - Primary Metric: Pearson's correlation coefficient (r) between automated and manual scores for epoch-by-epoch analysis. A value above 0.9 is excellent.
   - Secondary Metric: Cohen's kappa to assess agreement on the classification of freezing vs. non-freezing epochs.
   - Bland-Altman Plots: Can be used to visualize agreement and identify any systematic bias (e.g., software consistently overestimating freezing).
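A Bland-Altman analysis plots, for each video, the difference between the two methods against their mean, and summarizes agreement as a mean bias with 95% limits of agreement (bias ± 1.96 SD of the differences). A minimal sketch of the numbers behind such a plot, assuming paired percent-freezing scores per video; the function name and returned dictionary are illustrative, and plotting is left to whatever library the lab already uses.

```python
# Sketch: Bland-Altman bias and 95% limits of agreement for paired scores.
from statistics import mean, stdev

def bland_altman(manual, automated):
    """Return per-video means, differences, bias and 95% limits of agreement."""
    if len(manual) != len(automated) or len(manual) < 2:
        raise ValueError("need at least two paired scores")
    means = [(m + a) / 2 for m, a in zip(manual, automated)]
    # Positive differences mean the software scores more freezing than humans.
    diffs = [a - m for m, a in zip(manual, automated)]
    bias = mean(diffs)
    sd = stdev(diffs)
    return {"means": means, "diffs": diffs, "bias": bias,
            "lower": bias - 1.96 * sd, "upper": bias + 1.96 * sd}

if __name__ == "__main__":
    result = bland_altman([20, 45, 60, 80], [24, 50, 63, 86])
    print(result["bias"], result["lower"], result["upper"])
```

A positive bias whose limits exclude zero would indicate the systematic overestimation mentioned above.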

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Software for Freezing Behavior Research

| Item Name | Vendor / Source | Function / Application |
|---|---|---|
| Modular Test Cage | Coulbourn Instruments, Med Associates | Standardized operant chamber for fear conditioning [6]. |
| VideoFreeze Software | Med Associates, Inc. | Commercial automated software for quantifying freezing behavior [1] [2]. |
| ANY-maze Video Tracking Software | Stoelting Co. | Versatile video tracking software for multiple behaviors including locomotion and zone preference [6]. |
| Phobos Software | GitHub (Open Source) | Freely available, self-calibrating software for automatic measurement of freezing [4]. |
| Tail-Coat Apparatus | Custom Fabrication | Lightweight, wearable conductive apparatus for delivering tail-shock in head-fixed mice [7]. |
| Programmable Animal Shocker | Coulbourn Instruments | Delivers precise footshock (unconditioned stimulus) in fear conditioning paradigms [6]. |
| HNBQ System | Custom Software | A pose estimation-based system for fine-grained analysis of mouse locomotor behavior and posture [5]. |

The following diagram synthesizes the recommended end-to-end workflow for implementing a validated freezing behavior assay, from experimental design to data interpretation.

In conclusion, the precise definition and accurate quantification of freezing behavior are fundamental to the integrity of fear conditioning research. While automated systems like VideoFreeze offer powerful advantages, their output is only as valid as their calibration. This Application Note underscores that a one-size-fits-all approach to parameter settings is inadequate. By adhering to the detailed protocols for manual scoring and software validation, and by adopting a dual-measure approach that considers both freezing and locomotor activity, researchers can significantly enhance the accuracy, reliability, and translational value of their behavioral data in drug development and neuroscience research.

Core Algorithm and Principles

VideoFreeze automates the measurement of freezing behavior in rodents by analyzing pixel-level changes in video footage. The software operates on the fundamental principle that the absence of movement, except for respiration, defines freezing behavior. It quantifies this by comparing consecutive video frames and calculating the number of pixels whose grayscale values change beyond a specific, user-defined threshold [8].

The underlying algorithm functions through a streamlined pipeline:

- Frame Comparison: Each pair of consecutive video frames is compared.

- Pixel Difference Calculation: The software identifies pixels whose intensity (grayscale value) has changed from one frame to the next.

- Motion Index Calculation: The number of changed pixels is summed to create a "motion index" for that frame transition.

- Threshold Application: This motion index is compared against a predetermined freezing threshold. If the index is below this threshold, the animal's behavior for that interval is classified as freezing [4] [1].

A second critical parameter is the minimum freeze duration, which specifies the number of consecutive frames for which the motion index must remain below the threshold for the episode to be counted as a freezing bout. This prevents brief pauses in movement from being scored as freezing [1].
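The first steps of the pipeline above (frame comparison, per-pixel grayscale change, motion index, threshold comparison) can be sketched as follows, assuming frames are 2-D lists of grayscale values. VideoFreeze's actual implementation is proprietary, so the per-pixel change cutoff, the function names, and the strict comparisons here are illustrative assumptions rather than the vendor's algorithm. The minimum-freeze-duration filter would then be applied to the returned below-threshold flags.

```python
# Sketch of a grayscale frame-differencing motion index (illustrative only;
# not the proprietary VideoFreeze algorithm).

def motion_index(prev_frame, next_frame, pixel_change_threshold):
    """Count pixels whose grayscale value changes by more than the cutoff."""
    return sum(
        abs(p1 - p0) > pixel_change_threshold
        for row0, row1 in zip(prev_frame, next_frame)
        for p0, p1 in zip(row0, row1)
    )

def score_transitions(frames, pixel_change_threshold, freezing_threshold):
    """Return the motion index and a below-threshold flag for each frame pair."""
    indices = [
        motion_index(f0, f1, pixel_change_threshold)
        for f0, f1 in zip(frames, frames[1:])
    ]
    below = [mi < freezing_threshold for mi in indices]
    return indices, below

if __name__ == "__main__":
    f0 = [[100, 100], [100, 100]]
    f1 = [[103, 100], [100, 140]]  # a 3-level flicker and one real change
    print(score_transitions([f0, f1, f1], pixel_change_threshold=10,
                            freezing_threshold=1))  # ([1, 0], [False, True])
```

The per-pixel cutoff is what lets small intensity fluctuations (video noise) be ignored, while the freezing threshold operates on the count of pixels that survive it.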

Logical Workflow of the VideoFreeze Algorithm

The following diagram illustrates the core decision-making process of the VideoFreeze software.

Experimental Validation and Parameter Optimization

Validating the software's output against manual scoring is a critical step to ensure reliability, particularly when studying subtle behavioral effects or using different context configurations [1].

Key Quantitative Findings from Validation Studies

Table 1: Optimized Motion Threshold Parameters for VideoFreeze from Peer-Reviewed Studies

| Species | Motion Index Threshold | Minimum Freeze Duration | Experimental Context | Correlation with Manual Scoring | Source |
|---|---|---|---|---|---|
| Mouse | 18 | 30 frames (1 s) | Standard fear conditioning | High correlation reported | [1] |
| Rat | 50 | 30 frames (1 s) | Standard fear conditioning | Context-dependent agreement | [1] |

Table 2: Context-Dependent Performance of VideoFreeze in Rat Studies

| Testing Context | Software vs. Manual Scoring | Agreement (Cohen's Kappa) | Notes |
|---|---|---|---|
| Context A (Standard) | Software scores significantly higher (74% vs 66%) | 0.05 (Poor) | Discrepancy persisted despite white balance adjustment [1]. |
| Context B (Similar) | No significant difference (48% vs 49%) | 0.71 (Substantial) | Good agreement between automated and manual scores [1]. |

Experimental Protocol for Validating VideoFreeze Settings

Objective: To determine the optimal motion threshold and minimum freeze duration for a specific experimental setup and rodent strain by correlating software output with manual scoring.

Materials:

- VideoFreeze software (Med Associates) [9].

- Video recordings from your fear conditioning experiments.

- Computer with timer and scoring interface.

Procedure:

- Video Selection: Select a representative subset of videos (e.g., 3-5 from different experimental groups) for manual scoring. Ensure videos cover a range of freezing levels [4].

- Manual Scoring: A trained observer, blind to the software scores, manually records freezing episodes. Freezing is defined as the "absence of movement of the body and whiskers with the exception of respiratory motion" [1]. Using a simple button-press interface to mark the start and end of each episode is recommended [4].

- Software Calibration:

  - Run the same set of videos through VideoFreeze.
  - Systematically test a range of motion thresholds (e.g., from 18 for mice to higher values like 50 for rats) and minimum freeze durations (e.g., 0.5 s to 2 s) [4] [1].
  - For each parameter combination, export the total freezing time and/or frame-by-frame data.

- Data Analysis:

  - Calculate the correlation (e.g., Pearson's r) between the manual freezing scores and the automated scores for each parameter combination.
  - Select the parameter set that yields the highest correlation and a linear fit closest to the identity line (slope near 1, intercept near 0) [4].

- Validation: Apply the optimized parameters to a new, unseen set of videos and confirm that the high correlation with manual scoring is maintained.
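To compare manual and automated scores at a finer grain than whole sessions (e.g., in 20-second bins), the button-press onsets and offsets from manual scoring can be converted into percent freezing per bin. A minimal sketch, assuming manual episodes are stored as `(onset_s, offset_s)` pairs in seconds; the function name and the 20-second default are illustrative choices.

```python
# Sketch: convert manually scored freezing episodes into percent freezing
# per time bin. The episode format and function name are assumptions.
import math

def percent_freezing_per_bin(episodes, session_length_s, bin_length_s=20.0):
    """Percent of each bin covered by (onset_s, offset_s) freezing episodes."""
    n_bins = math.ceil(session_length_s / bin_length_s)
    percents = []
    for b in range(n_bins):
        start = b * bin_length_s
        end = min(start + bin_length_s, session_length_s)  # last bin may be short
        # Overlap of each episode with this bin (zero if they do not overlap).
        frozen = sum(max(0.0, min(off, end) - max(on, start)) for on, off in episodes)
        percents.append(100.0 * frozen / (end - start))
    return percents

if __name__ == "__main__":
    manual_episodes = [(5, 15), (25, 45)]
    print(percent_freezing_per_bin(manual_episodes, 60))  # [50.0, 75.0, 25.0]
```

The resulting per-bin values can be paired with the software's per-bin output before computing correlations, so that a parameter set cannot match session totals while disagreeing on when freezing occurred.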

Experimental Design for Validation

This diagram outlines the key steps for a robust validation of VideoFreeze parameters.

The Scientist's Toolkit

Table 3: Essential Research Reagents and Materials for VideoFreeze Experiments

| Item | Function / Description | Example Use-Case |
|---|---|---|
| VideoFreeze Software | Commercial software for automated freezing analysis; allows user-defined stimulus parameters and outputs measures like percent time motionless and motion index [9]. | Core platform for scoring fear conditioning videos. |
| Standard Fear Conditioning Chamber | A controlled environment (e.g., from Med Associates) with specific features (grid floor, inserts) to serve as a conditioning context [1]. | Providing a consistent and reliable experimental arena. |
| Contextual Inserts | Physical modifications (e.g., curved walls, different floor types, textured panels) used to create distinct testing environments [1]. | Studying contextual fear memory and generalization. |
| Calibration Reference Video | A short video segment manually scored by an expert, used to calibrate software parameters for a specific setup [4]. | Ensuring software settings are optimized for your lab's conditions. |

Automated behavioral analysis systems, such as VideoFreeze, have become indispensable tools in behavioral neuroscience, enabling high-throughput, objective assessment of fear memory in rodents through the quantification of freezing behavior. However, the accuracy of these systems is not inherent; it is contingent upon rigorous validation against the gold standard of human expert scoring. Freezing behavior is defined as the suppression of all movement except that required for respiration, a species-specific defense reaction [10]. The transition from labor-intensive manual scoring to automated systems addresses issues of inter-observer variability and tedium, but introduces a critical dependency on software parameters and hardware setup [10] [4]. Without proper validation, automated systems risk generating data that are precise yet inaccurate, potentially leading to erroneous conclusions in studies of learning, memory, and the efficacy of therapeutic compounds. This application note details the principles and protocols essential for ensuring that automated freezing scores from VideoFreeze faithfully represent the true behavioral state of the animal, a non-negotiable prerequisite for generating reliable and reproducible scientific data.

Core Principles of Validation for Automated Freezing Analysis

The validation of an automated system like VideoFreeze is not merely a box-ticking exercise but a fundamental process to ensure the system meets specific, rigorous criteria. A system must do more than simply correlate with human scores; it must achieve a near-identical linear relationship for the data to be considered valid for scientific analysis [10].

Essential Requirements for a Validated System

Anagnostaras et al. (2010) outline several non-negotiable requirements for an automated freezing detection system [10]:

- Measurement of Movement: The system must quantitatively measure movement, equating near-zero movement with freezing.

- Sensitivity to Subtle Movements: The system must detect small movements, such as grooming or sniffing, and must not count them as freezing. Furthermore, if no animal is present, the system should score 100% freezing, demonstrating that it rejects video noise.

- High Signal-to-Noise Ratio: The signals generated by small, non-freezing movements must be well above the level of inherent video noise.

- Rapid Detection: The analysis must operate in near-real-time to capture the onset and cessation of freezing episodes accurately.

- Statistical Agreement with Human Observers: The scores generated must correlate very well with those from trained human observers. Crucially, the linear fit between automated and manual scores should have a correlation coefficient near 1, a y-intercept near 0, and a slope near 1 [10]. This ensures the automated system is not just tracking human judgment, but replicating it across the entire range of possible freezing values (0-100%).
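The statistical-agreement requirement can be turned into an explicit acceptance check. In this sketch the r cutoff of 0.9 follows the "excellent" benchmark used elsewhere in this note, while the slope tolerance (±0.1) and intercept tolerance (±5 percentage points) are hypothetical values that each lab would need to justify; the function names are illustrative.

```python
# Sketch: check a linear fit of automated vs. manual percent freezing
# against acceptance criteria. Tolerances are hypothetical defaults.
from math import sqrt

def fit_against_manual(manual, automated):
    """Regress automated on manual scores; return r, slope and intercept."""
    n = len(manual)
    if n < 3 or n != len(automated):
        raise ValueError("need at least three paired scores")
    mx, my = sum(manual) / n, sum(automated) / n
    sxx = sum((x - mx) ** 2 for x in manual)
    syy = sum((y - my) ** 2 for y in automated)
    sxy = sum((x - mx) * (y - my) for x, y in zip(manual, automated))
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary to estimate a fit")
    slope = sxy / sxx
    return {"r": sxy / sqrt(sxx * syy), "slope": slope, "intercept": my - slope * mx}

def meets_criteria(fit, min_r=0.9, slope_tol=0.1, intercept_tol=5.0):
    """Report which of the three criteria the fit satisfies."""
    return {
        "r": fit["r"] >= min_r,
        "slope": abs(fit["slope"] - 1) <= slope_tol,
        "intercept": abs(fit["intercept"]) <= intercept_tol,
    }

if __name__ == "__main__":
    manual = [0, 25, 50, 75, 100]
    compressed = [30, 42.5, 55, 67.5, 80]  # perfectly correlated but biased
    print(meets_criteria(fit_against_manual(manual, compressed)))
```

The example shows why correlation alone is insufficient: r equals 1, yet the slope (0.5) and intercept (30) both fail.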

Consequences of Improper Validation

Failure to properly validate system settings leads to predictable and critical errors in data collection, as illustrated in the table below.

Table 1: Common Validation Failures and Their Consequences in Automated Freezing Analysis

| Scoring Outcome | Impact on Linear Fit | Potential Cause | Effect on Data |
|---|---|---|---|
| Over-estimation of freezing, particularly at low movement levels [11] | Slope may not be 1; Low correlation; Y-intercept > 0 [11] | Motion Threshold too HIGH; Minimum Freeze Duration too SHORT [11] | Inflates freezing scores, misrepresenting baseline activity and fear memory. |
| Under-estimation of freezing at low and mid levels [11] | Slope may not be 1; Low correlation; Y-intercept < 0 [11] | Motion Threshold too LOW; Minimum Freeze Duration too LONG [11] | Suppresses freezing scores, leading to false negatives in detecting fear memory. |

Quantitative Validation Data and Parameter Optimization

The performance of VideoFreeze is governed by two key parameters: the Motion Threshold (the arbitrary movement value above which the subject is considered moving) and the Minimum Freeze Duration (the duration the subject's motion must be below the threshold for a freeze episode to be counted) [11]. Systematic validation studies have quantified the impact of these parameters.

Table 2: Impact of Analysis Parameters on Validation Metrics as Demonstrated by Anagnostaras et al. (2010) [11]

| Parameter Setting | Impact on Correlation (r) | Impact on Y-Intercept | Impact on Slope |
|---|---|---|---|
| Increasing Motion Threshold | Variable | Lower, often non-negative intercepts achieved at a threshold of 18 (a.u.) [11] | Variable |
| Increasing Minimum Freeze Duration (Frames) | Larger frame numbers yielded higher correlations [11] | Variable | Larger frame numbers yielded a slope closer to 1 [11] |
| Optimal combination from the study: Motion Threshold 18 (a.u.), 30 frames [11] | High correlation achieved [11] | Lowest non-negative intercept, closest to 0 [11] | Slope closest to 1 [11] |

This quantitative approach reveals that validation is a balancing act. For instance, a higher motion threshold might improve the intercept but could negatively impact the slope if set too high. The optimal combination identified in the cited study (Motion Threshold of 18 and Minimum Freeze Duration of 30 frames) provided the best compromise of high correlation, an intercept near 0, and a slope near 1 [11]. It is critical to note that these optimal values are specific to the VideoFreeze system and its proprietary "Motion Index" algorithm; other software, such as the open-source tool Phobos, would require independent calibration and validation using a similar methodology [4].

Experimental Protocol for Validating VideoFreeze Settings

This protocol provides a step-by-step methodology for validating VideoFreeze software settings against manual scoring by a human observer, based on established procedures [10] [11].

Pre-Validation Setup and Apparatus Configuration

- Apparatus Preparation: Ensure the fear conditioning chamber is configured with appropriate contextual inserts. The chamber should be placed in a sound-attenuating cubicle lined with acoustic foam to minimize external noise [11].

- Video System Calibration: Use a low-noise digital video camera with a near-infrared (NIR) illumination system. NIR lighting allows for consistent video quality independent of visible light cues, which may be manipulated experimentally [11]. Ensure the camera is mounted to provide a clear, top-down view of the entire chamber floor.

- Subject and Video Preparation: Select a set of 10-20 video recordings from fear conditioning experiments that represent the full spectrum of freezing behavior (e.g., from high exploration to complete freezing). These videos should be recorded under the same conditions (chamber type, lighting, camera angle) as future planned experiments.

Manual Scoring by Human Observers

- Train Observers: Multiple trained observers, blinded to experimental conditions, should score the videos. Training ensures a consistent understanding of freezing, defined as "the suppression of all movement except that required for respiration" [10] [11].

- Scoring Method: Use instantaneous time sampling every 5-10 seconds. At each interval, the observer makes a binary judgment: "Freezing: YES or NO" [11]. Alternatively, observers can score continuously by pressing a button to mark the start and end of each freezing episode.

- Calculate Manual Freezing Score: For time sampling, percent freezing = (number of YES observations / total number of observations) * 100. For continuous scoring, percent freezing = (total time freezing / total session time) * 100 [11].
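Both manual-scoring formulas are simple enough to encode directly, which helps keep spreadsheet errors out of the validation set. A minimal sketch, assuming time-sampling judgments are stored as booleans and continuous-scoring episodes as `(onset_s, offset_s)` pairs; the function names and data layouts are illustrative.

```python
# Sketch: percent freezing from the two manual scoring methods.

def percent_from_time_sampling(observations):
    """observations: one True (freezing) / False judgment per sampling point."""
    if not observations:
        raise ValueError("no observations")
    return 100.0 * sum(observations) / len(observations)

def percent_from_continuous(episodes, session_length_s):
    """episodes: (onset_s, offset_s) pairs marked by button press and release."""
    if session_length_s <= 0:
        raise ValueError("session length must be positive")
    frozen = sum(offset - onset for onset, offset in episodes)
    return 100.0 * frozen / session_length_s

if __name__ == "__main__":
    print(percent_from_time_sampling([True, False, True, True]))  # 75.0
    print(percent_from_continuous([(0, 30), (60, 90)], 120))      # 50.0
```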

Automated Scoring and Parameter Calibration

- Initial Parameter Sweep: Analyze the same set of videos using VideoFreeze software across a range of Motion Threshold and Minimum Freeze Duration values. For example, test Motion Thresholds from 10 to 30 (in steps of 2) and Minimum Freeze Durations from 0.5 to 2.0 seconds (in steps of 0.25s) [11].

- Statistical Comparison: For each parameter combination, calculate the linear regression between the automated percent-freeze scores and the human-scored percent-freeze scores (using the average human score if multiple observers are used).

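- Optimal Parameter Selection: Select the parameter combination that yields a linear fit with:

  - a correlation coefficient (r) as close to 1 as possible;
  - a y-intercept as close to 0 as possible;
  - a slope as close to 1 as possible [10].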

- Validation and Documentation: Once the optimal parameters are identified, document them thoroughly in lab records and standard operating procedures (SOPs). These settings should be used for all subsequent experiments conducted under identical apparatus and recording conditions. Re-validation is required if any aspect of the hardware or recording environment is changed.
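The example sweep described in this protocol (Motion Thresholds 10 to 30 in steps of 2; Minimum Freeze Durations 0.5 to 2.0 s in steps of 0.25 s) yields 77 combinations. A minimal sketch that enumerates them and converts durations to frames, assuming recordings at 30 frames per second. Because 0.25 s at 30 fps is 7.5 frames, some durations fall on half frames; rounding half up is an assumption here, so check how your software actually interprets the setting.

```python
# Sketch: enumerate the example parameter grid and convert durations to
# frames. The 30 fps rate and half-up rounding are assumptions.

def build_parameter_grid(fps=30):
    """Return (threshold, duration_s, frames) tuples for the example sweep."""
    thresholds = list(range(10, 31, 2))               # 10, 12, ..., 30 (a.u.)
    durations_s = [0.5 + 0.25 * k for k in range(7)]  # 0.5, 0.75, ..., 2.0 s
    grid = []
    for threshold in thresholds:
        for duration in durations_s:
            # Round half up: e.g. 0.75 s * 30 fps = 22.5 frames -> 23 frames.
            frames = int(duration * fps + 0.5)
            grid.append((threshold, duration, frames))
    return grid

if __name__ == "__main__":
    grid = build_parameter_grid()
    print(len(grid))                            # 77
    print(sorted({frames for _, _, frames in grid}))  # [15, 23, 30, 38, 45, 53, 60]
```

Recording the frame count alongside the duration in the lab's SOP removes any ambiguity about which setting was actually validated.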

The following workflow diagram summarizes this validation process:

The Scientist's Toolkit: Essential Research Reagents and Materials

A properly configured fear conditioning system relies on several integrated components. The following table details the essential materials and their functions for achieving reliable and valid automated freezing analysis.

Table 3: Key Research Reagent Solutions for Video-Based Fear Conditioning

| Item | Function/Description | Critical for Validation |
|---|---|---|
| Sound-Attenuating Cubicle | Enclosure lined with acoustic foam to minimize external noise and vocalizations between chambers [11]. | Ensures behavioral responses are to controlled stimuli, not external noise. |
| Near-Infrared (NIR) Illumination System | Provides consistent, non-visible lighting for the camera [11]. | Eliminates variable shadows and allows scoring in darkness; crucial for visual cue experiments. |
| Low-Noise Digital Video Camera | High-quality camera to capture rodent behavior with minimal video noise [11]. | Reduces false movement detection, which is fundamental for accurate motion index calculation. |
| Contextual Inserts | Modular walls and floor covers to alter the chamber's geometry, texture, and visual cues [11]. | Enables context discrimination experiments; validation must be consistent across all contexts used. |
| Calibrated Shock Generator | Delivers a precise, consistent electric footshock as the Unconditioned Stimulus (US) [12] [13]. | Standardizes the aversive experience across subjects and experimental days. |
| Precision Speaker System | Delivers an auditory cue (tone, white noise) as the Conditioned Stimulus (CS) at calibrated dB levels [12] [13]. | Ensures consistent presentation of the CS for associative learning. |
| Validation Video Archive | A curated set of video recordings spanning the full range of freezing behavior. | Serves as a gold-standard reference for initial validation and periodic system checks. |

The path to generating publication-quality, reliable data with automated behavioral analysis software is unequivocally dependent on rigorous, empirical validation. As detailed in this application note, tools like VideoFreeze are powerful but not infallible; their output is only as valid as the parameters upon which they are set. The process of comparing automated scores to human expert scoring, and demanding a linear fit with a slope near 1, an intercept near 0, and a high correlation coefficient, is not an optional preliminary step. It is a core, foundational component of the experimental method itself. By adhering to the principles and protocols outlined herein, researchers in neuroscience and drug development can be confident that their automated freezing scores faithfully reflect true fear behavior, thereby strengthening the integrity of their findings on the mechanisms of memory and the effects of novel therapeutic compounds.

Automated behavioral assessment has become a cornerstone of modern behavioral neuroscience, offering greater efficiency and objectivity than manual scoring. Among these tools, VideoFreeze (Med Associates, Inc.) is a widely used solution for assessing conditioned fear behavior in rodents. The software automatically quantifies freezing behavior (the complete absence of movement except for movement necessitated by respiration), a species-typical defensive response and a validated measure of associative fear memory. However, the reliability of automated scoring depends heavily on parameter optimization and calibration, which ensure that software scores accurately reflect the animal's behavior across experimental contexts and conditions [1].

Research on fear generalization and on genetically modified animals faces a particular challenge: detecting subtle behavioral effects, where automated measurements can diverge from manual scoring if the software is not carefully configured [1]. This application note details the use of VideoFreeze within a research pipeline, providing validated protocols for fear conditioning, memory studies, and pharmacological screening, with a specific focus on parameter validation as a prerequisite for robust, reproducible findings.

Validation and Parameter Optimization

A primary consideration for employing VideoFreeze is the empirical optimization of software settings. A foundational study systematically validated parameters for mice, recommending a motion index threshold of 18 and a minimum freeze duration of 30 frames (1 second at 30 frames per second) [1]. For rats, which have a larger body mass and therefore produce more pixel change from respiratory movement, a higher motion threshold of 50 has been used successfully [1].

Key Considerations for Parameter Validation

Discrepancies between automated and manual scoring can arise from contextual variation. In one study, agreement between VideoFreeze and human observers was good in one context (Context B, kappa = 0.71) but poor in another (Context A, kappa = 0.05), despite identical software settings and camera calibration procedures [1]. Chamber inserts, lighting conditions (white balance), and grid floor type can all influence pixel-change algorithms and require careful attention during setup [1].
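Kappa compares categorical freeze/no-freeze calls rather than summary percentages. The sketch below computes Cohen's kappa in plain Python, assuming that the software output and the human observer have both been reduced to the same sequence of time epochs (for example, 1-s bins) labeled freezing or not freezing; the epoch length and the function name are illustrative assumptions, not the procedure of the cited study. scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic.

```python
# Cohen's kappa for two binary label sequences (minimal sketch).
# Assumption: both scorers labeled the same epochs (e.g. 1-s bins) as
# freezing (True) or not freezing (False).


def cohens_kappa(rater_a: list[bool], rater_b: list[bool]) -> float:
    """Return Cohen's kappa for two equal-length binary label sequences."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: fraction of epochs where both raters give the same call.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal freezing rate.
    pa = sum(rater_a) / n
    pb = sum(rater_b) / n
    p_chance = pa * pb + (1 - pa) * (1 - pb)
    if p_chance == 1.0:
        # Both raters used one identical category throughout: kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical 1-s epoch calls from the software and a human observer.
software = [True, True, False, False]
human = [True, False, False, False]
print(cohens_kappa(software, human))
```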

Table 1: Key Parameters for VideoFreeze Validation

| Parameter | Species | Recommended Value | Notes |
|---|---|---|---|
| Motion Index Threshold | Mouse | 18 [1] | Balances detecting non-freezing movement against ignoring respiration. |
| | Rat | 50 [1] | Higher threshold accounts for larger animal size and greater pixel change from breathing. |
| Minimum Freeze Duration | Mouse & Rat | 30 frames (1 s at 30 fps) [1] | Standard duration to define a freezing bout. |
| Validation Metric | - | Cohen's Kappa [1] | Assesses agreement between software and human observer scores. |

Alternative and Complementary Software

While VideoFreeze is widely used, other software solutions exist. ImageFZ is a freely available video analysis system that can automatically control auditory cues and footshocks, and analyze freezing behavior with reliability comparable to a human observer [12]. Phobos is another freely available, self-calibrating software that uses a brief manual quantification by the user to automatically adjust its two core parameters (freezing threshold and minimum freezing time), demonstrating intra- and inter-user variability similar to manual scoring [4].

Detailed Experimental Protocols

Protocol 1: Contextual Fear Conditioning and Generalization Test

This protocol is designed to assess hippocampal-dependent contextual fear memory and its generalization to a similar, but distinct, context [1].

- Animals: Male Wistar rats (approximately 275 g).

- Apparatus: Med Associates fear conditioning chambers.

- Context A: Standard chamber with a grid floor, black triangular "A-frame" insert, and illumination with both infrared and white light. Cleaned with a household cleaning product.

- Context B: Standard chamber with a staggered grid floor, white plastic curved back wall insert, and infrared light only. Cleaned with a different cleaning product.

- Day 1: Conditioning.

- Place the rat in Context A.

- Allow a 4-minute exploration period.

- Administer five unsignaled footshocks (0.8 mA, 1 s duration), separated by a 90-second inter-trial interval.

- Return the rat to its home cage 1 minute after the last shock.

- Day 2: Testing.

- Place the rat in either Context A (for memory test) or Context B (for generalization test) for 8 minutes. No shocks are delivered.

- Record the session for analysis with VideoFreeze.

- VideoFreeze Settings: Motion threshold = 50, Minimum freeze duration = 30 frames [1].

- Data Analysis: Compare the percentage of time spent freezing during the 8-minute test between the two groups. Successful discrimination is indicated by significantly less freezing in Context B than in Context A.
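When programming the Day 1 session, it helps to write the event timeline out explicitly, because the protocol does not state whether the 90-second inter-trial interval runs from shock offset or from shock onset. The sketch below assumes offset-to-onset intervals and that the 1-minute post-shock period starts at the offset of the last shock; `day1_schedule` and `Shock` are hypothetical names, and the output is a timeline to transcribe into the control software, not a Med Associates file format.

```python
# Event timeline for Protocol 1, Day 1 (hypothetical helper for session programming).
# Assumptions: the 90-s ITI runs from the offset of one shock to the onset of the
# next, and the 1-min post-shock period starts at the offset of the last shock.
from dataclasses import dataclass


@dataclass(frozen=True)
class Shock:
    onset_s: float
    duration_s: float


def day1_schedule(baseline_s: float = 240.0, n_shocks: int = 5, shock_s: float = 1.0,
                  iti_s: float = 90.0, post_s: float = 60.0) -> tuple[list[Shock], float]:
    """Return the shock list and the total session length in seconds."""
    shocks = []
    t = baseline_s  # 4-min exploration period before the first shock
    for i in range(n_shocks):
        shocks.append(Shock(onset_s=t, duration_s=shock_s))
        t += shock_s
        if i < n_shocks - 1:
            t += iti_s  # interval before the next shock
    return shocks, t + post_s


shocks, total_s = day1_schedule()
for s in shocks:
    print(f"shock at {s.onset_s:.0f} s for {s.duration_s:.0f} s")
print(f"session ends at {total_s:.0f} s")
```

Under these assumptions the shocks start at 240, 331, 422, 513, and 604 s, and the session lasts 665 s (just over 11 minutes).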

Protocol 2: Auditory Cued Fear Conditioning and Extinction

This protocol, adapted from recent studies, assesses amygdala-dependent cued fear memory and its subsequent extinction, and is highly suitable for pharmacological screening [14] [15].

- Animals: C57BL/6J mice (e.g., 8 weeks old).

- Apparatus: Med Associates Video Fear Conditioning system with two distinct contexts (A and B).

- Day 1: Fear Conditioning (Context A).

- Place the mouse in Context A.

- Allow a 90-second adaptation period.

- Deliver three pairings of a conditioned stimulus (CS: 30 s tone, 2.2 kHz, 96 dB) that co-terminates with an unconditioned stimulus (US: 2 s, 0.7 mA footshock). Each pairing is separated by a 30-second inter-trial interval.

- Leave the mouse in the chamber for a final 30 seconds before returning it to its home cage.

- Clean the chamber with 75% ethanol between sessions [15].

- Day 2: Cued Fear Extinction (Context B).

- (For pharmacological studies) Administer the drug (e.g., psilocybin at 1 mg/kg, i.p.) or vehicle 30 minutes before the session [14].

- Place the mouse in the modified Context B.

- After a 90-second adaptation, present 15 CS tones (without the US) with a fixed inter-tone interval.

- Record freezing behavior automatically via VideoFreeze.

- Subsequent Days: Extinction Retention and Fear Renewal Test.

- Day 3: Test for extinction retention by re-exposing the mouse to the CS in Context B.

- Day 11: Test for fear renewal by exposing the mouse to the CS in a novel, third context (Context C) [14].

- Data Analysis: Analyze freezing levels across CS presentations on each day. A successful drug-facilitated extinction would be indicated by a more rapid reduction in freezing during the Day 2 extinction session and lower freezing during the retention and renewal tests.
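The per-presentation analysis described above can be sketched as follows, assuming freezing has been exported as one True/False value per video frame at a known frame rate. The inter-tone interval is a placeholder because the protocol specifies only that it is fixed, and the Day 2 tones are assumed to last 30 s, as on Day 1; all names are hypothetical.

```python
# Percent freezing within each CS window (hypothetical helper, illustrative only).
def freezing_per_trial(freeze_frames: list[bool], fps: float,
                       cs_onsets_s: list[float], cs_duration_s: float) -> list[float]:
    """Return the percent of frames scored as freezing inside each CS presentation."""
    results = []
    for onset in cs_onsets_s:
        start = int(round(onset * fps))
        stop = int(round((onset + cs_duration_s) * fps))
        if start < 0 or stop > len(freeze_frames) or stop <= start:
            raise ValueError(f"CS starting at {onset} s is not fully inside the recording")
        window = freeze_frames[start:stop]
        results.append(100.0 * sum(window) / len(window))
    return results


# Hypothetical Day 2 layout: 90-s adaptation, then 15 tones of 30 s each.
ADAPTATION_S = 90.0
CS_DURATION_S = 30.0
INTER_TONE_S = 60.0  # placeholder gap from tone offset to next onset; use your protocol's value
CS_ONSETS_S = [ADAPTATION_S + i * (CS_DURATION_S + INTER_TONE_S) for i in range(15)]
```

Plotting the 15 values in order gives the within-session extinction curve; a drug that facilitates extinction would be expected to make this curve fall faster than in vehicle-treated mice.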

Applications in Pharmacological and Neuromodulation Research

VideoFreeze provides a robust quantitative readout for screening novel therapeutic compounds and understanding neural circuit mechanisms underlying fear memory.

Pharmacological Screening: The Case of Psilocybin

A 2024 study utilized VideoFreeze to systematically evaluate the effect of psilocybin on fear extinction [14]. The key findings were:

- Acute Enhancement: Psilocybin (0.5, 1, and 2 mg/kg) robustly enhanced fear extinction when administered 30 minutes prior to the extinction session (Day 2).

- Long-Term, Dose-Sensitive Effects: The 1 mg/kg dose was most effective, leading to enhanced extinction retention (Day 3) and suppressed fear renewal in a novel context (Day 11), effects that persisted for 8 days.

- Mechanism: The effect was dependent on concurrent extinction training and was blocked by 5-HT2A receptor antagonism [14].

Neural Circuit Manipulation: Stellate Ganglion Block (SGB)

A 2025 study employed VideoFreeze to investigate the mechanism of SGB, a clinical procedure for PTSD, in a mouse model of conditioned fear [15]. The study demonstrated that SGB, when performed after fear conditioning, diminished the consolidation of conditioned fear memory. This effect was correlated with hypoactivity of the locus coeruleus noradrenergic (LCNE) projections to the basolateral amygdala (BLA), a critical circuit for fear memory. Artificially activating this LCNE-BLA pathway reversed the beneficial effect of SGB [15].

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 2: Key Research Reagents and Materials for VideoFreeze Studies

| Item | Function/Description | Example Use |
|---|---|---|
| VideoFreeze Software (Med Associates) | Automated video-based analysis of freezing behavior; calculates motion index, freezing bouts, and percent time freezing. | Core software for all fear conditioning and extinction experiments [9]. |
| Near-IR Video Fear Conditioning System | Standardized apparatus for fear conditioning; includes sound-attenuating boxes, shockable grid floors, and tone generators. | Provides controlled environment for behavioral testing [16] [15]. |
| Psilocybin | A classic serotonergic psychedelic being investigated for its fear extinction facilitating properties. | Used at 1 mg/kg (i.p.) to enhance extinction learning and block fear renewal; effect is 5-HT2A receptor dependent [14]. |
| Ropivacaine (0.25%) | A local anesthetic used to reversibly block the stellate ganglion. | Used to investigate the role of the sympathetic nervous system and LCNE-BLA circuit in fear memory consolidation [15]. |
| Adeno-Associated Viruses (AAVs) | Viral vectors for targeted gene expression, such as chemogenetics or optogenetics, in specific neural populations. | Used to selectively manipulate neural circuits (e.g., LCNE-BLA pathway) to test causal relationships [15]. |
| Pseudorabies Virus (PRV) | A trans-synaptic retrograde tracer used to map neural circuits. | Injected into the stellate ganglion to identify upstream brain nuclei like the LC that project to it [15]. |

Setting Up for Success: A Step-by-Step Protocol for Configuring and Calibrating VideoFreeze

This application note provides a comprehensive framework for establishing and validating a laboratory setup for rodent fear conditioning research using automated behavioral analysis software such as VideoFreeze. Proper configuration of camera placement, lighting conditions, and chamber considerations is paramount for generating reliable, reproducible data in behavioral neuroscience. This guide details standardized protocols and technical specifications to optimize experimental conditions, minimize artifacts, and ensure accurate detection of freezing behavior—the primary metric in fear conditioning paradigms. Implementation of these guidelines will enhance data validity for researchers investigating learning, memory, and pathological fear in rodent models.

Pavlovian fear conditioning has become a predominant model for studying learning, memory, and pathological fear in rodents due to its efficiency, reproducibility, and well-defined neurobiology [10]. The paradigm's utility in large-scale genetic and pharmacological screens has necessitated the development of automated scoring systems to replace tedious and potentially biased human scoring. Video-based systems like VideoFreeze utilize digital video and sophisticated algorithms to detect freezing behavior—defined as the complete suppression of all movement except for respiration [10]. However, the accuracy of these systems is highly dependent on the physical experimental setup. Suboptimal camera placement, inappropriate lighting, or improper chamber configuration can introduce significant artifacts, leading to inaccurate freezing measurements and potentially invalid experimental conclusions. This document provides evidence-based guidelines for configuring these critical elements to ensure the highest data quality and experimental reproducibility.

Core System Components and Specifications

Research Reagent Solutions

The table below outlines the essential materials required for establishing a fear conditioning laboratory optimized for video-based analysis.

Table 1: Essential Materials for Fear Conditioning Laboratory Setup

| Component | Function & Importance | Technical Specifications |
|---|---|---|
| Near-Infrared (NIR) Camera | Captures high-quality video in darkness or low light without disturbing rodent behavior. | Adequate resolution and frame rate (e.g., 30 fps), NIR-sensitive, fixed focal length, mounted for a clear, direct view of the chamber [17]. |
| NIR Light Source | Illuminates the chamber for the camera without providing visible light that could affect rodent behavior. | Wavelength invisible to rodents (~850 nm); positioned to provide even, shadow-free illumination [17] [18]. |
| Fear Conditioning Chamber | The primary context for associative learning; design impacts behavioral outcomes. | Constructed of acrylic; available in various shapes and sizes (e.g., mouse: 17x17x25 cm, rat: 26x26x30 cm) [19]. |
| Contextual Inserts | Allow modification of the chamber's appearance to create distinct contexts for cued vs. contextual testing. | Interchangeable walls and floors with different patterns, colors, or textures [17] [19]. |
| Grid Floor | Delivers the aversive unconditioned stimulus (mild footshock). | Stainless-steel rods; spaced appropriately (e.g., mouse: 0.5-1.0 cm) and connected to a calibrated shock generator [12] [19]. |
| Sound Attenuation Cubicle | Houses the chamber to isolate the subject from external auditory and visual disturbances. | Sound-absorbing interior; often includes a speaker for presenting auditory conditioned stimuli [12] [19]. |

Camera Placement and Technical Requirements

Optimal camera placement is the cornerstone of reliable video tracking. The camera must have an unobstructed, direct view of the entire chamber floor to ensure the rodent is always within the frame and properly detected.

Perspective and Mounting: The camera should be mounted either directly above the chamber (for a top-down view) or directly in front of it, so that the rodent's entire body is visible with minimal perspective distortion. For standard rectangular chambers, ceiling mounting is often preferred, although Med Associates, for instance, mounts its camera on the cubicle door to ensure proper positioning [17]. Whichever view is used, the camera must be firmly fixed, because vibrations cause motion artifacts that the software can misinterpret as animal movement.

Field of View and Resolution: The camera's field of view should tightly frame the testing chamber to maximize the pixel area dedicated to the subject. Adequate spatial resolution and frame rate (e.g., 30 frames per second) allow the software to distinguish subtle movements, such as a whisker twitch or tail flick, from true freezing behavior [17]. This high-fidelity data is crucial for accurate threshold setting.

Focus and Lensing: The camera must be manually focused on the plane of the chamber floor. Autofocus should be disabled to prevent the system from refocusing during the session, which can temporarily disrupt tracking. A fixed focal length lens is ideal for consistency across experiments.

Lighting Considerations: Visible vs. Near-Infrared (NIR)

Lighting is a critical variable that influences both rodent behavior and video quality.

The Case for Near-Infrared (NIR) Lighting: Rodents are nocturnal animals, and bright visible lighting can be aversive and anxiety-provoking, thereby altering their natural behavioral repertoire [17]. NIR light is outside the visible spectrum of both rodents and humans, allowing experiments to be conducted in what is effectively complete darkness from the animal's perspective. This eliminates the potential confound of light stress and enables the measurement of more naturalistic behaviors. Preliminary research suggests that mice act differently in dim light versus complete darkness, showing increased activity and center zone entries in an open field when in the dark [18].

Technical Implementation of NIR: An NIR light source must be positioned to provide uniform illumination across the entire chamber. Uneven lighting can create shadows or "hot spots" that confuse video analysis algorithms. Many commercial systems, like the Med Associates NIR Video Fear Conditioning system, integrate NIR lighting directly [17]. For custom setups, IR-emitting LED panels or lamps can be used. The camera must be sensitive to the specific wavelength of the NIR light used (typically 850-940 nm).

Using Visible Light as a Cue: While NIR is preferred for general illumination, visible light can be effectively used as a discrete conditioned stimulus (CS) within a session. Because the recording is done with an NIR-sensitive camera, the presentation of a visible light cue does not impact the quality of the video recording [17]. This allows for great flexibility in experimental design.

Chamber Design and Contextual Modification

The fear conditioning chamber serves as more than just a container; it is a primary stimulus in contextual learning.

Chamber Materials and Construction: Chambers are typically constructed of clear or opaque acrylic, which is easy to clean and, when clear, provides unobstructed sight lines. A foam-lined cubicle can help with sound attenuation [17]. The chamber should be cleaned thoroughly between subjects with disinfectants such as 70% ethanol or super hypochlorous water to remove olfactory cues that could bias results [12].

Contextual Manipulation for Cued Testing: To specifically test for cued fear memory, it is imperative to dissociate the auditory cue from the original training context. This requires presenting the cue in a chamber that is distinctly different in multiple sensory modalities. This can be achieved by:

- Changing the Shape: Using a triangular chamber instead of a square one [12].

- Altering the Floor: Replacing the grid floor with a smooth, solid plastic covering [20] [12].

- Modifying the Walls: Using contextual inserts with different patterns, colors, or textures [17] [19].

- Adjusting Lighting: Lowering the light intensity (e.g., from 100 lux to 30 lux) or switching from white light to NIR-only [12].

- Introducing New Odors: Placing a drop of food essence (e.g., mint or orange extract) in the new context [12].

The following diagram illustrates the core considerations for chamber setup and their relationship to data quality.

Detailed Experimental Protocols

Protocol 1: Standard Contextual and Cued Fear Conditioning

This protocol outlines a standard three-day procedure for assessing both hippocampal-dependent contextual memory and amygdala-dependent cued fear memory [20] [21] [12].

Day 1: Conditioning

- Apparatus Setup: Use the conditioning chamber (Context A) with grid floors, illuminated with white light (e.g., 100 lux). Ensure the chamber is clean and free of residual odors.

- Animal Preparation: Transfer mice from the holding room to the testing room at least 30 minutes before the session to allow for habituation.

- Conditioning Session:

- Place the mouse in the conditioning chamber.

- Allow a 120-second habituation period.

- Present a 30-second auditory conditioned stimulus (CS), such as a white noise or pure tone (e.g., 55 dB).

- During the last 2 seconds of the CS, deliver a mild footshock unconditioned stimulus (US) (e.g., 0.5 mA for 2 seconds).

- Deliver 2-3 CS-US pairings in total, separated by 30-90 second inter-trial intervals.

- Leave the mouse in the chamber for an additional 60-90 seconds after the final shock.

- Return the mouse to its home cage.

- Data Acquisition: VideoFreeze software records the entire session, calculating a motion index.

Day 2: Context Test (Hippocampal-Dependent Memory)

- Apparatus Setup: Use the exact same Context A as on Day 1.

- Testing Session:

- Place the mouse in Context A.

- Record behavior for 5-10 minutes (e.g., 300 seconds) without presenting any tones or shocks.

- Data Analysis: The software measures the percent time spent freezing, which reflects contextual fear memory.

Day 3: Cued Test (Amygdala-Dependent Memory)

- Apparatus Setup: Use a novel context (Context B). This should differ from Context A in shape (e.g., triangular insert), floor (smooth plastic), lighting (NIR only or 30 lux), and odor (e.g., orange extract instead of mint) [20] [12].

- Testing Session:

- Place the mouse in Context B.

- Allow a 180-300 second baseline period to assess generalized fear to the new context.

- Present the CS (the same auditory cue used in training) 2-3 times for 30 seconds each.

- Record behavior for the entire session.

- Data Analysis: Freezing during the CS presentations is compared to freezing during the pre-CS baseline. Increased freezing specifically during the CS indicates successful cued fear memory.
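The CS-versus-baseline comparison can also be computed directly from a list of freezing bouts. This sketch assumes bouts are available as non-overlapping (start, end) times in seconds and that the baseline starts at time 0; the bout format and the function names are assumptions for illustration, not a VideoFreeze export format.

```python
# Cued-test comparison: pre-CS baseline vs. CS freezing (minimal sketch).
# Assumption: bouts are non-overlapping (start_s, end_s) intervals in seconds.
Bout = tuple[float, float]


def percent_freezing(bouts: list[Bout], win_start: float, win_end: float) -> float:
    """Percent of the window [win_start, win_end) covered by freezing bouts."""
    if win_end <= win_start:
        raise ValueError("window must have positive length")
    covered = 0.0
    for start, end in bouts:
        # Overlap between this bout and the window (0 when they do not intersect).
        covered += max(0.0, min(end, win_end) - max(start, win_start))
    return 100.0 * covered / (win_end - win_start)


def cue_vs_baseline(bouts: list[Bout], baseline_s: float,
                    cs_onsets_s: list[float], cs_duration_s: float) -> tuple[float, float]:
    """Return (baseline percent freezing, mean percent freezing across CS presentations)."""
    baseline = percent_freezing(bouts, 0.0, baseline_s)
    cs_scores = [percent_freezing(bouts, t, t + cs_duration_s) for t in cs_onsets_s]
    return baseline, sum(cs_scores) / len(cs_scores)


# Hypothetical session: 180-s baseline, two 30-s tones at 180 s and 240 s.
example_bouts = [(0.0, 18.0), (190.0, 205.0), (255.0, 270.0)]
print(cue_vs_baseline(example_bouts, 180.0, [180.0, 240.0], 30.0))
```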

The workflow for this standard protocol is summarized below.

Protocol 2: Context Discrimination Assay

This more advanced protocol tests the animal's ability to distinguish between two highly similar contexts, a process that requires fine-grained pattern separation in the dentate gyrus [20].

- Pre-training Habituation (Day 1): In the morning, place mice in Context A for 10 minutes. In the afternoon, place them in Context B for 10 minutes. No shocks are given.

- Training (Day 2): Place mice in Context A. After 148 seconds (pre-shock period), deliver a single footshock (e.g., 0.5 mA, 2 seconds). Remove the mouse 30 seconds later.

- Testing (Day 2, 4+ hours post-training): Place the mice in Context B for 180 seconds with no shock.

- Data Analysis: The key measure is the difference in freezing during the pre-shock period in Context A versus the equivalent period in Context B on the test day. Successful discrimination is indicated by significantly higher freezing in the shock-paired context (A) than in the neutral context (B). All freezing scoring is performed using the default settings of the VideoFreeze software [20].

Protocol 3: System Validation and Calibration

Before commencing experimental studies, it is crucial to validate that the automated VideoFreeze system is accurately scoring freezing behavior against the gold standard of human observation.

- Sample Recording: Record video sessions of rodents (including a range of strains, genotypes, and freezing levels) during fear conditioning tests.

- Manual Scoring: A trained human observer, blind to experimental conditions, scores the videos. Freezing is typically defined as the absence of all movement except for respiration, and is often measured using instantaneous time sampling every 3-10 seconds [10].

- Automated Scoring: The same video files are analyzed using the VideoFreeze software with the chosen threshold settings.

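- Statistical Comparison: The data from the human scorer and the automated system are compared. A well-validated system should show a high correlation between automated and manual scores, together with a linear fit whose slope is close to 1 and whose intercept is close to 0.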

Table 2: Key Parameters for Standard Fear Conditioning Protocols

| Protocol Phase | Duration | Stimulus Parameters | Lighting & Context | Primary Measurement |
|---|---|---|---|---|
| Conditioning | ~5-8 min total | 2-3 x (30 sec Tone + 2 sec 0.3-0.5 mA Shock) | Context A: Bright light (100 lux), grid floor | Acquisition of fear learning |
| Context Test | 5 min (300 sec) | No tone or shock | Context A: Identical to conditioning | % Freezing: Hippocampal-dependent memory |
| Cued Test | 10 min (600 sec) | 3 x 30 sec Tone presentations | Context B: Dim/NIR light, solid floor, different shape/odor | % Freezing to Tone vs. Baseline: Amygdala-dependent memory |

Troubleshooting and Quality Control

Even with an optimal setup, regular quality control is essential.

- Poor Contrast or Detection: Ensure the animal's fur color contrasts with the chamber floor (e.g., dark mouse on white floor, albino mouse on black floor) [12]. Verify that NIR illumination is uniform and that the camera is not over- or underexposed.

- Inconsistent Freezing Scores Between Systems: Automated systems and setups can disagree with one another and with human observers. Correlate your system's output with manual scoring as described in the validation protocol, and do not assume a system is accurate based on manufacturer claims alone [10].

- High Baseline Freezing: This can be caused by excessive ambient noise, vibrations, or stress from bright lighting. Ensure the sound-attenuation cubicle is effective, and use NIR lighting to minimize anxiety.

- Failure to Discriminate Contexts: If the context discrimination assay fails, increase the number of differentiating features between Context A and B (e.g., different transport methods, housing rooms, and more distinct odors and textures) [20].

The validity of data generated in fear conditioning experiments is inextricably linked to the precision of the laboratory setup. Meticulous attention to camera placement, the implementation of NIR lighting to minimize behavioral confounds, and the thoughtful design of experimental chambers and contexts are not merely technical details but fundamental prerequisites for rigorous scientific inquiry. By adhering to the guidelines and protocols outlined in this document, researchers can confidently establish a behavioral testing environment that ensures the accurate detection of freezing behavior, thereby yielding reliable, reproducible, and meaningful results in the study of learning, memory, and fear.

Validating software settings is a critical prerequisite for ensuring the reliability and reproducibility of automated fear conditioning data in behavioral neuroscience. Automated systems like VideoFreeze (Med Associates) provide significant advantages in throughput and objectivity over manual scoring but require meticulous configuration to accurately reflect rodent freezing behavior [22] [23]. Incorrect parameter settings can lead to systematic measurement errors, potentially compromising data interpretation and experimental outcomes, particularly in studies detecting subtle behavioral effects such as fear generalization or in phenotyping genetically modified animals [23]. This Application Note provides detailed protocols for defining core session parameters, stimuli, and trial structures to optimize VideoFreeze performance, framed within the broader context of methodological validation for rodent behavior research.

The Scientist's Toolkit: Essential Research Reagents and Materials

The table below catalogs the essential equipment and software required to implement the fear conditioning and validation protocols described in this note.

Table 1: Key Research Reagent Solutions for Fear Conditioning Experiments

| Item Name | Function/Application | Key Specifications |
|---|---|---|
| Video Fear Conditioning (VFC) System [9] | Core apparatus for presenting stimuli and recording behavior. | Includes conditioning chambers, shock generators, sound sources, and near-infrared camera systems. |
| VideoFreeze Software [9] [23] | Automated measurement and analysis of freezing behavior. | User-defined stimuli, intervals, and session durations; calculates motion index, freezing episodes, and percent time freezing. |
| Phobos Software [22] | A freely available, self-calibrating alternative for automated freezing analysis. | Uses a brief manual quantification to auto-adjust parameters; requires .avi video input; minimum resolution 384×288 pixels at 5 fps. |
| ImageFZ Software [13] | Free video-analyzing system for fear conditioning tests. | Controls up to 4 apparatuses; automatically presents auditory cues and footshocks based on a text file. |
| Contextual Inserts & Odors [23] [13] | Creating distinct environments for contextual fear testing and discrimination. | Varying chamber shapes (e.g., square, triangular), floor types (grid, flat), lighting levels, and cleaning solutions (e.g., ethanol, hypochlorous water, various cleaners). |

Configuring Core Software Parameters

Defining Freezing Detection Parameters

The accurate quantification of freezing behavior hinges on two primary software parameters: the motion index threshold and the minimum freeze duration. The motion index represents the amount of pixel change between video frames, and the threshold is the level below which the animal is considered freezing [22]. The minimum freeze duration is the consecutive time the motion index must remain below the threshold for a behavior to be classified as a freezing episode [23].
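To make the idea of a pixel-change motion index concrete, the following NumPy sketch counts, for each pair of consecutive frames, the pixels whose grayscale intensity changes by more than a small noise tolerance. This is a generic frame-differencing approach, not VideoFreeze's proprietary computation, so its values are on a different scale and the published thresholds (18 for mice, 50 for rats) do not transfer to it; the `noise_tolerance` parameter is a hypothetical addition.

```python
# Generic frame-differencing motion index (illustrative; NOT VideoFreeze's algorithm).
import numpy as np


def motion_index(frames: np.ndarray, noise_tolerance: int = 10) -> np.ndarray:
    """Return one motion value per pair of consecutive frames.

    frames: array of shape (n_frames, height, width) with 8-bit grayscale pixels.
    noise_tolerance: hypothetical per-pixel intensity change (0-255) treated as
    camera noise rather than movement.
    """
    if frames.ndim != 3 or frames.shape[0] < 2:
        raise ValueError("expected a (n_frames, height, width) stack of at least 2 frames")
    # Cast to a signed type so that subtracting uint8 values cannot wrap around.
    diffs = np.abs(np.diff(frames.astype(np.int16), axis=0))
    # Count the pixels that changed by more than the tolerance in each transition.
    return (diffs > noise_tolerance).sum(axis=(1, 2))


# Usage on a synthetic 3-frame clip: one pixel changes between the last two frames.
clip = np.zeros((3, 4, 4), dtype=np.uint8)
clip[2, 0, 0] = 200
print(motion_index(clip))
```

The noise tolerance plays the same role that a low-noise camera plays in hardware: without it, sensor noise alone would register as movement in every frame.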

Optimized parameters are species-dependent and must be validated for your specific setup. Published settings include:

- For Mice: A motion index threshold of 18 and a minimum freeze duration of 1 second (30 frames) [23].

- For Rats: A motion index threshold of 50 and a minimum freeze duration of 1 second (30 frames) [23].

These parameters must balance sensitivity to small non-freezing movements (e.g., tail twitches) against insensitivity to the respiratory and cardiac motion that persists during genuine freezing bouts [23].
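The two-parameter rule (motion below threshold for at least the minimum duration) can be sketched as a run-length scan over a per-frame motion trace. This is a minimal illustration assuming one motion value per frame at 30 frames per second, so 30 frames correspond to 1 s; it is not Med Associates' implementation, and the function name is hypothetical.

```python
# Threshold + minimum-duration freeze detection (minimal sketch, not Med Associates code).
def detect_freezing(motion: list[float], threshold: float,
                    min_frames: int) -> tuple[list[tuple[int, int]], float]:
    """Return freezing bouts as half-open (start, end) frame ranges and percent freezing.

    A frame is a candidate when its motion value is below `threshold`; a run of
    candidates becomes a bout only if it lasts at least `min_frames` frames.
    """
    bouts = []
    run_start = None
    for i, value in enumerate(motion):
        if value < threshold:
            if run_start is None:
                run_start = i  # a sub-threshold run begins
        elif run_start is not None:
            if i - run_start >= min_frames:
                bouts.append((run_start, i))
            run_start = None  # movement ends the run
    # A run that continues to the last frame is closed here.
    if run_start is not None and len(motion) - run_start >= min_frames:
        bouts.append((run_start, len(motion)))
    frozen_frames = sum(end - start for start, end in bouts)
    percent = 100.0 * frozen_frames / len(motion) if motion else 0.0
    return bouts, percent


# Mouse settings quoted above: threshold 18, minimum 30 frames (1 s at 30 fps).
trace = [5.0] * 40 + [100.0] * 20 + [5.0] * 10 + [100.0] * 30  # hypothetical trace
print(detect_freezing(trace, threshold=18, min_frames=30))
```

Raising `min_frames` discards brief pauses and so lowers percent freezing, whereas raising `threshold` admits more small movements as freezing and so raises it. This interaction is why both values need to be validated together against manual scoring.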

Impact of Environmental and Hardware Settings

Environmental and hardware configurations significantly impact the performance of automated scoring. Key factors to control include:

- Contextual Cues: The physical setup of the conditioning chamber (e.g., grid floor type, wall inserts, lighting) must be consistent within an experimental group. Differences in context can unexpectedly alter the agreement between automated and manual scoring, even with identical software parameters [23].

- Camera Calibration: Proper white balance and contrast are crucial. Studies have shown that discrepancies between software and human scores can persist despite calibration attempts, underscoring the need for ongoing validation [23]. The subject should be clearly distinguishable from the background, which can be achieved by using a white floor for dark-furred mice and a black floor for albino mice [13].

Experimental Protocols for Software Validation

Protocol: Validating VideoFreeze Settings Against Manual Scoring

This protocol is designed to test the accuracy of automated freezing scores against the gold standard of human observation, which is especially critical when working with new contexts or animal models [23].

- Apparatus Setup: Establish two distinct testing contexts (Context A and B) using different chamber inserts, floor types, lighting, and olfactory cues (e.g., different cleaning solutions) [23].

- Subject Training & Testing:

- Train a cohort of rats or mice in Context A using a standard fear conditioning protocol (e.g., a 2-min acclimation followed by 5 unsignaled footshocks).

- 24 hours later, test the subjects in either Context A or the novel Context B for 8 minutes without any shocks [23].

- Behavioral Scoring:

- Automated Scoring: Analyze all test sessions using VideoFreeze with the predetermined parameters (e.g., motion threshold 50 for rats).

- Manual Scoring: Have at least two trained observers, blind to the software scores and experimental groups, manually score freezing from the video recordings. Freezing is defined as the absence of all movement except for respiration [23].

- Data Analysis & Validation:

- Calculate the percent freezing for each animal using both methods.

- Assess inter-rater agreement between the human observers using a statistic like Cohen's kappa.

- Compare automated and manual scores using correlation analysis and tests of agreement (e.g., Bland-Altman plots). High correlation does not guarantee agreement; the absolute values and the ability to detect significant differences between contexts should be consistent between methods [23].
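The Bland-Altman statistics can be computed with the standard library alone. This minimal sketch assumes paired percent-freezing scores per animal; the bias is the mean automated-minus-manual difference and the 95% limits of agreement are bias ± 1.96 standard deviations of the differences. The function name is hypothetical.

```python
# Bland-Altman bias and 95% limits of agreement (minimal sketch).
from statistics import mean, stdev


def bland_altman(manual: list[float], automated: list[float]) -> tuple[float, float, float]:
    """Return (bias, lower limit, upper limit) for automated minus manual scores."""
    if len(manual) != len(automated) or len(manual) < 2:
        raise ValueError("need paired scores for at least 2 animals")
    diffs = [a - m for m, a in zip(manual, automated)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical paired scores (not real data).
print(bland_altman([20.0, 45.0, 70.0], [30.0, 55.0, 80.0]))
```

The example also illustrates the point made in this step: automated scores that are uniformly 10 points higher than manual scores correlate perfectly with them, yet the function reports a bias of 10 percentage points, with both limits of agreement equal to 10.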

Protocol: Self-Calibrating Software with Phobos

For laboratories without access to commercial systems, the free Phobos software provides a rigorous alternative that integrates calibration into its workflow [22].

- Video Acquisition: Record fear conditioning sessions meeting minimum specifications: native resolution of 384x288 pixels and a frame rate of 5 frames per second. Convert videos to .avi format [22].

- Software Calibration:

  - Select a reference video and use the Phobos interface to manually score freezing for a 2-minute segment by pressing a button to mark the start and end of each freezing epoch.

  - The software automatically analyzes this video using multiple combinations of freezing thresholds and minimum durations, selecting the parameter set that yields the highest correlation and a linear fit closest to the manual scoring [22].

- Batch Analysis: Apply the calibrated parameter set to analyze all other videos recorded under the same conditions.

- Validation Check: The software prompts a warning if the manual scoring used for calibration is below 10% or above 90% of the total time, as these extremes can compromise the calibration quality [22].
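The calibration range check can be sketched as follows. This does not reproduce Phobos's own code; it assumes the manually marked epochs are available as (start, end) times in seconds within the 2-minute calibration segment, and the helpers `epochs_to_percent` and `calibration_warning` are hypothetical. Overlapping epochs are merged so no time is counted twice, and exactly 10% or 90% is treated as acceptable (an assumption about boundary handling).

```python
def epochs_to_percent(epochs, segment_seconds=120.0):
    """Percent of the calibration segment covered by manually marked (start, end) freezing epochs."""
    clipped = []
    for start, end in epochs:
        if end < start:
            raise ValueError(f"epoch ends before it starts: {(start, end)}")
        s, e = max(0.0, start), min(segment_seconds, end)  # clip to the segment
        if e > s:
            clipped.append((s, e))
    clipped.sort()
    covered, cur_start, cur_end = 0.0, None, None
    for s, e in clipped:  # merge overlapping epochs so time is not double-counted
        if cur_end is None or s > cur_end:
            if cur_end is not None:
                covered += cur_end - cur_start
            cur_start, cur_end = s, e
        else:
            cur_end = max(cur_end, e)
    if cur_end is not None:
        covered += cur_end - cur_start
    return 100.0 * covered / segment_seconds


def calibration_warning(percent, low=10.0, high=90.0):
    """Return a warning string if manual freezing is below `low` or above `high` percent, else None."""
    if percent < low or percent > high:
        return (f"Manual freezing in the calibration video is {percent:.1f}%; values below "
                f"{low:.0f}% or above {high:.0f}% can compromise calibration quality.")
    return None


# Usage: three marked epochs, two of them overlapping, one running past the 2-minute mark.
pct = epochs_to_percent([(0, 30), (20, 50), (100, 130)])
print(pct, calibration_warning(pct))
```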

Quantitative Data from Validation Studies

Empirical studies highlight the importance and outcomes of proper software configuration. The following table summarizes key quantitative findings from the literature.

Table 2: Comparative Freezing Scores and Agreement from Validation Studies

| Study Context | Scoring Method | Freezing in Context A (%) | Freezing in Context B (%) | Statistical Agreement |
|---|---|---|---|---|
| Rat Fear Discrimination [23] | VideoFreeze (Threshold 50) | 74 | 48 | N/A |
| Rat Fear Discrimination [23] | Manual Scoring | 66 | 49 | N/A |
| Inter-Method Agreement in Context A [23] | Software vs. Manual | N/A | N/A | Cohen's kappa 0.05 (Poor) |
| Inter-Method Agreement in Context B [23] | Software vs. Manual | N/A | N/A | Cohen's kappa 0.71 (Substantial) |
| Phobos Software [22] | Automated vs. Manual | N/A | N/A | Intra- and interuser variability similar to manual scoring |

Workflow Diagrams

Experimental Workflow for Software Validation

The diagram below outlines the logical sequence for establishing and validating a fear conditioning software configuration.

Parameter Optimization Logic in Phobos Software

This diagram illustrates the self-calibrating logic implemented by the Phobos software to determine optimal freezing detection parameters.

In the study of learned fear and memory using rodent models, Pavlovian conditioned freezing has emerged as a predominant behavioral paradigm due to its robustness, efficiency, and well-characterized neurobiological underpinnings [10]. The automation of freezing behavior analysis through systems like VideoFreeze has become essential for large-scale genetic and pharmacological screens, eliminating the tedium and potential bias of manual scoring while enabling high-throughput data collection [10] [11]. However, the accuracy of these automated systems depends critically on the proper calibration of core parameters, particularly the motion threshold and minimum freeze duration [11].

Motion threshold calibration is a critical validation step that directly determines how faithfully automated scoring reproduces expert human observation. An inappropriately set threshold can systematically overestimate or underestimate freezing behavior, potentially leading to erroneous conclusions about memory function or fear expression [11]. This application note provides a framework for initial parameter selection, validation, and troubleshooting to ensure accurate, reproducible freezing data in VideoFreeze, aimed at researchers in preclinical drug development and basic memory research.

Core Principles of Automated Freezing Detection

Defining the Behavioral Units and Analysis Framework

Automated freezing analysis in VideoFreeze operates on a "linear analysis" principle where every data point is examined against two primary parameters [11]:

- Motion Threshold: A user-set limit, in arbitrary units, above which the subject is considered moving. This threshold is applied to a "Motion Index" that quantifies pixel-level changes between successive video frames.

- Minimum Freeze Duration: The duration that a subject's motion must remain below the motion threshold for a freezing episode to be registered and counted.

The system generates several key dependent measures from this analysis, with Percent Freeze (time immobile/total session time) being the most fundamental metric for assessing fear memory [11]. Proper calibration ensures that these automated measures align with the classical definition of freezing as "the suppression of all movement except that required for respiration" [10].
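The two-parameter logic above can be sketched in a few lines. VideoFreeze's internal implementation is not described here, so this is an illustration under stated assumptions: a per-frame Motion Index has already been computed, a frame at or below the threshold counts as candidate immobility (values above it count as movement, following the definition of the threshold), and a run of such frames is scored as freezing only if it lasts at least the minimum freeze duration. The function names are hypothetical.

```python
def seconds_to_frames(seconds, fps):
    """Convert a minimum freeze duration in seconds to a whole number of frames (at least 1)."""
    return max(1, round(seconds * fps))


def freeze_mask(motion_index, motion_threshold, min_freeze_frames):
    """Mark each frame True if it lies in a run of at-or-below-threshold frames lasting >= min_freeze_frames."""
    mask = [False] * len(motion_index)
    run_start = None
    # A trailing +inf sentinel counts as movement and closes any run still open at the end.
    for i, value in enumerate(list(motion_index) + [float("inf")]):
        if value <= motion_threshold:  # candidate immobility
            if run_start is None:
                run_start = i
        else:  # movement ends the current run; keep it only if it was long enough
            if run_start is not None and i - run_start >= min_freeze_frames:
                for j in range(run_start, i):
                    mask[j] = True
            run_start = None
    return mask


def percent_freeze(motion_index, motion_threshold, min_freeze_frames):
    """Percent Freeze = frames scored as freezing / total frames x 100."""
    mask = freeze_mask(motion_index, motion_threshold, min_freeze_frames)
    return 100.0 * sum(mask) / len(mask) if mask else 0.0


# Usage: toy Motion Index trace; a 3-frame minimum keeps both still runs, a 4-frame minimum keeps one.
trace = [5, 5, 5, 30, 5, 5, 5, 5, 30]
print(percent_freeze(trace, motion_threshold=10, min_freeze_frames=3))  # 7 of 9 frames
print(percent_freeze(trace, motion_threshold=10, min_freeze_frames=4))  # 4 of 9 frames
```

Raising the minimum duration discards the shorter still run, which is why longer durations lower Percent Freeze. An empty-chamber trace whose noise stays below threshold scores 100%, matching the first validation requirement below.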

Validation Requirements for Automated Systems

According to established validation principles, an automated freezing detection system must meet several critical requirements to be considered scientifically valid [10] [11]:

- The system must detect and reject video noise, scoring 100% freezing when no animal is present.

- Small movements (grooming, sniffing) must be differentiated from true freezing, with their signals well above video noise levels.

- Detection must occur with sufficient temporal resolution to capture natural freezing bouts.

- Computer-generated scores must correlate highly with those from trained human observers, with a correlation coefficient near 1, a y-intercept near 0, and a slope of approximately 1 in linear regression models.

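The motion threshold directly governs the first two requirements (rejecting video noise and distinguishing small movements from freezing), while the minimum freeze duration primarily affects the last two (temporal resolution and agreement with human scores).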

Quantitative Framework for Initial Parameter Selection

Empirical Data from Validation Studies

Foundational validation work by Anagnostaras et al. (2010) systematically evaluated parameter combinations to identify optimal settings for automated freezing detection [10]. This work provides quantitative guidance for initial parameter selection, summarized in the table below.

Table 1: Optimal Parameter Combinations from Validation Studies

| Parameter | Recommended Value | Experimental Impact | Validation Finding |
|---|---|---|---|
| Motion Threshold | 18-20 (arbitrary units) | Lower thresholds under-detect freezing; higher thresholds over-detect freezing [11] | Threshold of 18 yielded lowest non-negative intercept [11] |
| Minimum Freeze Duration | 30 frames (1 s at 30 fps) | Shorter durations overestimate freezing; longer durations underestimate freezing [11] | Larger frame numbers yielded slope closer to 1 and higher correlation [11] |
| Frame Rate | 5-30 frames/s | Affects temporal resolution of detection [4] | Higher frame rates provide finer temporal resolution of movement |

Interactive Parameter Optimization Workflow

The following diagram illustrates the systematic approach to initial parameter selection and validation, integrating both the empirical data from validation studies and interactive calibration tools:

Diagram 1: Parameter Optimization Workflow

This workflow emphasizes the iterative nature of parameter optimization, where systematic comparison between automated and manual scoring drives refinement of motion threshold and minimum freeze duration values.

Experimental Protocols for Parameter Validation

Protocol 1: Establishing the Gold Standard Manual Scoring

Purpose: To generate reliable manual freezing scores for correlation with automated system output.

Materials:

- Video recordings of fear conditioning sessions (5-30 fps)

- Stopwatch or event-logging software

- Multiple trained observers (blinded to experimental conditions)

Methodology:

- Training Phase: Observers must achieve >90% inter-rater reliability using standardized freezing definitions [10].

- Scoring Modality: Use instantaneous time sampling every 8-10 seconds, or continuous scoring with a stopwatch [10] [11].

- Behavioral Definition: Freezing is scored when no movement (except respiration) is observed [10].

- Data Structure: Record freezing in discrete time bins (20-60 seconds) that match the structure of the automated output [4].

Validation Metrics: Calculate inter-rater reliability (Cohen's Kappa >0.8) and correlation between observers (R² >0.9) before proceeding with automated validation.
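With instantaneous time sampling, each observer produces a sequence of binary freeze/no-freeze calls (an 8-minute session sampled every 8 seconds yields 60 samples per animal), and Cohen's kappa corrects their raw agreement for the agreement expected by chance. The sketch below is a minimal standard-library version; the function names are hypothetical, and it covers only the kappa criterion, not the R² criterion.

```python
import math


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two observers' binary calls (1 = freezing, 0 = not) on the same samples."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("raters must score the same, non-empty set of samples")
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    p_a, p_b = sum(rater_a) / n, sum(rater_b) / n      # each rater's freezing rate
    p_expected = p_a * p_b + (1 - p_a) * (1 - p_b)    # agreement expected by chance
    if p_expected == 1.0:
        return math.nan  # both raters used one category throughout; kappa is undefined
    return (p_observed - p_expected) / (1 - p_expected)


def percent_freezing(samples):
    """Percent of instantaneous samples scored as freezing."""
    return 100.0 * sum(samples) / len(samples)


# Usage: two observers agree on 8 of 10 samples, each scoring 50% freezing.
observer_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
observer_2 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 1]
print(cohens_kappa(observer_1, observer_2))  # about 0.6, below the 0.8 target
```

Here 80% raw agreement corresponds to a kappa of only about 0.6, because half of that agreement would be expected by chance when both observers score 50% freezing.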

Protocol 2: Systematic Parameter Validation

Purpose: To identify optimal motion threshold and minimum freeze duration for specific experimental conditions.

Materials:

- VideoFreeze system or equivalent automated scoring platform

- 5-10 representative video files spanning expected freezing range (0-100%)

- Custom scripts for batch processing (optional)

Methodology:

- Parameter Matrix Testing:

  - Motion threshold: Test values from 10 to 30 arbitrary units (au) in increments of 2

  - Minimum freeze duration: Test values from 0.5 to 3.0 seconds in increments of 0.25 seconds

- Batch Processing: Run automated scoring with all parameter combinations

- Correlation Analysis: For each combination, calculate:

  - Pearson correlation coefficient (R) between automated and manual scores

  - Linear regression parameters (slope and y-intercept)

  - Root mean square error (RMSE)

Decision Criteria: Select parameters that simultaneously achieve [10] [11]:

- Correlation coefficient (R) > 0.95

- Regression slope between 0.95-1.05

- Y-intercept between -5% and +5%
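The parameter matrix and decision criteria can be sketched as a grid search. This is an illustration under stated assumptions, not a VideoFreeze batch interface: each calibration video is represented by a per-frame Motion Index and a manual percent-freezing score, frames at or below threshold count as immobile, automated scores are regressed on manual scores, RMSE is taken over the automated-minus-manual differences, and the lowest RMSE is used as a tie-breaker among passing combinations (a choice made for this sketch). All function names are hypothetical.

```python
import math


def percent_freeze(motion_index, threshold, min_frames):
    """Percent of frames inside runs of motion index <= threshold lasting >= min_frames."""
    frozen, run = 0, 0
    for value in list(motion_index) + [math.inf]:  # +inf sentinel closes the last run
        if value <= threshold:
            run += 1
        else:
            if run >= min_frames:
                frozen += run
            run = 0
    return 100.0 * frozen / len(motion_index) if motion_index else 0.0


def fit_metrics(manual, automated):
    """R, slope, and intercept of automated-on-manual regression, plus RMSE of the differences."""
    n = len(manual)
    mx, my = sum(manual) / n, sum(automated) / n
    sxx = sum((x - mx) * (x - mx) for x in manual)
    syy = sum((y - my) * (y - my) for y in automated)
    sxy = sum((x - mx) * (y - my) for x, y in zip(manual, automated))
    slope = sxy / sxx if sxx else math.nan
    intercept = my - slope * mx if sxx else math.nan
    r = sxy / math.sqrt(sxx * syy) if sxx and syy else math.nan  # undefined if either side is constant
    rmse = math.sqrt(sum((y - x) ** 2 for x, y in zip(manual, automated)) / n)
    return {"r": r, "slope": slope, "intercept": intercept, "rmse": rmse}


def passes(m, r_min=0.95, slope_lo=0.95, slope_hi=1.05, icpt_lo=-5.0, icpt_hi=5.0):
    """Decision criteria from Protocol 2; NaN metrics always fail."""
    return (m["r"] > r_min and slope_lo <= m["slope"] <= slope_hi
            and icpt_lo <= m["intercept"] <= icpt_hi)


def grid_search(videos, fps):
    """videos: list of (motion_index_series, manual_percent_freeze) pairs."""
    manual = [score for _, score in videos]
    results = []
    for threshold in range(10, 31, 2):          # 10-30 au in steps of 2
        for k in range(11):                     # 0.5-3.0 s in steps of 0.25 s
            seconds = 0.5 + 0.25 * k
            min_frames = max(1, round(seconds * fps))
            automated = [percent_freeze(mi, threshold, min_frames) for mi, _ in videos]
            m = fit_metrics(manual, automated)
            m.update(threshold=threshold, min_freeze_s=seconds)
            results.append(m)
    return results


def select_best(results):
    """Among passing combinations, return the one with the lowest RMSE (None if none pass)."""
    return min((m for m in results if passes(m)), key=lambda m: m["rmse"], default=None)
```

In practice, the 5-10 calibration videos should span the expected 0-100% freezing range; if no combination passes, the troubleshooting table below suggests which direction to move each parameter.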

Table 2: Troubleshooting Common Parameter Selection Issues

| Scoring Problem | Linear Fit Pattern | Probable Cause | Parameter Adjustment |
|---|---|---|---|
| Over-estimated freezing (esp. at low movement) | Y-intercept > 0, possible low correlation | Motion threshold too HIGH and/or Minimum freeze duration too SHORT [11] | Decrease motion threshold by 2-5 units and/or increase minimum freeze duration by 0.25-0.5 s |
| Under-estimated freezing (esp. at low-mid movement) | Y-intercept < 0, possible low correlation | Motion threshold too LOW and/or Minimum freeze duration too LONG [11] | Increase motion threshold by 2-5 units and/or decrease minimum freeze duration by 0.25-0.5 s |
| Poor discrimination of brief movements | Low correlation across range, normal intercept | Minimum freeze duration potentially too short | Increase minimum freeze duration to 1.5-2.0 s |
| Missed freezing bouts | Slope < 1, possible high intercept | Motion threshold potentially too sensitive | Increase motion threshold by 3-7 units |

Table 3: Key Research Reagents and Solutions for Freezing Behavior Analysis

| Category | Item | Specification/Function | Application Notes |
|---|---|---|---|
| Hardware Systems | VideoFreeze (MED Associates) | Integrated NIR video system with controlled illumination [11] | Minimizes video noise; ensures consistent tracking across lighting conditions |
| Validation Tools | Phobos Software | Freely available, self-calibrating freezing analysis [4] | Useful for cross-validation; employs 2-min manual calibration for parameter optimization |
| Reference Standards | Manually Scored Video Libraries | Gold-standard datasets for validation | Should span 0-100% freezing range; include diverse movement types |
| Analysis Software | ezTrack | Open-source video analysis pipeline [24] | Provides alternative validation method; compatible with various video formats |

Advanced Calibration Strategies

Context-Specific Parameter Optimization

Different experimental contexts may require parameter adjustments to maintain scoring accuracy:

- Apparatus Variations: Chamber size, geometry, and contextual inserts affect pixel change dynamics [11].

- Animal Factors: Coat color, size, and strain-specific movement patterns can influence optimal thresholds [11].

- Recording Conditions: Camera angle, resolution, and lighting consistency impact motion index values [24].

Quality Control Metrics for Ongoing Validation

Implement routine quality control measures to ensure consistent scoring performance over time:

- Weekly Validation Checks: Process standard reference videos to detect drift in automated scores (e.g., from changes in camera position or lighting).

- Inter-system Consistency: When multiple systems are used, validate parameters across all units.

- Blinded Re-scoring: Periodically re-score subsets of videos manually to confirm automated accuracy.
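A weekly reference-video check can be reduced to comparing this week's automated scores against scores stored when the parameters were validated. The sketch below assumes scores are kept as percent freezing keyed by a video identifier; the 5-percentage-point tolerance and the `drift_report` name are hypothetical and should be set from each laboratory's own validation data.

```python
def drift_report(baseline, current, tolerance=5.0):
    """Compare current automated percent-freezing scores on reference videos to stored baselines.

    Returns {video_id: difference} for videos whose score moved more than `tolerance`
    percentage points, and {video_id: None} for reference videos missing from the current run.
    """
    flagged = {}
    for video_id, base_score in baseline.items():
        if video_id not in current:
            flagged[video_id] = None  # reference video was not re-processed
            continue
        diff = current[video_id] - base_score
        if abs(diff) > tolerance:
            flagged[video_id] = diff
    return flagged


# Usage: ref02 has drifted 7 points lower and ref03 was not re-processed this week.
baseline_scores = {"ref01": 40.0, "ref02": 75.0, "ref03": 10.0}
this_week = {"ref01": 41.5, "ref02": 68.0}
print(drift_report(baseline_scores, this_week))
```

An empty report means all reference videos stayed within tolerance; any flagged video is a cue to inspect the recording setup before re-scoring manually.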

Proper calibration of the motion threshold is a foundational step in ensuring the validity of fear conditioning data obtained through automated scoring systems. By adopting the systematic approach outlined in this application note (rigorous validation protocols, iterative parameter optimization, and structured troubleshooting), researchers can achieve close agreement with manual scoring, meaning not only a high correlation but also a slope near 1 and an intercept near 0, which is essential for meaningful behavioral phenotyping. This calibration framework provides a standardized methodology for establishing accurate, reproducible freezing measures for both basic memory research and preclinical drug development.