A Technical Guide to Simultaneous VR-fMRI Recording: Protocols, Applications, and Best Practices for Neuroscience Research


Abstract

Simultaneous Virtual Reality and functional Magnetic Resonance Imaging (VR-fMRI) is an emerging paradigm that combines the ecological validity of immersive environments with the powerful neuroimaging capabilities of fMRI. This article provides a comprehensive technical guide for researchers and drug development professionals, detailing the foundational principles, methodological protocols, and optimization strategies for successful simultaneous recording. It covers the integration of MR-compatible VR hardware, advanced artifact removal techniques for clean data acquisition, and the application of this technology in clinical and cognitive neuroscience, including the study of Alzheimer's disease and mild cognitive impairment. The article also addresses common troubleshooting challenges and outlines validation frameworks to ensure data quality and interpretability, offering a roadmap for leveraging this cutting-edge tool in biomedical research.

The Convergence of Immersion and Imaging: Core Principles of VR-fMRI

Technical Support Center: Troubleshooting Guides and FAQs

Frequently Asked Questions (FAQs)

Q1: What is the primary scientific value of combining VR with fMRI? Combining VR with fMRI provides a unique opportunity to enhance the ecological validity of brain research. While fMRI is a powerful tool for understanding neural underpinnings, traditional experiments can lack real-world context. VR creates immersive, navigable environments where memories can be formed and retrieved, allowing researchers to study brain activity in more naturalistic settings while maintaining experimental control. This is particularly valuable for researching context-dependent processes like memory and navigation [1].

Q2: My VR headset is not being detected by the system in the scanner control room. What should I check? This is a common setup issue. First, verify that the link box (an interface unit) is powered ON. Then, unplug all connections from the link box and securely reconnect them. Finally, reset the headset using the SteamVR software interface [2].

Q3: Participants report a blurry image in the VR headset. How can this be resolved? A blurry image is typically caused by a poor physical fit. Instruct the participant to move the headset up and down on their face until they find the position of clearest vision. Then, tighten the headset's dial and adjust the side straps to secure it in this position [2].

Q4: We are experiencing lagging images or tracking issues in our VR simulation. How can we diagnose this? Check the simulation's frame rate by pressing the 'F' key on the keyboard. For a smooth experience, the frame rate should be at least 90 fps. If the frame rate is low, restart the computer. If the issue persists, check the base station setup for obstructions or perform a room setup in SteamVR [2].
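If the simulation can log a timestamp for every rendered frame, a short script can check a whole run against the 90 fps target. This assumes such a log exists; the 'F' overlay described above only shows a live value. The function name and the 1% tolerance for late frames are illustrative choices, not part of SteamVR or any vendor tool.

```python
from statistics import mean

TARGET_FPS = 90.0  # minimum frame rate recommended in the text


def frame_rate_report(timestamps_s, target_fps=TARGET_FPS, max_late_fraction=0.01):
    """Summarize frame timing from per-frame timestamps in seconds.

    A frame counts as "late" when its interval exceeds the per-frame
    budget of 1 / target_fps seconds (about 11.1 ms at 90 fps).
    """
    if len(timestamps_s) < 2:
        raise ValueError("need at least two frame timestamps")
    intervals = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    budget_s = 1.0 / target_fps
    mean_fps = 1.0 / mean(intervals)
    late_fraction = sum(dt > budget_s for dt in intervals) / len(intervals)
    return {
        "mean_fps": mean_fps,
        "late_fraction": late_fraction,
        "ok": mean_fps >= target_fps and late_fraction <= max_late_fraction,
    }


if __name__ == "__main__":
    # Hypothetical log: 100 frames rendered at a steady 100 fps.
    log = [i / 100 for i in range(101)]
    print(frame_rate_report(log))
```

Reporting the fraction of late frames, not just the mean, matters because a run can average above 90 fps while still stuttering.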

Q5: Can I use a standard VR headset and data glove inside an fMRI scanner? No. Standard commercial equipment is neither safe nor functional in the strong magnetic field of an MRI scanner. You must use MR Conditional equipment (often loosely called "MRI-compatible") that has been tested not to become a projectile or degrade image quality under specified conditions. Examples include the NordicNeuroLab VisualSystem HD for visual presentation and the 5DT Data Glove 16 MRI, which is metal-free and uses fiberoptic sensors [3] [4].

Troubleshooting Guide

The table below summarizes common technical problems and their solutions.

Table 1: VR-fMRI Technical Troubleshooting Guide

| Problem Area | Specific Issue | Troubleshooting Steps |
|---|---|---|
| VR Headset | Image not centered [2] | In the module, have the participant look straight ahead and press the 'C' key on the keyboard to re-center the view. |
| VR Headset | Menu appears unexpectedly [2] | A button on the side of the headset was likely pressed. Have the participant look away from the menu, then press the button again to close it. |
| Base Stations | Base station not detected [2] | 1. Check the power connection (the green light should be on). 2. Ensure it has a clear line of sight to the tracking area. 3. Run the automatic channel configuration in SteamVR. |
| Controllers & Trackers | Hand controller/tracker not detected [2] | 1. Ensure the device is turned on and fully charged. 2. Re-pair the device through the SteamVR interface. |
| Force Plates | Inaccurate weight display [2] | Tare the force plates: ensure no one is standing on them, then open a relevant VR module and press the 'tare' button. |
| Software | SteamVR errors [2] | 1. Check all cable connections to the link box and restart SteamVR. 2. Restart the VR headset via the link box button. 3. Restart the PC. 4. Check for and install Windows updates. |

Experimental Protocols & Methodologies

Core Protocol: fMRI-compatible VR System for Motor Imitation

This protocol, adapted from a foundational study, details how to set up a system for studying action observation and imitation using real-time virtual avatar feedback inside the scanner [3].

1. System Setup and Hardware

- VR Simulation Software: Use a development platform like Virtools with a VR plugin (e.g., VRPack) that communicates via the open-source VRPN (Virtual Reality Peripheral Network).

- Motion Capture: Use an MRI-compatible data glove, such as the 5DT Data Glove 16 MRI, to measure hand and finger joint angles. The glove uses fiberoptic sensors and long cables to relay data from the scanner room to the control room.

- Visual Presentation: Subjects view the virtual environment via MR-compatible video goggles.

2. Experimental Task Design

- Paradigm: A blocked design is used, alternating between conditions.

- Conditions:

- Observation with Intent to Imitate (OTI): The subject observes a virtual hand avatar (1st-person perspective) performing a finger sequence, animated by pre-recorded data.

- Imitation: The subject imitates the previously observed sequence while viewing a virtual hand avatar animated in real-time by their own movements from the data glove.

- Rest/Control: Subjects view static virtual hands or moving non-anthropomorphic objects.

- Synchronization: The experiment script in the control room receives TTL pulse triggers from the fMRI scanner via a serial port to synchronize task timing with image acquisition.
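The condition cycling and trigger synchronization described above can be sketched as follows. The 12 s block length, 2 s TR, and OTI, Imitation, Rest order are hypothetical values for illustration. Reading TTL pulses from the serial port is not shown. The code only assumes a logged list of trigger arrival times, one per acquired volume.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    condition: str
    first_volume: int  # index of the scanner trigger (volume) that starts the block
    onset_s: float     # planned onset relative to the first trigger


def build_block_schedule(conditions, block_duration_s, n_cycles, tr_s):
    """Repeat a fixed cycle of conditions, locking every onset to a TR boundary."""
    vols_exact = block_duration_s / tr_s
    vols_per_block = round(vols_exact)
    if vols_per_block < 1 or abs(vols_exact - vols_per_block) > 1e-9:
        raise ValueError("block duration must be a whole number of TRs")
    schedule, volume = [], 0
    for _ in range(n_cycles):
        for condition in conditions:
            schedule.append(Block(condition, volume, volume * tr_s))
            volume += vols_per_block
    return schedule


def measured_onsets(schedule, trigger_times_s):
    """Actual block onsets (s, relative to the first trigger), taken from the
    logged arrival times of the scanner's triggers (one per volume)."""
    t0 = trigger_times_s[0]
    return [trigger_times_s[block.first_volume] - t0 for block in schedule]


if __name__ == "__main__":
    # Hypothetical parameters: 12 s blocks, TR = 2 s, two cycles.
    for block in build_block_schedule(["OTI", "Imitation", "Rest"], 12.0, 2, 2.0):
        print(block)
```

Keeping block lengths at whole multiples of the TR means each block starts on a trigger. The measured onsets, rather than the planned ones, can then be used in the analysis.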

3. Data Acquisition and Analysis

- fMRI Parameters: Data is acquired using a 3T MRI scanner. A standard T1-weighted anatomical scan (e.g., MPRAGE) is collected alongside T2*-weighted functional scans (BOLD contrast).

- Analysis Focus: Analyze brain activity within a distributed frontoparietal network (the "action observation-execution network") and regions associated with the sense of agency, such as the angular gyrus and insular cortex [3].

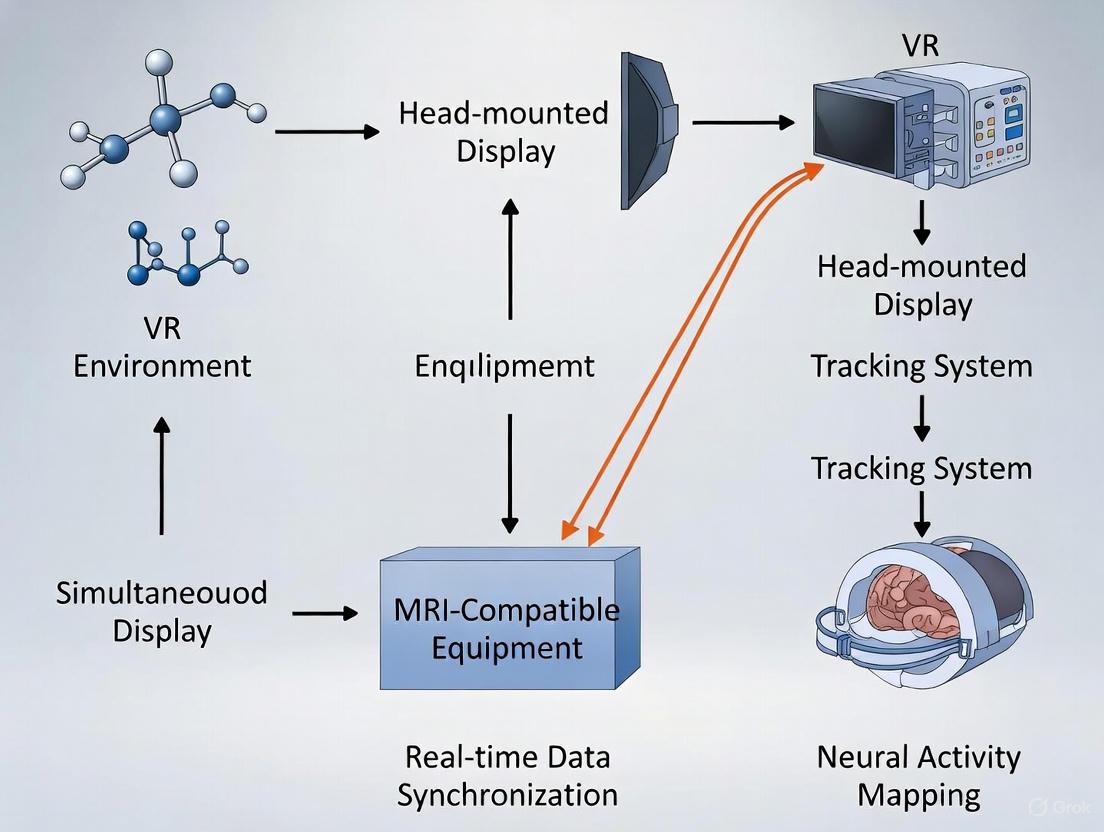

Workflow Diagram: VR-fMRI Integration for Motor Imitation Study

The diagram below illustrates the flow of information and tasks in a typical VR-fMRI experiment involving real-time hand tracking.

The Scientist's Toolkit: Essential Research Reagents & Materials

For researchers embarking on a VR-fMRI project, having the right "research reagents" (the core hardware and software components) is essential for success. The following table lists key items and their functions.

Table 2: Essential Materials for VR-fMRI Research

| Item Name | Type | Critical Function & Notes |
|---|---|---|
| MR-Compatible VR Goggles (e.g., NordicNeuroLab VisualSystem HD) [4] | Hardware | Provides stereoscopic visual stimuli. Must be MR-conditional to function safely at high field strengths (e.g., 3T) without degrading image quality. |
| MR-Compatible Data Glove (e.g., 5DT Data Glove 16 MRI) [3] | Hardware | Tracks complex hand and finger kinematics. Uses safe fiberoptic sensors and long cables to connect to the control room. |
| Motion Tracking System (e.g., Flock of Birds by Ascension Tech) [3] | Hardware | Tracks six-degree-of-freedom (6-DoF) limb or body position. Cameras and sensors must be placed outside the scanner room; system must be MR-safe. |
| VR Development Software (e.g., C++/OpenGL, Virtools) [3] | Software | Platform for creating and rendering custom virtual environments and controlling experimental logic. |
| Virtual Reality Peripheral Network (VRPN) [3] | Software | An open-source library that provides a unified interface for various VR hardware devices, simplifying integration. |
| fMRI Analysis Software Suite (e.g., FSL, SPM, AFNI, FreeSurfer) [5] [6] | Software | Used for preprocessing and statistical analysis of the acquired fMRI data. Proficiency in one or more of these is required. |
| High-Performance Computing (HPC) Resources [6] | Infrastructure | Essential for data storage and processing. Large datasets (e.g., from 100+ subjects) require cluster or cloud computing (e.g., AWS, Google Cloud). |

Troubleshooting Guides

FAQ 1: What are the primary types of interference in simultaneous VR-fMRI, and how can they be mitigated?

Issue: Simultaneous VR-fMRI experiments, particularly those that add EEG, are susceptible to electromagnetic interference that degrades both EEG and fMRI data quality. This multi-modal interference presents a complex technical challenge.

Solutions:

- For EEG Data Corruption: Implement a multi-pronged approach involving both hardware and software solutions.

- Hardware Mitigation: Use compact EEG setups with short, shielded transmission leads to reduce the induction of artifacts. Incorporate dedicated reference sensors to actively monitor artifacts for subsequent correction [7].

- Software/Post-Processing Mitigation: Apply model-based correction for gradient artifacts and data-based approaches (e.g., ICA) for pulse artifacts [7].

- For fMRI Data Corruption: The presence of EEG equipment can disrupt the MRI's radiofrequency (RF) pulses.

- Hardware Mitigation: Use EEG caps with adapted, slim cables compatible with dense head RF arrays. Employ resistive materials for EEG leads or add resistors to segment their length, thereby mitigating RF interactions [7].

- Software/Post-Processing Mitigation: Apply advanced fMRI denoising algorithms such as DeepCor, a deep generative model reported to outperform other methods (e.g., outperforming CompCor by 215% in enhancing BOLD signal responses to stimuli) [8].

- Quality Assessment: Always conduct a control experiment to quantify the fMRI data degradation. One study reported EEG-induced fMRI temporal signal-to-noise ratio (tSNR) losses of 6–11% [7].
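The control comparison can be sketched as follows. This assumes each session has been motion-corrected and reduced to a list of voxel time series from the same brain mask. Loading, masking, and detrending are omitted. tSNR is computed as temporal mean divided by temporal standard deviation, summarized by the median across voxels. Both are common conventions, not the specific method of the cited study.

```python
from statistics import mean, median, pstdev


def voxel_tsnr(timeseries):
    """Temporal SNR of one voxel: temporal mean divided by temporal SD."""
    sd = pstdev(timeseries)
    if sd == 0:
        raise ValueError("voxel has zero temporal variance")
    return mean(timeseries) / sd


def tsnr_loss_percent(reference_voxels, eeg_voxels):
    """Percent drop in median voxel tSNR from the reference session
    (no EEG cap) to the session with the EEG cap in place."""
    ref = median(voxel_tsnr(v) for v in reference_voxels)
    with_eeg = median(voxel_tsnr(v) for v in eeg_voxels)
    return 100.0 * (1.0 - with_eeg / ref)


if __name__ == "__main__":
    # Toy data: the EEG session has 10% larger fluctuations around the same mean.
    noise = [1.0, -1.0, 1.0, -1.0]
    reference = [[100.0 + n for n in noise]] * 3
    with_cap = [[100.0 + 1.1 * n for n in noise]] * 3
    print(f"tSNR loss: {tsnr_loss_percent(reference, with_cap):.1f}%")
```

A result in the 6–11% range would be in line with the loss reported above. Much larger values suggest the cap or cable routing is worth re-examining.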

Table 1: Summary of Interference Types and Mitigation Strategies

| Interference Type | Primary Effect | Recommended Mitigation Strategy | Reported Efficacy |
|---|---|---|---|
| Gradient Artifact (EEG) | EEG signal swamped by time-varying MRI gradients [7] | Compact EEG setup with short leads; Model-based post-processing [7] | Allows detection of hallmarks such as resting-state alpha [7] |
| Pulse Artifact (EEG) | EEG signal corrupted by the ballistocardiogram (cardiac-related) artifact [7] | Reference sensors; Data-based approaches (e.g., ICA) [7] | Corrected data comparable to recordings made outside the scanner [7] |
| RF Disruption (fMRI) | Perturbation of the B1 field by EEG leads, reducing SNR [7] | Adapted EEG leads (resistive materials); Compatibility with dense RF coils [7] | Limits tSNR loss to 6–11% [7] |
| fMRI Noise | General noise affecting the BOLD signal [8] | DeepCor denoising algorithm [8] | Outperforms CompCor by 215% in enhancing stimulus-evoked BOLD responses [8] |

FAQ 2: What safety protocols are essential for VR-fMRI research?

Issue: Immersive VR experiments in an MRI environment introduce novel physical and psychological risks that traditional Institutional Review Board (IRB) protocols may not adequately address [9].

Solutions:

- Physical Safety: Ensure all VR equipment is MR Safe or MR Conditional for your scanner. This includes using non-magnetic materials and verifying that devices will not heat up or become projectiles inside the magnetic field. A comprehensive safety evaluation is mandatory before any human subject testing [7].

- Psychological Safety: VR experiences are embodied and can feel like real memories, posing a risk of psychological distress (e.g., panic attacks in high-stress simulations) [9].

- Informed Consent: Implement the "3 C's of Ethical Consent in XR": Context (ensure participants understand the immersive experience), Control (maintain participant agency, including a clear and easy way to exit the simulation), and Choice (allow fully informed decisions about data sharing and future use) [9].

- Safeguards: Have a clear and immediate exit procedure from the VR environment and a debriefing protocol.

- Data Privacy: Motion and biometric data collected by commercial VR and biofeedback devices can be used to re-identify individuals, even from anonymized datasets [9].

- Data Handling: Establish secure data storage requirements, set limits on data re-use, and inform participants of these privacy risks during the consent process [9].

Table 2: Safety and Ethics Protocol Checklist

| Risk Category | Potential Harm | Essential Safeguards | References |
|---|---|---|---|
| Physical Safety | Heating of equipment; Projectiles; RF interference [7] | Use MR-compatible equipment only; Pre-scan safety screening; Comprehensive safety evaluation [7] | [9] [7] |
| Psychological Safety | Panic, anxiety, or distress from immersive VR content [9] | "3 C's" of Ethical Consent; Clear exit strategy; Debriefing protocol [9] | [9] |
| Data Privacy | Re-identification from motion or biometric data [9] | Secure data storage; Limits on data re-use; Informed consent regarding privacy risks [9] | [9] |

FAQ 3: How can I correct for data corruption in my fMRI signals?

Issue: fMRI data is inherently noisy, which can obscure the neural signal of interest, especially the subtle BOLD responses in VR studies.

Solutions:

- Utilize Advanced Denoising Algorithms: Deep-learning approaches have been reported to outperform traditional methods such as CompCor.

- DeepCor: This is a deep generative model that disentangles and removes noise from fMRI data. It is applicable to single-participant data and has been shown to enhance the BOLD signal response to specific stimuli (e.g., face stimuli) by 215% compared to CompCor [8].

- Experimental Design: When analyzing task-based fMRI data, ensure you have a proper baseline or control condition. For example, in a multisensory learning study, compare brain activation in the trained audio-visual condition to unimodal visual stimulation to isolate multisensory integration effects [10].

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Tools for VR-fMRI Research

| Item Name | Function / Application | Technical Specifications / Examples |
|---|---|---|
| High-Density RF Head Coil | Critical for achieving high SNR and sub-millimeter resolution in fMRI at high fields (e.g., 7T) [7]. | Dense "mesh" of small receive elements; Must be compatible with EEG cap setup [7]. |
| Compact, MR-Compatible EEG System | For simultaneous EEG-fMRI acquisition to capture neural activity with high temporal precision [7]. | Short, shielded transmission leads; Adapted for use inside RF coil; Includes reference sensors for artifact correction [7]. |
| DeepCor Denoising Software | Removes noise from fMRI data to enhance BOLD signal quality [8]. | Deep generative model; Outperforms CompCor; Applicable to single-subject data [8]. |
| VR HMD & Tracking System | Presents immersive, controlled visual and auditory stimuli for ecologically valid cognitive and rehabilitation tasks [10] [11] [12]. | HTC Vive; Custom software for specific paradigms (e.g., neuroproprioceptive facilitation) [11] [12]. |
| Safety & Ethics Framework | Safeguards participant well-being and data privacy, addressing novel risks of immersive tech [9]. | "3 C's of Ethical Consent" (Context, Control, Choice); IRB safety guide for immersive technology [9]. |

Definitions and Standards

What does "MR-Conditional" mean?

An item designated as MR Conditional is safe for use in the MRI environment only under specific, tested conditions. This is not a blanket approval; it is a precise safety envelope defined by the manufacturer through standardized testing. Staff must verify and apply these conditions every time the item enters the MRI suite [13].

How is MR-Conditional different from MR Safe and MR Unsafe?

ASTM International (formerly the American Society for Testing and Materials) standard F2503 defines three safety classifications for devices in the MRI environment [14]:

| Classification | Meaning | Visual Label | Examples |
|---|---|---|---|
| MR Safe | Poses no known hazards in any MRI environment. | Green square [13] | Non-metallic patient pads, plastic tools, certain immobilization devices [13]. |
| MR Conditional | Safe only within specific, tested conditions (e.g., field strength, SAR limits). | Yellow triangle [13] | Specialist VR HMDs, patient monitors, many implants [4] [13]. |
| MR Unsafe | Known to pose hazards in all MRI environments; must never enter the magnet room. | Red circle with a diagonal bar [13] | Standard oxygen cylinders, ferromagnetic tool carts, conventional IV poles [13]. |

MR-Conditional Hardware for VR-fMRI

What are the key components of a VR-fMRI system?

Simultaneous VR and fMRI recording requires a suite of MR-Conditional equipment to present stimuli, record responses, and monitor participants without compromising safety or data integrity.

Table: Essential MR-Conditional Hardware for VR-fMRI Research

| Hardware Category | Specific Device Examples | Key Function | Critical MR-Conditional Considerations |
|---|---|---|---|
| Head-Mounted Display (HMD) | NordicNeuroLab VisualSystem HD [4] | Presents immersive 3D visual stimuli. | Must use shielded electronics and MR-safe materials to avoid image artifacts and ensure safety at target field strength (e.g., up to 3T) [4]. |
| Input & Motion Tracking | 5DT Data Glove 16 MRI [3] | Measures complex hand and finger kinematics in real-time. | Must be fiber-optic and metal-free to operate safely in the magnet [3]. |
| Physiological Monitoring | BIOPAC MP200 System with MRI-safe amplifiers (e.g., for ECG, EDA, Respiration) [15] | Records physiological signals (ECG, EMG, EDA, respiration) for psychophysiological studies. | Requires specialized RF-filtered cable sets and signal conditioning to remove MR gradient interference from the data [15]. |
| Audio Presentation | MR-Conditional headphones or earphones | Delivers auditory stimuli and instructions. | Must be non-magnetic and designed to function without introducing artifacts or safety risks from the rapidly switching gradients. |

Troubleshooting Common Experimental Issues

Q: Our functional images show significant artifacts when the MR-Conditional VR HMD is in use. What could be the cause?

- Verify Compliance: First, confirm that the HMD is approved for your scanner's specific static field strength (e.g., 1.5T or 3T) and that all conditions of use from the manufacturer's Instructions for Use (IFU) are being met [13].

- Inspect Equipment: Check for any physical damage to the HMD or its cabling. Even minor compromise to the shielding can lead to interference.

- Cable Management: Ensure all cables are routed according to the manufacturer's guidelines. Improper routing can increase RF coupling, leading to artifacts. Use approved cable management kits [13].

Q: We are experiencing severe noise in our physiological data (ECG/EDA) during fMRI sequences. How can we clean the signal?

- Use Smart Amplifiers: Employ MRI-specific signal conditioning systems (e.g., BIOPAC's MRI Smart Amplifiers) that are designed to filter interference at the source [15].

- Apply Post-Processing Filters: In software, apply comb-band-stop (CBS) filters to remove harmonic artifacts caused by the EPI sequence, particularly with Multiband excitation [15].

- Optimize Sequence Timing: If possible, coordinate with your MR physicist to adjust sequence parameters. Coronal EPI MB scans, for instance, are noted to generate less harmonic interference in physiological data than Axial EPI MB scans [15].
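The comb-band-stop idea can be illustrated with a textbook IIR comb notch filter. It places notches at 0 Hz and at every multiple of a fundamental f0. This is a sketch, not BIOPAC's implementation. It assumes the sampling rate is an integer multiple of f0. It also assumes that f0 is the excitation rate of an evenly timed sequence, i.e. (slices / multiband factor) / TR. Confirm the actual artifact frequencies from a spectrum of your own recordings.

```python
import math


def comb_notch(signal, fs_hz, f0_hz, pole_radius=0.9):
    """IIR comb band-stop filter with notches at 0, f0, 2*f0, ... Hz.

    Difference equation: y[n] = a*y[n-D] + b*(x[n] - x[n-D]),
    with D = fs/f0 samples and b = (1 + a) / 2, which gives unit gain
    midway between adjacent notches.
    """
    ratio = fs_hz / f0_hz
    delay = round(ratio)
    if delay < 1 or abs(ratio - delay) > 1e-9:
        raise ValueError("fs / f0 must be a positive integer for this simple comb")
    if not 0.0 <= pole_radius < 1.0:
        raise ValueError("pole_radius must be in [0, 1)")
    a = pole_radius
    b = (1.0 + a) / 2.0
    out = [0.0] * len(signal)
    for n, x_n in enumerate(signal):
        x_d = signal[n - delay] if n >= delay else 0.0
        y_d = out[n - delay] if n >= delay else 0.0
        out[n] = a * y_d + b * (x_n - x_d)
    return out


if __name__ == "__main__":
    # Hypothetical: 60 slices, multiband factor 3, TR = 1 s -> f0 = 20 Hz;
    # physiological channel sampled at 1000 Hz with a 40 Hz harmonic artifact.
    fs, f0 = 1000, 20
    artifact = [math.sin(2 * math.pi * 40 * n / fs) for n in range(2000)]
    residual = comb_notch(artifact, fs, f0)[-200:]
    print(max(abs(v) for v in residual))
```

This simple form also notches 0 Hz, so it removes the slow baseline. That suits ECG better than EDA, whose slow tonic level is itself of interest. Pushing pole_radius toward 1 narrows each notch but lengthens the start-up transient.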

Q: Participants report discomfort or a "heating sensation" when using the data glove inside the bore. What should we do?

- Immediately Stop the Scan: Participant safety is paramount. Halt the scan and investigate the issue.

- Check Placement and Insulation: Verify that the glove and its leads are not making direct contact with the participant's skin without appropriate insulation. Ensure there are no cable loops that could act as RF antennas [13].

- Review SAR Limits: The Specific Absorption Rate (SAR) measures RF power deposition. Confirm that your scan protocol operates within the SAR limits specified in the MR-Conditional guidelines for all equipment in use [13].

Q: Our VR system's tracking of hand movements seems delayed or inaccurate during the fMRI scan. How can we improve it?

- Isolate the Cause: Test the tracking system outside the scanner room to rule out inherent software or hardware issues.

- Assess Electrical Interference: The scanner's gradients can induce noise in electronic tracking systems. Ensure all equipment is properly shielded and that you are using MRI-compatible tracking devices (like the fiber-optic 5DT data glove) that are less susceptible to electromagnetic interference [3].

- Calibrate in Situ: Perform calibration procedures for the tracking system with the participant positioned in the scanner bore, as the environment can affect performance.
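In-situ calibration of a bend-sensor glove is often done by recording a flat hand and a closed fist with the participant in the bore. Each sensor is then mapped linearly between those two readings. The sketch below assumes that linear model, a 0–90° range per joint, and raw data arriving as one list of sensor values per frame. These are all assumptions; where the glove vendor provides its own calibration routine, that should take precedence.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class SensorCalibration:
    raw_flat: float              # mean raw reading, hand held flat (taken as 0 degrees)
    raw_fist: float              # mean raw reading, closed fist (taken as max_angle_deg)
    max_angle_deg: float = 90.0  # assumed joint range

    def to_angle(self, raw):
        span = self.raw_fist - self.raw_flat
        if span == 0:
            raise ValueError("flat and fist readings are identical; recalibrate")
        frac = (raw - self.raw_flat) / span
        return min(max(frac, 0.0), 1.0) * self.max_angle_deg  # clamp to calibrated range


def calibrate(flat_frames, fist_frames, max_angle_deg=90.0):
    """One calibration per sensor, from frames recorded in the scanner bore."""
    n_sensors = len(flat_frames[0])
    return [
        SensorCalibration(
            mean(frame[s] for frame in flat_frames),
            mean(frame[s] for frame in fist_frames),
            max_angle_deg,
        )
        for s in range(n_sensors)
    ]


def frame_to_angles(frame, calibrations):
    """Convert one frame of raw sensor values to joint angles in degrees."""
    return [cal.to_angle(raw) for cal, raw in zip(calibrations, frame)]
```

Averaging several frames per pose reduces the effect of sensor noise on the calibration. Clamping keeps the avatar from bending past the calibrated range when a reading drifts.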

Experimental Protocol: VR-Based Motor Imitation Task

The following workflow is adapted from a foundational study that integrated a VR system with fMRI to investigate brain-behavior interactions during a hand imitation task [3].

Objective: To delineate brain-behavior interactions during observation and imitation of movements using virtual hand avatars in an fMRI environment [3].

Materials and Reagents:

Table: Research Reagent Solutions for VR-fMRI Motor Task

| Item | Function/Justification |
|---|---|
| MRI-Compatible VR HMD | Presents the virtual hand avatar in a first-person perspective to enhance embodiment. |
| 5DT Data Glove 16 MRI | Metal-free glove with fiberoptic sensors to measure 14 joint angles of the hand in real-time without MR interference [3]. |
| VR Simulation Software | Renders the virtual environment and streams real-time kinematic data to animate the virtual hand (e.g., using Virtools with VRPN plugin) [3]. |
| fMRI Scanner (3T) | Acquires Blood-Oxygen-Level-Dependent (BOLD) signals to map brain activity. |
| Fiberoptic Cable System | Safely transmits data from the glove in the scanner room to the control room computer [3]. |

Detailed Methodology:

- Subject Setup: The subject lies in the scanner and dons the MR-Conditional data glove on the right hand and the VR HMD.

- Task Design (Blocked): The experiment follows a blocked design:

- Observation with Intent to Imitate (OTI): The subject observes a pre-recorded finger movement sequence performed by a virtual hand avatar from a first-person perspective, with the instruction to imitate it next.

- Imitation with Feedback: The subject imitates the previously observed sequence. The virtual hand avatar is now animated in real-time by the subject's own movements, measured by the data glove.

- Rest Control: The subject views a static virtual hand. Control trials with moving non-anthropomorphic objects can also be included [3].

- Data Acquisition: Throughout the blocks, the following data are simultaneously recorded:

- fMRI Data: Continuous BOLD imaging using an EPI sequence.

- Kinematic Data: The data glove streams precise measurements of finger joint angles.

- Data Analysis: Brain activity (fMRI data) is analyzed and correlated with the behavioral performance (kinematic data) to identify networks involved in observation, imitation, and the sense of agency [3].
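One way to relate the kinematic stream to the BOLD data is to summarize movement per volume and convolve it with a hemodynamic response function. The result is a regressor that can enter a GLM or be correlated with a region's time series. The sketch below makes several assumptions: glove frames are already converted to joint angles, there is a fixed number of glove frames per TR, and the HRF is double-gamma with shapes 6 and 16 and an undershoot ratio of 1/6 (approximately the defaults of SPM's canonical HRF). It illustrates the idea and is not the analysis of the cited study.

```python
import math


def canonical_hrf(tr_s, length_s=32.0):
    """Double-gamma HRF sampled every tr_s seconds, normalized to unit sum."""
    def gamma_pdf(t, shape):
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

    times = [i * tr_s for i in range(int(length_s / tr_s) + 1)]
    h = [gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6.0 for t in times]
    total = sum(h)
    return [v / total for v in h]


def movement_per_volume(angle_frames, frames_per_volume):
    """Mean absolute frame-to-frame joint-angle change within each volume."""
    n_vols = len(angle_frames) // frames_per_volume
    summary = []
    for v in range(n_vols):
        chunk = angle_frames[v * frames_per_volume:(v + 1) * frames_per_volume]
        diffs = [abs(b - a) for prev, cur in zip(chunk, chunk[1:]) for a, b in zip(prev, cur)]
        summary.append(sum(diffs) / len(diffs) if diffs else 0.0)
    return summary


def convolve_causal(signal, kernel):
    """Convolve, keeping only the first len(signal) samples (one per volume)."""
    return [
        sum(signal[i - j] * kernel[j] for j in range(min(i + 1, len(kernel))))
        for i in range(len(signal))
    ]


def pearson(x, y):
    """Pearson correlation between a regressor and a region's time series."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

A typical chain would be `convolve_causal(movement_per_volume(frames, k), canonical_hrf(tr))`. In practice the same regressor would usually be entered as a parametric modulator in SPM or FSL rather than correlated by hand.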

Safety and Compliance Workflow

Before any equipment enters the MRI suite, a rigorous safety check must be performed.

Key Steps for MR-Conditional Equipment [14] [13]:

- Check the Label: Always look for the MR Conditional (yellow triangle) icon on the device itself.

- Consult Documentation: Retrieve the manufacturer's Instructions for Use (IFU) or MR safety sheet for the exact model and serial number.

- Verify Conditions: Confirm the device is approved for your scanner's field strength and that your scan protocol adheres to all specified limits (e.g., SAR, spatial gradient).

- Implement and Train: Integrate these conditions into your standard operating procedures and ensure all research staff are trained on the specific requirements for each piece of equipment.
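The "Verify Conditions" step can be written down as a small checklist aid. In the sketch below, the limits are transcribed by hand from each device's IFU and compared with the planned scan. The field names and example values are hypothetical, and the list of conditions should mirror whatever the specific IFU actually specifies.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class MRConditions:
    """Limits transcribed from a device's IFU or MR safety sheet."""
    device: str
    field_strengths_t: Tuple[float, ...]   # static fields the device is labeled for
    max_spatial_gradient_t_per_m: float    # maximum spatial field gradient
    max_whole_body_sar_w_per_kg: Optional[float] = None
    max_b1_rms_ut: Optional[float] = None


@dataclass(frozen=True)
class ScanSetup:
    field_strength_t: float
    spatial_gradient_t_per_m: float  # at the device's position
    whole_body_sar_w_per_kg: float
    b1_rms_ut: float


def check_mr_conditions(cond, scan):
    """Return human-readable violations; an empty list means all listed limits are met."""
    problems = []
    if not any(abs(scan.field_strength_t - b0) < 1e-6 for b0 in cond.field_strengths_t):
        problems.append(f"{cond.device}: not labeled for {scan.field_strength_t} T")
    if scan.spatial_gradient_t_per_m > cond.max_spatial_gradient_t_per_m:
        problems.append(f"{cond.device}: spatial field gradient exceeds limit")
    if (cond.max_whole_body_sar_w_per_kg is not None
            and scan.whole_body_sar_w_per_kg > cond.max_whole_body_sar_w_per_kg):
        problems.append(f"{cond.device}: whole-body SAR exceeds limit")
    if cond.max_b1_rms_ut is not None and scan.b1_rms_ut > cond.max_b1_rms_ut:
        problems.append(f"{cond.device}: B1+RMS exceeds limit")
    return problems
```

Running the check for every device in the room before each session is one way to make the "Implement and Train" step routine.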

The integration of Virtual Reality (VR) with functional Magnetic Resonance Imaging (fMRI) represents a paradigm shift in cognitive neuroscience, enabling the study of brain function under ecologically valid conditions. This combination allows researchers to probe the neurophysiological underpinnings of complex behaviors by linking immersive, naturalistic experiences with the high spatial resolution of the Blood-Oxygen-Level-Dependent (BOLD) signal. VR-fMRI provides a unique window into brain dynamics, facilitating the investigation of neural mechanisms underlying episodic memory, spatial navigation, and executive functions within controlled yet realistic environments [16]. The core strength of this multimodal approach lies in its capacity to elucidate how distributed brain networks, including medial temporal, prefrontal, and parietal regions, support cognitive processes that are intimately tied to real-world experiences [16]. However, this convergence also introduces significant technical challenges related to electromagnetic interference, data quality, and experimental design that must be systematically addressed to ensure valid and reliable findings.

Core Technical Challenges in Simultaneous VR-fMRI

Simultaneous VR-fMRI acquisition presents unique technical obstacles that can compromise data quality and participant safety if not properly managed. The table below summarizes the primary artifacts and their mitigation strategies.

Table 1: Key Technical Challenges and Solutions in VR-fMRI Research

| Challenge Type | Specific Artifacts/Issues | Proposed Solutions & Mitigation Strategies |
|---|---|---|
| MRI-Induced EEG Artifacts | Gradient Artifacts (GA), Ballistocardiogram (BCG) artifacts, Motion Artifacts (MA) [7] | Compact EEG setups with short transmission leads; Reference sensors for artifact monitoring; Advanced post-processing (e.g., template subtraction, ICA) [7] [17] [18] |
| EEG-Induced fMRI Artifacts | Disruption of the MR radiofrequency (B1) field; SNR loss in fMRI [7] | Use of resistive materials for EEG leads; Strategic routing of cables to be compatible with dense RF arrays; EEG cap design minimizing metallic components [7] |
| VR-Related Data Quality | Head motion induced by immersive VR; Sensorimotor conflict causing motion sickness [19] [16] | Robust fMRI denoising pipelines (e.g., ICA-AROMA, CC, Scrubbing); Training sessions for participants; Limiting VR session duration [16] [18] |
| Safety Concerns | Radiofrequency (RF)-induced heating at EEG electrodes [17] [7] | Using MR-compatible equipment with built-in safety resistors; Monitoring Specific Absorption Rate (SAR) and B1+RMS; Phantom testing to verify safe heating levels [7] [17] |

Frequently Asked Questions (FAQs) & Troubleshooting Guides

Data Acquisition & Quality

Q: What are the most effective strategies for minimizing fMRI quality loss when using EEG inside the scanner?

One study reported that EEG equipment caused a 6–11% loss in the temporal signal-to-noise ratio (tSNR) of fMRI data [7]. To mitigate this:

- Hardware Optimization: Use an EEG cap with slim, adapted cables designed for compatibility with high-density head RF arrays. This reduces physical displacement of the coil and minimizes RF field disruption [7].

- Lead Management: Ensure EEG leads are routed securely to avoid forming loops and are kept as short as possible. Using leads with higher resistivity can also dampen unwanted RF interactions [7].

- Quality Control: Always acquire a reference fMRI scan without the EEG cap to quantify the specific tSNR loss attributable to the EEG system in your setup [7].

Q: Our EEG data during simultaneous fMRI is swamped by artifacts. Which correction pipelines are most effective?

A multi-step approach is crucial for cleaning EEG data collected inside the MR scanner.

- Gradient Artifact (GA) Removal: Apply average artifact (template) subtraction. This requires recording the scanner's volume (TR) or slice markers directly into the EEG file so that each artifact epoch can be aligned precisely [17].

- Pulse Artifact (BCG) Correction: Utilize optimal basis sets (OBS) or apply Independent Component Analysis (ICA) to identify and remove cardioballistic artifacts. Semi-automatic correction with manual verification of detected heartbeats is recommended for reliability [17].

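- Advanced Denoising (fMRI side): For residual motion-related noise in the fMRI data, data-driven approaches such as ICA-AROMA (ICA-based Automatic Removal Of Motion Artifacts) have proven effective, particularly in non-lesional brain conditions [18]. Note that ICA-AROMA operates on fMRI data, not EEG.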
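The average template subtraction step can be sketched for a single channel as follows. The sketch assumes marker positions are already available as sample indices and that every artifact epoch has the same length. Production tools typically add refinements, such as upsampling, epoch alignment, and residual correction, which are omitted here. The optional `window` argument is a hypothetical parameter. It switches from one global template to a sliding average of the nearest epochs.

```python
def average_artifact_subtraction(eeg, markers, epoch_len, window=None):
    """Subtract an averaged gradient-artifact template from one EEG channel.

    eeg: list of samples for a single channel.
    markers: sample indices where each artifact epoch starts (from the
        scanner markers recorded in the EEG file).
    epoch_len: samples per artifact epoch.
    window: None -> one template from all epochs; an int -> a sliding
        template built from the `window` epochs nearest the current one.
    """
    starts = [m for m in markers if m >= 0 and m + epoch_len <= len(eeg)]
    cleaned = list(eeg)
    for i, start in enumerate(starts):
        if window is None:
            neighbors = starts
        else:
            hi = min(len(starts), max(0, i - window // 2) + window)
            neighbors = starts[max(0, hi - window):hi]
        # Averaging across epochs keeps the artifact (identical in every epoch)
        # while neural activity, which is not time-locked to the markers, averages out.
        template = [sum(eeg[s + k] for s in neighbors) / len(neighbors)
                    for k in range(epoch_len)]
        for k in range(epoch_len):
            cleaned[start + k] = eeg[start + k] - template[k]
    return cleaned
```

A sliding window tracks slow changes in artifact shape (for example from small head movements) at the cost of a noisier template. A global template is more stable when the artifact is highly repeatable.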

Experimental Design & Safety

Q: How can we design a VR-fMRI experiment that is both immersive and controls for excessive head motion?

- Paradigm Design: Implement a block design rather than a continuous, event-related design. This provides natural breaks, allowing participants to rest and reduces fatigue-induced motion [3] [16].

- Participant Preparation: Conduct a thorough training session outside the scanner. This familiarizes participants with the VR task and controls, reducing novelty-driven movements during the actual scan [20].

- Session Management: Adhere to the "20-10 rule" for VR immersion: 20 minutes of VR, followed by a 10-minute break. This helps prevent cybersickness and cognitive overload, which can exacerbate motion [19].
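Applied to a run list, the 20-10 rule amounts to a simple packing step. Consecutive runs are grouped until the next one would push VR exposure past 20 minutes, and a 10-minute break is inserted there. The run durations in the example are hypothetical. The sketch also assumes breaks can fall only between runs, never inside one.

```python
def plan_vr_session(run_minutes, max_block_min=20.0, break_min=10.0):
    """Insert breaks between runs so that no VR block exceeds max_block_min minutes."""
    plan, block_total = [], 0.0
    for i, minutes in enumerate(run_minutes, start=1):
        if minutes > max_block_min:
            raise ValueError(f"run {i} alone exceeds {max_block_min} min")
        if block_total + minutes > max_block_min:
            plan.append(("break", break_min))
            block_total = 0.0
        plan.append((f"run{i}", minutes))
        block_total += minutes
    return plan


if __name__ == "__main__":
    # Hypothetical session: four runs of 8, 8, 8, and 6 minutes.
    for step in plan_vr_session([8, 8, 8, 6]):
        print(step)
```

Planning the breaks before the session makes it easier to fit anatomical scans or rest-period instructions into them.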

Q: What are the critical safety protocols for simultaneous EEG-fMRI, especially at higher field strengths like 7T?

Safety is paramount, with the primary risk being RF-induced heating at the EEG electrodes.

- Equipment Certification: Only use MR-compatible EEG systems that are explicitly certified for use with your scanner's field strength (e.g., 3T or 7T). These include built-in safety resistors [7] [17].

- Phantom Testing: Before human studies, conduct phantom tests to measure temperature changes under your specific fMRI sequences. For reference, one study found that a multiband (MB) sequence with a B1+RMS of 0.8 µT produced less heating than a standard single-band (SB) sequence with a B1+RMS of 1.0 µT [17].

- Impedance Management: Maintain all electrode-skin impedances below 5 kΩ, as higher impedances can increase the risk of localized heating [17].
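These checks can be automated as a pre-scan gate. In the sketch below, the 5 kΩ threshold comes from the text. The B1+RMS limit is a site-specific value you would take from your own phantom tests and the equipment's conditions of use. The channel names and numbers in the example are hypothetical.

```python
MAX_IMPEDANCE_KOHM = 5.0  # keep every electrode-skin impedance below this value


def eeg_fmri_preflight(impedances_kohm, sequence_b1_rms_ut, b1_rms_limit_ut):
    """List blocking issues before a sequence is started.

    impedances_kohm: mapping of channel name -> measured impedance (kOhm).
    b1_rms_limit_ut: highest B1+RMS shown to be acceptable by your phantom
        tests and the equipment's conditions of use (site-specific).
    """
    issues = [
        f"{ch}: {z:.1f} kOhm (must be < {MAX_IMPEDANCE_KOHM:.0f} kOhm)"
        for ch, z in sorted(impedances_kohm.items())
        if z >= MAX_IMPEDANCE_KOHM
    ]
    if sequence_b1_rms_ut > b1_rms_limit_ut:
        issues.append(
            f"sequence B1+RMS {sequence_b1_rms_ut:.2f} uT exceeds tested limit {b1_rms_limit_ut:.2f} uT"
        )
    return issues


if __name__ == "__main__":
    print(eeg_fmri_preflight({"Fz": 3.2, "Cz": 7.5}, 0.8, 1.0))
```

Treating any non-empty result as a reason not to start the sequence keeps the impedance and B1+RMS checks from being skipped under time pressure.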

Detailed Experimental Protocols

Protocol for a VR-fMRI Study on Episodic Memory

This protocol is adapted from systematic reviews of VR-fMRI studies on episodic memory [16].

Objective: To investigate the neural correlates of episodic memory encoding and retrieval using a naturalistic VR paradigm. Participants: Healthy adults, right-handed, with no history of neurological disorders. (Sample size: ~15-20 based on previous studies [3]). VR Task Design:

- Encoding Phase: Participants navigate a virtual environment (e.g., a museum or a city) and are instructed to remember specific objects and their locations.

- Retrieval Phase: Participants are then asked to recall and identify the objects and their spatial contexts.

- Control Task: A non-spatial, low-level visual task within VR to establish a baseline. fMRI Acquisition Parameters (Example for a 3T scanner):

- Sequence: Multi-band EPI (to achieve a short TR, e.g., 440ms-2000ms)

- Voxel size: 2-3 mm isotropic

- Slices: Whole-brain coverage (e.g., 28-60 slices)

- B1+RMS: Check that the value falls within safe limits for any equipment inside the bore [17].

Data Analysis:

- fMRI Preprocessing: Standard pipeline including realignment, normalization, and smoothing. For tasks that may induce motion, a denoising pipeline such as CC + SpikeReg + 24HMP (CompCor, spike regression, and 24 head-motion parameters) is recommended [18].

- General Linear Model (GLM): Contrast activity during encoding and retrieval against the control task. Key regions of interest include the hippocampus (HPC), parahippocampal gyrus (PHG), prefrontal cortex (PFC), and angular gyrus [16].
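The motion-related parts of this denoising pipeline can be sketched in plain Python. This assumes one common formulation of the 24-parameter model (parameters, backward-difference derivatives, and their squares) and Power-style framewise displacement (FD) with a 50 mm head radius and a 0.5 mm spike threshold; these constants are conventional choices, not values from the cited study. Columns are assumed to be three translations (mm) followed by three rotations (radians); some tools, such as FSL's MCFLIRT, write rotations first, so reorder if needed. CompCor is omitted because it requires tissue masks and voxel data, and the function names are hypothetical.

```python
def expand_24hmp(params: list[list[float]]) -> list[list[float]]:
    """One common 24-parameter expansion: the 6 realignment parameters,
    their backward differences, and the squares of those 12 terms."""
    rows = []
    prev = params[0]
    for row in params:
        deriv = [cur - old for cur, old in zip(row, prev)]  # zeros at volume 0
        base = list(row) + deriv
        rows.append(base + [v * v for v in base])
        prev = row
    return rows


def framewise_displacement(params: list[list[float]],
                           radius_mm: float = 50.0) -> list[float]:
    """Power-style FD: summed absolute backward differences, with the three
    rotations (radians) converted to arc length on a sphere of radius_mm."""
    fd = [0.0]
    for old, cur in zip(params, params[1:]):
        d = [abs(c - o) for c, o in zip(cur, old)]
        fd.append(sum(d[:3]) + radius_mm * sum(d[3:]))
    return fd


def spike_regressors(fd: list[float], threshold_mm: float = 0.5) -> list[list[float]]:
    """One indicator column per volume whose FD exceeds threshold_mm;
    each row of the result corresponds to one volume."""
    flagged = [i for i, value in enumerate(fd) if value > threshold_mm]
    return [[1.0 if t == i else 0.0 for i in flagged] for t in range(len(fd))]
```

Toolboxes such as CONN generate equivalent regressors internally; the sketch only shows what those columns contain.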

Workflow for a Simultaneous EEG-fMRI-VR Experiment

The diagram below outlines the integrated workflow for setting up and running a simultaneous EEG-fMRI-VR experiment.

Diagram 1: Integrated VR-fMRI-EEG experimental workflow.

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Essential Materials for VR-fMRI Research

| Item Category | Specific Example(s) | Critical Function & Notes |
|---|---|---|
| VR Presentation System | MRI-compatible VR goggles (e.g., with RF-shielded displays) | Presents visual stimuli within the high-magnetic-field environment without causing interference. |
| Motion Tracking | MRI-compatible data gloves (e.g., 5DT Data Glove 16 MRI); eye-tracking systems | Captures kinematic data of hand movements or gaze in real time to animate avatars or assess behavior [3]. |
| EEG System | MR-compatible EEG amplifier (e.g., BrainAmp MR plus); cap with integrated safety resistors (e.g., BrainCap MR) | Records electrophysiological data with built-in resistors to mitigate heating risks [7] [17]. |
| fMRI Coil | Dense, multi-channel head RF array (e.g., 64-channel head coil) | Provides high signal-to-noise ratio (SNR) for fMRI, essential for sub-millimeter resolution [7]. |
| Data Analysis Software | BrainVision Analyzer (EEG); FSL, SPM, CONN (fMRI); EEGLAB; custom scripts in Python/MATLAB | Preprocessing and statistical analysis of multimodal data, including specialized toolboxes for artifact removal [17] [18]. |
| Safety & Sync Equipment | SyncBox (for scanner pulse synchronization); fluoroptic thermometer (for phantom heating tests) | Ensures temporal alignment of data streams and verifies safety standards during protocol development [17]. |

Implementing the Protocol: A Step-by-Step Guide to VR-fMRI Setup and Data Acquisition

Simultaneous Virtual Reality (VR) and functional Magnetic Resonance Imaging (fMRI) recording presents unique technical challenges, primarily due to the incompatibility of standard electronic equipment with the high-strength magnetic fields of MRI scanners. MR-safe VR equipment is specifically engineered to operate within this hostile electromagnetic environment without compromising patient safety or data integrity. These specialized systems use shielded electronics and MR-safe materials to prevent image artifacts, avoid projectile hazards, and ensure accurate stimulus delivery during neuroimaging experiments. The core requirement for any device used in this context is compliance with the ASTM F2503 standard, which categorizes equipment as MR Safe, MR Conditional, or MR Unsafe [21] [22]. Understanding these classifications is fundamental for establishing safe and effective VR-fMRI research protocols.

Technical Specifications of the VisualSystem HD

The VisualSystem HD (VSHD) from NordicNeuroLab is a specialized solution designed specifically for fMRI environments. The system is classified as MR Conditional, meaning it is safe for use only under specified conditions; in this case, at magnetic field strengths up to 3 Tesla [4]. It overcomes the fundamental obstacle of combining modern VR technology with MR imaging by employing carefully shielded electronics that do not significantly degrade MR image quality [4].

Table 1: Key Technical Specifications of the VisualSystem HD

| Component | Specification | Research Application Benefit |
|---|---|---|
| Display Type | Dual Full HD OLED (one for each eye) | Enables stereoscopic 3D imaging for immersive spatial tasks [23] |
| Native Resolution | 1920×1200 @ 60 Hz/71 Hz | Presents sharp, high-quality graphics and text for visual stimuli [23] |
| Field of View | 80% larger than previous models | Increases immersion, potentially enhancing ecological validity [23] |
| Interpupillary Distance (IPD) Adjustment | 44 to 75 mm | Ensures proper fit and visual clarity for a wide range of participants [23] |
| Diopter Correction | -8 to +5 | Allows subjects with vision impairments to participate without glasses [23] |
| Integrated Eye Tracking | Binocular, 60 fps, 640×480 resolution | Provides objective measures of gaze and task engagement during scanning [23] |
| Safety Certifications | IEC 60601-1, IEC 60601-1-2 | Certified for patient safety and electromagnetic compatibility in medical environments [23] |

The system is part of a broader fMRI ecosystem that includes a SyncBox for synchronization with the MRI scanner, response collection devices, and stimulus presentation software (nordicAktiva), forming a complete solution for functional exams [23].

Alternative MR-Safe VR Hardware

While the VisualSystem HD is an integrated solution, other companies provide VR hardware that can be adapted for research and clinical use in medical environments.

DPVR manufactures both PC-tethered and wireless VR headsets that can be implemented in hospital or medical settings. Their P1 Ultra model is notable for its customizable modules, which can include interfaces for monitoring physiological data such as heart rate or brain-computer interfaces, providing additional data streams for multimodal research [24]. These headsets have been utilized by partners for applications including music therapy (Ceragem) and vision treatment (Vivid Vision) [24].

Furthermore, platforms like Psious and XRHealth represent software solutions that operate on VR hardware to deliver therapeutic interventions for conditions like anxiety, phobias, and stress, demonstrating the broader applicability of VR in clinical research settings [24].

Essential Research Reagent Solutions

For a VR-fMRI research laboratory, the "reagents" are the core hardware and software components required to conduct simultaneous recording experiments.

Table 2: Essential Research Reagents for VR-fMRI Simultaneous Recording

| Item | Function | Example Products/Models |
|---|---|---|
| MR-Conditional VR Headset | Presents visual stimuli inside the scanner bore; must not create artifacts or pose safety risks. | NordicNeuroLab VisualSystem HD, DPVR headsets with medical-grade customization [24] [23] |
| Stimulus Presentation Software | Controls the timing, sequence, and logic of VR stimuli presented to the participant. | nordicAktiva, custom scripts (e.g., via Unity) [23] |
| Synchronization Interface | Aligns the presentation of VR stimuli with the acquisition of fMRI volumes for precise temporal alignment. | SyncBox [23] |
| Response Collection Device | Records participant behavioral responses (e.g., button presses) during the fMRI scan. | ResponseGrip [23] |
| Data Integration & Analysis Suite | Processes and analyzes the combined fMRI and behavioral data; may include specialized VR analytics. | nordicBrainEx [23] |
| MR-Safe Eye-Tracking System | Monitors participant gaze, pupil dilation, and engagement, providing crucial behavioral metrics. | Integrated system in VisualSystem HD [23] |

Experimental Setup and Workflow Protocol

Configuring a system for simultaneous VR-fMRI recording requires a meticulous workflow to ensure safety and data quality. The following diagram outlines the critical path from equipment preparation to data acquisition.

Troubleshooting Common Technical Issues

Even with proper setup, researchers may encounter technical challenges. This section addresses common problems and their solutions in a FAQ format.

Q1: We are experiencing significant noise or artifacts in our fMRI images since introducing the VR system. What should we check?

- A: This is often caused by electromagnetic interference. First, verify that all components of your VR system are officially rated and labeled as MR Conditional for your scanner's field strength (e.g., 3T) [23] [22]. Ensure all cables are properly shielded and routed according to the manufacturer's guidelines, away from the scanner bore and other sensitive equipment. Using fiber-optic extensions for video signals can sometimes mitigate this issue.

Q2: The timing between our VR stimulus presentation and the fMRI volume acquisition is inconsistent. How can we improve synchronization?

- A: Implement a dedicated hardware synchronization solution. Systems like the NordicNeuroLab SyncBox are designed for this purpose, as they receive a TTL (Transistor-Transistor Logic) pulse from the MRI scanner at the start of each volume acquisition and use this to trigger events in the stimulus software with high precision [23]. Always run a mock scan without a subject to validate the synchronization timing before collecting experimental data.

Q3: Our participant cannot see the VR stimuli clearly. What calibrations are necessary?

- A: Visual clarity is paramount. The VR headset must be properly fitted to the participant. Utilize the built-in diopter correction (typically ranging from -8 to +5) and interpupillary distance (IPD) adjustment (e.g., 44-75mm) on the headset to match the participant's vision and anatomy [23]. This is a critical step during participant setup, similar to adjusting an ophthalmoscope.
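Whether a participant can be accommodated at all can be checked before they are positioned. A minimal sketch, assuming the IPD (44-75 mm) and diopter (-8 to +5) ranges listed in the VisualSystem HD specifications; the names are hypothetical, and for participants whose eyes differ in refraction the check would be run once per eye.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdjustmentRange:
    ipd_mm: tuple[float, float] = (44.0, 75.0)   # IPD range from the spec table
    diopter: tuple[float, float] = (-8.0, 5.0)   # diopter correction range


def fit_issues(ipd_mm: float, refraction_d: float,
               rng: AdjustmentRange = AdjustmentRange()) -> list[str]:
    """List reasons a participant falls outside the headset's adjustable
    range; an empty list means both values can be accommodated."""
    issues = []
    lo, hi = rng.ipd_mm
    if not lo <= ipd_mm <= hi:
        issues.append(f"IPD {ipd_mm} mm is outside {lo}-{hi} mm")
    lo, hi = rng.diopter
    if not lo <= refraction_d <= hi:
        issues.append(f"refraction {refraction_d:+} D is outside {lo:+} to {hi:+} D")
    return issues


print(fit_issues(63.0, -2.5))   # within range
print(fit_issues(78.0, -9.0))   # both out of range
```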

Q4: How do we ensure our VR equipment remains safe and compliant for use in the MRI environment?

- A: Adhere to a strict labeling and audit protocol. All equipment must be clearly labeled according to ASTM F2503 standards with MR Conditional icons (yellow triangle) [21] [22]. Conduct regular audits to check that labels are intact and legible. Furthermore, maintain a log for cleaning and inspecting hygiene components, such as replaceable face sponges, to prevent contamination and equipment damage [24].

Application in a Research Context: A Sample Experimental Protocol

To ground these technical protocols in research practice, consider the following simplified methodology, inspired by recent studies that combine VR and fMRI.

Protocol: Investigating Spatial Processing with Stereoscopic VR [25]

- Objective: To identify the neural correlates of processing objects in peripersonal (reachable) space versus extrapersonal (non-reachable) space using stereoscopic VR during fMRI.

- Stimuli & Task: Participants perform a visual discrimination task on graspable objects presented at different apparent distances within a VR environment. The paradigm includes alternating blocks of monoscopic and stereoscopic presentation to isolate the effect of depth perception.

- Key Controls: The pixel size of the objects is controlled across distances to ensure that neural activation differences are due to spatial processing and not low-level visual features [25].

- fMRI Parameters: Standard whole-brain EPI acquisition. Preprocessing typically includes realignment, normalization, and smoothing. Contrasts are defined for [Stereoscopic Peripersonal > Monoscopic Peripersonal] and [Peripersonal Space > Extrapersonal Space].

- Expected Outcomes: The study hypothesizes that stimuli in peripersonal space will engage the dorsal visual stream, including areas such as the posterior intraparietal sulcus, which is associated with action-oriented processing and affordances. In contrast, extrapersonal space is expected to activate more ventral stream regions related to semantic and scene analysis [25].
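The two contrasts in this protocol can be written as weight vectors over the four cells of a presentation (mono/stereo) × distance (peripersonal/extrapersonal) design. The condition labels, their order, and the `contrast` helper are hypothetical; in SPM or FSL the vector must follow the column order of your own design matrix, padded with zeros for nuisance regressors.

```python
# Hypothetical condition order for a 2x2 design
# (presentation: mono/stereo x distance: peripersonal/extrapersonal).
CONDITIONS = ("mono_peri", "mono_extra", "stereo_peri", "stereo_extra")


def contrast(weights: dict[str, float],
             conditions: tuple[str, ...] = CONDITIONS) -> list[float]:
    """Build a contrast vector in the design's condition order. Difference
    contrasts should sum to zero, so anything else is rejected here."""
    unknown = set(weights) - set(conditions)
    if unknown:
        raise KeyError(f"unknown conditions: {sorted(unknown)}")
    vec = [float(weights.get(c, 0.0)) for c in conditions]
    if abs(sum(vec)) > 1e-12:
        raise ValueError("weights of a difference contrast must sum to zero")
    return vec


# [Stereoscopic Peripersonal > Monoscopic Peripersonal]
stereo_gt_mono_peri = contrast({"stereo_peri": 1, "mono_peri": -1})
# [Peripersonal Space > Extrapersonal Space], averaged over presentation mode
peri_gt_extra = contrast({"mono_peri": 0.5, "stereo_peri": 0.5,
                          "mono_extra": -0.5, "stereo_extra": -0.5})
print(stereo_gt_mono_peri, peri_gt_extra)
```

The zero-sum check deliberately rejects single-condition effects against an implicit baseline; drop it if such contrasts are needed.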

The logical structure of such an experiment, from hypothesis to analysis, can be visualized as follows:

Troubleshooting Guides and FAQs

Synchronization and Timing Issues

Q1: What are the primary causes of latency or jitter between the fMRI trigger and the VR stimulus presentation, and how can they be minimized?

Latency (constant delay) and jitter (variable delay) most often originate from software communication pathways, hardware processing time, or the VR system's graphics rendering pipeline [26].

- Solution 1: Optimize Software Communication: Utilize dedicated, high-speed data acquisition systems and application programming interfaces (APIs) designed for real-time experimentation. The Experiments in Virtual Environments (EVE) framework, built on Unity 3D, is an example of a system that provides standardized modules for data synchronization and storage, helping to mitigate these issues [27].

- Solution 2: Verify and Calibrate Timing: Regularly perform a timing calibration procedure. This involves sending a test trigger from the fMRI scanner to the VR presentation computer while using a photodiode or other sensor to measure the precise delay between the trigger signal and the actual stimulus onset on the VR display. This measured delay can often be accounted for in the experiment software.

- Solution 3: Hardware Selection: Ensure all components, especially the VR computer's graphics card, meet or exceed the recommended specifications for low-latency rendering. The use of fMRI-compatible equipment, such as fiber-optic data gloves, is essential to prevent interference and ensure signal integrity [3].
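The calibration in Solution 2 reduces to a small computation once trigger and photodiode timestamps are recorded on one clock. A sketch under that assumption, with a hypothetical function name and example values: the mean delay is the latency that can be subtracted from logged onsets, while the standard deviation is jitter, which a constant correction cannot remove.

```python
from statistics import mean, stdev


def latency_stats(trigger_s: list[float], photodiode_s: list[float]) -> dict[str, float]:
    """Pair each scanner trigger with the photodiode onset it caused and
    summarise the delay: mean = latency, standard deviation = jitter."""
    if len(trigger_s) != len(photodiode_s) or len(trigger_s) < 2:
        raise ValueError("need two or more matched trigger/photodiode pairs")
    delays_ms = [(p - t) * 1000.0 for t, p in zip(trigger_s, photodiode_s)]
    if min(delays_ms) < 0:
        raise ValueError("a photodiode onset precedes its trigger; check pairing")
    return {
        "latency_ms": mean(delays_ms),
        "jitter_ms": stdev(delays_ms),
        "max_ms": max(delays_ms),
    }


# Hypothetical timestamps (seconds) from a calibration run on one clock.
stats = latency_stats([0.0, 2.0, 4.0], [0.030, 2.034, 4.032])
print({k: round(v, 1) for k, v in stats.items()})
```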

Q2: The VR system fails to receive the TTL pulses from the fMRI scanner. What should I check?

This is typically a hardware connection or configuration problem.

Checklist:

- Physical Connection: Verify the cable from the scanner's TTL output port is securely connected to the correct input port (e.g., a parallel port or a dedicated digital I/O card) on the VR control computer.

- Voltage Level Compatibility: Confirm that the voltage level of the TTL pulse (e.g., 5V) is within the acceptable range for the input port on the VR computer. Using an oscilloscope to check for the presence and quality of the pulse is the most reliable method.

- Software Configuration: Ensure your experiment software (e.g., Unity with the EVE framework) is configured to listen to the correct hardware port for incoming triggers [27].

- Grounding Loops: Check for potential grounding issues that can corrupt the signal.
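Once pulses are arriving, a quick check of the logged timestamps can reveal dropped or duplicated triggers. This hypothetical helper assumes one pulse per volume (so the expected interval equals the TR) and a 50 ms tolerance; scanners configured to send a pulse per slice would need the slice interval instead.

```python
def check_triggers(trigger_s: list[float], expected_interval_s: float,
                   tol_s: float = 0.05) -> dict:
    """Compare received trigger timestamps against the expected pulse
    interval and report gaps (index, estimated missing pulses) and
    unexpectedly short intervals (index of the earlier pulse)."""
    report = {"n_received": len(trigger_s), "gaps": [], "extra_after": []}
    for i, (a, b) in enumerate(zip(trigger_s, trigger_s[1:])):
        interval = b - a
        if interval > expected_interval_s + tol_s:
            missing = round(interval / expected_interval_s) - 1
            report["gaps"].append((i, missing))
        elif interval < expected_interval_s - tol_s:
            report["extra_after"].append(i)  # e.g. contact bounce or slice pulses
    return report


print(check_triggers([0.0, 2.0, 4.0, 8.0, 10.0], expected_interval_s=2.0))
```

Comparing `n_received` with the number of volumes the scanner reports is a useful final cross-check.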

Hardware and Software Integration

Q3: Which VR hardware and software solutions have been successfully integrated with fMRI in published research?

Successful integration has been achieved with a variety of components, emphasizing MRI-compatibility. The table below summarizes key solutions documented in research.

Table 1: Research Reagent Solutions for VR-fMRI Integration

| Component Type | Specific Solution / Example | Function / Key Feature |
|---|---|---|
| Input Device | 5DT Data Glove 16 MRI [3] | MRI-compatible glove for measuring hand joint angles using fiber optics. |
| Input Device | Ascension "Flock of Birds" 6DOF sensors [3] | Tracks position and orientation (6 degrees of freedom). |
| Software Framework | Experiments in Virtual Environments (EVE) [27] | Unity-based framework for designing experiments, managing data synchronization, and storage. |
| Software Framework | Virtools with VRPack [3] | Development environment used to create virtual environments for fMRI integration. |
| Visual Display | MRI-compatible HMDs or projection systems | Presents the VR stimulus; must be non-magnetic and not interfere with the magnetic field. |

Q4: How do I manage the data streams from the fMRI scanner, VR system, and physiological sensors to ensure they are synchronized?

This requires a centralized synchronization strategy.

- Solution: Implement a master system that records all data streams with a common timing clock. One effective method is to use software like LabChart to record physiological data and receive the fMRI TTL pulses on a separate channel [27]. The VR software should timestamp all behavioral and interaction data (e.g., button presses, avatar position) using the same clock reference established by the scanner triggers. The EVE framework, for instance, provides infrastructure for synchronizing data from different sources and storing it in a unified database [27].
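With all streams on a common clock, VR events can be re-expressed relative to the first scanner trigger and saved in the tab-separated `events.tsv` layout used by BIDS (`onset` and `duration` in seconds, plus an optional `trial_type` column). This is a sketch assuming the first recorded trigger corresponds to the first stored volume (that is, dummy scans are either not triggered or already accounted for); the function name and example events are hypothetical.

```python
import csv
import io


def bids_events_tsv(events: list[tuple[float, float, str]],
                    first_trigger_s: float) -> str:
    """events: (onset on the shared clock in s, duration in s, trial_type).
    Returns BIDS-style events.tsv text with onsets relative to the first
    scanner trigger, sorted by onset."""
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter="\t", lineterminator="\n")
    writer.writerow(["onset", "duration", "trial_type"])
    for onset, duration, trial_type in sorted(events):
        writer.writerow([f"{onset - first_trigger_s:.3f}", f"{duration:.3f}", trial_type])
    return buf.getvalue()


# Hypothetical events logged by the VR software on the shared clock.
log = [(105.5, 2.0, "encoding"), (101.25, 1.5, "control")]
print(bids_events_tsv(log, first_trigger_s=100.0))
```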

Data Quality and Artifact Resolution

Q5: What are the common sources of artifact in fMRI data during VR experiments, and how can they be addressed?

Beyond the usual sources of artifact, VR experiments introduce specific challenges.

- Head Motion: The immersive nature of VR can lead to increased head movement.

  - Mitigation: Use additional padding and comfort items to stabilize the participant's head, and provide clear instructions to remain as still as possible.

- Hardware Interference: Non-MRI-compatible equipment can create electromagnetic noise or pose a safety risk.

  - Mitigation: Use only certified MRI-compatible VR equipment, such as fiber-optic gloves and non-ferrous displays [3]. All equipment must be tested in the scanner environment prior to the experiment.

- Physiological Noise: The cognitive and emotional load of VR can increase heart and respiration rates, which modulate the BOLD signal.

  - Mitigation: Record physiological data (e.g., ECG, EDA, respiration) concurrently so they can be used as regressors in the fMRI data analysis to remove these confounding effects [27].
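One widely used way to turn a concurrently recorded cardiac signal into regressors is RETROICOR, named here as an illustrative technique rather than one prescribed by the cited source. The sketch covers only the cardiac term and assumes R-peak times have already been detected and share the scanner's clock; respiratory regressors (which use a histogram-based phase) are omitted, and phase is sampled once per acquisition time rather than per slice. Function names are hypothetical.

```python
import bisect
import math


def cardiac_phase(t: float, r_peaks: list[float]) -> float:
    """Phase (radians) of time t within its R-R interval:
    2*pi*(t - t1)/(t2 - t1), where t1 <= t < t2 are the surrounding R-peaks."""
    i = bisect.bisect_right(r_peaks, t) - 1
    if i < 0 or i + 1 >= len(r_peaks):
        raise ValueError(f"t={t} is not bracketed by recorded R-peaks")
    t1, t2 = r_peaks[i], r_peaks[i + 1]
    return 2.0 * math.pi * (t - t1) / (t2 - t1)


def retroicor_cardiac(acq_times: list[float], r_peaks: list[float],
                      order: int = 2) -> list[list[float]]:
    """Fourier regressors cos(m*phi), sin(m*phi) for m = 1..order,
    one row per acquisition time (r_peaks must be sorted)."""
    rows = []
    for t in acq_times:
        phi = cardiac_phase(t, r_peaks)
        row = []
        for m in range(1, order + 1):
            row += [math.cos(m * phi), math.sin(m * phi)]
        rows.append(row)
    return rows
```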

The following diagram illustrates the flow of signals and data in a typical VR-fMRI setup, highlighting potential points of failure for synchronization.

Diagram 1: VR-fMRI System Data and Trigger Flow

FAQs: Core Principles of VR Cognitive Assessment

Q1: What are the key advantages of using VR over traditional paper-and-pencil tests for cognitive assessment?

VR cognitive assessment offers three primary advantages:

- Enhanced Ecological Validity: VR can create multimodal sensory stimuli in interactive environments that mimic real-world activities and daily surroundings, providing a more accurate assessment of real-life cognitive functioning than artificial lab settings [28] [29].

- Comprehensive Domain Assessment: Systems like CAVIRE can assess all six cognitive domains defined by DSM-5 (complex attention, executive function, language, learning and memory, perceptual-motor function, and social cognition), whereas traditional tests like MMSE are less effective for certain domains like executive function [28].

- Improved Engagement: Gamified VR tasks transform repetitive cognitive testing into immersive, interactive experiences that increase participant motivation and reduce boredom and fatigue, potentially improving data quality [12] [30].

Q2: How should VR tasks be designed to ensure they accurately target specific cognitive domains?

Effective VR task design requires:

- Incorporating Established Paradigms: Base VR tasks on validated neuropsychological principles and traditional tests (e.g., using Whack-the-Mole for response inhibition, Corsi block-tapping for visuospatial memory, or Stroop test variants for cognitive flexibility) [12] [31].

- Simulating Daily Activities: Design tasks that mimic activities of daily living (ADLs), such as virtual kitchen scenarios for assessing memory, attention, and planning skills, which enhances ecological validity [28] [29].

- Implementing Adaptive Difficulty: Use algorithms that automatically adjust task difficulty based on user performance to maintain appropriate challenge levels and prevent ceiling/floor effects [31].
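A transformed staircase is one common way to implement adaptive difficulty; it is used here as an illustrative choice, not as the algorithm of the cited systems. Requiring two consecutive correct responses to step up and one error to step down gives the classic 2-down/1-up rule, which converges near 70.7% correct. The class and its level scale are hypothetical; a level might map, for example, to Corsi span length.

```python
class Staircase:
    """n-down/1-up rule: raise difficulty after n_down consecutive correct
    responses and lower it after any error, within fixed bounds."""

    def __init__(self, level: int = 1, min_level: int = 1,
                 max_level: int = 10, n_down: int = 2):
        if not min_level <= level <= max_level:
            raise ValueError("starting level outside bounds")
        self.level, self.min_level, self.max_level = level, min_level, max_level
        self.n_down = n_down
        self._streak = 0  # consecutive correct responses at this level

    def update(self, correct: bool) -> int:
        """Record one trial and return the difficulty level for the next."""
        if correct:
            self._streak += 1
            if self._streak >= self.n_down:
                self.level = min(self.level + 1, self.max_level)
                self._streak = 0
        else:
            self.level = max(self.level - 1, self.min_level)
            self._streak = 0
        return self.level


stair = Staircase(level=3)
print([stair.update(c) for c in (True, True, False, True, True)])
```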

Q3: What specific considerations are needed when designing VR assessments for clinical populations with cognitive impairments?

Special considerations for clinical populations include:

- Safety and Tolerance: Implement safeguards for participants with potential vulnerability to VR-induced symptoms (e.g., nausea, headache, giddiness). Studies report successful use with older adults with MCI and various neuropsychiatric disorders when appropriate precautions are taken [28] [30] [32].

- Simplified Interaction Design: Account for potential technological inexperience by creating intuitive interfaces and providing comprehensive tutorial sessions before assessment [28] [31].

- Clinical Validation: Establish sensitivity to cognitive impairments specific to target disorders (e.g., mood disorders, psychosis spectrum disorders, MCI) through rigorous validation against standard neuropsychological tests and clinical diagnosis [29] [30].

FAQs: Technical Protocols for VR-fMRI Simultaneous Recording

Q4: What are the primary technical challenges of simultaneous VR-fMRI recording, and how can they be mitigated?

The main challenges involve cross-modal interference, which can be addressed through:

Table: VR-fMRI Interference Types and Mitigation Strategies

| Interference Type | Impact on Data | Mitigation Strategies |
|---|---|---|
| MRI on VR | Artifacts on motion tracking and visual presentation due to magnetic fields | Fiber-optic data transmission, magnetic-compatible displays, temporal synchronization |
| VR on fMRI | RF disruption from electronic components, reduced fMRI signal quality | Compact EEG/VR setups with short leads, specialized RF-shielded components, reference sensors |
| Subject Safety | Potential heating from induced currents | Current-limiting resistors, careful cable routing, thermal monitoring |
| Data Quality | Reduced temporal signal-to-noise ratio (tSNR) | Reference sensors for artifact correction, post-processing algorithms, optimized coil design |

Drawing on the EEG-fMRI literature, which faces similar technical challenges [7], the following strategies apply:

- Hardware Optimization: Use compact transmission systems with short, well-shielded cables to reduce artifacts at their origin. Use specialized lead materials and resistors that segment lead lengths, minimizing RF interactions [7].

- Reference Sensors: Incorporate dedicated sensors to monitor artifacts, enabling advanced post-processing correction of residual artifacts in both fMRI and physiological data [7].

- Physical Integration: Adapt equipment to fit within dense RF head coil arrays without compromising fMRI sensitivity or acceleration capabilities [7].

Q5: What experimental design considerations are crucial for successful VR-fMRI hyperscanning studies?

For VR-fMRI hyperscanning (simultaneous multi-person recording):

- Temporal Synchronization: Use a robust synchronization protocol such as the Network Time Protocol (NTP) to align data acquisition across scanners, keeping timing discrepancies well below the fMRI repetition time (TR), e.g., <500 ms for a TR of 2000 ms [33].

- Task Design for Social Cognition: Develop paradigms that examine cooperative and competitive interactions, such as sender-receiver games with alternating roles, to investigate neural correlates of social decision-making [33].

- Standardized Data Collection: Adhere to Brain Imaging Data Structure (BIDS) standards for organizing neuroimaging data, enabling reproducibility and data sharing across research groups [33].
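The clock offset that NTP estimates between two machines comes from the four timestamps of one request/response exchange. The sketch applies the standard NTP offset and round-trip-delay formulas and checks the offset against a budget of a quarter of the TR (matching the 500 ms for a 2000 ms TR example above); the function names are hypothetical, and in practice an NTP client daemon on each acquisition computer performs this exchange continuously. The offset estimate assumes the network path is roughly symmetric.

```python
def ntp_offset_delay(t0: float, t1: float, t2: float, t3: float) -> tuple[float, float]:
    """t0: client send, t1: server receive, t2: server send, t3: client
    receive (seconds; t0/t3 on the client clock, t1/t2 on the server clock).
    Returns (clock offset of server relative to client, round-trip delay)."""
    offset = ((t1 - t0) + (t2 - t3)) / 2.0
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


def within_budget(offset_s: float, tr_s: float, fraction: float = 0.25) -> bool:
    """True if the absolute offset is below the chosen fraction of the TR."""
    return abs(offset_s) < fraction * tr_s


# Hypothetical exchange: server clock 0.1 s ahead, 10 ms one-way latency.
offset, delay = ntp_offset_delay(10.0, 10.11, 10.111, 10.021)
print(round(offset, 3), round(delay, 3), within_budget(offset, tr_s=2.0))
```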

Troubleshooting Guides

Problem: Excessive VR-Induced Symptoms (Nausea, Headache) in Participants

Potential Causes:

- Rapid movement in VR environment conflicting with vestibular input

- Inadequate calibration for individual interpupillary distance (IPD)

- Prolonged exposure without adequate breaks

Solutions:

- Limit initial session duration and gradually increase exposure

- Ensure proper HMD fit and IPD adjustment for each participant

- Implement stationary VR environments rather than locomotion-intensive scenarios

- Provide clear instructions to focus on stable visual reference points when possible

- Consider anti-motion sickness medications for susceptible participants in longer studies [30]

Problem: Significant Artifacts Corrupting fMRI Data During VR Presentation

Potential Causes:

- RF interference from VR electronic components

- Induction artifacts from cabling within the magnetic field

- Subject motion amplified by VR engagement

Solutions:

- Implement additional shielding for VR components

- Use fiber-optic instead of electrical cabling for data transmission

- Incorporate reference sensors for artifact correction in post-processing

- Apply motion correction algorithms designed for sustained movement during VR

- Validate fMRI data quality with and without VR equipment through phantom tests [7]
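The with/without-VR phantom comparison can be summarised as a change in temporal SNR (tSNR: mean over time divided by standard deviation over time, per voxel). A minimal sketch, assuming voxel time series from the same phantom ROI have already been extracted and detrended; the function names are hypothetical and the example values are synthetic.

```python
from statistics import mean, median, pstdev


def tsnr(timeseries: list[float]) -> float:
    """Temporal SNR of one voxel: mean over time divided by SD over time."""
    sd = pstdev(timeseries)
    return float("inf") if sd == 0 else mean(timeseries) / sd


def tsnr_change_pct(without_vr: list[list[float]],
                    with_vr: list[list[float]]) -> float:
    """Percent change in median voxel tSNR when the VR hardware is present.
    Each argument is a list of voxel time series from the same phantom ROI."""
    base = median(tsnr(v) for v in without_vr)
    test = median(tsnr(v) for v in with_vr)
    return 100.0 * (test - base) / base


# Synthetic single-voxel example: doubling the temporal SD halves tSNR.
print(round(tsnr_change_pct([[100, 102, 98, 100]], [[100, 104, 96, 100]]), 1))
```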

Problem: Discrepancies Between VR and Traditional Neuropsychological Assessment Scores

Potential Causes:

- Differing cognitive demands in immersive vs. traditional testing environments

- Gamification elements altering motivational factors

- Variability in technological familiarity among participants

Solutions:

- Establish convergent validity through correlation studies with multiple standard measures

- Control for technological familiarity through pre-training sessions

- Analyze whether VR provides complementary information about real-world functioning rather than direct replacement of traditional tests

- Consider that VR may assess different aspects of cognition (e.g., real-world functional capacity) compared to traditional tests [29] [31]
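Convergent validity is typically quantified with correlation coefficients between VR scores and standard test scores. A dependency-free Pearson correlation is sketched below with a hypothetical function name; rank-based measures such as Spearman's rho may be more appropriate for ordinal scales, and significance testing is not shown.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between paired scores (e.g., VR vs. MoCA)."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired observations")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)
```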

Experimental Protocols for Key VR Cognitive Assessments

Protocol 1: CAVIRE System for Comprehensive Cognitive Domain Assessment

Table: CAVIRE Implementation Protocol

| Component | Specification | Purpose |
|---|---|---|
| Hardware | HTC Vive Pro HMD, Lighthouse sensors, Leap Motion device, Rode VideoMic Pro microphone | Enable tracking of natural hand/head movements and speech capture in a 3D environment |
| Software | Unity game engine with integrated API for voice recognition | Create 13 different virtual environments simulating daily activities |
| Assessment Domains | Six DSM-5 cognitive domains via 13 task segments | Comprehensive cognitive profiling across multiple domains |
| Scoring | Automated algorithm calculating VR scores and completion time | Objective assessment with minimal administrator bias |
| Session Structure | Tutorial session followed by cognitive assessment, with multiple attempts allowed per task within time limits | Ensure participant understanding while assessing learning capacity |
| Validation | Comparison with MoCA, MMSE, functional status assessments | Establish clinical validity and sensitivity to cognitive impairment |

Implementation Details [28]:

- Participants: 109 individuals aged 65-84, grouped as cognitively healthy (MoCA ≥26) or impaired (MoCA <26)

- Procedure: All participants completed CAVIRE assessment after standard cognitive testing

- Outcome Measures: VR scores, time taken across six cognitive domains, receiver-operating characteristic analysis

- Results: Cognitively-healthy participants achieved significantly higher VR scores and shorter completion times (all p's < 0.005), with AUC of 0.7267 for distinguishing groups
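The reported AUC has a direct interpretation: the probability that a randomly chosen cognitively healthy participant scores higher than a randomly chosen impaired one, with ties counted as half. The sketch computes it in this pairwise (Mann-Whitney) form; it is not the cited study's analysis code, and the function name is hypothetical.

```python
def auc(scores_pos: list[float], scores_neg: list[float]) -> float:
    """Pairwise AUC: fraction of (positive, negative) pairs in which the
    positive case scores higher; ties contribute 0.5."""
    if not scores_pos or not scores_neg:
        raise ValueError("both groups need at least one score")
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))
```

The double loop is O(n·m), which is negligible at the sample sizes reported here.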

Protocol 2: Enhance VR for Gamified Cognitive Assessment

Table: Enhance VR Assessment Protocol

| Component | Specification | Traditional Test Equivalent |
|---|---|---|
| Magic Deck | Memorize location of cards with colorful abstract patterns | Paired Associates Learning (PAL) test |
| Memory Wall | Recall increasingly complex patterns of lit cubes | Visual Pattern Test |
| Pizza Builder | Simultaneously take orders and assemble pizzas | Divided attention assessments |
| React | Sort incoming stimuli by changing criteria | Wisconsin Card Sorting Task and Stroop test |
| Hardware | Meta Quest standalone headset with hand controllers | N/A |
| Scoring | In-game points system with adaptive difficulty | Standardized test scoring |

Implementation Details [31]:

- Participants: 41 older adults (mean age=62.8 years) without neurodegenerative or psychiatric disorders

- Design: Randomized testing order (traditional neuropsychological assessment vs. VR sessions)

- Traditional Assessment Battery: MoCA, Stroop task, WCST, Trail Making Test, Rey-Osterrieth figure, Word Pair Test, Clock Drawing Test, Corsi Block-Tapping, Visual Search Test

- Results: High tolerance and usability, though direct score correlations with traditional tests were limited, suggesting VR may tap into different cognitive aspects

Research Reagent Solutions

Table: Essential Materials for VR-fMRI Cognitive Assessment Research

| Component | Function | Examples/Specifications |
|---|---|---|
| VR Hardware | Create immersive environments | HTC Vive Pro, Meta Quest, Leap Motion for hand tracking, Lighthouse sensors |
| fMRI-Compatible Equipment | Enable safe operation in magnetic environment | Fiber-optic data transmission, specialized RF-shielded components, non-magnetic materials |
| Physiological Monitoring | Capture complementary physiological data | EEG caps adapted for MRI environments, reference sensors for artifact correction, pulse oximeters |
| Software Platforms | Environment development and data integration | Unity game engine, specialized VR assessment applications (CAVIRE, Enhance VR) |
| Synchronization Systems | Temporal alignment of multimodal data | Network Time Protocol (NTP) servers, trigger interfaces, custom synchronization software |
| Validation Tools | Establish clinical and technical validity | Standard neuropsychological tests (MoCA, MMSE), functional assessments (Barthel Index, Lawton IADLs) |

Experimental Workflow Diagrams

VR-fMRI Experimental Workflow

VR-fMRI Technical Challenges and Solutions

Troubleshooting Common VR-fMRI Experimental Challenges

| Problem Category | Specific Issue | Potential Causes | Recommended Solutions |
|---|---|---|---|
| Data Acquisition | Low signal-to-noise ratio in fMRI data [4] | Magnetic interference from VR equipment; B0 field inhomogeneities [4]. | Use MR-conditional VR goggles with shielded electronics (e.g., VisualSystem HD); acquire a field map for unwarping [4]. |
| | VR visual presentation is unstable or laggy | Computer system latency; improper software configuration; network delays in data streaming. | Pre-load all 3D models; use a dedicated, high-performance computer; test and optimize the paradigm offline [34]. |
| Experimental Design & Analysis | Inflated effect sizes in ROI analysis [35] | Circular analysis bias: defining an ROI from statistically significant voxels in the same dataset [35]. | Use independent localizer scans or cross-validation to define ROIs; employ unbiased whole-brain correction [35]. |
| | Incorrect image orientation or alignment [35] | DICOM header issues; inconsistent coordinate systems between software; improper manual reorientation. | Check and enforce a consistent orientation (e.g., LPI) using tools such as fslswapdim [35]; verify alignment with a template. |
| Physiological & Data Streaming | Difficulty synchronizing physiological, VR, and fMRI data | Lack of automated event marking; hardware systems not on synchronized clocks. | Use software (e.g., AcqKnowledge, Vizard, COBI) that supports network data transfer and automated marker sending [34]. |
| | Unwarping artifacts in functional images | B0 magnetic field inhomogeneities, particularly at tissue-air interfaces [35]. | Acquire a field map during scanning; prepare it with FSL's fsl_prepare_fieldmap and apply the unwarping with fugue [35] [36]. |
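The importance of the field map can be seen from a back-of-the-envelope calculation. A dual-echo field map gives the off-resonance frequency Δf = Δφ / (2π·ΔTE), and in EPI this shifts signal along the phase-encode axis by roughly Δf × effective echo spacing × number of phase-encode lines, in voxels. The function names and example values (50 Hz, 0.5 ms effective echo spacing, 64 lines) are illustrative assumptions, not parameters of any particular scanner or protocol.

```python
import math


def fieldmap_hz(delta_phase_rad: float, delta_te_s: float) -> float:
    """Off-resonance frequency from a dual-echo phase difference:
    delta_f = delta_phi / (2 * pi * delta_TE)."""
    return delta_phase_rad / (2.0 * math.pi * delta_te_s)


def epi_shift_voxels(delta_f_hz: float, effective_echo_spacing_s: float,
                     n_pe_lines: int) -> float:
    """Approximate displacement along the phase-encode axis, in voxels:
    off-resonance divided by the phase-encode bandwidth per voxel,
    1 / (effective echo spacing * number of phase-encode lines)."""
    return delta_f_hz * effective_echo_spacing_s * n_pe_lines


print(round(epi_shift_voxels(50.0, 0.0005, 64), 2))
```

Even a modest 50 Hz offset displaces signal by more than a voxel under these assumptions, which is why unwarping matters near tissue-air interfaces.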

Frequently Asked Questions (FAQs)

Data Preprocessing & Analysis

Q: What is resampling and when do I need to do it? A: Resampling changes the resolution or dimensions of an image. It is necessary when you need to align images with different voxel sizes or templates, such as when applying a mask from one image (e.g., an anatomical) to another (e.g., a functional statistical map) [35].

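- In FSL: Use `flirt -in mask.nii.gz -ref stats.nii.gz -out mask_RS.nii.gz -applyxfm -usesqform -interp nearestneighbour` (`-applyxfm` needs an initial transform, supplied here by `-usesqform`; nearest-neighbour interpolation keeps a binary mask binary).

- In AFNI: Use `3dresample -input mask.nii.gz -master stats.nii.gz -prefix mask_RS.nii.gz` [35]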

Q: What constitutes a "biased analysis" and how can I avoid it? A: A biased, or circular, analysis occurs when you define your Region of Interest (ROI) based on the statistical results from the very same dataset. This inflates effect sizes because it selectively includes noise voxels that, by chance, passed the significance threshold [35].

- Avoidance Strategy: Use independent ROIs defined from a separate localizer scan, an anatomical atlas, or a prior study. For a valid confirmatory analysis, the ROI must be defined a priori, without reference to the final statistical map [35].
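The inflation described above can be demonstrated on pure noise. The simulation below (hypothetical names; Python's standard `random` module) selects the top 5% of voxels in one half of the data and then measures the ROI mean both on that same half and on an independent half. With no true effect anywhere, the circular estimate is strongly positive while the independent estimate stays near zero.

```python
import random
from statistics import mean


def simulate_circularity(n_voxels: int = 2000, top_fraction: float = 0.05,
                         seed: int = 0) -> tuple[float, float]:
    """Return (circular ROI mean, independent ROI mean) for pure-noise data."""
    rng = random.Random(seed)
    # Two independent halves of pure noise: no voxel has a true effect.
    half_a = [rng.gauss(0.0, 1.0) for _ in range(n_voxels)]
    half_b = [rng.gauss(0.0, 1.0) for _ in range(n_voxels)]
    k = max(1, int(n_voxels * top_fraction))
    roi = sorted(range(n_voxels), key=lambda i: half_a[i], reverse=True)[:k]
    circular = mean(half_a[i] for i in roi)      # selected and measured on A
    independent = mean(half_b[i] for i in roi)   # selected on A, measured on B
    return circular, independent


print(simulate_circularity())
```

With these defaults the circular estimate lands near 2 (the expected mean of the top 5% of a standard normal), while the independent estimate is close to 0.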

Technical Setup

Q: My anatomical and functional images appear to have different orientations. How can I fix this?

A: Use FSL's fslswapdim command to reorient an image. Its three axis arguments give the new x, y, and z axes in terms of the old ones, either as -x, x, -y, y, -z, z or, for NIfTI images, as anatomical labels (RL, LR, AP, PA, SI, IS). For example, fslswapdim input_image.nii.gz RL PA IS output_image.nii.gz reorients the image to the axial slicing of FSL's standard (MNI152) templates. Always visually check the reoriented image overlaid on your functional data to confirm alignment [35].
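
Orientation problems are easier to diagnose once you can read the orientation directly from the image's affine. The following Python sketch is a hypothetical helper, modelled on what tools such as nibabel's aff2axcodes report. It returns the direction each voxel axis points toward in the NIfTI RAS+ world convention.

```python
import numpy as np


def axis_codes(affine):
    """Return the anatomical direction each voxel axis points *toward*.

    Uses the NIfTI world convention (RAS+: +x = Right, +y = Anterior,
    +z = Superior). If the dominant world component of an axis is negative,
    the axis points toward L, P or I instead. Oblique ties are not handled.
    """
    pos, neg = "RAS", "LPI"
    rot = np.asarray(affine, dtype=float)[:3, :3]
    codes = []
    for axis in range(3):
        col = rot[:, axis]                    # world direction of this voxel axis
        world = int(np.argmax(np.abs(col)))   # which world axis dominates
        codes.append(pos[world] if col[world] > 0 else neg[world])
    return "".join(codes)
```

Naming conventions differ between packages. This function names the direction each axis points toward, whereas AFNI orientation codes name the direction each axis starts from, so an image reported here as "RAS" is what AFNI calls "LPI".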

Q: How can I integrate physiological data streams with my VR-fMRI experiment? A: Software solutions like BIOPAC's AcqKnowledge and WorldViz's Vizard, when used with systems like COBI, allow for network data transfer (NDT). This setup enables the streaming of physiological data (e.g., heart rate) and automated sending of event markers from the VR environment to the data acquisition software, ensuring synchronization across all modalities [34].
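
Once the VR software and the scanner trigger are logged on a common clock, which is what network data transfer and automated markers are meant to provide, event timestamps can be converted into scan-relative onsets. This minimal Python sketch assumes that the time of the first volume trigger is known on that same clock and that the TR is fixed. The function name and arguments are hypothetical.

```python
import numpy as np


def events_to_volumes(event_times_s, first_trigger_s, tr_s, n_volumes):
    """Map VR event timestamps onto fMRI volume indices.

    Assumes event_times_s and first_trigger_s come from one shared clock and
    that first_trigger_s marks the start of volume 0.
    Returns scan-relative onsets (s), volume indices and a validity mask.
    """
    onsets = np.asarray(event_times_s, dtype=float) - first_trigger_s
    vols = np.floor(onsets / tr_s).astype(int)  # volume during which each event occurs
    valid = (vols >= 0) & (vols < n_volumes)    # False for events outside the run
    return onsets, vols, valid
```

A GLM design needs the scan-relative onsets. The volume indices are useful for quick checks, such as confirming that no events fall before the first trigger or after the last volume.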

Experimental Protocols & Quantitative Data

Key Findings on Hippocampal-Cortical Connectivity

The following table synthesizes core quantitative findings from foundational studies on hippocampal-cortical connectivity, which can inform the design and interpretation of VR-fMRI experiments.

| Study & Method | Stimulation / Task | Primary Brain Regions Activated | Effect on Functional Connectivity |
|---|---|---|---|
| Optogenetic fMRI (Zhou et al., 2017) [37] | 1 Hz stimulation of the dorsal dentate gyrus (dDG) | Bilateral V1, V2, lateral geniculate nucleus (LGN), superior colliculus (SC), cingulate cortex [37] | Enhanced interhemispheric resting-state (rsfMRI) connectivity in the hippocampus and various cortices [37]. |
| Human fMRI (NeuroImage, 2023) [38] | Memory encoding and retrieval tasks | Sparse, task-general activation during encoding; medial PFC, inferior parietal, and parahippocampal cortices during retrieval [38]. | Stable anterior/posterior hippocampal connectivity across rest and tasks, with increased connectivity in a retrieval-recollection network superimposed on it [38]. |

Detailed Protocol: Low-Frequency Hippocampal Circuit Investigation

This protocol is adapted from optogenetic studies [37] and provides a framework for designing VR tasks that probe similar low-frequency hippocampal-cortical networks in humans.

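- Stimulation Paradigm: Design a VR task paced at a slow rate (~1 Hz). The goal is to engage the low-frequency hippocampal-cortical circuitry that was driven optogenetically in the animal studies, where the target was the dorsal dentate gyrus. The rodent dorsal hippocampus corresponds roughly to the human posterior hippocampus. Candidate designs include slow spatial navigation, gradual exploration of a virtual environment, or a paced memory encoding task. Because fMRI cannot directly confirm ~1 Hz neural rhythms, treat this pacing as an analogy to the optogenetic protocol rather than a replication of it.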

- fMRI Acquisition: Acquire both task-based fMRI and resting-state fMRI (before and after the task) to assess task-evoked activity and changes in functional connectivity.

- Data Analysis:

- Task Activation: Use a general linear model (GLM) to identify brain regions with significant BOLD responses during the slow-frequency VR task. Focus on the visual cortex (V1, V2), cingulate cortex, and thalamic regions [37].

- Functional Connectivity: Use resting-state data to compute correlations between the hippocampus (anterior and posterior segments separately) and the whole brain. Contrast post-task vs. pre-task connectivity to identify stimulation-induced enhancements [38] [37].
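
For the functional-connectivity step, a common approach has three parts. First, correlate a seed time series with every voxel. Next, Fisher z-transform the correlations. Finally, subtract the pre-task map from the post-task map for each participant. The Python sketch below assumes preprocessed, equally long time series and one seed at a time, so run it separately for the anterior and posterior hippocampal seeds. The function names are hypothetical.

```python
import numpy as np


def seed_connectivity(seed_ts, voxel_ts):
    """Fisher z-transformed Pearson correlation of one seed with each voxel.

    seed_ts: (T,) mean time series of the hippocampal seed.
    voxel_ts: (T, V) time series of V voxels or regions.
    """
    s = (seed_ts - seed_ts.mean()) / seed_ts.std()
    v = (voxel_ts - voxel_ts.mean(axis=0)) / voxel_ts.std(axis=0)
    r = (s[:, None] * v).mean(axis=0)    # Pearson r for each voxel
    r = np.clip(r, -0.999999, 0.999999)  # keep arctanh finite
    return np.arctanh(r)                 # Fisher z


def post_minus_pre(seed_pre, vox_pre, seed_post, vox_post):
    """Per-voxel change in seed connectivity (post-task minus pre-task)."""
    return seed_connectivity(seed_post, vox_post) - seed_connectivity(seed_pre, vox_pre)
```

The per-participant difference maps would then enter a group-level test (for example, a one-sample t-test against zero) with appropriate multiple-comparison correction.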

The Scientist's Toolkit: Essential Research Reagents & Materials

| Item Name | Function / Application | Example / Note |
|---|---|---|
| MR-Conditional VR System | Presents immersive 3D stimuli in the scanner. | VisualSystem HD (NordicNeuroLab); uses shielded electronics and MR-safe materials for use up to 3 T [4]. |
| Data Synchronization Suite | Streams and synchronizes physiological, VR event, and fMRI data. | AcqKnowledge software, Vizard VR software, and COBI (for fNIRS/physiology) with Network Data Transfer (NDT) [34]. |
| FSL | A comprehensive library of MRI analysis tools. | Includes FEAT (fMRI analysis), MELODIC (ICA), BET (brain extraction), FLIRT/FNIRT (registration), and FUGUE (unwarping) [36]. |
| Field Map | Corrects geometric distortions in EPI (fMRI) data caused by B0 field inhomogeneities. | Acquired during scanning; processed using FSL's fugue or fsl_prepare_fieldmap [35] [36]. |
| Unbiased ROI Atlas | Defines regions of interest for confirmatory analysis without circularity. | Anatomically defined atlases (e.g., AAL, Harvard-Oxford) or independent functional localizers [35]. |

Experimental and Analytical Workflows

Diagram: VR-fMRI Experimental Setup and Synchronization

Diagram: Analysis Workflow for Hippocampal Connectivity

Technical Support & Troubleshooting Hub

This section addresses common technical challenges encountered during simultaneous fNIRS and Virtual Reality (VR) experiments, providing practical solutions to ensure data integrity.

Frequently Asked Questions (FAQs) and Troubleshooting Guides

Q1: Our fNIRS signals are consistently noisy during participant movement in the VR environment. What steps can we take?

- Problem: Motion artifacts corrupting fNIRS data.

- Solutions:

- Preventive Measures: Secure the fNIRS cap and optodes firmly using headbands or caps designed for movement studies.

- Technical Add-ons: Integrate an accelerometer with the fNIRS system to record head movement data. This data can later be used with adaptive filtering techniques to clean the signal [39].

- Post-Processing: Employ signal processing methods such as Principal Component Analysis (PCA) or other published motion correction algorithms to remove movement artifacts during data analysis [39].
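
As an illustration of the PCA approach, the sketch below removes the highest-variance principal component(s) from a multichannel fNIRS recording. It assumes that motion artifacts are shared across channels and dominate the variance. The helper is hypothetical. In practice, choose the number of components to remove with care, because any genuine task signal shared across channels is removed as well.

```python
import numpy as np


def remove_top_components(data, n_remove=1):
    """Remove the n_remove highest-variance principal components.

    data: (T, C) array of fNIRS time series (time x channels).
    Assumes motion artifacts load mainly onto the first component(s).
    """
    mean = data.mean(axis=0)
    centered = data - mean
    # Rows of vt are channel-space principal axes, ordered by singular value.
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    s_clean = s.copy()
    s_clean[:n_remove] = 0.0  # drop the dominant (assumed artifact) components
    return (u * s_clean) @ vt + mean
```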

Q2: Participant perspiration during immersive VR tasks is affecting our optical signals. How can this be mitigated?

- Problem: Sweat alters the optical characteristics at the scalp-optode interface, causing signal drift.

- Solutions:

- Environmental Control: Maintain a cool and comfortable room temperature with adequate ventilation.

- Physical Barriers: Use absorbent pads or sweatbands around, but not under, the optodes to wick away moisture.

- Signal Processing: Once the sensor-skin interface is saturated with sweat, the effect tends to become stable (typically a slow drift) and may then be removable during data processing [39].
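
A slow, stable drift of this kind is often handled by detrending. The following is a minimal Python sketch, assuming one channel sampled at a known rate and a low-order polynomial trend. The helper name is hypothetical.

```python
import numpy as np


def detrend_poly(signal, fs, order=1):
    """Remove a slow polynomial trend (e.g. sweat-related drift) from one channel.

    signal: (T,) samples; fs: sampling rate in Hz; order: polynomial degree.
    """
    t = np.arange(len(signal)) / fs
    coeffs = np.polyfit(t, signal, order)     # least-squares trend fit
    return signal - np.polyval(coeffs, t)     # residual = detrended signal
```

Keep the polynomial order low. A high-order fit can absorb slow task-related changes, especially when blocks are long.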

Q3: We suspect interference from cardiac and respiratory cycles in our fNIRS data. How can we isolate the neural signal?

- Problem: Physiological noise from heartbeats and breathing is superimposed on the hemodynamic response.

- Solutions:

- This is a known confound that can be addressed in post-processing. Techniques such as filtering and PCA have been successfully used by research groups to separate these systemic signals from task-related neural activity [39].
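
Cardiac activity (~1 Hz) sits well above the frequency of typical block-design responses, so a low-pass filter is a common first step. The sketch below uses SciPy's Butterworth design (scipy.signal.butter) with zero-phase filtering (scipy.signal.sosfiltfilt). The 0.2 Hz cutoff and the filter order are assumptions to adapt, and the cutoff must stay above your task frequency. Respiratory activity (~0.2-0.3 Hz) overlaps more with task-related frequencies, which is one reason PCA-style approaches are also used.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def lowpass_fnirs(signal, fs, cutoff_hz=0.2, order=4):
    """Zero-phase Butterworth low-pass filter for one fNIRS channel.

    signal: (T,) samples; fs: sampling rate in Hz.
    cutoff_hz and order are assumed starting values, not prescribed settings.
    """
    sos = butter(order, cutoff_hz, btype="low", fs=fs, output="sos")
    return sosfiltfilt(sos, np.asarray(signal, dtype=float))  # forward-backward: no phase lag
```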

Q4: The VR headset display is flickering or the tracking is lost during a critical part of the experiment.

- Problem: VR hardware instability.

- Solutions:

- Recalibrate Tracking: Ensure the play area is well-lit (but avoid direct sunlight) and free of reflective surfaces. Reboot the headset and reset the Guardian/play area boundary [40].

- Check Connections: For PC-powered VR, ensure all cables are securely connected. A restart of the headset and the VR application often resolves temporary glitches [40].

Q5: How do we verify that our fNIRS setup is functioning correctly before starting an experiment?

- Problem: Ensuring signal quality and device functionality.

- Solutions:

- Signal Quality Check: Prior to the experiment, select measurement parameters for the best signal-to-noise ratio (SNR): use maximum LED current and minimum detector gain [39].

- Phantom Tests: Conduct regular system quality checks using phantom tests to validate performance. SNR levels in phantom tests are typically over 90 dB for properly functioning systems [39].

- Channel Inspection: Before recording, check the signal quality for each channel and reject any bad channels with consistently poor signal strength [41].
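
A simple per-channel check combines the coefficient of variation (CV) of the raw intensity with an amplitude-ratio SNR. Treat the Python sketch below as a hedged example. The 7.5% CV threshold is a hypothetical cut-off (published values vary). Vendor SNR figures, such as the >90 dB quoted above, may use a different definition, so the numbers from this function are not directly comparable to them.

```python
import numpy as np


def channel_quality(raw_intensity, cv_max=0.075):
    """Flag fNIRS channels with unstable raw intensity.

    raw_intensity: (T, C) raw detector intensities (before conversion to Hb).
    cv_max: hypothetical rejection threshold on the coefficient of variation.
    Returns per-channel SNR in dB (20*log10(mean/std)), CV, and a keep mask.
    """
    mean = raw_intensity.mean(axis=0)
    std = raw_intensity.std(axis=0, ddof=1)
    cv = std / mean
    snr_db = 20.0 * np.log10(mean / std)
    keep = cv < cv_max  # True = channel passes the check
    return snr_db, cv, keep
```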

Quantitative Data & System Parameters

The tables below summarize key fNIRS specifications and the hemodynamic response profile critical for experimental design.

Table 1: Key fNIRS System Specifications and Performance Metrics

| Parameter | Specification / Value | Context & Notes |
|---|---|---|
| Spatial Resolution | ~1-2 cm | Determined by source-detector separation and photon path [39] [42]. |
| Penetration Depth | ~1.5-2 cm | Sufficient to measure cortical activity [39] [42]. |
| Sampling Rate (Temporal Resolution) | ~100 Hz | More than sufficient for tracking the slow hemodynamic response [42]. |
| Source-Detector Separation | ~2.5 cm | Standard distance, balancing depth sensitivity against signal strength [39]. |
| Typical Wavelengths | 730 nm, 850 nm | Chosen to separate oxy- from deoxy-hemoglobin, which absorb these wavelengths differently [39]. |
| Signal-to-Noise Ratio (SNR) | >90 dB | Achievable in phantom tests with optimal parameters (max LED current, min detector gain) [39]. |
| Trigger Delay (BNC) | <5 ms | Minimal delay for synchronizing fNIRS with other devices such as VR systems [39]. |
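
Two wavelengths are enough because oxy- and deoxy-hemoglobin absorb them differently. The modified Beer-Lambert law therefore yields two equations in two unknowns at every time point. The sketch below solves that 2x2 system in Python. The extinction coefficients, the differential pathlength factor (DPF) of 6, and the 2.5 cm separation are illustrative assumptions. The log base and units must match whichever coefficient table your software uses.

```python
import numpy as np

# Illustrative extinction coefficients [HbO, HbR] in 1/(cm*M).
# Placeholder values: replace them with the table your fNIRS software uses.
EXT = np.array([[390.0, 1102.2],    # 730 nm
                [1058.0, 691.32]])  # 850 nm


def mbll(delta_od, distance_cm=2.5, dpf=(6.0, 6.0), ext=EXT):
    """Modified Beer-Lambert law: optical-density changes -> concentration changes.

    delta_od: (T, 2) optical-density changes at the two wavelengths.
    Solves dOD(w) = (e_HbO(w)*dHbO + e_HbR(w)*dHbR) * d * DPF(w) per sample.
    Returns a (T, 2) array of [dHbO, dHbR] in mol/L.
    """
    path = distance_cm * np.asarray(dpf, dtype=float)[:, None]  # effective path per wavelength
    a = ext * path                                              # (2, 2) system matrix
    return np.linalg.solve(a, np.asarray(delta_od, dtype=float).T).T
```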

Table 2: Hemodynamic Response and Experimental Timing

| Parameter | Typical Timing | Experimental Design Implication |
|---|---|---|
| Hemodynamic Response Latency (onset to peak) | 2-6 s after stimulus | Dictates the minimum block length or inter-stimulus interval in task design [39]. |
| Delayed Response (e.g., after sleep deprivation) | Up to 10 s | Highlights the need for participant screening and potentially longer trial durations [39]. |
| Protocol Design Guidance | Align with fMRI | Review the fMRI literature for stimulus number, timing, and design, since both methods measure closely related hemodynamic signals [39]. |
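
To see how this latency shapes a design, it helps to convolve a task boxcar with a hemodynamic response function. The Python sketch below uses a double-gamma HRF with SPM-like default shape parameters. This is an assumption, since fNIRS toolboxes may use other HRF models. The predicted response is then sampled at each scan or sampling interval.

```python
import math

import numpy as np


def double_gamma_hrf(t):
    """Double-gamma HRF shape, peak-normalized to 1.

    Assumed SPM-like defaults: response shape 6, undershoot shape 16,
    undershoot ratio 1/6, scale 1 s (so the main peak is near 5 s).
    """
    t = np.asarray(t, dtype=float)

    def gamma_pdf(x, shape):
        return np.where(x > 0, x ** (shape - 1) * np.exp(-x) / math.gamma(shape), 0.0)

    h = gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6.0
    return h / h.max()


def block_regressor(onsets_s, duration_s, n_scans, tr_s, dt=0.1):
    """Boxcar for task blocks convolved with the HRF, sampled once per scan."""
    t_hi = np.arange(0, n_scans * tr_s, dt)  # fine time grid
    box = np.zeros_like(t_hi)
    for onset in onsets_s:
        box[(t_hi >= onset) & (t_hi < onset + duration_s)] = 1.0
    hrf = double_gamma_hrf(np.arange(0, 32, dt))
    conv = np.convolve(box, hrf)[: len(t_hi)] * dt  # predicted response
    idx = np.round(np.arange(n_scans) * tr_s / dt).astype(int)
    return conv[idx]
```

The predicted response lags each block onset by several seconds. Blocks or inter-stimulus intervals much shorter than the 2-6 s latency therefore tend to blur into one another.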

Experimental Protocols: VR-fNIRS for MCI Assessment

This section details a specific methodology from a foundational study integrating fNIRS with VR for Mild Cognitive Impairment (MCI) assessment [43].

Validated VR Task Protocols

The following tasks were designed to engage cognitive functions known to be affected in MCI, such as executive function, memory, and visuospatial skills, within an ecologically valid VR environment.

Table 3: Description of VR Tasks for Eliciting Cognitive Load

| VR Task Name | Description | Cognitive Functions Assessed |
|---|---|---|
| Fruit Cutting | Subjects use a virtual knife to cut fruits thrown towards them. | Hand-eye coordination, processing speed, attention, and executive function. |
| Food Hunter | A virtual restaurant environment where subjects must find and collect specific food ingredients based on instructions. | Spatial navigation, memory, task-switching, and problem-solving. |

Integrated Workflow Diagram

The following diagram illustrates the end-to-end experimental and analytical workflow for the VR-fNIRS MCI assessment system.

fNIRS Data Processing and Graph Construction

The core analytical innovation lies in processing fNIRS data into a structured graph for machine learning.

- Data Segmentation: The continuous fNIRS data for each subject are segmented into non-overlapping 20-second windows, yielding 30 samples per subject per condition (i.e., 600 s of recording per condition) [43].

- Multi-Domain Feature Extraction:

- Temporal Features (TFs): Capture dynamic changes in oxygenation (HbO/HbR) over time. These are Euclidean data and serve as node attributes in the graph [43].

- Frequency Features (FFs): Capture shifts in oscillatory activity, derived from the spectral content of the fNIRS signal. These are also Euclidean and serve as node attributes [43].

- Spatial Features (SFs): Reflect functional connectivity between different brain regions (fNIRS channels). These are non-Euclidean data and define the edges between nodes in the graph [43].

- Graph Convolutional Network (GCN): The constructed graph is fed into a GCN model. The GCN excels at processing this graph-structured data, enabling structure-aware integration of features and facilitating the modelling of region-level interactions to enhance MCI identification [43].
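
The pipeline above can be sketched in Python/NumPy under stated assumptions. The source does not specify the exact features, so the following choices are hypothetical:

- Channels are the graph's nodes.
- Node attributes are simple temporal features (mean, standard deviation, linear slope) plus low-frequency band power.
- Edges are absolute Pearson correlations between channels.
- One graph-convolution step follows the widely used Kipf and Welling normalization.

The sketch illustrates the data flow and is not the study's implementation.

```python
import numpy as np


def segment(data, fs, win_s=20.0):
    """Split (T, C) data into non-overlapping windows -> (n_windows, win_len, C)."""
    win = int(round(win_s * fs))
    n = data.shape[0] // win  # drop any incomplete tail
    return data[: n * win].reshape(n, win, data.shape[1])


def window_graph(window, fs, band=(0.01, 0.1)):
    """Hypothetical node features and adjacency for one window (channels = nodes)."""
    t = np.arange(window.shape[0]) / fs
    slope = np.polyfit(t, window, 1)[0]  # temporal: per-channel linear trend
    freqs = np.fft.rfftfreq(window.shape[0], d=1 / fs)
    power = np.abs(np.fft.rfft(window - window.mean(axis=0), axis=0)) ** 2
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    band_power = power[in_band].mean(axis=0)  # frequency: mean power in band
    x = np.column_stack([window.mean(axis=0), window.std(axis=0), slope, band_power])
    adj = np.abs(np.corrcoef(window.T))  # spatial: |r| between channels
    np.fill_diagonal(adj, 0.0)
    return x, adj


def gcn_layer(x, adj, weight):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    a_hat = adj + np.eye(adj.shape[0])  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(norm @ x @ weight, 0.0)
```

For graph-level MCI classification, node embeddings from one or more such layers would typically be pooled (for example, averaged) and passed to a classifier. The weights would be learned by training rather than drawn at random.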

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 4: Essential Hardware and Software for VR-fNIRS Integration

| Item Name | Category | Function / Role in the Experiment |
|---|---|---|
| Continuous Wave (CW) fNIRS System | Core Hardware | Measures changes in oxy- and deoxy-hemoglobin concentration in the cortex using near-infrared light of constant intensity. It is portable, affordable, and safer than laser-based systems [39] [42]. |
| fNIRS Optode Cap | Core Hardware | Holds light sources and detectors in a predetermined array over the scalp. Targeted brain regions for MCI often include the prefrontal cortex [39] [43]. |
| Immersive VR Headset | Core Hardware | Presents the virtual environment to the participant, providing an ecologically valid and engaging context for cognitive tasks [43]. |
| MRI-Compatible Data Glove | Supplementary Hardware | Tracks fine finger and hand movements in real time within the VR environment, enabling interactive tasks [3]. |
| Accelerometer | Supplementary Hardware | Records head movement data, which is crucial for developing advanced filters to remove motion artifacts from fNIRS signals [39]. |
| Graph Convolutional Network (GCN) | Software / Analysis | A deep learning model designed for graph-structured data, used to classify MCI by integrating temporal, frequency, and spatial features from fNIRS [43]. |

Navigating Technical Challenges: Strategies for Artifact Reduction and Data Fidelity