AI-Powered Motion Tracking for Behavioral Analysis: Advanced Algorithms and Applications in Drug Development

This article provides researchers, scientists, and drug development professionals with a comprehensive analysis of how artificial intelligence is revolutionizing behavioral analysis through motion tracking.


Abstract

This article provides researchers, scientists, and drug development professionals with a comprehensive analysis of how artificial intelligence is revolutionizing behavioral analysis through motion tracking. It explores the foundational principles of AI algorithms, details cutting-edge methodological applications in preclinical and clinical research, addresses critical troubleshooting and optimization challenges, and offers a rigorous validation framework for comparing algorithmic performance. By synthesizing the latest advancements, this guide serves as an essential resource for leveraging motion tracking to enhance the efficiency, predictive power, and success rates of pharmaceutical R&D.

From Manual Tracking to Deep Learning: Core Principles of AI-Driven Behavioral Analysis

Motion tracking technology has undergone a profound transformation, evolving from labor-intensive manual methods to sophisticated artificial intelligence (AI)-driven systems. This evolution has been particularly impactful in behavioral analysis research, where precise quantification of movement is crucial for studying behavioral phenotypes, assessing therapeutic efficacy, and understanding neurological function. The transition from manual tracking to markerless AI represents not merely a technical improvement but a fundamental shift in research capabilities, enabling the capture of complex, naturalistic behaviors in real-world environments with minimal intrusion [1]. For researchers and drug development professionals, this progress unlocks new possibilities for high-throughput, objective behavioral assessment, providing richer datasets and more sensitive biomarkers for preclinical and clinical studies.

This article details the key technological stages of this evolution, provides structured protocols for implementing modern tracking solutions, and furnishes a practical toolkit to guide research design in behavioral studies.

Historical Progression and Quantitative Comparison

The development of motion tracking can be segmented into four distinct technological phases, each characterized by significant shifts in accuracy, usability, and application scope [1].

Table 1: Evolutionary Stages of Motion Tracking Technology

| Era | Key Technologies | Primary Applications | Data Output | Key Limitations |
|---|---|---|---|---|
| Manual Tracking | Manual frame-by-frame annotation. | Early animation, fundamental biomechanics. | 2D coordinate points. | Extremely time-consuming; subjective; low temporal resolution. |
| Non-Visual & Marker-Based | Electromagnetic sensors; Inertial Measurement Units (IMUs); Passive/active optical markers. | Detailed biomechanics; Gait analysis; Film and video game animation. | 3D positional data; joint angles. | Invasive markers alter natural behavior; constrained to lab environments; high cost. |
| Markerless (Pre-DL) | Optical flow (Lucas-Kanade, Horn-Schunck); Feature-based tracking (SIFT, SURF); Background subtraction [1]. | Robotics; Early video surveillance; Basic activity recognition. | 2D motion vectors; feature trajectories. | Struggles with occlusions; requires high contrast; limited robustness in dynamic environments. |
| AI & Deep Learning (DL) | Convolutional Neural Networks (CNNs); OpenPose; YOLO; DeepSORT; RNNs/LSTMs [1] [2]. | Real-time behavioral phenotyping; AI-assisted diagnosis; Drug efficacy assessment in neurobiology. | 2D/3D pose estimation keypoints; semantic segmentation maps. | High computational demand; requires large, annotated datasets for training. |

The quantitative leap afforded by AI is demonstrated by the performance of modern multiple object tracking (MOT) algorithms. Tracking accuracy is commonly measured by metrics such as IDF1, which assesses identity preservation across frames.

Table 2: Quantitative Performance Comparison of Modern Multi-Object Tracking Algorithms (on MOT Challenge Benchmarks)

| Tracker | Paradigm | MOT16 IDF1 (%) | MOT17 IDF1 (%) | Key Innovation |
|---|---|---|---|---|
| FairMOT | Joint Detection and Embedding | 71.7 | 71.3 | Balances detection and Re-ID feature learning. |
| CenterTrack | Joint Detection and Tracking | 68.3 | 66.5 | Tracks by detecting object displacements. |
| MPMOT (2025) | Motion-Perception JDT | 72.8 | 72.6 | Gain Kalman Filter (GKF) and Adaptive Cost Matrix (ACM) [2]. |

The MPMOT framework exemplifies the modern focus on motion-aware tracking, which enhances robustness in challenging conditions like occlusions—a common scenario in behavioral studies of social groups [2].

Experimental Protocols for Behavioral Research

The following protocols provide a framework for implementing markerless AI motion tracking in behavioral and pharmacological research settings.

Protocol 1: Setup for Top-Down Multi-Animal Tracking

Application Note: This protocol is designed for high-throughput screening of group-housed animals, relevant for studying social behaviors, anxiety, and the effects of neuroactive compounds.

Methodology:

- Hardware Setup:
  - Cameras: Position two or more synchronized high-speed cameras (≥100 fps) at different angles to resolve 3D pose and minimize occlusions.
  - Housing: Use a standardized, well-lit arena. Ensure uniform, diffuse lighting to minimize shadows and glare.
  - Data Acquisition: Record videos at a resolution of at least 1920x1080 pixels.

- Software and Model Configuration:
  - Detection Model: Employ a pre-trained object detector like YOLOv8 or Faster R-CNN, fine-tuned on a dataset of the target animal species.
  - Tracking Algorithm: Implement a tracker such as DeepSORT or the MPMOT framework. For MPMOT, configure the Gain Kalman Filter (GKF) to adaptively adjust detection noise based on confidence scores, stabilizing predictions during brief occlusions [2].
  - Identity Management: Rely on the tracker's appearance and motion models. The Adaptive Cost Matrix (ACM) in MPMOT is particularly useful, as it dynamically fuses motion and appearance cues to maintain identities in crowded scenes [2].

- Data Output:
  - A time-series dataset of bounding boxes and unique identity tags for each animal across all video frames.
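The idea of adjusting detection noise from confidence scores can be illustrated with a scalar Kalman measurement update. This is a minimal sketch and not the MPMOT Gain Kalman Filter itself: the function name `kalman_update`, the parameter `r_base`, and the rule of dividing the nominal noise by the confidence are all assumptions chosen for illustration.

```python
def kalman_update(x_pred, p_pred, z, r_base, confidence):
    """Scalar Kalman measurement update with confidence-scaled noise.

    x_pred, p_pred: predicted position and its variance.
    z: position measured by the detector.
    r_base: nominal measurement-noise variance (hypothetical tuning value).
    confidence: detector score in (0, 1]; low scores inflate the noise.
    """
    eps = 1e-6  # guards against division by zero for a zero score
    # Assumed scaling rule: the less confident the detection, the noisier it is treated.
    r = r_base / max(confidence, eps)
    gain = p_pred / (p_pred + r)          # Kalman gain
    x_new = x_pred + gain * (z - x_pred)  # corrected state
    p_new = (1.0 - gain) * p_pred         # corrected variance
    return x_new, p_new, gain


# A confident detection moves the estimate more than a weak one.
x_hi, _, gain_hi = kalman_update(10.0, 4.0, 12.0, r_base=1.0, confidence=0.9)
x_lo, _, gain_lo = kalman_update(10.0, 4.0, 12.0, r_base=1.0, confidence=0.2)
```

With this rule, a low-confidence detection during a partial occlusion receives a smaller gain, so the track leans on its motion prediction rather than on the unreliable box.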

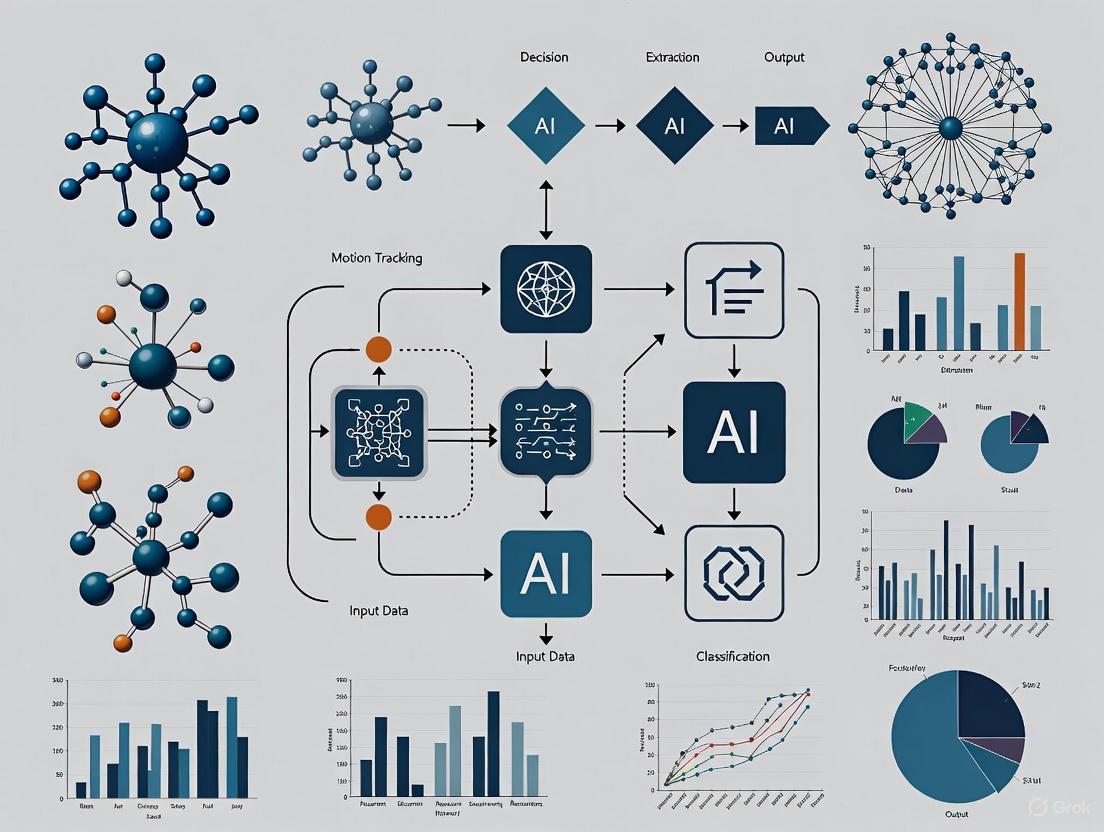

Workflow Diagram:

Protocol 2: Markerless Pose Estimation for Kinematic Analysis

Application Note: This protocol is used for detailed kinematic analysis of specific body parts, applicable in studies of motor coordination, gait analysis, and neurodegenerative disease models.

Methodology:

- Data Acquisition:
  - Follow the camera and lighting setup from Protocol 1.

- Pose Estimation:
  - Model Selection: Use a bottom-up pose estimation framework like OpenPose or a similar CNN-based architecture capable of detecting multiple keypoints (e.g., limbs, snout, tail base) for each animal [1].
  - Processing: Run the model on the video data to generate 2D (x, y) or 3D (x, y, z) coordinates for each defined keypoint in every frame.

- Post-Processing and Analysis:
  - Data Smoothing: Apply a low-pass filter (e.g., a Butterworth filter) to the raw keypoint coordinates to reduce high-frequency noise.
  - Kinematic Feature Extraction: Calculate derived metrics from the smoothed trajectories. These can include:
    - Velocity: The first derivative of the snout or body centroid position.
    - Acceleration: The second derivative of position.
    - Joint Angles: Calculated from three adjacent keypoints (e.g., hip-knee-ankle).
  - Behavioral Classifiers: Use the keypoint data to train machine learning models (e.g., Random Forest, SVM) to classify specific behaviors like rearing, grooming, or freezing.
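The kinematic features listed above can be sketched with finite differences and basic vector geometry. The example below is illustrative only: it assumes the keypoint trajectories have already been smoothed upstream, and the helper names (`finite_difference`, `speed`, `joint_angle`) and the sample values are hypothetical.

```python
import math


def finite_difference(values, fps):
    """Time derivative of a series (units per second) via central differences."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    dt = 1.0 / fps
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)  # one-sided at the ends
        out.append((values[hi] - values[lo]) / ((hi - lo) * dt))
    return out


def speed(xs, ys, fps):
    """Speed of one keypoint: magnitude of the first derivative of position."""
    vx, vy = finite_difference(xs, fps), finite_difference(ys, fps)
    return [math.hypot(a, b) for a, b in zip(vx, vy)]


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b, formed by the segments b-a and b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    return math.degrees(math.atan2(abs(cross), dot))


# Snout moving at constant speed along x, sampled at 10 fps (made-up data).
snout_speed = speed([0.0, 1.0, 2.0, 3.0], [0.0, 0.0, 0.0, 0.0], fps=10)
snout_accel = finite_difference(snout_speed, fps=10)  # second derivative of position
knee_angle = joint_angle((0.0, 1.0), (0.0, 0.0), (1.0, 0.0))  # hip, knee, ankle
```

Per-frame values such as these can then be assembled into the feature vectors passed to a behavioral classifier.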

Workflow Diagram:

The Scientist's Toolkit: Research Reagent Solutions

This section outlines the essential "research reagents"—the computational tools and datasets—required for modern motion tracking research in behavioral science.

Table 3: Essential Research Reagents for AI-Powered Motion Tracking

| Tool/Resource | Type | Function in Research | Example/Reference |
|---|---|---|---|
| Pre-trained Models | Software | Provides a foundation for transfer learning, reducing data and computational needs. | OpenPose (2D pose); DeepLabCut (pose estimation); YOLO (object detection) [1]. |
| Public Benchmark Datasets | Data | Standardized datasets for training, validating, and benchmarking algorithm performance. | MOT Challenge (human tracking); Animal pose datasets from academic labs [2]. |
| Frameworks for Multi-Object Tracking (MOT) | Software/Algorithm | Manages data association and identity preservation over time for multiple subjects. | MPMOT framework (GKF, ACM, GCM) [2]; FairMOT; DeepSORT. |
| Visualization & Analysis Suites | Software | Enables visualization of trajectories and extraction of quantitative behavioral metrics. | Computational tools for deriving velocity, acceleration, and interaction metrics from keypoints. |
| Community Model Hubs | Platform | Allows researchers to share, fine-tune, and monetize specialized behavioral models. | Reelmind's Model Hub for motion models [3]. |

The evolution from manual to markerless AI-driven motion tracking has fundamentally expanded the toolbox for behavioral researchers and drug development scientists. The advent of robust, multi-animal tracking and precise pose estimation enables the quantification of subtle behavioral phenotypes and motor patterns with unprecedented scale and objectivity. As these technologies continue to advance—particularly through motion-aware models and community-driven platforms—they promise to deliver even more powerful, accessible, and standardized biomarkers. This will accelerate the discovery of novel therapeutics and deepen our understanding of the brain and behavior.

Spatiotemporal data, which contains both spatial and temporal information, is fundamental to motion tracking and behavioral analysis research. This data is ubiquitous in video sequences, where the motion of objects or animals must be tracked across space and over time. The analysis of such data presents unique challenges, including occlusions, appearance changes, and complex non-linear motion patterns. Artificial Intelligence (AI), particularly deep learning architectures, has revolutionized the processing of spatiotemporal data. This document provides detailed application notes and experimental protocols for four core AI architectures—Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Long Short-Term Memory networks (LSTMs), and Transformers—within the context of behavioral analysis and motion tracking for drug development research. It is intended to guide researchers and scientists in selecting, implementing, and validating appropriate models for their studies.

Core Architectures for Spatiotemporal Data

Convolutional Neural Networks (CNNs): CNNs are specialized for processing grid-like spatial data, such as images. They use convolutional layers to detect hierarchical patterns (e.g., edges, shapes) and pooling layers to achieve spatial invariance [4] [5]. In motion tracking, CNNs serve as powerful backbone networks for feature extraction from individual video frames [6] [7].

Recurrent Neural Networks (RNNs): RNNs are designed for sequential data. They process inputs step-by-step while maintaining a hidden state that acts as a memory of previous information [4] [8]. This makes them suitable for modeling temporal dependencies in data streams.

Long Short-Term Memory Networks (LSTMs): LSTMs are a specialized variant of RNNs that address the vanishing gradient problem. They incorporate a gating mechanism (input, forget, and output gates) to regulate the flow of information, enabling them to capture long-range dependencies in temporal data more effectively than vanilla RNNs [4] [5].
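The gating mechanism can be made concrete with a single LSTM time step on scalar values. This is a didactic sketch of the standard LSTM equations, not code from any tracking framework; the function `lstm_step` and the weight layout (a dictionary of `(w_x, w_h, bias)` tuples) are assumptions made for readability.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, weights):
    """One LSTM time step for scalar input, hidden state, and cell state.

    weights maps each of "input", "forget", "output", "candidate"
    to a tuple (w_x, w_h, bias).
    """
    def pre_activation(name):
        w_x, w_h, bias = weights[name]
        return w_x * x + w_h * h_prev + bias

    i = sigmoid(pre_activation("input"))      # how much new content to write
    f = sigmoid(pre_activation("forget"))     # how much old memory to keep
    o = sigmoid(pre_activation("output"))     # how much memory to expose
    g = math.tanh(pre_activation("candidate"))  # proposed new content
    c = f * c_prev + i * g                    # updated cell state
    h = o * math.tanh(c)                      # updated hidden state
    return h, c


# With all-zero weights every gate sits at 0.5 and the candidate at 0,
# so the cell state is simply halved.
zero = {name: (0.0, 0.0, 0.0) for name in ("input", "forget", "output", "candidate")}
h_demo, c_demo = lstm_step(x=1.0, h_prev=0.0, c_prev=2.0, weights=zero)
```

The additive update `c = f * c_prev + i * g` is what lets gradients pass through many time steps without shrinking as quickly as in a vanilla RNN.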

Transformers: Originally developed for natural language processing, Transformers have gained prominence in computer vision. They utilize a self-attention mechanism to weigh the importance of all elements in a sequence when processing each element. This allows for global context modeling and parallel processing of sequences, overcoming the limitations of sequential processing in RNNs and LSTMs [4] [6].
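Self-attention can be illustrated in a few lines. The sketch below implements scaled dot-product attention over a short token sequence and, as a simplifying assumption, uses identity projections (queries, keys, and values are the tokens themselves), whereas a real Transformer layer learns separate projection matrices. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens.

    tokens: list of equal-length feature vectors (e.g., one per frame or patch).
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in tokens
        ]
        w = softmax(scores)
        outputs.append(
            [sum(w_j * value[t] for w_j, value in zip(w, tokens)) for t in range(d)]
        )
        weights.append(w)
    return outputs, weights


attn_out, attn_w = self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

Every token is compared with every other token in one pass, which is the source of both the global context and the quadratic memory cost noted for Transformers.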

Quantitative Architecture Comparison

The following table summarizes the key characteristics, strengths, and limitations of each architecture in the context of spatiotemporal data.

Table 1: Comparative Analysis of Core AI Architectures for Spatiotemporal Data

| Architecture | Primary Data Strength | Key Mechanism | Advantages | Limitations |
|---|---|---|---|---|
| CNN [4] [5] | Spatial (Images, frames) | Convolutional Filters, Pooling | Excellent at extracting spatial features and hierarchies; Highly efficient for image-based tasks. | Lacks inherent temporal modeling capability. |
| RNN [4] [8] | Temporal (Sequences) | Recurrent Hidden State | Can model sequentiality and short-term temporal dependencies. | Prone to vanishing/exploding gradients; Struggles with long-term dependencies. |
| LSTM [4] [5] | Temporal (Long Sequences) | Gated Memory Cell | Solves vanishing gradient problem; Effective at capturing long-term dependencies. | Computationally intensive; Complex to train. |
| Transformer [4] [6] | Spatiotemporal | Self-Attention Mechanism | Models global context and long-range dependencies; Enables parallel processing for faster training. | High computational and memory requirements; Requires large datasets. |

Application in Motion Tracking & Behavioral Analysis

CNNs for Spatial Feature Extraction

In the "tracking-by-detection" paradigm, the CNN is the workhorse for the detection stage. A CNN-based object detector (e.g., YOLOv8) processes individual video frames to identify and localize targets of interest [7]. The performance of the entire tracking pipeline heavily depends on the richness and discriminative power of the features extracted by the CNN backbone. Enhancements like the Coordinate Attention (CA) mechanism can be integrated into CNNs to help the model focus on more informative spatial regions, improving detection accuracy under challenging conditions like occlusion [7].

RNNs/LSTMs for Temporal Dynamics and Trajectory Prediction

RNNs and LSTMs are used to model the temporal consistency of object trajectories. By processing the sequence of a target's past positions (e.g., centroid coordinates from the detector), these networks can predict its future location, smooth its trajectory, and aid in data association across frames [9]. This is crucial for maintaining target identity during occlusions or complex motion.

Transformers for Global Spatiotemporal Context

Transformers have recently been applied to overcome the limitations of local modeling in CNNs and sequential processing in RNNs/LSTMs. Their self-attention mechanism can aggregate global contextual and spatio-temporal information [6]. For example:

- Feature Integration: The TFITrack model uses a transformer encoder-decoder architecture to integrate spatio-temporal information and global context, deepening the similarity between template and search region features for more robust tracking [6].

- Global Association: The Preformer MOT model leverages transformers for global trajectory prediction, which is particularly effective for handling non-linear motion and long-range associations that challenge traditional Kalman filter-based methods [9].

Table 2: Model Performance on Standard Multi-Object Tracking (MOT) Benchmarks

| Tracking Model | Core Architectural Innovations | MOT17 MOTA (%) | MOT17 IDF1 (%) | Key Application in Behavioral Analysis |
|---|---|---|---|---|
| TFITrack [6] | Transformer Feature Integration Encoder-Decoder | >80.5 (SOTA) | 79.3 | Robust tracking of tiny targets in aerial photography; resistant to fast motion and external interference. |
| Improved YOLOv8 + ByteTrack [7] | CNN (with CA & EfficientViT) + Two-stage association | 80.5 | 79.3 | High-precision pedestrian tracking; reduces ID switches in engineering safety scenarios. |

Experimental Protocols

Protocol 1: Implementing a Transformer-CNN Hybrid Tracker

Objective: To implement and evaluate a hybrid tracking model (e.g., inspired by TFITrack [6]) that combines a CNN for spatial feature extraction and a Transformer for spatiotemporal context integration.

Workflow:

- Input Preparation: Extract template (initial target) and search region patches from video sequences.

- Spatial Feature Extraction: Use a CNN backbone (e.g., ResNet, enhanced YOLOv8) to extract deep feature maps from both template and search regions [7].

- Spatiotemporal Encoding: Flatten and project the feature maps into sequences of tokens. Pass them through a Transformer encoder with a similarity calculation layer to model global dependencies and enhance feature discriminability [6].

- Context-Aware Decoding: A Transformer decoder integrates information from the template and search region tokens, using cross-attention to focus on relevant features.

- Target Localization: The refined search region tokens are used to predict the target's bounding box in the current frame.

- Temporal Filtering: A temporal context filtering layer adaptively ignores unimportant features to balance performance and model complexity [6].

Protocol 2: Behavioral Feature Extraction for Pharmacological Studies

Objective: To quantify behavioral phenotypes (e.g., locomotion, social interaction, anxiety-like behaviors) from tracked trajectory data for assessing drug efficacy or toxicity.

Workflow:

- Multi-Object Tracking: Implement a tracker from Protocol 1 to obtain trajectories (X, Y coordinates, frame) for each subject (e.g., mouse, zebrafish) in the assay.

- Trajectory Preprocessing: Smooth trajectories and calculate derived kinematics: velocity, acceleration, and heading angle.

- Spatiotemporal Feature Engineering: Extract the following features from the trajectories over defined time windows:

- Locomotion: Total distance traveled, average velocity, movement bout duration.

- Zone Preference: Time spent in, and entries into, predefined zones (e.g., center vs. periphery in an open field test).

- Social Behavior: Inter-individual distance, duration of proximity, and approach/avoidance dynamics.

- Temporal Modeling: Use an LSTM network to model the sequential nature of the behavioral data, capturing patterns and dependencies that simple summary statistics might miss [10].

- Analysis and Validation: Compare extracted features between treatment and control groups using statistical tests. Validate the model's ability to detect known drug effects against established manual scoring methods.
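The spatiotemporal features in this workflow reduce to simple computations on per-frame coordinates. The following sketch assumes each trajectory is a list of `(x, y)` positions sampled at a fixed frame rate and that zones are axis-aligned rectangles; the function names, the rectangle convention, and the sample values are illustrative assumptions.

```python
import math


def total_distance(track):
    """Path length of a trajectory given as a list of (x, y) points."""
    return sum(math.dist(p, q) for p, q in zip(track, track[1:]))


def zone_stats(track, zone, fps):
    """Time in zone (seconds) and number of entries into it.

    zone: (x_min, y_min, x_max, y_max). A track that starts inside
    the zone is counted as one entry.
    """
    frames_inside, entries, was_inside = 0, 0, False
    for x, y in track:
        inside = zone[0] <= x <= zone[2] and zone[1] <= y <= zone[3]
        if inside:
            frames_inside += 1
            if not was_inside:
                entries += 1
        was_inside = inside
    return frames_inside / fps, entries


def mean_inter_individual_distance(track_a, track_b):
    """Average frame-by-frame distance between two subjects."""
    distances = [math.dist(p, q) for p, q in zip(track_a, track_b)]
    return sum(distances) / len(distances)


mouse = [(0.0, 0.0), (3.0, 4.0), (3.0, 4.0), (10.0, 4.0)]
partner = [(0.0, 5.0), (3.0, 9.0), (3.0, 9.0), (10.0, 9.0)]
distance = total_distance(mouse)
center_time, center_entries = zone_stats(mouse, zone=(2.0, 2.0, 5.0, 5.0), fps=2)
social_gap = mean_inter_individual_distance(mouse, partner)
```

Computing these values per time window, rather than once per session, yields the feature sequences that a temporal model such as an LSTM can consume.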

The Scientist's Toolkit: Research Reagents & Materials

Table 3: Essential Computational Reagents for AI-based Motion Tracking

| Research Reagent / Tool | Function / Purpose | Exemplars / Notes |
|---|---|---|
| Object Detection Models | Identifies and localizes targets in individual video frames. | YOLOv8 [7], Faster R-CNN. Critical for the "detection" step in tracking-by-detection. |
| Backbone CNN Architectures | Extracts rich, hierarchical spatial features from raw pixels. | ResNet, EfficientViT [7], VGG. A powerful backbone is foundational to tracking accuracy. |
| Attention Mechanisms | Allows the model to dynamically focus on more informative spatial regions or features. | Coordinate Attention (CA) [7], Self-Attention in Transformers [6]. Improves robustness to occlusion. |
| Re-Identification (Re-ID) Models | Extracts appearance features to distinguish between different targets. | OSNet-CA [7]. Used for data association to maintain consistent identity across frames. |
| Public Benchmark Datasets | Standardized datasets for training and, most importantly, fair benchmarking of tracking algorithms. | MOT17, MOT20 [7], UAV123 [6]. Essential for validating model performance. |
| Deep Learning Frameworks | Provides the programming environment to build, train, and deploy deep learning models. | PyTorch, TensorFlow, JAX. |

Multi-Object Tracking (MOT) is a fundamental computer vision task with critical applications in behavioral analysis research, from quantifying social interactions in animal models to monitoring human movement patterns in clinical trials. The core challenge in MOT lies in accurately detecting objects in each video frame and maintaining their unique identities across time, despite complications such as occlusions, changing appearances, and detection errors [11]. The field has evolved into two dominant computational paradigms with distinct philosophical and methodological approaches: Tracking-by-Detection (TbD) and Detection-by-Tracking (DbT) [12].

For researchers investigating behavior—whether in zebrafish social interactions or human disease progression—the choice between these paradigms directly impacts the reliability, accuracy, and interpretability of the resulting quantitative data. This application note provides a structured comparison of these paradigms, detailed experimental protocols for implementation, and specific applications in behavioral research contexts to inform algorithm selection for scientific studies.

Core Conceptual Differences

The fundamental distinction between the two paradigms lies in their treatment of the detection and association processes:

- Tracking-by-Detection (TbD) employs a sequential, modular approach where objects are first detected in each frame independently, and these detections are then linked across frames to form continuous tracks [12]. This compartmentalized strategy separates object detection from temporal association.

- Detection-by-Tracking (DbT) utilizes an integrated, end-to-end approach that jointly learns both detection and tracking objectives [12]. This paradigm reuses features extracted from individual frames specifically to maximize tracking performance rather than merely detecting objects in isolated frames.

Technical Implementation Comparison

Table 1: Technical Comparison of Tracking Paradigms

| Characteristic | Tracking-by-Detection (TbD) | Detection-by-Tracking (DbT) |
|---|---|---|
| System Architecture | Modular; detection and association are separate steps [12] | Integrated; joint learning of detection and tracking [12] |
| Implementation Flexibility | High flexibility; easy to swap detectors or association algorithms [12] | Low flexibility; components cannot be easily swapped [12] |
| Learning Approach | Modules designed and potentially trained separately [12] | Learned cohesion with potential for improved performance [12] |
| Representative Algorithms | SORT, DeepSORT, ByteTrack, BoT-SORT [13] | SAMBA-MOTR, MOTR [12] |
| Typical Frame Rate | High (e.g., ByteTrack: 30 FPS) [12] | Moderate (e.g., SAMBA-MOTR: 16 FPS) [12] |
| Training Complexity | Lower; modules can be trained independently | Higher; requires end-to-end training on tracking datasets |
| Performance Strengths | Excellent with high-quality detectors, computational efficiency | Superior in complex motion patterns, occlusions [12] |

Performance Metrics for Behavioral Research

When applying these paradigms to behavioral analysis, researchers should select evaluation metrics aligned with their scientific objectives:

- HOTA (Higher Order Tracking Accuracy): Provides a balanced assessment of both detection and association performance using the formula $\mathrm{HOTA} = \sqrt{\mathrm{DetA} \times \mathrm{AssA}}$, where DetA measures detection accuracy and AssA measures association accuracy [12]. This metric is particularly valuable as it evaluates performance across multiple Intersection over Union (IoU) thresholds.

- IDF1 (Identification F1 Score): Quantifies identity preservation accuracy using the formula $\mathrm{IDF1} = \dfrac{2 \times \mathrm{IDTP}}{2 \times \mathrm{IDTP} + \mathrm{IDFP} + \mathrm{IDFN}}$, where IDTP represents correctly identified objects, IDFP false identifications, and IDFN missed identifications [12]. This metric is crucial for long-term behavioral studies where maintaining individual identity is essential.

- MOTA (Multiple Object Tracking Accuracy): Incorporates false positives, false negatives, and identity switches into a single metric [11]. While intuitive, MOTA can be dominated by detection performance in crowded scenes [12].

- AssA (Association Accuracy): Specifically evaluates how accurately a tracker maintains object identities across frames, focusing on temporal consistency [12]. This is particularly important in behavioral research where trajectory analysis is critical.
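The metric definitions above translate directly into code once the underlying counts are available. The sketch below is illustrative: it computes HOTA at a single localization threshold from given DetA and AssA values (the full metric averages over thresholds), and it uses the standard CLEAR-MOT definition MOTA = 1 - (FN + FP + IDSW) / GT. Function names and the example counts are made up; an established evaluation toolkit should be preferred for reported results.

```python
import math


def hota_at_threshold(det_a, ass_a):
    """HOTA at one IoU threshold: geometric mean of DetA and AssA."""
    return math.sqrt(det_a * ass_a)


def idf1(idtp, idfp, idfn):
    """Identification F1 from identity-level true/false positives and negatives."""
    return 2 * idtp / (2 * idtp + idfp + idfn)


def mota(false_negatives, false_positives, id_switches, num_gt):
    """MOTA: one minus the summed error counts over ground-truth objects."""
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt


# Hypothetical counts for a short validation sequence.
hota_value = hota_at_threshold(det_a=0.64, ass_a=0.81)
idf1_value = idf1(idtp=80, idfp=10, idfn=30)
mota_value = mota(false_negatives=10, false_positives=5, id_switches=5, num_gt=100)
```

Because MOTA sums all error types against the number of ground-truth objects, a handful of identity switches barely moves it, which is why IDF1 or AssA is the better choice when individual identity matters.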

Tracking-by-Detection: Methods and Protocols

Core Architecture and Workflow

The Tracking-by-Detection paradigm follows a sequential pipeline where the output of an object detection model serves as input to a data association algorithm. The fundamental workflow consists of object detection, motion prediction, and data association stages [13].

Key Algorithmic Implementations

SORT (Simple Online and Realtime Tracking)

SORT establishes the fundamental TbD framework with a minimalistic design. It employs a Kalman filter for motion prediction to estimate the next position of each track, and the Hungarian algorithm for data association based on Intersection over Union (IoU) between predicted and detected bounding boxes [13]. The state vector in SORT is represented as $[u, v, s, r, \dot{u}, \dot{v}, \dot{s}]$, where $u, v$ are center coordinates, $s$ is scale, $r$ is aspect ratio, and $\dot{u}, \dot{v}, \dot{s}$ are the velocities of $u$, $v$, and $s$ [13].
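The association step can be sketched as follows. To stay dependency-free, this example replaces the Hungarian algorithm with a brute-force search over all assignments, which gives the same optimal matching but is only practical for a handful of boxes. The function names, the `(x1, y1, x2, y2)` box format, and the `min_iou=0.3` cutoff are assumptions for illustration.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(predicted, detected, min_iou=0.3):
    """Match predicted track boxes to detections by maximizing total IoU.

    Brute-force stand-in for the Hungarian algorithm (small inputs only).
    Returns (track_index, detection_index) pairs with IoU >= min_iou.
    """
    n_p, n_d = len(predicted), len(detected)
    if n_p <= n_d:
        candidates = (list(enumerate(p)) for p in permutations(range(n_d), n_p))
    else:
        candidates = ([(i, j) for j, i in enumerate(p)]
                      for p in permutations(range(n_p), n_d))
    best, best_score = [], -1.0
    for pairs in candidates:
        score = sum(iou(predicted[i], detected[j]) for i, j in pairs)
        if score > best_score:
            best, best_score = pairs, score
    # Reject weak overlaps so unrelated boxes are never forced together.
    return [(i, j) for i, j in best if iou(predicted[i], detected[j]) >= min_iou]


predicted_boxes = [(0, 0, 10, 10), (20, 20, 30, 30)]
detected_boxes = [(21, 21, 31, 31), (1, 1, 11, 11)]
matches = associate(predicted_boxes, detected_boxes)
```

Tracks left unmatched keep coasting on their Kalman prediction, and unmatched detections become candidates for new tracks.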

DeepSORT

DeepSORT enhances SORT by incorporating appearance information through a deep association metric. This extension uses a CNN to extract appearance features from bounding boxes, enabling more robust tracking through occlusions [13]. Each track maintains a gallery of its most recent appearance descriptors, so that the cosine distance between a new detection and the stored descriptors can be used to improve association accuracy.
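The appearance term can be sketched as the smallest cosine distance between a new descriptor and any descriptor in a track's gallery. This is a minimal illustration rather than the DeepSORT code: the gallery size of 3, the two-dimensional descriptors, and the function names are all hypothetical (real descriptors come from a Re-ID network and have far more dimensions).

```python
import math
from collections import deque


def cosine_distance(a, b):
    """1 minus the cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def gallery_distance(gallery, descriptor):
    """Smallest cosine distance between a detection and a track's gallery."""
    return min(cosine_distance(stored, descriptor) for stored in gallery)


# A bounded gallery: appending beyond maxlen silently drops the oldest entry.
gallery = deque(maxlen=3)
gallery.append([1.0, 0.0])
gallery.append([0.0, 1.0])

same_animal = gallery_distance(gallery, [0.0, 2.0])    # points the same way as a stored view
other_animal = gallery_distance(gallery, [-1.0, -1.0])  # points away from both stored views
```

Taking the minimum over the gallery means a detection only needs to resemble one stored view of the animal, which helps when posture or orientation changes between frames.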

ByteTrack

ByteTrack introduces a novel approach to handling low-confidence detections by associating every detection box, not just high-confidence ones [12] [13]. The algorithm employs a two-stage association: first matching high-score detections to existing tracks, then matching low-score detections to remaining unmatched tracks. This simple but effective optimization significantly reduces identity switches and fragmentation in challenging tracking scenarios.
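The two-stage association can be sketched as below. This is a simplified illustration, not the official ByteTrack implementation: greedy IoU matching stands in for the Hungarian algorithm, and the thresholds (`high=0.6`, `low=0.1`, `min_iou=0.3`), function names, and data layout are assumptions.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(track_boxes, det_boxes, min_iou):
    """Greedily pair tracks and detections in order of decreasing IoU."""
    scored = sorted(
        ((iou(tb, db), tid, did)
         for tid, tb in track_boxes.items()
         for did, db in det_boxes.items()),
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, did in scored:
        if score < min_iou:
            break  # all remaining pairs overlap even less
        if tid in matches or did in used:
            continue
        matches[tid] = did
        used.add(did)
    return matches


def byte_associate(track_boxes, detections, high=0.6, low=0.1, min_iou=0.3):
    """Two-stage association: high-score detections first, then low-score ones.

    track_boxes: {track_id: predicted_box}; detections: list of (box, score).
    Returns {track_id: detection_index}.
    """
    high_dets = {i: box for i, (box, s) in enumerate(detections) if s >= high}
    low_dets = {i: box for i, (box, s) in enumerate(detections) if low <= s < high}
    first = greedy_match(track_boxes, high_dets, min_iou)
    leftover = {tid: box for tid, box in track_boxes.items() if tid not in first}
    second = greedy_match(leftover, low_dets, min_iou)
    return {**first, **second}


tracks = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
dets = [((1, 1, 11, 11), 0.9), ((21, 21, 31, 31), 0.3), ((50, 50, 60, 60), 0.05)]
two_stage = byte_associate(tracks, dets)
high_only = byte_associate(tracks, dets, low=0.6)  # disables the second stage
```

In the example, track 2 is recovered only because its partially occluded, low-score detection is given a second chance; with the second stage disabled it would be left unmatched.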

Experimental Protocol: Implementing ByteTrack for Behavioral Analysis

Purpose: To implement and evaluate the ByteTrack algorithm for multi-object tracking in behavioral research applications.

Materials and Equipment:

- Video recording system appropriate for experimental subjects (e.g., overhead cameras for animal studies)

- Computing hardware with GPU acceleration (minimum 8GB GPU memory)

- Python 3.8+ programming environment

- PyTorch and torchvision libraries

- ByteTrack implementation (official GitHub repository)

Procedure:

- Data Acquisition and Preparation:
  - Record video footage of subjects under standardized conditions
  - Convert videos to sequential frame images at consistent resolution
  - Annotate a subset of frames for validation purposes

- Detection Model Configuration:
  - Initialize YOLOX object detector with pre-trained weights
  - Fine-tune detection model on domain-specific data if necessary
  - Set confidence thresholds: high threshold (0.6), low threshold (0.1)

- Tracking Implementation:
  - Implement Kalman filter with state vector $[x, y, w, h, \dot{x}, \dot{y}, \dot{w}, \dot{h}]$
  - Configure two-stage association process:
    - Stage 1: Associate high-confidence detections using IoU similarity
    - Stage 2: Associate low-confidence detections with remaining tracks using IoU
  - Set track management parameters: track activation (2 consecutive matches), track deletion (30 frames without match)

- Validation and Analysis:
  - Calculate HOTA, MOTA, and IDF1 metrics on validation sequences
  - Export trajectory data for behavioral analysis
  - Visualize tracks for qualitative assessment
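The track management parameters in the procedure above (activation after 2 consecutive matches, deletion after 30 frames without a match) amount to a small per-track state machine. The sketch below is one possible reading of those rules; the `TrackState` class and `update_track` function are hypothetical names, not part of the ByteTrack codebase.

```python
from dataclasses import dataclass


@dataclass
class TrackState:
    hits: int = 0            # consecutive frames with a matched detection
    misses: int = 0          # consecutive frames without a match
    confirmed: bool = False  # True once the track has been activated
    deleted: bool = False    # True once the track has been dropped


def update_track(state, matched, activate_after=2, delete_after=30):
    """Advance one track by one frame, given whether it was matched."""
    if matched:
        state.hits += 1
        state.misses = 0
        if state.hits >= activate_after:
            state.confirmed = True
    else:
        state.hits = 0
        state.misses += 1
        if state.misses >= delete_after:
            state.deleted = True
    return state


# Two matched frames activate the track; thirty unmatched frames delete it.
track = TrackState()
for _ in range(2):
    update_track(track, matched=True)
confirmed_after_two = track.confirmed
for _ in range(29):
    update_track(track, matched=False)
alive_after_29 = not track.deleted
update_track(track, matched=False)
deleted_after_30 = track.deleted
```

Raising `delete_after` keeps identities alive through longer occlusions at the cost of more stale tracks, while raising `activate_after` suppresses spurious tracks from isolated false detections.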

Troubleshooting:

- For excessive identity switches: Adjust the IoU matching threshold and, if an appearance (Re-ID) model is used, the appearance feature weight

- For fragmented tracks: Modify track activation and deletion parameters

- For computational bottlenecks: Optimize detection model or reduce input resolution

Detection-by-Tracking: Methods and Protocols

Core Architecture and Workflow

Detection-by-Tracking represents a paradigm shift toward end-to-end learnable approaches that jointly model detection and tracking objectives. These methods typically employ sequence modeling techniques to directly output tracked objects across frames.

Key Algorithmic Implementations

SAMBA-MOTR

SAMBA-MOTR utilizes synchronized state space models (SSM) to track multiple objects with complex, interdependent motion patterns [12]. The approach synchronizes multiple SSMs to model coordinated movements commonly found in group behaviors, making it particularly suitable for social behavior analysis in animal studies or team sports analytics.

The method combines a transformer-based object detector with the Samba sequence processing model, leveraging the object detector's encoder to extract image features from individual frames. These features are concatenated with detection and track queries from previous frames to maintain object identities [12]. A key innovation is the MaskObs technique for handling uncertain observations during occlusions or challenging scenarios by masking uncertain queries while maintaining state updates through historical information.

Performance Characteristics

SAMBA-MOTR demonstrates significantly improved performance on complex motion datasets such as DanceTrack, achieving improvements of 3.8 HOTA and 5.2 AssA over competing methods [12]. The approach effectively models interdependencies between objects, enabling prediction of motion patterns based on group behavior, and its linear-time complexity makes it suitable for extended tracking scenarios.

Experimental Protocol: Implementing SAMBA-MOTR for Complex Behavioral Phenotyping

Purpose: To implement SAMBA-MOTR for analyzing complex group behaviors and social interactions in research models.

Materials and Equipment:

- Multi-camera recording system for comprehensive coverage

- High-performance computing cluster with multiple GPUs

- PyTorch with custom Mamba/SSM extensions

- Behavioral annotation software for validation

Procedure:

- Data Preparation:
  - Acquire multi-view video sequences of group interactions
  - Pre-process videos to consistent frame rate and resolution
  - Prepare training/validation splits with temporal continuity

- Model Configuration:
  - Initialize transformer-based feature extraction backbone
  - Configure state space models for trajectory modeling
  - Set track query initialization and propagation parameters
  - Implement MaskObs mechanism for occlusion handling

- Training Protocol:
  - Pre-training on large-scale tracking datasets (MOT17, DanceTrack)
  - Domain adaptation on target behavioral data
  - Joint optimization of detection and association losses
  - Validate using HOTA and AssA metrics

- Behavioral Analysis:
  - Extract trajectory data with maintained identities
  - Compute interaction metrics (proximity, orientation, velocity correlation)
  - Analyze group movement patterns and social dynamics

Troubleshooting:

- For training instability: Adjust learning rate schedules and gradient clipping

- For overfitting: Implement stronger data augmentation (temporal jittering, viewpoint simulation)

- For memory constraints: Reduce sequence length or model dimensionality

Application in Behavioral Analysis Research

Case Study: Wearable Motion Tracking for Disease Progression

A landmark study demonstrated the power of advanced tracking methodologies in clinical research, using wearable full-body motion tracking to predict disease trajectory in Duchenne muscular dystrophy (DMD) [14]. Researchers employed 17 wearable sensors to capture whole-body movement behavior during activities of daily living, establishing "ethomic fingerprints" that distinguished DMD patients from controls with high accuracy.

This approach combined elements of both tracking paradigms: precise detection of body segments (TbD) with holistic movement pattern analysis (DbT). The resulting behavioral biomarkers outperformed traditional clinical assessments in predicting disease progression, demonstrating the transformative potential of sophisticated tracking methodologies in biomedical research [14].

Implementation in Animal Behavior Research

In zebrafish behavioral research, deep learning-based object detection and tracking algorithms have enabled quantitative analysis of social behavior [15]. These implementations typically leverage YOLOv8-based object detection with region-based tracking metrics to quantify social preferences in controlled laboratory conditions.

The integration of tools like Ultralytics, OpenCV, and Roboflow enables reproducible workflows for detecting, tracking, and analyzing movement patterns in model organisms. This facilitates the computation of metrics such as zone preference, interaction frequency, and movement dynamics that are crucial for behavioral phenotyping.

The Scientist's Toolkit: Essential Research Reagents

Table 2: Essential Tools and Algorithms for Behavioral Tracking Research

| Tool Category | Specific Solutions | Research Application | Key Features |

|---|---|---|---|

| Tracking Algorithms | ByteTrack [12] [13] | General-purpose object tracking | High efficiency (30 FPS), simple but effective |

| Tracking Algorithms | SAMBA-MOTR [12] | Complex group behavior analysis | Models interdependent motion patterns |

| Detection Models | YOLOX [13] | Real-time object detection | High accuracy and speed balance |

| Detection Models | Transformer Detectors [12] | Complex scene understanding | Superior feature extraction capabilities |

| Evaluation Metrics | HOTA [12] | Comprehensive performance assessment | Balances detection and association accuracy |

| Evaluation Metrics | IDF1 [12] | Identity preservation evaluation | Measures long-term tracking consistency |

| Motion Sensors | Wearable Sensor Suits [14] | Clinical movement analysis | Full-body kinematic capture (60 Hz) |

| Software Frameworks | Ultralytics YOLO [15] | Rapid model development | User-friendly API, extensive documentation |

| Software Frameworks | OpenCV [15] | Computer vision operations | Comprehensive image/video processing |

| Annotation Tools | Roboflow [15] | Dataset preparation | Streamlined labeling and augmentation |

The choice between Tracking-by-Detection and Detection-by-Tracking paradigms depends critically on research objectives, computational resources, and behavioral context.

Tracking-by-Detection is recommended when:

- Research requires flexible, modular algorithm design

- Computational efficiency is prioritized for real-time analysis

- High-quality detection models are available for the target domain

- Research objectives focus on standardized behavioral assays

Detection-by-Tracking is preferable when:

- Research involves complex, interdependent motion patterns

- Maximum tracking accuracy is required despite computational costs

- Long-term identity preservation is critical for longitudinal studies

- Behavioral analysis involves group dynamics and social interactions

For behavioral researchers implementing these technologies, we recommend beginning with well-established TbD methods like ByteTrack for initial experiments, then progressing to more sophisticated DbT approaches like SAMBA-MOTR for complex behavioral phenotyping. Validation against manual annotations and correlation with biological outcomes should remain paramount when applying these computational paradigms to scientific research.

In preclinical research, particularly for evaluating drug efficacy and safety, the quantitative analysis of animal behavior is paramount. Traditional methods of behavioral scoring are often subjective, time-consuming, and prone to human error and variability [16]. Computer vision technologies offer a transformative solution by enabling automated, high-precision, and unbiased motion tracking and behavioral analysis [16]. This document details application notes and experimental protocols for three foundational computer vision techniques—Optical Flow, Feature Extraction, and Background Subtraction—within the context of AI-driven behavioral analysis for drug development. These methods allow researchers to extract robust quantitative metrics from video data, facilitating more reliable and reproducible pharmacological studies [17] [16].

Core Techniques & Comparative Analysis

Optical Flow: This technique estimates the motion of objects between consecutive video frames by calculating the displacement vector for each pixel. It is particularly useful for analyzing subtle and complex movement patterns, such as rodent gait or tremor responses to pharmaceutical compounds [17]. It models the apparent motion in the image plane caused by the relative movement between the animal and the camera.

Feature Extraction: This process involves identifying and describing distinctive keypoints (e.g., corners, edges) or regions within a video frame [18]. Techniques like edge detection are used to identify object boundaries, which can be crucial for segmenting different parts of an animal's body. The extracted features serve as anchors for tracking posture and articulation over time [18].

Background Subtraction: This is a fundamental method for segmenting moving objects, such as a rodent in an open field, from a static background. It works by creating a model of the background and then identifying foreground pixels that significantly deviate from this model [17]. This provides a binary mask of the animal's location and shape, which is often the first step in many behavioral analysis pipelines.

Quantitative Performance Comparison

The selection of an appropriate algorithm depends on the specific requirements of the experiment, including the need for accuracy, processing speed, and robustness to environmental factors. The following table summarizes a performance comparison of these methods based on a recent benchmark study [17].

Table 1: Performance Comparison of Computer Vision Techniques for Moving Object Detection

| Method | Response Time (seconds) | Accuracy (%) | Selectivity (%) | Specificity (%) |

|---|---|---|---|---|

| Discrete Wavelet Transform (DWT) | 0.27 | 95.34 | 95.96 | 94.68 |

| Optical Flow | Not reported | Not reported | Not reported | Not reported |

| Background Subtraction | Not reported | Not reported | Not reported | Not reported |

Note: The study [17] identified DWT as the optimal method among those tested. Quantitative values for Optical Flow and Background Subtraction from this benchmark are not reported here, so a direct comparison requires empirical validation in the specific experimental setup.

Experimental Protocols

Protocol: Background Subtraction for Open Field Locomotion Analysis

This protocol is designed to quantify general locomotor activity and zone occupancy in rodent models, commonly used to assess drug-induced sedation or stimulation.

1. Equipment and Software Setup

- Camera: Fixed-mount CCD or CMOS camera with resolution ≥ 1080p.

- Enclosure: Standard open field arena (e.g., 40cm x 40cm x 40cm) with consistent, non-reflective interior.

- Lighting: Diffuse, constant illumination to minimize shadows and flicker.

- Computer: System with adequate processing power for real-time video analysis.

- Software: Python (with OpenCV library) or MATLAB.

2. Video Acquisition

- Acclimate the animal to the testing room for at least 60 minutes prior to recording.

- Place the rodent in the center of the open field arena.

- Record a video of the experimental session (e.g., 10-30 minutes). Ensure the camera is static and its settings (focus, white balance) are fixed throughout all experiments.

- Critical Step: Record an initial segment (e.g., 1-2 minutes) of the empty arena under identical lighting conditions to serve as the background model.

3. Algorithm Implementation (Using OpenCV/Python)

- Preprocessing: Convert video frames to grayscale to reduce computational load.

- Background Model Initialization: Use the `cv2.createBackgroundSubtractorMOG2()` function, which is robust to gradual lighting changes and shadows.

- Foreground Mask Generation: For each frame of the experimental video, apply the background subtractor to obtain a binary mask where the white pixels represent the foreground (the animal).

- Noise Reduction: Apply morphological operations (e.g., `cv2.morphologyEx` with an elliptical kernel) to remove small noise points and fill gaps in the foreground mask.

- Object Tracking: Calculate the centroid of the largest contour found in the foreground mask (`cv2.findContours`). Track the (x, y) coordinates of this centroid across all frames.

4. Data Extraction and Analysis

- Total Distance Travelled: Calculate the cumulative pixel distance moved by the centroid between frames. Convert pixels to centimeters using a known reference object in the arena.

- Velocity: Compute the instantaneous speed (distance per frame) and average speed over the session.

- Zone Occupancy: Define virtual zones (e.g., center, corners) in the arena. Calculate the percentage of time the centroid spends in each zone, a common metric for anxiety-like behavior.
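As a rough sketch of the metrics listed above, the function below turns a centroid track into distance, speed, and center-zone occupancy. It assumes a centroid is available for every frame, that pixel coordinates are measured from one corner of a square arena, and that the center zone is a centered square; `locomotion_metrics`, `cm_per_px`, and `center_fraction` are hypothetical names introduced for this example.

```python
import numpy as np

def locomotion_metrics(centroids_px, fps, cm_per_px, arena_cm=40.0, center_fraction=0.5):
    """Summarise a centroid track (one (x, y) pixel position per frame)."""
    # Convert pixels to centimeters using the calibration factor.
    xy_cm = np.asarray(centroids_px, dtype=float) * cm_per_px

    # Frame-to-frame displacement gives distance travelled and instantaneous speed.
    step_cm = np.linalg.norm(np.diff(xy_cm, axis=0), axis=1)
    speed_cm_s = step_cm * fps

    # Center zone: a centered square whose side is center_fraction of the arena side.
    margin = arena_cm * (1.0 - center_fraction) / 2.0
    in_center = np.all((xy_cm >= margin) & (xy_cm <= arena_cm - margin), axis=1)

    return {
        "total_distance_cm": float(step_cm.sum()),
        "mean_speed_cm_s": float(speed_cm_s.mean()),
        "peak_speed_cm_s": float(speed_cm_s.max()),
        "center_time_pct": float(100.0 * in_center.mean()),
    }
```

Frames without a detected animal would need to be interpolated or dropped before calling this function, since a gap would otherwise appear as a single large jump in distance.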

Protocol: Optical Flow for Detailed Kinematic/Gait Analysis

This protocol is used for fine-grained analysis of movement dynamics, such as quantifying gait irregularities, tremor frequency, or specific drug-induced behavioral signatures [16].

1. Equipment and Software Setup

- Camera: High-speed camera (≥ 100 fps) to capture rapid movements.

- Setup: A clear, flat walking surface (e.g., a narrow runway or open field).

- Lighting: High-contrast, shadow-free lighting. For high accuracy, place markers on key anatomical points (e.g., joints) if markerless feature detection is unreliable.

- Software: Python with OpenCV and SciPy, or specialized commercial tracking software.

2. Video Acquisition

- Record the animal from a lateral or top-down view, ensuring the entire body remains in the frame.

- For gait analysis, ensure the animal traverses a defined path. For generalized movement analysis (e.g., tremor), record in an open field.

- Maintain a fixed camera position and consistent, high-contrast lighting.

3. Algorithm Implementation (Dense Optical Flow using Farneback method in OpenCV)

- Preprocessing: Convert consecutive frames to grayscale.

- Flow Calculation: Use `cv2.calcOpticalFlowFarneback()` to compute a dense flow field. This function returns a vector for each pixel representing its movement from the previous frame.

- Vector Processing: The output is a two-channel array (flow in x and y directions). Calculate the magnitude and angle of each vector: `magnitude, angle = cv2.cartToPolar(flow_x, flow_y)`

- Thresholding: Apply a magnitude threshold to filter out noise and focus on significant movement.

4. Data Extraction and Analysis

- Overall Activity: Sum the magnitudes of all vectors above threshold to get a global "movement energy" metric for each frame.

- Tremor/Periodic Motion: Perform a Fourier Transform (FFT) on the movement energy time-series to identify dominant frequencies associated with tremors.

- Limb Movement Kinematics: By defining a Region of Interest (ROI) around a specific limb, the average flow vectors within that ROI can be used to calculate limb velocity and movement patterns.
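For the tremor analysis step, a dominant frequency can be read from the spectrum of the movement-energy time series. The sketch below is illustrative only: the function name `dominant_frequency` and the 2-20 Hz search band are assumptions, and the appropriate band depends on the species and tremor type under study.

```python
import numpy as np

def dominant_frequency(energy, fps, band=(2.0, 20.0)):
    """Return the frequency (Hz) with the highest spectral power inside the search band."""
    energy = np.asarray(energy, dtype=float)
    # Remove the mean so the zero-frequency component does not dominate the spectrum.
    energy = energy - energy.mean()
    # A Hann window reduces spectral leakage from the finite recording length.
    windowed = energy * np.hanning(len(energy))
    power = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(energy), d=1.0 / fps)
    # Ignore frequencies outside the band of interest.
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    peak = int(np.argmax(np.where(in_band, power, 0.0)))
    return float(freqs[peak])
```

The frequency resolution is `fps / len(energy)`, so a 10-second recording at 100 fps resolves frequencies to 0.1 Hz, and only frequencies below half the frame rate can be recovered.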

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools for Vision-Based Behavioral Analysis

| Tool / Solution | Function / Application | Example Uses in Behavioral Research |

|---|---|---|

| Convolutional Neural Networks (CNNs) [18] | Deep learning models for image analysis and classification. | Automated scoring of complex behaviors (e.g., rearing, grooming, social interaction) from raw video pixels [16]. |

| You Only Look Once (YOLO) [18] | Real-time object detection algorithm. | Fast and accurate multi-animal tracking and identification in a home cage or social interaction test. |

| OpenCV | Open-source library for computer vision and machine learning. | Provides the foundational functions for implementing all protocols described in this document (background subtraction, optical flow, feature extraction). |

| DeepEthogram [16] | Machine learning pipeline for supervised behavior classification. | Training a model to classify behavioral states (e.g., sleeping, eating, walking) based on user-labeled video data. |

| Discrete Wavelet Transform (DWT) [17] | Mathematical tool for multi-resolution analysis of signals and images. | Effective for moving object detection and analysis, showing high accuracy and fast response times in cluttered environments [17]. |

Implementing AI Algorithms: From Preclinical Models to Clinical Trial Analytics

Motion tracking algorithms have become indispensable tools in behavioral analysis research, enabling researchers to quantitatively analyze complex biological phenomena. Within the framework of artificial intelligence (AI)-driven research, selecting the appropriate tracking algorithm is crucial for generating reliable, reproducible data. This document provides detailed application notes and experimental protocols for three advanced tracking algorithms—SambaMOTR, ByteTrack, and DeepSORT—each representing distinct architectural paradigms for solving the multi-object tracking (MOT) problem. These algorithms differ fundamentally in their approach to data association, motion modeling, and handling of complex scenarios such as occlusions and erratic motion patterns commonly encountered in behavioral studies. The performance characteristics, implementation requirements, and optimal application domains for each algorithm are systematically evaluated to guide researchers in selecting the most appropriate tool for specific experimental conditions in pharmaceutical development and basic research.

Algorithm Comparative Analysis

Core Architectural Paradigms

Tracking-by-Detection (ByteTrack, DeepSORT): These methods separate the detection and association steps, using independent models for object detection in each frame followed by association across frames. This modular approach allows component swapping but may lack integrated optimization [12]. ByteTrack exemplifies this with its simple yet effective association strategy, while DeepSORT incorporates appearance features for improved identity preservation [19] [20].

Tracking-by-Propagation (SambaMOTR): This end-to-end approach jointly models detection and tracking through sequence propagation, reusing features across frames to maximize tracking performance. While potentially offering superior performance, these integrated architectures are less flexible and more complex to train [12] [21].

Quantitative Performance Comparison

Table 1: Performance Metrics Across Benchmark Datasets

| Algorithm | MOT17 (MOTA↑) | DanceTrack (HOTA↑) | MOT17 (IDF1↑) | Inference Speed (FPS) | Primary Strength |

|---|---|---|---|---|---|

| SambaMOTR | - | 69.2 [12] | - | 16 [12] | Complex motion patterns |

| ByteTrack | 80.3 [22] | 61.3 [12] | 77.3 [22] | 30-120 [12] [22] | High-speed tracking |

| DeepSORT | ~50.7* [20] | - | - | ~20* [20] | Occlusion handling |

Note: DeepSORT performance varies significantly with detector choice; values shown are from improved YOLOv5s-DeepSORT implementation [20].
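For readers less familiar with the metrics in Table 1, MOTA and IDF1 follow standard definitions: MOTA penalizes false negatives, false positives, and identity switches relative to the number of ground-truth objects, while IDF1 is the F1 score over identity-consistent matches. The helper names below are hypothetical, and the counts would come from an evaluation toolkit rather than being computed here.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """MOTA = 1 - (FN + FP + IDSW) / GT, with counts summed over all frames."""
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt

def idf1(idtp, idfp, idfn):
    """IDF1 = 2 * IDTP / (2 * IDTP + IDFP + IDFN)."""
    return 2.0 * idtp / (2.0 * idtp + idfp + idfn)
```

MOTA can be negative when the number of errors exceeds the number of ground-truth objects, which is why it is usually read together with an identity-focused metric such as IDF1.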

Table 2: Scenario-Based Algorithm Selection Guide

| Experimental Condition | Recommended Algorithm | Rationale |

|---|---|---|

| High-throughput screening | ByteTrack | Superior speed with maintained accuracy [22] |

| Complex social interactions | SambaMOTR | Superior group behavior modeling [12] [21] |

| Occlusion-prone environments | DeepSORT | Robust re-identification capabilities [20] |

| Small object tracking | ByteTrack with MR2 adaptation | Multi-resolution rescoring for small objects [22] |

| Long-term identity preservation | DeepSORT | Appearance feature integration reduces ID switches [20] |

| Nonlinear motion patterns | SambaMOTR | State space models capture complex trajectories [21] |

| Resource-constrained environments | ByteTrack | Efficient cascaded association strategy [22] |

Technical Specifications and Implementation Requirements

Table 3: Computational Requirements and Implementation Dependencies

| Algorithm | Base Detector | Feature Extractor | Motion Model | Association Method | Primary Dependencies |

|---|---|---|---|---|---|

| SambaMOTR | DAB-D-DETR [21] | Integrated encoder | State space models | Set-of-sequences modeling | PyTorch, Deformable Attention CUDA ops [21] |

| ByteTrack | YOLOX/YOLOv8 [22] [23] | Not applicable | Kalman filter (linear) | Two-stage Hungarian + IoU | Python, lap library [23] |

| DeepSORT | YOLOv5/v7/v8 [20] [24] | ShuffleNetV2/CNN | Kalman filter (linear/UKF) | Cascade matching + appearance | PyTorch, TensorFlow |

Experimental Protocols

General Workflow for Behavioral Tracking Experiments

Experimental Workflow for Behavioral Tracking

Protocol 1: SambaMOTR Implementation for Complex Group Behaviors

Purpose: To track multiple interacting subjects with complex, interdependent motion patterns (e.g., social interaction assays, maternal behavior studies).

Materials:

- High-resolution camera system (≥30 FPS)

- GPU workstation (NVIDIA GPU with ≥8GB VRAM recommended)

- SambaMOTR codebase [21]

- Behavioral recording environment

Procedure:

Data Preparation:

- Acquire video recordings at 30 FPS minimum

- Format datasets according to DanceTrack folder structure

- Generate sequence map files using provided scripts [21]

Model Configuration:

- Initialize with pre-trained DAB-D-DETR weights

- Configure Samba state space models with synchronized parameters

- Set MaskObs parameters for occlusion handling: uncertainty threshold = 0.5

Training Protocol (if fine-tuning):

- Use distributed training on 8 GPUs: `python -m torch.distributed.run --nproc_per_node=8 main.py`

- Apply gradient checkpointing with the `--use-checkpoint` flag for memory optimization

- Set batch size = 1 with longer training schedule as per configuration files [21]

Inference Execution:

- Run evaluation on validation set: `python main.py --mode eval --eval-data-split val`

- For test set submission: `python -m torch.distributed.run --nproc_per_node=8 main.py --mode submit --submit-data-split test`

Validation:

- Assess HOTA metrics on validation sequences

- Manually verify complex interaction tracking accuracy

- Calculate identity preservation rate in occlusion scenarios

Protocol 2: ByteTrack Implementation for High-Throughput Screening

Purpose: To achieve real-time tracking of multiple subjects in high-throughput applications (e.g., locomotor activity, multi-well plate assessments).

Materials:

- High-speed camera system (≥60 FPS capable)

- GPU-enabled processing system

- ByteTrack implementation [22]

- YOLOX or YOLOv8 detector

Procedure:

Detection Configuration:

- Select appropriate detector (YOLOX for accuracy, YOLOv8 for speed)

- Configure detection threshold: τ = 0.5 (initial value)

- Calibrate detection resolution based on object size

Association Parameterization:

- Initialize Kalman filter with linear motion model

- Set two-stage matching thresholds:

- First stage (high confidence): detection scores ≥ τ

- Second stage (low confidence): detection scores < τ [22]

- Configure IoU as the similarity metric between Kalman-predicted track boxes and new detections

Optimization:

- Implement adaptive thresholding if detection quality varies

- For resource-constrained environments, apply MR2-ByteTrack multi-resolution rescoring [22]

- Validate tracking continuity using MOTA and IDF1 metrics

Execution:

- Process video sequences with the `bytetrack.py` implementation

- For real-time applications, use `-m yolox_s` for lighter model [23]

- Monitor identity switches, particularly during occlusion events
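The two-stage matching described under Association Parameterization can be illustrated with a simplified sketch. It is not the ByteTrack reference code: SciPy's `linear_sum_assignment` stands in for the Hungarian solver, the track boxes are assumed to be Kalman-predicted positions supplied by the caller, and the lower score cutoff `low=0.1` and `min_iou=0.3` are illustrative assumptions.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def iou_matrix(boxes_a, boxes_b):
    """Pairwise IoU for boxes given as (x1, y1, x2, y2)."""
    a = np.asarray(boxes_a, dtype=float).reshape(-1, 4)
    b = np.asarray(boxes_b, dtype=float).reshape(-1, 4)
    x1 = np.maximum(a[:, None, 0], b[None, :, 0])
    y1 = np.maximum(a[:, None, 1], b[None, :, 1])
    x2 = np.minimum(a[:, None, 2], b[None, :, 2])
    y2 = np.minimum(a[:, None, 3], b[None, :, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    union = area_a[:, None] + area_b[None, :] - inter
    return inter / np.maximum(union, 1e-9)

def match(track_boxes, det_boxes, min_iou):
    """Optimal one-to-one assignment on IoU, keeping pairs above min_iou."""
    if len(track_boxes) == 0 or len(det_boxes) == 0:
        return []
    iou = iou_matrix(track_boxes, det_boxes)
    rows, cols = linear_sum_assignment(-iou)  # negate to maximise total IoU
    return [(int(r), int(c)) for r, c in zip(rows, cols) if iou[r, c] >= min_iou]

def two_stage_associate(track_boxes, det_boxes, det_scores, tau=0.5, low=0.1, min_iou=0.3):
    """Return {track_index: detection_index} after high- then low-confidence matching."""
    track_boxes = np.asarray(track_boxes, dtype=float).reshape(-1, 4)
    det_boxes = np.asarray(det_boxes, dtype=float).reshape(-1, 4)
    det_scores = np.asarray(det_scores, dtype=float)
    high_idx = np.flatnonzero(det_scores >= tau)
    low_idx = np.flatnonzero((det_scores < tau) & (det_scores >= low))

    # Stage 1: all tracks against high-confidence detections.
    matches = {t: int(high_idx[d]) for t, d in match(track_boxes, det_boxes[high_idx], min_iou)}

    # Stage 2: still-unmatched tracks against low-confidence detections.
    remaining = np.array([t for t in range(len(track_boxes)) if t not in matches], dtype=int)
    for t, d in match(track_boxes[remaining], det_boxes[low_idx], min_iou):
        matches[int(remaining[t])] = int(low_idx[d])
    return matches
```

The second stage is what lets a partially occluded subject keep its identity: its detection score drops below τ, but the box still overlaps the predicted track position, so the track is continued rather than terminated.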

Protocol 3: DeepSORT Implementation for Occlusion-Prone Environments

Purpose: To maintain consistent identity tracking through partial and complete occlusions (e.g., burrowing behaviors, complex maze navigation).

Materials:

- Standard definition camera (≥720p)

- GPU or CPU-only system (CPU implementation possible with reduced performance)

- DeepSORT implementation with improved YOLOv5s [20]

- Appearance feature dataset (if available)

Procedure:

Detector Enhancement:

- Implement improved YOLOv5s with:

- Focal-EIoU loss function replacement for CIoU

- Additional 160×160 pixel small object detection layer

- Multi-Head Self-Attention mechanism in backbone [20]

- Train on domain-specific data if available

Feature Extractor Optimization:

- Replace standard feature extractor with ShuffleNetV2 for efficiency

- Retrain appearance feature extraction model on target domain

- Balance re-identification frequency with computational load

Motion Model Refinement:

- Implement Unscented Kalman Filter (UKF) for nonlinear motion prediction [24]

- Incorporate adaptive factor for observation noise adjustment

- Configure covariance matrices for domain-specific motion patterns

Validation:

- Quantify identity switches per minute of video

- Measure tracking accuracy during occlusion events

- Compute MOTA and MOTP metrics against manual annotations [20]
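Table 3 lists a linear Kalman filter as the baseline motion model for both ByteTrack and DeepSORT. As a point of reference for the motion model refinement step, the sketch below shows a constant-velocity filter on centroid positions. It is the linear baseline, not the Unscented Kalman Filter variant from [24], and the class name and noise values (`process_var`, `meas_var`, initial covariance) are assumptions chosen for illustration.

```python
import numpy as np

class ConstantVelocityKalman:
    """Linear Kalman filter with state [x, y, vx, vy] and position-only measurements."""

    def __init__(self, x, y, dt=1.0, process_var=1e-2, meas_var=1.0):
        self.state = np.array([x, y, 0.0, 0.0], dtype=float)
        self.P = np.eye(4) * 10.0                      # initial state uncertainty
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)  # constant-velocity transition
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)  # only position is observed
        self.Q = np.eye(4) * process_var               # process noise covariance
        self.R = np.eye(2) * meas_var                  # observation noise covariance

    def predict(self):
        """Propagate the state one frame ahead and return the predicted (x, y)."""
        self.state = self.F @ self.state
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.state[:2].copy()

    def update(self, measurement):
        """Correct the prediction with an observed (x, y) position."""
        z = np.asarray(measurement, dtype=float)
        innovation = z - self.H @ self.state
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.state = self.state + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
```

During an occlusion, `predict` is called without `update`, so the track coasts along its last estimated velocity. This works for short gaps and roughly straight motion, which is the limitation that motivates the nonlinear refinements described in the protocol.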

The Scientist's Toolkit

Research Reagent Solutions

Table 4: Essential Software and Hardware Components for Tracking Experiments

| Component | Specification | Function | Example Implementation |

|---|---|---|---|

| Base Detector | YOLOX, YOLOv5/v8, DAB-D-DETR | Object identification in individual frames | YOLOX-L for ByteTrack [22] |

| Feature Extractor | ShuffleNetV2, CNN networks | Appearance feature representation for re-identification | ShuffleNetV2 in DeepSORT [20] |

| Motion Predictor | Kalman Filter, State Space Models | Future position estimation based on motion history | State space models in SambaMOTR [21] |

| Association Module | Hungarian algorithm, Sequence modeling | Data association across frames | Two-stage matching in ByteTrack [22] |

| Evaluation Framework | PyTorch, TensorFlow | Model training and validation | PyTorch for SambaMOTR [21] |

Algorithm Selection Framework

Algorithm Selection Decision Tree

The selection of an appropriate tracking algorithm represents a critical methodological decision in behavioral analysis research, directly impacting data quality and experimental conclusions. SambaMOTR, ByteTrack, and DeepSORT offer complementary strengths for different experimental scenarios: SambaMOTR excels in modeling complex, interdependent motions found in social behaviors; ByteTrack provides unparalleled efficiency for high-throughput applications; while DeepSORT offers robust performance in occlusion-prone environments. Researchers should carefully consider their specific experimental conditions—including subject density, motion complexity, occlusion frequency, and computational resources—when selecting and implementing these algorithms. The protocols provided herein establish standardized methodologies for implementing these advanced tracking systems, promoting reproducibility and rigorous comparison across behavioral studies in pharmaceutical development and basic research.

The objective quantification of behavior is a cornerstone of modern neuroscience, pharmacology, and genetics research. Behavioral phenotypes—the observable and measurable manifestations of an organism's underlying genetic, neural, and pharmacological state—provide critical endpoints for diagnosing disease, evaluating therapeutic efficacy, and understanding fundamental biological processes. Historically, behavioral analysis relied on subjective clinical scores or low-throughput manual observation, limiting its scalability and objectivity. The convergence of motion tracking technologies and sophisticated artificial intelligence (AI) algorithms has ushered in a new era of computational phenotyping. This paradigm shift enables the precise, high-dimensional, and high-throughput quantification of behavior in both human and animal models, transforming it into a robust and data-rich scientific discipline. This article presents a series of detailed application notes and protocols focused on three core behavioral domains: gait analysis, activity bursts, and social interactions, framed within the context of a broader thesis on AI-driven behavioral analysis.

Application Note 1: Automated Human Gait Analysis Using 2D Video and Pose Estimation

Gait is a complex motor behavior that is a sensitive biomarker for a wide range of neurological and musculoskeletal conditions, from Parkinson's disease and stroke to osteoarthritis. Traditional 3D motion capture (e.g., Vicon systems), while considered a gold standard, is expensive, requires a laboratory setting, and often involves placing markers on the subject, which is cumbersome and can alter natural movement [25] [26].

Protocol: 2D Video-Based Gait Analysis with OpenPose

- Objective: To provide a validated, accessible, and markerless method for quantifying spatiotemporal and kinematic gait parameters from simple 2D video recordings.

- Experimental Setup:

- Participants: Recruit participants based on study criteria. For validation studies, a sample size of at least 20 participants is recommended [25].

- Equipment: A standard digital video camera (e.g., a smartphone camera) with a known frame rate (≥25 Hz is suitable). For sagittal plane analysis, a tripod is essential.

- Environment: A well-lit, flat walking area (e.g., a hallway). A known distance (e.g., 6.30 m) must be marked on the floor for spatial calibration [26].

- Procedure:

- Position the camera laterally (sagittal plane) to the walking path, ensuring the entire gait cycle is within the field of view.

- Record participants walking at a self-selected comfortable pace over the marked distance. Multiple trials (e.g., at least 10) are recommended.

- For validation against 3D motion capture, systems like Vicon with reflective markers are used simultaneously [25] [26].

- Data Processing Workflow:

- Pose Estimation: Process video frames using an open-source pose estimation algorithm such as OpenPose [25] [26]. This software automatically detects and tracks key body landmarks (keypoints) like ankles, knees, hips, and heels.

- Data Extraction: Export the 2D coordinates of the keypoints across all video frames.

- Gait Event Detection: Use algorithms to identify heel-strike (initial contact) and toe-off (foot lift) events from the keypoint trajectories.

- Parameter Calculation: Calculate spatiotemporal and kinematic parameters for each gait cycle (see Table 1).
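The gait event detection and parameter calculation steps can be sketched from exported keypoint coordinates. The event rule used here (heel strike approximated as the moment the ankle is furthest ahead of the hip) is a common coordinate-based heuristic and is an assumption of this example, not necessarily the rule used in [25] or [26]. The sketch also assumes the participant walks in the positive x direction; the function names are hypothetical.

```python
import numpy as np
from scipy.signal import find_peaks

def joint_angle(a, b, c):
    """Angle in degrees at point b formed by segments b-a and b-c (e.g., hip-knee-ankle)."""
    a, b, c = (np.asarray(p, dtype=float) for p in (a, b, c))
    ba, bc = a - b, c - b
    cos_angle = np.dot(ba, bc) / (np.linalg.norm(ba) * np.linalg.norm(bc))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))

def stride_times(ankle_x, hip_x, fps, min_stride_s=0.3):
    """Approximate heel strikes of one foot and return (stride durations in s, event frames)."""
    # Forward position of the ankle relative to the hip removes the overall walking motion.
    forward = np.asarray(ankle_x, dtype=float) - np.asarray(hip_x, dtype=float)
    # Peaks closer together than min_stride_s are treated as noise.
    peaks, _ = find_peaks(forward, distance=max(1, int(min_stride_s * fps)))
    return np.diff(peaks) / fps, peaks
```

Because the events come from a single ankle, consecutive peaks mark strides of that foot. Step time would be obtained by interleaving the events of the left and right ankles, and spatial parameters require scaling pixel distances by the marked floor distance.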

Quantitative Validation Data

The following table summarizes the performance of the OpenPose-based 2D video analysis method compared to the gold-standard 3D motion capture system [25] [26].

Table 1: Comparison of Gait Parameters from 3D Motion Capture (MC) and 2D OpenPose Analysis

| Gait Parameter Category | Specific Parameter | Mean Absolute Error (OpenPose vs. MC) | Inter-Method Correlation (ICC or other) | Notes |

|---|---|---|---|---|

| Temporal Parameters | Step Time | 0.02 s | High (ICC > 0.769) [25] | Accuracy improves when using mean participant values [26]. |

| Temporal Parameters | Stance Time | 0.02 s | High [25] | - |

| Temporal Parameters | Swing Time | 0.02 s | High [25] | - |

| Spatial Parameters | Step Length | 0.049 m (stride-by-stride); 0.018 m (participant mean) | High [25] | Sensitive to camera angle and participant position [26]. |

| Spatial Parameters | Gait Speed | < 0.10 m/s difference | High [25] | - |

| Joint Kinematics (Sagittal Plane) | Hip Angle | 4.0° | Moderate to Excellent [25] | - |

| Joint Kinematics (Sagittal Plane) | Knee Angle | 5.6° | Lower than temporal parameters [25] | - |

| Joint Kinematics (Sagittal Plane) | Ankle Angle | 7.4° | Lower, especially for hip angles [25] | - |

Workflow Diagram

Application Note 2: Quantifying Social Interaction Patterns with Sociometers

Understanding social behavior is critical in neuroscience, psychology, and drug development for conditions like autism and social anxiety. Self-reported or observer-coded data can be subjective and difficult to scale. Electronic sensors known as "sociometers" provide an objective, high-resolution method for quantifying social dynamics in naturalistic settings [27].

Protocol: Quantifying Group Social Interactions Using Wearable Sociometers

- Objective: To objectively measure patterns of social proximity and speech (talkativeness) in a group of co-located individuals.

- Experimental Setup:

- Participants: A group of intermediate size (e.g., 50-80 participants) that allows for the formation of multiple small groups [27].

- Equipment: Sociometers—wearable badges containing a radio transmitter to gauge physical proximity (~3m range) and a microphone to detect speech. The devices do not store raw audio but compute audio features to infer speaking time.

- Context: Studies can be designed in collaborative (e.g., a group project) or non-collaborative (e.g., a lunch break) settings to investigate context-dependent behavior [27].

- Procedure:

- Provide each participant with a pre-configured sociometer to wear throughout the observation period (e.g., 12 hours).

- Participants engage in their normal activities within the defined context.

- Data is continuously collected on proximity and speech.

- Data Analysis:

- Data Segmentation: Divide the data into time windows (e.g., 5-minute segments).

- Network Construction: For each time window, construct a proximity network where individuals are linked if they were proximate for the entire window.

- Tie Strength: Calculate the duration of interactions to distinguish between brief encounters and sustained social interaction.

- Statistical Analysis: Analyze data for patterns such as gender homophily (preference for same-gender interaction) and context-dependent talkativeness. In one study, women were significantly more talkative and more likely to be proximate to other women in a collaborative context, but not in a non-collaborative setting [27].
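The segmentation, network construction, and tie strength steps can be sketched with plain Python data structures. The input format is an assumption made for this example: each detection is a `(tick, badge_a, badge_b)` tuple, where `tick` is the index of a regular proximity sampling interval. The function names are hypothetical and do not correspond to any sociometer vendor API.

```python
from collections import Counter, defaultdict

def proximity_networks(detections, ticks_per_window):
    """Map each time window to the set of pairs proximate for the entire window."""
    # Normalise pair order and drop duplicate reports of the same pair at the same tick.
    unique = {(tick, tuple(sorted((a, b)))) for tick, a, b in detections}

    # Count, per window, the number of ticks in which each pair was detected.
    counts = defaultdict(Counter)
    for tick, pair in unique:
        counts[tick // ticks_per_window][pair] += 1

    # A pair is linked only if it was detected at every tick of the window.
    networks = {}
    for window, pair_counts in counts.items():
        networks[window] = {p for p, n in pair_counts.items() if n == ticks_per_window}
    return networks

def tie_strength(networks, window_s=300):
    """Total linked time in seconds for each pair across all windows."""
    strength = Counter()
    for edges in networks.values():
        for pair in edges:
            strength[pair] += window_s
    return dict(strength)
```

Requiring detection at every tick is a strict criterion that filters out brief encounters. In practice, occasional dropped radio readings may justify relaxing it to a high fraction of ticks, which would be a departure from the rule as stated above.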

Research Reagent Solutions: Behavioral Quantification Tools

Table 2: Key Tools and Technologies for Behavioral Phenotyping

| Tool / Reagent | Type | Primary Function | Key Features |

|---|---|---|---|

| OpenPose [25] [26] | Software Algorithm | 2D Human Pose Estimation | Markerless, open-source, processes standard video, outputs body keypoints. |

| Gaitmap [28] | Software Ecosystem | IMU-based Gait Analysis | Open-source Python toolbox for algorithm benchmarking and pipeline building using wearable sensor data. |

| Sociometer [27] | Hardware Sensor | Proximity & Speech Detection | Wearable, objective, preserves privacy by not storing raw audio, suitable for group studies. |

| PhenoScore [29] | AI Framework | Phenotypic Similarity Analysis | Combines facial recognition (from 2D photos) with Human Phenotype Ontology (HPO) data to quantify similarity for rare disease diagnosis. |

| MIAS [30] | Software Application | Synchronized Multi-Camera Video Acquisition | Unified control for multiple cameras from different vendors, records timestamps for frame synchronization. |

Workflow Diagram

Application Note 3: Integrated AI-Driven Phenotyping for Rare Disease

A significant challenge in genetics and drug development, particularly for rare neurodevelopmental disorders, is interpreting the clinical significance of genetic variants and recognizing distinct phenotypic subgroups. PhenoScore is an open-source, AI-based framework that addresses this by integrating two distinct data modalities: facial features from 2D photographs and deep phenotypic data from the Human Phenotype Ontology (HPO) [29].

Protocol: Phenotypic Similarity Analysis with PhenoScore

- Objective: To quantify the phenotypic similarity of an individual to a defined genetic syndrome cohort, aiding in the interpretation of Variants of Unknown Significance (VUS) and the identification of phenotypic subgroups.

- Experimental Setup:

- Cohorts: A cohort of individuals with a confirmed molecular diagnosis of a specific syndrome (e.g., Koolen-de Vries syndrome) and a control cohort of individuals with other neurodevelopmental disorders, matched for age, sex, and ethnicity [29].

- Data Inputs:

- Facial Data: A single 2D frontal facial photograph.

- Phenotypic Data: A list of HPO terms describing the individual's clinical features.

- Data Processing Workflow:

- Feature Extraction: Facial features are automatically extracted from the photograph. Phenotypic HPO similarity is calculated, excluding facial terms to avoid redundancy.

- Model Training: A support vector machine (SVM) classifier is trained on the combined facial and HPO features from the syndrome and control cohorts.

- Similarity Scoring: The trained model generates a Brier score and p-value for a new individual, defining their clinical similarity to the syndrome.

- Explainable AI: The framework uses LIME (Local Interpretable Model-agnostic Explanations) to generate facial heatmaps and lists the most important clinical (non-facial) HPO terms driving the classification [29].

Key Validation Result: In a proof-of-concept study on Koolen-de Vries syndrome, PhenoScore (Brier score: 0.09, AUC: 0.94) outperformed models using only facial data (Brier: 0.13) or only HPO data (Brier: 0.10), demonstrating the power of integrated multimodal analysis [29].
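For readers unfamiliar with the metric, the Brier score is the mean squared difference between a predicted probability and the true 0/1 class label, so lower values indicate better-calibrated predictions (0 is perfect; a constant prediction of 0.5 scores 0.25). The following is a minimal sketch of that calculation only. The function name and the example probabilities are hypothetical and are not taken from PhenoScore or from [29].

```python
def brier_score(probabilities, labels):
    """Mean squared difference between predicted probabilities and 0/1 labels."""
    if len(probabilities) != len(labels) or not labels:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - y) ** 2 for p, y in zip(probabilities, labels)) / len(labels)


# Hypothetical predicted probabilities of belonging to the syndrome cohort
# (1 = syndrome, 0 = control). These values are invented for illustration.
example_probabilities = [0.9, 0.8, 0.2, 0.1]
example_labels = [1, 1, 0, 0]
example_score = brier_score(example_probabilities, example_labels)

# An uninformative classifier that always predicts 0.5, for comparison.
uninformative_score = brier_score([0.5] * 4, example_labels)
```

Comparing a model's score against the 0.25 of an uninformative predictor gives a quick sense of scale when reading reported values.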

Workflow Diagram

The protocols and application notes detailed herein demonstrate a paradigm shift in behavioral research. Integrating motion tracking, from both 2D video and wearable sensors, with sophisticated AI algorithms transforms complex, qualitative behaviors into robust, quantitative phenotypes. These methodologies are not only validating and refining existing clinical measures but are also uncovering novel, context-dependent patterns in human behavior, from gait kinematics to social dynamics. For researchers and drug development professionals, these tools provide a scalable, objective, and multidimensional framework for biomarker discovery, target validation, and therapeutic evaluation. As these technologies continue to evolve and become more accessible, they promise to deepen our understanding of the links between genes, neural circuits, behavior, and disease.

High-throughput phenotypic screening of animal models represents a transformative approach in preclinical research, accelerating drug discovery and the study of human diseases. Central to this paradigm is the integration of automated motion tracking and artificial intelligence (AI) algorithms for detailed behavioral analysis. By moving beyond simple, univariate measures, these technologies enable the extraction of rich, multidimensional behavioral phenotypes from model organisms like the nematode C. elegans [31] [32]. This is particularly vital for investigating complex pleiotropic disorders, especially those affecting the nervous system, where the connection between a genetic lesion and a screenable phenotype may not be immediately obvious [32]. The application of advanced AI models, such as DeepTangleCrawl (DTC), allows researchers to overcome traditional bottlenecks in tracking, such as animal coiling or overlapping, thereby producing more continuous and gap-free behavioral trajectories [31]. This Application Note provides a detailed protocol for implementing such AI-driven screening, framed within the context of motion tracking and behavioral analysis research.

Current Technology and Key Applications

AI-Driven Tracking Models

The evolution of tracking algorithms has been pivotal for high-throughput chemobehavioral phenotyping. While conventional computer vision methods are effective for isolated animals on uniform backgrounds, they fail in more complex but biologically relevant scenarios. Deep learning approaches have significantly advanced the field, with models like DeepTangleCrawl (DTC) demonstrating state-of-the-art performance. DTC is a neural network specifically trained on crawling worms, using temporal information from video clips to resolve difficult cases such as self-intersecting postures and worm-worm interactions [31]. This model outperforms existing methods like Tierpsy, Omnipose, and part affinity field (PAF)-based trackers, notably reducing failure rates and producing more complete trajectories, which is essential for reliable behavioral analysis [31].

Applications in Disease Modeling and Drug Repurposing

This technology enables systematic phenotyping across diverse disease models. In one study, researchers used CRISPR-Cas9 to create 25 C. elegans models of human Mendelian diseases. Using a standardized high-throughput tracking assay, they found that 23 of the 25 strains exhibited detectable phenotypic differences from wild-type controls across multidimensional features of morphology, posture, and motion [32]. This approach connected the human and model organism genotype-phenotype maps. Furthermore, as a proof of concept for drug repurposing, a screen of 743 FDA-approved compounds identified two drugs, liranaftate and atorvastatin, that rescued the behavioral phenotype in a worm model of UNC80 deficiency [32]. This demonstrates the potential of high-throughput worm tracking as a scalable and cost-effective strategy for identifying candidate treatments for rare diseases.

Table 1: Key AI Tracking Models and Performance in Behavioral Phenotyping

| Model Name | Core Principle | Key Advantage | Documented Performance |
|---|---|---|---|
| DeepTangleCrawl (DTC) [31] | Neural network using temporal data from video clips. | Robust tracking of coiled and overlapping worms on complex backgrounds. | Reduced failure rates; produced longer, more gap-free trajectories than Tierpsy. |
| Tierpsy Tracker [31] | Classic computer vision for segmentation and skeletonization. | Reliability for isolated, non-coiling worms on uniform backgrounds. | Serves as a baseline; fails on challenging cases like coils and overlaps. |
| Omnipose [31] | Instance segmentation based on deep learning. | Improved segmentation accuracy for certain object types. | Lower modal RMSD than DTC where successful, but higher failure rate on difficult cases. |
| PAF-based Tracker [31] | Landmark-based tracking using part affinity fields. | Good accuracy for pose estimation when landmarks are detectable. | Lower modal RMSD than DTC where successful, but higher failure rate on difficult cases. |

Experimental Protocol for High-Throughput Phenotypic Screening

This protocol outlines the methodology for conducting a high-content phenotypic screen using C. elegans disease models, from preparation to data analysis. The workflow is designed to be systematic and scalable for drug repurposing campaigns [32].

Materials and Equipment

Research Reagent Solutions

Table 2: Essential Materials for High-Throughput Phenotypic Screening

| Item | Function/Description | Example/Specification |
|---|---|---|
| C. elegans Disease Models | Genetically engineered models of human diseases for screening. | CRISPR-Cas9 generated loss-of-function mutants (e.g., unc-80 model) [32]. |
| Control Strain | Genetically matched wild-type control for baseline behavioral comparison. | N2 (Bristol) wild-type strain. |
| Agar Plates | Substrate for animal cultivation and behavioral recording. | Standard Nematode Growth Medium (NGM) plates, seeded with E. coli OP50. |
| Compound Library | Collection of chemicals for screening (e.g., FDA-approved drugs). | Library of 743 FDA-approved compounds for repurposing screens [32]. |
| High-Throughput Imaging System | Automated array of cameras for parallel video acquisition. | Megapixel camera array (12.4 µm/pixel resolution) [31]. |
| AI Tracking Software | Software for extracting posture and movement data from videos. | DeepTangleCrawl (DTC) or comparable advanced AI model [31]. |

Step-by-Step Procedure

Step 1: Animal Preparation and Compound Exposure

- Synchronize the population of the wild-type and disease model strains using standard hypochlorite treatment.

- Culture the synchronized L1 larvae on NGM plates seeded with a bacterial lawn until they reach the young adult stage.

- Randomize young adult animals into treatment groups. For drug screens, transfer animals to plates containing the compound of interest from the library. Include a vehicle control group.

Step 2: Video Acquisition and Data Collection

- Mount the agar plates with animals onto the high-throughput imaging system.

- Record videos of animal behavior for a standardized period. The cited study used a 16-minute assay at a frame rate of 25 frames per second [32].

- Ensure consistent environmental conditions (e.g., temperature, humidity) throughout all recordings to minimize non-biological variability.

Step 3: Pre-processing of Video Data

- Subtract the background from the video recordings to enhance contrast. This can be achieved using Singular Value Decomposition (SVD) on a temporally subsampled video, using the highest energy mode as the background image for subtraction [31].

- Format the data for the AI model. For a model like DTC, which uses temporal information, compile short clips of 11 consecutive frames for analysis [31].
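The two pre-processing steps above can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the implementation used in [31]: the video is assumed to be an array of shape (frames, height, width), the subsampling step and clip stride are arbitrary choices, the rank-1 mode is reduced to a single image by averaging its temporal coefficients, and the function names `estimate_background` and `make_clips` are hypothetical.

```python
import numpy as np


def estimate_background(video, subsample_step=10):
    """Estimate a static background from the highest-energy SVD mode.

    video: array of shape (n_frames, height, width).
    subsample_step: keep every n-th frame (assumed value, not from the source).
    """
    frames = video[::subsample_step].astype(np.float64)
    n_frames, height, width = frames.shape
    # Each row of the matrix is one flattened, temporally subsampled frame.
    matrix = frames.reshape(n_frames, height * width)
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    # Temporal coefficients of the first (highest-energy) mode.
    temporal_weights = u[:, 0] * s[0]
    # Average over time to obtain one background image. The sign ambiguity
    # of the SVD cancels in the product of the two factors.
    background = temporal_weights.mean() * vt[0]
    return background.reshape(height, width)


def make_clips(video, clip_length=11, stride=1):
    """Split a video into clips of `clip_length` consecutive frames.

    The stride between clip start frames is an assumption; the source only
    states that clips contain 11 consecutive frames.
    """
    last_start = video.shape[0] - clip_length
    return [video[i:i + clip_length] for i in range(0, last_start + 1, stride)]


# Small synthetic demonstration: a fixed background with one dark moving pixel.
demo_rng = np.random.default_rng(0)
demo_background = demo_rng.uniform(100.0, 200.0, size=(16, 16))
demo_video = np.repeat(demo_background[None, :, :], 40, axis=0)
for frame_index in range(40):
    demo_video[frame_index, frame_index % 16, frame_index % 16] = 0.0

estimated = estimate_background(demo_video, subsample_step=2)
foreground = demo_video - estimated  # background-subtracted frames
demo_clips = make_clips(foreground, clip_length=11, stride=11)
```

Subsampling in time keeps the SVD inexpensive and reduces the influence of slow-moving animals on the background estimate; the appropriate step depends on how quickly the animals move relative to the frame rate.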

Step 4: Animal Tracking and Pose Estimation with AI

- Process the pre-processed video clips through the chosen AI tracking model (e.g., DTC).

- Generate outputs including animal trajectories, skeletons (postures), and segmentation masks for each frame.

Step 5: Feature Extraction and Phenotypic Analysis

- Extract quantitative features from the tracking data. These can include:

- Locomotion: Velocity, acceleration, path curvature.

- Posture: Body bend angles, amplitude of waves, head movement.

- Morphology: Body length, width.

- Compare the multivariate phenotypic profile of the treated disease model animals to both the untreated disease model and the wild-type controls. Use appropriate statistical tests to identify significant phenotypic rescues or exacerbations.
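As a rough illustration of the feature categories listed in Step 5, the sketch below derives a few of them from tracker output. It assumes the tracker provides a per-frame centroid and an ordered list of skeleton points as (x, y) pairs in pixels; the function names are hypothetical, and the definitions are simple finite-difference approximations rather than the feature set used in [31] or [32].

```python
import math


def speeds(centroids, fps):
    """Speed between consecutive frames, in pixels per second."""
    return [math.dist(a, b) * fps for a, b in zip(centroids, centroids[1:])]


def accelerations(speed_series, fps):
    """Finite-difference change in speed, in pixels per second squared."""
    return [(v2 - v1) * fps for v1, v2 in zip(speed_series, speed_series[1:])]


def skeleton_length(skeleton):
    """Body length as the summed distance between consecutive skeleton points."""
    return sum(math.dist(a, b) for a, b in zip(skeleton, skeleton[1:]))


def bend_angles(skeleton):
    """Signed turning angle (radians) at each interior skeleton point."""
    angles = []
    for p0, p1, p2 in zip(skeleton, skeleton[1:], skeleton[2:]):
        heading_in = math.atan2(p1[1] - p0[1], p1[0] - p0[0])
        heading_out = math.atan2(p2[1] - p1[1], p2[0] - p1[0])
        delta = heading_out - heading_in
        # Wrap the difference into the interval [-pi, pi).
        angles.append((delta + math.pi) % (2 * math.pi) - math.pi)
    return angles


# Hypothetical tracker output for illustration only.
example_centroids = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
example_skeleton = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]

example_speeds = speeds(example_centroids, fps=25)
example_accelerations = accelerations(example_speeds, fps=25)
example_length = skeleton_length(example_skeleton)
example_bends = bend_angles(example_skeleton)
```

Values in pixels can be converted to physical units by multiplying by the imaging resolution, for example the 12.4 µm/pixel quoted for the camera array in [31].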

Diagram 1: Experimental workflow for high-throughput phenotypic screening.

Data Processing and Analysis Pipeline

The raw tracking data generated by the AI model must be transformed into interpretable, high-level phenotypes. This requires a robust computational pipeline.

From Tracking Data to Behavioral Phenotypes

The primary output of trackers like DTC is the skeletal posture of each animal over time. From this, a large set of quantitative features is computed. These features capture different aspects of behavior, such as the speed and pattern of locomotion (e.g., dwelling vs. roaming), the complexity of postural dynamics, and subtle head movements [31] [32]. The power of this approach lies in its multidimensionality: a mutation may not affect any single obvious feature, yet it can still be detected through a distinctive combination of subtle alterations across many features. This complex phenotypic fingerprint is often necessary for modeling human diseases where the connection to the worm phenotype is not obvious [32].
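One simple way to picture such a fingerprint is to standardize each feature against the wild-type distribution and then combine the resulting z-scores into a single distance. The sketch below is only an illustration of that idea, using the Python standard library. It is not the statistical procedure of [32]; the feature names, values, and function names are hypothetical, and a real screen would also need to handle multiple comparisons and batch effects.

```python
import math
import statistics


def zscore_profile(sample_features, control_features):
    """Standardize the mean of each feature against the control distribution.

    Both arguments map a feature name to a list of per-animal values.
    """
    profile = {}
    for name, control_values in control_features.items():
        control_mean = statistics.mean(control_values)
        control_sd = statistics.stdev(control_values)
        sample_mean = statistics.mean(sample_features[name])
        profile[name] = (sample_mean - control_mean) / control_sd
    return profile


def profile_distance(profile):
    """Euclidean norm of the z-score profile: overall deviation from control."""
    return math.sqrt(sum(z * z for z in profile.values()))


# Invented per-animal values for two features, for illustration only.
wild_type = {"speed": [1.0, 2.0, 3.0], "length": [10.0, 12.0, 14.0]}
mutant = {"speed": [4.0, 4.0], "length": [15.0, 15.0]}

mutant_profile = zscore_profile(mutant, wild_type)
mutant_distance = profile_distance(mutant_profile)
```

In this toy case neither feature alone is dramatic, but the combined distance summarizes how far the strain sits from the control in standardized feature space.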

Increasing Signal-to-Noise with Advanced AI

The quality of tracking directly impacts the signal-to-noise ratio in phenotypic screens. By reducing tracking failures and gaps in trajectories, models like DTC produce more complete and reliable data. This increased data quality translates to an enhanced ability to detect statistically significant differences between strains or treatment conditions, thereby increasing the sensitivity of phenotypic screens [31]. This is critical for detecting subtle rescue effects in drug screens.
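Tracking completeness can be summarized with a few simple numbers per animal. The sketch below assumes a hypothetical per-frame list of booleans indicating whether the tracker returned a pose, and reports the failure rate, the number of gaps, and the longest uninterrupted run. These definitions are assumptions made for illustration and may differ from the metrics reported in [31].

```python
def continuity_summary(detected):
    """Summarize track completeness from per-frame detection flags.

    detected: list of booleans, True where the tracker returned a pose.
    """
    if not detected:
        raise ValueError("detected must contain at least one frame")
    longest_run = current_run = n_gaps = 0
    previous_ok = True
    for ok in detected:
        if ok:
            current_run += 1
            longest_run = max(longest_run, current_run)
        else:
            # A gap starts whenever a failure follows a success (or frame 0).
            if previous_ok:
                n_gaps += 1
            current_run = 0
        previous_ok = ok
    n_failures = sum(1 for ok in detected if not ok)
    return {
        "failure_rate": n_failures / len(detected),
        "n_gaps": n_gaps,
        "longest_run": longest_run,
    }


# Hypothetical detection flags for nine frames of one track.
example_flags = [True, True, False, True, True, True, False, False, True]
example_summary = continuity_summary(example_flags)
```

Fewer gaps and longer runs mean more frames contribute to each animal's feature estimates, which is the mechanism by which better tracking improves sensitivity.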

Table 3: Quantitative Performance Comparison of Tracking Models

| Performance Metric | DeepTangleCrawl (DTC) | Tierpsy | Omnipose | PAF-based Tracker |
|---|---|---|---|---|
| Pose Estimation Accuracy | | | | |
| Median Root Mean Square Deviation (RMSD) | 2.2 pixels [31] | Information Missing | Lower modal RMSD than DTC [31] | Lower modal RMSD than DTC [31] |
| Tracking Robustness | | | | |
| Failure Rate (No prediction made) | Lowest among compared models [31] | Fails on coils/overlaps [31] | Higher than DTC [31] | Higher than DTC [31] |
| Trajectory Continuity | Produces longer, more gap-free tracks [31] | Tracks interrupted by collisions/coils [31] | Information Missing | Information Missing |
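To make the RMSD metric in the table concrete, the sketch below computes a per-frame RMSD between a predicted skeleton and a reference annotation, then takes the median across frames. It assumes both skeletons have the same number of points in corresponding order; how [31] resamples or matches skeleton points is not described here, so that correspondence, the function names, and the example coordinates are all assumptions.

```python
import math
import statistics


def skeleton_rmsd(predicted, reference):
    """Root mean square deviation between corresponding skeleton points (pixels)."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("skeletons must be non-empty and of equal length")
    squared = [
        (px - rx) ** 2 + (py - ry) ** 2
        for (px, py), (rx, ry) in zip(predicted, reference)
    ]
    return math.sqrt(sum(squared) / len(squared))


def median_rmsd(predicted_frames, reference_frames):
    """Median of the per-frame RMSD values over a set of annotated frames."""
    per_frame = [
        skeleton_rmsd(p, r) for p, r in zip(predicted_frames, reference_frames)
    ]
    return statistics.median(per_frame)


# Invented coordinates: the first frame is offset by (3, 4), the others match.
reference_frames = [[(3.0, 4.0), (4.0, 4.0)]] * 3
predicted_frames = [
    [(0.0, 0.0), (1.0, 0.0)],
    [(3.0, 4.0), (4.0, 4.0)],
    [(3.0, 4.0), (4.0, 4.0)],
]

first_frame_rmsd = skeleton_rmsd(predicted_frames[0], reference_frames[0])
overall_median = median_rmsd(predicted_frames, reference_frames)
```

Because RMSD can only be computed on frames where a prediction exists, it should be read alongside the failure rate: a tracker can show a low RMSD simply by declining to predict on difficult frames.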

Diagram 2: Data processing pipeline from video to phenotypic profile.