Ensuring Precision in Digital Neurology: A Comprehensive Framework for Reliability Testing of Virtual Reality Cognitive Assessment Tools

Abstract

This article provides a systematic examination of the reliability and validity testing frameworks for Virtual Reality (VR) cognitive assessment tools, tailored for researchers and drug development professionals. It explores the foundational psychometric principles underpinning VR assessment, details methodological approaches for establishing reliability in clinical and research settings, addresses prevalent technological and methodological challenges with optimization strategies, and presents comparative validation studies against gold-standard cognitive batteries. By synthesizing current evidence and standards, this review aims to equip biomedical professionals with the knowledge to implement, validate, and leverage VR cognitive assessments for enhanced diagnostic precision and therapeutic monitoring in neurological disorders and clinical trials.

The Psychometric Bedrock: Core Principles and Current Evidence for VR Cognitive Assessment Reliability

The integration of Virtual Reality (VR) into cognitive and psychological assessment represents a paradigm shift that demands a reconceptualization of traditional psychometric principles. While traditional paper-and-pencil tests and computerized assessments have established standards for reliability and validity, VR introduces unique considerations stemming from its immersive capabilities and ecological verisimilitude. Reliability in the VR context extends beyond mere score consistency to encompass technical stability of the system itself, including tracking precision and presentation consistency across sessions [1]. Similarly, validity expands from construct representation to include environmental authenticity and behavioral transfer to real-world functioning [2] [3].

This evolution is driven by VR's capacity to create controlled yet ecologically rich environments that elicit naturalistic behaviors. Where traditional assessments often rely on veridicality (statistical prediction of real-world functioning), VR enables verisimilitude – the degree to which assessment tasks mimic cognitive demands encountered in natural environments [2]. This distinction is crucial for researchers and drug development professionals seeking to measure treatment effects on functional outcomes rather than abstract cognitive scores. The fundamental question shifts from "Does this test measure the construct?" to "Does performance in this virtual environment predict functioning in the real world?"

Quantitative Comparison of VR Assessment Psychometric Properties

Table 1: Reliability Metrics Across VR Assessment Systems

| Assessment Tool | Domain Assessed | Test-Retest Reliability (ICC) | Internal Consistency (α) | Sample Size | Population |
|---|---|---|---|---|---|
| CAVIRE-2 [2] | Global Cognition (6 domains) | 0.89 (95% CI: 0.85-0.92) | 0.87 | 280 | Older adults (55-84 years) |
| NeuroFitXR [4] | Cognitive-Motor Function | High (exact values NR) | NR | 829 | Elite athletes |
| CONVIRT [3] | Attention/Decision-Making | Satisfactory (exact values NR) | NR | 165 | University students |
| VR-CAT [5] | Executive Functions | Modest (exact values NR) | NR | 54 | Pediatric TBI |
| VR Motor Battery [6] | Reaction Time | 0.858 (RE), 0.888 (VR) | NR | 32 | Healthy adults |

Table 2: Validity Evidence for VR Cognitive Assessments

| Assessment Tool | Convergent Validity | Discriminant Validity | Ecological Validity Evidence | AUC for Impairment Detection |
|---|---|---|---|---|
| CAVIRE-2 [2] | Moderate with MoCA and MMSE | Demonstrated | High (simulates real-world activities) | 0.88 (95% CI: 0.81-0.95) |
| CONVIRT [3] | Modest with Cogstate tasks | Demonstrated between visual processing and attention | Increased physiological arousal mimicking real sport | NR |
| VR-CAT [5] | Modest with standard EF tests | NR | High usability and motivation reports | NR |
| NeuroFitXR [4] | Established via CFA | Confirmed through factor structure | Sports-relevant cognitive-motor tasks | NR |

Abbreviations: ICC: Intraclass Correlation Coefficient; CI: Confidence Interval; NR: Not Reported; MoCA: Montreal Cognitive Assessment; MMSE: Mini-Mental State Examination; EF: Executive Function; TBI: Traumatic Brain Injury; AUC: Area Under Curve; CFA: Confirmatory Factor Analysis; RE: Real Environment

Experimental Protocols for Establishing VR Psychometric Properties

Protocol 1: Establishing Discriminant Validity in Cognitive Impairment

The CAVIRE-2 validation study provides a robust template for establishing discriminant validity in VR cognitive assessment [2]. Researchers recruited 280 multi-ethnic Asian adults aged 55-84 years from primary care settings, with 36 identified as cognitively impaired by MoCA criteria. Participants completed 13 VR scenarios simulating basic and instrumental activities of daily living in local community settings, automatically assessing six cognitive domains: perceptual motor, executive function, complex attention, social cognition, learning and memory, and language.

Methodological details: The VR system recorded both performance scores and completion time, combining them into a composite score matrix. Researchers administered the MoCA independently of the VR assessment to avoid bias. Statistical analyses used ROC curves to determine the optimal cut-off score, with sensitivity and specificity calculated at that threshold. The optimal cut-off of <1850 yielded 88.9% sensitivity and 70.5% specificity for distinguishing cognitive status as defined by the MoCA.
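The cut-off logic described above can be sketched in a few lines. This is a minimal illustration, not the study's analysis code: the scores are hypothetical, the rule "score below cut-off means impaired" follows the reported "<1850" threshold, and the use of Youden's J to pick the cut-off is an assumption, since the source does not state which optimality criterion was applied.

```python
from typing import List, Tuple


def sens_spec(scores: List[float], impaired: List[bool], cutoff: float) -> Tuple[float, float]:
    """Flag score < cutoff as impaired; return (sensitivity, specificity)."""
    tp = sum(1 for s, y in zip(scores, impaired) if y and s < cutoff)
    fn = sum(1 for s, y in zip(scores, impaired) if y and s >= cutoff)
    tn = sum(1 for s, y in zip(scores, impaired) if not y and s >= cutoff)
    fp = sum(1 for s, y in zip(scores, impaired) if not y and s < cutoff)
    return tp / (tp + fn), tn / (tn + fp)


def best_cutoff(scores: List[float], impaired: List[bool]) -> Tuple[float, float]:
    """Return (cutoff, J) maximising Youden's J = sensitivity + specificity - 1."""
    best = (float("nan"), -1.0)
    for c in sorted(set(scores)):  # every observed score is a candidate threshold
        sens, spec = sens_spec(scores, impaired, c)
        j = sens + spec - 1
        if j > best[1]:
            best = (c, j)
    return best


# Hypothetical composite scores (lower = worse); NOT data from the study.
scores = [1500, 1620, 1700, 1790, 1900, 1840, 1880, 1950, 2010, 2100, 2200, 2300]
impaired = [True] * 5 + [False] * 7

cutoff, j = best_cutoff(scores, impaired)
sens, spec = sens_spec(scores, impaired, cutoff)
print(f"cutoff <{cutoff}: sensitivity={sens:.3f}, specificity={spec:.3f}, J={j:.3f}")

# Counts back-calculated from the reported percentages (an inference, not
# reported data): 32 of 36 impaired and 172 of 244 healthy correctly classified.
print(f"{32 / 36:.3f}, {172 / 244:.3f}")
```

With 36 impaired and 244 unimpaired participants, these back-calculated counts are the only whole numbers that round to the reported 88.9% and 70.5%.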

Protocol 2: Cognitive-Motor Integration in Elite Athletes

The NeuroFitXR validation employed a sophisticated approach to establish reliability and validity for cognitive-motor assessment [4]. Using ten VR tests delivered via Oculus Quest 2 headsets, researchers assessed 829 elite male athletes across four domains: Balance and Gait (BG), Decision-Making (DM), Manual Dexterity (MD), and Memory (ME).

Methodological details: The protocol utilized Confirmatory Factor Analysis (CFA) to establish a four-factor model and generate data-driven weights for domain-specific composite scores. Test administration included a trained administrator to ensure proper performance, with repeated testing if protocols weren't followed. The analysis focused on creating normally distributed composite scores for parametric analysis, though Decision-Making showed ceiling effects. The rigorous multi-step data preparation included calculating Inverse Efficiency Scores, mathematical inversion for consistent directionality, Yeo-Johnson transformation for normality, and z-score standardization with outlier removal.
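The data-preparation sequence above (Inverse Efficiency Score, inversion, Yeo-Johnson transformation, z-scoring with outlier removal) can be sketched as follows. This is a rough illustration under stated assumptions rather than the NeuroFitXR pipeline itself: inversion is implemented as a sign flip, lambda is chosen by a simple grid search over the normal log-likelihood, outliers are flagged at |z| > 3, and all input values are hypothetical.

```python
import math
from statistics import mean, pstdev
from typing import List, Optional


def inverse_efficiency(mean_rt_ms: float, prop_correct: float) -> float:
    """IES = mean response time / proportion correct (lower is better)."""
    return mean_rt_ms / prop_correct


def yeo_johnson(x: float, lam: float) -> float:
    """Yeo-Johnson power transform of one value for a given lambda."""
    if x >= 0:
        return math.log1p(x) if abs(lam) < 1e-12 else ((x + 1) ** lam - 1) / lam
    if abs(lam - 2) < 1e-12:
        return -math.log1p(-x)
    return -(((1 - x) ** (2 - lam)) - 1) / (2 - lam)


def yj_log_likelihood(xs: List[float], lam: float) -> float:
    """Normal log-likelihood of lambda (up to a constant) for transformed data."""
    ys = [yeo_johnson(x, lam) for x in xs]
    jacobian = sum(math.copysign(math.log1p(abs(x)), x) for x in xs)
    return -len(xs) / 2 * math.log(pstdev(ys) ** 2) + (lam - 1) * jacobian


def fit_lambda(xs: List[float]) -> float:
    """Grid search over lambda in [-2, 2]; an assumed, simple estimator."""
    grid = [i / 100 for i in range(-200, 201)]
    return max(grid, key=lambda lam: yj_log_likelihood(xs, lam))


def composite_z(ies_values: List[float], z_limit: float = 3.0) -> List[Optional[float]]:
    """Invert, transform, standardise; outliers beyond z_limit become None."""
    inverted = [-v for v in ies_values]  # sign flip so that higher = better
    lam = fit_lambda(inverted)
    transformed = [yeo_johnson(v, lam) for v in inverted]
    mu, sd = mean(transformed), pstdev(transformed)
    z = [(t - mu) / sd for t in transformed]
    return [s if abs(s) <= z_limit else None for s in z]


# Hypothetical per-athlete mean response times (ms) and accuracies.
rts = [520, 610, 580, 700, 640, 910, 560, 1200]
acc = [0.95, 0.90, 0.92, 0.85, 0.88, 0.70, 0.97, 0.60]
ies = [inverse_efficiency(r, a) for r, a in zip(rts, acc)]
z = composite_z(ies)
print([round(v, 2) if v is not None else None for v in z])
```

Because the Yeo-Johnson transform is monotone increasing, the athlete with the lowest (best) IES ends up with the highest z-score after the sign flip, which is the directionality the protocol aims for.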

Protocol 3: Ecological Validity Through Physiological Arousal

The CONVIRT battery validation study introduced an innovative approach to ecological validation by measuring physiological responses [3]. Researchers developed a VR assessment simulating jockey experience during a horse race, assessing visual processing speed, attention, and decision-making in 165 university students.

Methodological details: The protocol compared CONVIRT with standard Cogstate computer-based measures while monitoring heart rate and heart rate variability (LF/HF ratio) as indicators of physiological arousal. The study demonstrated that CONVIRT elicited higher physiological arousal that better approximated workplace demands, providing evidence for ecological validity through verisimilitude rather than just statistical prediction.

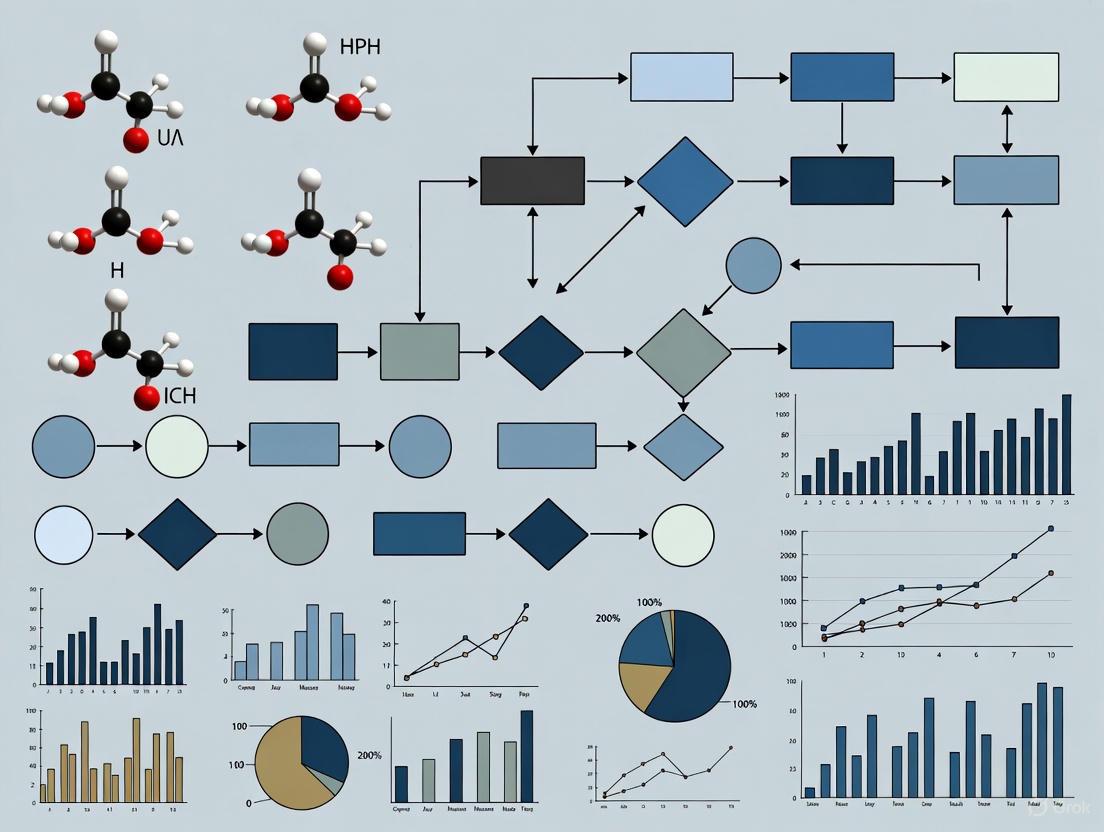

Conceptual Framework for VR Assessment Validation

Essential Research Reagent Solutions for VR Psychometric Research

Table 3: Essential Research Tools for VR Psychometric Validation

| Tool Category | Specific Examples | Research Function | Key Considerations |
|---|---|---|---|
| VR Hardware Platforms | Oculus Quest 2 [4], HTC Vive Pro [7], Varjo Aero [1] | Provides immersive environment delivery and motion tracking | Tracking accuracy (<1 mm), refresh rate (90 Hz), resolution (2880×2720 px/eye) [1] |
| Eye Tracking Systems | Integrated VR eye tracking (200 Hz) [1], Infrared cameras [3] | Quantifies oculomotor function, visual attention, and processing speed | Precision (<1° visual angle), sampling rate, calibration stability [1] |
| Physiological Monitoring | Heart rate variability (LF/HF ratio) [3] | Objective measure of arousal state during ecological assessment | Synchronization with VR events, minimal interference |
| Validation Reference Tests | MoCA [2], Cogstate Battery [3], Standard motor tests [6] | Provides criterion measures for convergent validity | Administration independence, appropriate construct overlap |
| Robotic Validation Systems | Custom robotic eyes [1] | Objective technical validation of eye tracking precision | Movement accuracy (0.15°±0.1°), biological movement simulation |
| Data Processing Pipelines | Confirmatory Factor Analysis [4], Inverse Efficiency Score calculation [4] | Creates composite scores from multiple performance metrics | Handling non-normal distributions, outlier management |

The integration of VR into cognitive assessment requires expanding traditional psychometric frameworks to accommodate the unique capabilities of immersive technologies. Establishing reliability in VR contexts extends beyond statistical consistency to encompass technical precision and environmental stability across administrations [1]. Validity evidence must include ecological verisimilitude demonstrated through physiological arousal measures [3] and real-world functional correspondence [2].

For researchers and drug development professionals, these advances offer opportunities to measure cognitive functioning in contexts that closely mirror real-world demands. Where exact values are reported, reliability and discriminant validity are strong (CAVIRE-2: ICC of 0.89, AUC of 0.88), showing that VR assessments can meet rigorous psychometric standards while providing richer functional data [2]. Several other systems report reliability only qualitatively, so these figures should not be assumed to hold across platforms. As these technologies evolve, the validation frameworks outlined here provide a roadmap for developing assessments that balance psychometric rigor with ecological relevance, ultimately creating more sensitive tools for detecting treatment effects and functional changes in clinical and research populations.

In the development of virtual reality (VR) cognitive assessment tools, ecological validity, the degree to which results from controlled laboratory experiments generalize to real-world functioning, has emerged as a critical metric [8]. The concept is usually divided into two approaches: verisimilitude and veridicality [2] [8]. Verisimilitude refers to the degree to which the cognitive demands of a test mirror those encountered in naturalistic environments, that is, the similarity of task demands between laboratory and real-world settings [2] [9]. In contrast, veridicality is the extent to which performance on a test predicts some feature of day-to-day functioning, established through statistical correlation between assessment scores and real-world outcomes [8] [9].

Traditional neuropsychological assessments like the Montreal Cognitive Assessment (MoCA) predominantly adopt a veridicality-based approach, yet their scores have shown limited correlation with real-world functional performance [2]. VR technology can reconcile laboratory control with everyday functioning by digitally recreating real-world activities and presenting them through immersive head-mounted displays [8]. This allows controlled presentation of dynamic perceptual stimuli while participants are immersed in simulations that approximate real-world contexts, thereby enhancing both verisimilitude and veridicality [8] [9].

Theoretical Framework: Comparative Analysis of Ecological Validity Approaches

Table 1: Comparative Analysis of Verisimilitude and Veridicality in Cognitive Assessment

| Feature | Verisimilitude Approach | Veridicality Approach |
|---|---|---|
| Primary Focus | Similarity of task demands to real-world activities [2] [9] | Predictive power for real-world outcomes [8] [9] |
| Testing Paradigm | Function-led, mimicking activities of daily living [8] | Construct-driven, assessing cognitive domains [8] |
| VR Implementation | Recreation of naturalistic environments (e.g., kitchens, supermarkets) [2] [10] | Correlation of VR performance metrics with real-world functional measures [2] [10] |
| Strength | High face validity, engages real-world cognitive processes [2] [8] | Statistical correlation with outcomes, established predictive power [8] |
| Limitation | Requires empirical validation of real-world correspondence [9] | May overlook complexity of multistep real-world tasks [8] |

The theoretical distinction between these approaches has significant implications for assessment development. Verisimilitude-based assessments attempt to create new evaluation tools with ecological goals by simulating environments that closely resemble relevant real-world contexts [9]. Conversely, veridicality-based approaches establish predictive validity through statistical correlations between assessment scores and real-world functioning measures [8]. While these approaches can be pursued independently, the most ecologically valid assessments typically incorporate elements of both, leveraging VR's capacity to deliver multisensory information under different environmental conditions [9].

Advanced VR systems facilitate this integration by providing both the environmental realism necessary for verisimilitude and the precise performance metrics required for establishing veridicality [2] [10]. For instance, VR assessments can capture not only traditional accuracy scores but also kinematic data, movement speed, and efficiency metrics that may have stronger correlations with daily functioning than conventional test scores [11].

Experimental Evidence: Quantitative Comparisons of VR Assessment Tools

Recent validation studies demonstrate how VR cognitive assessments successfully integrate verisimilitude and veridicality to achieve ecological validity. The following table summarizes key findings from contemporary research investigating VR-based cognitive assessments across different patient populations.

Table 2: Validation Metrics of VR Cognitive Assessment Tools Across Clinical Studies

| Assessment Tool | Study Population | Veridicality Metrics (Agreement with Standards) | Reliability Metrics | Verisimilitude Features |
|---|---|---|---|---|
| CAVIRE-2 [2] | Multi-ethnic Asian adults aged 55-84 years (n=280; 36 cognitively impaired by MoCA) | AUC: 0.88 for MoCA-defined impairment [2] | ICC: 0.89; Cronbach's α: 0.87 [2] | 13 VR scenarios simulating BADL/IADL in local community settings [2] |
| CAVIR [10] | Mood/psychosis spectrum disorders (n=70) vs healthy controls (n=70) | rₛ=0.60 with neuropsychological battery; r=0.40 with AMPS process skills [10] | Sensitive to employment status differentiation [10] | Immersive VR kitchen scenario assessing daily-life cognitive skills [10] |
| VR-BBT [11] | Stroke patients (n=24) vs healthy adults (n=24) | r=0.84 with conventional BBT; r=0.66-0.84 with FMA-UE [11] | ICC: 0.94 [11] | Virtual replica of BBT with physical interaction physics [11] |

The CAVIRE-2 system exemplifies a comprehensive approach to ecological validity. It comprises 14 discrete scenes: one introductory tutorial session and 13 virtual scenes simulating both basic and instrumental activities of daily living (BADL and IADL) in local residential and community settings [2]. The virtual residential blocks and shophouses were modeled with a high degree of realism to bridge the gap between an unfamiliar virtual game environment and participants' real-world experiences [2]. This emphasis on environmental fidelity supports verisimilitude, while the system's ability to discriminate MoCA-defined cognitive status (AUC = 0.88, a measure of discrimination rather than a correlation coefficient) supports its veridicality [2].

Similarly, the CAVIR test demonstrates how VR assessments can achieve ecological validity in psychiatric populations. Notably, CAVIR performance showed a moderate association with activities of daily living process ability (r=0.40) even when conventional neuropsychological performance, interviewer-based functional capacity, and subjective cognition measures failed to show significant associations [10]. This suggests that VR assessments may capture elements of real-world functioning that traditional measures miss, particularly highlighting their advantage in veridicality for complex daily living skills.
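To make the AUC cited for CAVIRE-2 more concrete: an AUC can be read as the probability that a randomly chosen unimpaired participant scores higher than a randomly chosen impaired one. The sketch below computes it by direct pairwise comparison. The scores are hypothetical, and the assumption that higher scores indicate better cognition is ours.

```python
from typing import List


def auc(healthy_scores: List[float], impaired_scores: List[float]) -> float:
    """P(healthy score > impaired score) over all pairs; ties count as 0.5."""
    wins = 0.0
    for h in healthy_scores:
        for i in impaired_scores:
            if h > i:
                wins += 1.0
            elif h == i:
                wins += 0.5
    return wins / (len(healthy_scores) * len(impaired_scores))


# Hypothetical VR composite scores; NOT data from any cited study.
healthy = [1840, 1880, 1950, 2010, 2100, 2200, 2300]
impaired = [1500, 1620, 1700, 1790, 1900]

print(f"AUC = {auc(healthy, impaired):.3f}")
```

An AUC of 0.5 corresponds to chance-level separation and 1.0 to perfect separation. This pairwise form is equivalent to the area under the empirical ROC curve.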

Methodology: Experimental Protocols for VR Assessment Validation

CAVIRE-2 Validation Protocol

The validation study for CAVIRE-2 employed a rigorous methodology to establish both verisimilitude and veridicality [2]. Participants included multi-ethnic Asian adults aged 55-84 years recruited at a public primary care clinic in Singapore. Each participant independently completed both CAVIRE-2 and the Montreal Cognitive Assessment (MoCA). The CAVIRE-2 software presented 13 VR scenarios assessing six cognitive domains: perceptual motor, executive function, complex attention, social cognition, learning and memory, and language [2].

Performance was evaluated based on a matrix of scores and time to complete the VR scenarios. The protocol specifically assessed the system's ability to discriminate between participants identified as cognitively healthy (n=244) and those with cognitive impairment (n=36) by MoCA standards [2]. Statistical analyses included receiver operating characteristic (ROC) curves to determine discriminative ability, intraclass correlation coefficients for test-retest reliability, and Cronbach's alpha for internal consistency [2].
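The two reliability statistics named above can be computed with standard formulas. The sketch below is illustrative only. The source does not say which ICC form was used, so the two-way random-effects, absolute-agreement, single-measure form, ICC(2,1), is an assumption, and the session scores and item scores are hypothetical.

```python
from statistics import mean, variance
from typing import List


def icc_2_1(data: List[List[float]]) -> float:
    """ICC(2,1) from a participants x sessions matrix via two-way ANOVA."""
    n, k = len(data), len(data[0])
    grand = mean(v for row in data for v in row)
    row_means = [mean(row) for row in data]
    col_means = [mean(row[j] for row in data) for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ms_rows = ss_rows / (n - 1)                      # between-participant
    ms_cols = ss_cols / (k - 1)                      # between-session
    ms_err = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


def cronbach_alpha(items: List[List[float]]) -> float:
    """Cronbach's alpha; items[i][j] is participant i's score on item j."""
    k = len(items[0])
    item_vars = [variance([row[j] for row in items]) for j in range(k)]
    total_var = variance([sum(row) for row in items])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical test-retest composite scores (session 1, session 2).
retest = [[1900, 1950], [1700, 1680], [2100, 2080],
          [1850, 1900], [2000, 1990], [1600, 1650]]
# Hypothetical per-scenario scores for five participants on four scenarios.
scenario_scores = [[8, 7, 9, 8], [5, 6, 5, 4], [9, 9, 8, 9],
                   [6, 5, 6, 7], [7, 8, 7, 7]]

print(f"ICC(2,1) = {icc_2_1(retest):.3f}")
print(f"Cronbach's alpha = {cronbach_alpha(scenario_scores):.3f}")
```

Different ICC forms can give noticeably different values on the same data, so a validation report is easier to compare across studies when it names the form used.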

CAVIR Experimental Protocol

The CAVIR validation study employed a case-control design comparing patients with mood or psychosis spectrum disorders against healthy controls [10]. Participants completed the CAVIR test alongside standard neuropsychological tests and were rated for clinical symptoms, functional capacity, and subjective cognition. For the patient group, activities of daily living ability was evaluated with the Assessment of Motor and Process Skills (AMPS), an observational assessment that examines the effectiveness of motor and process skills during performance of ADL tasks [10].

The CAVIR test itself consists of an immersive virtual reality kitchen scenario where participants perform daily-life cognitive tasks. The environment was designed to have high verisimilitude while maintaining experimental control. Performance metrics were correlated with both neuropsychological test scores (establishing veridicality with standard assessments) and AMPS scores (establishing veridicality with real-world functioning) [10].
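The rank-based correlation reported for CAVIR against the neuropsychological battery (rₛ) is a Spearman coefficient, which can be computed as a Pearson correlation on ranks. The following is a minimal sketch with hypothetical values. The variable names are illustrative and nothing here reproduces the study's data.

```python
import math
from typing import List


def average_ranks(values: List[float]) -> List[float]:
    """Rank from 1..n, assigning tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson product-moment correlation."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x: List[float], y: List[float]) -> float:
    """Spearman rank correlation = Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical VR task scores and neuropsychological composite scores.
vr_scores = [12, 15, 9, 20, 17, 11, 14]
neuro_composite = [-0.4, 0.3, -1.1, 0.9, 0.2, 0.1, -0.2]
print(f"r_s = {spearman(vr_scores, neuro_composite):.2f}")
```

Rank-based correlation suits bounded or skewed task scores because it depends only on ordering, not on the spacing between values.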

Essential Research Reagents and Materials for VR Cognitive Assessment

The successful implementation and validation of VR cognitive assessments requires specific hardware, software, and methodological components. The following table details key elements constituting the essential "research reagent solutions" for this field.

Table 3: Essential Research Reagents for VR Cognitive Assessment Studies

| Component | Specification Examples | Research Function |
|---|---|---|
| Immersive VR Hardware | HTC Vive Pro 2 [11]; Oculus Quest [11]; Head-Mounted Displays (HMDs) with first-order Ambisonics (FOA)-tracked binaural playback [9] | Provides immersive visual and auditory stimulation; enables real-time tracking of movement and performance [11] [9] |
| VR Assessment Software | CAVIRE-2 (13 scenario system) [2]; CAVIR (kitchen scenario) [10]; VR-BBT (virtual Box & Block Test) [11] | Presents standardized, controlled cognitive tasks with embedded performance metrics [2] [10] [11] |
| Spatial Audio Technology | First-order Ambisonics (FOA); Higher-order Ambisonics (HOA); Head-Related Transfer Function (HRTF) [9] | Creates spatially accurate sound fields; enhances realism and contextual cues [9] |
| Validation Instruments | Montreal Cognitive Assessment (MoCA) [2]; Assessment of Motor and Process Skills (AMPS) [10]; Fugl-Meyer Assessment (FMA-UE) [11] | Provides criterion standards for establishing veridicality of VR assessments [2] [10] [11] |
| Data Analytics Framework | ROC curve analysis [2]; Intraclass Correlation Coefficients (ICC) [2] [11]; Cronbach's alpha [2] | Quantifies discriminative ability, test-retest reliability, and internal consistency [2] |

The hardware components must balance immersion with practicality. Standalone VR systems (e.g., Oculus Quest) offer advantages in portability and wireless operation, while PC-based systems (e.g., HTC Vive Pro 2) provide higher tracking precision, advanced kinematic analysis capabilities, and superior graphical quality [11]. The choice between these platforms involves trade-offs between accessibility and data quality that must be aligned with research objectives.

Spatial audio technology represents a critical yet often overlooked component for achieving verisimilitude. First-order Ambisonics with head-tracking functions that synchronize spatial audio and visual stimuli have emerged as the prevailing trend to achieve high ecological validity in auditory perception [9]. This technology enables the creation of dynamic sound fields that respond to user movement, significantly enhancing the sense of presence and environmental realism.

Virtual reality cognitive assessment tools represent a significant advancement in balancing experimental control with ecological validity through their unique capacity to address both verisimilitude and veridicality. The experimental evidence demonstrates that properly validated VR systems can achieve strong correlations with standard neuropsychological measures (veridicality) while simultaneously presenting tasks that closely mimic real-world cognitive demands (verisimilitude) [2] [10]. The integration of these two approaches enables VR assessments to capture elements of daily functioning that traditional paper-and-pencil tests miss, particularly for complex instrumental activities of daily living [10].

Future development in this field should continue to optimize both verisimilitude and veridicality while addressing practical implementation challenges. As VR technology becomes more accessible and sophisticated, these assessments have the potential to become standard tools in both clinical practice and research settings, offering unprecedented insights into the relationship between cognitive performance and real-world functioning across diverse populations.

Virtual reality (VR) has emerged as a transformative technology for cognitive assessment, offering potential solutions to limitations of traditional paper-and-pencil neuropsychological tests. This review synthesizes current evidence from systematic reviews and meta-analyses regarding the reliability and validity of VR-based cognitive assessment tools, particularly for identifying mild cognitive impairment (MCI) and early dementia. As pharmacological interventions for neurodegenerative diseases increasingly target early stages, accurate and ecologically valid assessment tools have become crucial for both research and clinical practice [12]. This analysis examines how VR assessment reliability compares with traditional methods across multiple cognitive domains and patient populations.

Quantitative Evidence: Diagnostic Accuracy and Reliability Metrics

Recent comprehensive analyses demonstrate that VR-based assessments achieve favorable reliability and diagnostic accuracy metrics compared to traditional cognitive screening tools.

Table 1: Pooled Diagnostic Accuracy of VR Assessments for Mild Cognitive Impairment

| Metric | VR-Based Assessments | Traditional Tools (Reference) |
|---|---|---|
| Sensitivity | 0.883 (95% CI: 0.854-0.912) [12] | 0.70-0.85 (MoCA/MMSE range) [12] |
| Specificity | 0.887 (95% CI: 0.861-0.913) [12] | 0.70-0.80 (MoCA/MMSE range) [12] |
| Area Under Curve (AUC) | 0.88 (CAVIRE-2) [2] | 0.70-0.85 (MoCA typical range) |
| Test-Retest Reliability (ICC) | 0.89 (CAVIRE-2) [2] | 0.70-0.90 (Varies by traditional test) |
| Internal Consistency (Cronbach's α) | 0.87 (CAVIRE-2) [2] | Varies by assessment |

Table 2: Reliability and Validity Metrics Across VR Assessment Systems

| Assessment System | Test-Retest Reliability (ICC) | Convergent Validity | Ecological Validity Advantages |
|---|---|---|---|
| CAVIRE-2 | 0.89 (95% CI: 0.85-0.92) [2] | Moderate with MoCA/MMSE [2] | Assesses 6 cognitive domains in real-world simulations [2] |
| MentiTree (AD Patients) | ICC not reported; high feasibility (93%) [13] | Not reported; improved visual recognition memory (p=0.034) [13] | Tailored difficulty levels for impaired populations [13] |
| VR with Machine Learning | ICC not reported; sensitivity 0.888, specificity 0.885 [12] | Superior to traditional tools in some studies [12] | Integrates multimodal data (EEG, movement, eye-tracking) [12] |

The consistently high sensitivity and specificity values across multiple VR systems indicate robust diagnostic performance for detecting MCI. The area under the curve (AUC) value of 0.88 for CAVIRE-2 demonstrates excellent discriminative ability between cognitively healthy and impaired individuals [2]. The intraclass correlation coefficient (ICC) of 0.89 for CAVIRE-2 indicates excellent test-retest reliability, suggesting consistent performance across repeated administrations [2].
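Sensitivity and specificity alone do not say how a positive screen should be interpreted in a given clinic, because predictive values also depend on prevalence. The sketch below derives likelihood ratios and predictive values from the pooled estimates in Table 1 (sensitivity 0.883, specificity 0.887). The prevalence values are hypothetical assumptions chosen for illustration, not figures from the source.

```python
from typing import Tuple


def likelihood_ratios(sens: float, spec: float) -> Tuple[float, float]:
    """Return (LR+, LR-) for a binary test."""
    return sens / (1 - spec), (1 - sens) / spec


def predictive_values(sens: float, spec: float, prevalence: float) -> Tuple[float, float]:
    """Return (PPV, NPV) via Bayes' rule for a given prevalence."""
    tp = sens * prevalence
    fp = (1 - spec) * (1 - prevalence)
    fn = (1 - sens) * prevalence
    tn = spec * (1 - prevalence)
    return tp / (tp + fp), tn / (tn + fn)


SENS, SPEC = 0.883, 0.887  # pooled VR estimates cited in Table 1 [12]

lr_pos, lr_neg = likelihood_ratios(SENS, SPEC)
print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.2f}")

# Hypothetical MCI prevalences for two illustrative settings.
for prevalence in (0.10, 0.30):
    ppv, npv = predictive_values(SENS, SPEC, prevalence)
    print(f"prevalence={prevalence:.2f}: PPV={ppv:.3f}, NPV={npv:.3f}")
```

The same test yields a much lower positive predictive value in a low-prevalence screening population than in a memory-clinic population, which matters when choosing where a VR screen is deployed.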

Experimental Protocols and Methodologies

VR Cognitive Assessment Implementation

VR Assessment Experimental Workflow

Typical VR assessment protocols involve comprehensive testing across multiple cognitive domains using simulated real-world environments:

CAVIRE-2 Protocol: This system comprises 14 discrete scenes, including one tutorial session and 13 virtual scenes simulating both basic and instrumental activities of daily living (BADL and IADL) in familiar community settings. The assessment automatically evaluates all six cognitive domains (perceptual motor, executive function, complex attention, social cognition, learning and memory, and language) within a 10-minute administration time. Performance is calculated based on a matrix of scores and completion times across the VR scenarios [2].

MentiTree Protocol for Alzheimer's Patients: This intervention involves 30-minute VR training sessions twice weekly for 9 weeks (total 540 minutes) using Oculus Rift S headsets with hand tracking technology. The software provides alternating indoor and outdoor background content with automatically adjusted difficulty levels based on patient performance. Indoor tasks include making sandwiches, using the bathroom, and tidying up playrooms, while outdoor tasks involve wayfinding, social recognition, and shopping activities [13].

Data Collection Methods: Advanced VR systems incorporate multimodal data capture including traditional performance metrics, movement kinematics, eye-tracking, EEG patterns, and response times. Machine learning algorithms then analyze these complex datasets to identify subtle patterns indicative of MCI that might be missed by traditional assessment methods [12].

Comparative Study Designs

Methodological Approaches for VR Assessment Studies

Robust study designs are critical for establishing VR assessment reliability:

Randomized Controlled Trials: These studies typically compare VR-based cognitive interventions against traditional methods with participants randomly assigned to experimental or control groups. For example, one meta-analysis of 21 RCTs involving 1,051 participants with neuropsychiatric disorders found significant cognitive improvements in VR groups compared to controls (SMD 0.67, 95% CI 0.33-1.01, p<0.001) [14].

Diagnostic Accuracy Studies: These investigations evaluate VR assessments against reference standards (e.g., MoCA, MMSE, or biomarker confirmation). Participants complete both VR and traditional assessments, typically in randomized or counterbalanced order to limit order and practice effects. Raters blinded to the other assessment's result evaluate outcomes to prevent bias [2] [12].

Systematic Reviews and Meta-Analyses: These comprehensive evidence syntheses follow PRISMA guidelines, involve systematic searches across multiple databases, assess study quality using tools like QUADAS-2 or Cochrane ROB-2, and perform pooled analyses of sensitivity, specificity, and effect sizes [12] [14].
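When reading pooled effect sizes such as the SMD cited above, a quick consistency check is to recover the standard error, z statistic, and two-sided p-value from the reported confidence interval. The sketch below assumes a symmetric normal-approximation (Wald) interval, which may not match the exact method used in the cited meta-analysis.

```python
import math
from typing import Tuple


def z_and_p_from_ci(estimate: float, ci_low: float, ci_high: float,
                    z_crit: float = 1.959964) -> Tuple[float, float, float]:
    """Return (SE, z, two-sided p) implied by an estimate and its 95% CI."""
    se = (ci_high - ci_low) / (2 * z_crit)
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return se, z, p


# Values as reported in the text: SMD 0.67, 95% CI 0.33-1.01 [14].
se, z, p = z_and_p_from_ci(0.67, 0.33, 1.01)
print(f"SE = {se:.3f}, z = {z:.2f}, p = {p:.5f}")

# The interval midpoint should equal the point estimate if the CI is symmetric.
print(f"midpoint = {(0.33 + 1.01) / 2:.2f}")
```

Under these assumptions the implied p-value falls below 0.001, in line with the reported result.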

The Researcher's Toolkit: Essential Methodological Components

Table 3: Research Reagent Solutions for VR Cognitive Assessment

| Component | Function | Examples & Specifications |
|---|---|---|
| VR Hardware Platforms | Create immersive environments for cognitive testing | Oculus Rift S, HTC Vive, Pico 4 Enterprise [13] [15] |
| Assessment Software | Administer standardized cognitive tasks in virtual environments | CAVIRE-2, MentiTree, Custom-built scenarios [2] [13] |
| Performance Metrics | Quantify cognitive performance across domains | Score matrices, completion time, error rates, movement kinematics [2] |
| Data Integration Systems | Combine multimodal data streams for comprehensive analysis | EEG integration, eye-tracking, movement sensors, speech analysis [12] |
| Statistical Analysis Tools | Process complex datasets and establish reliability | R, Python with SciPy, MATLAB, specialized ML algorithms [13] [12] |

Successful implementation of VR cognitive assessment requires several key methodological components:

Hardware Specifications: Modern VR assessment systems typically use head-mounted displays (HMDs) with minimum specifications of 2560×1440 resolution and 115-degree field of view for adequate immersion. Systems like Oculus Rift S provide the visual fidelity and motion tracking necessary for precise cognitive assessment [13].

Software Characteristics: Effective VR assessment platforms incorporate real-world simulations that test multiple cognitive domains simultaneously. CAVIRE-2, for instance, includes 13 virtual scenes simulating daily activities in familiar environments, automatically adjusting difficulty based on performance and generating comprehensive score matrices [2].

Data Analytics Infrastructure: Advanced VR systems capture rich datasets including performance scores, completion times, movement efficiency, and error patterns. Machine learning algorithms can process these complex multimodal data to detect subtle cognitive changes with higher sensitivity than traditional methods [12].

Current systematic review evidence indicates that well-designed VR cognitive assessment systems demonstrate comparable or superior reliability to traditional neuropsychological tests while offering significant advantages in ecological validity. The consistently high sensitivity and specificity metrics across multiple VR platforms support their utility as screening tools for mild cognitive impairment. The enhanced ecological validity of VR assessments, achieved through realistic simulations of daily activities, addresses a critical limitation of traditional paper-and-pencil tests.

Future research directions should focus on standardizing VR assessment protocols across platforms, establishing population-specific normative data, and further validating VR tools against biomarker-confirmed diagnoses. As VR technology becomes more accessible and sophisticated, these assessment platforms are poised to play an increasingly important role in both clinical practice and pharmaceutical research, particularly for early detection of neurodegenerative diseases where timely intervention is most beneficial.

Traditional neuropsychological assessments face significant limitations in ecological validity, that is, in their ability to predict real-world cognitive functioning in daily life environments [2] [16]. The six-domain framework established by the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5) provides a comprehensive structure for evaluating cognitive health, encompassing perceptual-motor function, executive function, complex attention, social cognition, learning and memory, and language [2] [16]. Virtual reality (VR) technology has emerged as a tool that bridges the gap between controlled clinical environments and real-world cognitive demands by creating immersive, ecologically valid assessment scenarios [17]. This comparison guide evaluates current VR-based cognitive assessment tools against traditional methods, with a specific focus on their application in reliability testing for research and clinical practice.

The fundamental advantage of VR-based assessment lies in its capacity for verisimilitude—the degree to which cognitive demands presented by tests mirror those encountered in naturalistic environments [2]. Unlike traditional paper-and-pencil tests that adopt a veridicality-based approach with weaker correlations to real-world outcomes, VR environments can simulate both basic and instrumental activities of daily living (BADL and IADL), allowing for more accurate assessment of cognitive capability in real time [2]. This technological advancement addresses a critical limitation in early detection of mild cognitive impairment (MCI), where only approximately 8% of expected cases are diagnosed during primary care assessments [2].

Comparative Analysis of Assessment Modalities

Psychometric Performance Comparison

Table 1: Psychometric Properties of VR-Based vs. Traditional Cognitive Assessments

| Assessment Tool | Sensitivity/Specificity | Test-Retest Reliability | Ecological Validity | Administration Time | Domains Assessed |
|---|---|---|---|---|---|
| CAVIRE-2 VR System [2] | 88.9% sensitivity, 70.5% specificity [2] | ICC = 0.89 (95% CI = 0.85-0.92) [2] | High (verisimilitude approach) [2] | 10 minutes [2] | All six domains [2] |
| MoCA (Traditional) [2] [16] | ~80-90% for MCI detection | 0.92 (original validation) [16] | Limited (veridicality approach) [2] | 10-15 minutes [18] | Limited domains (e.g., weak executive function) [16] |
| MMSE (Traditional) [17] | 63-71% for dementia detection | 0.96 (test-retest) | Limited | 7-10 minutes | Limited domains (e.g., weak executive function) [17] |
| VR-EAL [18] | Comparable to traditional | Not specified | High | Shorter than pen-and-paper | Multiple domains |

Table 2: Domain Coverage Across Assessment Modalities

| Cognitive Domain | CAVIRE-2 VR System | Traditional MoCA | Traditional MMSE | MentiTree VR |
|---|---|---|---|---|
| Complex Attention | Full assessment [2] | Limited assessment | Partial assessment | Partial assessment [13] |
| Executive Function | Full assessment [2] [16] | Limited assessment [16] | Minimal assessment [17] | Partial assessment [13] |
| Learning and Memory | Full assessment [2] | Primary focus | Primary focus | Partial assessment [13] |
| Language | Full assessment [2] | Assessment included | Assessment included | Minimal assessment |
| Perceptual-Motor | Full assessment [2] | Limited assessment | Limited assessment | Partial assessment [13] |
| Social Cognition | Full assessment [2] | Minimal assessment | Not assessed | Not assessed |

Technical Implementation and Feasibility

Table 3: Technical Specifications and Implementation Requirements

| Parameter | CAVIRE-2 System | MentiTree Software | Traditional Assessment | VR-EAL |
|---|---|---|---|---|
| Hardware Requirements | HTC Vive Pro HMD, Leap Motion, Lighthouse sensors [16] | Oculus Rift S [13] | Pen and paper | HMD (unspecified) [18] |
| Software Features | 13 immersive scenarios, automated scoring [2] | 25 indoor/outdoor scenarios, adaptive difficulty [13] | Manual scoring | Everyday activities simulation [18] |
| Operator Dependency | Low (automated) [2] | Moderate | High (trained administrator) | Low |
| Cybersickness Management | Not specified | 93% feasibility (7% dropout) [13] | Not applicable | Minimal cybersickness [18] |
| Session Duration | 10 minutes [2] | 30 minutes (training) [13] | 10-15 minutes [18] | Shorter than traditional [18] |

Experimental Protocols and Methodologies

Validation Study Design for VR Assessment Tools

The validation of VR-based cognitive assessment tools follows rigorous experimental protocols to establish reliability, validity, and clinical utility. The CAVIRE-2 validation study exemplifies a comprehensive approach, recruiting 280 multi-ethnic Asian adults aged 55-84 years from a primary care clinic in Singapore [2]. Participants completed both the CAVIRE-2 assessment and the standard MoCA independently, allowing for direct comparison between the novel VR system and established assessment methods [2]. The study employed a matrix of scores and time to complete 13 VR scenarios to discriminate between cognitively healthy individuals and those with MCI, with classification based on MoCA cut-off scores [2].

Statistical analyses in VR validation studies typically include concurrent validity assessment against established tools like MoCA, test-retest reliability measurement using Intraclass Correlation Coefficient (ICC), internal consistency evaluation with Cronbach's alpha, and discriminative ability analysis through Receiver Operating Characteristic (ROC) curves [2]. For CAVIRE-2, these analyses demonstrated moderate concurrent validity with MoCA, good test-retest reliability (ICC = 0.89), strong internal consistency (Cronbach's alpha = 0.87), and excellent discriminative ability (AUC = 0.88) [2].
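
As a rough illustration of how sensitivity and specificity figures of this kind follow from a 2×2 classification table, the sketch below uses hypothetical counts. They were chosen only so that they reproduce the reported 88.9% and 70.5% for groups of 36 impaired and 244 cognitively normal participants; the counts are not taken from the study [2], and the function name is illustrative.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from 2x2 classification counts."""
    sensitivity = tp / (tp + fn)  # impaired participants correctly flagged
    specificity = tn / (tn + fp)  # normal participants correctly cleared
    return sensitivity, specificity


# Hypothetical counts (assumption): 36 impaired and 244 cognitively normal.
sens, spec = sensitivity_specificity(tp=32, fn=4, tn=172, fp=72)
print(f"sensitivity = {sens:.1%}, specificity = {spec:.1%}")
```

With these assumed counts, 4 of 36 impaired participants would be missed and 72 of 244 unimpaired participants would be flagged, which is the kind of trade-off a screening cut-off has to balance.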

VR Cognitive Training Intervention Protocol

For VR-based cognitive training applications such as MentiTree software, intervention protocols follow a structured approach. A typical study involves participants diagnosed with mild to moderate Alzheimer's disease undergoing VR training sessions for 30 minutes twice a week over 9 weeks (total 540 minutes) [13]. Each session alternates between indoor background content (e.g., making a sandwich, using the bathroom, tidying up) and outdoor background content (e.g., finding directions, shopping, finding the way home) with automatically adjusted difficulty levels based on participant performance [13].

Cognitive assessment occurs pre- and post-intervention using standardized batteries such as the Korean version of the Mini-Mental State Examination-2 (K-MMSE-2), Clinical Dementia Rating (CDR), Global Deterioration Scale (GDS), and Literacy Independent Cognitive Assessment (LICA) [13]. This longitudinal design allows researchers to track cognitive changes attributable to the VR intervention while monitoring feasibility and adverse effects throughout the study period.

Technical Implementation Framework

System Architecture and Workflow

Table 4: Essential Research Reagents and Solutions for VR Cognitive Assessment Studies

| Resource Category | Specific Examples | Function/Purpose | Implementation Considerations |
|---|---|---|---|
| VR Hardware Platforms | HTC Vive Pro, Oculus Rift S [13] [16] | Display immersive environments, track user movements | Resolution, field of view, refresh rate, comfort for elderly users |
| Interaction Technology | Leap Motion hand tracking, 6-DoF controllers [16] [19] | Natural user interaction with virtual objects | Ergonomic design, intuitive interfaces for technologically naive users |
| VR Software Development | Unity game engine, integrated API for voice recognition [16] | Create virtual environments, implement assessment logic | Cross-platform compatibility, rendering performance, accessibility features |
| Validation Instruments | MoCA, MMSE, LICA, CDR, GDS [2] [13] | Establish convergent and concurrent validity | Cultural adaptation, literacy considerations, normative data |
| Cybersickness Assessment | Virtual Reality Neuroscience Questionnaire (VRNQ) [19] | Quantify adverse symptoms and software quality | Session duration limits (55-70 minutes maximum) [19] |
| Data Collection Framework | Automated scoring algorithms, performance matrices [2] [16] | Standardized data capture and analysis | Data security, interoperability with clinical systems |

Domain-Specific Assessment Paradigms in Virtual Environments

The six cognitive domains are assessed in VR through carefully designed scenarios that simulate real-world activities while isolating specific cognitive functions:

- Complex Attention: Assessed through tasks requiring sustained, selective, and divided attention during multi-step activities with environmental distractors [2] [20]

- Executive Function: Evaluated through planning, decision-making, and problem-solving scenarios such as navigating virtual environments or organizing tasks [2] [16]

- Learning and Memory: Measured through recall of virtual object locations, instruction sequences, and route learning in immersive environments [2] [13]

- Language: Assessed through comprehension of audio instructions, virtual object naming, and responsive communication tasks [2]

- Perceptual-Motor Function: Evaluated through object manipulation, wayfinding, and visuospatial construction tasks in three-dimensional space [2] [20]

- Social Cognition: Measured through interpretation of virtual character interactions, emotional recognition, and socially appropriate responses [2]

VR-based cognitive assessment using the six domains framework represents a significant advancement over traditional neuropsychological tests, offering enhanced ecological validity, comprehensive domain coverage, and automated administration. Current evidence demonstrates that systems like CAVIRE-2 show comparable or superior psychometric properties to established tools like MoCA, with the additional benefit of assessing all six cognitive domains simultaneously in a brief administration period [2].

Future research directions should focus on establishing standardized protocols for VR cognitive assessment, developing normative data across diverse populations, enhancing accessibility for users with varying technological proficiency, and integrating biomarker data with behavioral performance metrics [17]. Additionally, longitudinal studies tracking cognitive decline using VR assessments could provide valuable insights into the progression from mild cognitive impairment to dementia, potentially enabling earlier intervention and more sensitive monitoring of therapeutic efficacy in clinical trials [2] [21].

The integration of VR technology into cognitive assessment protocols offers researchers and clinicians a powerful tool for comprehensive neuropsychological profiling that bridges the gap between laboratory measurement and real-world cognitive functioning. As these systems continue to evolve and undergo validation, they hold significant promise for advancing our understanding of cognitive health and impairment across the lifespan.

The integration of virtual reality (VR) into cognitive assessment represents a significant advancement in neuropsychological testing, offering potential solutions to the ecological validity limitations of traditional paper-and-pencil tests [2]. Unlike conventional assessments conducted in controlled environments, VR enables the creation of immersive simulations that closely mirror real-world cognitive challenges. However, the reliability of these tools—their ability to produce consistent, accurate measurements—depends critically on the complex interplay between hardware capabilities and software implementation. For researchers and clinicians employing VR-based cognitive assessment, understanding these technological foundations is essential for evaluating tool reliability and interpreting results accurately within the growing field of digital cognitive neuroscience.

Hardware Components Influencing Reliability

The hardware components of a VR system form the physical interface between the user and the digital environment, directly impacting the consistency and accuracy of measurements.

Display Systems and Visual Fidelity

Near-eye displays present unique challenges for reliability. Because the display sits mere centimeters from the user's eyes, visual imperfections become glaringly obvious and can introduce measurement variability [22]. Key display attributes affecting reliability include:

- Resolution and Pixel Density: Low resolution can create a "screen-door" effect, potentially distracting users and compromising task performance during cognitive assessments [22].

- Refresh Rate: Displays with refresh rates of 90 Hz or higher are generally necessary to minimize latency and reduce the risk of cybersickness, which could otherwise skew performance results [23] [22].

- Field of View (FOV): A restricted FOV may artificially impact performance on visuospatial tasks, reducing the ecological validity of assessments [22].

The Vergence-Accommodation Conflict (VAC) presents a particularly significant challenge in current VR hardware. This conflict occurs when a user's eyes converge on a virtual object at one perceived distance while simultaneously accommodating to focus on the physical display at a fixed distance [22]. This sensory mismatch can cause visual discomfort, eye strain, and potentially affect performance on depth-sensitive tasks, thereby threatening the test-retest reliability of assessments requiring precise depth perception.

Tracking Systems and Input Fidelity

Accurate motion tracking is fundamental for reliably capturing user behavior within VR cognitive assessments.

- Tracking Technologies: Inside-out tracking (where sensors are on the headset itself) and external base stations offer different trade-offs between setup complexity and tracking volume, which can influence the consistency of movement capture [11] [24].

- Controller vs. Hand Tracking: Studies utilize various input methods, from standard controllers [11] [24] to more advanced hand-tracking technology [13]. The choice of input method significantly affects how users interact with virtual objects, influencing the reliability of motor performance measurements.

Software Components Influencing Reliability

Software implementation determines how cognitive tasks are presented, how user interactions are handled, and how performance data is quantified.

Virtual Environment Design

The design of the virtual environment directly impacts the ecological validity of the assessment. Software like CAVIRE-2 creates immersive scenarios simulating both basic and instrumental activities of daily living (BADL and IADL) in familiar settings like local residential areas and community spaces [2]. This high degree of environmental realism aims to bridge the gap between an artificial testing environment and real-world cognitive demands, potentially enhancing the predictive validity of the assessments.

Interaction Logic and Physics Engines

The implementation of how users manipulate virtual objects is a critical software factor. Research on the Virtual Reality Box & Block Test (VR-BBT) demonstrates two distinct approaches:

- Physical Interaction (VR-PI): Virtual objects obey laws of physics, requiring realistic manipulation [11].

- Non-Physical Interaction (VR-N): Virtual hands can pass through objects, reducing unnatural haptic feedback but potentially altering task demands [11].

The choice between these interaction models involves a trade-off between ecological validity and task demands: the two versions produced different performance patterns, although both reached excellent test-retest reliability (ICC = 0.940 and 0.943) [11].

Data Capture and Analysis Algorithms

Reliable VR assessments require software capable of capturing rich, multi-dimensional performance data. The CAVIRE-2 system, for example, automatically assesses performance across six cognitive domains based on a matrix of scores and completion times across 13 VR scenarios [2]. This automated scoring reduces administrator variability—a significant source of error in traditional assessments—thereby enhancing inter-rater reliability.

Comparative Reliability Data Across VR Assessment Systems

Empirical studies across various domains provide quantitative evidence of VR system reliability, summarized in the table below.

Table 1: Comparative Test-Retest Reliability of VR-Based Assessments

| Assessment Tool | Domain | Reliability Metric | Results | Citation |
|---|---|---|---|---|
| CAVIRE-2 | Cognitive Screening (MCI) | Intraclass Correlation Coefficient (ICC) | ICC = 0.89 (95% CI = 0.85–0.92, p < 0.001) | [2] |
| VR Box & Block Test (VR-PI) | Upper Extremity Function | Intraclass Correlation Coefficient (ICC) | ICC = 0.940 | [11] |
| VR Box & Block Test (VR-N) | Upper Extremity Function | Intraclass Correlation Coefficient (ICC) | ICC = 0.943 | [11] |
| VR Drop-Bar Test | Reaction Time | Intraclass Correlation Coefficient (ICC) | ICC = 0.888 | [24] |
| VR Jump and Reach Test | Jumping Ability | Intraclass Correlation Coefficient (ICC) | ICC = 0.886 | [24] |
| VR-SFT (HTC Vive) | Pupillary Response (RAPD) | Intraclass Correlation Coefficient (ICC) | ICC = 0.44 to 0.83 (poor to good) | [23] |

These reliability metrics demonstrate that well-designed VR systems can achieve psychometric properties suitable for research and clinical application. Most ICC values fall in the good-to-excellent range across several domains, indicating that VR assessments can produce stable and consistent measurements over time; the pupillary response measure is the exception, with ICCs as low as 0.44 [23].

Experimental Protocols for Establishing Reliability

Establishing the reliability of VR assessment tools requires rigorous experimental methodologies. The following workflow visualizes a comprehensive protocol for validating a VR-based cognitive assessment tool, synthesized from multiple studies:

Diagram 1: VR Cognitive Assessment Validation Workflow

Participant Recruitment and Grouping

Studies typically employ a cross-sectional design comparing healthy controls to clinically diagnosed populations. For example, research validating the CAVIRE-2 system recruited multi-ethnic Asian adults aged 55–84 years from a primary care clinic, classifying them as cognitively normal (n=244) or cognitively impaired (n=36) based on Montreal Cognitive Assessment (MoCA) scores [2]. This grouping enables the critical assessment of a tool's ability to discriminate between clinical populations.

Assessment Administration Protocol

The core validation process involves:

- VR Assessment: Participants complete the VR assessment (e.g., CAVIRE-2's 13 scenarios assessing six cognitive domains in approximately 10 minutes) [2].

- Gold-Standard Comparison: Participants independently complete established assessments like the MoCA to evaluate convergent validity [2].

- Retest Procedure: A subset of participants repeats the VR assessment after a predetermined interval (ranging from 1-5 weeks in reviewed studies) to establish test-retest reliability [2] [23].

Statistical Analysis Methods

Comprehensive validation requires multiple statistical approaches:

- Reliability Analysis: Intraclass Correlation Coefficient (ICC) calculates test-retest reliability, with values >0.75 indicating good reliability and >0.90 indicating excellent reliability [2] [11]. Cronbach's alpha evaluates internal consistency between different tasks or items within the assessment [2].

- Validity Analysis: Pearson or Spearman correlations determine convergent validity between VR tasks and traditional neuropsychological measures [2] [11]. Receiver Operating Characteristic (ROC) analysis evaluates discriminant validity, quantifying how well the VR tool distinguishes between clinical groups (e.g., calculating Area Under the Curve - AUC) [2].
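
The AUC mentioned above can be read as the probability that a randomly chosen impaired participant scores "worse" than a randomly chosen control. The following minimal sketch computes it by direct pairwise comparison; the completion times are made-up illustrative values, and the function name and the convention that higher values indicate impairment are assumptions rather than part of any cited protocol.

```python
def roc_auc(impaired_scores, control_scores):
    """AUC as the share of (impaired, control) pairs ranked correctly.

    Assumes higher values indicate impairment (e.g., longer completion
    times); ties count as half a correct ranking.
    """
    correct = 0.0
    for impaired in impaired_scores:
        for control in control_scores:
            if impaired > control:
                correct += 1.0
            elif impaired == control:
                correct += 0.5
    return correct / (len(impaired_scores) * len(control_scores))


# Hypothetical scenario completion times in seconds (illustrative only).
impaired_times = [95, 120, 88, 130]
control_times = [70, 85, 90, 60, 75]
auc = roc_auc(impaired_times, control_times)
print(f"AUC = {auc:.2f}")
```

An AUC of 0.5 corresponds to chance-level discrimination and 1.0 to perfect separation of the two groups.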

The Researcher's Toolkit: Essential Components for VR Reliability Testing

Table 2: Essential Research Reagents and Materials for VR Reliability Testing

| Component | Specification Examples | Research Function |
|---|---|---|
| VR Head-Mounted Display (HMD) | HTC Vive Pro Eye [23], FOVE 0 [23], Oculus Rift S [13] | Presents standardized visual stimuli; often includes integrated eye-tracking for advanced metrics. |
| Tracking System | Base stations (e.g., HTC Vive), inside-out tracking (e.g., Oculus) [11] [24] | Captures user movement and position within the virtual environment for kinematic analysis. |
| Input Devices | Motion controllers, data gloves, hand-tracking sensors [11] [13] | Translates user actions into virtual interactions; choice affects motor task reliability. |
| VR Development Engine | Unreal Engine [23], Unity | Creates and renders complex, interactive 3D environments for cognitive tasks. |
| Performance Data Logging | Custom software (e.g., Python scripts [23]) | Records multi-dimensional outcomes (response time, errors, kinematic paths) for analysis. |
| Traditional Assessment Tools | Montreal Cognitive Assessment (MoCA) [2], Box & Block Test (BBT) [11] | Serves as gold-standard for establishing convergent and criterion validity of the VR tool. |
| Statistical Analysis Software | Python (Pingouin library) [23], R, SPSS | Calculates reliability coefficients (ICC), validity correlations, and other psychometrics. |

The reliability of VR-based cognitive assessment tools is not determined by a single technological element but emerges from the complex integration of hardware and software components. Display quality, tracking accuracy, interaction design, and data processing algorithms collectively establish the foundation for consistent, valid measurements. Current evidence indicates that when these components are carefully engineered and validated, VR systems can achieve excellent reliability metrics comparable to—and in some cases surpassing—traditional assessment methods. For researchers in this field, rigorous attention to both technological implementation and psychometric validation is paramount. Future developments should focus on standardizing reliability testing protocols across platforms and addressing persistent challenges such as the vergence-accommodation conflict to further enhance the role of VR in cognitive assessment.

Methodological Rigor: Protocols and Metrics for Establishing VR Assessment Reliability

In the rapidly advancing field of virtual reality (VR) cognitive assessment, establishing robust psychometric properties of measurement tools is paramount for both research credibility and clinical application. As VR technologies increasingly transform cognitive screening and monitoring in healthcare, the validation of these tools requires rigorous reliability testing. This guide provides an objective comparison of three core reliability metrics—Intraclass Correlation Coefficients (ICC), Cronbach's Alpha, and Test-Retest Analysis—within the context of VR-based cognitive assessment tools. These metrics form the foundation for determining whether novel assessment systems can produce consistent, reproducible results that researchers and clinicians can trust for making critical decisions in cognitive health evaluation and pharmaceutical intervention studies.

Theoretical Foundations of Reliability Metrics

Intraclass Correlation Coefficient (ICC)

The Intraclass Correlation Coefficient (ICC) is a versatile reliability index that evaluates the extent to which measurements can be replicated, reflecting both degree of correlation and agreement between measurements. Mathematically, reliability represents a ratio of true variance over true variance plus error variance [25]. Unlike simple correlation coefficients that only measure linear relationships, ICC accounts for systematic differences in measurements, making it particularly valuable for assessing rater reliability and measurement consistency over time.
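
Written out, the variance ratio described above takes the following form. The symbols are notation introduced here for illustration: the first variance term is the true (between-subject) variance and the second is the error variance.

```latex
\mathrm{ICC} = \frac{\sigma^2_{\text{true}}}{\sigma^2_{\text{true}} + \sigma^2_{\text{error}}}
```

As the error variance approaches zero the ratio approaches 1, and when error variance dominates it approaches 0.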

ICC encompasses multiple forms—traditionally categorized into 10 distinct types based on "Model" (1-way random effects, 2-way random effects, or 2-way mixed effects), "Type" (single rater/measurement or mean of k raters/measurements), and "Definition" (consistency or absolute agreement) [25]. This diversity allows researchers to select the most appropriate form based on their specific experimental design and intended inferences.
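
To make the distinction between forms concrete, the sketch below computes four two-way ICC forms from the mean squares of a subjects-by-sessions table, using the standard mean-square expressions (single versus average measurement, consistency versus absolute agreement). The data are hypothetical test-retest scores and the function names are illustrative; two-way random and two-way mixed models share these formulas and differ in how the result is generalized.

```python
def mean_squares(scores):
    """Two-way ANOVA mean squares for an n-subjects x k-sessions table."""
    n, k = len(scores), len(scores[0])
    grand = sum(map(sum, scores)) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # sessions
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_error / ((n - 1) * (k - 1))
    return msr, msc, mse, n, k


def icc_forms(scores):
    """Consistency (C) and absolute-agreement (A) ICCs, single and average."""
    msr, msc, mse, n, k = mean_squares(scores)
    return {
        "ICC(C,1)": (msr - mse) / (msr + (k - 1) * mse),
        "ICC(A,1)": (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n),
        "ICC(C,k)": (msr - mse) / msr,
        "ICC(A,k)": (msr - mse) / (msr + (msc - mse) / n),
    }


# Hypothetical scores: five participants, two sessions (illustrative only).
test_retest = [[10, 12], [14, 15], [18, 21], [22, 22], [26, 30]]
forms = icc_forms(test_retest)
for name, value in forms.items():
    print(f"{name} = {value:.3f}")
```

In this made-up data set the second session scores are systematically higher, so the absolute-agreement forms come out lower than the consistency forms; that gap is exactly what the "Definition" choice controls.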

Cronbach's Alpha

Cronbach's alpha (α) serves as a measure of internal consistency reliability, quantifying how closely related a set of items are as a group within a multi-item scale or assessment tool [26]. The calculation involves dividing the average shared variance (covariance) by the average total variance, essentially measuring whether items intended to measure the same underlying construct produce similar results [26]. Cronbach's alpha is equivalent to the average of all possible split-half reliabilities and is particularly sensitive to the number of items in a scale [27].
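
For reference, the usual textbook expressions for alpha are given below. The notation is introduced here and is not taken from the cited sources: k is the number of items, each item has its own variance, the total score X is the sum of the items, and the barred terms are the average item variance and the average inter-item covariance.

```latex
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k}\sigma^2_{Y_i}}{\sigma^2_X}\right)
       = \frac{k\,\bar{c}}{\bar{v} + (k-1)\,\bar{c}}
```

The second form makes the dependence on the number of items explicit: for a fixed average covariance, alpha rises as k grows.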

Test-Retest Reliability

Test-retest reliability assesses the consistency of results when the same assessment tool is administered to the same individuals on two separate occasions under identical conditions [26] [28]. This metric evaluates the stability of a measurement instrument over time, with the time interval between administrations carefully selected based on the stability of the construct being measured—shorter intervals for dynamic constructs and longer intervals for stable traits [26]. Unlike Pearson's correlation coefficient, which only measures linear relationships, appropriate test-retest analysis should account for both correlation and agreement between measurements.
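
The limitation of Pearson's correlation can be shown with a small, hypothetical example in which every participant scores exactly 5 points higher at retest, as might happen with a practice effect. The correlation is perfect even though no score is reproduced. The mean between-session difference used here is a simple agreement check added for illustration, not a method prescribed by the cited sources.

```python
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / (ss_x * ss_y) ** 0.5


# Hypothetical scores: the retest is shifted upward by a constant 5 points.
session_1 = [10, 14, 18, 22, 26]
session_2 = [score + 5 for score in session_1]

r = pearson_r(session_1, session_2)
bias = mean([b - a for a, b in zip(session_1, session_2)])
print(f"Pearson r = {r:.2f}, mean retest difference = {bias}")
```

An absolute-agreement ICC would penalize this systematic shift, whereas Pearson's r ignores it.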

Comparative Analysis of Reliability Metrics

Table 1: Direct Comparison of Core Reliability Metrics

| Metric | Primary Application | Statistical Interpretation | Key Strengths | Common Limitations |
|---|---|---|---|---|
| ICC | Test-retest, interrater, and intrarater reliability | Ranges 0-1; <0.5=poor, 0.5-0.75=moderate, 0.75-0.9=good, >0.9=excellent [25] | Accounts for both correlation and agreement; multiple forms for different designs | Complex model selection; requires understanding of variance components |
| Cronbach's Alpha | Internal consistency of multi-item scales | Ranges 0-1; <0.5=unacceptable, 0.5-0.6=poor, 0.6-0.7=questionable, 0.7-0.8=acceptable, 0.8-0.9=good, >0.9=excellent [26] | Easy to compute; reflects item interrelatedness | Overly sensitive to number of items; assumes essentially tau-equivalent items |
| Test-Retest Analysis | Temporal stability of measurements | Typically reported as ICC with confidence intervals; higher values indicate greater stability | Assesses real-world stability over time; intuitive interpretation | Susceptible to practice effects; optimal time interval varies by construct |

Mathematical and Conceptual Relationships

A critical understanding for researchers is that Cronbach's alpha is functionally equivalent to a specific form of ICC—the average measures consistency ICC or ICC(C,k) [29]. When applied to the same data, these two metrics will produce identical values, revealing that alpha is essentially a special case of the broader ICC framework. This relationship underscores the importance of selecting the appropriate reliability statistic based on the specific measurement context rather than defaulting to traditional choices.
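
The equivalence can be checked numerically. The sketch below computes alpha from item and total-score variances and ICC(C,k) from two-way ANOVA mean squares on the same made-up item scores; the data and function names are illustrative assumptions.

```python
from statistics import variance


def cronbach_alpha(items):
    """Alpha from sample variances; rows are participants, columns items."""
    k = len(items[0])
    item_vars = [variance([row[j] for row in items]) for j in range(k)]
    total_var = variance([sum(row) for row in items])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def icc_consistency_average(items):
    """ICC(C,k) = (MSR - MSE) / MSR from two-way ANOVA mean squares."""
    n, k = len(items), len(items[0])
    grand = sum(map(sum, items)) / (n * k)
    row_means = [sum(row) / k for row in items]
    col_means = [sum(row[j] for row in items) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in items for x in row)
    msr = ss_rows / (n - 1)
    mse = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (msr - mse) / msr


# Hypothetical ratings: six participants, three items (illustrative only).
item_scores = [[3, 4, 3], [5, 5, 4], [2, 3, 3], [4, 4, 5], [5, 4, 5], [1, 2, 2]]
alpha = cronbach_alpha(item_scores)
icc_ck = icc_consistency_average(item_scores)
print(f"alpha = {alpha:.4f}, ICC(C,k) = {icc_ck:.4f}")
```

The two printed values agree up to floating-point rounding, because both expressions reduce to the same ratio of item and total-score variances.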

The mathematical formulation of ICC as a ratio of variances (true variance divided by true variance plus error variance) provides a conceptual framework that applies across different reliability types [25]. This variance partitioning approach enables researchers to quantify and distinguish between different sources of measurement error, facilitating more targeted improvements to assessment protocols.

Application in Virtual Reality Cognitive Assessment

Case Study: CAVIRE-2 VR Cognitive Assessment System

The "Cognitive Assessment using VIrtual REality" (CAVIRE-2) software provides a compelling case study for applying reliability metrics to VR-based cognitive assessment tools. This fully immersive VR system was designed to assess six cognitive domains through 13 scenarios simulating basic and instrumental activities of daily living [2]. Validation studies demonstrated promising reliability metrics that support its potential as a cognitive assessment tool.

Table 2: Reliability Metrics for VR Cognitive Assessment Tools

| Assessment Tool | Reliability Metric | Reported Value | Interpretation | Study Context |
|---|---|---|---|---|
| CAVIRE-2 VR System | Test-retest ICC | 0.89 (95% CI: 0.85-0.92) [2] | Good reliability | Cognitive assessment in adults aged 55-84 |
| CAVIRE-2 VR System | Cronbach's Alpha | 0.87 [2] | Good internal consistency | Multi-domain cognitive assessment |
| Immersive VR Perceptual-Motor Test | Test-retest ICC | 0.618-0.922 (transformed measures) [30] | Moderate to excellent reliability | Healthy young adults over 3 consecutive days |
| Immersive VR Perceptual-Motor Test | Response Time ICC | 0.851 [30] | Good reliability | Composite metric incorporating duration and accuracy |

Experimental Protocols for VR Reliability Testing

The methodology employed in validating the CAVIRE-2 system illustrates rigorous reliability assessment for VR cognitive tools. Researchers recruited multi-ethnic Asian adults aged 55-84 years from a primary care setting, administering both the CAVIRE-2 and the standard Montreal Cognitive Assessment (MoCA) to each participant independently [2]. The sample included 280 participants, with 244 classified as cognitively normal and 36 as cognitively impaired based on MoCA scores, enabling comparisons across cognitive status groups.

For test-retest reliability, the study implemented appropriate time intervals between administrations to minimize practice effects while ensuring the construct being measured remained stable. The resulting ICC value of 0.89 indicates good temporal stability for the VR assessment tool, supporting its potential for longitudinal monitoring of cognitive function [2].

Similarly, a study of immersive VR measures of perceptual-motor performance demonstrated methodological rigor by testing 19 healthy young adults over three consecutive days, analyzing response time, perceptual latency, and intra-individual variability across 40 trials [30]. The moderate to excellent ICC values (ranging from 0.618 to 0.922) across multiple measures support the test-retest reliability of VR for capturing perceptual-motor responses.

Interpretation Guidelines and Standards

Clinical Interpretation of ICC Values

When interpreting ICC values in clinical research contexts, established guidelines suggest that values less than 0.5 indicate poor reliability, values between 0.5 and 0.75 represent moderate reliability, values between 0.75 and 0.9 indicate good reliability, and values greater than 0.90 demonstrate excellent reliability [25]. These thresholds provide practical benchmarks for researchers evaluating VR assessment tools.
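
These thresholds are simple enough to encode as a helper, sketched below. The function name is illustrative, and the handling of values that fall exactly on a boundary (0.5, 0.75, 0.9) is an assumption, since the guidelines do not specify it.

```python
def interpret_icc(icc):
    """Map an ICC value to the qualitative labels cited above [25]."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.9:  # boundary handling is an assumption
        return "good"
    return "excellent"


# Point estimate and 95% CI bounds reported for CAVIRE-2 [2].
for value in (0.85, 0.89, 0.92):
    print(f"ICC = {value:.2f}: {interpret_icc(value)}")
```

Applying the same labels to both confidence bounds, not only the point estimate, shows whether an interval straddles two categories.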

However, interpretation should also consider the 95% confidence interval around ICC point estimates. For example, an ICC value of 0.02 with a standard error of 0.01 implies 95% confidence bounds of 0.00 to 0.04, indicating substantial uncertainty that should factor into study design decisions, particularly for sample size calculations [31].
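
The arithmetic behind that example can be reproduced with a normal-approximation interval (estimate ± 1.96 × standard error). This is a simplifying assumption made for illustration; confidence intervals for ICCs are often derived by other methods, and the function name is hypothetical.

```python
def normal_approx_interval(estimate, standard_error, z=1.96):
    """Approximate 95% interval: estimate +/- z * standard error."""
    half_width = z * standard_error
    return estimate - half_width, estimate + half_width


lower, upper = normal_approx_interval(0.02, 0.01)
print(f"95% CI: {lower:.2f} to {upper:.2f}")
```

The interval is roughly twice as wide as the point estimate itself, which is why the estimate alone is a weak basis for sample size planning.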

Internal Consistency Standards

For Cronbach's alpha, conventional interpretation thresholds categorize values below 0.50 as unacceptable, 0.51 to 0.60 as poor, 0.61 to 0.70 as questionable, 0.71 to 0.80 as acceptable, 0.81 to 0.90 as good, and 0.91 to 0.95 as excellent [26]. Notably, values exceeding 0.95 may indicate item redundancy rather than superior reliability, potentially suggesting unnecessary duplication in assessment content [26].
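
A comparable helper for alpha, including the redundancy flag for values above 0.95, might look like the sketch below. The function name and the treatment of values falling exactly on a threshold are assumptions.

```python
def interpret_alpha(alpha):
    """Map Cronbach's alpha to the conventional labels cited above [26]."""
    if alpha > 0.95:
        return "possible item redundancy"
    if alpha > 0.90:
        return "excellent"
    if alpha > 0.80:
        return "good"
    if alpha > 0.70:
        return "acceptable"
    if alpha > 0.60:
        return "questionable"
    if alpha > 0.50:  # boundary handling is an assumption
        return "poor"
    return "unacceptable"


print(interpret_alpha(0.87))  # value reported for CAVIRE-2 [2]
```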

Methodological Considerations for VR Assessment Research

Selection of ICC Forms

Choosing the appropriate ICC form requires careful consideration of research design and intended inferences. The selection process can be guided by four key questions:

- Do we have the same set of raters for all subjects?

- Do we have a sample of raters randomly selected from a larger population or a specific sample of raters?

- Are we interested in the reliability of a single rater or the mean value of multiple raters?

- Do we concern ourselves with consistency or absolute agreement? [25]

For most VR cognitive assessment studies where the focus is on the measurement tool itself rather than rater variability, two-way random effects models often apply when generalizing to similar populations, while two-way mixed effects models may be appropriate when the specific measurement conditions are fixed.
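
The four questions can be read as a small decision procedure, sketched below. The function and argument names are illustrative; the rule that a one-way model is always reported as absolute agreement follows the usual convention for that model, in which rater effects cannot be separated from error.

```python
def select_icc_form(same_raters, raters_random_sample, use_mean,
                    absolute_agreement):
    """Return (model, type, definition) from the four design questions."""
    if not same_raters:
        # Different raters per subject: only a one-way model is estimable.
        model = "one-way random effects"
        definition = "absolute agreement"
    else:
        model = ("two-way random effects" if raters_random_sample
                 else "two-way mixed effects")
        definition = ("absolute agreement" if absolute_agreement
                      else "consistency")
    measure_type = "mean of k measurements" if use_mean else "single measurement"
    return model, measure_type, definition


# Test-retest of one fixed VR protocol, single scores, agreement of values.
print(select_icc_form(same_raters=True, raters_random_sample=False,
                      use_mean=False, absolute_agreement=True))
```

In a test-retest design the "raters" are the repeated sessions, so a fixed retest schedule on the same system maps to the two-way mixed case shown in the usage line.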

Optimizing Test-Retest Reliability

Several methodological factors significantly influence test-retest reliability estimates in VR assessment contexts:

Time Interval Selection: Research suggests an optimal window of two weeks to two months between test administrations for cognitive measures, balancing the need to minimize practice effects while ensuring the underlying construct remains stable [28].

Administration Consistency: Standardizing testing conditions, instructions, equipment, and environment across administrations is crucial for isolating measurement consistency from extraneous influences [28].

Sample Size Considerations: Larger samples yield more precise reliability estimates with narrower confidence intervals, and many reliability studies include hundreds of participants to obtain stable estimates [2].

Practice Effects Management: Incorporating practice trials and familiarization sessions can mitigate learning effects that might artificially inflate or deflate reliability estimates.
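Once two or more administrations have been collected under these conditions, a test-retest ICC can be estimated from the participant-by-session score matrix. The sketch below computes the two-way random effects, absolute agreement, single measurement form (often written ICC(2,1)) from ANOVA mean squares. The choice of this form, the function name, and the toy scores are assumptions for illustration; a dedicated statistics package would normally be used in practice, since it also reports confidence intervals.

```python
def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    scores: one row per participant; each row holds that participant's
    score at every session (sessions play the role of raters).
    """
    n = len(scores)       # number of participants
    k = len(scores[0])    # number of sessions
    grand = sum(map(sum, scores)) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(col) / n for col in zip(*scores)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_err = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


# Hypothetical scores for five participants at test and retest.
sessions = [[1810, 1835], [1920, 1900], [1750, 1790], [2010, 2005], [1880, 1860]]
print(round(icc_2_1(sessions), 3))
```

Because this form penalizes systematic shifts between sessions, an unmanaged practice effect lowers the estimate even when participants keep the same rank order.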

The Researcher's Toolkit for VR Reliability Studies

Table 3: Essential Methodological Components for VR Reliability Research

| Component | Function | Implementation Example |
|---|---|---|
| Sample Size Planning | Ensure adequate statistical power for reliability estimation | 280 participants for CAVIRE-2 validation [2] |
| Reference Standard | Establish convergent validity with existing measures | Montreal Cognitive Assessment (MoCA) comparison [2] |
| Time Interval Protocol | Minimize practice effects while measuring stable constructs | 2-week to 2-month gap between administrations [28] |
| Statistical Analysis Plan | Appropriate reliability coefficients and confidence intervals | ICC with 95% confidence intervals, Cronbach's alpha [2] |
| Standardized Administration | Control for extraneous variance in testing conditions | Identical hardware, instructions, and testing environment [28] |

The validation of virtual reality cognitive assessment tools requires meticulous attention to reliability testing using appropriate statistical metrics. Intraclass correlation coefficients (ICCs) offer the most flexible framework for evaluating test-retest, interrater, and intrarater reliability, while Cronbach's alpha provides specific information about internal consistency for multi-item scales. Test-retest analysis remains fundamental for establishing the temporal stability of measurements, which is particularly important for longitudinal studies of cognitive change.

Current research demonstrates that well-designed VR assessment systems can achieve good to excellent reliability across multiple cognitive domains, with ICC values exceeding 0.85 and Cronbach's alpha above 0.80 in rigorous validation studies [2]. These promising results support the growing integration of VR technologies into cognitive assessment batteries, though they also highlight the necessity for comprehensive reliability testing following established methodological standards.

As VR applications continue to expand within clinical research and pharmaceutical development, adherence to robust reliability assessment protocols will ensure that these innovative tools generate scientifically valid, reproducible data capable of detecting subtle cognitive changes in response to interventions or disease progression.

In the evolving field of cognitive assessment, immersive virtual reality (VR) technologies present a paradigm shift from traditional neuropsychological testing. These tools offer enhanced ecological validity by reproducing naturalistic environments that mirror real-world cognitive challenges [2]. Unlike conventional paper-and-pencil or computerized tests that rely on two-dimensional, controlled stimuli, VR assessments create embodied testing experiences within three-dimensional, 360-degree environments [32]. This technological advancement, however, introduces new complexities in administration protocols that must be addressed to ensure assessment reliability and validity.

The fundamental premise of standardized testing hinges on consistency of administration procedures, environmental conditions, and technical specifications. For VR-based assessments, standardization extends beyond traditional concerns to encompass technical immersion parameters, hardware configurations, and interaction methodologies that collectively influence cognitive performance metrics [33] [32]. Research indicates that the level of immersion itself serves as a significant moderator of therapeutic outcomes in cognitive interventions, necessitating optimized sensory integration protocols that balance ecological validity with individual tolerance levels [33]. This comparison guide examines current VR assessment platforms and their standardized administration protocols, providing researchers with evidence-based frameworks for implementing consistent immersive testing environments.

Comparative Analysis of VR Cognitive Assessment Platforms

Table 1: Comparison of Standardized VR Cognitive Assessment Platforms

| Platform/System | Cognitive Domains Assessed | Standardization Features | Administration Time | Reliability Metrics | Validity Evidence |
|---|---|---|---|---|---|
| CAVIRE-2 [2] | All six DSM-5 domains (perceptual-motor, executive, complex attention, social cognition, learning/memory, language) | Fully automated administration; 13 standardized scenarios simulating BADL/IADL; consistent audio/text instructions | 10 minutes | ICC = 0.89 (test-retest); Cronbach's α = 0.87 (internal consistency) | AUC = 0.88 vs. MoCA; 88.9% sensitivity, 70.5% specificity at cut-off <1850 |
| VR-BBT [11] | Upper extremity function; manual dexterity | Standardized virtual dimensions (53.7 cm × 25.4 cm × 8.5 cm); fixed 60-second assessment; consistent haptic feedback | 5-10 minutes (plus practice) | ICC = 0.940 (VR-PI), 0.943 (VR-N) | r = 0.841 with conventional BBT; r = 0.657-0.839 with FMA-UE |
| Virtuleap Enhance [34] | Cognitive flexibility, response inhibition, visual short-term memory, executive function | Fixed task sequence (React, Memory Wall, Magic Deck, Odd Egg); consistent session intervals (≥1 month) | 25-30 minutes per session | Significant change over time detected (React, Odd Egg tasks) | Reliable correlation with MoCA and Stroop in CRCI patients |
| VR Neuropsychological Assessment Battery [32] | Working memory, psychomotor skills | Hardware standardization (HTC Vive Pro Eye); ergonomic interaction guidelines; standardized audio prompts | Variable by battery | Moderate-to-strong convergent validity with PC versions (r values not specified) | Higher user experience ratings vs. PC-based assessments |

Table 2: Technical Implementation Specifications Across VR Assessment Systems

| System Component | CAVIRE-2 | VR-BBT | Virtuleap Enhance | VR Assessment Battery |
|---|---|---|---|---|
| Hardware Platform | Not specified | HTC Vive Pro 2 with controllers & base stations | Not specified | HTC Vive Pro Eye with eye tracking |
| Interaction Method | Virtual object manipulation | Controller-based grasping with trigger button | Controller-based interactions | Naturalistic hand controllers |
| Environment Type | Fully immersive VR with realistic local settings | Virtual BBT replication | Immersive multisensory environment | Customizable virtual environments |
| Feedback Mechanisms | Not specified | Vibrotactile stimulus on grasp | Not specified | Spatial audio with SteamAudio plugin |
| Software Foundation | Not specified | Unity development | Not specified | Unity 2019.3.f1 with SteamVR SDK |

Experimental Protocols and Methodologies

CAVIRE-2 Validation Protocol

The CAVIRE-2 system was validated through a rigorous methodological framework designed to ensure standardization across participants and sessions [2]. The protocol implementation followed these key stages:

Participant Recruitment: Multi-ethnic Asian adults aged 55-84 years were recruited from a public primary care clinic in Singapore, representing the target population for cognitive screening. The final cohort included 280 participants, with 36 identified as cognitively impaired by MoCA criteria.

Assessment Administration: All participants underwent both CAVIRE-2 and traditional MoCA assessments administered independently to prevent order effects. The CAVIRE-2 system presented 13 standardized scenarios simulating basic and instrumental activities of daily living (BADL and IADL) in locally familiar environments.

Standardization Controls: The fully automated administration eliminated administrator variability through consistent audio and text instructions, uniform virtual environment parameters, and automated scoring algorithms. The residential and shophouse environments were modeled with high realism to bridge the gap between unfamiliar virtual environments and participants' real-world experiences.

Validation Metrics: Researchers assessed concurrent validity with MoCA, convergent validity with MMSE, test-retest reliability through ICC, internal consistency via Cronbach's alpha, and discriminative ability using ROC curve analysis with AUC calculation.

This standardized protocol demonstrated that CAVIRE-2 could distinguish cognitive status with high sensitivity (88.9%) and moderate specificity (70.5%) at the optimal cut-off score of <1850 [2].
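To make the cut-off logic concrete, the sketch below computes sensitivity and specificity for a "score below cut-off means screen positive" rule, treating the reference classification (here, MoCA status) as ground truth. The function name and the six toy records are hypothetical and are not CAVIRE-2 data; only the 1850 threshold and the direction of the rule come from the text.

```python
def screen_metrics(scores, impaired, cutoff=1850):
    """Sensitivity and specificity when scores below `cutoff` screen positive.

    scores: total scores from the VR assessment.
    impaired: reference-standard labels (True = cognitively impaired).
    """
    flagged = [s < cutoff for s in scores]
    tp = sum(f and y for f, y in zip(flagged, impaired))
    fn = sum((not f) and y for f, y in zip(flagged, impaired))
    tn = sum((not f) and (not y) for f, y in zip(flagged, impaired))
    fp = sum(f and (not y) for f, y in zip(flagged, impaired))
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical records for illustration only.
scores = [1700, 1800, 1900, 1950, 2000, 1820]
impaired = [True, True, True, False, False, False]
sensitivity, specificity = screen_metrics(scores, impaired)
print(f"sensitivity={sensitivity:.2f}, specificity={specificity:.2f}")
```

Sweeping `cutoff` across the observed score range and plotting sensitivity against 1 − specificity yields the ROC curve from which an AUC is derived.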

VR-BBT Implementation Protocol

The Virtual Reality Box & Block Test implementation followed a detailed standardization protocol to ensure consistency across healthy adults and stroke patients [11]:

Hardware Configuration: The system used an HTC Vive Pro 2 head-mounted display with two controllers and two base stations to ensure precise tracking. The virtual environment replicated conventional BBT dimensions (53.7 cm × 25.4 cm × 8.5 cm) with a central partition and 150 virtual blocks measuring 2.5 cm per side.

Administration Sequence: Each session followed a fixed structure: (1) demonstration mode with standardized auditory and text instructions; (2) adjustable practice mode (0-300 seconds) to accommodate participant familiarity; and (3) actual assessment mode fixed at 60 seconds with identical task instructions across all participants.

Interaction Standardization: Two versions were developed with consistent interaction parameters: VR-PI (physical interaction adhering to virtual physics laws) and VR-N (non-physical interaction where hands pass through blocks). For both versions, successful block transfer required maintaining grip until the fingertip clearly crossed the partition.

Data Collection: The system automatically recorded the number of transferred blocks, with real-time display of remaining time. Additional kinematic parameters (movement speed, distance) were captured for comprehensive motor function assessment.

This meticulous protocol resulted in strong reliability (ICC = 0.940-0.943) and validity (r = 0.841 with conventional BBT) across both healthy and stroke-affected populations [11].
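One way to keep such a session structure identical across participants is to encode the fixed parameters once and validate anything adjustable. The sketch below does this for the timings and interaction versions described above. The class name `BbtSession`, its fields, and the constants are hypothetical and do not reflect the actual VR-BBT software.

```python
from dataclasses import dataclass

ASSESSMENT_SECONDS = 60        # fixed assessment duration from the protocol
MAX_PRACTICE_SECONDS = 300     # upper bound of the adjustable practice mode
VALID_MODES = ("VR-PI", "VR-N")


@dataclass(frozen=True)
class BbtSession:
    """Hypothetical per-participant session configuration."""
    mode: str
    practice_seconds: int = 0

    def __post_init__(self):
        if self.mode not in VALID_MODES:
            raise ValueError(f"mode must be one of {VALID_MODES}")
        if not 0 <= self.practice_seconds <= MAX_PRACTICE_SECONDS:
            raise ValueError("practice_seconds must be between 0 and 300")

    def phases(self):
        """Ordered (phase, duration in seconds) pairs; None means untimed."""
        return [
            ("demonstration", None),
            ("practice", self.practice_seconds),
            ("assessment", ASSESSMENT_SECONDS),
        ]


session = BbtSession(mode="VR-PI", practice_seconds=120)
for name, seconds in session.phases():
    print(name, seconds)
```

Only the practice duration varies between participants; the assessment phase cannot be changed without editing the constant, which mirrors the fixed 60-second design.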

Comparative VR vs. PC Assessment Protocol

A rigorous comparative study established standardization protocols for evaluating VR against traditional computerized assessments [32]:

Participant Selection: Sixty-six participants (38 women) aged 18-45 years with 12-25 years of education were recruited through standardized channels. The protocol included comprehensive assessment of IT skills, gaming experience, and computing proficiency.

Counterbalanced Administration: All participants performed the Digit Span Task, Corsi Block Task, and Deary-Liewald Reaction Time Task in both VR-based and PC-based formats with counterbalanced order to control for learning effects.

Hardware Standardization: VR assessments used an HTC Vive Pro Eye headset with built-in eye tracking, hardware that exceeded the minimum specifications recommended for reducing cybersickness. Computerized tasks were hosted on PsyToolkit with a consistent hardware configuration (Windows 11 Pro, Intel Core i9 CPU, 128 GB RAM, GeForce GTX 1060 Ti graphics).

Ergonomic Implementation: VR software followed ISO ergonomic guidelines and best practices for neuropsychological assessment. Interactions utilized SteamVR SDK for naturalistic hand controller use, while PC versions employed traditional keyboard/mouse interfaces.

This standardized protocol revealed that VR assessments showed minimal influence from age and computing experience compared to PC versions, which were significantly affected by these demographic factors [32].
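Counterbalancing of this kind can be sketched as a seeded random assignment that splits participants evenly between the two format orders. The source does not describe how the order was assigned, so the shuffle-then-alternate scheme, the function name, and the seed below are all assumptions.

```python
import random


def counterbalance(participant_ids, seed=0):
    """Assign each participant a format order, balanced across the sample.

    Participants are shuffled with a fixed seed (for reproducibility) and
    then alternated between VR-first and PC-first.
    """
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)
    orders = (("VR", "PC"), ("PC", "VR"))
    return {pid: orders[i % 2] for i, pid in enumerate(ids)}


plan = counterbalance(range(1, 67))  # 66 participants, as in the study
vr_first = sum(1 for order in plan.values() if order[0] == "VR")
print(vr_first, len(plan) - vr_first)  # prints "33 33"
```

With an even sample size the two orders are exactly balanced, so any learning effect from the first format is spread equally over VR and PC scores.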

Visualization of Standardized VR Assessment Workflow

[Figure: Standardized VR Assessment Workflow. The workflow diagram illustrates the sequential stages of standardized VR cognitive assessment implementation, highlighting the critical hardware and procedural components that ensure consistency across administrations.]

Table 3: Essential Research Reagents and Solutions for VR Cognitive Assessment

| Resource Category | Specific Examples | Function in Research Protocol | Implementation Considerations |
|---|---|---|---|
| VR Hardware Platforms | HTC Vive Pro Eye, HTC Vive Pro 2 | Provide immersive visual, auditory, and tracking capabilities | Must exceed minimum specifications for reducing cybersickness; ensure consistent configuration across participants |
| Software Development Frameworks | Unity 2019.3.f1, SteamVR SDK | Enable creation of standardized virtual environments and interactions | Should follow ergonomic guidelines and best practices for neuropsychological assessment |
| Assessment Content | CAVIRE-2 scenarios, VR-BBT, Virtuleap Enhance games | Deliver cognitive tasks targeting specific domains | Must balance ecological validity with standardization; incorporate familiar activities of daily living |