From Data to Discovery: Building Analytical Frameworks for Virtual Reality Behavioral Data in Biomedical Research

This article provides a comprehensive guide to the frameworks and methodologies for analyzing behavioral data collected in Virtual Reality (VR) environments, tailored for biomedical and clinical research professionals.

Abstract

This article provides a comprehensive guide to the frameworks and methodologies for analyzing behavioral data collected in Virtual Reality (VR) environments, tailored for biomedical and clinical research professionals. It explores the foundational principles of VR data collection, detailing specialized toolkits and sensor technologies that capture multimodal behavioral cues. The piece delves into application-specific analytical methods, including deep learning for automated assessment and behavioral pattern analysis, and addresses key challenges in data processing, standardization, and ethical governance. Finally, it examines validation techniques and real-world case studies, synthesizing how these frameworks can unlock robust digital biomarkers and transform patient stratification, therapy development, and clinical trial endpoints.

The New Frontier: Understanding VR Behavioral Data and Its Potential for Biomedical Insights

FAQs on VR Behavioral Data

What exactly is classified as behavioral data in a VR experiment?

Behavioral data in VR encompasses any measurable physical action or reaction of a user within the virtual environment. This data is typically collected covertly and continuously by the VR system and its integrated sensors at a very fine-grained level [1]. The primary categories are:

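- Motion Tracking Data: This is the most common type, captured by the headset and controllers to update the user's point of view and interactions. It includes the position and rotation of the user's head, hands (via controllers), and optionally other body parts [1].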

- Physiological Data: These are signals from specialized sensors that provide insights into a user's cognitive and emotional states.

- Eye-Tracking: Integrated into some headsets to measure pupil dilation, gaze paths, and blink rate, which can indicate attention and cognitive load [2] [3].

- Electrodermal Activity (EDA): Measures skin conductance, often linked to arousal or stress [3].

- Electrocardiogram (ECG) and Electroencephalogram (EEG): Monitor heart activity and brain activity, respectively [3].

- Behavioral Metrics: These are higher-level measures derived from the raw tracking data, such as:

- Interpersonal Distance: The distance a user maintains from virtual characters, which can be a proxy for social bias or approach/avoidance behavior [1].

- Task Completion Time and Accuracy: Standard performance metrics [4].

- Object Interaction Logs: Records of what objects a user touched, how, and in what sequence [2].
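As an illustration of how a higher-level metric can be derived from raw tracking data, the sketch below computes interpersonal distance from head positions. It is a minimal example, not a method taken from the cited studies: the sample data, the `interpersonal_distance` helper, and the choice to measure distance in the floor plane (assuming a y-up coordinate system, as Unity uses) are all assumptions made for illustration.

```python
import math

def interpersonal_distance(head_xyz, agent_xyz):
    """Floor-plane distance (metres) between the user's head and a virtual agent.

    Assumes a y-up coordinate system, so height (index 1) is ignored.
    """
    dx = head_xyz[0] - agent_xyz[0]
    dz = head_xyz[2] - agent_xyz[2]
    return math.hypot(dx, dz)

# Hypothetical tracking samples: (timestamp in seconds, head position x, y, z)
head_track = [
    (0.0, (0.0, 1.7, 0.0)),
    (0.5, (0.0, 1.7, 1.0)),
    (1.0, (0.0, 1.6, 2.0)),
]
agent_position = (0.0, 1.7, 3.0)  # hypothetical virtual character location

distances = [interpersonal_distance(pos, agent_position) for _, pos in head_track]
min_distance = min(distances)                     # closest approach
mean_distance = sum(distances) / len(distances)   # average distance kept
```

Summary values such as the closest approach or the mean distance can then be compared across conditions or participants.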

How can I troubleshoot poor motion tracking data quality?

Poor tracking manifests as a jittery or drifting virtual world and controllers. Common causes and solutions are detailed in the table below.

| Problem | Possible Cause | Solution |

|---|---|---|

| Jittery or Lost Tracking/Guardian | Poor lighting conditions (too dark/bright) or direct sunlight [5] [6]. | Use a consistently well-lit indoor space and close blinds to avoid direct sunlight [6]. |

| | Dirty or smudged headset tracking cameras [6]. | Clean the four external cameras on the headset with a microfiber cloth [6]. |

| | Environmental interference [6]. | Remove or cover large mirrors and avoid small string lights (e.g., Christmas lights) that can confuse the tracking cameras [6]. |

| Controller Not Detected or Tracking Poorly | Low or depleted battery [5] [6]. | Replace the AA batteries in the affected controller [5] [6]. |

| | General system software error. | Perform a full reboot of the headset (via the power menu), not just putting it to sleep [6]. |

Our experiment requires high-quality physiological data. What framework can we use to synchronize data from multiple sensors?

For complex experiments requiring multi-sensor data, a dedicated data collection framework is recommended. The ManySense VR framework, implemented in Unity, is designed specifically for this purpose. It provides a reusable and extensible structure to unify data collection from diverse sources like eye trackers, EEG, ECG, and galvanic skin response sensors [2].

The framework operates through a system of specialized data managers, which handle the connection, data retrieval, and formatting for each sensor. This data is then centralized, making it easy for the VR application to consume and ensuring all data streams are synchronized with a common timestamp [2]. A performance evaluation showed that ManySense VR runs efficiently without significant overhead on processor usage, frame rate, or memory footprint [2].

Data Synchronization with ManySense VR Framework
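To make the idea of a common timestamp concrete, the following sketch aligns a slow sensor stream to a faster reference timeline by nearest-timestamp lookup. This is not ManySense VR's implementation (which is written for Unity); it is a hedged illustration of one simple alignment strategy, and the stream names, sampling rates, and values are hypothetical.

```python
from bisect import bisect_left

def nearest_sample(timestamps, values, t):
    """Return the value whose timestamp is closest to t.

    `timestamps` must be sorted ascending and share a clock with t.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return values[0]
    if i == len(timestamps):
        return values[-1]
    before, after = timestamps[i - 1], timestamps[i]
    return values[i] if (after - t) < (t - before) else values[i - 1]

# Hypothetical streams on one shared clock (seconds)
reference_t = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]   # e.g. a 10 Hz analysis timeline
gsr_t = [0.0, 0.25, 0.5]                        # e.g. a 4 Hz skin-conductance sensor
gsr_value = [2.0, 2.5, 3.0]

# One aligned row per reference timestamp
aligned = [(t, nearest_sample(gsr_t, gsr_value, t)) for t in reference_t]
```

Nearest-sample lookup keeps the original sensor values; interpolation is an alternative when a signal changes smoothly between samples.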

What are the key considerations for analyzing and visualizing VR behavioral data?

VR behavioral data analysis requires careful handling due to its complex, multi-modal, and temporal nature.

- Exploratory Data Analysis (EDA): Always begin with summary statistics (min, max, mean) and check for variables on different scales, as this can impact some machine learning algorithms [7].

- Class Distribution: For activity recognition tasks, plot class counts. Be aware of the class imbalance problem, where some behaviors (e.g., 'Walking') may have many more instances than others (e.g., 'Standing'). This can bias classifiers, and solutions like stratified sampling or oversampling may be needed [7].

- User-Class Sparsity: Create a matrix to visualize which users performed which activities. It is common that not all users perform all behaviors, and your analysis and model training must account for this sparsity [7].

- Data Visualization: Use boxplots to see how a single feature (e.g., amount of movement) varies across different activities. Use correlation plots to understand linear relationships between different pairs of variables in your dataset [7].
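The checks in this list can be sketched with the Python standard library alone. The example below is illustrative only: the records, activity labels, and the `movement_amount` feature are hypothetical, and a real analysis would more likely use a dataframe library and plots. It counts instances per class, lists which user and activity combinations are missing, and standardizes one feature so that variables on different scales become comparable.

```python
from collections import Counter, defaultdict
from statistics import mean, pstdev

# Hypothetical labelled windows: (user_id, activity, movement_amount)
records = [
    ("u1", "Walking", 4.0), ("u1", "Walking", 6.0), ("u1", "Standing", 1.0),
    ("u2", "Walking", 5.0), ("u2", "Walking", 5.0),
    ("u3", "Walking", 7.0), ("u3", "Standing", 0.0),
]

# Class distribution and a simple imbalance ratio (largest / smallest class)
class_counts = Counter(activity for _, activity, _ in records)
imbalance_ratio = max(class_counts.values()) / min(class_counts.values())

# User-by-class matrix, then the combinations that never occur (sparsity)
user_class = defaultdict(Counter)
for user, activity, _ in records:
    user_class[user][activity] += 1
missing = [(u, a) for u in sorted(user_class)
           for a in sorted(class_counts) if user_class[u][a] == 0]

# Z-score standardization of one feature
values = [m for _, _, m in records]
mu, sigma = mean(values), pstdev(values)
z_scores = [(v - mu) / sigma for v in values]
```

Here `missing` shows that user `u2` never performed "Standing", which matters when splitting data by user for training and testing.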

The Scientist's Toolkit

Table: Essential "Research Reagents" for VR Behavioral Data Collection

| Item / Tool | Function in VR Research |

|---|---|

| Head-Mounted Display (HMD) | The primary hardware for immersion. Tracks head position and rotation, providing core motion data. Often integrates other sensors [1] [2]. |

| Motion Tracking System | Tracks the position and rotation of the user's head, hands (via controllers), and optionally other body parts. This is the foundational source for nonverbal behavioral data [1]. |

| Eye Tracker (Integrated) | Measures gaze direction, pupillometry, and blink rate. Provides a fine-grained proxy for visual attention and cognitive load [2] [3]. |

| Physiological Sensors (EEG, ECG, EDA) | Wearable sensors that capture physiological signals like brain activity, heart rate, and skin conductance. Used to infer emotional arousal, stress, and cognitive engagement [3]. |

| Data Collection Framework (e.g., ManySense VR) | A software toolkit that standardizes and synchronizes data ingestion from various sensors and hardware, simplifying the data pipeline for researchers [2]. |

| Game Engine (Unity/Unreal) | The development platform for creating the controlled virtual environment and scripting the experimental protocol and data logging [2] [8]. |

| XR Interaction SDK | A software development kit that provides pre-built, robust components for handling common VR interactions (e.g., grabbing, pointing), ensuring consistent data generation [8]. |

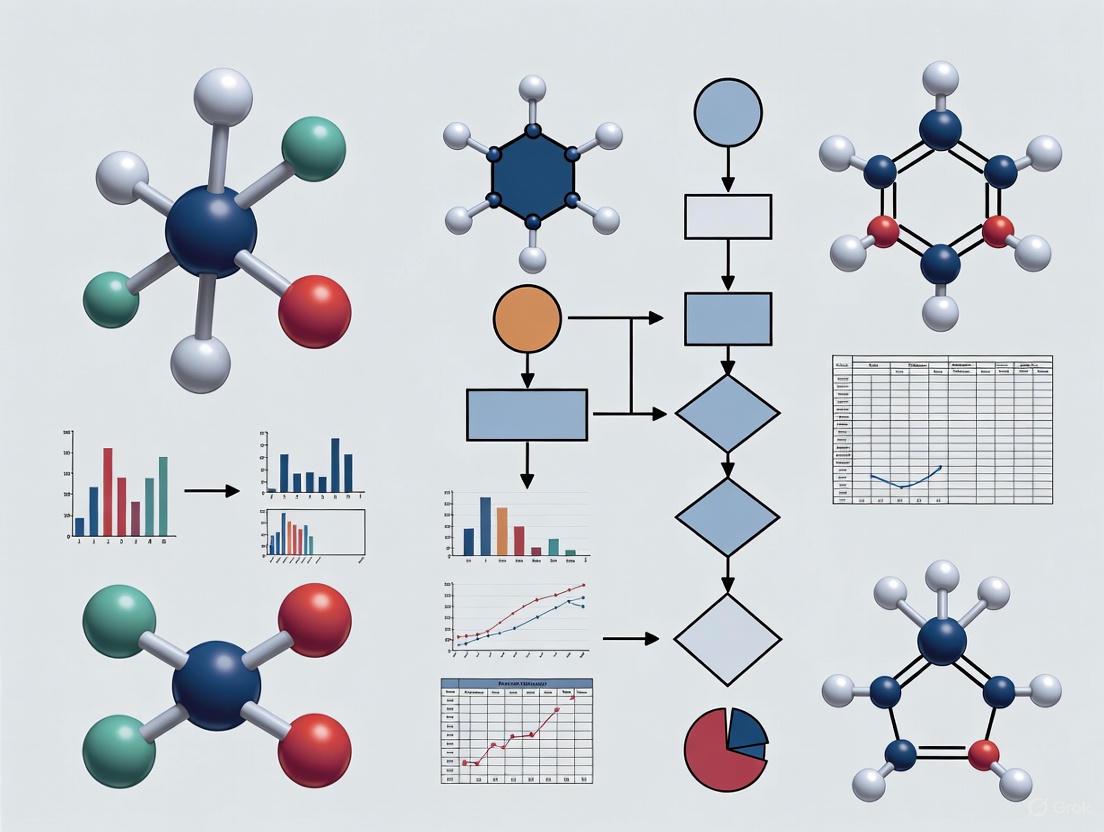

VR Behavioral Research Workflow

In the evolving field of virtual reality behavioral research, the scarcity of large-scale, high-quality datasets presents a significant paradox: while VR generates unprecedented volumes of rich behavioral data, researchers consistently struggle to access comprehensive datasets necessary for robust analytical modeling. This scarcity stems from a complex interplay of technological, methodological, and practical constraints that collectively impede data collection efforts across scientific domains. The immersive nature of VR technology enables the capture of multimodal data streams—including eye-tracking, electrodermal activity, motion tracking, and behavioral responses—within controlled yet ecologically valid environments [9]. This rich data tapestry offers tremendous potential for understanding human behavior, cognitive processes, and physiological responses with unprecedented granularity. However, as noted in recent studies, "publicly available VR multimodal emotion datasets remain limited in both scale and diversity due to the scarcity of VR content and the complexity of data collection" [9]. This shortage fundamentally hampers progress in developing accurate recognition models and validating research findings across diverse populations and contexts. The following sections examine the specific barriers contributing to this data scarcity while providing practical frameworks for researchers navigating these challenges within their VR behavioral studies.

Quantifying the Data Gap: Current Dataset Limitations

Table 1: Overview of Current Publicly Available VR Behavioral Datasets

| Dataset Name | Modalities Collected | Participant Count | Primary Research Focus | Key Limitations |

|---|---|---|---|---|

| VREED [9] | 59-channel GSR, ECG | 34 volunteers | Multi-emotion recognition | Limited modalities, small participant pool |

| DER-VREEG [9] | Physiological signals | Not specified | Emotion recognition | Narrow emotional categories |

| VRMN-bD [9] | Multimodal | Not specified | Behavioral analysis | Constrained by data diversity |

| ImmerIris [10] | Ocular images | 564 subjects | Iris recognition | Domain-specific application |

| Self-collected dataset [9] | GSR, eye-tracking, questionnaires | 38 participants | Emotion recognition | Limited sample size |

Table 2: Technical Barriers to VR Data Collection at Scale

| Barrier Category | Specific Challenges | Impact on Data Collection |

|---|---|---|

| Hardware Limitations | Head-mounted displays partially obscure upper face [9] | Limits effectiveness of facial expression recognition |

| Data Complexity | Multimodal integration (eye-tracking, GSR, EEG, motion) [9] | Increases processing requirements and standardization challenges |

| Individual Variability | Large inter-subject differences in physiological responses [9] | Requires larger sample sizes for statistical significance |

| Technical Expertise | Need for specialized skills in VR development and data science [11] | Slows research implementation and methodology development |

| Resource Requirements | Cost of equipment, space, and computational resources [12] [13] | Limits participation across institutions and research groups |

The quantitative landscape reveals consistent patterns across VR research domains. Existing datasets remain constrained by limited modalities, small participant pools, and narrow application focus [9]. For instance, even newly collected datasets typically involve approximately 30-40 participants [9], which proves insufficient for training robust machine learning models that must account for significant individual variability in physiological and behavioral responses. The ImmerIris dataset, while substantial with 499,791 ocular images from 564 subjects, represents an exception rather than the norm in VR research [10]. This scarcity of comprehensive datasets directly impacts model performance and generalizability, creating a cyclical challenge where limited data begets limited analytical capabilities.

Root Causes: Understanding the Barriers to VR Data Collection

Technical and Methodological Challenges

The technical complexity of VR data collection creates substantial barriers to assembling large-scale datasets. Unlike traditional research methodologies, VR studies require simultaneous capture and synchronization of multiple data streams, each with unique sampling rates and processing requirements. As noted in recent research, "behavioral analysis encounters certain constraints in VR: head-mounted displays (HMDs) partially obscure the upper face, which limits the effectiveness of traditional facial expression recognition" [9]. This limitation necessitates alternative approaches such as eye movement analysis, vocal characteristics, and head motion tracking, each adding layers of methodological complexity. Furthermore, the field lacks "conceptual and technical frameworks that effectively integrate multimodal data of spatial virtual environments" [11], leading to incompatible data structures and non-standardized collection protocols that hinder dataset aggregation across research institutions.

Practical and Resource Constraints

Beyond technical hurdles, practical considerations significantly impact VR data collection capabilities. The financial investment required for high-quality VR equipment, sensors, and computational infrastructure creates substantial barriers, particularly for academic research teams [12] [13]. This resource intensity naturally limits participant throughput, as each VR session typically requires individual supervision, technical support, and equipment sanitization between uses. Additionally, specialized expertise in both VR technology and data science remains scarce, creating a human resource bottleneck that slows research implementation [11]. These constraints collectively explain why even well-designed studies typically yield datasets with only 30-40 participants [9], falling short of the sample sizes needed for robust statistical modeling and machine learning applications.

Experimental Protocols: Standardized Methodologies for VR Data Collection

Multimodal Emotion Recognition Protocol

Table 3: Research Reagent Solutions for VR Emotion Recognition Studies

| Essential Material/Equipment | Specification Guidelines | Primary Function in Research |

|---|---|---|

| Head-Mounted Display (HMD) | Standalone VR headset with embedded eye-tracking | Presents immersive environments while capturing gaze behavior |

| Electrodermal Activity Sensor | GSR measurement with at least 4 Hz sampling frequency | Captures sympathetic nervous system arousal via skin conductance |

| Eye-Tracking System | Minimum 60 Hz sampling rate with pupil detection | Records visual attention patterns and pupillary responses |

| Stimulus Presentation Software | Unity3D or equivalent with 360° video capability | Creates controlled, repeatable emotional elicitation scenarios |

| Data Synchronization Platform | Custom or commercial solution with millisecond precision | Aligns multimodal data streams for temporal analysis |

| Subjective Assessment Tools | SAM, PANAS, or custom questionnaires using PAD model | Collects self-reported emotional state validation |

The standardized protocol for VR emotion recognition research involves carefully controlled environmental conditions and systematic data capture procedures. Based on established methodologies [9], researchers should recruit a minimum of 30 participants to achieve basic statistical power, with each session lasting approximately 45-60 minutes. The experimental sequence begins with equipment calibration, followed by a 5-minute baseline recording during which participants view a neutral stimulus to establish individual physiological baselines. Researchers then present 10 emotion-eliciting video clips selected through pre-testing to target specific emotional states (e.g., fear, joy, sadness) using the PAD (Pleasure-Arousal-Dominance) model as a theoretical framework [9]. Each stimulus should have a duration of 60-90 seconds, followed by a 30-second resting period and immediate subjective assessment using standardized questionnaires like the Self-Assessment Manikin (SAM) [9]. Throughout this process, synchronized data collection captures electrodermal activity (minimum 4 Hz sampling), eye-tracking (minimum 60 Hz), and continuous behavioral observation, yielding approximately 10 valid trials per participant [9].
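A quick worked calculation helps when budgeting session time against this protocol. The sketch below uses only the figures stated in the paragraph (10 clips, 60-90 seconds each, 30 seconds of rest, a 5-minute baseline); the `session_minutes` helper and its optional `rating_s` and `setup_min` parameters are hypothetical additions, since the source does not specify how long calibration or questionnaires take.

```python
def session_minutes(n_clips, clip_s, rest_s, baseline_min, rating_s=0, setup_min=0):
    """Estimate session length in minutes from per-trial timings.

    rating_s and setup_min default to 0 because the protocol text
    gives no durations for questionnaires or calibration.
    """
    trial_s = clip_s + rest_s + rating_s
    return setup_min + baseline_min + n_clips * trial_s / 60

# Baseline plus stimulus block only, using the stated ranges
shortest = session_minutes(n_clips=10, clip_s=60, rest_s=30, baseline_min=5)
longest = session_minutes(n_clips=10, clip_s=90, rest_s=30, baseline_min=5)
```

Under these assumptions the baseline and stimulus block take 20 to 25 minutes, which leaves the remainder of a 45-60 minute session for calibration, questionnaires, and participant briefing.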

Iris Recognition in Immersive Environments Protocol

For iris recognition studies in VR environments, the experimental protocol must address the unique challenges of off-axis capture and variable lighting conditions. The ImmerIris dataset methodology provides a robust framework [10], requiring head-mounted displays equipped with specialized ocular imaging cameras capable of capturing iris textures under dynamic viewing conditions. The ImmerIris collection involved 564 subjects to ensure sufficient diversity, with each participant completing multiple sessions to assess temporal stability [10]. During each 20-minute session, participants engage with standard VR applications while the system continuously captures ocular images from varying angles and distances, simulating naturalistic interaction patterns. This approach specifically addresses the "perspective distortion, intra-subject variation, and quality degradation in iris textures" that characterize immersive applications [10]. The resulting dataset of 499,791 images enables development of normalization-free recognition paradigms that outperform traditional methods [10], demonstrating the value of scale-appropriate data collection.

Troubleshooting Guide: Addressing Common VR Data Collection Challenges

FAQ 1: How can researchers overcome facial occlusion issues in emotion recognition studies?

Challenge: Head-mounted displays partially obscure the upper face, limiting traditional facial expression analysis [9].

Solution: Implement multimodal workarounds including:

- Eye-tracking metrics: Focus on pupil dilation, blink rate, and gaze patterns as indicators of emotional arousal [9]

- Vocal analysis: Capture and analyze speech characteristics during VR experiences

- Head movement patterns: Track frequency and amplitude of head movements as behavioral markers

- Physiological signals: Prioritize electrodermal activity, ECG, and EEG which remain unobstructed [9]

- Integrated modeling: Combine multiple signal types using fusion algorithms like the MMTED framework [9]

FAQ 2: What strategies address the high costs of VR research equipment?

Challenge: Financial constraints present significant barriers to VR adoption in research settings [12] [13].

Solution: Implement cost-mitigation approaches:

- Strategic hardware selection: Utilize standalone VR headsets rather than tethered PC-based systems

- Collaborative resource sharing: Establish multi-researcher equipment sharing protocols

- Gradual implementation: Begin with core functionality and expand capabilities as funding permits

- Open-source software: Leverage community-developed tools for data collection and analysis

- Grant targeting: Specifically budget for equipment replacement and maintenance in funding proposals

FAQ 3: How can researchers ensure data quality across varying technical implementations?

Challenge: Inconsistent data quality and format variability hamper aggregation and analysis [11].

Solution: Establish standardized quality control procedures:

- Pre-session calibration: Implement rigorous sensor calibration before each data collection session

- Automated quality checks: Develop scripts to flag abnormal data ranges or sensor failures

- Cross-validation protocols: Periodically collect duplicate measurements to verify consistency

- Documentation standards: Maintain detailed equipment and procedure documentation

- Data annotation guidelines: Establish clear protocols for labeling and metadata assignment
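The automated quality checks mentioned in this list could look something like the sketch below. It is a minimal example under stated assumptions: the `flag_samples` helper, the plausible-range limits, and the flatline length are all hypothetical and would need to be set per sensor. It flags samples outside a plausible range and runs of identical values, which often indicate a disconnected or saturated sensor.

```python
def flag_samples(values, lo, hi, flatline_len=5):
    """Return (out_of_range_indices, flat_runs).

    flat_runs is a list of (start_index, end_index) for runs of at least
    flatline_len identical consecutive values.
    """
    # NaN fails both comparisons, so missing readings are flagged as well
    out_of_range = [i for i, v in enumerate(values) if not (lo <= v <= hi)]

    flat_runs = []
    run_start = 0
    for i in range(1, len(values) + 1):
        # Close the current run at the end of the data or when the value changes
        if i == len(values) or values[i] != values[run_start]:
            if i - run_start >= flatline_len:
                flat_runs.append((run_start, i - 1))
            run_start = i
    return out_of_range, flat_runs

# Hypothetical skin-conductance trace (microsiemens) with one spike and one flat run
gsr = [2.1, 2.2, 55.0, 2.3, 2.3, 2.3, 2.3, 2.3, 2.4]
bad, flat = flag_samples(gsr, lo=0.05, hi=40.0)
```

Flagged indices are best logged alongside the session metadata so that exclusions are documented and reproducible.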

FAQ 4: What methods help address participant variability in VR studies?

Challenge: Large inter-individual differences in physiological and behavioral responses require large sample sizes [9].

Solution: Implement statistical and methodological adjustments:

- Within-subject designs: Collect multiple data points from each participant across conditions

- Baseline normalization: Calculate individual baselines and express responses as change scores [9]

- Stratified recruitment: Ensure demographic and individual difference factors are balanced

- Covariate analysis: Include relevant individual characteristics as covariates in statistical models

- Adaptive stimuli: Adjust stimulus intensity based on individual response thresholds
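Baseline normalization, as listed here, can be illustrated with a short sketch. The participant values are hypothetical and the `change_scores` helper is an illustrative name; the point is only that two participants with very different resting levels can show the same response once each is expressed relative to their own baseline.

```python
from statistics import mean

def change_scores(baseline, response):
    """Express each response sample as a change from the participant's mean baseline."""
    base = mean(baseline)
    return [r - base for r in response]

# Hypothetical skin-conductance values for two participants
p1 = change_scores(baseline=[2.0, 2.0, 2.0], response=[3.0, 4.0])
p2 = change_scores(baseline=[8.0, 10.0], response=[10.0, 11.0])
```

Subtracting the baseline is the simplest option; dividing by the baseline or z-scoring within participant are common alternatives when response magnitude scales with resting level.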

Future Directions: Overcoming the Data Scarcity Challenge

The future of VR behavioral research depends on systematically addressing the data scarcity challenge through technological innovation, methodological standardization, and collaborative frameworks. Emerging approaches include the development of normalization-free recognition paradigms that directly process minimally adjusted ocular images [10], reducing preprocessing complexity and potential information loss. The integration of artificial intelligence with VR data collection offers promising avenues for real-time data quality assessment and adaptive experimental protocols [14]. Furthermore, the creation of shared data repositories with standardized formatting and annotation standards would significantly accelerate progress by enabling multi-institutional data aggregation [9]. As these initiatives mature, the research community must simultaneously address critical ethical considerations around privacy, data ownership, and inclusive representation [14] [15]. Only through coordinated effort across technical, methodological, and ethical dimensions can we overcome the current data scarcity limitations and fully realize the potential of VR as a transformative tool for understanding human behavior.

For researchers and scientists in drug development and behavioral studies, Virtual Reality (VR) offers an unprecedented tool for creating controlled, immersive experimental environments. The power of VR lies in its ability to elicit naturalistic behaviors and responses within these settings. However, this potential can only be fully realized with robust, standardized frameworks for collecting the rich, multi-dimensional data generated. A well-structured VR data collection framework ensures that the data acquired from human participants is reliable, reproducible, and suitable for rigorous analysis, ultimately forming the foundation for valid scientific insights and conclusions [16] [17].

This technical support guide details the core components of such a framework, focusing on the practical toolkits, sensor technologies, and data formats that underpin successful VR research. Furthermore, it provides targeted troubleshooting and methodological protocols to address common challenges faced by professionals during experimental setup and data acquisition.

Core Toolkit Components

The foundation of any VR data collection system is its software toolkit, which standardizes the process of capturing data from various hardware sensors and input devices.

OpenXR Data Recorder (OXDR)

The OpenXR Data Recorder (OXDR) is a versatile toolkit designed for the Unity3D game engine to facilitate the capture of extensive VR datasets. Its primary advantage is device agnosticism, working with any head-mounted display (HMD) that supports the OpenXR standard, such as Meta Quest, HTC Vive, and Valve Index [16].

Key Features:

- Frame-Independent Capture: Unlike tools that capture the full application state at the frame rate, OXDR records data at a fixed polling rate independent of the frame rate. This is critical for accurately capturing high-frequency data like eye movements or controller inputs [16].

- Structured Data Output: The toolkit captures the output of hardware provided to the Unity engine and stores it in a standardized, extensible format, making it directly usable for training machine learning models [16].

- Python Integration: OXDR is complemented by a set of Python scripts for external data analysis, enabling seamless integration into existing data science workflows [16].

ManySense VR

ManySense VR is a reusable and extensible context data collection framework also built for Unity. It is specifically designed for context-aware VR applications, unifying data collection from diverse sensor sources beyond standard VR controllers [2].

Key Features:

- Modular Sensor Integration: ManySense VR uses a manager-based architecture where each sensor (e.g., eye tracker, EEG, GSR sensor) is managed by a dedicated component. This makes it easy for developers to add or remove sensors [2].

- Focus on Embodiment: The framework has been demonstrated in applications requiring rich embodiment, which synchronizes a user's avatar with their real-world bodily actions using data from multiple tracking sources [2].

- Performance Efficiency: Evaluations show that ManySense VR has a low impact on processor usage, frame rate, and memory footprint, which is vital for maintaining immersion [2].

Unity Experiment Framework (UXF)

The Unity Experiment Framework (UXF) is an open-source software resource that empowers behavioral scientists to leverage the power of the Unity game engine without needing to be expert programmers. It provides the structural "nuts and bolts" for running experiments [17].

Key Features:

- Standardized Experiment Structure: UXF logically encodes experiments using a familiar session-block-trial model, making code readable and simplifying the implementation of complex designs [17].

- Flexible Data Collection: It supports both discrete behavioral data (e.g., task scores, responses) and continuous data (e.g., head position, rotation) at high frequencies [17].

Table: Comparison of Primary VR Data Collection Toolkits

| Toolkit | Primary Use Case | Key Strength | Supported Platforms/Devices |

|---|---|---|---|

| OpenXR Data Recorder (OXDR) [16] | Large-scale, multimodal dataset creation for machine learning. | Device-agnostic data capture via OpenXR; frame-independent polling. | Any OpenXR-compatible HMD (Meta Quest, HTC Vive, etc.). |

| ManySense VR [2] | Context-aware VR applications requiring rich user embodiment. | Extensible, modular architecture for diverse physiological and motion sensors. | Unity-based VR systems with integrated or external sensors. |

| Unity Experiment Framework (UXF) [17] | Standardized behavioral experiments in a 3D environment. | Implements a familiar session-block-trial model for experimental rigor. | Unity-based applications for both 2D screens and VR. |

Essential Sensors and Data Modalities

Moving beyond standard controller and headset tracking, advanced VR research incorporates a suite of sensors to capture a holistic view of user state and behavior.

Table: Sensor Technologies for VR Data Collection

| Sensor Type | Measured Data Modality | Application in Research |

|---|---|---|

| Eye Tracker [16] [2] | Gaze position, pupil dilation, blink rate. | Studying attention, cognitive load, and psychological arousal. |

| Electroencephalogram (EEG) [2] | Electrical brain activity. | Researching neural correlates of behavior, emotion classification. |

| Galvanic Skin Response (GSR) [2] | Skin conductance. | Measuring emotional arousal and stress responses. |

| Facial Tracker [2] | Facial muscle movements and expressions. | Analyzing emotional responses and non-verbal communication. |

| Force Sensors & Load Cells [18] | Pressure, weight, and haptic feedback. | Creating realistic touch sensations in training simulations; measuring exertion in rehabilitation. |

| Physiological (Pulse, Respiration) [2] | Heart rate, breathing rate. | Monitoring physiological arousal and stress in therapeutic or training scenarios. |

Standardized Data Formats and Storage

A critical challenge in VR data collection is standardizing the format of heterogeneous data for analysis and sharing.

The OXDR toolkit proposes a hierarchical data structure to store information efficiently and extensibly [16]:

- Metadata: The initial entry containing essential information about the recording session, such as start/end time, polling rate, HMD model, and video resolution [16].

- Snapshot: Represents all data captured during a single update cycle of the input system. Each snapshot contains a timestamp and data from multiple devices [16].

- Device: Represents a physical or virtual hardware component (e.g., controller, eye tracker). Each device has its own metadata and a set of features [16].

- Feature and Value Types: The abstraction for individual hardware capabilities, containing the actual data values [16].

For storage, OXDR supports two formats to balance size and handling: NDJSON (Newline Delimited JSON) for readability and MessagePack for efficient binary serialization [16].
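To show what a hierarchical recording looks like when stored as NDJSON, the sketch below writes one metadata record followed by snapshots, one JSON object per line, and reads them back. The field names (`type`, `polling_rate_hz`, `devices`, `features`, and so on) are hypothetical and chosen to mirror the hierarchy described above; they are not OXDR's actual schema.

```python
import json

# Hypothetical records mirroring the metadata -> snapshot -> device -> feature hierarchy
metadata = {"type": "metadata", "polling_rate_hz": 120, "hmd_model": "ExampleHMD"}
snapshots = [
    {"type": "snapshot", "timestamp": 0.0, "devices": [
        {"name": "eye_tracker", "features": {"gaze_x": 0.10, "gaze_y": -0.02}},
    ]},
    {"type": "snapshot", "timestamp": 0.0083, "devices": [
        {"name": "eye_tracker", "features": {"gaze_x": 0.11, "gaze_y": -0.02}},
    ]},
]

def to_ndjson(records):
    """Serialize records as newline-delimited JSON (one object per line)."""
    return "\n".join(json.dumps(r) for r in records) + "\n"

def from_ndjson(text):
    """Parse newline-delimited JSON, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]

text = to_ndjson([metadata] + snapshots)
restored = from_ndjson(text)
gaze_x = [s["devices"][0]["features"]["gaze_x"] for s in restored if s["type"] == "snapshot"]
```

Because each line is a complete JSON object, a long recording can be processed line by line without loading the whole file into memory.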

Troubleshooting Guides & FAQs

Common VR Data Collection Issues

Q: The tracking of my headset or controllers is frequently lost or becomes jittery during data collection. What could be the cause? A: Tracking issues are often environmental. Ensure your play area is well-lit but not in direct sunlight, which can interfere with the cameras. Clean the headset's four external tracking cameras with a microfiber cloth to remove smears. Also, remove or cover reflective surfaces like mirrors and avoid small string lights, as these can confuse the tracking system. A full reboot of the headset can often resolve software-related tracking glitches [6].

Q: My collected data appears to be out of sync or has dropped samples, especially for high-frequency sources like eye-tracking. A: This could be due to frame-dependent data capture. Ensure your toolkit (like OXDR) is configured for frame-independent capture at a fixed polling rate suitable for your highest frequency data source. Also, monitor your application's frame rate; if it drops significantly due to high graphical fidelity or complex scenes, it can disrupt data collection workflows that are tied to the render cycle [16].
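One way to check a recording for dropped samples is to compare the gaps between consecutive timestamps with the nominal polling period. The sketch below is a generic illustration rather than part of any toolkit; the `find_gaps` helper, the 1.5x tolerance, and the example timestamps are assumptions.

```python
def find_gaps(timestamps, polling_hz, tolerance=1.5):
    """Return (index, gap_seconds, estimated_missing_samples) for each gap.

    A gap is any interval longer than tolerance times the nominal period.
    """
    period = 1.0 / polling_hz
    gaps = []
    for i in range(1, len(timestamps)):
        dt = timestamps[i] - timestamps[i - 1]
        if dt > tolerance * period:
            gaps.append((i, dt, round(dt / period) - 1))
    return gaps

# Hypothetical 100 Hz stream with two samples missing between 0.02 s and 0.05 s
ts = [0.00, 0.01, 0.02, 0.05, 0.06]
gaps = find_gaps(ts, polling_hz=100)
```

If gaps cluster around moments of heavy rendering load, that pattern suggests the capture is still tied to the frame rate.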

Q: The VR experience is causing participants to report nausea or discomfort, potentially biasing behavioral data. A: VR sickness is a common challenge. To mitigate it:

- Provide Stable Visual Anchors: Add a fixed reference point in the virtual environment, like a virtual cockpit or horizon line.

- Offer Multiple Locomotion Options: Implement teleportation in addition to or instead of smooth continuous movement.

- Optimize Performance: Maintain a high and stable frame rate (e.g., 90 Hz). Dropping frames is a major contributor to discomfort.

- Adjust Settings: Allow users to adjust settings like field of view (FOV) limiting or movement speed [19].

Q: How can I ensure the force feedback from my haptic devices feels realistic and is accurately recorded? A: Realism in haptics relies on high-fidelity force sensors. For data collection, integrate force measurement solutions like load cells into VR equipment (e.g., gloves, treadmills) to capture precise force data. This data can be used both for generating real-time haptic feedback and for later analysis of user interactions. Ensure the data from these sensors is synchronized with other data streams in your framework [18].

General VR Headset Troubleshooting

- Blurry Image: Check the headset's fit and positioning on the user's face. Clean the lenses with a microfiber cloth. Adjust the Interpupillary Distance (IPD) setting to match the user's eyes [6] [20].

- Controller Disconnection: Replace the AA batteries, as tracking quality can decline as the battery depletes [6].

- Overheating: Avoid prolonged use in warm environments and ensure adequate ventilation around the headset [20].

- Audio Issues: Verify that the correct audio output device is selected within the VR system's settings and that all volume levels are appropriately adjusted [20].

Experimental Protocols & Workflows

A standardized protocol is vital for reproducibility. The following workflow, based on the UXF model, outlines the key steps for setting up a behavioral experiment in VR.

Detailed Methodology:

- Define Session Structure: Structure your experiment using the session-block-trial model. A session represents one participant's complete run. Within a session, group trials into blocks where independent variables are held constant. Each trial is a single instance of the task [17].

- Configure Independent Variables (Settings): Use the toolkit's settings system to define your experimental manipulations. These can be set at the session, block, or trial level. Examples include the difficulty of a task, the type of visual stimulus presented, or the level of haptic feedback [17].

- Implement Trial Logic: Program the sequence of events within a trial: presentation of stimuli, measurement of participant responses, and collection of data. The toolkit should handle the beginning and ending of trials, including timestamping [17].

- Data Collection During Trial:

- Behavioral Data: Record discrete dependent variables (e.g., task success, reaction time, questionnaire score) at the end of each trial [17].

- Continuous Data: Log data from trackers and sensors (e.g., head position, controller movement, gaze coordinates, physiological signals) at a high sampling rate throughout the trial [17].

- Post-Session Data Assembly: The framework should automatically assemble and output the collected data into structured files (e.g., CSV for behavioral data, binary or NDJSON for continuous data) for subsequent analysis [16] [17].
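
The session-block-trial model and its cascading settings can be illustrated with a short sketch. This is a Python stand-in for the idea, not the toolkit's own API (UXF itself runs inside Unity); the class names, the `get_setting` helper, and the example setting names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Session:
    """One participant's complete run."""
    participant_id: str
    settings: Dict[str, Any] = field(default_factory=dict)
    blocks: List["Block"] = field(default_factory=list)

    def add_block(self, n_trials: int, **settings: Any) -> "Block":
        """Create a block of n_trials trials that share the given settings."""
        block = Block(session=self, settings=dict(settings))
        block.trials = [Trial(block=block, number=i + 1) for i in range(n_trials)]
        self.blocks.append(block)
        return block


@dataclass
class Block:
    """A group of trials in which independent variables are held constant."""
    session: Session = field(repr=False, compare=False)
    settings: Dict[str, Any] = field(default_factory=dict)
    trials: List["Trial"] = field(default_factory=list)


@dataclass
class Trial:
    """A single instance of the task."""
    block: Block = field(repr=False, compare=False)
    number: int = 0
    settings: Dict[str, Any] = field(default_factory=dict)
    result: Dict[str, Any] = field(default_factory=dict)

    def get_setting(self, key: str) -> Any:
        """Look the key up at trial level, then block level, then session level."""
        scopes = (self.settings, self.block.settings, self.block.session.settings)
        for scope in scopes:
            if key in scope:
                return scope[key]
        raise KeyError(key)


# Session-wide defaults, a block-level override, and a trial-level override
session = Session("P01", settings={"haptic_level": "low", "difficulty": "easy"})
block = session.add_block(n_trials=3, difficulty="hard")
block.trials[1].settings["haptic_level"] = "high"

# Discrete dependent variables are written once the trial ends
block.trials[0].result["reaction_time_s"] = 0.42
```

Resolving a setting from the most specific level outward is what lets a manipulation be declared once per session or block and overridden only where a trial differs.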

The Scientist's Toolkit: Essential Research Reagents

Table: Key "Reagents" for a VR Data Collection Lab

| Item / Toolkit | Function in VR Research |

|---|---|

| Unity Game Engine [16] [17] | Primary development platform for creating 3D virtual environments and experiments. |

| OpenXR Standard [16] [21] | Unified interface for VR applications, ensuring cross-platform compatibility and simplifying data capture from various HMDs. |

| OXDR or ManySense VR [16] [2] | Core data collection frameworks that handle the recording, synchronization, and storage of multi-modal sensor data. |

| Eye-Tracking Module | Integrated or add-on hardware essential for capturing gaze behavior and pupillometry as digital biomarkers for cognitive and emotional processes [22] [2]. |

| Physiological Sensor Suite (EEG, GSR, ECG) | Wearables for capturing objective physiological data correlated with arousal, stress, cognitive load, and emotional states [2]. |

| Haptic Controllers or Gloves | Input devices that provide touch and force feedback, critical for studies on motor learning, rehabilitation, and realistic simulation training [18] [19]. |

| Python Data Analysis Stack (Pandas, NumPy, Scikit-learn) | Post-collection toolset for cleaning, analyzing, and modeling the complex, time-series data generated by VR experiments [16]. |

The Behavioral Framework of Immersive Technologies (BehaveFIT) provides a structured, theory-based approach for developing and evaluating virtual reality (VR) interventions designed to support behavioral change processes. This framework addresses the fundamental challenge in psychology known as the intention-behavior gap: the well-documented phenomenon where individuals fail to translate their intentions, values, attitudes, or knowledge into actual behavioral changes [23].

Research indicates that while intentions may be the best predictor of behavior, they account for only about 28% of the variance in future behavior, suggesting numerous other factors inhibit successful behavior change [23]. BehaveFIT addresses this gap by offering an intelligible categorization of psychological barriers, mapping immersive technology features to these barriers, and providing a generic prediction path for developing effective immersive interventions [23] [24].

Theoretical Foundation: Understanding Psychological Barriers

BehaveFIT is grounded in comprehensive psychological research on why the intention-behavior gap occurs. The framework synthesizes various barrier classifications into an accessible structure for non-psychologists [23].

Key Psychological Barriers

Table 1: Categorization of Major Psychological Barriers to Behavior Change

| Barrier Category | Specific Barriers | Psychological Description |

|---|---|---|

| Individuality Factors [23] | Attitudes, personality traits, predispositions, limited cognition | Internal factors including lack of self-efficacy, optimism bias, confirmation bias |

| Responsibility Factors [23] | Lack of control, distrust, disbelief in need for change | Low perceived influence on situation and low expectancy of self-efficacy |

| Practicality Factors [23] | Limited resources, facilities, economic constraints | External constraints including financial investment, behavioral momentum |

| Interpersonal Relations [23] | Social norms, social comparison, social risks | Fear that significant others will disapprove of changed behavior |

| Conflicting Goals [23] | Competing aspirations, costs, perceived risks | Conflicts between intended behavior change and other goals |

| Tokenism [23] | Rebound effect, belief of having done enough | Easy changes chosen over actions with higher effort |

These barriers operate across different levels and explain why individuals often struggle to maintain consistent behavioral patterns despite positive intentions. The BehaveFIT framework specifically targets these barriers through strategic application of immersive technology features [23].

BehaveFIT Framework Architecture

The BehaveFIT framework operates through three core components that guide researchers in developing effective VR interventions for behavior change.

Framework Logic Flow illustrates how BehaveFIT maps immersive technology features to psychological barriers, creating pathways that lead to successful behavior change.

Core Framework Components

- Barrier Categorization: BehaveFIT provides an intelligible organization of psychological barriers that impede behavior change, making complex psychological concepts accessible to researchers and developers [23].

- Immersive Feature Mapping: The framework identifies how specific immersive technology features can overcome particular psychological barriers, explaining why VR can support behavior change processes [23] [24].

- Prediction Pathways: BehaveFIT establishes generic prediction paths that enable structured, theory-based development and evaluation of immersive interventions, showing how these interventions can bridge the intention-behavior gap [23].

Technical Support Center: Troubleshooting Guides and FAQs

Common VR Experimental Issues and Solutions

Table 2: Technical Troubleshooting Guide for VR Behavior Change Experiments

| Problem Category | Specific Symptoms | Recommended Solution | Theoretical Implications |

|---|---|---|---|

| Tracking Issues [25] [26] | Headset/controllers not tracking, black screen, unstable connection | Reboot link box (power off 3 seconds, restart), check sensor placement/obstruction, adjust lighting conditions | Breaks spatial presence, compromising barrier reduction |

| Visual Performance [25] [27] | Stuttering, flickering, graphical anomalies | Update graphics drivers, reduce graphical settings, ensure adequate ventilation to prevent overheating | Disrupts plausibility, reducing psychological engagement |

| Setup Configuration [26] | "Headset not connected" errors, hardware not detected | Verify correct desktop boot sequence, ensure proper cable connections, confirm controller power status | Prevents embodiment establishment, limiting self-efficacy |

| Software Integration [27] | Crashes, compatibility issues, subpar performance | Update VR system software, restart system after updates, check for application-specific updates | Interrupts real-time feedback, impeding behavioral reinforcement |

Frequently Asked Questions: Research Implementation

Q: How can I determine if my VR intervention is effectively addressing psychological barriers rather than just providing technological novelty?

A: BehaveFIT provides specific mapping between immersive features and psychological barriers. For example, to address "responsibility factors" (low self-efficacy), implement embodiment features that allow users to practice behaviors in safe environments. To combat "practicality factors," use realistic simulations that overcome resource limitations. Each barrier should have a corresponding technological solution directly mapped in your experimental design [23].

Q: What are the essential validation metrics when using BehaveFIT in pharmacological research contexts?

A: Beyond standard VR performance metrics (frame rates, latency), include behavioral measures specific to your target behavior, physiological indicators (EEG, HRV, eye-tracking), and validated psychological scales measuring self-efficacy, intention, and actual behavior change. Multimodal assessment combining these measures provides the most robust validation [28].

Q: How do we maintain experimental control while ensuring ecological validity in VR behavior change studies?

A: Use standardized VR environments with consistent parameters (lighting, audio levels, task sequences) while incorporating dynamic elements that create psychological engagement. The balance can be achieved by creating structured interaction protocols within immersive environments, as demonstrated in successful implementations [28].

Q: What debugging tools are most effective for identifying performance issues that might compromise behavioral outcomes?

A: Essential tools include Unity Debugger for real-time inspection, Oculus Debug Tool for performance metrics, Visual Studio for code analysis, and platform-specific tools like ARCore/ARKit for mobile VR. Implement continuous performance profiling to maintain frame rates ≥60 FPS, as drops directly impact presence and intervention efficacy [27].

Experimental Protocols and Methodologies

Standardized VR Experimental Workflow

Implementing BehaveFIT requires careful experimental design to ensure valid and reproducible results.

Experimental Workflow outlines the standardized four-phase methodology for implementing BehaveFIT in behavioral research studies.

Multimodal Data Collection Protocol

Contemporary VR behavior research employs comprehensive multimodal assessment to capture behavioral, physiological, and psychological data simultaneously [28].

Table 3: Multimodal Assessment Framework for VR Behavior Studies

| Data Modality | Specific Measures | Collection Tools | Behavioral Correlates |

|---|---|---|---|

| Neurophysiological [28] | EEG theta/beta ratio, frontal alpha asymmetry | BIOPAC MP160 system, portable EEG | Cognitive engagement, emotional processing |

| Ocular Metrics [28] | Saccade count, fixation duration, pupil dilation | See A8 portable telemetric ophthalmoscope | Attentional allocation, cognitive load |

| Autonomic Nervous System [28] | Heart rate variability (HRV), LF/HF ratio | ECG sensors, HRV analysis | Emotional arousal, stress response |

| Behavioral Performance [28] | Task completion, response latency, movement patterns | Custom VR environment logging | Behavioral implementation, skill acquisition |

| Self-Report [23] [28] | Psychological scales, barrier assessments, presence measures | Standardized questionnaires (e.g., CES-D) | Perceived barriers, self-efficacy, intention |

The Researcher's Toolkit: Essential Research Reagents

Core Technological Components

Table 4: Essential Research Reagents for VR Behavioral Studies

| Component Category | Specific Tools & Platforms | Research Function | Implementation Notes |

|---|---|---|---|

| Development Platforms [27] | Unity Engine, Unreal Engine, A-Frame framework | Core VR environment development, experimental protocol implementation | Unity preferred for rapid prototyping; A-Frame for web-based deployment |

| Debugging & Profiling [27] | Unity Debugger, Oculus Debug Tool, Visual Studio | Performance optimization, issue identification, frame rate maintenance | Critical for maintaining presence (≥60 FPS target) |

| Hardware Platforms [28] [26] | Vive Cosmos, Oculus devices, mobile VR solutions | Participant immersion, interaction capability, experimental delivery | Consider balance between mobility and performance |

| Physiological Sensing [28] | BIOPAC MP160, portable EEG, eye-tracking systems | Multimodal data collection, objective biomarker assessment | Enables comprehensive behavioral and physiological correlation |

| Analysis Frameworks [28] [29] | SVM classifiers, HBAF, RFECV feature selection | Behavioral pattern identification, biomarker validation, statistical assessment | Machine learning essential for complex multimodal data |

Implementation Checklist for BehaveFIT Experiments

- Barrier Assessment: Identify specific psychological barriers relevant to target behavior

- Feature Mapping: Select appropriate immersive features to address identified barriers

- Technical Validation: Verify VR system performance (≥60 FPS, latency < 20 ms)

- Multimodal Setup: Calibrate and synchronize all physiological sensors

- Protocol Standardization: Implement consistent task structure and timing

- Data Pipeline: Establish automated data collection and processing workflow

- Analysis Plan: Pre-register statistical approach and machine learning methods
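
The technical validation item can be checked offline from logged frame timestamps. The sketch below is one possible approach, assuming timestamps are recorded in seconds once per rendered frame; the function name and the 1.5x frame-budget threshold used to flag a dropped frame are illustrative choices, not values from the cited sources.

```python
def frame_stats(timestamps, target_fps=60.0, hitch_factor=1.5):
    """Summarize frame pacing from per-frame timestamps given in seconds.

    A frame counts as dropped when its interval exceeds hitch_factor times
    the frame budget (1 / target_fps). Both parameters are assumptions.
    """
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    budget = 1.0 / target_fps
    return {
        "mean_fps": len(intervals) / (timestamps[-1] - timestamps[0]),
        "dropped_frames": sum(1 for dt in intervals if dt > hitch_factor * budget),
        "worst_frame_ms": max(intervals) * 1000.0,
    }


# Synthetic log: steady 90 Hz rendering, then a single 50 ms hitch
steady = [i / 90 for i in range(10)]
with_hitch = steady + [steady[-1] + 0.05]

steady_stats = frame_stats(steady)
hitch_stats = frame_stats(with_hitch)
```

Reporting the worst frame alongside the mean matters because a session can average well above the target while still containing hitches long enough to disturb presence.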

The BehaveFIT framework offers a structured methodology for investigating behavioral change processes using immersive technologies. For drug development professionals, this approach provides a standardized platform for assessing behavioral components of pharmacological interventions, enabling more objective measurement of how therapeutics impact not just symptoms but actual behavior change. The multimodal assessment framework allows researchers to correlate physiological biomarkers with behavioral outcomes, creating more comprehensive understanding of intervention efficacy [28].

By implementing the troubleshooting guides, experimental protocols, and methodological recommendations outlined in this technical support center, researchers can leverage BehaveFIT to advance the scientific understanding of how immersive technologies can bridge the intention-behavior gap across diverse research contexts and populations.

Frequently Asked Questions (FAQs)

Q1: What are the most common physiological signals collected in VR research and what do they measure? Physiological signals provide objective data on a user's neurophysiological and autonomic state. Common modalities include:

- Electroencephalography (EEG): Measures electrical brain activity; frequency bands (e.g., theta, beta) can indicate cognitive load or clinical states [30] [3].

- Eye-Tracking (ET): Records gaze position, pupil size, saccades, and fixations; used to assess attention, engagement, and cognitive processing [30] [31].

- Heart Rate Variability (HRV): Derived from ECG or photoplethysmography (PPG); indicates autonomic nervous system activity (e.g., stress, arousal) [30] [3].

- Electrodermal Activity (EDA): Measures skin conductance; a primary indicator of physiological arousal and emotional response [3] [32].

- Electromyography (EMG): Records muscle activity; can be used to study physical responses or interactions [3].

Q2: How can I address the challenge of synchronizing data from different sensors? Data synchronization is a common technical hurdle. Key strategies include:

- Hardware Synchronization: Use integrated systems where possible (e.g., HP Reverb G2 Omnicept HMD with built-in eye-tracking and PPG) or a central acquisition system like the BIOPAC MP160 to record multiple streams [30] [31].

- Software Triggers: Send precise trigger codes from the VR software (e.g., Unity, Unreal Engine) to all recording devices at the onset of specific events to align data streams during post-processing [31] [32].

- Temporal Alignment: Acknowledge differing sampling rates (e.g., EEG at 250 Hz vs. GSR at 4 Hz) and apply resampling and alignment algorithms during data preprocessing [32] [33].
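
The trigger and resampling strategies can be combined in post-processing. The following is a minimal sketch on synthetic data, assuming each device logged the same trigger event on its own clock; the helper names and the choice of linear interpolation are illustrative. Upsampling a slow signal such as GSR by interpolation is usually harmless, whereas downsampling a fast signal would need anti-alias filtering first.

```python
import numpy as np


def align_to_trigger(timestamps, trigger_time):
    """Re-express device-local timestamps relative to a shared trigger event."""
    return np.asarray(timestamps, dtype=float) - trigger_time


def resample_onto(t_target, t_source, x_source):
    """Linearly interpolate a source signal onto the target timestamps."""
    return np.interp(t_target, t_source, x_source)


# Synthetic 10 s recordings, each stamped with its own device clock
eeg_t = 100.0 + np.arange(0, 10, 1 / 250)   # EEG clock, 250 Hz
gsr_t = 5.0 + np.arange(0, 10, 1 / 4)       # GSR clock, 4 Hz
eeg = np.sin(2 * np.pi * 6 * (eeg_t - 100.0))
gsr = 0.5 + 0.01 * (gsr_t - 5.0)

# The VR software sent one trigger, logged 2 s into each recording
eeg_rel = align_to_trigger(eeg_t, trigger_time=102.0)
gsr_rel = align_to_trigger(gsr_t, trigger_time=7.0)

# Keep only EEG samples inside the span covered by GSR, then bring GSR
# onto the EEG timeline so every row holds one time point
mask = (eeg_rel >= gsr_rel[0]) & (eeg_rel <= gsr_rel[-1])
common_t = eeg_rel[mask]
gsr_on_eeg = resample_onto(common_t, gsr_rel, gsr)
aligned = np.column_stack([common_t, eeg[mask], gsr_on_eeg])
```

After this step, time zero means the trigger event in every column, so event-locked windows can be cut from all modalities with the same indices.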

Q3: My machine learning model performance is poor when generalizing to new participants. What could be wrong? This often stems from inter-individual variability and data sparsity.

- Feature Personalization: Account for individual differences in perceptual-motor style, which can influence physiological responses [3].

- Expand Training Data: If possible, increase your participant pool. Small sample sizes (e.g., N=19-28) can limit model generalizability, as noted in several studies [31] [32].

- Cross-Modality Learning: Use a modality with a strong signal (e.g., pupil size) to fill in gaps from a noisier one (e.g., reduced-channel EEG) to improve hybrid classifier performance [31] [34].
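
One way to check whether a model really generalizes to unseen participants is to evaluate it with leave-one-participant-out cross-validation, so that no participant contributes to both training and test folds. The sketch below uses scikit-learn's `LeaveOneGroupOut` on synthetic data; the within-participant z-scoring helper is a hypothetical example of feature personalization. It suits within-subject designs where each participant contributes trials from every class, and it would erase the signal in a between-group comparison such as patients versus controls.

```python
import numpy as np
from sklearn.model_selection import LeaveOneGroupOut, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def zscore_within_participant(X, groups):
    """Standardize each feature separately within each participant."""
    X = np.asarray(X, dtype=float).copy()
    for g in np.unique(groups):
        rows = groups == g
        mu = X[rows].mean(axis=0)
        sd = X[rows].std(axis=0)
        sd[sd == 0] = 1.0  # avoid division by zero for constant features
        X[rows] = (X[rows] - mu) / sd
    return X


# Synthetic data: 8 participants x 20 trials, 4 physiological features
rng = np.random.default_rng(0)
n_participants, n_trials, n_features = 8, 20, 4
groups = np.repeat(np.arange(n_participants), n_trials)
y = np.tile(np.repeat([0, 1], n_trials // 2), n_participants)

# Large participant-specific offsets mask a smaller class effect
offsets = rng.normal(0, 5, size=(n_participants, n_features))[groups]
X = offsets + y[:, None] * 1.0 + rng.normal(0, 1, size=(len(y), n_features))

X_norm = zscore_within_participant(X, groups)
clf = make_pipeline(StandardScaler(), SVC(kernel="linear"))
scores = cross_val_score(clf, X_norm, y, groups=groups, cv=LeaveOneGroupOut())
```

Each entry in `scores` is the accuracy on one held-out participant, so a wide spread across entries points to inter-individual variability rather than a uniformly weak model.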

Q4: How can I validly measure a subjective experience like "Presence" in VR? Presence ("the feeling of being there") is complex and multi-faceted. A multi-method approach is recommended:

- Physiological Measures: Use head movements, ECG/HRV, EDA, and EEG as indirect, objective correlates of presence [3].

- Behavioral Measures: Analyze user interactions, gaze control, and eye-hand coordination within the VR environment [3].

- Subjective Questionnaires: Continue to use post-experience questionnaires (e.g., the Self-Assessment Manikin, SAM) to capture the conscious, self-reported aspect of the experience, but be aware of their limitations [3] [32].

Troubleshooting Guides

Issue 1: Excessive Noise in Physiological Recordings

Problem: EEG, ECG, or other biosignals are contaminated with motion artifacts or interference, making features unusable.

| Solution Step | Action Details | Relevant Tools/Techniques |

|---|---|---|

| 1. Minimize Sources | Instruct participants to minimize non-essential movements (e.g., swallowing, extensive blinking) during critical task phases [31]. | Standardized participant instructions. |

| 2. Artifact Removal | Apply specialized algorithms to remove ocular artifacts from EEG data. For example, use SGEYESUB, which requires dedicated calibration runs where participants perform deliberate eye movements and blinks [31]. | Sparse Generalized Eye Artifact Subspace Subtraction (SGEYESUB). |

| 3. Signal Validation | Check sensor impedance and signal quality before starting the main experiment. For EEG, ensure electrode impedance is below 10 kΩ [32]. | Manufacturer's software (e.g., Unicorn Suite). |

Issue 2: Designing a VR Experiment for Emotion Induction

Problem: The virtual environment fails to elicit the intended emotional response (e.g., happiness vs. anger), confounding results.

| Solution Step | Action Details | Key Considerations |

|---|---|---|

| 1. Define Target Emotions | Select emotions with distinct valence (positive/negative) and target a specific arousal level (high/low) to facilitate clear physiological differentiation [32]. | Use the circumplex model of emotion for planning. |

| 2. Design Multisensory Cues | Combine environmental context, auditory cues, and a virtual human (VH) to reinforce the target emotion. For example, a bright natural forest with a joyful VH for happiness, versus a dim, crowded subway car with an angry VH for anger [32]. | Leverage color psychology, ambient sound, and VH body language. |

| 3. Incorporate Psychometrics | Administer standardized questionnaires like the Self-Assessment Manikin (SAM) immediately after VR exposure to validate the subjective emotional experience [32]. | Use for manipulation checks and correlating subjective with physiological data. |

Issue 3: Implementing a Multimodal Machine Learning Pipeline

Problem: Effectively fusing heterogeneous data streams (e.g., EEG, ET, HRV) for classification tasks like depression screening or error detection.

| Solution Step | Action Details | Application Example |

|---|---|---|

| 1. Feature Extraction | Identify clinically or theoretically relevant features from each modality. | In adolescent MDD screening: EEG Theta/Beta ratio, ET saccade count, HRV LF/HF ratio [30]. |

| 2. Model Selection & Training | Choose a classifier suitable for your feature set and sample size. Support Vector Machines (SVM) have been successfully used with multimodal physiological features [30]. | An SVM model using EEG, ET, and HRV features achieved 81.7% accuracy in classifying adolescent depression [30]. |

| 3. Hybrid Approach | Combine a primary signal (e.g., EEG) with a secondary, easily acquired signal (e.g., pupil size) to boost performance, especially in setups with a reduced number of EEG channels [31]. | A hybrid EEG + pupil size classifier improved error-detection performance in a VR flight simulation compared to EEG alone [31]. |
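
Feature-level (early) fusion, the simplest way to combine the modalities in the table above, can be sketched as follows. The data are synthetic and the feature counts, effect sizes, and SVM hyperparameters are placeholders, so the resulting accuracies say nothing about the cited studies. Placing the scaler inside the pipeline keeps standardization statistics from leaking out of the training folds.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

rng = np.random.default_rng(42)
n = 60
y = np.repeat([0, 1], n // 2)

# One row per participant; columns stand in for per-modality features
eeg_feats = rng.normal(0, 1, (n, 1)) + 0.8 * y[:, None]   # e.g., theta/beta ratio
et_feats = rng.normal(0, 1, (n, 2)) - 0.5 * y[:, None]    # e.g., saccade count, fixation duration
hrv_feats = rng.normal(0, 1, (n, 1)) + 0.6 * y[:, None]   # e.g., LF/HF ratio

# Early fusion: concatenate modality features into a single vector
X = np.hstack([eeg_feats, et_feats, hrv_feats])

model = make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0))
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
acc = cross_val_score(model, X, y, cv=cv, scoring="accuracy")
```

With one row per participant, ordinary stratified folds are appropriate; with several rows per participant, the folds would need to be grouped by participant instead.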

The table below summarizes key physiological biomarkers identified in recent VR studies, which can serve as benchmarks for your own research.

Table 1: Experimentally Derived Physiological Biomarkers in VR Research

| Study Focus | Modality | Key Biomarker(s) | Performance / Effect |

|---|---|---|---|

| Adolescent MDD Screening [30] | EEG | Theta/Beta Ratio | Significantly higher in MDD group (p<.05), associated with severity. |

| Adolescent MDD Screening [30] | Eye-Tracking (ET) | Saccade Count, Fixation Duration | Reduced saccades, longer fixations in MDD (p<.05). |

| Adolescent MDD Screening [30] | Heart Rate (HRV) | LF/HF Ratio | Elevated in MDD group (p<.05), associated with severity. |

| Adolescent MDD Screening [30] | Multimodal (SVM Model) | Combined EEG+ET+HRV features | 81.7% classification accuracy, AUC=0.921. |

| Error Processing in VR Flight Sim [31] | Pupillometry | Pupil Dilation | Significantly larger after errors; usable for single-trial decoding. |

| Error Processing in VR Flight Sim [31] | Hybrid Classification | EEG + Pupil Size | Improved performance over EEG-only with a reduced channel setup. |

Experimental Protocols

Protocol 1: A 10-Minute VR Emotional Task for Depression Screening

This protocol is adapted from a case-control study that successfully differentiated adolescents with Major Depressive Disorder (MDD) from healthy controls [30].

1. Participant Preparation: Recruit participants based on clear inclusion/exclusion criteria (e.g., confirmed MDD diagnosis vs. no psychiatric history). Obtain ethical approval and informed consent/assent.

2. VR Setup & Calibration: Use a custom VR environment (e.g., developed in A-Frame) displaying a calming, immersive scenario (e.g., a magical forest). Integrate an AI agent for interactive dialogue. Calibrate all physiological sensors (EEG, ET, HRV).

3. Experimental Task: Participants engage in a 10-minute interactive dialogue with the AI agent "Xuyu." The agent follows a scripted protocol to explore themes of personal worries, distress, and future hopes.

4. Data Recording: Synchronously record EEG, eye-tracking (saccades, fixations), and ECG (for HRV) throughout the entire VR exposure.

5. Post-Task Assessment: Administer a depression severity scale (e.g., CES-D) to correlate with the physiological biomarkers.

6. Data Analysis: Extract features (e.g., EEG theta/beta ratio, saccade count, HRV LF/HF ratio). Use statistical analysis (t-tests) to find group differences and train a machine learning classifier (e.g., SVM).
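
As an illustration of the feature-extraction step, the EEG theta/beta ratio can be estimated from a power spectral density. The sketch below uses Welch's method from SciPy on a synthetic signal; the band limits (4-8 Hz theta, 13-30 Hz beta) and the 2-second window are common conventions assumed here, not parameters reported in the cited study.

```python
import numpy as np
from scipy.signal import welch


def band_power(freqs, psd, lo, hi):
    """Approximate the power in [lo, hi) by summing PSD bins times bin width."""
    mask = (freqs >= lo) & (freqs < hi)
    return psd[mask].sum() * (freqs[1] - freqs[0])


def theta_beta_ratio(eeg, fs, theta=(4.0, 8.0), beta=(13.0, 30.0)):
    """Ratio of theta-band to beta-band power for one EEG channel."""
    freqs, psd = welch(eeg, fs=fs, nperseg=int(2 * fs))
    return band_power(freqs, psd, *theta) / band_power(freqs, psd, *beta)


# Synthetic channel: a 6 Hz component at twice the amplitude of a 20 Hz one
fs = 250
t = np.arange(0, 20, 1 / fs)
eeg = 2.0 * np.sin(2 * np.pi * 6 * t) + 1.0 * np.sin(2 * np.pi * 20 * t)

tbr = theta_beta_ratio(eeg, fs)
```

Because power scales with amplitude squared, the synthetic signal should yield a ratio close to 4, which makes it a convenient sanity check before running the function on real recordings.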

Protocol 2: Emotion Induction Using a Virtual Human

This protocol details a method for eliciting specific emotional states (happiness, anger) using a Virtual Human (VH) [32].

1. VR Environment Design:

   - Happiness Induction: Create a bright, natural outdoor forest environment.

   - Anger Induction: Create a dimly lit, confined subway car environment.

2. Virtual Human Design:

   - Employ a professional actor and motion capture (e.g., Vicon system) to create realistic, emotionally congruent VH body language and facial expressions (e.g., Duchenne smile for happiness, clenched fists for anger).

   - Record the VH's speech in a studio, adjusting acoustic features (intonation, fundamental frequency) to match the target emotion.

3. Experimental Procedure:

   - Baseline Recording: Record physiological signals (EEG, BVP, GSR, Skin Temp) for 3-5 minutes while the participant is at rest.

   - VR Exposure: Immerse the participant in one of the two VEs for approximately 3 minutes. During this time, the VH delivers a 90-second emotionally charged monologue.

   - Repeat: After a washout period, expose the participant to the other VE (counterbalanced order).

4. Data Collection:

   - Physiological: Record EEG, BVP, GSR, and skin temperature throughout.

   - Subjective: Immediately after each VE, have participants complete the Self-Assessment Manikin (SAM) to report valence, arousal, and dominance.
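
The counterbalanced order and the use of the resting baseline can be made explicit in the session setup code. This is a small sketch with hypothetical helper names; assigning orders by participant index and subtracting the baseline mean are simple, commonly used choices rather than details taken from the cited protocol.

```python
from itertools import permutations

CONDITIONS = ("happiness", "anger")


def assign_order(participant_index, conditions=CONDITIONS):
    """Cycle through every possible condition order across participants."""
    orders = list(permutations(conditions))
    return orders[participant_index % len(orders)]


def baseline_correct(exposure_mean, baseline_mean):
    """Express a physiological measure as its change from resting baseline."""
    return exposure_mean - baseline_mean


# Consecutive participants alternate between the two possible orders
schedule = {f"P{i + 1:02d}": assign_order(i) for i in range(4)}

# Example: mean skin conductance of 2.5 at rest and 4.0 during exposure
gsr_change = baseline_correct(exposure_mean=4.0, baseline_mean=2.5)
```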

Signaling Pathways and Workflows

Diagram: Multimodal VR Experiment Workflow

Diagram: Conceptual Framework for Data Fusion

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for a Multimodal VR Research Laboratory

| Item Category | Specific Examples | Primary Function |

|---|---|---|

| VR Hardware | HTC VIVE Pro 2, HP Reverb G2 Omnicept | Provides immersive visual experience and often integrates built-in sensors (e.g., eye-tracking, PPG) [31] [32]. |

| Physiological Acq. | BIOPAC MP160 System, Empatica E4 wristband, Unicorn Hybrid Black EEG | Records high-fidelity biosignals: EEG, ECG, GSR, BVP, Skin Temperature [30] [32]. |

| Motion Capture | Vicon System, Inertial Measurement Units (IMUs) | Captures precise body and hand movements for behavioral analysis and VH animation [3] [32]. |

| VR Development | Unity Engine, Unreal Engine 5, A-Frame framework | Software platforms for creating and controlling custom virtual environments and experimental paradigms [30] [31] [32]. |

| Data Analysis | Python (with Scikit-learn, MNE), SVM Classifiers, SGEYESUB algorithm | Tools for signal processing, artifact removal, feature extraction, and machine learning modeling [30] [31]. |

Building Your Pipeline: Methodologies for Capturing and Analyzing VR Behavior

Framework Comparison Tables

Technical Specifications and Data Handling

| Feature | ManySense VR | Unity Experiment Framework (UXF) | XR Interaction Toolkit (XRI) |

|---|---|---|---|

| Primary Purpose | Context data collection for personalization [2] | Structuring and running behavioral experiments [17] [35] | Enabling core VR interactions (grab, UI, locomotion) [36] |

| Key Strength | Extensible multi-sensor data fusion [2] | Automated trial-based data collection & organization [17] [37] | High-quality, pre-built interactions for VR/AR [36] |

| Data Output | Unified context data from diverse sensors [2] | Trial-by-trial behavioral data & continuous positional tracking in CSV files [35] | Not primarily a data collection framework |

| Extensibility | High, via dedicated data managers for new sensors [2] | High, supports custom trackers and measurements [35] | High, component-based architecture [36] |

| Best For | Research on context-aware VR (e.g., affective computing) [2] [38] | Human behavior experiments requiring rigid trial structure [17] | Rapid prototyping of interactive VR applications [36] |

Performance and Development Considerations

| Aspect | ManySense VR | Unity Experiment Framework (UXF) | XR Interaction Toolkit (XRI) |

|---|---|---|---|

| Development Activity | Academic research project [2] [38] | Active open-source project [35] [37] | Officially supported by Unity [36] |

| Performance Impact | Good processor usage, frame rate, and memory footprint [2] | Multithreaded file I/O to prevent framerate drops [37] | Varies with interaction complexity; part of Unity's core XR stack [36] |

| Implementation Ease | Evaluated as easy-to-use and learnable [2] | Designed for readability and fits Unity's component system [35] | Medium; uses modern Unity systems like the Input System [36] |

| Target Environment | VR for the metaverse [2] | VR, Desktop, and Web-based experiments [35] | VR, AR, and MR (Multiple XR Environments) [36] |

| Community & Support | Research paper documentation [2] | GitHub repository, Wiki, and example projects [35] | Official Unity documentation and community [36] |

Experimental Protocols and Methodologies

Protocol 1: Implementing a Context-Aware Embodiment VR Scene with ManySense VR

This methodology details the procedure for using ManySense VR to create an avatar that synchronizes with a user's real-world bodily actions, a key case study in its original research [2].

1. Objective: To develop a VR scene where the user's virtual avatar is dynamically controlled by data from multiple physiological and motion sensors, enabling rich embodiment [2].

2. Research Reagent Solutions (Key Materials):

| Item | Function in the Experiment |

|---|---|

| Eye Tracker | Measures gaze direction and blink states to drive avatar eye animations [2]. |

| Facial Tracker | Captures user's facial expressions for synchronization with the avatar's face [2]. |

| Motion Controllers | Provides standard input for head and hand pose tracking [2]. |

| Physiological Sensors (EEG, GSR, Pulse) | Collects context data (e.g., cognitive load, arousal) for potential real-time personalization [2]. |

| ManySense VR Framework | Unifies data collection from all above sensors and provides a clean API for the VR application [2]. |

3. Workflow Diagram:

4. Procedure:

- Step 1: Framework Integration. Import the ManySense VR framework into the Unity project [2].

- Step 2: Sensor Configuration. For each required sensor (e.g., eye tracker, facial tracker), instantiate and configure the corresponding dedicated Data Manager component within ManySense VR [2].

- Step 3: Avatar Rigging. Prepare the 3D avatar model with a standard skeletal rig and animation controllers.

- Step 4: Data Binding. In the application logic, subscribe to the relevant data streams from ManySense VR. Map incoming data (e.g., eye tracking values, facial expression coefficients) to the corresponding parameters of the avatar's animation controller.

- Step 5: Context Logging. Utilize ManySense VR's built-in logging capabilities to record all context data for post-session analysis [2].

Protocol 2: Designing a VR Behavioral Study with UXF

This protocol outlines the standard method for constructing a structured human behavior experiment in VR using the Unity Experiment Framework (UXF) [17] [35].

1. Objective: To create a VR experiment with a session-block-trial structure for the rigorous collection of behavioral and continuous tracking data [17].

2. Research Reagent Solutions (Key Materials):

| Item | Function in the Experiment |

|---|---|

| UXF Framework | Provides the core session-block-trial structure and automates data saving [17] [35]. |

| PositionRotationTracker | A UXF component attached to GameObjects (e.g., HMD, controllers) to log their movement [35]. |

| Settings System | UXF's cascading JSON-based system for defining independent variables at session, block, or trial levels [35]. |

| UI Prefabs | UXF's customizable user interface for collecting participant details and displaying instructions [35]. |

3. Workflow Diagram:

4. Procedure:

- Step 1: Experiment Specification. In a script (e.g., `ExperimentBuilder`), programmatically create the session structure by defining blocks and trials. Use the `settings` property of trials and blocks to assign independent variables [35].

- Step 2: Experiment Implementation. Create another script (e.g., `SceneManipulator`) that responds to the session's `OnTrialBegin` and `OnTrialEnd` events. In these methods, use the trial's settings to present the correct stimulus and record the participant's responses (dependent variables) to the `trial.result` dictionary [35].

- Step 3: Continuous Tracking. Add the `PositionRotationTracker` component to any GameObject (e.g., the player's HMD or a stimulus) whose movement needs to be recorded throughout the trial [35].

- Step 4: Data Output. UXF automatically saves data upon trial completion. The main `trial_results.csv` file contains trial-by-trial behavioral data, while continuous tracker data is saved in separate CSV files, linked via file paths in the main results file [35].
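
On the analysis side, the linked-file layout described in Step 4 can be reassembled with pandas. The sketch below builds a tiny synthetic session so that it runs on its own; apart from `trial_results.csv`, every file and column name (`trial_num`, `reaction_time`, `hmd_tracker_file`, `time`, `pos_x`) is a placeholder to be replaced with the headers found in your own output, and it assumes the stored paths are relative to the session folder.

```python
import tempfile
from pathlib import Path

import pandas as pd


def load_session(session_dir, path_column):
    """Load trial-level results and stack the continuous files they point to."""
    session_dir = Path(session_dir)
    trials = pd.read_csv(session_dir / "trial_results.csv")
    frames = []
    for _, row in trials.iterrows():
        track = pd.read_csv(session_dir / row[path_column])
        track["trial_num"] = row["trial_num"]  # tag samples with their trial
        frames.append(track)
    return trials, pd.concat(frames, ignore_index=True)


# Build a two-trial synthetic session with hypothetical column names
with tempfile.TemporaryDirectory() as tmp:
    tmp = Path(tmp)
    pd.DataFrame({"time": [0.0, 0.1], "pos_x": [0.0, 0.2]}).to_csv(
        tmp / "hmd_T001.csv", index=False)
    pd.DataFrame({"time": [1.0, 1.1], "pos_x": [0.5, 0.4]}).to_csv(
        tmp / "hmd_T002.csv", index=False)
    pd.DataFrame({
        "trial_num": [1, 2],
        "reaction_time": [0.42, 0.37],
        "hmd_tracker_file": ["hmd_T001.csv", "hmd_T002.csv"],
    }).to_csv(tmp / "trial_results.csv", index=False)

    trials, continuous = load_session(tmp, path_column="hmd_tracker_file")

# Attach trial-level variables to every continuous sample
merged = continuous.merge(trials[["trial_num", "reaction_time"]], on="trial_num")
```

Joining on the trial number gives each tracker sample its trial's dependent variables, which is the shape most time-series analyses expect.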

Troubleshooting Guides and FAQs

Frequently Asked Questions

Q1: Can these frameworks be used together in a single project? Yes, they can be complementary. For instance, you can use the XR Interaction Toolkit to handle core VR interactions like grabbing and UI, the ManySense VR framework to collect specialized physiological sensor data, and the UXF to structure the overall session into trials and automatically manage the saving of all data types [2] [35] [36]. Ensure you manage dependencies and execution order carefully.

Q2: Which framework is best for a study requiring precise trial-by-trial data logging? The Unity Experiment Framework is specifically designed for this purpose. Its core architecture is built around the session-block-trial model, and it automatically handles the timing and organization of data into clean CSV files, with one row per trial and linked files for continuous data [17] [35].

Q3: We need to integrate a custom biosensor. How extensible are these frameworks? Both ManySense VR and UXF are designed for extensibility. ManySense VR allows you to add new sensors by creating a dedicated Data Manager component that fits into its unifying framework [2]. UXF allows you to create custom Tracker classes to measure and log any variable over time during a trial [35].

Troubleshooting Common Issues

Issue: Inconsistent or dropped data frames during recording.

- Checklist:

- Performance Profiling: Use Unity's Profiler to identify CPU or memory bottlenecks. High processor usage during file I/O can cause frames to drop.

- Multithreading: If using UXF, ensure you are using a version with its multithreaded data saving feature, which prevents framerate drops by performing file operations on a separate thread [37].

- Update Loops: If writing custom data collection code, ensure it runs in the correct update loop (`Update` vs. `FixedUpdate` vs. `LateUpdate`) to avoid missing frames.
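Before working through the checklist, it helps to confirm from the recorded data that frames are actually being dropped. The following is a minimal Python sketch, assuming you have a list of per-sample timestamps in seconds from a tracker file; the 90 Hz target and the 1.5x tolerance are illustrative defaults, not values prescribed by any of the frameworks.

```python
def find_dropped_frames(timestamps, target_hz=90.0, tolerance=1.5):
    """Return (index, gap_seconds) for every gap longer than expected.

    A gap is flagged when it exceeds `tolerance` times the nominal frame
    period. Both parameters are assumptions to tune for your hardware.
    """
    period = 1.0 / target_hz
    gaps = []
    for i in range(1, len(timestamps)):
        gap = timestamps[i] - timestamps[i - 1]
        if gap > tolerance * period:
            gaps.append((i, gap))
    return gaps
```

If the flagged gaps cluster around trial boundaries, file I/O at trial end is a likely cause; gaps spread evenly through the recording point more towards rendering load.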

Issue: Difficulty querying or managing data from multiple sensors in ManySense VR.

- Background: A formative evaluation of ManySense VR pointed out that its data query method could be inconvenient or error-prone [2].

- Solution:

- Leverage the provided extensible context data managers for each sensor type to ensure data is properly formatted upon collection [2].

- Design and implement an intermediate data abstraction layer in your application that listens to the relevant ManySense VR data streams and repackages them into a format better suited to your specific query needs.

Issue: Locomotion or interaction feels unpolished when using the XR Interaction Toolkit.

- Background: The XR Interaction Toolkit provides the components, but fine-tuning is often required [36].

- Checklist:

- Input Actions: Verify that your Input Action bindings in the Unity Input System are correctly set up for your target devices.

- Interaction Settings: Examine the components on your Interactable objects. Parameters like hover, select, and activate thresholds can be adjusted to fine-tune the feel of interactions.

- Physics: For realistic interactions, ensure that the Rigidbody and Collider components on interactable objects are configured appropriately (e.g., mass, drag).

FAQs & Troubleshooting Guides

This section addresses common technical challenges researchers face when setting up and conducting multi-modal data acquisition in virtual reality (VR) environments.

Q1: What is the most reliable method for synchronizing data streams from eye-trackers, EEG, and other physiological sensors?

A: Hardware synchronization via shared trigger signals is the gold standard. A common and robust method involves using a single computer to present stimuli and record data from all devices. Synchronization can be achieved by having the stimulus presentation software send precise electrical pulse triggers (e.g., via a parallel port or a dedicated data acquisition card) that are simultaneously recorded by all data acquisition systems [39]. For software-based synchronization, ensure all systems are connected to the same network and use a common time server (Network Time Protocol) to align timestamps during post-processing. Always record synchronization validation triggers at the start and end of each experimental block.
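Recording triggers at both the start and end of a block makes it possible to correct for clock offset and drift in post-processing. The sketch below shows one simple way to do this in Python, assuming that drift is approximately linear within a block; the function and parameter names are illustrative and do not come from any particular acquisition package.

```python
def make_clock_mapper(device_start, device_end, ref_start, ref_end):
    """Build a function mapping device timestamps onto a reference clock.

    The mapping is the straight line through the two shared triggers,
    which corrects both a constant offset and a linear drift.
    """
    if device_end == device_start:
        raise ValueError("start and end triggers must have different timestamps")
    scale = (ref_end - ref_start) / (device_end - device_start)

    def to_reference(t_device):
        return ref_start + (t_device - device_start) * scale

    return to_reference
```

Comparing the mapped end trigger against the reference end trigger from an independent third trigger, if one is available, gives a direct estimate of residual alignment error.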

Q2: During our VR experiments, we encounter excessive motion artifacts in the EEG data. What steps can we take to mitigate this?

A: Motion artifacts are a common challenge in VR EEG studies. To address this:

- Use Dry Electrode Systems: Consider modern dry electrode EEG headsets, which are designed to handle higher contact impedances and are less susceptible to movement. Some systems feature ultra-high impedance amplifiers and mechanical isolation designs to stabilize electrodes for artifact-free recordings even during participant movement [40].

- Optimize Electrode Placement: Ensure a secure and comfortable fit. Electrodes should maintain stable contact with the scalp without causing discomfort, which can lead to fidgeting.

- Incorporate Reference Sensors: Utilize systems that include motion sensors (accelerometers/gyroscopes) to record head movement. This data can later be used to model and subtract motion artifacts from the EEG signal during analysis.

- Secure Cables: While participants should be able to move naturally, minimize cable sway by using wireless systems or by properly securing and suspending cables.
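The reference-sensor approach can be illustrated with a deliberately simplified Python sketch: ordinary least-squares regression of a single motion channel out of a single EEG channel. This is an assumption-laden toy model (one regressor, no time lags, stationary coupling) meant only to show the principle of modeling and subtracting the artifact, not a replacement for a full artifact-removal pipeline.

```python
def regress_out(signal, reference):
    """Subtract the least-squares linear contribution of `reference`.

    `signal` is one EEG channel and `reference` one motion channel,
    sampled at the same instants. Returns the cleaned signal as a list.
    """
    n = len(signal)
    if n == 0 or n != len(reference):
        raise ValueError("signal and reference must be non-empty and equal in length")
    mean_s = sum(signal) / n
    mean_r = sum(reference) / n
    var_r = sum((r - mean_r) ** 2 for r in reference)
    if var_r == 0:
        return list(signal)  # a constant reference explains nothing
    cov = sum((s - mean_s) * (r - mean_r) for s, r in zip(signal, reference))
    beta = cov / var_r  # estimated coupling between motion and EEG
    return [s - beta * (r - mean_r) for s, r in zip(signal, reference)]
```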

Q3: Our participants report cybersickness, which disrupts data collection. How can we reduce its occurrence?

A: Cybersickness can introduce significant noise and lead to participant dropout.

- Optimize VR Locomotion: Avoid artificial locomotion (e.g., joystick-controlled movement) when possible. Use teleportation or room-scale setups that align with physical movement.

- Ensure High Frame Rates: Maintain a consistent and high frame rate (90 Hz or higher) to reduce latency, which is a primary contributor to cybersickness.

- Provide Adequate Acclimatization: Allow participants ample time to get used to the VR headset and controls in a neutral environment before starting the experimental protocol.

- Monitor Physiological Markers: Track physiological signals like electrodermal activity (EDA) and heart rate variability (HRV) in real-time, as they can be early indicators of simulator sickness, allowing you to pause the session if necessary [3].

Q4: The contrast ratios in our experimental diagrams are insufficient. How do we calculate and ensure adequate color contrast?

A: To ensure readability and accessibility, the contrast ratio between foreground (e.g., text) and background colors should meet the Web Content Accessibility Guidelines (WCAG) AA minimum of 4.5:1 for standard text. You can programmatically calculate the contrast ratio using a standardized formula [41].

First, calculate the relative luminance of a color (RGB values from 0-255):

- Convert each color channel value: `v = v/255`

- Linearize each value: if `v <= 0.03928`, use `v/12.92`; otherwise use `((v + 0.055)/1.055)^2.4`

- Calculate luminance (L): `L = (R * 0.2126) + (G * 0.7152) + (B * 0.0722)`

Then, calculate the contrast ratio (CR) between two colors with luminances L1 and L2 (where L1 > L2):

CR = (L1 + 0.05) / (L2 + 0.05)
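The two formulas above translate directly into a few lines of Python. The sketch below follows the steps exactly as stated (including the 0.03928 linearization threshold); the function names are illustrative, and the 4.5:1 default in `passes_aa` is the AA threshold for standard text quoted earlier.

```python
def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB'."""
    hex_color = hex_color.lstrip("#")
    # Scale each 0-255 channel to the 0-1 range.
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Linearize each channel.
    r, g, b = [
        c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
        for c in channels
    ]
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color_a, color_b):
    """Contrast ratio between two colors, always >= 1."""
    lum_a = relative_luminance(color_a)
    lum_b = relative_luminance(color_b)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(color_a, color_b, threshold=4.5):
    """True if the pair meets the given contrast threshold."""
    return contrast_ratio(color_a, color_b) >= threshold
```

As a sanity check, `contrast_ratio("#000000", "#FFFFFF")` evaluates to 21, the largest ratio the formula can produce.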

The table below shows the contrast ratios for common color pairs, using the specified palette [42].

Color Contrast Analysis

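| Color 1 (Hex) | Color 2 (Hex) | Contrast Ratio (calculated) | Passes WCAG AA (4.5:1)? |
|---|---|---|---|
| #4285F4 (Blue) | #FFFFFF (White) | 3.56 | No (meets only the 3:1 large-text threshold) |
| #EA4335 (Red) | #FFFFFF (White) | 3.92 | No (meets only the 3:1 large-text threshold) |
| #FBBC05 (Yellow) | #FFFFFF (White) | 1.71 | No |
| #34A853 (Green) | #FFFFFF (White) | 3.06 | No (meets only the 3:1 large-text threshold) |
| #4285F4 (Blue) | #EA4335 (Red) | 1.10 | No |

Key Insight: Applying the formula above, none of these palette colors reaches the 4.5:1 AA threshold against white. Blue, red, and green clear only the 3:1 threshold that WCAG AA allows for large text, mid-tone yellow (#FBBC05) falls well short of both, and the palette colors contrast poorly with each other [43]. For text and thin lines in diagrams, prefer high-contrast combinations such as dark grey/white, and limit the palette colors to large elements.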

Experimental Protocols & Methodologies

This section provides a detailed, actionable protocol for a simultaneous EEG and eye-tracking study in VR, based on validated experimental designs [39].

Protocol: Synchronized EEG and Eye-Tracking in a VR Environment

1. Objective: To capture and analyze the neural and visual attention correlates of participants performing a target detection task within a virtual reality environment.

2. Materials and Equipment: The table below lists the essential research reagents and solutions for this experiment.

Research Reagent Solutions & Essential Materials

| Item | Function / Application |

|---|---|

| VR-Capable Laptop/Workstation | Renders the immersive VR environment in real-time. |

| Immersive VR Headset | Presents the virtual environment; often includes integrated eye-tracking. |

| EEG System (32-channel) | Records electrical brain activity (e.g., NE Enobio 32 system) [39]. |

| Eye Tracker | Records gaze patterns and pupil dilation (e.g., SMI RED250) [39]. |

| Conductive Electrode Gel | Ensures good electrical contact between EEG electrodes and the scalp. |

| Skin Preparation Abrasion Gel | Lightly abrades the skin to lower impedance for EEG and other physiological sensors. |

| Disinfectant Solution & Wipes | For cleaning EEG electrodes and other reusable equipment between participants. |

| Data Acquisition Software | Records and synchronizes multiple data streams (e.g., LabStreamingLayer, Unity). |

3. Participant Setup and Calibration:

- EEG Application: Apply 32 conductive silver chloride gel electrodes according to the international 10-20 system. Check the impedance of each electrode before recording and ensure it is maintained below 5 kΩ [39].

- Eye-Tracking Calibration: Perform a 13-point eye-tracking calibration at the start of the experiment. Repeat the calibration until the error between any two measurements at a single point is less than 0.5° or the average error across all points is less than 1° [39].

- VR Headset Fitting: Ensure the headset is comfortable and properly aligned. Re-check the eye-tracking calibration after donning the headset if possible.

4. Experimental Workflow: The following diagram outlines the sequential workflow for a typical experimental session.

5. Data Temporal Alignment:

Since EEG and eye-tracking data may be recorded on different computers or with different sampling rates, temporal alignment is critical. Use shared hardware triggers (keyboard inputs) recorded at the beginning and end of each block. The conversion between eye-tracking time (T_ET) and EEG time (T_EEG) can be calculated using the formula [39]:

T_EEG * 2 = ((T_ET - b) / 1000)

Where b is a baseline offset determined from the synchronization trigger.
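The conversion can be applied in two steps: estimate b from one synchronization trigger seen by both systems, then convert any eye-tracking timestamp. The Python sketch below implements the formula exactly as written. The units are not stated here; one plausible reading, offered only as an assumption, is that T_ET is in microseconds and T_EEG counts samples at 500 Hz (2 ms per sample). The function names are illustrative.

```python
def baseline_offset(t_et_trigger, t_eeg_trigger):
    """Solve T_EEG * 2 = (T_ET - b) / 1000 for b at a shared trigger."""
    return t_et_trigger - 2000.0 * t_eeg_trigger


def et_to_eeg(t_et, b):
    """Convert an eye-tracking timestamp to EEG time using offset b."""
    return (t_et - b) / 1000.0 / 2.0
```

Estimating b separately from the start and end triggers of each block and comparing the two values is a quick check for clock drift between the systems.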

Data Integration & Analytical Framework

Integrating the multi-modal data requires a structured framework. The following diagram illustrates the pathway from raw data acquisition to a unified analytical model.

Key Analytical Steps:

- Pre-processing: Clean each data stream independently. For EEG, this involves band-pass filtering, artifact removal (e.g., ICA), and segmenting into epochs. For eye-tracking, this involves detecting fixations, saccades, and areas of interest (AOIs).

- Synchronization: Use the recorded triggers to align all data streams onto a common, millisecond-precise timeline.

- Feature Engineering: Extract meaningful features from each modality for the analysis period. Examples include:

- Data Fusion: Create a unified dataset where each row represents an experimental trial or time window, with columns for all extracted features from every sensor. This fused dataset becomes the input for statistical analysis or machine learning models to identify complex behavioral phenotypes or digital biomarkers.
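The data fusion step can be sketched in plain Python as a join on trial identifier. This is a minimal illustration, assuming features have already been extracted into one dictionary per modality keyed by trial; the modality prefixes and feature names used in the usage example (`alpha_power`, `fixation_count`) are hypothetical placeholders.

```python
def fuse_features(*modalities):
    """Merge per-trial features from several modalities into one row per trial.

    Each modality is a (prefix, {trial_id: {feature_name: value}}) pair.
    Only trials present in every modality are kept, so each fused row
    has a value in every column.
    """
    if not modalities:
        return []
    common = set.intersection(*(set(features) for _, features in modalities))
    rows = []
    for trial_id in sorted(common):
        row = {"trial": trial_id}
        for prefix, features in modalities:
            for name, value in features[trial_id].items():
                # Prefix column names so modalities cannot collide.
                row[f"{prefix}_{name}"] = value
        rows.append(row)
    return rows
```

For example, `fuse_features(("eeg", {1: {"alpha_power": 0.4}}), ("gaze", {1: {"fixation_count": 12}}))` yields a single row with the columns `trial`, `eeg_alpha_power`, and `gaze_fixation_count`. Dropping incomplete trials is a design choice made here for simplicity; keeping them with explicit missing values is equally valid if the downstream model handles missing data.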

Data Format Troubleshooting Guide

Q1: Why is my virtual reality behavioral data file so large and difficult to process sequentially? Large, complex VR datasets in a single JSON file can strain memory and hinder rapid analysis. This is common when storing continuous data streams like head tracking, eye movement, and controller inputs.

- Problem: A monolithic JSON file containing an entire experimental session becomes slow to load and parse.

- Solution: Implement NDJSON (Newline Delimited JSON).

- Methodology: Instead of one large array, structure your data so each individual event or record is a valid JSON object separated by a newline.

- Example: A VR fear-of-heights experiment would store each participant's step onto a virtual plank, heart rate data point, and gaze direction as separate lines in a single `.ndjson` file.

- Benefit: This enables efficient, line-by-line processing (e.g., using a simple `while` loop) without loading the entire dataset into memory, facilitating real-time analysis and parallel processing [44].
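A minimal Python sketch of the NDJSON approach follows, using only the standard library. The event fields in the usage example (`t`, `type`, `bpm`) are hypothetical; any JSON object per line works.

```python
import json


def write_ndjson(path, records):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")


def iter_ndjson(path):
    """Yield records one at a time without loading the whole file."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # tolerate blank lines
                yield json.loads(line)
```

Because `iter_ndjson` is a generator, a filter such as `(e for e in iter_ndjson("session.ndjson") if e["type"] == "heart_rate")` holds only one record in memory at a time, regardless of file size.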

Q2: How should I store binary data from VR experiments, like screen recordings or physiological data, alongside my JSON metadata? JSON is inefficient for binary data, leading to significant size overhead. The solution depends on your system's architecture.
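The size overhead is easy to verify. Base64 represents every 3 bytes of binary input as 4 ASCII characters, so the encoded payload is about one third larger than the original. The short Python sketch below measures this with the standard library; the function name is illustrative.

```python
import base64


def base64_overhead(n_bytes):
    """Ratio of Base64-encoded size to raw size for n_bytes of data."""
    raw = bytes(n_bytes)  # zero-filled placeholder payload
    encoded = base64.b64encode(raw)
    return len(encoded) / len(raw)
```

The ratio depends only on the payload length, not its content, so a zero-filled buffer is sufficient for the measurement.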

- Problem: Encoding a 10MB screen recording in Base64 within a JSON field increases its size to approximately 13.3MB [44] [45].

- Solution 1: Use a Binary-Serialized Format like BSON.

- Methodology: Convert your JSON documents, including metadata and any embedded binary data, into BSON (Binary JSON) for storage. BSON natively supports types like `binData` and `date` [44].

- Benefit: This avoids the Base64 overhead and preserves type information, making it suitable for database-level storage, as used natively by MongoDB [44].

- Solution 2: Use a Multi-Part Approach.