Managing Large-Scale Diffusion Tensor MRI Datasets: A Comprehensive Guide for Behavioral Research and Drug Development

This article provides a comprehensive framework for handling the unique challenges posed by large Diffusion Tensor Imaging (DTI) datasets in behavioral studies and drug development research.

Managing Large-Scale Diffusion Tensor MRI Datasets: A Comprehensive Guide for Behavioral Research and Drug Development

Abstract

This article provides a comprehensive framework for handling the unique challenges posed by large Diffusion Tensor Imaging (DTI) datasets in behavioral studies and drug development research. Covering the entire data lifecycle, we explore DTI fundamentals and the specific nature of big DTI data, methodological approaches for acquisition and analysis, troubleshooting for common artifacts and performance bottlenecks, and validation strategies for multi-center studies. With a focus on practical implementation, we discuss advanced techniques including deep learning acceleration, data harmonization methods, and quality control protocols to ensure reproducible and clinically translatable results. This guide equips researchers and pharmaceutical professionals with the knowledge to optimize DTI workflows from data acquisition through analysis in large-scale studies.

Understanding DTI Fundamentals and Large-Scale Data Challenges

Core Principles & Quantitative Metrics

Frequently Asked Questions

What is the fundamental physical principle behind DTI? Diffusion Tensor Imaging (DTI) measures the Brownian motion (random thermal motion) of water molecules within biological tissues [1]. This movement is not always uniform (isotropic); in structured tissues like white matter, the parallel bundles of axons and their myelin sheaths restrict water diffusion, making it directionally dependent (anisotropic) [2] [1]. DTI quantifies this directionality and magnitude of water diffusion to infer microscopic structural details about tissue architecture.

What does the "Tensor" in DTI represent? The tensor is a 3x3 symmetric matrix that mathematically models the local diffusion properties of water in three-dimensional space within each voxel (volume element) of an image [3]. This model allows for the calculation of both the degree of anisotropy and the primary direction of diffusion, providing a more complete picture than a single scalar value [1].

How is the health of a white matter tract reflected in DTI metrics? Changes in DTI metrics are highly sensitive to microstructural alterations. For example, a decrease in Fractional Anisotropy (FA) or an increase in Mean Diffusivity (MD) often indicates axonal injury or loss of structural integrity, which can occur in conditions like traumatic brain injury [1]. Radial diffusivity is particularly associated with myelin pathology, often increasing with demyelination [1].

Quantitative DTI Metrics Table

The following table summarizes the key quantitative metrics derived from DTI, which are essential for analyzing large datasets in research.

| Metric | Acronym | Description | Typical Change in Pathology |

|---|---|---|---|

| Fractional Anisotropy | FA | Quantifies the directionality of water diffusion (0=isotropic, 1=anisotropic) [1]. | Decrease (e.g., axonal damage) [1]. |

| Mean Diffusivity | MD | Represents the average rate of molecular diffusion, also known as Apparent Diffusion Coefficient (ADC) [1]. | Increase (e.g., edema, necrosis) [1]. |

| Axial Diffusivity | AD | Measures the rate of diffusion parallel to the primary axis of the axon [1]. | Increase with axonal degeneration [1]. |

| Radial Diffusivity | RD | Measures the rate of diffusion perpendicular to the primary axon direction [1]. | Increase with demyelination [1]. |

Experimental Protocols & Data Handling

Frequently Asked Questions

What is a major data quality concern in DTI acquisition and how can it be mitigated? DTI data, often acquired using Single-shot Echo Planar Imaging (EPI), is susceptible to artifacts from eddy currents and patient motion due to its low signal-to-noise ratio (SNR) and long scan times [3]. Mitigation strategies include:

- Using parallel imaging techniques (e.g., SENSE, GRAPPA) to shorten the readout time, which reduces sensitivity to motion and geometric distortions [3].

- Increasing the number of diffusion gradient directions (e.g., 6, 9, 33, or more) to increase confidence in the accuracy of the tensor estimation, though this trades off with longer scan times [1].

Our analysis shows low anisotropy in a voxel. Does this always mean the tissue is disorganized? Not necessarily. A voxel showing low anisotropy (isotropy) may contain multiple highly anisotropic structures (e.g., crossing fibers) oriented in different directions, which cancel each other out on a macroscopic scale [1]. This is a key limitation of the standard DTI model and a primary challenge when working with complex white matter architecture in large datasets.

What are the main methods for quantitative analysis of DTI data? There are three primary methodologies, each with strengths for different research questions:

- Region of Interest (ROI): Manual or semi-automated tracing of specific brain regions for analysis. It is reliable and replicable but can be time-consuming [1].

- Whole-Brain Voxel-Based Analysis (VBA): An automated approach that performs a voxel-wise comparison across the entire brain, ideal for large-scale dataset analysis without a priori hypotheses [1].

- Tract-Based Spatial Statistics (TBSS): A "skeletonized" approach that projects all subjects' FA data onto a common white matter skeleton, reducing alignment issues and is highly popular for group studies [1].

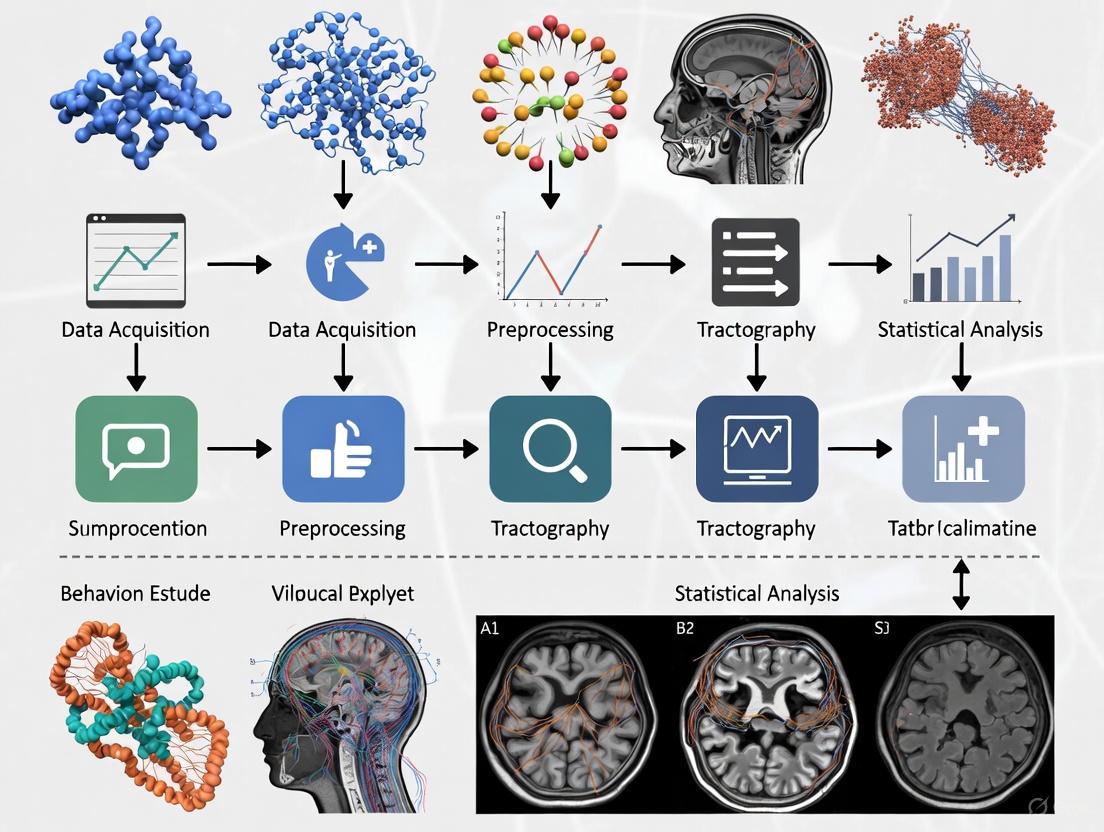

DTI Analysis Workflow

The diagram below outlines the standard workflow for processing and analyzing DTI data, from acquisition to statistical inference, which is crucial for managing large studies.

Research Reagent Solutions: Essential Tools for DTI Research

The following table details key software and methodological "reagents" essential for conducting DTI studies.

| Tool / Method | Function | Relevance to Large Datasets |

|---|---|---|

| Riemannian Tensor Framework | Provides a robust mathematical foundation for tensor interpolation, averaging, and statistical calculation, using the full tensor information [4]. | Enables more accurate population-level statistics and registration in large-scale studies by properly handling the tensor data structure [4]. |

| Fiber Tract-Oriented Statistics | An object-oriented analysis approach where statistics are computed along specific fiber bundles rather than on a voxel-by-voxel basis [4]. | Reduces data dimensionality and provides a more biologically meaningful analysis of white matter properties across a cohort [4]. |

| ACT Rules & Accessibility Testing | A framework of rules for accessibility conformance testing, emphasizing the need for sufficient color contrast in visualizations [5]. | Ensures that tractography visualizations and result figures are interpretable by all researchers, a key concern for collaboration and publication [5]. |

Advanced Troubleshooting & Artifact Handling

Frequently Asked Questions

We are seeing geometric distortions in our DTI data. What is the likely cause and solution? Geometric distortions and B0-susceptibility artifacts are common in EPI-based DTI, especially near tissue-air interfaces (e.g., the sinuses) [3]. This can be addressed by:

- Increasing the image bandwidth to reduce distortion at the cost of SNR.

- Using spin-echo (FSE) or line scan (LSDI) sequences instead of EPI, though these typically have longer acquisition times [3].

- Applying post-processing distortion correction algorithms, which often require acquiring data with reversed phase-encoding directions.

How can we ensure our DTI results are reproducible and comparable across different scanners? Scanner-specific protocols and hardware differences are a major challenge. To ensure reproducibility:

- Standardize your acquisition protocol (e.g., resolution, b-value, number of directions) across all sites in a multi-center study.

- Implement a "human phantom" phenomenon [1]. Scan a single subject on different scanners to establish a scaling factor, enabling comparison to normative databases acquired on different hardware [1].

What is the recommended color contrast for creating publication-quality diagrams and visualizations? For legibility, follow web accessibility guidelines (WCAG). Use a contrast ratio of at least 4.5:1 for normal text and 3:1 for large-scale text or user interface components against their background [6]. This ensures that all elements of your diagrams, such as text in nodes and the colors of arrows, are clearly distinguishable for all readers.

Core DTI Metrics Reference Tables

Primary Scalar Metrics from the Diffusion Tensor

Table 1: Key Scalar DTI Metrics Derived from Eigenvalues (λ₁, λ₂, λ₃)

| Metric Name | Acronym | Formula | Biological Interpretation | Clinical & Research Context |

|---|---|---|---|---|

| Fractional Anisotropy | FA | ( \textrm{FA} = \frac{\sqrt{(\lambda1 - \lambda)^2 + (\lambda2 - \lambda)^2 + (\lambda3 - \lambda)^2}}{\sqrt{\lambda1^2 + \lambda2^2 + \lambda3^2}} ) [7] | Degree of directional water diffusion restriction; reflects white matter "integrity" (axonal density, myelination, fiber coherence) [8]. | Increase: Often associated with brain development [8]. Decrease: Linked to axonal damage, demyelination (e.g., TBI, MS, AD) [9] [8]. |

| Mean Diffusivity | MD | ( \textrm{MD} = \frac{\lambda1 + \lambda2 + \lambda_3}{3} ) [10] | Magnitude of average water diffusion, irrespective of direction [9]. | Increase: Suggests loss of structural barriers, often seen in edema, necrosis, or neurodegeneration [11]. |

| Axial Diffusivity | AD | ( \textrm{AD} = \lambda_1 ) | Diffusivity parallel to the primary axon direction. | Decrease: Interpreted as axonal injury [3]. |

| Radial Diffusivity | RD | ( \textrm{RD} = \frac{\lambda2 + \lambda3}{2} ) | Average diffusivity perpendicular to the primary axon direction. | Increase: Interpreted as demyelination [3]. |

Advanced Diffusion Models

Table 2: Advanced Diffusion Metrics Beyond the Standard Tensor Model

| Model/Metric | Acronym | Description | Interpretation & Advantage |

|---|---|---|---|

| Tensor Distribution Function | TDF | Probabilistic mixture of tensors to model multiple underlying fibers [11]. | Overcomes single-tensor limitation in crossing-fiber regions; provides "corrected" FA (FATDF) shown to be more sensitive to disease effects (e.g., in Alzheimer's disease) [11]. |

| Mean Kurtosis | MK | Quantifies the degree of non-Gaussian water diffusion [12]. | Higher MK suggests greater microstructural complexity; sensitive to pathological changes in both gray and white matter [12]. |

| Neurite Orientation Dispersion and Density Imaging | NODDI | Multi-compartment model separating intra-neurite, extra-neurite, and CSF signal [12]. | Provides specific metrics like neurite density (NDI) and orientation dispersion (ODI), offering more biological specificity than DTI [12]. |

| Generalized Fractional Anisotropy | GFA | An analog of FA for models like Q-Ball imaging, based on the orientation distribution function (ODF) [8]. | Measures variation of the ODF; useful for high angular resolution diffusion imaging (HARDI) methods [8]. |

Troubleshooting Guides & FAQs

Data Quality & Metric Interpretation

Q1: Our study shows a statistically significant decrease in Fractional Anisotropy in a patient group. Can we directly conclude this indicates a loss of "white matter integrity"?

Not directly. While often interpreted as a marker of white matter integrity, a decrease in FA is not specific to a single biological cause [8]. It can result from:

- A decrease in Axial Diffusivity (suggesting axonal injury).

- An increase in Radial Diffusivity (suggesting demyelination) [8].

- Complex changes in both AD and RD.

- An increase in fiber crossing or dispersion not related to pathology [11].

- Recommendation: Always consult AD and RD metrics alongside FA to generate more specific hypotheses about the underlying microstructural change [3].

Q2: We are getting inconsistent FA values in brain regions with known crossing fibers. How can we improve accuracy?

This is a classic limitation of the single-tensor model, which can only represent one dominant fiber orientation per voxel [11].

- Solution: Employ advanced reconstruction models designed for complex fiber architecture, such as the Tensor Distribution Function (TDF) or Constrained Spherical Deconvolution (CSD) [11]. These models provide more accurate anisotropy estimates in regions with crossing, kissing, or fanning fibers.

Q3: Our multi-site DTI study shows high inter-scanner variability in metric values. How can we ensure data consistency?

This is a common challenge due to differences in scanner hardware, software, and gradient performance [13] [14].

- Solution: Implement a rigorous, phantom-based Quality Assurance (QA) protocol [13] [15] [12].

- Use a stable, agar-filled diffusion phantom [15] [12].

- Conduct regular scans of the phantom to track the scanner's performance over time.

- Use automated QA tools (e.g., the publicly available

qa-dtitool [15]) to extract metrics like SNR, eddy current-induced distortions, and FA uniformity [13] [15]. This allows you to quantify and correct for inter-site and inter-scanner differences.

Q4: What are the primary sources of artifacts in DTI data, and how can they be mitigated?

Table 3: Common DTI Artifacts and Correction Strategies

| Artifact Type | Cause | Impact on DTI Metrics | Mitigation Strategies |

|---|---|---|---|

| Eddy Current Distortions | Rapid switching of strong diffusion-sensitizing gradients. | Geometric distortions in DWI images; inaccurate tensor estimation [3]. | Use of "dual spin-echo" sequences [15]; post-processing correction tools (e.g., eddy in FSL) [13] [3]. |

| EPI Distortions (B0 Inhomogeneity) | Magnetic field (B0) inhomogeneities, especially near sinuses. | Severe geometric stretching or compression [3]. | Use of parallel imaging (SENSE, GRAPPA) to reduce echo train length [3]; B0 field mapping (e.g., with FUGUE) for post-processing correction [13]. |

| Subject Motion | Head movement during the relatively long DTI acquisition. | Blurring, misalignment between diffusion volumes, corrupted tensor fitting [3]. | Proper head stabilization; prospective motion correction; post-processing realignment and registration [3]. |

| Systematic Spatial Errors | Nonlinearity of the magnetic field gradients. | Inaccurate absolute values of diffusion tensors, affecting cross-scanner comparability [14]. | Use of the B-matrix Spatial Distribution (BSD-DTI) method to characterize and correct for gradient nonuniformities [14]. |

Experimental Protocol & Analysis

Q5: What is the minimum number of diffusion gradient directions required for a robust DTI study?

While more directions are always better, a common recommendation is a minimum of 20 diffusion directions, with 30 or more (e.g., 64) being preferred for robust tensor estimation and fiber tracking [10]. The exact number depends on the desired accuracy and the specific analysis (e.g., tractography requires more directions than a simple whole-brain FA analysis).

Q6: Our clinical scan time is limited. Can we still derive meaningful DTI metrics from a protocol with fewer gradient directions?

Yes. Research indicates that even with a reduced set of directions (e.g., 30, 15, or 7), meaningful group-level analyses are possible [11]. Furthermore, using advanced models like the Tensor Distribution Function (TDF) on such "clinical quality" data can yield FA metrics (FATDF) that are more sensitive to disease effects than standard FA from a full dataset, by better accounting for crossing fibers [11].

Detailed Experimental Protocols

Protocol 1: Phantom-Based DTI Quality Assurance

This protocol provides a methodology for monitoring scanner stability and ensuring data quality consistency, crucial for handling large multi-site or longitudinal datasets [13] [15] [12].

Objective: To establish a baseline and longitudinally track the performance of an MRI scanner for DTI acquisitions using an agar phantom.

Materials:

- Phantom: FBIRN-style agar-filled spherical phantom (stable, reproducible diffusion properties) [15].

- MRI Scanner: Equipped with a multi-channel head coil.

Acquisition Parameters:

- The DTI acquisition protocol (b-value, number of diffusion directions, phase encode steps) should match the parameters used for human subject scans to accurately assess performance.

- Repetition Time (TR) can be minimized as full brain coverage is not needed.

- A central slab (thick slice or average of a few slices) is acquired to ensure high SNR [15].

Processing & Analysis (Automated):

The following workflow can be implemented using automated tools like the publicly available qa-dti code [15].

Key Outcome Metrics: The protocol generates eleven key metrics, including [13] [15]:

- SNR (nDWI and DWI): Measures signal stability and gradient performance.

- Shape Analysis (Sphericity): Quantifies distortions from eddy currents and B0 inhomogeneity.

- FA Value and Uniformity: Tracks the accuracy and consistency of the primary DTI metric in the phantom.

- Nyquist Ghosting: Assesses EPI-related artifacts.

Protocol 2: In-Vivo DTI Processing for Large Cohort Analysis

This protocol outlines a standardized pipeline for processing human DTI data, which is essential for ensuring reproducibility and validity in studies with large datasets.

Objective: To preprocess and reconstruct DTI data from human subjects for group-level statistical analysis.

Materials:

- Raw DWI Data: Including multiple b=0 s/mm² images (nDWI) and multiple diffusion-weighted images (DWI).

- Structural T1-weighted Image: For co-registration and anatomical reference.

- Software: Tools like FSL, DTIStudio, DTIprep, or TORTOISE [13] [3].

Processing & Analysis: The standard pipeline involves several critical steps to correct for common artifacts.

Critical Steps:

- Eddy Current & Motion Correction: Uses tools like

eddy(FSL) to correct for distortions from gradient switching and subject head motion [13] [3]. - EPI Distortion Correction: Uses B0 field maps (e.g., with

FUGUE) to correct for geometric distortions caused by magnetic field inhomogeneities [13] [3]. - Tensor Fitting & Metric Calculation: The diffusion tensor is fitted at each voxel to derive FA, MD, AD, and RD maps [3].

- Spatial Normalization & Group Analysis:

- Tract-Based Spatial Statistics (TBSS): A highly recommended approach for voxel-wise group analysis. All subjects' FA data are aligned to a common space and projected onto a mean FA skeleton, which mitigates registration issues and avoids the need for arbitrary spatial smoothing [9]. This increases the sensitivity and reliability of statistical comparisons.

The Scientist's Toolkit

Table 4: Essential Research Reagents & Materials for DTI Studies

| Item Name | Category | Function & Rationale |

|---|---|---|

| Agar Diffusion Phantom | Quality Assurance | Provides a stable, reproducible reference with known diffusion properties to monitor scanner performance, validate sequences, and ensure cross-site and longitudinal consistency [13] [12]. |

| BSD-DTI Correction | Software/Algorithm | Corrects for spatial systematic errors in the diffusion tensor caused by gradient nonlinearities, improving the accuracy and comparability of absolute metric values, especially in multi-scanner studies [14]. |

| Tract-Based Spatial Statistics (TBSS) | Analysis Software | A robust software pipeline within FSL for performing voxel-wise multi-subject statistics on FA and other diffusion metrics, minimizing registration problems and increasing statistical power [9]. |

| Advanced Reconstruction Models (TDF, CSD, NODDI) | Software/Algorithm | Mathematical frameworks that overcome the limitations of the single-tensor model, providing more accurate metrics in complex white matter regions and potentially greater sensitivity to disease-specific changes [11] [12]. |

FAQs and Troubleshooting Guides

This section addresses common challenges researchers face when handling the "Three V's" of Big Data—Volume, Velocity, and Variety—in Diffusion Tensor Imaging (DTI) studies.

FAQ 1: How can we manage the massive Volume of raw DTI data?

- Challenge: Raw DTI data from large cohorts can consume terabytes of storage. For example, a dataset from just 10,000 individuals can require over 13.5 TB of space for NIfTI files alone [16]. This volume strains storage systems and complicates data backup.

Solutions:

- Utilize Processed Data: For specific questions, use preprocessed data (e.g., connectivity matrices), which can reduce storage needs to a fraction of the raw data size [16].

- Cloud & Distributed Storage: Leverage cloud data warehouses (e.g., Snowflake, BigQuery) or distributed file systems (e.g., Hadoop HDFS) that offer scalable, virtually infinite storage [17] [18] [19].

- Strategic Backups: When backing up data, consider excluding intermediate processed files that can be regenerated, focusing only on irreplaceable raw data [16].

Troubleshooting Guide: "My research group is running out of storage space for our DTI datasets."

| Step | Action | Rationale |

|---|---|---|

| 1 | Audit Data | Identify and archive raw data that is no longer actively needed for analysis. |

| 2 | Implement a Data Tiering Policy | Move older, infrequently accessed datasets to cheaper, long-term storage solutions. |

| 3 | Use Data Compression | Apply lossless compression to NIfTI files to reduce their footprint without losing information. |

| 4 | Consider Processed Data | If your research question allows, download only the preprocessed scalar maps (FA, MD) for analysis. |

FAQ 2: How do we handle the Variety and inconsistency of DTI data from different sources or protocols?

- Challenge: DTI data can come in varied formats (DICOM, NIfTI), from different scanner manufacturers, and with different acquisition parameters (b-values, gradient directions). This inconsistency makes pooling data for large-scale studies difficult [18] [20] [19].

Solutions:

- Standardize with BIDS: Organize your data according to the Brain Imaging Data Structure (BIDS) standard. This ensures a consistent and predictable structure, making data easier to share, process, and understand [16].

- Data Integration Tools: Use data integration tools to create reliable pipelines that can pull data from hundreds of different sources and apply transformations for consistency [17].

- Detailed Documentation: Meticulously document all acquisition parameters, preprocessing steps, and software versions used for each dataset [16].

Troubleshooting Guide: "I cannot combine my DTI dataset with a public dataset due to format and parameter differences."

| Step | Action | Rationale |

|---|---|---|

| 1 | Convert to Standard Format | Ensure all datasets are in the same standard format, preferably NIfTI, using tools like dcm2niix [21]. |

| 2 | Harmonize Acquisition Parameters | Note differences in b-values, number of gradient directions, and voxel size. Statistical harmonization methods (e.g., ComBat) may be required to adjust for these differences. |

| 3 | Spatial Normalization | Register all individual DTI maps (FA, MD) to a common template space (e.g., FMRIB58_FA) to enable voxel-wise group comparisons. |

| 4 | Use a Common Pipeline | Process all datasets through the same software pipeline (e.g., FSL's dtifit) to ensure derived metrics are comparable [21]. |

FAQ 3: What are the best practices for ensuring data Veracity (quality) in high-Velocity, automated DTI processing streams?

- Challenge: The push for faster (high-velocity) analysis and real-time insights increases the risk of propagating errors from poor-quality data. This includes artifacts from subject motion, eddy currents, and low signal-to-noise ratio [17] [3].

Solutions:

- Automated Quality Control (QC): Implement automated QC pipelines that quantify metrics like signal-to-noise ratio, motion parameters, and artifact detection for every dataset [16].

- Data Observability: Go beyond simple monitoring. Use platforms that provide freshness, distribution, and lineage of your data to quickly identify and triage quality issues [17].

- Visual Inspection: Never fully automate QC. Always include a step for manual visual inspection of raw data, intermediate steps, and final results to catch subtle errors automated systems might miss [16] [21].

Troubleshooting Guide: "My automated DTI pipeline produced implausible tractography results for several subjects."

| Step | Action | Rationale |

|---|---|---|

| 1 | Check Raw Data | Go back to the raw diffusion-weighted images. Look for severe motion artifacts, signal dropouts, or "zipper" artifacts that could corrupt the entire pipeline. |

| 2 | Inspect Intermediate Outputs | Check the outputs of key steps like eddy-current correction and brain extraction. A poorly generated brain mask can severely impact tensor fitting. |

| 3 | Review QC Metrics | Check the subject's motion parameters and outlier metrics from the eddy-current correction step. High values often explain poor results. |

| 4 | Re-run with Exclusions | For subjects with severe artifacts, consider excluding the affected volumes (if using a modern tool like FSL's eddy) or excluding the subject entirely. |

Experimental Protocols for DTI Analysis

This section provides detailed methodologies for key experiments in DTI research, incorporating best practices for handling large datasets.

Protocol 1: Multi-Site DTI Analysis for Large-Scale Studies

This protocol is designed for pooling and analyzing DTI data collected across multiple scanners and sites, a common scenario in large-scale consortia studies like the UK Biobank or ABCD [16] [22].

1. Data Acquisition Harmonization:

- Aim: Minimize site-related variability at the source.

- Method:

- Use standardized acquisition protocols across all sites where possible.

- Employ phantom studies to quantify and correct for inter-scanner differences in DTI metrics.

- Collect high-resolution structural scans (T1-weighted, T2-weighted) alongside DTI for registration and tissue segmentation [22].

2. Centralized Data Processing & Quality Control:

- Aim: Ensure consistent and high-quality data processing for all subjects.

- Method:

- Storage: Use a centralized, cloud-based storage platform (e.g., a data lakehouse) to aggregate all datasets [17].

- Preprocessing: Run all data through a single, version-controlled pipeline. Key steps include:

- Denoising

- Eddy-current and motion correction

- Outlier volume rejection

- Tensor fitting to create Fractional Anisotropy (FA) and Mean Diffusivity (MD) maps [21].

- Quality Control: Implement an automated QC pipeline that flags datasets for excessive motion, artifacts, or poor registration. All flagged datasets must undergo manual inspection.

3. Statistical Analysis and Data Integration:

- Aim: Derive meaningful insights from the harmonized dataset.

- Method:

- Voxel-Based Analysis (VBA): Non-linearly register all FA maps to a standard template. Perform voxel-wise cross-subject statistics to identify regions where DTI metrics correlate with clinical or demographic variables [4].

- Tract-Based Spatial Statistics (TBSS): Project all FA data onto a mean FA skeleton before performing statistics, which reduces alignment issues and is considered more robust than VBA.

- Data Integration: Link DTI metrics with phenotypic, genetic, or behavioral data stored in a structured format (e.g., SQL database) for multivariate analysis [16].

The workflow for this protocol can be visualized as follows:

Protocol 2: Accelerated DTI Acquisition and Reconstruction using Deep Learning

This protocol addresses the Velocity challenge by reducing scan times, which is critical for clinical practice and large-scale studies [23] [22].

1. Data Acquisition for Model Training:

- Aim: Acquire a high-quality reference dataset for training a deep learning model.

- Method:

2. Model Training for Image Synthesis:

- Aim: Train a model to predict high-quality DWI volumes from a highly undersampled acquisition.

- Method:

- Framework: Use a deep learning framework like DeepDTI [23].

- Input: The model takes as input a minimal set of data—one b=0 and six diffusion-weighted images (DWIs) along with structural T1 and T2 volumes.

- Output: The model is trained to output the residuals between the input low-quality images and the target high-quality DWI volumes.

- Architecture: A 3D Convolutional Neural Network (CNN) is used to leverage redundancy in local, non-local, and cross-contrast spatial information [23].

3. Validation and Tractography:

- Aim: Ensure the accelerated method produces biologically valid results.

- Method:

- Tensor Fitting: Fit the diffusion tensor to the model's output high-quality DWIs to generate standard scalar maps (FA, MD) and the primary eigenvector map [23] [21].

- Validation: Quantitatively compare the DeepDTI-derived metrics and resulting tractography against the ground truth from the fully sampled data. Metrics like mean distance between reconstructed tracts should be used (e.g., target: 1-1.5 mm) [23].

The following diagram illustrates the core DeepDTI processing framework:

The Scientist's Toolkit: Research Reagent Solutions

The following table details essential software, data, and hardware "reagents" required for modern DTI research involving large datasets.

| Research Reagent | Type | Function / Application |

|---|---|---|

| FSL (FDT, BET, dtifit) [21] | Software Library | A comprehensive suite for DTI preprocessing, tensor fitting, and tractography. Essential for creating standardized analysis pipelines. |

| Brain Imaging Data Structure (BIDS) [16] | Data Standard | A standardized framework for organizing neuroimaging data. Critical for ensuring data reproducibility, shareability, and ease of use in large-scale studies. |

| Cloud Data Platforms (e.g., Snowflake, BigQuery) [17] | Data Infrastructure | Provide scalable storage and massive parallel processing capabilities for handling the Volume of large DTI datasets and associated phenotypic information. |

| High-Field MRI Scanner (5.0T-7.0T) [22] | Hardware | Provides higher spatial resolution and Signal-to-Noise Ratio (SNR) for DTI, improving the accuracy of tractography and microstructural measurements. |

| Deep Learning Models (e.g., DeepDTI) [23] | Software Algorithm | Enables significant acceleration of DTI acquisition by synthesizing high-quality data from undersampled inputs, directly addressing the Velocity challenge. |

| Large-Scale Open Datasets (e.g., HCP, UK Biobank, Diff5T) [16] [22] | Data Resource | Provide pre-collected, high-quality data from thousands of subjects, enabling studies with high statistical power and the development of normative atlases. |

| Data Observability Platform (e.g., Monte Carlo) [17] | Data Quality Tool | Monitors data pipelines for freshness, schema changes, and lineage, helping to maintain Veracity across large, complex data ecosystems. |

Clinical and Research Applications in Behavioral Neuroscience

Troubleshooting Guide: DTI in Behavioral Neuroscience

Common DTI Data Quality Issues and Solutions

| Problem Symptom | Potential Cause | Diagnostic Steps | Solution |

|---|---|---|---|

| Low Signal-to-Noise Ratio (SNR) [1] [24] | Short scan time, high b-value, long echo time (TE), hardware limitations [25]. | Check mean diffusivity maps for unexpected noise; calculate tSNR in a homogeneous white matter region [25]. | Increase number of excitations (NEX); use denoising algorithms (e.g., Marchenko-Pastur PCA) [25]; reduce TE if possible [25]. |

| Image Distortion (Eddy Currents) [24] | Rapid switching of strong diffusion gradients [24]. | Visual inspection of raw DWI for shearing or stretching artifacts [24]. | Use pulsed gradient spin-echo sequences; apply post-processing correction (e.g., FSL's EDDY) [25]. |

| Motion Artifacts [24] | Subject movement during scan [24]. | Check output from motion correction tools (e.g., EDDY) for excessive translation/rotation [25]. | Improve subject head stabilization; use faster acquisition sequences (e.g., single-shot EPI); apply motion correction [24]. |

| Coregistration Errors | Misalignment between DTI slices or with anatomical scans. | Visual inspection of registered images for blurring or misaligned edges. | Ensure use of high-quality b=0 image for registration; align DWI to first TE session; check and rotate b-vectors [25]. |

| Abnormal FA/MD Values | Pathological finding, or partial volume effect from CSF. | Correlate with T2-weighted anatomical images; check if values are consistent across nearby slices. | Use more complex models (e.g., NODDI) for specific microstructural properties [25]; ensure proper skull stripping [24]. |

Frequently Asked Questions (FAQs)

Q1: Our deep learning model for classifying CSM severity is overfitting. What strategies can we use? A1: Consider using a pre-trained network and freezing its parameters to prevent overfitting [26]. Implement a feature fusion mechanism, like DCSANet-MD, which integrates both 2D (maximally compressed disc) and 3D (whole spinal cord) features to provide a larger, more robust decision framework [26]. Data augmentation techniques specific to DTI can also be beneficial.

Q2: How does the choice of Echo Time (TE) impact DTI metrics, and how should we account for it? A2: DTI metrics are TE-dependent [25]. Increases in TE can lead to decreased Fractional Anisotropy (FA) and Axial Diffusivity (AD), and increased Mean Diffusivity (MD) and Radial Diffusivity (RD) [25]. For consistent results, use a fixed TE across all subjects in a study. If comparing across studies, the TE parameter must be considered. Multi-echo DTI acquisitions can help disentangle these effects [25].

Q3: What is the best way to define Regions of Interest (ROIs) for analysis to minimize subjectivity? A3: Manual ROI drawing is susceptible to human expertise and can yield inconsistent outcomes [26]. To minimize subjectivity, consider using standardized atlases (e.g., JHU white matter atlas) [25] or automated, deep-learning-based methods that perform end-to-end analysis without manual intervention [26].

Q4: We have a large, multi-session DTI dataset. What is a robust preprocessing pipeline?

A4: A standard pipeline includes: 1) Denoising (e.g., using dwidenoise in MRtrix3) [25]; 2) B0-inhomogeneity correction (e.g., FSL's TOPUP) [25]; 3) Eddy current and motion correction (e.g., FSL's EDDY) [25]; 4) Brain extraction (skull stripping); and 5) Co-registration of all images to a common space [24].

Q5: How can we validate the quality of our acquired DTI dataset? A5: Perform both visual inspection and quantitative checks [25]. Visually check for ghosting or distortions in the mean DWIs [25]. Quantitatively, calculate the temporal Signal-to-Noise Ratio (tSNR) and monitor head motion parameters (translation, rotation) provided by tools like EDDY [25].

DTI Quantitative Metrics and Experimental Parameters

Key DTI Scalar Metrics

| Metric | Full Name | Biological Interpretation | Typical Use Case in Behavioral Neuroscience |

|---|---|---|---|

| FA | Fractional Anisotropy [1] | Degree of directional water diffusion; reflects white matter integrity/organization [1]. | Correlating WM integrity with cognitive scores (e.g., JOA score in CSM) [26]. |

| MD / ADC | Mean Diffusivity / Apparent Diffusion Coefficient [1] [24] | Overall magnitude of water diffusion; increases can indicate edema or necrosis [1]. | Detecting general tissue changes in neurodegenerative diseases [24]. |

| AD | Axial Diffusivity [1] | Diffusion rate parallel to the main axon direction; may indicate axonal integrity [1]. | Differentiating specific types of axonal injury in trauma models [24]. |

| RD | Radial Diffusivity [1] | Diffusion rate perpendicular to the axon; may reflect myelin integrity [1]. | Assessing demyelination in disorders like multiple sclerosis [24]. |

Example Experimental Protocol for CSM Severity Classification

Objective: To automatically classify the severity of Cervical Spondylotic Myelopathy (CSM) using deep learning on DTI data [26].

Dataset:

- Subjects: 176 CSM patients (112 male, 64 female, mean age 63.8 ± 13.7 years) [26].

- Clinical Ground Truth: Japanese Orthopaedic Association (JOA) score [26].

- Severity Categorization (Example):

DTI Acquisition (Example from cited research):

- Scanner: 3T Philips Achieva [26].

- Sequence: Single-shot Echo Planar Imaging (EPI) [26].

- Parameters: b-value = 600 s/mm², 15 directions, NEX=3, 12 slices covering C2-C7 [26].

Preprocessing:

- Feature Calculation: Use Spinal Cord Toolbox to calculate Fractional Anisotropy (FA) maps [26].

- Spatial Feature Selection: Extract the DTI slice at the Maximally Compressed Cervical Disc (MCCD) and the 3D DTI scan of the entire spinal cord [26].

Model & Analysis:

- Model: DCSANet-MD (DTI-Based CSM Severity Assessment Network-Multi-Dimensional) [26].

- Architecture: Employs two parallel residual networks (DCSANet-2D and DCSANet-3D) to extract features from the 2D MCCD slice and the 3D whole-cord scan, followed by a feature fusion mechanism [26].

- Results: The model achieved 82% accuracy in binary classification (mild vs. severe) and ~68% accuracy in the three-category classification [26].

DTI Analysis Workflow

The Scientist's Toolkit: Essential DTI Research Reagents & Solutions

| Item Name | Function / Role | Example Usage / Notes |

|---|---|---|

| Diffusion-Weighted Images (DWI) | Raw data input; images sensitive to water molecule diffusion in tissues [24]. | Acquired with multiple gradient directions and b-values to probe tissue microstructure [25]. |

| Fractional Anisotropy (FA) Map | Primary computed metric; quantifies directional preference of water diffusion [1]. | Used as the key input feature for deep learning models assessing white matter integrity [26]. |

| Spinal Cord Toolbox (SCT) | Software for processing and extracting metrics from spinal cord MRI data [26]. | Used for automated extraction of DTI features like FA maps from the cervical spinal cord [26]. |

| FSL (FMRIB Software Library) | Comprehensive brain MRI analysis toolbox, includes DTI utilities [25]. | Used for EDDY (correction for eddy currents and motion) and DTIFIT (tensor fitting) [25]. |

| Deep Learning Framework (e.g., PyTorch, TensorFlow) | Platform for building and training custom neural network models [26]. | Used to implement architectures like DCSANet-MD for automated classification tasks [26]. |

| JHU White Matter Atlas | Standardized atlas defining white matter tract regions [25]. | Used for ROI-based analysis to ensure consistent and comparable regional measurements across studies [25]. |

White Matter Architecture and Microstructural Correlates

Troubleshooting Guides & FAQs

This technical support resource addresses common challenges in diffusion MRI (dMRI) research, particularly within the context of managing large-scale datasets for behavioral studies.

Data Quality & Preprocessing

Q: Our diffusion measures (e.g., FA, MD) show unexpected variations between study sites in a multicenter trial. What could be the cause and how can we mitigate it?

A: Variations in dMRI data quality are a major confounder in multicenter studies. Key data quality metrics, including contrast-to-noise ratio (CNR), outlier slices, and participant motion (both relative and rotational), often differ significantly between scanning centers. These factors have a widespread impact on core diffusion measures like Fractional Anisotropy (FA) and Mean Diffusivity (MD), as well as tractography outcomes, and this impact can vary across different white matter tracts. Notably, these effects can persist even after applying data harmonization algorithms [27].

- Mitigation Strategy: Always include data quality metrics as covariates in your statistical models. This is critical for analyzing individual differences or group effects in multisite datasets. Proactively monitoring these metrics during data acquisition can also help identify and rectify issues early [27].

Q: How can we handle the confounding effects of age when studying a clinical population across a wide age range?

A: Age-related microstructural changes are a pervasive factor in white matter architecture. Conventional DTI metrics follow well-established trajectories: FA typically shows a non-linear inverted-U pattern across the lifespan, while MD, Axial Diffusivity (AD), and Radial Diffusivity (RD) generally increase with older age, reflecting declining microstructural integrity [28]. Advanced dMRI models (e.g., NODDI, RSI) can provide additional sensitive measures to age-related changes [28].

- Mitigation Strategy: For cross-sectional studies, carefully age- and sex-match your patient and control groups. In longitudinal studies or those with wide age ranges, use statistical methods like linear mixed effects models to explicitly model and account for age trajectories [28] [29].

Experimental Design & Interpretation

Q: We are observing higher Fractional Anisotropy (FA) in early-stage patient groups compared to controls, which contradicts the neurodegenerative disease hypothesis. Is this plausible?

A: Yes, this seemingly paradoxical finding is biologically plausible and has been documented. A large worldwide study of Parkinson's disease (PD) found that in the earliest disease stage (Hoehn and Yahr Stage 1), participants displayed significantly higher FA and lower MD across much of the white matter compared to controls. This is interpreted as a potential early compensatory mechanism, which is later overridden by degenerative changes (lower FA, higher MD) in advanced stages [29]. Similar considerations may apply to other disorders.

- Recommendation: Do not dismiss findings of increased FA out of hand. Interpret your results within the context of disease stage. Early, hyper-compensatory phases may exist for some conditions, and analyzing a cohort that mixes early and late-stage patients could obscure these distinct biological signatures [29].

Q: What is the justification for averaging measures across homologous tracts (e.g., left and right) in a group analysis?

A: While a common practice, it should be done with caution. Research shows that while many of the strongest microstructural correlations exist between homologous tracts in opposite hemispheres, the degree of this coupling varies widely. Furthermore, many white matter tracts exhibit known hemispheric asymmetries [30]. Blindly averaging can mask these biologically meaningful lateralized differences.

- Recommendation: Initially, analyze left and right hemisphere tracts separately to check for asymmetries or lateralized effects. Averaging is only justified if your statistical analysis confirms no significant hemispheric differences or interactions for your specific research question [30].

Summarized Quantitative Data

Table 1: Representative Microstructural Alterations Across Parkinson's Disease Stages

Data adapted from a worldwide study of 1,654 PD participants and 885 controls, comparing against matched controls [29].

| Hoehn & Yahr Stage | Fractional Anisotropy (FA) Profile (vs. Controls) | Key Implicated White Matter Regions | Effect Size Range (Cohen's d) |

|---|---|---|---|

| Stage 1 | Significantly higher FA | Anterior corona radiata, Anterior limb of internal capsule | d = 0.23 to 0.24 |

| Stage 2 | Lower FA in specific tracts | Fornix | d = -0.27 |

| Stage 3 | Lower FA in more regions | Fornix, Sagittal stratum | d = -0.29 to -0.31 |

| Stage 4/5 | Widespread, significantly lower FA | Fornix (most affected), 18 other ROIs | d = -0.38 to -1.09 |

Table 2: Common dMRI Data Quality Metrics and Their Impact

Based on an analysis of 691 participants (5-17 years) from six centers [27].

| Data Quality Metric | Description | Impact on Diffusion Measures |

|---|---|---|

| Contrast-to-Noise Ratio (CNR) | Signal quality relative to noise | Low CNR can bias FA and MD values, reducing reliability. |

| Outlier Slices | Slices with signal drop-outs or artifacts | Can disrupt tractography, causing erroneous tract breaks or spurious connections. |

| Relative Motion | Subject movement relative to scan | Introduces spurious changes in diffusivity measures and reduces anatomical accuracy. |

| Rotational Motion | Rotational head movement during scan | Particularly detrimental to directional accuracy and anisotropy calculations. |

Detailed Experimental Protocols

Protocol 1: Standardized DTI Tractography for Tract-Based Microstructural Analysis

This protocol is adapted from a foundational study investigating microstructural correlations across white matter tracts in 44 healthy adults [30].

- MRI Acquisition: Data were acquired on a 3T MRI scanner using a single-shot spin-echo EPI sequence.

- Key Parameters: TE/TR = 63 ms/14 s, 55 diffusion-encoding directions at b=1000 s/mm², 1.8 mm isotropic voxels, 128x128 matrix [30].

- DTI Processing:

- Brain Extraction: Remove non-brain tissue using a tool like FSL's BET [30].

- Correction: Correct for eddy currents and subject motion using linear registration (e.g., FSL's FLIRT) of all diffusion-weighted images to the b=0 volume [30].

- Tensor Fitting: Fit the diffusion tensor model in each voxel to compute maps of FA, MD, AD, and RD using software such as DTIstudio or FSL [30].

- Fiber Tractography:

- Algorithm: Use deterministic Fiber Assignment by Continuous Tracking (FACT).

- Parameters: Seed from all voxels with FA > 0.3. Continue tracking while FA > 0.2 and the turning angle between voxels is < 50° [30].

- Tract Segmentation: Manually place Regions of Interest (ROIs) on directionally encoded color FA maps to isolate specific white matter tracts (e.g., Arcuate Fasciculus, Corticospinal Tract). Use exclusion ROIs to remove anatomically implausible fibers [30].

- Quantification: Extract mean FA, MD, AD, and RD values along the entire 3D trajectory of each reconstructed tract for statistical analysis [30].

Protocol 2: Quality Control and Harmonization for Multicenter dMRI Studies

This protocol outlines steps to manage data variability in large-scale, multi-scanner datasets [27].

- Proactive Harmonization: Standardize acquisition protocols across all sites whenever possible, including scanner manufacturer, model, field strength, and sequence parameters (b-values, number of directions, voxel size) [27].

- Quality Metric Extraction: For each dataset, compute a standard set of quality metrics:

- Contrast-to-Noise Ratio (CNR)

- Number of Outlier Slices

- Motion Parameters (absolute, relative, translation, rotation) [27].

- Data Processing with Quality in Mind: Use modern pipelines (e.g., FSL, ANTs, MRtrix3) that include steps for denoising, eddy-current correction, and EPI distortion correction. Ensure gradient tables are correctly oriented after motion correction [31].

- Statistical Modeling: Include the extracted data quality metrics (Step 2) as nuisance covariates in all between-group or correlation analyses to statistically control for their influence [27].

Experimental Workflow Visualization

Diagram 1: Multicenter dMRI Data Analysis Workflow

Diagram 2: dMRI Preprocessing and Tractography Pipeline

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software Tools for dMRI Analysis

| Tool Name | Primary Function | Brief Description of Role |

|---|---|---|

| FSL (FMRIB Software Library) | Data Preprocessing & Analysis | A comprehensive library for MRI brain analysis. Its tools, like eddy_correct and FLIRT, are industry standards for correcting distortions and motion in dMRI data [30] [31]. |

| DTIstudio / DSIstudio | Tractography & Visualization | Specialized software for performing deterministic fiber tracking and for the interactive selection and quantification of white matter tracts via ROIs [30]. |

| MRtrix3 | Advanced Tractography & Processing | Provides state-of-the-art tools for dMRI processing, including denoising, advanced spherical deconvolution-based tractography, and connectivity analysis [31]. |

| ANTs (Advanced Normalization Tools) | Image Registration | A powerful tool for sophisticated image registration, often used for EPI distortion correction by non-linearly aligning dMRI data to structural T1-weighted images [31]. |

| ENIGMA-DTI Protocol | Standardized Analysis | A harmonized pipeline for skeletonized TBSS analysis, enabling large-scale, multi-site meta- and mega-analyses of DTI data across research groups worldwide [29]. |

DTI Acquisition Protocols and Computational Analysis Methods

Frequently Asked Questions (FAQs)

What is the b-value and how does it affect my diffusion MRI data?

The b-value (measured in s/mm²) is a factor that reflects the strength and timing of the gradients used to generate diffusion-weighted images (DWI) [32]. It controls the degree of diffusion-weighted contrast in your images, similar to how TE controls T2-weighting [32].

The signal in a DWI follows the equation: S = So * e^(–b • ADC), where So is the baseline MR signal without diffusion weighting, and ADC is the apparent diffusion coefficient [32]. A higher b-value leads to stronger diffusion effects and greater signal attenuation but also reduces the signal-to-noise ratio (SNR) [32] [33]. Choosing the correct b-value is crucial, as it directly impacts the quality of your derived quantitative measures, such as ADC maps, which are essential for analyzing large datasets in behavioral studies [33].

What is the recommended range of b-values for clinical and research studies?

The optimal b-value depends on the anatomical region, field strength, and predicted pathology [32]. The following table summarizes expert recommendations:

Table 1: Recommended b-value Ranges

| Anatomical Region | Recommended b-value range (s/mm²) | Key Considerations |

|---|---|---|

| Brain (Adult) | 0 – 1000 [33] | A useful rule of thumb is to pick the b-value so that (b × ADC) ≈ 1 [32]. |

| Body | 50 – 800 [33] | Includes areas like the liver and kidneys. |

| Neonates/Infants | 600 – 700 [32] | Higher water content and longer ADC values require adjustment. |

| High-Contrast Microstructure | 2000 – 3000 [33] | Provides specific information on tissue microstructure but with increased noise [32]. |

How many gradient directions should I use and why does it matter?

The number of diffusion gradient directions is critical for accurately modeling tissue anisotropy, especially in structured tissues like brain white matter.

Table 2: Guidelines for Gradient Directions

| Application / Goal | Minimum Number of Directions | Rationale and Notes |

|---|---|---|

| Basic ADC calculation | 3–4 directions [33] | Mitigates slight tissue anisotropy effects. |

| Full Diffusion Tensor Imaging (DTI) | ≥ 6 non-collinear directions [33] | Required to calculate fractional anisotropy (FA) and tensor orientation in anisotropic tissues [33]. |

| Multi-shell acquisitions | Different directions per shell [34] | Using the same directions for different b-value shells (e.g., b=1000 and b=2000) is not optimal. A multishell scheme with uniform coverage across all shells is a better approach [34]. |

What are the common artifacts and how can I correct for b-value inaccuracies?

Several artifacts can affect DWI data quality in large-scale studies:

- Geometric Distortions: Caused by magnetic susceptibility variations, especially at air-tissue interfaces [33].

- Eddy Currents: Induced by strong diffusion gradients, leading to image distortions [33].

- Gradient Deviations: The actual played-out b-value can deviate from its nominal value due to gradient nonlinearities, miscalibration, and other system imperfections [35]. This can lead to erroneous ADC and FA values.

An image-based method for voxel-wise b-value correction has been proposed to address these inaccuracies comprehensively [35]. The protocol involves:

- Phantom Scanning: Acquire DWI data (e.g., 64 directions) from a large isotropic water phantom at the target b-value (e.g., b=1000 s/mm²) [35].

- Calculate True ADC: Measure the phantom's temperature to determine the true diffusion constant of water (D_true) [35].

- Compute Correction Map: For each voxel and direction, compute a correction factor

c = ADC_err / ADC_true, where ADC_err is the ADC calculated using the nominal b-value. The effective b-value is thenb_eff = c * b_nom[35]. - Apply Correction: Use the calculated

b_effmap to correct research or clinical DTI datasets, improving the accuracy of diffusivity and anisotropy measures [35].

Experimental Protocols

Protocol 1: Basic DWI Acquisition for ADC Mapping

This protocol is suitable for initial experiments focusing on general diffusion characterization.

- Pulse Sequence: Use a single-shot spin-echo Echo-Planar Imaging (EPI) sequence for its speed and robustness to motion [33].

- Parameter Optimization:

- b-values: Acquire at least two b-values: a low b-value (b=0 s/mm² or b=50 s/mm² for body) and a high b-value (e.g., b=800 or 1000 s/mm²) [33].

- Gradient Directions: A minimum of 3–4 directions is sufficient for a rotationally invariant ADC estimate in relatively isotropic tissues [33].

- Data Output: The raw data will consist of DICOM files for each b-value and direction. These must be processed (motion-corrected, etc.) before voxel-wise ADC calculation using the equation:

ADC = ln(S_low / S_high) / (b_high - b_low)[36] [35].

Protocol 2: Comprehensive DTI for White Matter Tractography

This advanced protocol is designed for studies investigating white matter microstructure and connectivity in large datasets.

- Pulse Sequence: Single-shot spin-echo EPI, ideally with parallel imaging (e.g., SENSE, GRAPPA) to reduce TE and distortions [33].

- Parameter Optimization:

- Gradient Directions: For a full diffusion tensor model, acquire at least 6 non-collinear directions for a single shell, though more directions (e.g., 30-64) are recommended for robust tensor estimation and tractography [34] [33].

- Data Output: The dataset will include multiple DWI volumes. Preprocessing for large studies should include correction for motion, eddy currents, and susceptibility distortions [37]. The output is a 4D data file ready for tensor fitting to generate FA, MD, and eigenvector maps.

Workflow Visualization

The following diagram illustrates the key decision points and steps for optimizing b-values and gradient directions in a diffusion MRI study.

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Materials for Diffusion MRI Quality Assurance

| Item | Function in Experiment |

|---|---|

| Isotropic Water Phantom | A crucial tool for validating scanner performance, calibrating gradients, and measuring voxel-wise b-value maps. Its known diffusion properties provide a ground truth reference [38] [35]. |

| Temperature Probe | Used to measure the temperature of the water phantom. This is essential for accurately determining the phantom's true diffusion coefficient (D_true), which is temperature-dependent [38] [35]. |

| Geometric Distortion Phantom | A phantom with a known grid structure to quantify and correct for EPI-related geometric distortions in the acquired diffusion images [33]. |

| B-value Correction Software | Custom or open-source scripts (e.g., in MATLAB) to implement voxel-wise correction algorithms, improving the accuracy of ADC and DTI metrics in large datasets [36] [35]. |

| Diffusion Data Preprocessing Pipelines | Integrated software tools (e.g., FSL, DSI Studio) that perform critical steps like eddy current correction, motion artifact removal, and outlier rejection, which are essential for ensuring data quality in behavioral studies [37] [34]. |

Balancing Angular Resolution with Acquisition Time

In diffusion MRI research, particularly in studies involving large datasets for behavioral or drug development purposes, one of the most fundamental challenges is balancing the need for high angular resolution with the practical constraints of acquisition time. Higher angular resolution—achieved by acquiring more diffusion gradient directions—provides a more detailed characterization of complex white matter architecture, such as crossing fibers. However, this comes at the cost of longer scan times, which can increase susceptibility to patient motion, reduce clinical feasibility, and complicate the management of large-scale datasets. This guide provides targeted troubleshooting and FAQs to help researchers optimize this critical trade-off in their experimental protocols.

FAQs: Resolving Common Experimental Issues

1. How many diffusion gradient directions are sufficient for a reliable DTI study?

The optimal number of gradients is a balance between signal-to-noise ratio (SNR), accuracy, and time. While only six directions are mathematically required to fit a diffusion tensor, research studies require more for robustness.

- Evidence-Based Recommendations: A study scanning 50 healthy adults at 4 Tesla found that the SNR for common metrics like Fractional Anisotropy (FA) and Mean Diffusivity (MD) peaked at around 60-66 gradients [39]. Beyond this, adding more directions provided diminishing returns for these particular metrics. For studies aiming to reconstruct more complex fiber orientation distributions, accuracy may continue to improve with more gradients [39].

- Protocol Selection: The takeaway is that for standard DTI metrics, protocols with approximately 60 directions offer a good balance. For studies specifically focused on crossing fibers, a higher number may be warranted if acquisition time allows.

2. Should I prioritize higher spatial resolution or higher angular resolution when scan time is fixed?

This is a classic trade-off. In a fixed scan time, increasing the number of gradients (angular resolution) requires larger voxel sizes (lower spatial resolution) to maintain SNR, and vice-versa.

- The Trade-off: Studies comparing time-matched protocols have shown that protocols with larger voxels and higher angular resolution generally provide better SNR and improved stability of diffusion measures over time [40]. However, larger voxels increase partial volume effects, potentially biasing anisotropy measures [40].

- Guidance for Different Goals:

- For tractography in regions with complex fiber crossings (e.g., kissing or crossing fibers), a high angular resolution is more critical [41].

- For accurately distinguishing between closely neighboring anatomical structures (e.g., kissing fibers), a high spatial resolution is more beneficial [41].

- Using "beyond-tensor" models can help mitigate the partial volume biases introduced by larger voxels [40].

3. My clinical DWI data has only 6 directions. Can I still use it for research?

While challenging, deep learning methods are being developed to enhance the utility of such limited data. One proposed method, DirGeo-DTI, uses directional encoding and geometric constraints to estimate reliable DTI metrics from as few as six directions, making retrospective studies on clinical datasets more feasible [42]. However, the performance of such models depends on the quality and diversity of their training data.

4. What are the most common pitfalls that affect DTI metric accuracy, beyond the number of directions?

The accuracy of your results can be compromised by multiple factors throughout the processing pipeline. Key pitfalls include [43]:

- Systematic Spatial Errors: Caused by inhomogeneities in the scanner's magnetic field gradients, these errors can significantly distort diffusion metrics. Correction methods like the B-matrix Spatial Distribution (BSD) are essential for accuracy [14] [44].

- Random Noise: Denoising techniques should be applied to the data. Combining denoising with BSD correction has been shown to significantly improve the quality of both DTI metrics and tractography [44].

- Subject Motion and Physiological Effects: Even with high gradient numbers, real-world factors like motion can limit the achievable SNR, making robust correction algorithms a necessity [39].

Troubleshooting Guides

Issue: Inconsistent or Noisy DTI Metrics Across Study Participants

Possible Causes and Solutions:

- Cause 1: Inadequate SNR due to an insufficient number of gradient directions or overly small voxels.

- Cause 2: Uncorrected systematic spatial errors from gradient non-uniformity.

- Cause 3: Failure of the tensor model in brain regions with complex fiber architecture (e.g., ~40% of white matter).

Issue: Tractography Fails to Resolve Crossing Fiber Pathways

Possible Causes and Solutions:

- Cause: The angular resolution of the acquisition is too low to accurately represent multiple fiber orientations within a single voxel.

- Solution: Prioritize angular resolution over spatial resolution for this specific goal. Research indicates that for resolving crossings, high angular resolution is crucial, whereas high spatial resolution is better for distinguishing between kissing fibers [41]. If your protocol is fixed, switch to a multi-fiber reconstruction model that is designed to resolve multiple fiber populations [41].

Quantitative Data for Protocol Design

The following tables summarize key experimental findings to guide your protocol design.

Table 1: Optimal Number of Gradient Directions for Key DTI Metrics (4T Scanner, Corpus Callosum ROI) [39]

| DTI Metric | Abbreviation | Number of Gradients for Near-Maximal SNR |

|---|---|---|

| Mean Diffusivity | MD | 58 |

| Fractional Anisotropy | FA | 66 |

| Relative Anisotropy | RA | 62 |

| Geodesic Anisotropy (and its tangent) | GA / tGA | ~55 |

Table 2: Trade-offs in Time-Matched Acquisition Protocols (3T Scanner) [40]

| Protocol | Voxel Size (mm³) | Number of Gradients | Key Strengths | Key Weaknesses |

|---|---|---|---|---|

| Protocol P1 | 3.0 × 3.0 × 3.0 | 48 | Higher SNR, better temporal stability | Increased partial volume effects |

| Protocol P3 | 2.5 × 2.5 × 2.5 | 37 | Finer anatomical detail | Lower SNR, less stable metrics over time |

Experimental Protocols for Key Studies

Protocol 1: Establishing SNR vs. Gradient Number

This methodology is derived from a study that empirically determined the relationship between gradient number and SNR in human subjects [39].

- Subject Description: 50 healthy adults.

- Image Acquisition:

- Scanner: 4 Tesla Bruker Medspec MRI scanner.

- Sequence: Optimized diffusion tensor sequence.

- Parameters: TE/TR = 92.3/8250 ms, 55 contiguous 2mm slices, FOV = 23 cm.

- Core Acquisition: 105 gradient images were collected (11 baseline b0 images and 94 diffusion-sensitized images).

- Data Analysis:

- Subsetting: Multiple subsets of gradients (ranging from 6 to 94) were selected from the full set, optimizing for spherical angular distribution.

- SNR Calculation: SNR was defined and calculated within a region of interest in the corpus callosum for various DTI metrics (FA, MD, RA, etc.).

- ODF Accuracy: The accuracy of orientation density function (ODF) reconstructions was also assessed as the number of gradients increased.

Protocol 2: Comparing Spatial vs. Angular Resolution Trade-offs

This protocol outlines an approach for evaluating the trade-off between spatial and angular resolution in a fixed scan time [40].

- Subject Description: 8 healthy subjects scanned twice, 2 weeks apart.

- Image Acquisition:

- Scanner: GE 3T MRI scanner with an 8-channel head coil.

- Fixed Time: All protocols were designed to take 7 minutes.

- Protocols:

- P1: 3.0 mm isotropic voxels with 48 gradients.

- P2: 2.7 mm isotropic voxels with 41 gradients.

- P3: 2.5 mm isotropic voxels with 37 gradients.

- Other Parameters: DWI data acquired with contiguous axial slices at b = 1,000 s/mm².

- Data Analysis:

- Stability Analysis: Maps of stability over time were created for FA, MD, and ODFs.

- TBSS Analysis: The stability of FA was assessed in 14 TBSS-derived ROIs.

- Simulations: Computational simulations with prescribed fiber parameters and noise were conducted to supplement the in-vivo findings.

Workflow Visualization

The following diagram illustrates the logical decision process for balancing angular resolution and acquisition time based on your research goals.

Decision Workflow for DTI Protocol

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Software and Computational Tools for DTI Analysis

| Tool / Resource Name | Type / Category | Primary Function in Analysis |

|---|---|---|

| FSL DTIFIT [42] | Model Fitting Software | Fits a diffusion tensor model at each voxel to generate standard DTI metric maps (FA, MD, etc.). |

| BSD-DTI Correction [14] [44] | Systematic Error Correction | Corrects for spatial distortions in the diffusion tensor caused by magnetic field gradient inhomogeneities, improving metric accuracy. |

| TractSeg [42] | Tractography Pipeline | Automates the segmentation of white matter fiber tracts from diffusion MRI data. |

| DirGeo-DTI [42] | Deep Learning Model | Enhances angular resolution from a limited number of diffusion gradients, useful for analyzing clinical-grade data. |

| Diff5T Dataset [22] | Benchmarking Dataset | A 5.0 Tesla dMRI dataset with raw k-space data, used for developing and testing advanced reconstruction and processing methods. |

Tractography Methods for White Matter Pathway Reconstruction

Frequently Asked Questions

Q1: How can I efficiently process tractography datasets containing millions of fibers? A1: Use robust clustering methods designed for massive datasets. Hierarchical clustering approaches can compress millions of fiber tracts into a few thousand homogeneous bundles, effectively capturing the most meaningful information. This acts as a compression operation, making data manageable for group analysis or atlas creation [45].

Q2: What can I do when my tractography results contain many spurious or noisy fibers? A2: Implement a clustering method that includes outlier elimination. These methods filter out fibers that do not belong to a bundle with high fiber density, which is an effective way to clean a noisy fiber dataset. The density-based filtering helps distinguish actual white matter pathways from tracking errors [45].

Q3: How can I achieve consistent and anatomically correct bundle segmentation across multiple subjects? A3: Use atlas-guided clustering. This technique incorporates structural information from a white matter atlas into the clustering process, ensuring the grouping of fiber tracts is consistent with known neuroanatomy. This leads to higher reproducibility and correct identification of white matter bundles across different subjects [46].

Q4: What is an alternative to manual region-of-interest (ROI) drawing for isolating specific white matter pathways? A4: Automated global probabilistic reconstruction methods like TRACULA (TRActs Constrained by UnderLying Anatomy) are excellent alternatives. They use prior information from training subjects to reconstruct pathways without manual intervention, avoiding the need for manual ROI placement on a subject-by-subject basis [47].

Q5: My tractography fails in regions with complex fiber architecture (e.g., crossing fibers). How can I improve tracking in these areas? A5: Consider using algorithms that utilize the entire diffusion tensor, not just the major eigenvector. The Tensor Deflection (TEND) algorithm, for instance, is less sensitive to noise and can better handle regions where the diffusion tensor has a more oblate or spherical shape, which often occurs where fibers cross, fan, or merge [48].

Troubleshooting Guides

Issue 1: Long Processing Times for Large Tractography Datasets

Problem: Clustering a whole-brain tractography dataset is computationally intensive and takes an impractically long time, often failing due to memory limitations.

Solution: Employ a smart hierarchical clustering framework designed for large datasets.

- Step 1: Data Reduction. Use a method that exploits the inherent redundancy in large datasets. Techniques like random sampling or partitioning the data can significantly reduce the computational load before the main clustering begins [46].

- Step 2: Hierarchical Decomposition. Break down the problem into smaller, more manageable steps. A recommended workflow is:

- Separate tracts by hemisphere [45].

- Split the resulting subsets into groups of tracts with similar lengths [45].

- Within each length group, generate a voxel-wise segmentation of white matter into gross bundle masks [45].

- Perform a final clustering on the extremities of the tracts to create homogeneous fascicles [45].

- Step 3: Parallel Computing. Use software toolkits that leverage the multithreading capabilities of modern multi-processor systems to distribute the workload and reduce processing time from hours to minutes [46].

Issue 2: Poor Anatomical Accuracy and Low Reproducibility

Problem: Reconstructed fiber bundles do not correspond well to known white matter anatomy, and results vary greatly between users or across different sessions.

Solution: Integrate anatomical priors to guide the clustering process.

- Step 1: Atlas-Guided Clustering. Incorporate information from a white matter atlas into the clustering algorithm. This ensures the formation of clusters is spatially constrained by anatomical knowledge, leading to more biologically plausible bundles [46].

- Step 2: Use a Training Set. For automated reconstruction of specific pathways, use a method like TRACULA. This involves:

- Manually labeling the pathways of interest in a set of training subjects [47].

- Combining these manual labels with an automatic anatomical segmentation to derive a prior model of each pathway's trajectory [47].

- Applying this model to constrain the tractography search space in new subjects, penalizing connections that do not match the prior anatomical knowledge [47].

Issue 3: Handling of False-Positive and False-Negative Tracts

Problem: Tractography output contains many erroneous streamlines (false positives) or misses true white matter connections (false negatives).

Solution: Implement a robust, multi-stage clustering pipeline that filters and validates tracts.

- Step 1: Generate a High-Fidelity Dataset. Use advanced tractography algorithms (e.g., deterministic regularized tractography using spherical deconvolution) on high-quality data (e.g., HARDI) to maximize the accuracy of the initial tractogram [45].

- Step 2: Filter via Clustering. Use the clustering process itself as a filter. By grouping tracts into bundles based on geometry and spatial location, the method can automatically filter out fibers that do not belong to any robust, high-density bundle, thus removing many false positives [45].

- Step 3: Split and Merge Strategy. A robust hierarchical process involves splitting the data into preliminary bundles and then merging fascicles with very similar geometries. This strategy alleviates the risk of both over-splitting true pathways and merging distinct pathways together [45].

Comparison of Large-Scale Clustering Methods

The following table summarizes key methodologies for handling large tractography datasets.

| Method Name | Core Approach | Key Advantage | Reported Scale |

|---|---|---|---|

| Hierarchical Clustering [45] | Sequential steps: hemisphere/length grouping, voxel-wise connectivity, extremity clustering. | Robustness; can be applied to data from different tractography algorithms and acquisitions. | Millions of fibers → Thousands of bundles |

| CATSER Framework [46] | Atlas-guided clustering with random sampling and data partitioning. | High speed and anatomical consistency across subjects. | Hundreds of thousands of fibers in "a couple of minutes" |

| TRACULA [47] | Global probabilistic tractography constrained by anatomical priors from training subjects. | Fully automated, reproducible reconstruction of specific pathways without manual ROI definition. | Suitable for large-scale studies (dozens of subjects) |

Research Reagent Solutions

| Item / Tool | Function in Tractography Research |

|---|---|

| High Angular Resolution Diffusion Imaging (HARDI) | An advanced MRI acquisition scheme that samples more diffusion directions than standard DTI, allowing for better resolution of complex fiber architectures like crossings [45]. |

| BrainVISA Software Suite [45] | A comprehensive neuroimaging software platform that includes tools for processing and clustering massive tractography datasets. |

| Spherical Deconvolution Algorithms | A type of diffusion model used in tractography to estimate the fiber orientation distribution function (fODF), improving the accuracy of tracking through complex white matter regions [45]. |

| White Matter Atlas [46] | A predefined segmentation of white matter into anatomical regions or bundles. Used to guide clustering algorithms for anatomically correct and reproducible bundle extraction. |

| TEND & Tensorlines Algorithms [48] | Tractography algorithms that use the entire diffusion tensor to deflect the fiber trajectory, making them less sensitive to noise and better in regions of non-linear fibers compared to standard streamline methods. |

Experimental Workflow Diagrams

Hierarchical Fiber Clustering Workflow

Atlas-Guided Clustering Framework

Automated Probabilistic Reconstruction (TRACULA)

Multi-Site Study Design and Protocol Standardization

Technical Support Center

Troubleshooting Guides

Problem: Inconsistent Quality Control (QC) results across research teams for large-scale dMRI datasets.

- Solution: Implement a centralized, standardized QC pipeline that generates consistent visual outputs (e.g., PNG images) for all researchers to assess. Utilize a team platform that allows for quick visualization, documents when data processing has failed, and aggregates QC assessments across pipelines and datasets into a shareable format (e.g., CSV) [49].

Problem: Low site engagement and performance variability impacting participant accrual and data compliance.

- Solution: Apply a structured, phase-based site engagement strategy [50] [51].

- Planning/Launch Phase (Months 1-3): Build partnerships and commitment by actively eliciting site-specific processes and feedback on trial design. Provide layered education and establish clear communication channels [50] [51].

- Conducting/Maintenance Phase (Months 4-8+): Sustain engagement through bi-directional communication. Facilitate learning networks via monthly group calls for shared problem-solving, conduct refresher trainings for new staff, and perform regular site visits or calls to troubleshoot challenges [50] [51].

- Dissemination/Closeout Phase: Leverage site partnerships to create locally designed dissemination plans. Collect structured feedback on site experience and recognize contributions to build goodwill for future collaboration [50] [51].

Problem: Delays and inconsistencies in multi-site Institutional Review Board (IRB) approvals.

- Solution: Advocate for a centralized IRB review system to streamline the process. If local review is required, the coordinating center should provide document templates, track deadlines, and send reminders to sites. Standardized forms and procedures across sites can significantly reduce approval times and inconsistencies [52].

Problem: Lengthy study start-up timelines, particularly due to budget negotiations.

- Solution: Focus on reducing "white space," the unproductive time between active review cycles during budget negotiations. Provide upfront justifications for budget items, use standard editing practices, and establish clear internal limits for negotiations to avoid prolonged discussions that do not materially change the budget [53].

Problem: Tractography algorithm failures or unreliable outputs in dMRI studies.

- Solution: Participate in or leverage lessons from international tractography challenges, which provide platforms for fair algorithm comparison and validation against ground truth data. These efforts offer quantitative measures of the reliability and limitations of existing approaches, guiding researchers toward more robust techniques [54].

Frequently Asked Questions (FAQs)

Q: What is the most critical element for ensuring protocol standardization across multiple sites? A: A rigorous and detailed study protocol is foundational, but it must be paired with a well-organized coordinating center. This center ensures standardization through comprehensive site training, ongoing monitoring, and the implementation of stringent quality assurance measures that minimize inter-site variability [55].