Managing White Balance Variations in Video Behavior Analysis: A Complete Guide for Biomedical Research

Abstract

This article provides researchers, scientists, and drug development professionals with a comprehensive framework for managing white balance to ensure color fidelity and data integrity in video-based behavioral analysis. It covers the foundational impact of color temperature on quantitative data, methodological approaches for in-camera and post-processing correction, strategies for troubleshooting complex lighting scenarios, and validation techniques that support scientific rigor. By addressing these core areas, the guide helps professionals minimize analytical artifacts and improve the reliability of their findings in preclinical and clinical studies.

Understanding White Balance Fundamentals and Its Critical Role in Behavioral Data Fidelity

Defining White Balance and Color Temperature in the Scientific Context

Fundamental Definitions

What is White Balance?

White balance (WB) is a crucial process in digital imaging that ensures the color temperature of a scene is accurately represented, making white objects appear truly white and, by extension, guaranteeing the correct appearance of all other colors in the image [1] [2]. This adjustment is essential because different light sources emit light with varying color properties, which can introduce unwanted color casts. The primary objective of white balance correction, particularly in a research context, is to achieve color constancy—ensuring that the perceived color of objects remains consistent regardless of changes in the illumination conditions [3] [4]. This process mimics the chromatic adaptation of the human visual system, which automatically adjusts for different lighting, a capability that cameras and scientific imaging systems do not intrinsically possess [3] [4] [2].

What is Color Temperature?

Color temperature is a quantitative concept that describes the spectral properties of a light source by comparing its color output to that of an idealized theoretical object known as a black-body radiator. It is measured in kelvins (K) [1] [5]. The value refers to the temperature of that radiator: a hotter black body emits bluer light, which is conventionally described as "cooler", while a colder one emits yellower and redder light, described as "warmer" [2]. In scientific and industrial applications, standardized illuminants with specific Correlated Colour Temperatures (CCTs) are often used as reference whites, such as D50 (5000 K) in graphic arts or D65 (6500 K) in color television [6].

The table below summarizes the color temperatures of common light sources encountered in laboratory and real-world settings:

Table 1: Color Temperatures of Common Light Sources

| Light Source | Typical Color Temperature (K) |

|---|---|

| Candlelight | 1900 K [5] |

| Incandescent / Tungsten Bulb | 2700 - 3200 K [1] [5] |

| Sunrise / Golden Hour | 2800 - 3000 K [5] |

| Halogen Lamps | 3000 K [5] |

| Moonlight | 4100 K [5] |

| White LEDs | 4500 K [5] |

| Mid-day Sun / Daylight | 5000 - 5600 K [1] [5] |

| Camera Flash | 5500 K [5] |

| Overcast Sky | 6500 - 7500 K [5] |

| Shade | 8000 K [5] |

| Heavy Cloud Cover | 9000 - 10000 K [5] |

| Blue Sky | 10000 K [5] |
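When a color meter reports chromaticity rather than a Kelvin value, the correlated color temperature can be estimated numerically. The sketch below uses McCamy's cubic approximation, which is a standard published formula but is only one of several approximations and is less reliable far from the black-body locus. The function name and the D65 chromaticity used as input are illustrative choices, not part of the original protocol.

```python
def mccamy_cct(x: float, y: float) -> float:
    """Approximate correlated color temperature (K) from CIE 1931 (x, y).

    Uses McCamy's cubic approximation; intended for light sources whose
    chromaticity lies reasonably close to the black-body locus.
    """
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


# Illustrative input: the nominal chromaticity of the D65 reference white.
d65_cct = mccamy_cct(0.3127, 0.3290)
print(f"Estimated CCT: {d65_cct:.0f} K")
```

For the D65 chromaticity the estimate lands close to the nominal 6500 K listed for that illuminant, which makes it a convenient sanity check before applying the function to measured values.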

Troubleshooting Guide: Frequently Asked Questions (FAQs)

FAQ 1: Despite using Auto White Balance (AWB), my video data shows inconsistent colors across different recording sessions. What is the issue?

Auto White Balance (AWB) algorithms, while convenient, are not recommended for scientific research due to their inherent variability [7]. AWB functions by having the camera analyze the scene and make its best guess at the correct color temperature. However, this evaluation is easily influenced by environmental factors such as ambient light intensity and the dominant colors within the scene itself [7] [5]. If your scene lacks neutral (white, black, or grey) colors, is dominated by a single color, or is illuminated by multiple light sources with different color temperatures, the AWB will produce inconsistent and unreliable results [5]. For reproducible data, manual control of white balance is essential.

FAQ 2: How can I achieve accurate color when my experimental setup involves multiple light sources with different color temperatures (mixed lighting)?

Mixed lighting is one of the most challenging scenarios for white balance correction, as a single global adjustment cannot perfectly correct all light sources simultaneously [1] [5]. The first and most effective strategy is to eliminate the variability at the source by using a single, consistent light source or by matching all lights to the same color temperature [1]. If standardizing the lighting is not feasible, you should:

- Use a Custom White Balance: Set a custom white balance using a reference card under the primary light source illuminating your subject [5].

- Shoot in a RAW Format: Capture video data in a RAW format, which retains all the original sensor information and allows for non-destructive and highly precise white balance adjustments during post-processing analysis [1] [2].

- Employ Advanced Algorithms: For sophisticated analysis, consider advanced computational methods. Multi-color balancing techniques, such as three-color balancing, can improve color constancy by mapping multiple target colors to their known ground truths, offering better performance than standard white balancing [4].

FAQ 3: My automated image analysis algorithm is producing variable results due to color shifts in the input video. How can I make my analysis robust to white balance variations?

This is a common problem in quantitative video behavior analysis. The solution involves moving beyond subjective color correction to a fully standardized and calibrated imaging chain [8]. Key steps include:

- Pre-Imaging Calibration: Before every experiment, use a standardized, sterile reference target (e.g., a color checker card or a neutral gray card) placed within the scene to establish a known color reference point [9] [8].

- In-Situ Referencing: For the highest accuracy, the reference should be captured under the exact same imaging conditions (distance, lighting, optics) as your subject. The use of references obtained under different conditions is a major source of error [10].

- Procedural Standardization: Implement strict protocols for sample acquisition, preparation, and staining to minimize pre-analytic color variables [8].

- Algorithmic Correction: Develop a pre-processing step for your analysis pipeline that uses the reference target from each video session to apply a deterministic color transformation, normalizing all input data to a consistent color space before analysis [3] [8].
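The algorithmic correction step above can be sketched as a per-channel gain computed from the in-scene neutral reference. This is a minimal illustration, assuming frames are available as linear RGB floating-point arrays (gamma-encoded footage would need to be linearized first) and that the location of the gray card is known. The function names, the region-of-interest tuple, and the synthetic test frame are all hypothetical.

```python
import numpy as np


def gains_from_gray_patch(frame: np.ndarray, roi: tuple) -> np.ndarray:
    """Return per-channel gains that make the reference patch neutral.

    frame: H x W x 3 array, linear RGB, values in 0..1 (assumption).
    roi:   (top, bottom, left, right) pixel bounds of the gray card.
    """
    top, bottom, left, right = roi
    patch_mean = frame[top:bottom, left:right].reshape(-1, 3).mean(axis=0)
    # Normalize to the green channel so overall brightness is roughly kept.
    return patch_mean[1] / patch_mean


def apply_gains(frame: np.ndarray, gains: np.ndarray) -> np.ndarray:
    """Apply the same deterministic gains to a frame and clip to 0..1."""
    return np.clip(frame * gains, 0.0, 1.0)


# Synthetic example: a uniformly gray scene recorded with a warm cast.
cast = np.array([1.2, 1.0, 0.7])
frame = np.full((40, 60, 3), 0.5) * cast
gains = gains_from_gray_patch(frame, roi=(10, 30, 20, 40))
balanced = apply_gains(frame, gains)
```

Computing the gains once per session from the reference frame and reusing them for every frame keeps the transformation deterministic, which is the property the pipeline needs.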

Experimental Protocols for Research

Basic Protocol: Custom In-Camera White Balance

This protocol is the foundational method for achieving correct color at the time of data acquisition.

- Acquire a Reference Target: Place a pure white or neutral mid-gray card (commercially available) within your scene, ensuring it is illuminated by the primary light source for your experiment.

- Fill the Frame: Position your camera so that the reference card fills or mostly fills the field of view. Ensure the card is evenly lit and in focus.

- Capture Reference Image: Take a photograph of the card.

- Set Custom WB: Navigate your camera's menu to the "Custom White Balance" or "Preset Manual" mode (often indicated by an icon of two overlapping triangles).

- Select Reference: The camera will prompt you to select the reference image you just captured. Confirm your selection.

- Verify and Lock: The camera will now calculate and set the white balance. Your camera will maintain this setting for all subsequent video recording until you change it, ensuring session-to-session consistency [5].

Advanced Protocol: Synthetic Reference Generation for Sterile or Challenging Environments

In some research environments, such as surgical fields or sterile workspaces, using a traditional reference target is impossible. This protocol, adapted from hyperspectral imaging research, outlines a method for generating a synthetic white reference.

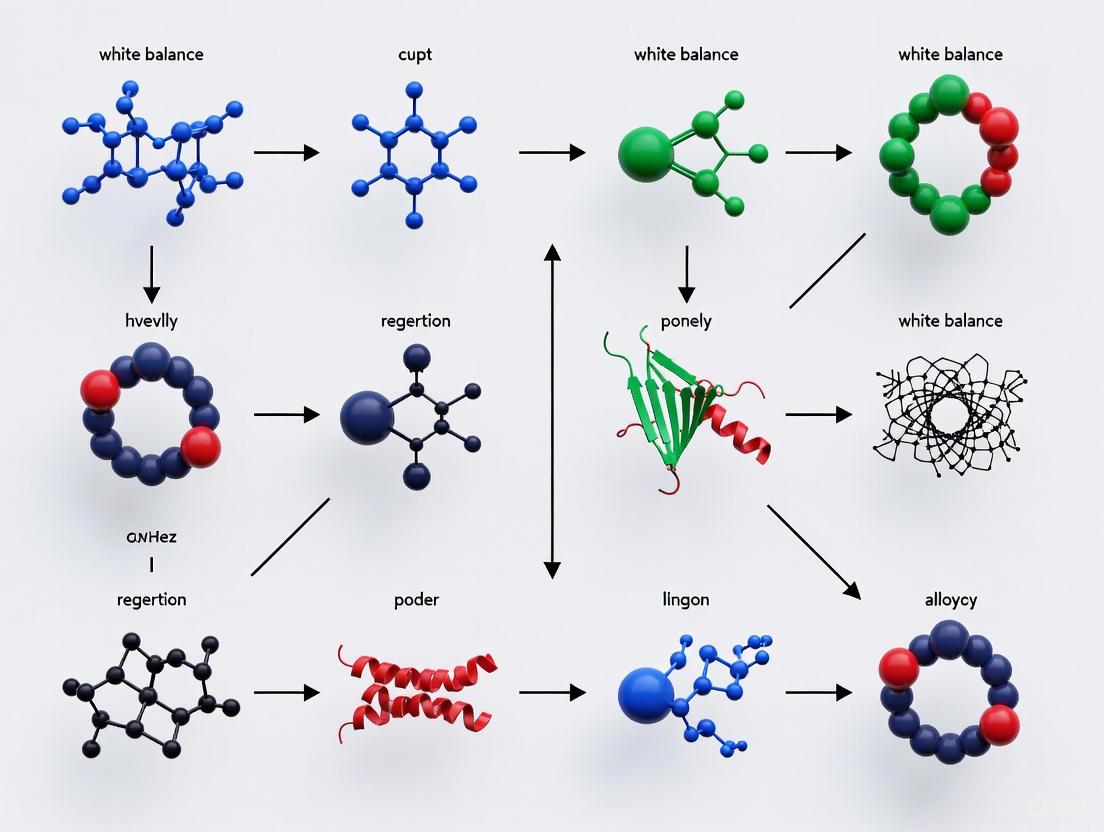

Diagram: Workflow for Synthetic White Reference Generation

Methodology:

- Capture Video: Using your video camera, record a short video clip of a standard sterile ruler (or another sterile, uniformly colored object widely available in the environment) under the same lighting and camera settings that will be used for the experiment.

- Construct Composite Image: Generate a single, high-quality composite image from the video frames to average out noise and imperfections.

- Model the Reference: Formally model the ideal white reference as the product of independent spatial and spectral components, multiplied by a scalar factor that accounts for camera gain, exposure time, and light intensity [10].

- Apply Reflectivity Compensation: The algorithm must account for the non-ideal, non-Lambertian reflectivity of the ruler to estimate what a perfect reflective surface would look like under the same conditions.

- Generate Reference: The output is a synthetic white reference image that can be used for white balancing subsequent video frames of the actual experiment, achieving accurate color and spectral reconstruction without breaking sterility [10].
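The workflow above can be illustrated with a heavily simplified sketch. It is not the method of the cited work: it replaces the spatial and spectral model with a temporal median composite, and it treats the reference object's reflectivity as a single known per-channel factor, which is an assumption made only for illustration. All function names and the synthetic data are hypothetical.

```python
import numpy as np


def composite_reference(frames) -> np.ndarray:
    """Temporal median over a stack of frames (N x H x W x 3) to suppress noise."""
    return np.median(np.asarray(frames, dtype=np.float64), axis=0)


def synthetic_white(composite: np.ndarray, object_reflectance) -> np.ndarray:
    """Estimate what an ideal white surface would record.

    Divides out the reference object's per-channel reflectance, which is
    assumed to be known and spatially uniform (a simplification).
    """
    return composite / np.asarray(object_reflectance, dtype=np.float64)


def normalize_frame(frame: np.ndarray, white_ref: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Divide a frame by the white reference to remove illumination color and falloff."""
    return frame / np.maximum(white_ref, eps)


# Synthetic demonstration: tinted illumination with left-to-right falloff.
rng = np.random.default_rng(0)
falloff = np.linspace(0.6, 1.0, 8).reshape(1, 8, 1)
illum = np.ones((4, 1, 1)) * falloff * np.array([1.0, 0.9, 0.7])
ruler_reflectance = np.array([0.8, 0.7, 0.6])  # assumed, for illustration
frames = [illum * ruler_reflectance + rng.normal(0.0, 0.002, illum.shape)
          for _ in range(15)]

white_ref = synthetic_white(composite_reference(frames), ruler_reflectance)
scene = illum * 0.4  # a uniformly 40% reflective surface under the same light
reflectance_estimate = normalize_frame(scene, white_ref)
```

In this toy case the normalized frame recovers the uniform reflectance despite the tinted, uneven illumination. A real implementation would also need to handle the non-Lambertian behavior described in the protocol.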

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 2: Key Materials for White Balance Standardization in Research

| Item Name | Function / Explanation |

|---|---|

| White Balance / Gray Card | A reference card of known neutral color (white or 18% gray). Used for in-scene calibration to set a custom white balance, providing a target for the camera or software to neutralize color casts [1] [9]. |

| Color Checker Card | A card containing an array of color patches with known spectral reflectance values. It allows for advanced multi-color balancing, which can correct colors beyond just white, and is used to create color profiles for specific lighting conditions [4] [2]. |

| Spectrophotometer / Color Meter | A precision instrument that measures the spectral power distribution of a light source. It provides the exact color temperature in Kelvin, enabling researchers to manually set the most accurate white balance setting on their cameras [2]. |

| Spectralon Tile | A highly reflective, near-perfect Lambertian surface made from fluoropolymer. It is the traditional standard for white reference in imaging science but is often not sterilizable, limiting its use in controlled environments [10]. |

| Sterile Ruler | A common sterile surgical tool. It can be repurposed as a reference object for generating a synthetic white reference in environments where traditional standards cannot be used, preserving the sterile field [10]. |

In video behavior analysis, consistent and accurate color representation is paramount. The Kelvin (K) scale is the standard measure for color temperature, quantifying the hue of a light source from warm (yellow/red) to cool (blue) [11] [12]. A proper understanding of this scale is not merely an artistic pursuit; it is a critical methodological factor that ensures the fidelity of visual data, prevents analytical artifacts caused by inconsistent lighting, and enables the valid comparison of results across different experimental sessions and laboratories [13] [1].

The core principle is that all light sources possess a color temperature [14]. Lower Kelvin values (e.g., 2000K-3200K) correspond to warmer, amber tones, similar to candlelight or incandescent bulbs. Higher Kelvin values (e.g., 5500K-6500K) correspond to cooler, bluish tones, akin to midday sun [11] [15]. For the researcher, a failure to account for these variations can introduce a significant confounding variable, where observed changes in an animal's coloration or apparent behavior are in fact driven by shifts in illumination rather than the experimental manipulation [13].

Kelvin Scale Reference and Troubleshooting

The following table summarizes the color temperatures of light sources frequently encountered in laboratory and filming environments. This data is essential for identifying potential sources of color imbalance in your setup [11] [13] [15].

| Kelvin (K) Value | Light Source Examples | Typical Appearance |

|---|---|---|

| 1500K - 2000K | Candlelight, embers [13] [15] | Warm, deep orange/red |

| 2500K - 3000K | Household incandescent bulbs, tungsten studio lights [14] [13] [1] | Warm, yellow-orange |

| 3200K | Standard halogen/Fresnel lamps (common in video) [13] [15] | Warm white |

| 4000K - 4500K | Fluorescent lighting (some types), "neutral white" [11] [13] | Neutral white |

| 5500K - 5600K | Midday sun, electronic camera flash, HMI lights [14] [13] [1] | Cool white (standard daylight) |

| 6000K - 7000K | Overcast sky, shaded light [14] [15] | Cool, bluish-white |

| 9000K+ | Clear blue sky [12] [15] | Very cool, deep blue |

Frequently Asked Questions (FAQs) & Troubleshooting

Q1: My video footage has an unnatural blue or orange tint. What is the most likely cause and how can I fix it?

- Cause: This is a classic symptom of incorrect white balance. Your camera was set to a Kelvin value that did not match the color temperature of your primary light source [14] [1]. A scene lit with tungsten bulbs (3200K) will look orange if the camera is set for daylight (5600K), and a daylight-lit scene will look blue if the camera is set for tungsten [13].

- Solution:

- Manually set your camera's white balance before recording. Use a custom white balance function with a neutral gray or white card placed in the same lighting as your subject for the most accurate result [1] [16].

- For existing footage, use color correction tools in software like DaVinci Resolve or Adobe Premiere Pro. Use the eyedropper tool on a known white or gray area in the scene to neutralize the color cast [17] [12].

Q2: I am using multiple light sources in my experimental arena. How do I prevent color inconsistencies across the field of view?

- Cause: Mixed lighting, where different light sources with different color temperatures illuminate the same scene, causes color shifts and inconsistent appearance [11] [14].

- Solution:

- Standardize your lights: Use light sources that can be tuned to the same Kelvin value [1].

- Use corrective gels: If standardization is not possible, use color-correcting gels like CTO (Color Temperature Orange) or CTB (Color Temperature Blue) on your light fixtures to match their color temperatures [12]. CTB gels cool down warm light, while CTO gels warm up cool light.

- Prioritize one source: Set your camera's white balance for the dominant light source and accept the color cast from the secondary source, or block the secondary source if it is not essential.
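Gel strength is usually reasoned about in mireds (micro reciprocal degrees, equal to 1,000,000 divided by the Kelvin value) rather than in kelvins, because a given gel produces roughly the same mired shift regardless of the source. The short sketch below computes the shift needed to match one source to another. The function names are illustrative, and the 5600K and 3200K values are taken from the examples above.

```python
def mired(kelvin: float) -> float:
    """Convert a color temperature in kelvins to mireds."""
    return 1_000_000.0 / kelvin


def mired_shift(source_k: float, target_k: float) -> float:
    """Mired shift needed to move a source to a target color temperature.

    Positive values mean warming (CTO-type gel);
    negative values mean cooling (CTB-type gel).
    """
    return mired(target_k) - mired(source_k)


warming = mired_shift(5600, 3200)  # daylight-balanced light matched to tungsten
cooling = mired_shift(3200, 5600)  # tungsten light matched to daylight
print(f"warming: {warming:+.1f} mired, cooling: {cooling:+.1f} mired")
```

The computed shift can be compared against the mired value printed on a gel manufacturer's data sheet to choose the closest strength.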

Q3: Why does the "Auto White Balance" (AWB) setting on my camera sometimes produce inconsistent results between recordings?

- Cause: Auto White Balance algorithms can be fooled by dominant colors in a scene (e.g., a large red substrate in an animal enclosure) or under mixed lighting conditions, leading to varying results from one shot to the next [1] [16].

- Solution: For scientific consistency, avoid AWB in controlled experiments. Manually set and document the white balance Kelvin value for all recordings to ensure data consistency [13] [1]. This eliminates an uncontrolled variable from your methodology.

Experimental Protocol: Standardizing White Balance for Reproducible Video Analysis

Objective: To establish a standardized, reproducible method for setting and documenting white balance in video-based behavioral research, minimizing color as a confounding variable.

Principle: The camera must be calibrated to perceive a neutral white or gray object as truly neutral under the specific lighting conditions of the experiment, ensuring all other colors are rendered accurately [1] [16].

Materials & Reagents:

- Digital camera with manual white balance capability.

- Neutral reference card: A standardized 18% neutral gray card is ideal, but a pure, matte-white object can suffice [1].

- Consistent light source: Laboratory lighting or dedicated video lights that can be maintained at a stable output and color temperature.

Procedure:

- Setup and Stabilization: Position your experimental arena and configure all lighting. Allow lights to warm up for at least 10 minutes to ensure their color temperature has stabilized [18].

- Place Reference: Position the neutral gray or white card within the arena, ensuring it is evenly illuminated by the primary light source and fills the camera's field of view.

- Access Camera Menu: Navigate to your camera's manual white balance or custom white balance setting.

- Calibrate: Follow the camera's instructions to capture an image of the reference card. The camera will analyze this image to calculate the correct Kelvin value for the lighting.

- Select and Lock: Confirm the setting. The camera will now use this custom white balance until changed. Ensure the setting is saved or locked to prevent accidental alteration.

- Documentation: Record the final Kelvin value used in your lab notes or metadata for full experimental reproducibility.
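One way to make the documentation step machine-readable is to store the settings in a small sidecar file next to each recording. The sketch below is only a suggestion: every field name and value is hypothetical and should be adapted to the lab's own metadata conventions.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class WhiteBalanceRecord:
    """Hypothetical per-session record of white balance settings."""
    session_id: str
    camera_model: str
    wb_mode: str              # e.g. "custom" or "kelvin"
    kelvin: int
    reference_card: str
    light_warmup_minutes: int


record = WhiteBalanceRecord(
    session_id="example-session-001",   # placeholder value
    camera_model="example-camera",      # placeholder value
    wb_mode="custom",
    kelvin=5600,
    reference_card="18% gray card",
    light_warmup_minutes=10,
)

# Serialize with stable key order so records are easy to diff across sessions.
sidecar_json = json.dumps(asdict(record), indent=2, sort_keys=True)
print(sidecar_json)
```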

Workflow for Managing Color Temperature

The following diagram illustrates the logical decision process for managing color temperature to ensure data fidelity in video analysis.

Essential Research Reagents and Materials

The table below details key materials required for implementing a robust color management protocol in video behavior analysis.

| Item | Function in Research |

|---|---|

| Manual-Control Camera | Allows for precise manual setting of white balance in Kelvin, removing the unreliable variable of automatic settings [1]. |

| Neutral Gray Card (18%) | Provides a standardized, spectrally neutral reference for performing custom white balance, ensuring true-to-life color reproduction [1]. |

| Color Temperature Meter | Directly measures the precise Kelvin value of a light source, enabling high-precision lighting setup and documentation [18]. |

| CTO / CTB Gels | Color-correcting filters placed over light sources to match the color temperature of multiple lights, eliminating mixed-lighting artifacts [12]. |

| Consistent Light Source (e.g., LED Panels) | Tunable or fixed-color temperature lights that provide stable and reproducible illumination across experimental trials [11] [14]. |

FAQs on Color Accuracy in Video Analysis

Q1: What is a color cast and how does it directly impact quantitative video analysis?

A color cast is an unwanted tint that uniformly affects an entire video frame, often caused by inaccurate white balance or specific lighting conditions. In quantitative analysis, where color data is used for measurements, a color cast introduces significant systematic error. It alters the Red, Green, and Blue (RGB) channel values captured by the camera, so a measured color change can no longer be attributed with confidence to the process being studied (for example, a chemical reaction or a change in the subject) rather than to inconsistent lighting. This compromises the validity and reproducibility of the data [19] [20] [5].

Q2: Why does my video's color balance fluctuate between frames, and how can I prevent it?

Fluctuating white balance between frames is almost always caused by using auto white balance and auto-exposure settings on your camera. In this mode, the camera continuously re-analyzes the scene and adjusts the color and exposure settings based on the content within the frame, such as moving objects or changing shadows [21]. To prevent this, you must switch your camera to fully manual mode before recording. This allows you to lock in a fixed white balance (either using a custom setting or a specific Kelvin value), aperture, ISO, and shutter speed for the entire duration of the experiment, ensuring consistent color capture from frame to frame [22] [21] [5].
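Frame-to-frame fluctuation can also be detected after the fact by tracking a static, neutral region of the scene, such as a corner of the arena that the subject never enters. The sketch below compares per-frame R/G and B/G ratios against the first frame. The function names, the 2% tolerance, and the synthetic frames are assumptions for illustration, not values from the cited sources.

```python
import numpy as np


def chroma_ratios(frames, roi) -> np.ndarray:
    """Return an N x 2 array of mean (R/G, B/G) inside a static region.

    frames: iterable of H x W x 3 RGB arrays.
    roi:    (top, bottom, left, right) bounds of a region that should not change.
    """
    top, bottom, left, right = roi
    ratios = []
    for frame in frames:
        r, g, b = frame[top:bottom, left:right].reshape(-1, 3).mean(axis=0)
        ratios.append((r / g, b / g))
    return np.array(ratios)


def drifting_frames(ratios: np.ndarray, tolerance: float = 0.02) -> np.ndarray:
    """Indices of frames whose ratios differ from frame 0 by more than tolerance."""
    relative_change = np.abs(ratios / ratios[0] - 1.0)
    return np.flatnonzero((relative_change > tolerance).any(axis=1))


# Synthetic clip: five gray frames, with a simulated AWB jump in frame 3.
frames = [np.full((20, 20, 3), 0.5) for _ in range(5)]
frames[3] = frames[3] * np.array([1.1, 1.0, 0.95])

flagged = drifting_frames(chroma_ratios(frames, roi=(0, 10, 0, 10)))
print(flagged.tolist())
```

Using ratios rather than raw channel values makes the check less sensitive to small overall brightness changes, so it mainly responds to shifts in color balance.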

Q3: What is the most reliable method to achieve accurate white balance for my experimental setup?

The most reliable method is to perform a manual custom white balance using a neutral reference card (white or mid-gray) under the exact same lighting conditions used for your experiment. This process involves photographing the reference card to fill the frame, then using that image to calibrate the camera's white balance. This tells the camera what "true white" looks like in your specific environment, leading to far greater accuracy than auto modes or preset white balance options [5]. For the highest precision, some cameras allow you to directly select a specific color temperature in Kelvin, but this still requires manual setting [5].

Q4: My video already has a color cast. How can I correct it during post-processing?

You can correct color casts in post-processing by using a color reference chart filmed in the same lighting as your experiment. Software can use the known reference values of the chart's color patches to build a correction matrix or profile. This profile can then be applied to all frames of the video to mathematically neutralize the color cast and bring the colors closer to their true values [23] [20]. It is crucial to note that this method may not be as accurate as getting the white balance right during recording, as it can be difficult to fully separate the cast from the true colors of your sample.

Troubleshooting Guide

| Problem | Possible Cause | Solution |

|---|---|---|

| Inconsistent colors between videos from different days | Slight variations in lighting conditions or a manually set Kelvin value that doesn't match the new environment. | Use a custom white balance before every recording session. Do not rely on a Kelvin value from a previous session [22] [5]. |

| Color correction in post-processing gives poor results | The reference chart was not in the same plane as the sample or was poorly lit, leading to an inaccurate profile. | Ensure the color chart is placed at the same level as your sample and is evenly illuminated. Use charts with a matte finish to avoid specular reflections [23]. |

| Skin tones or specific colors look wrong after correction | Generic color chart data is being used, which may not be optimized for all color ranges. The camera's profile is over-correcting certain hues. | For critical work, consider creating a custom camera profile using a chart measured with your own spectrophotometer for more accurate reference data [23]. |

| Persistent green or magenta tint after white balance | "Tint memory" in the camera; a previous custom white balance correction is being carried over. Mixed lighting from sources with different color temperatures (e.g., window light and overhead LEDs) [22]. | Perform a new custom white balance to reset both Kelvin and Tint values. Control your environment to use a single, uniform light source [22] [5]. |

Quantitative Data on Color Accuracy and Correction

Table 1: Common Color Difference Metrics for Quantifying Accuracy

This table outlines standard metrics used to quantify color deviation.

| Metric Name | Formula / Principle | Interpretation / Use Case |

|---|---|---|

| ΔE (CIE 1976) | ΔE*ab = √[(L*₂ - L*₁)² + (a*₂ - a*₁)² + (b*₂ - b*₁)²] | The original, simple Euclidean distance in CIE L*a*b* space. A ΔE of 1.0 is roughly a just-noticeable difference [23]. |

| ΔE (CIE 2000) | Complex formula accounting for perceptual non-uniformities in L*a*b* space. | A more perceptually accurate metric. Used in strict guidelines like FADGI. An average ΔE2000 below 2.0 is considered high accuracy [23]. |

| Chromaticity (CIE x, y) | x = X/(X+Y+Z), y = Y/(X+Y+Z) | Isolates and compares pure color, independent of luminance (Y), often plotted on a 2D chromaticity diagram [20]. |
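Two of the metrics in the table are simple enough to compute directly. The sketch below implements the CIE 1976 color difference and the chromaticity coordinates exactly as given by the formulas above. The function names and the sample values are illustrative, and the more involved CIE 2000 formula is deliberately left out.

```python
import math


def delta_e76(lab1, lab2) -> float:
    """CIE 1976 color difference: Euclidean distance between two L*a*b* triplets."""
    return math.dist(lab1, lab2)


def chromaticity_xy(X: float, Y: float, Z: float):
    """CIE 1931 chromaticity coordinates (x, y) from tristimulus values."""
    total = X + Y + Z
    return X / total, Y / total


# Illustrative values: a measured patch versus its reference.
difference = delta_e76((50.0, 0.0, 0.0), (52.0, 2.0, 1.0))

# Illustrative values: tristimulus values of the D65 reference white.
x, y = chromaticity_xy(95.047, 100.0, 108.883)
print(f"dE76 = {difference:.2f}, x = {x:.4f}, y = {y:.4f}")
```

Applying `delta_e76` to every chart patch before and after correction, then averaging, gives a single accuracy figure that can be tracked across sessions.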

Table 2: Effectiveness of Color Correction Methodologies

This table summarizes results from a study on smartphone video colorimetry.

| Condition | Average Color Error (ΔE) Before Correction | Average Color Error (ΔE) After Correction | % Improvement |

|---|---|---|---|

| Inter-Device Variation | High (Exact values not provided in source) | N/A | 65-70% reduction [20] |

| Lighting-Dependent Variation | High (Exact values not provided in source) | N/A | 65-70% reduction [20] |

| Viewing Angle (Oblique) | Increased by up to 64% vs. reference | N/A | (Highlighting a key source of error) [20] |

Experimental Protocol: Color Correction for Quantitative Video Analysis

Aim: To establish a standardized workflow for capturing and correcting video to ensure color accuracy for quantitative analysis.

Materials:

- Camera (smartphone or DSLR) capable of manual video mode.

- Neutral white balance reference card (e.g., mid-gray or pure white).

- Standardized color reference chart (e.g., X-Rite ColorChecker Classic or SG).

- Controlled, uniform lighting setup.

- Computer with video processing and color analysis software (e.g., Python/OpenCV, ImageJ, or commercial tools).

Procedure:

- Setup Stabilization: Place the camera on a stable tripod. Configure your lighting to be as uniform as possible across the entire field of view and ensure it remains stable throughout the recording.

- Camera Configuration:

- Set the camera to manual video mode.

- Set the ISO, aperture, and shutter speed to fixed values. Use the lowest native ISO possible to reduce noise.

- Focus manually on the sample to prevent focus hunting during recording.

- White Balance Calibration:

- Place the neutral reference card in the center of your scene, ensuring it is evenly lit.

- Fill the camera's frame with the card and perform a manual custom white balance using your camera's function. Refer to your camera's manual for specific instructions [5].

- Reference Video Capture:

- Position the standardized color reference chart next to your sample at the same height, ensuring it is fully and evenly visible in the frame.

- Record a few seconds of video featuring both the chart and the sample. This "reference frame" will be used for all post-processing color correction.

- You may carefully remove the chart if it obstructs the experiment, ensuring the lighting is not disturbed.

- Experimental Video Capture: Proceed to record your experimental video.

- Post-Processing Color Correction:

- Extract a frame containing the color chart from your reference video.

- In your software, input the known reference values (e.g., CIE L*a*b*) for each patch of the chart.

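- Compute a color correction matrix or profile that best maps the colors you captured to the known reference colors, for example by a least-squares fit over the chart patches. A linear 3×3 matrix can then be applied per pixel with a function such as cv2.transform in OpenCV; a polynomial correction needs additional terms beyond a single matrix.

- Apply this computed transformation to every frame of your experimental video.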
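The post-processing steps above can be sketched with a plain least-squares fit. This is a minimal illustration under several assumptions: patch colors have already been averaged into N×3 arrays, both the measured and the reference values are expressed in the same linear RGB space (the protocol mentions CIE L*a*b* references, which would need converting first), and a single 3×3 matrix is an adequate model. Function names and the synthetic data are hypothetical.

```python
import numpy as np


def fit_ccm(measured: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Least-squares 3x3 matrix M such that measured @ M approximates reference.

    measured, reference: N x 3 arrays of patch colors (N >= 3).
    """
    M, *_ = np.linalg.lstsq(measured, reference, rcond=None)
    return M


def apply_ccm(frame: np.ndarray, M: np.ndarray) -> np.ndarray:
    """Apply the correction matrix to every pixel of an H x W x 3 frame."""
    h, w, _ = frame.shape
    return (frame.reshape(-1, 3) @ M).reshape(h, w, 3)


# Synthetic demonstration: 24 reference patches distorted by a known matrix.
rng = np.random.default_rng(1)
reference = rng.uniform(0.05, 0.95, size=(24, 3))
distortion = np.array([[0.90, 0.05, 0.00],
                       [0.10, 0.80, 0.05],
                       [0.00, 0.10, 0.70]])
measured = reference @ distortion

M = fit_ccm(measured, reference)
corrected = apply_ccm(measured.reshape(4, 6, 3), M).reshape(-1, 3)
```

With this row-vector convention, the equivalent OpenCV call should be `cv2.transform(frame, M.T)`, since that function multiplies each pixel by the matrix from the left. Real footage will not be fitted exactly as in this noise-free example, so the residual error on the chart patches is worth reporting.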

The Scientist's Toolkit: Essential Materials for Color-Accurate Video

Table 3: Key Research Reagent Solutions for Color Management

| Item | Function & Rationale |

|---|---|

| Standardized Color Reference Chart | Serves as the ground truth for color. Charts like the X-Rite ColorChecker contain patches with precisely measured color values, enabling the creation of a camera-specific color profile and post-processing correction [23] [20]. |

| Neutral White Balance Card | A pure white or mid-gray card with a matte finish is critical for performing a manual custom white balance in-camera, preventing color casts at the source [5]. |

| Spectrophotometer | A laboratory instrument that measures the spectral reflectance or transmittance of surfaces. It provides the most accurate reference data for color chart patches, superior to generic manufacturer data, which can be a source of error [23] [20]. |

| Controlled LED Lighting | Provides a stable, uniform, and consistent light source with a known and stable color temperature (e.g., D50 or D65 standard illuminants). This is fundamental to reproducible imaging conditions [20]. |

| Color Management Software | Software capable of using images of reference charts to build ICC profiles or correction matrices, and then applying them to video frames. This is the computational engine for post-processing color accuracy [23] [20]. |

Workflow and Relationship Diagrams

Diagram 1: End-to-end workflow for color-accurate video analysis.

In video behavior analysis research, consistent and accurate color reproduction is a foundational requirement for data integrity. Auto White Balance (AWB), the feature in digital imaging systems that automatically adjusts color casts, becomes a significant source of uncontrolled experimental variance. AWB is designed for aesthetic photography, not scientific measurement, and its algorithmic nature often conflicts with the rigorous needs of reproducible research [24] [25]. This guide details the specific limitations of AWB, provides troubleshooting methodologies, and outlines protocols to mitigate its impact on your data, particularly within the context of drug development and behavioral studies.

Core Technical Limitations of AWB

FAQ: How does AWB fundamentally work, and why is that a problem for research?

AWB operates by analyzing the overall scene and making assumptions to neutralize color casts. It typically identifies the brightest area of an image as a "white point" and then adjusts all other colors accordingly to make that point neutral [26]. The core issue is that these are assumptions, not measurements, and they can be incorrect.

- Statistical Assumptions: Many traditional AWB algorithms rely on presuppositions like the "Gray-World" hypothesis (the average reflectance of a scene is gray) or the "White-Patch" method (the brightest patch is a perfect reflector) [3] [27]. These assumptions are frequently violated in research settings.

- Removal of Meaningful Data: AWB cannot distinguish between an undesirable color cast and a critical experimental signal. For instance, in video analysis of animal models, it might correct for the warm hue of specialized lighting or even for pigmentation changes in skin or fur that are relevant to the study [24].

- Inconsistency: AWB recalculates for every frame or scene change. This can lead to color values fluctuating unpredictably across a video sequence, making temporal analysis unreliable [26].
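The two statistical assumptions named above are short enough to write out, which also makes their failure mode easy to see. The sketch below is a textbook-style illustration, not the algorithm of any particular camera. The function names and the synthetic red-dominated scene are assumptions.

```python
import numpy as np


def gray_world_gains(frame: np.ndarray) -> np.ndarray:
    """Gains that force the scene average to gray (Gray-World assumption)."""
    means = frame.reshape(-1, 3).mean(axis=0)
    return means.mean() / means


def white_patch_gains(frame: np.ndarray) -> np.ndarray:
    """Gains that force the brightest value in each channel to match (White-Patch)."""
    maxima = frame.reshape(-1, 3).max(axis=0)
    return maxima.max() / maxima


# A scene dominated by a red substrate under perfectly neutral light.
red_scene = np.ones((10, 10, 3)) * np.array([0.6, 0.2, 0.2])

gw = gray_world_gains(red_scene)
wp = white_patch_gains(red_scene)
print("gray-world gains:", np.round(gw, 3))
print("white-patch gains:", np.round(wp, 3))
```

Although the illumination in this example is neutral, both methods reduce red relative to green and blue, treating the substrate's true color as a cast. This is the single-color failure the bullets describe.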

FAQ: In what specific experimental scenarios does AWB typically fail?

The following table summarizes common failure modes and their potential impact on research data.

Table 1: Common AWB Failure Scenarios in Research Environments

| Scenario | AWB Behavior | Impact on Research Data |

|---|---|---|

| Single-Color Dominated Scenes [24] [27] | Interprets a large area of a single color (e.g., a rodent's fur, a testing arena wall) as a color cast and attempts to neutralize it. | Alters the true colorimetric properties of the subject, leading to inaccurate tracking or classification. |

| Scenes with Intentional Atmospheric Color [24] | Removes desirable color casts, such as the warm light from a sunset/sunrise simulation or specialized ambient lighting. | Compromises studies on the impact of light wavelength on behavior or physiology. |

| Mixed or Artificial Lighting [26] | Struggles to correct scenes with multiple light sources of different color temperatures (e.g., fluorescent overhead lights combined with a monitor). | Creates inconsistent color rendition across different experimental setups or days. |

| Low-Light Conditions [27] | Produces unpredictable and often noisy color corrections due to poor signal-to-noise ratio. | Introduces significant error in quantitative color analysis. |

| Skin Tone/Reflectance Analysis [27] | Fails to accurately reproduce skin tones, particularly for darker skin with more complex spectral characteristics and lower reflectivity. | Critically undermines studies reliant on precise human or animal skin color measurement. |

Quantitative Evidence of AWB-Induced Error

The empirical case against using AWB in scientific measurement is strong. Research into point-of-care diagnostic devices, which often use smartphone cameras for colorimetric analysis, has highlighted these issues.

Table 2: Documented Impact of AWB on Measurement Systems

| Measurement Context | Documented Problem | Proposed Solution |

|---|---|---|

| Smartphone-based Colorimetric Assays (e.g., for Zika virus detection) [25] | Automatic white-balancing algorithms are identified as a key factor complicating the relationship between image RGB values and analyte concentration, leading to high limits of detection (LOD) and poor reproducibility. | Use video analysis to synthesize many frames and develop algorithms that select frames with minimal AWB interference, replacing snapshot-based analysis with a more robust multi-frame metric. |

| Portrait & Skin Color Reproduction [27] | Traditional AWB algorithms (Gray-World, Max-RGB) show significant inaccuracies in reproducing skin tones, with errors exacerbated under extreme correlated color temperature (CCT) conditions. | Development of specialized algorithms (e.g., SCR-AWB) that incorporate prior knowledge of skin reflectance data to predict the scene illuminant's spectral power distribution (SPD). |

Troubleshooting & Experimental Protocols

FAQ: What is the most critical first step to mitigate AWB issues?

Shoot in RAW format. If your camera system supports it, recording video or image sequences in RAW format is the single most effective step. RAW files preserve the unprocessed sensor data, allowing you to apply one manually defined white balance to all your footage during post-processing, effectively bypassing the camera's AWB [26].
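
As a minimal sketch of what "a consistent, manually defined white balance" can look like in post-processing, the example below derives per-channel gains from a neutral reference patch and reuses them for every frame. It assumes linear RGB values scaled to 0..1 and represents frames as plain Python lists so that it runs without dependencies; the function names and the sample values are hypothetical, and a real pipeline would typically operate on NumPy arrays decoded from the RAW files.

```python
def gains_from_neutral_patch(patch_rgb):
    """Return (r, g, b) gains that equalize the channels of a neutral patch.

    Gains are normalized to the green channel, a common convention.
    """
    r, g, b = patch_rgb
    if min(r, g, b) <= 0:
        raise ValueError("patch values must be positive linear RGB")
    return (g / r, 1.0, g / b)


def apply_gains(frame, gains, max_value=1.0):
    """Apply the same gains to every pixel of a frame (list of (r, g, b) tuples)."""
    return [
        tuple(min(c * k, max_value) for c, k in zip(pixel, gains))
        for pixel in frame
    ]


# Hypothetical mean linear RGB of the gray patch under warm lab lighting.
gray_patch = (0.50, 0.40, 0.25)
gains = gains_from_neutral_patch(gray_patch)

# The same gains are reused for every frame, so the correction cannot drift.
frames = [
    [(0.50, 0.40, 0.25), (0.20, 0.10, 0.05)],
    [(0.25, 0.20, 0.125), (0.10, 0.30, 0.10)],
]
corrected = [apply_gains(frame, gains) for frame in frames]
```

Because the gains are computed once from the reference and never re-estimated, any remaining frame-to-frame color change reflects the scene rather than the correction.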

Experimental Protocol for System Validation

Before beginning any color-critical experiment, validate your imaging pipeline using the following protocol.

Objective: To determine the level of color variance introduced by AWB under standardized experimental conditions.

Materials: Color calibration chart (e.g., X-Rite ColorChecker), your research subject or arena, fixed mounting for your camera.

Procedure:

- Place the color calibration chart within the field of view, adjacent to your subject or region of interest.

- Set your camera to AWB mode. Record a baseline video sequence.

- Without moving the camera or changing the lighting, introduce a minor, non-color change to the scene (e.g., introduce a neutral-colored object, have a researcher walk through the frame).

- Observe if the AWB recalculates, evidenced by a visible color shift in the video. Use software to measure the RGB values of the ColorChecker patches before and after the shift.

- Repeat the experiment with the camera set to a manual white balance or daylight preset for a stable baseline [24] [26].
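
The before/after comparison in the procedure above can be quantified with a few lines of code. This is a sketch only: it assumes you have already extracted the mean RGB of each chart patch (for example with your image analysis tool of choice), and the patch names and 8-bit values shown are hypothetical.

```python
def patch_drift(before, after):
    """Per-patch Euclidean RGB distance between two sets of measurements.

    `before` and `after` map patch names to mean (R, G, B) values.
    """
    drift = {}
    for name, rgb_before in before.items():
        rgb_after = after[name]
        squared = sum((a - b) ** 2 for a, b in zip(rgb_after, rgb_before))
        drift[name] = squared ** 0.5
    return drift


# Hypothetical 8-bit patch means measured before and after the AWB shift.
before = {"white": (243, 243, 242), "neutral_5": (122, 122, 121)}
after = {"white": (243, 239, 230), "neutral_5": (125, 118, 121)}

drift = patch_drift(before, after)
worst_patch = max(drift, key=drift.get)
print(drift, worst_patch)
```

Running the same measurement on the manual white balance recording gives the baseline against which the AWB drift can be judged.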

Workflow for Managing White Balance in Research

The following diagram outlines a systematic workflow for integrating white balance control into a video-based research methodology.

The Scientist's Toolkit: Research Reagent Solutions

Beyond standard lab equipment, the following "reagents" are essential for managing color fidelity in imaging-based research.

Table 3: Essential Materials for Color-Critical Video Research

| Item | Function | Application in AWB Management |

|---|---|---|

| Physical Color Checker Chart | Provides a standardized set of color and gray reference patches with known reflectance values. | Serves as the ground truth for setting and validating white balance manually in post-processing and for quantifying AWB-induced drift. [25] |

| Standardized Illumination Source | Provides consistent, full-spectrum lighting with a stable and known Correlated Color Temperature (CCT). | Removes the primary variable that AWB tries to correct for, allowing for the use of a single, fixed manual white balance setting. [24] |

| RAW Video/Image Processing Software (e.g., Python libraries, OpenCV, scientific image tools) | Allows for the application of a precise, consistent white balance value to all frames in a sequence based on a reference from the color checker. | The primary method for bypassing in-camera AWB and ensuring consistent color metrics across an entire dataset. [26] |

| Advanced AWB Algorithms (e.g., SCR-AWB [27]) | Software that uses prior knowledge (e.g., skin reflectance spectra) for more accurate illuminant estimation. | For specialized applications like behavioral phenotyping, these can offer more reliable correction than generic AWB when manual control is not feasible. |

| Video Frame Classification Algorithms [25] | Analyzes multiple video frames to select those with the most stable and reliable color properties for analysis. | Mitigates the impact of AWB fluctuations by rejecting frames where automatic corrections have degraded the colorimetric data. |
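
To illustrate the idea of rejecting frames with unstable color, here is a deliberately simple sketch. It is not the classification algorithm from [25]; it assumes a fixed reference patch is visible in every frame and keeps only frames in which that patch stays close to its per-channel median. The function name, the tolerance, and the sample data are all hypothetical.

```python
from statistics import median


def stable_frame_indices(patch_means, tolerance=3.0):
    """Return indices of frames whose reference patch stays near the median.

    patch_means: one mean (R, G, B) of the reference patch per frame.
    tolerance: maximum allowed per-channel deviation, in the data's units.
    """
    medians = [median(channel) for channel in zip(*patch_means)]
    keep = []
    for index, rgb in enumerate(patch_means):
        if all(abs(c - m) <= tolerance for c, m in zip(rgb, medians)):
            keep.append(index)
    return keep


# Hypothetical patch means; frame 2 shows an AWB-induced warm shift.
patch_means = [
    (120, 121, 119),
    (121, 120, 120),
    (135, 118, 104),
    (119, 121, 120),
    (120, 120, 121),
]
stable = stable_frame_indices(patch_means)
print(stable)
```

The median is used instead of the mean so that a few badly shifted frames do not move the reference they are compared against.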

Foundational Concepts FAQ

How does human visual adaptation differ from camera white balance?

The human visual system achieves color constancy through complex biological mechanisms, allowing it to perceive consistent object colors despite changes in lighting conditions. In contrast, cameras rely on computational algorithms to adjust white balance, and these algorithms lack the sophisticated neural adaptation capabilities of biological vision [28] [29].

Key Differences:

- Human Vision: Uses neural adaptation mechanisms that interpret scenes based on context and spatial relationships [30]

- Camera Capture: Applies mathematical corrections based on assumptions about scene content (e.g., "gray world" assumption) [31]

- Temporal Dynamics: Human adaptation occurs continuously through eye movements and neural processing, while camera adjustments are typically frame-by-frame [32]

What are the primary mechanisms of human color constancy?

Research has identified three classical mechanisms that contribute to human color constancy:

- Local Surround: Color perception is influenced by contrast with immediately surrounding colors [30]

- Spatial Mean ("Gray World"): The visual system partially adapts to the average color of the entire scene [30]

- Maximum Flux ("Bright is White"): Perception adapts to the brightest area as a potential white reference [30]

Recent virtual reality experiments show that eliminating the local surround mechanism causes the most significant reduction in color constancy performance, particularly under green illumination [30].

Troubleshooting Guides

Problem: Inconsistent color measurements across varying lighting conditions

Solution: Apply biologically inspired principles to your capture setup

Include Color References:

- Place standardized color charts in the scene during recording

- Use multiple reference points to account for spatial variation

- Ensure references cover the expected color temperature range

Leverage Spatial Processing:

- Apply algorithms that consider spatial relationships in scenes

- Avoid global correction-only approaches

- Implement local adaptation models inspired by retinal processing [28]

Problem: Discrepancies between human perception and automated video analysis

Solution: Account for temporal dynamics in visual processing

Human vision does not process individual "frames" but rather continuous dynamic information from eye movements [32]. Camera systems capture discrete frames, missing crucial temporal information.

Experimental Protocol to Quantify Discrepancy:

Stimulus Design:

- Create test sequences with controlled illumination changes

- Include both sudden and gradual transitions

- Incorporate spatial patterns of varying complexity

Data Collection:

- Record human perceptual judgments using standardized scales

- Capture simultaneous video under identical conditions

- Apply multiple white balance algorithms to video footage

Analysis:

- Calculate perceptual difference metrics between human and automated judgments

- Identify specific conditions where discrepancies are largest

- Correlate with scene characteristics (spatial frequency, contrast, color distribution)

Problem: Uncontrolled variables in behavioral video analysis

Solution: Standardize experimental protocols based on visual adaptation principles

Pre-Recording Checklist:

- Document ambient lighting conditions with spectral measurements

- Establish fixed camera positions with consistent viewing angles

- Implement uniform background environments

- Calibrate all imaging equipment using standardized reference materials

- Validate color reproduction across expected behavioral areas

Critical Considerations Table:

| Factor | Human Vision Adaptation | Camera Limitation | Mitigation Strategy |

|---|---|---|---|

| Lighting Changes | Continuous neural compensation | Requires manual/algorithmic recalibration | Use constant, diffuse illumination sources |

| Spatial Context | Automatically accounts for surroundings | Treats each region independently | Maintain consistent background elements |

| Temporal Adaptation | Gradual adjustment to new conditions | Instantaneous or delayed correction | Allow stabilization period after changes |

| Color Perception | Relies on scene interpretation [30] | Pixel-based calculations | Include known reference colors in field of view |

Advanced Experimental Protocols

Protocol 1: Quantifying Color Constancy Mechanisms

This protocol is adapted from recent virtual reality research on color constancy [30].

Objective: Systematically evaluate the contribution of different cues to color constancy in experimental setups.

Materials:

- Virtual reality environment with controlled rendering

- Standardized test objects with known reflectance properties

- Multiple illuminant conditions (neutral, blue, yellow, red, green)

- Color selection interface for participant responses

Methodology:

Baseline Condition:

- Present scenes with all color constancy cues available

- Record color selection accuracy across illuminant conditions

Cue Elimination:

- Local Surround: Place test objects on uniform-colored surfaces

- Spatial Mean: Modify scene to maintain constant average color

- Maximum Flux: Include constant bright object with neutral chromaticity

Data Analysis:

- Calculate color constancy index for each condition

- Compare performance across cue elimination conditions

- Test the statistical significance of each cue's contribution

Expected Results: Based on recent studies, eliminating local surround cues typically produces the largest reduction in constancy performance, while maximum flux elimination has minimal impact [30].
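
The protocol does not specify which constancy index to use. One widely used formulation, assumed here, compares the observer's selection with two anchor points in a chromaticity plane: the selection expected under perfect constancy and the selection expected with no constancy at all. The sketch below uses hypothetical chromaticity coordinates; the index in [30] may be defined differently.

```python
from math import dist


def constancy_index(observed, perfect, zero):
    """Color constancy index: 1.0 = perfect constancy, 0.0 = no constancy.

    observed: chromaticity the participant selected.
    perfect:  chromaticity expected under perfect constancy.
    zero:     chromaticity expected with no compensation for the illuminant.
    """
    span = dist(zero, perfect)
    if span == 0:
        raise ValueError("anchor points must differ")
    return 1.0 - dist(observed, perfect) / span


# Hypothetical chromaticity coordinates for a single trial.
perfect = (0.20, 0.45)
zero = (0.25, 0.50)
observed = (0.21, 0.46)
print(round(constancy_index(observed, perfect, zero), 3))
```

Computing the index per illuminant and per cue-elimination condition yields the values compared in the analysis step.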

Protocol 2: Temporal Dynamics of Adaptation

Objective: Characterize the time course of adaptation differences between human vision and camera systems.

Research Reagent Solutions

Essential Materials for Visual Adaptation Experiments

| Reagent/Material | Function | Application Notes |

|---|---|---|

| Standardized Color Charts | Provides reference for color calibration | Ensure consistent positioning across experiments |

| Spectrophotometer | Measures precise spectral properties of light sources | Critical for quantifying illumination conditions |

| Adaptive Optics System | Controls for optical aberrations in vision research [33] | Allows isolation of neural adaptation effects |

| Virtual Reality Environment | Creates controlled visual contexts while maintaining immersion [30] | Enables precise manipulation of spatial cues |

| Eye Tracking System | Monitors fixational eye movements and saccades | Essential for studying active vision processes [32] |

| Contrast Sensitivity Test Patterns | Quantifies spatial and temporal vision thresholds [34] | Use for system validation and calibration |

Computational Tools for Consistent Measurement

| Tool/Algorithm | Purpose | Limitations |

|---|---|---|

| Hurlbert Regularization | Biologically-inspired color constancy algorithm [31] | Computationally intensive for real-time applications |

| Gray World Assumption | Simple white balance correction | Fails with non-uniform color distributions |

| Retinex-based Models | Simulates human lightness perception | Requires careful parameter tuning |

| Multispectral Imaging | Captures additional spectral information | Increased hardware requirements and complexity |

Methodological Approaches: Implementing Reliable White Balance Correction in Research Workflows

Establishing a Standardized Protocol for In-Camera White Balance

Frequently Asked Questions (FAQs)

Q1: Why is accurate white balance critical in video behavior analysis research? Inconsistent white balance introduces significant color casts, which can alter the perceived appearance of test subjects and environments. This variability compromises data integrity, as color information is often a key metric in behavioral analysis, and hinders the reproducibility of experiments across different recording sessions or camera systems [35].

Q2: What is the fundamental difference between Auto White Balance (AWB) and a manual preset in a research setting? AWB allows the camera to algorithmically determine color temperature, which can lead to inconsistent results as the scene composition changes [5] [36]. A manual preset, such as a custom white balance set with a gray card, provides a fixed, repeatable color reference point. This ensures consistent color representation from one recording to the next, which is a cornerstone of experimental standardization [37] [38].

Q3: How do I handle situations with multiple light sources of different color temperatures (mixed lighting)? Mixed lighting is a common challenge. The most reliable method is to eliminate the variable light sources where possible (e.g., closing blinds, turning off ambient room lights). If elimination is not feasible, you must dominate the scene with a single, consistent light source calibrated to a specific color temperature (e.g., 5500K) and set your custom white balance to that source. The camera can only compensate for one dominant illuminant [5] [38].

Q4: Can I correct white balance in post-processing, and is this recommended for research? While you can adjust white balance when working with RAW video formats, correcting heavily in post-processing can degrade image quality and introduce subjective judgment [37]. The gold standard for research is to "get it right in-camera." This practice provides a pristine original recording, saves analysis time, and removes post-processing as a variable, thereby enhancing the validity of your data [37] [36].

Q5: Our lab uses multiple camera models. How can we ensure consistent color across all devices? Different cameras have varying sensor spectral sensitivities and internal processing, which can lead to color rendition differences [39]. To mitigate this, use the same manual protocol—a calibrated gray card and consistent lighting—on all cameras. For high-precision applications, you may need to establish a camera-specific color calibration profile, though a rigorous custom white balance procedure is often sufficient for standardizing across devices [39].

Troubleshooting Guides

Problem: Consistent Yellow/Orange Color Cast

- Description: The entire video footage has an unnatural warm, yellowish hue.

- Probable Cause: Recording under tungsten (incandescent) lighting (~2700-3200K) with the camera set to a daylight preset (~5500K), or with AWB that has incorrectly estimated the scene illuminant [5] [37].

- Solution:

- Manually set the white balance to the Tungsten/Light Bulb preset.

- For higher accuracy, perform a custom white balance using a gray card under the same tungsten lights [36].

Problem: Consistent Blue Color Cast

- Description: The video appears cool and bluish.

- Probable Cause: Shooting in shade or under heavy cloud cover (~7000-10000K) with the camera set to a daylight or AWB preset [5] [37].

- Solution:

- Manually set the white balance to the Shade or Cloudy preset.

- Perform a custom white balance in the actual shaded environment where you are recording [37].

Problem: Unpredictable Color Shifts Between Shots

- Description: Color temperature fluctuates even under what appears to be stable lighting.

- Probable Cause: Relying on Auto White Balance (AWB). AWB continuously re-evaluates the entire scene, and changes in subject composition or movement can confuse it [35] [36].

- Solution:

- Abandon AWB for controlled experiments.

- Set a manual custom white balance at the beginning of each recording session and after any change in lighting. This locks the color settings [38].

Problem: Partial Color Casts (Mixed Lighting)

- Description: Only parts of the frame have a color cast, such as a yellow area under a lamp and a blue area near a window.

- Probable Cause: The scene is illuminated by multiple light sources with different color temperatures, and the camera is set to a single white balance value [5] [38].

- Solution:

- Eliminate variable sources: Turn off ambient indoor lights when using your calibrated studio lights, or black out windows.

- Dominant source balancing: Set your custom white balance for the light source that illuminates your primary subject or the majority of the scene.

Standardized Experimental Protocols

Protocol 1: Custom White Balance Using a Gray Card

This is the recommended method for establishing a repeatable color baseline in a controlled environment [38].

Research Reagent Solutions

| Item | Function in Protocol |

|---|---|

| Standardized Gray Card (e.g., WhiBal) | Provides a spectrally neutral, 18% reflectance reference surface for the camera to measure true "white" or "gray" under the specific lab lighting [38]. |

| Color Checker Card | Used for higher-level validation and to create custom camera profiles for extreme color accuracy across the entire spectrum, beyond neutral balance. |

Methodology:

- Illuminate the scene with the primary light source that will be used during the experiment. Ensure lighting is consistent and even.

- Position the gray card so it fills the center of the camera's frame under the experimental lights. Avoid shadows or glare on the card.

- Set camera focus to Manual (MF) to prevent the lens from hunting for focus on the flat card.

- Capture an image of the gray card. The frame should be as evenly filled with the card as possible.

- Access camera menu and select the "Custom White Balance" or "Preset Manual" option.

- Select the reference image you just captured of the gray card. The camera will now use this image to calculate the correct color balance.

- Set white balance mode to "Custom". The camera is now calibrated to your lab's lighting conditions.

Protocol 2: Manual Kelvin Temperature Selection

Use this protocol when the color temperature of your light source is known and stable, such as with calibrated scientific lighting.

Methodology:

- Identify the color temperature of your primary light source from its specification sheet.

- Access camera menu and set the white balance mode to "K" (Kelvin).

- Dial the Kelvin value to match the known temperature of your lights (e.g., set 5500K for daylight-balanced LEDs).

- Validate and refine by recording a short clip of a gray or color checker card under the lights and analyzing the footage on a calibrated monitor. Make minor Kelvin adjustments if necessary.

Quantitative Data Reference

Table 1: Common Lighting Conditions and Corresponding Color Temperatures

| Lighting Condition | Typical Color Temperature Range (Kelvin) | Recommended WB Preset |

|---|---|---|

| Candlelight | 1900K [5] | |

| Incandescent/Tungsten | 2700-3200K [5] [37] | Tungsten / Light Bulb |

| Sunrise/Golden Hour | 2800-3000K [5] | |

| Halogen Lamps | 3000K [5] | |

| Fluorescent Lights | 4000-5000K [5] [36] | Fluorescent |

| Daylight (Mid-day) | 5000-5500K [5] [37] | Daylight / Sunny |

| Camera Flash | 5500K [5] [36] | Flash |

| Overcast/Cloudy Sky | 6500-7500K [5] [37] | Cloudy |

| Shade | 8000K+ [5] [37] | Shade |

Table 2: Troubleshooting Common Color Cast Issues

| Observed Problem | Probable Cause | Immediate Solution | Long-Term Standardized Solution |

|---|---|---|---|

| Yellow/Orange Cast | Tungsten lighting with incorrect WB | Switch to Tungsten preset | Implement Protocol 1 (Custom WB) |

| Blue Cast | Shade/Overcast with incorrect WB | Switch to Shade/Cloudy preset | Implement Protocol 1 (Custom WB) |

| Green/Magenta Tint | Fluorescent/LED lighting | Adjust "Tint" in post (if shooting RAW) | Use lights with high CRI and Protocol 1 |

| Inconsistent Colors Between Shots | Auto White Balance (AWB) fluctuations | Switch to any manual preset | Disable AWB and use Protocol 1 or 2 |

| Mixed Color Casts in Frame | Multiple light sources | Eliminate or filter conflicting sources | Control all lighting; use a single, dominant calibrated source |

In video behavior analysis research, consistent and accurate color reproduction is critical for reliable data extraction. Variations in white balance and lighting conditions can introduce significant artifacts, compromising the validity of quantitative results. This guide provides detailed protocols for using gray cards and ColorChecker charts to calibrate your imaging systems, ensuring color fidelity throughout your experiments.

Understanding the Tools and Their Role in Research

What are Gray Cards and ColorChecker Charts?

- Gray Cards: Physically calibrated cards that reflect 18% gray across the visible spectrum, providing a neutral reference point for your camera's exposure meter and white balance system [40].

- ColorChecker Charts: Standardized targets containing an array of 24 color patches, including primary/secondary colors and a neutral gray scale. The known, published color values (in CIE Lab and other color spaces) allow for precise color correction and profiling of entire imaging systems [41].

Why are they essential for video behavior analysis?

These tools move color management from a subjective estimation to an objective, measurable process. They control for variables like different camera sensor responses and changing ambient light, ensuring that observed color changes in video (e.g., in fur, skin, or reagents) are genuine and not artifacts of the imaging process [42].

Experimental Protocols for System Calibration

Protocol 1: Basic White Balance Calibration Using a Gray Card

This protocol establishes a neutral color baseline at the beginning of a recording session.

Materials:

- Calibrated 18% gray card

- Imaging setup (camera, lens, stable lighting)

Procedure:

- Setup: Position the gray card within the scene where your subject will be, ensuring it is evenly lit by the primary light source.

- Framing: Fill the camera's frame as much as possible with the gray card and capture a reference image.

- Manual White Balance: Use your camera's "Custom White Balance" or "Preset Manual" function. Navigate to the setting, select the image of the gray card you have taken as the reference, and confirm.

- Verification: The camera will now use this reference to neutralize color casts. Capture a test shot of the gray card to confirm it appears neutral without any color tint [40].
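
The verification step can be made objective instead of relying on visual inspection. The sketch below assumes you have measured the mean RGB of the gray card in the test shot and checks how far the red and blue channels deviate from green; the 2% tolerance and the function names are illustrative assumptions, not a published standard.

```python
def neutrality_error(gray_rgb):
    """Largest relative deviation of R and B from G for a nominally neutral patch."""
    r, g, b = gray_rgb
    if g <= 0:
        raise ValueError("green channel must be positive")
    return max(abs(r / g - 1.0), abs(b / g - 1.0))


def is_neutral(gray_rgb, tolerance=0.02):
    """True if the patch is neutral within the given relative tolerance."""
    return neutrality_error(gray_rgb) <= tolerance


# Hypothetical gray card means from two test shots.
print(is_neutral((118, 119, 118)))  # well balanced
print(is_neutral((130, 119, 105)))  # warm cast remains
```

Logging the neutrality error for each session also gives a simple record of calibration quality over time.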

Protocol 2: Comprehensive Color Correction Using a ColorChecker Chart

This advanced protocol creates a color profile for your specific camera and lighting setup, enabling precise color reproduction in post-processing.

Materials:

- X-Rite ColorChecker Classic or Passport chart

- Imaging setup and video recording system

- Software capable of applying ColorChecker-derived profiles (e.g., Adobe Premiere Pro, DaVinci Resolve)

Procedure:

- Initial Capture: At the start of your recording session under final lighting, place the ColorChecker chart within the field of view. Record a few seconds of footage where the chart is clearly visible and evenly lit.

- Reference Data: Obtain the official color specification file for your specific ColorChecker chart model, which contains the reference CIE Lab values for all 24 patches [41].

- Software Correction:

- In your video editing software, apply a color correction effect (e.g., Lumetri Color in Premiere Pro).

- Use the software's vectorscope and the "Hue vs Hue" or similar tool to manually neutralize the white, gray, and black patches on the chart. This establishes a neutral baseline for tonal values [42].

- Use the eyedropper tool on the corresponding color patches to align the primary and secondary colors in your video with their known reference values. The software will generate a correction profile.

- Profile Application: Save this correction as a preset and apply it to all other footage from the same session and camera to ensure consistent color grading [42].
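
After applying the correction, the result can be checked numerically against the chart's reference values. The sketch below uses the CIE76 color difference (Euclidean distance in CIE Lab) and the white and black reference values quoted in this guide's materials table. It assumes the measured patches have already been converted to Lab under the same reference illuminant as the chart data; the measured values and the acceptance threshold of 2.0 are hypothetical.

```python
from math import dist

# Reference Lab values for two ColorChecker patches, as quoted in this guide [41].
REFERENCE_LAB = {
    "white": (95.19, -1.03, 2.93),
    "black": (20.64, 0.07, -0.46),
}


def delta_e_cie76(lab_a, lab_b):
    """CIE76 color difference: Euclidean distance between two Lab colors."""
    return dist(lab_a, lab_b)


def validate_patches(measured_lab, reference_lab=REFERENCE_LAB, threshold=2.0):
    """Return {patch: (delta_e, passed)} for every reference patch."""
    report = {}
    for name, reference in reference_lab.items():
        delta_e = delta_e_cie76(measured_lab[name], reference)
        report[name] = (delta_e, delta_e <= threshold)
    return report


# Hypothetical measurements taken from corrected footage.
measured = {"white": (94.19, -1.03, 2.93), "black": (23.64, 4.07, -0.46)}
report = validate_patches(measured)
print(report)
```

CIE76 is the simplest difference formula; newer formulas such as CIEDE2000 track perception more closely and can be substituted without changing the structure of the check.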

Troubleshooting Common Calibration Issues

Problem: Corrected footage still has a persistent color cast.

- Solution: Verify your lighting sources. Mixed lighting (e.g., daylight and tungsten) can create conflicting color temperatures. Use controlled, consistent artificial lighting throughout the experiment [40].

Problem: Colors look different after calibration when using multiple cameras of the same model.

- Solution: Perform the ColorChecker calibration protocol for each camera individually. Slight manufacturing variations in sensors mean each requires its own profile for perfect matching [42].

Problem: Automated white balance function on the camera keeps changing during a recording.

- Solution: Always disable "Auto White Balance" after setting a custom white balance with a gray card. Use fully manual camera settings to lock in the white balance and prevent the camera from adjusting mid-recording [40].

Problem: The gray card reading results in overexposed or underexposed footage.

- Solution: Ensure you use the gray card for white balance only. Set your exposure (aperture, shutter speed, ISO) separately using your camera's light meter or a dedicated light meter for the scene.

Research Reagents and Essential Materials

| Tool Name | Function in Experiment | Key Specifications |

|---|---|---|

| 18% Gray Card | Provides a neutral reference for setting a custom white balance and verifying exposure [40]. | Reflects 18% of incident light across the visible spectrum. |

| ColorChecker Chart | Enables comprehensive color correction and profiling of the entire imaging system by providing known color reference points [41]. | 24 color patches with published CIE Lab values (e.g., White: Lab(95.19, -1.03, 2.93), Black: Lab(20.64, 0.07, -0.46)) [41]. |

| Controlled Lighting | Eliminates color cast variations from ambient light, ensuring consistent illumination across all recordings. | D50 or D65 standard illuminants are often preferred for color-critical work. |

| Color Profiling Software | Applies the correction profile generated from the ColorChecker reference to all experimental footage. | Examples: DaVinci Resolve, Adobe Premiere Pro (Lumetri Color). |

Visualization and Color Contrast Standards

Adhering to accessibility contrast guidelines is crucial for creating clear and readable data visualizations, charts, and on-screen information during analysis.

Minimum Contrast Ratios for Graphical Objects and Text:

| Element Type | WCAG Level AA | WCAG Level AAA |

|---|---|---|

| Normal Body Text | 4.5:1 | 7:1 |

| Large-Scale Text (≥18pt or ≥14pt bold) | 3:1 | 4.5:1 |

| User Interface Components & Graphical Objects (icons, charts) | 3:1 | Not Defined [43] |
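
The ratios in the table can be computed directly from sRGB color values. The sketch below follows the relative luminance and contrast ratio definitions in WCAG 2.x for 8-bit sRGB colors; the function names are my own, and the example colors are arbitrary.

```python
def relative_luminance(rgb):
    """WCAG 2.x relative luminance of an 8-bit sRGB color."""
    def linearize(channel_8bit):
        c = channel_8bit / 255.0
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color_a, color_b):
    """WCAG contrast ratio between two colors, always >= 1."""
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


# Mid-gray text on a white background, checked against the AA body-text level.
ratio = contrast_ratio((118, 118, 118), (255, 255, 255))
print(round(ratio, 2), ratio >= 4.5)
```

The same function can be used to check plot line colors against chart backgrounds using the 3:1 threshold for graphical objects.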

Frequently Asked Questions (FAQs)

Q1: Can I use a plain gray piece of paper instead of a calibrated gray card? No. A calibrated gray card is manufactured to a precise 18% reflectance. The color and reflectance of ordinary paper are unknown and can contain optical brighteners, leading to inaccurate white balance and exposure [40].

Q2: How often do I need to recalibrate during a long recording session? Recalibrate whenever lighting conditions change. For stable, controlled lighting, a single calibration at the session start is sufficient. If lighting intensity or color temperature fluctuates (e.g., natural light from a window), recalibrate frequently [40].

Q3: My research requires comparing videos from different camera models. Is this possible? Yes, but it requires careful calibration. You must create a unique ColorChecker profile for each camera model and lighting setup. This corrects for each sensor's different color response, allowing for standardized, comparable color data across devices [42].

Q4: Why is manual color calibration preferred over the camera's automatic white balance (AWB) for scientific work? AWB algorithms are designed for pleasing visuals, not scientific accuracy. They can change between frames and are easily biased by dominant colors in the scene. Manual calibration using a reference provides a consistent, objective, and repeatable standard [40].

Frequently Asked Questions (FAQs)

Q1: In a video analysis pipeline, my AWB correction creates a noticeable color jump between consecutive frames instead of a smooth transition. How can I mitigate this?

This is a common issue when applying still-image AWB algorithms to video on a frame-by-frame basis. To ensure temporal stability:

- Frame Averaging: Implement a moving average filter for the estimated illuminants (e.g., the RGB gain values) across a short window of frames instead of using the estimate from a single frame [44].

- Constrained Estimation: Limit the maximum allowable change for the illuminant estimates between two consecutive frames to prevent sudden, large corrections.

- Protocol Adjustment: When using the White Patch Retinex algorithm, avoid using the brightest pixels from the entire scene, as a fleeting, bright specular highlight can cause large illuminant fluctuations. Instead, exclude the top 1% of brightest pixels and use a scene mask to exclude unreliable regions [45].
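
The first two strategies above, frame averaging and constrained estimation, can be combined in a few lines. This is a minimal sketch under stated assumptions: the per-frame gains are presumed to come from whichever illuminant estimator you already use, and the window length and step limit are hypothetical parameters that need tuning for your frame rate and lighting.

```python
def smooth_gains(raw_gains, window=5, max_step=0.02):
    """Stabilize per-frame white-balance gains.

    raw_gains: list of (r_gain, g_gain, b_gain), one tuple per frame.
    window:    number of most recent frames in the moving average.
    max_step:  largest allowed change per channel between output frames.
    """
    smoothed = []
    for i in range(len(raw_gains)):
        recent = raw_gains[max(0, i - window + 1): i + 1]
        target = [sum(channel) / len(recent) for channel in zip(*recent)]
        if smoothed:
            previous = smoothed[-1]
            # Clamp the change relative to the previous output frame.
            target = [
                p + max(-max_step, min(max_step, t - p))
                for p, t in zip(previous, target)
            ]
        smoothed.append(tuple(target))
    return smoothed


# Hypothetical estimates; frame 3 is an outlier caused by a transient highlight.
raw = [(1.5, 1.0, 2.0)] * 3 + [(2.5, 1.0, 1.0)] + [(1.5, 1.0, 2.0)] * 3
stable_gains = smooth_gains(raw, window=3, max_step=0.05)
```

The moving average damps single-frame outliers, while the step limit guarantees an upper bound on the visible color change between consecutive frames.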

Q2: My scene contains a large, brightly colored object (e.g., a green wall), which causes the Gray World algorithm to fail. What are my options?

The Gray World algorithm assumes a scene average of neutral gray, so a dominant color violates its core premise. Solutions include:

- Spatial Masking: If your experimental setup allows, create a binary mask to exclude the dominant colored object from the illuminant estimation process [45].

- Percentile Trimming: Use the refined Gray World method that excludes the top and bottom 1% of pixel values from the calculation. This makes the estimate more robust to large areas of extreme colors [45].

- Algorithm Switching: Employ the White Patch Retinex method, which is less affected by large colored areas if a neutral bright patch is available. Alternatively, use the PCA-based method (Cheng's method), which is designed to be more robust to such scene content variations [45] [46].
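
Spatial masking and percentile trimming can both be added to a basic Gray World estimate. The sketch below is one possible reading of those two options, not the reference implementation from [45]: it assumes linear RGB pixels in a flat list, trims each channel independently, and uses a hypothetical boolean mask in which `True` marks pixels to include.

```python
def trimmed_mean(values, trim_fraction=0.01):
    """Mean after discarding the lowest and highest `trim_fraction` of values."""
    ordered = sorted(values)
    k = int(len(ordered) * trim_fraction)
    kept = ordered[k: len(ordered) - k] if k else ordered
    return sum(kept) / len(kept)


def gray_world_gains(pixels, trim_fraction=0.01, mask=None):
    """Estimate (r, g, b) gains that make the trimmed scene average neutral.

    pixels: list of linear (r, g, b) tuples.
    mask:   optional list of booleans; True means the pixel is used.
    """
    if mask is not None:
        pixels = [p for p, use in zip(pixels, mask) if use]
    means = [trimmed_mean(channel, trim_fraction) for channel in zip(*pixels)]
    gray = sum(means) / 3.0
    return tuple(gray / m for m in means)


# Hypothetical scene: 60 arena pixels under a color cast plus a 40-pixel green wall.
pixels = [(0.4, 0.5, 0.25)] * 60 + [(0.1, 0.8, 0.1)] * 40
mask = [True] * 60 + [False] * 40

gains_masked = gray_world_gains(pixels, mask=mask)
gains_unmasked = gray_world_gains(pixels)
print(gains_masked, gains_unmasked)
```

In this toy scene the unmasked estimate suppresses green far more strongly than the masked one, which is the failure mode the question describes.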

Q3: For behavioral research, how critical is it to account for multiple illuminants in a single scene (mixed lighting), and which AWB method should I use?

Mixed lighting is a significant challenge. Traditional global AWB methods (Gray World, White Patch) assume a single, uniform illuminant and will struggle, potentially altering the appearance of the scene in a way that confounds analysis.

- Global vs. Local Correction: Standard methods provide a single correction for the entire frame, which is insufficient for spatially varying lighting. The research field is moving towards local AWB techniques to address this [47].

- Advanced Methods: For complex environments, consider modern, learning-based methods that use deep feature statistics and feature distribution matching. These are specifically designed to handle multiple illuminants and complex lighting by modeling the illumination as a style factor that can vary across the image [3].

- Recommendation: If using a traditional algorithm, Cheng's PCA-based method has been shown to perform better in the presence of multiple illuminants compared to simpler statistics-based methods [46].

Troubleshooting Guides

Problem: Inconsistent Color Rendition Across Different Cameras

Description: The same AWB algorithm produces different color results when applied to video footage from different camera models, hindering the reproducibility of your research.

Solution Steps

- Radiometric Self-Calibration: Implement a post-processing calibration step. Research indicates that a camera's response function (CRF) can be time-variant and change with scene content. Using a data-driven technique that models a mixture of responses can counteract nonlinear, exposure-dependent intensity perturbations and white-balance changes caused by proprietary camera firmware [44].

- Standardized Input: If possible, always work with raw, linear RGB image data. Avoid using images that have already been processed by the camera's built-in JPEG engine, as this applies non-linear corrections (like gamma encoding) and an unknown white balance that is difficult to reverse [45].

- Camera-Specific Profiling: For critical applications, create a camera profile by capturing a reference ColorChecker chart under your lab's lighting conditions. Use the ground truth illuminant measured from the chart to normalize the camera's output [45].
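
Once an illuminant has been measured from the chart, normalizing the camera output can be sketched as a per-channel (diagonal) gain applied to linear RGB data. This is a minimal illustration that assumes a diagonal correction is sufficient; the function names are hypothetical, and the illuminant vector is the example value quoted in Protocol 1.

```python
def white_balance_gains(illuminant):
    """Per-channel gains that map the measured illuminant to neutral.

    Gains are normalised so that the green channel is left unchanged.
    """
    r, g, b = illuminant
    if min(r, g, b) <= 0:
        raise ValueError("illuminant channels must be positive")
    return (g / r, 1.0, g / b)


def apply_gains(pixels, gains):
    """Apply the diagonal correction to a list of linear (r, g, b) pixels."""
    return [tuple(v * k for v, k in zip(p, gains)) for p in pixels]


# Example illuminant vector from Protocol 1 (linear sensor units).
illuminant = (4.54e3, 9.32e3, 6.18e3)
gains = white_balance_gains(illuminant)

# A pixel with exactly the illuminant colour should come out neutral.
corrected = apply_gains([illuminant], gains)[0]
```

Storing the gains per camera and per lighting setup makes the correction repeatable across sessions and across camera models.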

Problem: Poor AWB Performance in Low-Light Conditions

Description: AWB algorithms become unstable and produce noisy, color-shifted results in low-light video sequences.

Solution Steps

- Channel-Specific Gain Analysis: Understand that in low light, the blue channel typically has the lowest signal-to-noise ratio. The large gains applied to the blue channel by AWB, especially under incandescent lighting, will amplify this noise [48].

- Algorithm Selection: Prefer the Gray World algorithm with percentile trimming. Because it averages over the entire scene, it suppresses noise better than methods such as White Patch, which rely on a small number of extreme pixels that are often noise in low-light conditions [45].

- Post-Processing Denoising: Apply a gentle color-aware denoising filter after the AWB correction step to reduce the amplified chrominance noise without blurring fine details important for behavior analysis.
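
The channel-gain point above can be made concrete with simple arithmetic. The sketch below assumes a gray patch recorded under warm light, with the same absolute noise level in every channel before correction; all numbers and the function name are hypothetical.

```python
def post_awb_noise(channel_means, channel_noise_std):
    """Return the AWB gains (green-normalised) and the noise after applying them.

    Multiplying a channel by a gain scales its noise standard deviation
    by the same factor.
    """
    r, g, b = channel_means
    gains = (g / r, 1.0, g / b)
    amplified = tuple(s * k for s, k in zip(channel_noise_std, gains))
    return gains, amplified


# Hypothetical gray patch under incandescent light: strong red, weak blue.
means = (0.30, 0.20, 0.08)
noise = (0.01, 0.01, 0.01)   # assumed equal absolute noise per channel

gains, noise_after = post_awb_noise(means, noise)
```

With these assumed values the blue channel receives the largest gain, so its noise ends up larger than that of the other channels, which is why chrominance noise becomes visible after correction.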

Experimental Protocols & Data

Protocol 1: Ground Truth Illuminant Measurement Using a ColorChecker Chart

This protocol is essential for quantitatively evaluating the performance of different AWB algorithms in a controlled environment [45].

- Setup: Place a ColorChecker chart within the scene to be recorded.

- Data Capture: Capture a raw, linear RGB image or video frame. Demosaic the data if necessary, but avoid any gamma correction or pre-existing white balance.

- Chart Detection: Use a function (e.g., colorChecker in MATLAB) to automatically detect the chart within the image. Manually confirm the detection is correct.

- Illuminant Calculation: Use a measurement function (e.g., measureIlluminant) on the linear RGB data from the detected chart to compute the ground truth illuminant vector, for example, [4.54, 9.32, 6.18] × 10³ [45].

- Create a Mask: Generate a binary mask to exclude the ColorChecker chart from subsequent algorithmic analysis, ensuring the algorithm is evaluated only on the scene data.
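
The last two steps of this protocol can be sketched without any toolbox. The code below is a simplified stand-in, not the MATLAB implementation: it assumes the mean linear RGB of each neutral (gray) chart patch has already been extracted, averages those means to get an illuminant estimate, and builds a rectangular exclusion mask from a chart bounding box. All names and values are hypothetical.

```python
def illuminant_from_neutral_patches(patch_means):
    """Average the mean linear RGB of the neutral chart patches."""
    n = len(patch_means)
    if n == 0:
        raise ValueError("no neutral patches supplied")
    return tuple(sum(p[c] for p in patch_means) / n for c in range(3))


def chart_exclusion_mask(height, width, chart_box):
    """Return rows of bools: True for scene pixels, False inside the chart.

    chart_box: (top, left, bottom, right), with bottom and right exclusive.
    """
    top, left, bottom, right = chart_box
    return [[not (top <= y < bottom and left <= x < right)
             for x in range(width)]
            for y in range(height)]


# Hypothetical gray patches: neutral reflectances scaled by one illuminant.
light = (0.454, 0.932, 0.618)
reflectances = [0.90, 0.59, 0.36, 0.20, 0.09, 0.03]
patches = [tuple(rho * c for c in light) for rho in reflectances]

estimate = illuminant_from_neutral_patches(patches)
mask = chart_exclusion_mask(height=4, width=6, chart_box=(1, 2, 3, 5))
```

Only the direction of the estimated vector matters for white balancing, so its overall scale can be ignored when comparing it with algorithm outputs.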

Protocol 2: Implementing and Comparing Classic AWB Algorithms

1. White Patch Retinex

- Principle: Assumes the brightest pixels in the scene represent the color of the illuminant [48].

- Method:

- For a given image, specify a percentile of the brightest pixels to exclude (e.g., top 1%) to avoid overexposed specular highlights [45].

- Optionally, apply a scene mask to ignore non-representative regions.

- The algorithm (illumwhite) returns the estimated illuminant based on the maximum values of the remaining pixels.
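
The White Patch steps above can be sketched in plain Python. This is an illustrative approximation, not the illumwhite implementation: in particular, excluding the top percentile separately in each channel is an assumption of this sketch, and the function name and toy scene are hypothetical.

```python
def white_patch(pixels, top_percentile=1.0):
    """Per-channel maximum after discarding the brightest values.

    pixels:         list of (r, g, b) linear values.
    top_percentile: percentage of the brightest values to drop per channel.
    """
    estimate = []
    for c in range(3):
        values = sorted(p[c] for p in pixels)
        n_drop = int(len(values) * top_percentile / 100.0)
        kept = values[:len(values) - n_drop] if n_drop else values
        estimate.append(kept[-1])
    return tuple(estimate)


# Toy scene under warm light: dark surfaces, a white patch, and two
# saturated specular highlights that clip to (1, 1, 1).
pixels = ([(0.2, 0.15, 0.1)] * 150
          + [(0.8, 0.6, 0.4)] * 48
          + [(1.0, 1.0, 1.0)] * 2)

with_highlights = white_patch(pixels, top_percentile=0.0)
without_highlights = white_patch(pixels, top_percentile=1.0)
```

In this toy scene the clipped highlights make the untrimmed estimate look neutral, while trimming recovers the warm color of the white patch.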

2. Gray World

- Principle: Assumes that the average reflectance of a scene is achromatic (gray) [45] [48].

- Method:

- Specify percentiles of the darkest and brightest pixels to exclude (e.g., 1% for each) to improve robustness against shadows and saturated pixels [45].

- The algorithm (illumgray) calculates the scene illuminant as the average of all remaining pixel values in each RGB channel.
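
A trimmed Gray World estimate can be sketched in the same dependency-free style. As with the previous method, this is an approximation for illustration and not the illumgray implementation; trimming each channel independently is an assumption, and the names and data are hypothetical.

```python
def gray_world(pixels, low_pct=1.0, high_pct=1.0):
    """Per-channel mean after trimming the darkest and brightest values.

    pixels:   list of (r, g, b) linear values.
    low_pct:  percentage of the darkest values to drop per channel.
    high_pct: percentage of the brightest values to drop per channel.
    """
    estimate = []
    for c in range(3):
        values = sorted(p[c] for p in pixels)
        n = len(values)
        start = int(n * low_pct / 100.0)
        stop = n - int(n * high_pct / 100.0)
        kept = values[start:stop]
        if not kept:
            raise ValueError("trimming removed every pixel")
        estimate.append(sum(kept) / len(kept))
    return tuple(estimate)


# Toy scene: uniform surface plus one crushed-black and one clipped pixel.
pixels = [(0.3, 0.4, 0.2)] * 98 + [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]

untrimmed = gray_world(pixels, low_pct=0.0, high_pct=0.0)
trimmed = gray_world(pixels, low_pct=1.0, high_pct=1.0)
```

Trimming removes the two outliers here, so the trimmed estimate matches the surface color, while the untrimmed mean is shifted slightly toward the clipped pixel.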

3. Cheng's Principal Component Analysis (PCA) Method

- Principle: Operates on the assumption that the illuminant vector can be found by analyzing the principal component of a subset of bright and dark colors in the scene [46].

- Method:

- The algorithm involves selecting pixels based on their brightness and darkness and then performing PCA on the color distribution of these selected pixels.

- The first principal component of this specific color distribution is used to estimate the illuminant vector.
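
The two steps above can be sketched as follows. This is a simplified reading of the method, not a reference implementation: pixels are ranked by their projection onto the mean color direction, the darkest and brightest fractions are kept, and the dominant direction of the selected colors is found by power iteration on their uncentered second-moment matrix. The selection fraction `pct`, the uncentered matrix, and all names are assumptions of this sketch.

```python
import math


def cheng_pca(pixels, pct=3.5, iterations=100):
    """Estimate the illuminant direction (unit vector) from bright/dark pixels."""
    n = len(pixels)
    mean = [sum(p[c] for p in pixels) / n for c in range(3)]
    norm = math.sqrt(sum(m * m for m in mean))
    if norm == 0:
        raise ValueError("image is entirely black")
    direction = [m / norm for m in mean]

    # Rank pixels by their projection onto the mean colour direction.
    ranked = sorted(pixels, key=lambda p: sum(v * d for v, d in zip(p, direction)))
    k = max(1, min(int(n * pct / 100.0), n // 2))
    selected = ranked[:k] + ranked[-k:]

    # Uncentred 3x3 second-moment matrix of the selected colours.
    m = [[sum(p[i] * p[j] for p in selected) for j in range(3)] for i in range(3)]

    # Power iteration converges to the first principal direction.
    v = direction[:]
    for _ in range(iterations):
        w = [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]
        w_norm = math.sqrt(sum(x * x for x in w))
        v = [x / w_norm for x in w]
    return tuple(v)


# Toy scene: neutral dark and bright surfaces lit by `light`, plus
# mid-brightness coloured pixels that should not affect the estimate.
light = (0.5, 0.8, 0.6)
scene = ([tuple(0.05 * c for c in light)] * 10
         + [tuple(0.95 * c for c in light)] * 10
         + [(0.30, 0.32, 0.30)] * 80)
estimate = cheng_pca(scene)
```

In this toy scene the selected pixels are all scaled copies of `light`, so the returned unit vector points along the illuminant; real footage will only approximate this.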

Quantitative Comparison of AWB Algorithms

Table 1: Angular Error of Different AWB Configurations

Angular error (in degrees) is the angle between the estimated illuminant vector and the ground truth illuminant vector. A lower error is better [45].

| Algorithm | Configuration | Angular Error (degrees) |
|---|---|---|
| White Patch Retinex | Percentile=0% (use all pixels) | 16.54 |
| White Patch Retinex | Percentile=1% (exclude top 1%) | 5.03 |
| Gray World | Percentiles=[0% 0%] (use all pixels) | 5.04 |
| Gray World | Percentiles=[1% 1%] (exclude extremes) | 5.11 |
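
The angular error metric used in this comparison is the angle between two RGB vectors, which makes it independent of overall brightness. A minimal sketch follows; the function name is hypothetical, the ground truth is the example vector from Protocol 1, and the estimate is an invented value used only to show the call.

```python
import math


def angular_error_deg(estimate, ground_truth):
    """Angle in degrees between an estimated and a reference illuminant."""
    dot = sum(e * g for e, g in zip(estimate, ground_truth))
    norm_e = math.sqrt(sum(e * e for e in estimate))
    norm_g = math.sqrt(sum(g * g for g in ground_truth))
    if norm_e == 0 or norm_g == 0:
        raise ValueError("illuminant vectors must be non-zero")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_e * norm_g)))
    return math.degrees(math.acos(cosine))


ground_truth = (4.54e3, 9.32e3, 6.18e3)   # example vector from Protocol 1
estimate = (5.0e3, 9.0e3, 6.0e3)          # hypothetical algorithm output
error = angular_error_deg(estimate, ground_truth)
```

Because only the direction matters, scaling either vector by a constant leaves the error unchanged.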

Table 2: Characteristics of Classic AWB Algorithms

| Algorithm | Underlying Principle | Strengths | Weaknesses |
|---|---|---|---|
| White Patch Retinex | The brightest patch reflects the illuminant color [48]. | Simple, intuitive, works well with neutral highlights. | Fails with overexposed pixels or no white patches; sensitive to noise. |
| Gray World | The average scene reflectance is gray [45]. | Simple, computationally efficient, good for diverse scenes. | Fails with dominant color casts or monochromatic scenes. |
| Cheng's PCA | The illuminant is the first principal component of bright/dark colors [46]. | More robust to scene content variations than Gray World or White Patch. | More computationally complex than simpler statistical methods. |

The Researcher's Toolkit

Table 3: Essential Research Reagents and Materials

| Item | Function in AWB Research |
|---|---|
| ColorChecker Chart | Provides 24 patches with known spectral reflectances. Used to calculate the ground truth scene illuminant for quantitative algorithm evaluation [45]. |
| Raw Image Dataset (e.g., Cube+) | Contains unprocessed, linear RGB images essential for fair and accurate testing of AWB algorithms without the unknown corrections applied by in-camera processing [45] [3]. |
| Synthetic Mixed-Illuminant Dataset | A specialized dataset containing scenes illuminated by multiple light sources. Critical for developing and testing the robustness of AWB algorithms against complex, real-world lighting challenges [3]. |
| Multi-Illuminant Multispectral-Imaging (MIMI) Dataset | Includes both RGB and multispectral images from nearly 800 scenes. This resource is ideal for developing next-generation, learning-based white balancing algorithms for multi-illuminant environments [47]. |

Post-Processing Correction in Video Analysis Software

Core Concepts and Importance

What is White Balance and why is it critical for video analysis research?

White balance is the process of adjusting colors in video to ensure that white objects appear naturally white under different lighting conditions, which ensures all other colors are accurately represented [49]. In video behavior analysis, this is not merely a visual enhancement but a fundamental requirement for data integrity. Accurate white balance prevents unwanted color casts that can alter the perception of experimental subjects, ensuring that measurements of appearance, movement, or physiological indicators (like skin tone or fur color in animal models) are consistent and reliable across all recordings [49] [50].

How does light source affect color temperature?

Different light sources emit light with different color temperatures, measured in Kelvin (K). This is a primary factor requiring white balance correction [49] [50].

Table: Common Color Temperatures of Light Sources

| Light Source | Typical Color Temperature (K) | Color Cast if Uncorrected |
|---|---|---|
| Tungsten (Incandescent) Light | ~3200 K | Strong Orange/Yellow [49] |
| Sunrise/Sunset | ~3000-4000 K | Warm, Golden [49] |
| Midday Sun / Daylight | ~5500 K | Neutral [49] |
| Cloudy Sky / Shade | ~6000-6500 K | Cool, Bluish [49] |
| Fluorescent Light | Varies | Greenish or Bluish [49] |

Troubleshooting Guides

Problem 1: Inconsistent Color Values Across Sequential Recordings

Symptoms: The same subject appears to have different colors when analyzed from videos shot on different days or under different lighting, introducing noise into longitudinal data.

Diagnosis: This is typically caused by using Auto White Balance (AWB) or inconsistent manual settings between recording sessions. AWB adjusts continuously, leading to color shifts within and between clips [49] [51].

Solution:

- Set White Balance Manually: Before starting recordings, manually set the white balance on your camera using a custom preset [51] [50].

- Use a Reference Card: Use a calibrated white balance or 18% grey card under your experiment's lighting conditions to create a custom white balance setting in-camera [50].

- Document Settings: Record the Kelvin value used for each experimental session for future reference and replication.
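
One way to verify that the steps above actually produced consistent recordings is to film the same grey card at the start of every session and compare its channel ratios. The sketch below assumes the mean RGB of the card region has already been measured per session; the function names, the session labels, and the 3% tolerance are hypothetical and would need tuning for a given setup.

```python
def card_ratios(card_rgb):
    """Return (R/G, B/G) for the mean colour of the reference card."""
    r, g, b = card_rgb
    if g <= 0:
        raise ValueError("card has no green signal")
    return (r / g, b / g)


def flag_drifting_sessions(sessions, reference, tolerance=0.03):
    """List sessions whose card ratios deviate from the reference session.

    sessions:  dict mapping session name to mean (r, g, b) of the card.
    reference: name of the session used as the baseline.
    tolerance: allowed relative deviation in R/G or B/G (assumed value).
    """
    ref = card_ratios(sessions[reference])
    flagged = []
    for name, rgb in sessions.items():
        ratios = card_ratios(rgb)
        deviation = max(abs(x - y) / y for x, y in zip(ratios, ref))
        if deviation > tolerance:
            flagged.append(name)
    return sorted(flagged)


# Hypothetical card measurements from three recording days.
sessions = {
    "day1": (0.40, 0.40, 0.40),
    "day2": (0.41, 0.40, 0.395),
    "day3": (0.48, 0.40, 0.33),
}
drifting = flag_drifting_sessions(sessions, reference="day1")
```

Flagged sessions can then be re-shot or corrected, and the ratios themselves can be logged alongside the Kelvin setting.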

Problem 2: Unnatural Color Cast After Post-Processing Correction

Symptoms: After applying white balance correction in software, the overall video has a pronounced green, magenta, blue, or yellow tint, making subjects look unnatural.

Diagnosis: This "overcorrection" can happen when using an imprecise reference point or when the original footage lacks sufficient color information due to heavy compression [49] [51].

Solution:

- Use a Neutral Reference: During post-processing, use the software's eyedropper tool to click on a known neutral-grey or white object within the scene that was captured under the same primary lighting [50].

- Fine-tune with Sliders: Manually adjust the "Temperature" (blue-amber) and "Tint" (green-magenta) sliders until colors, especially skin tones or known reference colors, appear natural [49] [50].

- Check Skin Tones: As a vital benchmark, ensure that the skin tones of any human or animal subjects look natural and consistent across the dataset [49].
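
What an eyedropper correction does internally can be approximated as follows. This sketch assumes 8-bit footage encoded with the sRGB transfer function (an assumption; Rec. 709 and camera log curves differ), decodes it to linear light, scales the channels so that the sampled neutral becomes grey, and re-encodes. The function names and sample values are hypothetical, and real editing software may use a different model.

```python
def srgb_to_linear(v):
    """Decode an sRGB-encoded value in [0, 1] to linear light."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v):
    """Encode a linear-light value in [0, 1] with the sRGB curve."""
    return 12.92 * v if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055


def eyedropper_correct(pixels_8bit, neutral_sample_8bit):
    """Neutralise the cast measured on a known grey or white object.

    pixels_8bit:         list of (r, g, b) integers in 0..255.
    neutral_sample_8bit: (r, g, b) sampled from the neutral reference.
    """
    sample = [srgb_to_linear(c / 255) for c in neutral_sample_8bit]
    if min(sample) <= 0:
        raise ValueError("neutral sample must be non-zero in every channel")
    gains = [sample[1] / s for s in sample]   # keep green unchanged
    corrected = []
    for p in pixels_8bit:
        linear = [srgb_to_linear(c / 255) * k for c, k in zip(p, gains)]
        corrected.append(tuple(round(255 * linear_to_srgb(min(1.0, v)))
                               for v in linear))
    return corrected


# A grey card that reads warm in the footage becomes neutral after correction.
card = (200, 180, 150)
result = eyedropper_correct([card, (0, 0, 0)], neutral_sample_8bit=card)
```

Sampling an averaged region of the card, not a single pixel, makes the gains less sensitive to noise and compression artifacts.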

Problem 3: Color Banding and Artifacts After Correction

Symptoms: Correcting white balance in post-processing results in visible bands of color instead of smooth gradients, particularly in areas like skies or plain walls, degrading image quality.

Diagnosis: This is often a limitation of the source footage. Heavily compressed video formats (like H.264) and 8-bit color depth do not retain the full sensor data, making them prone to banding when color values are stretched in post-production [51] [52].

Solution:

- Prevent at Source: If possible, record using a higher color depth (10-bit or higher) and a less compressed or log format. 10-bit video is much more resilient to post-production color adjustment [52].

- Correct in Linear Space: For advanced workflows, convert log footage to a linear color space before making white balance adjustments. This provides more mathematically accurate color correction and can minimize artifacts [52].

- Mitigate in Software: Apply subtle noise reduction or dithering after correction to help mask banding artifacts.
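
The bit-depth argument above can be demonstrated numerically. The sketch below quantizes a smooth ramp at a given source bit depth, applies a white balance gain, and counts how many distinct 8-bit output codes result. The ramp range, the gain of 2.0, and the function name are hypothetical, and compression effects are ignored.

```python
def distinct_levels_after_gain(bit_depth, gain, lo=0.10, hi=0.20, samples=2000):
    """Count distinct 8-bit output codes after correcting a quantised ramp.

    bit_depth: bit depth at which the footage was stored.
    gain:      channel gain applied during white balance correction.
    lo, hi:    normalised signal range covered by the ramp.
    """
    source_max = (1 << bit_depth) - 1
    output_codes = set()
    for i in range(samples):
        x = lo + (hi - lo) * i / (samples - 1)
        stored = round(x * source_max) / source_max   # value kept in the file
        corrected = min(1.0, stored * gain)
        output_codes.add(round(corrected * 255))
    return len(output_codes)


levels_8bit = distinct_levels_after_gain(bit_depth=8, gain=2.0)
levels_10bit = distinct_levels_after_gain(bit_depth=10, gain=2.0)
```

With these assumed settings the 10-bit source yields roughly twice as many distinct output levels as the 8-bit source over the same range, which is the numerical counterpart of smoother gradients and less visible banding.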

Frequently Asked Questions (FAQs)

Q1: Is it better to correct white balance in-camera or during post-processing?

For research purposes, getting it right in-camera is strongly recommended. Post-processing correction is possible, but it is a "recovery" tool, not a replacement for proper initial setup. In-camera correction preserves the most data and avoids the quality loss associated with correcting highly compressed video [51].

Q2: Can I fully trust my camera's Auto White Balance (AWB) for consistent experiments?

No. While convenient, AWB is designed for consumer aesthetics, not scientific consistency. It can change within a single shot due to moving subjects or shifting composition, introducing unacceptable variability into your data. Manual white balance is essential for research [49] [51].

Q3: What is the best way to handle mixed lighting conditions (e.g., daylight from a window and fluorescent room lights)?

Mixed lighting is a significant challenge. The recommended strategy is to:

- Balance the Lights: If possible, use gels on the artificial lights to match the color temperature of the dominant natural light (or vice versa).

- Set for the Subject: Set your manual white balance for the light source that is directly illuminating your primary research subject.

- Accept and Document: If you cannot control the lighting, document the conditions thoroughly. In post-processing, you may need to use power windows or secondary color correction to balance different areas of the frame separately [49].

Q4: My analysis software is detecting different colors than what I see in the video player. Why?

This discrepancy often arises from color space misinterpretation. Ensure that your video editing software, playback software, and analysis tool are all using the same color profile and color space settings (e.g., Rec. 709). Always work on a calibrated monitor.