Manual vs. Automated Behavior Scoring: A Reliability Analysis for Preclinical Research

This article provides a comprehensive comparison of the reliability of manual and automated behavior scoring methods, tailored for researchers and professionals in drug development.

Abstract

This article provides a comprehensive comparison of the reliability of manual and automated behavior scoring methods, tailored for researchers and professionals in drug development. It explores the foundational concepts of reliability analysis, details methodological approaches for implementation, addresses common challenges and optimization strategies, and presents a comparative validation of both techniques. The goal is to equip scientists with the knowledge to choose and implement the most reliable scoring methods, thereby enhancing the reproducibility and translational potential of preclinical behavioral data.

The Pillars of Reliability: Understanding Scoring Consistency in Behavioral Research

In behavioral and neurophysiological research, the consistency and trustworthiness of measurements form the bedrock of scientific validity. Reliability ensures that the scores obtained from an instrument are due to the actual characteristics being measured rather than random error or subjective judgment. This is especially critical when comparing manual versus automated scoring methods, as researchers must determine whether automated systems can match or exceed the reliability of trained human experts. The transition from manual to automated scoring brings promises of increased efficiency, scalability, and objectivity, yet it also introduces new challenges in ensuring measurement consistency across different platforms, algorithms, and contexts [1].

The comparison between manual and automated scoring reliability is particularly relevant in fields like sleep medicine, neuroimaging, and educational assessment, where complex patterns must be interpreted consistently. For instance, in polysomnography (sleep studies), manual scoring by experienced technologists has long been considered essential, requiring 1.5 to 2 hours per study. The emergence of certified automated software performing on par with traditional visual scoring represents a major advancement for the field [1]. Understanding the different types of reliability and how they apply to both manual and automated systems provides researchers with the framework needed to evaluate these emerging technologies critically.

The Three Cornerstones of Reliability

Internal Consistency

Internal consistency measures whether multiple items within a single assessment instrument that propose to measure the same general construct produce similar scores [2]. This form of reliability is particularly relevant for multi-item tests, questionnaires, or assessments where several elements are designed to collectively measure one underlying characteristic.

Measurement Approach: Internal consistency is typically measured with Cronbach's alpha (α), a statistic calculated from the pairwise correlations between items. Conceptually, α represents the mean of all possible split-half correlations for a set of items [2] [3]. A split-half correlation involves dividing the items into two sets (such as first-half/second-half or even-/odd-numbered) and examining the relationship between the scores from both sets [3].

Interpretation Guidelines: A commonly accepted rule of thumb for interpreting Cronbach's alpha is presented in Table 1 [2].

Table 1: Interpreting Internal Consistency Using Cronbach's Alpha

| Cronbach's Alpha Value | Level of Internal Consistency |
|---|---|
| 0.9 ≤ α | Excellent |
| 0.8 ≤ α < 0.9 | Good |
| 0.7 ≤ α < 0.8 | Acceptable |
| 0.6 ≤ α < 0.7 | Questionable |
| 0.5 ≤ α < 0.6 | Poor |
| α < 0.5 | Unacceptable |

It is important to note that very high reliabilities (0.95 or higher) may indicate redundant items, and shorter scales often have lower reliability estimates yet may be preferable due to reduced participant burden [2].
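As a minimal sketch of the calculation described above, the following Python computes Cronbach's α with the standard variance-based formula, α = k/(k−1) · (1 − Σ item variances / variance of total scores), and maps the result onto the rule-of-thumb bands. The function names and the data layout (one list of scores per item, aligned by subject) are illustrative assumptions, not part of any cited tool.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    `items` holds one list of scores per item; position i in every
    inner list must belong to the same subject (hypothetical layout).
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if n < 2 or any(len(item) != n for item in items):
        raise ValueError("items must share the same length (>= 2 subjects)")

    # Total score per subject across all items.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance(totals)
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


def interpret_alpha(alpha):
    """Map alpha onto the rule-of-thumb bands (Poor is 0.5 <= alpha < 0.6)."""
    if alpha >= 0.9:
        return "Excellent"
    if alpha >= 0.8:
        return "Good"
    if alpha >= 0.7:
        return "Acceptable"
    if alpha >= 0.6:
        return "Questionable"
    if alpha >= 0.5:
        return "Poor"
    return "Unacceptable"


if __name__ == "__main__":
    # Toy data: three items scored for five subjects.
    toy_items = [[1, 2, 3, 4, 5], [2, 3, 4, 5, 6], [1, 2, 3, 4, 5]]
    alpha = cronbach_alpha(toy_items)
    print(round(alpha, 3), interpret_alpha(alpha))
```

The toy items are perfectly correlated, so the sketch returns the ceiling value of α; real rubric components will score lower.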

Test-Retest Reliability

Test-retest reliability measures the consistency of results when the same test is administered to the same sample at different points in time [3] [4]. This approach is used when measuring constructs that are expected to remain stable over the period being assessed.

Measurement Approach: Assessing test-retest reliability requires administering the identical measure to the same group of people on two separate occasions and then calculating the correlation between the two sets of scores, typically using Pearson's r [3]. The time interval should be long enough to prevent recall bias but short enough that the underlying construct hasn't genuinely changed [4].

Application Context: In behavioral scoring research, test-retest reliability is crucial for establishing that both manual and automated scoring methods produce stable results over time. For example, an automated sleep staging system should produce similar results when analyzing the same polysomnography data at different times, just as a human scorer should consistently apply the same criteria to the same data when re-evaluating it [1].
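The test-retest calculation can be sketched in a few lines of Python. This is an illustrative implementation of Pearson's r for two scoring occasions; the function name and the toy scores are assumptions made for the example.

```python
from math import sqrt


def pearson_r(first_pass, second_pass):
    """Pearson correlation between two aligned lists of scores."""
    n = len(first_pass)
    if n != len(second_pass) or n < 2:
        raise ValueError("need two aligned lists with at least two scores")
    mean_x = sum(first_pass) / n
    mean_y = sum(second_pass) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(first_pass, second_pass))
    sxx = sum((x - mean_x) ** 2 for x in first_pass)
    syy = sum((y - mean_y) ** 2 for y in second_pass)
    if sxx == 0 or syy == 0:
        raise ValueError("scores on one occasion have zero variance")
    return sxy / sqrt(sxx * syy)


if __name__ == "__main__":
    # Toy data: the same five recordings scored on two occasions.
    occasion_1 = [12, 15, 9, 20, 17]
    occasion_2 = [13, 14, 10, 19, 18]
    print(round(pearson_r(occasion_1, occasion_2), 3))
```

One caveat worth keeping in mind: Pearson's r measures linear association, not absolute agreement, so a scorer who adds a constant offset on the second pass still obtains r = 1.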

Inter-Rater Reliability

Inter-rater reliability (also called interobserver reliability) measures the degree of agreement between different people observing or assessing the same phenomenon [3] [4]. This form of reliability is essential when behavioral measures involve significant judgment on the part of observers.

Measurement Approach: To measure inter-rater reliability, different researchers conduct the same measurement or observation on the same sample, and the correlation between their results is calculated [4]. When judgments are quantitative, inter-rater reliability is often assessed using Cronbach's α; for categorical judgments, an analogous statistic called Cohen's κ (kappa) is typically used [3]. The intraclass correlation coefficient (ICC) is another widely used statistic for assessing reliability across raters for quantitative data [5].

Application Context: Inter-rater reliability takes on particular importance when comparing manual and automated scoring. Researchers might measure agreement between multiple human scorers, between human scorers and automated systems, or between different automated algorithms [1] [5]. For instance, a recent study of automated sleep staging found strong inter-scorer agreement between two experienced manual scorers (~83% agreement), but unexpectedly low agreement between manual scorers and automated software [1].
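For categorical judgments, Cohen's κ corrects the observed agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e). The sketch below is one plain-Python way to compute it for two scorers (two humans, or a human and an automated system); the function name and the toy labels are illustrative assumptions.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two aligned lists of categorical labels."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("need two aligned, non-empty label lists")

    # Observed proportion of items on which the raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement from each rater's marginal label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)

    if expected == 1:
        raise ValueError("kappa is undefined when both raters use one category")
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Toy data: hypothetical epoch labels from a human and a program.
    human = ["W", "N2", "N2", "REM", "N2", "W"]
    program = ["W", "N2", "N1", "REM", "N2", "N2"]
    print(round(cohen_kappa(human, program), 3))
```

Because κ discounts chance agreement, it is usually lower than the raw percent agreement computed on the same labels.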

Table 2: Summary of Reliability Types and Their Applications

| Type of Reliability | Measures Consistency Of | Primary Measurement Statistics | Key Application in Scoring Research |
|---|---|---|---|
| Internal Consistency | Multiple items within a test | Cronbach's α, Split-half correlation | Ensuring all components of a complex scoring rubric align |
| Test-Retest | Same test over time | Pearson's r | Establishing scoring stability across repeated measurements |
| Inter-Rater | Different raters/Systems | ICC, Cohen's κ, Pearson's r | Comparing manual vs. automated and human-to-human agreement |

Experimental Protocols for Assessing Reliability

Protocol for Comparing Manual and Automated Scoring Reliability

Research comparing manual versus automated scoring reliability typically follows a structured experimental protocol to ensure fair and valid comparisons:

Sample Selection: Researchers gather a representative dataset of materials to be scored. For example, in sleep medicine, this includes polysomnography (PSG) recordings from patients with various conditions [1]. In neuroimaging, researchers might select PET scans from both control subjects and those with Alzheimer's disease [5].

Manual Scoring Phase: Multiple trained experts independently score the entire dataset using established protocols and criteria. For instance, in sleep staging, experienced technologists would visually score each PSG according to AASM guidelines, with each study requiring 1.5-2 hours [1].

Automated Scoring Phase: The same dataset is processed using the automated scoring system(s) under investigation. In modern implementations, this may involve rule-based systems, shallow machine learning models, deep learning models, or generative AI approaches [6].

Reliability Assessment: Researchers calculate agreement metrics between different human scorers (inter-rater reliability) and between human scorers and automated systems. Standard statistical approaches include Bland-Altman plots, correlation coefficients, and agreement percentages [1] [5].

Clinical Impact Analysis: Beyond statistical agreement, researchers examine whether scoring discrepancies lead to different diagnostic classifications or treatment decisions, linking reliability measures to real-world outcomes [1].
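For the reliability assessment step, the numbers behind a Bland-Altman plot can be sketched as follows: the mean difference between methods (bias) and the conventional 95% limits of agreement at bias ± 1.96 standard deviations of the differences. The function name and the toy values are assumptions for illustration, and plotting is left out.

```python
from statistics import mean, stdev


def bland_altman_limits(manual, automated):
    """Return (bias, lower_limit, upper_limit) for paired measurements.

    Differences are taken as automated minus manual, so a positive bias
    means the automated method scores higher on average.
    """
    if len(manual) != len(automated) or len(manual) < 2:
        raise ValueError("need two aligned lists with at least two pairs")
    differences = [a - m for m, a in zip(manual, automated)]
    bias = mean(differences)
    spread = stdev(differences)  # sample standard deviation
    return bias, bias - 1.96 * spread, bias + 1.96 * spread


if __name__ == "__main__":
    # Toy data: a quantitative score from each method for four recordings.
    manual_scores = [10, 20, 30, 40]
    automated_scores = [12, 21, 33, 42]
    bias, lower, upper = bland_altman_limits(manual_scores, automated_scores)
    print(f"bias={bias:.2f}, limits=({lower:.2f}, {upper:.2f})")
```

Whether limits of this width are acceptable is a domain judgment rather than a statistical one, which is why the clinical impact step is still needed.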

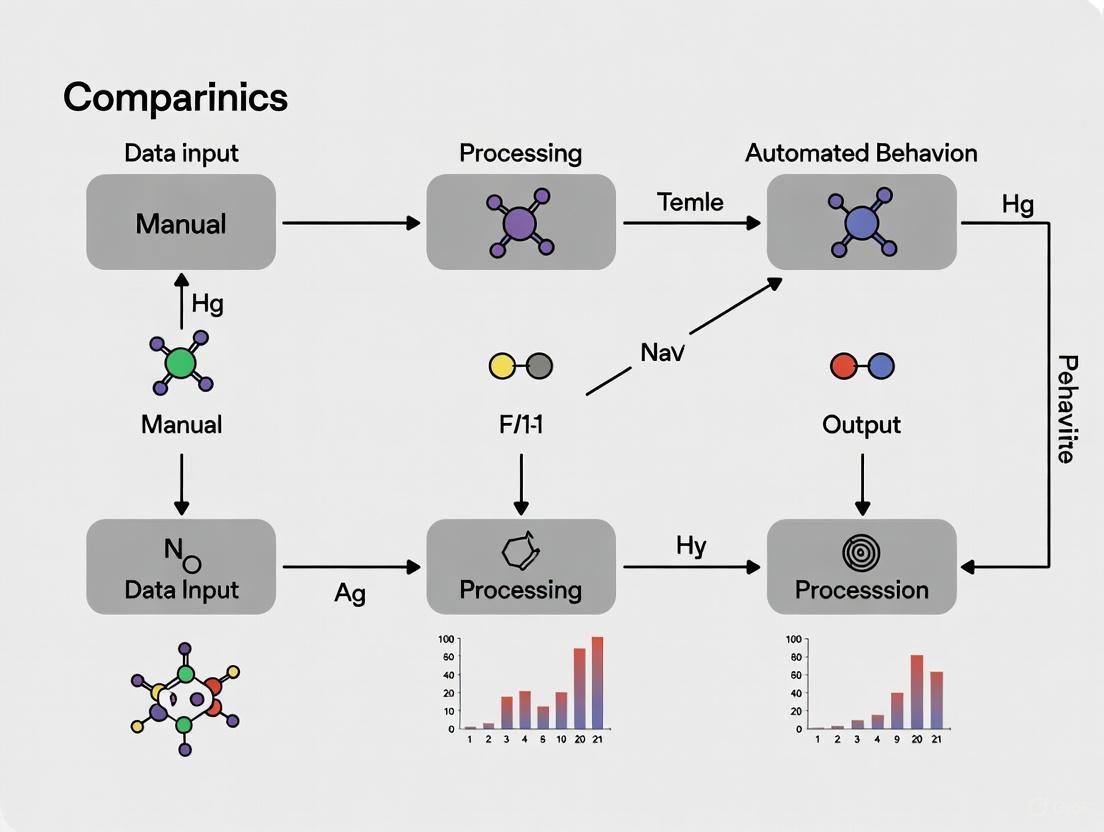

Figure 1: Experimental workflow for comparing manual and automated scoring reliability

Protocol for Assessing Internal Consistency in Automated Scoring Systems

When evaluating the internal consistency of automated scoring systems, particularly those using multiple features or components, researchers may employ this protocol:

Feature Identification: Document all features, rules, or components the automated system uses to generate scores. For example, an automated essay scoring system might analyze linguistic features, discourse structure, and argumentation quality [7].

Component Isolation: Temporarily isolate different components or item groups within the scoring system to assess whether they produce consistent results for the same underlying construct.

Correlation Analysis: Calculate internal consistency metrics (Cronbach's α or split-half reliability) across these components using a representative sample of scored materials.

Dimensionality Assessment: Use techniques like factor analysis to determine whether all system components truly measure the same construct or multiple distinct dimensions.

This approach is particularly valuable for understanding whether complex automated systems apply scoring criteria consistently across different aspects of their evaluation framework.
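The correlation analysis step could be sketched with a split-half estimate. The example below assumes an odd/even split of the components and applies the Spearman-Brown correction, 2r / (1 + r), to estimate full-length reliability from the half-length correlation. The function names and the data layout are hypothetical.

```python
from math import sqrt


def _pearson_r(x, y):
    """Pearson correlation between two aligned lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def split_half_reliability(components):
    """Odd/even split-half reliability with Spearman-Brown correction.

    `components` holds one list of scores per system component, aligned
    by scored sample (an assumed layout for this sketch).
    """
    if len(components) < 2:
        raise ValueError("need at least two components to split")
    first_half = components[0::2]   # components 1, 3, 5, ...
    second_half = components[1::2]  # components 2, 4, 6, ...
    first_totals = [sum(scores) for scores in zip(*first_half)]
    second_totals = [sum(scores) for scores in zip(*second_half)]
    r = _pearson_r(first_totals, second_totals)
    return 2 * r / (1 + r)


if __name__ == "__main__":
    # Toy data: two component scores for four samples.
    print(round(split_half_reliability([[1, 2, 3, 4], [1, 3, 2, 4]]), 3))
```

Different splits give different estimates, which is one reason Cronbach's α is often reported alongside or instead of a single split-half value.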

Comparative Data: Manual vs. Automated Scoring Reliability

Empirical studies across multiple domains provide quantitative data on how manual and automated scoring methods compare across different reliability types.

Table 3: Comparative Reliability Metrics Across Domains

| Domain | Manual Scoring Reliability | Automated Scoring Reliability | Notes |
|---|---|---|---|
| Sleep Staging | Inter-rater agreement: ~83% [1] | Reaches AASM accuracy standards [1] | Agreement between manual and automated can be unexpectedly low [1] |
| PET Amyloid Imaging | Inter-rater ICC: 0.932 [5] | ICC vs. manual: 0.979 (primary areas) [5] | Automated methods show high reliability in primary cortical areas [5] |
| Essay Scoring | Human-AI correlation: 0.73 [8] | Quadratic Weighted Kappa: 0.72; overlap: 83.5% [8] | |
| Free-Text Answer Scoring | Subject to fatigue, mood, order effects [6] | Consistent performance unaffected by fatigue [6] | Automated scoring reduces subjective variance sources [6] |
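The Quadratic Weighted Kappa reported for essay scoring extends Cohen's κ to ordinal scores by penalizing disagreements in proportion to the squared distance between the two ratings. A minimal sketch follows, assuming integer scores on a known scale; the function name and parameters are illustrative and are not taken from the cited study.

```python
def quadratic_weighted_kappa(rater_a, rater_b, min_rating, max_rating):
    """Quadratic weighted kappa for two aligned lists of integer ratings."""
    n = len(rater_a)
    k = max_rating - min_rating + 1
    if n == 0 or n != len(rater_b) or k < 2:
        raise ValueError("need aligned ratings on a scale with >= 2 levels")

    # Observed confusion matrix: rows for rater A, columns for rater B.
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_rating][b - min_rating] += 1

    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2
            weighted_observed += weight * observed[i][j]
            # Expected count if the two raters were independent.
            weighted_expected += weight * hist_a[i] * hist_b[j] / n
    if weighted_expected == 0:
        raise ValueError("kappa is undefined when all ratings are identical")
    return 1 - weighted_observed / weighted_expected


if __name__ == "__main__":
    # Toy data: essay scores on a 1-4 scale from a human and a model.
    human = [1, 2, 3, 4, 2, 3]
    model = [1, 2, 4, 4, 3, 3]
    print(round(quadratic_weighted_kappa(human, model, 1, 4), 3))
```

A one-point disagreement costs far less than a three-point disagreement under this weighting, which suits ordinal rubrics better than unweighted κ.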

Key Findings from Comparative Studies

Contextual Factors Affect Automated Reliability: A critical finding across studies is that automated scoring systems validated in controlled development environments do not always maintain their reliability when applied in different clinical or practical settings. Factors such as local scoring protocols, signal variability, equipment differences, and patient heterogeneity can significantly influence algorithm performance [1].

Certification Status Matters: In fields like sleep medicine, AASM certification of automated scoring software provides a recognized benchmark for quality and reliability. However, current certification efforts remain limited in scope—applying only to sleep stage scoring while leaving other clinically critical domains like respiratory event detection unaddressed [1].

Promising Approaches for Enhancement: Federated learning (FL), a machine learning technique that enables institutions to collaboratively train models without sharing raw patient data, shows promise for improving automated scoring reliability. This approach allows algorithms to learn from heterogeneous datasets that reflect variations in protocols, equipment, and patient populations while preserving privacy [1].

Essential Research Reagent Solutions for Reliability Studies

Researchers investigating reliability in behavioral scoring require specific methodological tools and approaches to ensure robust findings.

Table 4: Essential Research Reagents for Scoring Reliability Studies

| Research Reagent | Function in Reliability Research | Example Implementation |
|---|---|---|
| Intraclass Correlation Coefficient (ICC) | Measures reliability of quantitative measurements across multiple raters or instruments [5] | Assessing inter-rater reliability of PiB PET amyloid retention measures [5] |
| Cohen's Kappa (κ) | Measures inter-rater reliability for categorical items, correcting for chance agreement [3] | Useful for comparing scoring categories in behavioral coding or diagnostic classification |
| Cronbach's Alpha (α) | Assesses internal consistency of a multi-item measurement instrument [2] [3] | Evaluating whether all components of a complex scoring rubric measure the same construct |
| Bland-Altman Analysis | Visualizes agreement between two quantitative measurements by plotting differences against averages [1] | Used in sleep staging studies to compare manual and automated scoring methods [1] |
| Federated Learning Platforms | Enables collaborative model training across institutions without sharing raw data [1] | ODIN platform for automated sleep stage classification across diverse clinical datasets [1] |
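To make the ICC entry concrete, here is a sketch of one common variant, the two-way random-effects, absolute-agreement, single-rater form often written ICC(2,1). The cited studies do not state which ICC form they used, so the choice of variant, the function name, and the data layout are assumptions for illustration.

```python
def icc_2_1(ratings):
    """ICC(2,1) from a subjects-by-raters table.

    `ratings` is a list of rows, one per subject, each row holding one
    score per rater (or per method, e.g. manual and automated).
    """
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete table with >= 2 subjects and raters")

    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares.
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


if __name__ == "__main__":
    # Toy data: the second rater scores every subject one point higher.
    print(round(icc_2_1([[1, 2], [2, 3], [3, 4]]), 3))
```

In the toy data the two raters are perfectly correlated, yet the absolute-agreement ICC falls below 1 because of the constant offset. This is the practical difference between an ICC of this form and Pearson's r.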

The comprehensive comparison of reliability metrics across manual and automated scoring methods reveals a nuanced landscape. While automated systems can achieve high reliability standards—sometimes matching or exceeding human consistency—they also face distinct challenges related to contextual variability, certification limitations, and generalizability across diverse populations.

The most promising path forward appears to be a complementary approach that leverages the strengths of both methods. Automated systems offer efficiency, scalability, and freedom from fatigue-related inconsistencies, while human experts provide nuanced judgment, contextual understanding, and adaptability to novel situations. Future research should focus not only on improving statistical reliability but also on ensuring that scoring consistency translates to meaningful clinical, educational, or research outcomes.

As automated scoring technologies continue to evolve, ongoing validation against established manual standards remains essential. By maintaining rigorous attention to all forms of reliability—internal consistency, test-retest stability, and inter-rater agreement—researchers can ensure that technological advancements genuinely enhance rather than compromise measurement quality in behavioral scoring.

In scientific research, particularly in fields reliant on behavioral coding and data scoring, the reliability of the methods used to generate data is the bedrock upon which reproducibility is built. Low reliability in data scoring—whether the process is performed by humans or machines—introduces critical risks that cascade from the smallest data point to the broadest scientific claim. A reproducibility crisis has long been identified in scientific research, undermining its trustworthiness [9]. This article explores the consequences of low reliability in behavioral scoring, frames them within a comparison of manual and automated approaches, and provides researchers with evidence-based guidance to safeguard their work. The integrity of our data directly dictates the integrity of our conclusions.

Defining the Problem: How Low Reliability Undermines Science

Reliability in scoring refers to the consistency and stability of a measurement process. When reliability is low, the following risks emerge, each posing a direct threat to data integrity and reproducibility.

- Introduction of Uncontrolled Variance: Low reliability acts as a source of uncontrolled, often unmeasured, variance. This "noise" can obscure true effects (Type II errors) or, worse, create the illusion of effects where none exist (Type I errors) [10]. In manual scoring, this can stem from rater fatigue or inconsistent application of rules; in automated scoring, it can arise from a model's inability to generalize to new data.

- Erosion of Reproducibility: A study whose core data is generated through an unreliable process is inherently irreproducible. Other researchers cannot replicate the findings because the measurement standard itself is unstable. Inconsistent data collection methods, such as variations in survey administration or scoring, are a fundamental source of irreproducibility in multisite and longitudinal studies [11] [12].

- Systematic Bias: While human raters can exhibit random errors, both human and automated systems are susceptible to systematic bias. Humans may be influenced by unconscious expectations, while automated models can systematically discriminate against certain patterns or subgroups if the training data is biased [6] [10]. Such biases are particularly pernicious as they lead to consistently erroneous conclusions.

- Compromised Data Reusability: The FAIR principles (Findability, Accessibility, Interoperability, and Reusability) emphasize the importance of data reuse [11]. Data generated through an unreliable process has low reusability because its inherent inconsistency makes it unsuitable for secondary analysis or meta-analyses, wasting resources and limiting scientific progress.
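One way to see how scoring noise obscures true effects is the attenuation formula from classical test theory: the correlation a study can expect to observe equals the true correlation multiplied by the square root of the product of the two measures' reliabilities. The sketch below is an illustration added here rather than a result from the cited studies, and the function name is hypothetical.

```python
from math import sqrt


def attenuated_correlation(true_r, reliability_x, reliability_y=1.0):
    """Expected observed correlation given each measure's reliability.

    Implements r_observed = r_true * sqrt(rel_x * rel_y), the classical
    test theory attenuation relationship.
    """
    for reliability in (reliability_x, reliability_y):
        if not 0.0 <= reliability <= 1.0:
            raise ValueError("reliabilities must lie between 0 and 1")
    return true_r * sqrt(reliability_x * reliability_y)


if __name__ == "__main__":
    # A true correlation of 0.5 measured with a behavior score whose
    # reliability is 0.64 and an outcome measured without error.
    print(round(attenuated_correlation(0.5, 0.64), 2))
```

A smaller observed effect requires a larger sample to detect, so unreliable scoring raises the Type II error rate at any fixed sample size.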

Comparative Analysis: Manual vs. Automated Scoring Reliability

The choice between manual and automated scoring is not trivial, as each methodology has distinct strengths and weaknesses that impact reliability. The table below summarizes a comparative analysis of these two approaches.

Table 1: Comparison of Manual and Automated Scoring Methodologies

| Aspect | Manual Scoring | Automated Scoring |
|---|---|---|
| Primary Strength | Nuanced human judgment, adaptable to novel contexts [13] | High speed, consistency, and freedom from fatigue [13] [6] |
| Primary Risk to Reliability | Susceptibility to fatigue, mood, and low inter-rater reliability [6] [10] | Systematic bias from poor training data or inability to handle variance [6] |
| Impact on Resources | Time-consuming and expensive, limiting sample size [14] | High initial development cost, but low marginal cost per sample thereafter |
| Explainability | Intuitive; raters can explain their reasoning [6] | Often a "black box"; lack of explainability is a key ethical challenge [6] |
| Best Suited For | Subjective tasks, novel research with undefined rules, small-scale studies | Well-defined, high-volume tasks, large-scale and longitudinal studies [11] |

The "best" approach is context-dependent. For example, a study quantifying connected speech in individuals with aphasia found that automated scoring compared favorably to human experts, saving time and reducing the need for extensive training while providing reliable and valid quantification [14]. Conversely, for tasks with high intrinsic subjectivity, manual scoring may be more appropriate.

Experimental Evidence: Case Studies in Scoring Reliability

Case Study 1: Automated Scoring of Connected Speech in Aphasia

- Objective: To create and evaluate an automated program (C-QPA) to score the Quantitative Production Analysis (QPA), a measure of morphological and structural features of connected speech, and compare its reliability to manual scoring by trained experts [14].

- Methodology: Language transcripts from 109 individuals with left hemisphere stroke were analyzed using both the traditional manual QPA protocol and the new automated C-QPA command within the CLAN software. The manual QPA required trained scorers to perform an utterance-by-utterance analysis following a strict protocol. The C-QPA command automatically computed the same measures by leveraging CLAN's morphological (%mor) and grammatical (%gra) tagging tiers [14].

- Key Results: Linear regression analysis revealed that 32 out of 33 QPA measures showed good agreement between manual and automated scoring. The single measure with poor agreement (Auxiliary Complexity Index) required post-hoc refinement of the automated scoring rules. The study concluded that automated scoring provided a reliable and valid quantification while saving significant time and reducing training burdens [14].

Table 2: Key Results from Automated vs. Manual QPA Scoring Study

| Metric | Manual QPA Scoring | Automated C-QPA Scoring | Agreement |
|---|---|---|---|
| Number of Nouns | Manually tallied | Automatically computed | Good |
| Proportion of Verbs | Manually calculated | Automatically calculated | Good |
| Mean Length of Utterance | Manually derived | Automatically derived | Good |
| Auxiliary Complexity Index | Manually scored | Automatically computed | Poor |
| Total Analysis Time | High (hours per transcript) | Low (minutes per transcript) | N/A |

Case Study 2: The Role of Standardization Frameworks in Survey Data

- Objective: To address inconsistencies in survey-based data collection that undermine reproducibility across biomedical, clinical, behavioral, and social sciences [11] [12].

- Methodology: Researchers introduced and tested ReproSchema, a schema-driven ecosystem for standardizing survey design. This framework includes a library of reusable assessments, tools for validation and conversion to formats like REDCap, and integrated version control. The platform was compared with 12 common survey platforms (e.g., Qualtrics, REDCap) on adherence to FAIR principles and support for key survey functionalities [11] [12].

- Key Results: ReproSchema met all 14 assessed FAIR criteria and supported 6 out of 8 key survey functionalities, including automated scoring. The study demonstrated that a structured, schema-driven approach could maintain consistency across studies and over time, directly enhancing the reliability and reproducibility of collected data [11] [12].

Methodological Protocols for Ensuring Reliability

To mitigate the risks of low reliability, researchers should adopt rigorous methodological protocols, whether using manual or automated scoring.

Protocol for Establishing Manual Scoring Reliability

- Rater Training and Calibration: Implement a structured training program using the specific scoring guide. Raters must practice on a gold-standard set of transcripts or recordings until they achieve a high inter-rater reliability (IRR), often exceeding 90% agreement [14].

- Calculate Inter-Rater Reliability (IRR): Throughout the data collection phase, a portion of the data (e.g., 10-20%) should be independently scored by multiple raters. Statistical measures of IRR, such as Cohen's Kappa or intraclass correlation coefficients, must be calculated and monitored for drift [10].

- Blinding: Raters should be blinded to experimental conditions and hypotheses to prevent confirmation bias from influencing their scores.
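The double-scoring step above can be sketched as two small helpers: one draws a reproducible random subset of sessions for independent re-scoring, and the other flags scoring batches whose raw agreement falls below a calibration threshold. The 20% fraction and the 90% threshold echo the figures in the protocol, but the function names, the batch structure, and the fixed seed are assumptions for this example.

```python
import random


def pick_double_scored(session_ids, fraction=0.2, seed=0):
    """Choose a reproducible random subset of sessions to score twice."""
    ids = list(session_ids)
    if not ids:
        raise ValueError("no sessions to sample from")
    subset_size = max(1, round(len(ids) * fraction))
    # A seeded generator keeps the selection auditable and repeatable.
    return sorted(random.Random(seed).sample(ids, subset_size))


def agreement_by_batch(batches, minimum=0.90):
    """Return (batch_name, agreement, needs_review) for each batch.

    `batches` is a list of (name, labels_rater_a, labels_rater_b).
    """
    report = []
    for name, labels_a, labels_b in batches:
        matches = sum(a == b for a, b in zip(labels_a, labels_b))
        agreement = matches / len(labels_a)
        report.append((name, agreement, agreement < minimum))
    return report


if __name__ == "__main__":
    print(pick_double_scored(range(1, 51)))
    batches = [
        ("week_1", ["rear", "groom", "rest", "rest"], ["rear", "groom", "rest", "rest"]),
        ("week_2", ["rear", "groom", "rest", "rest"], ["rear", "rest", "groom", "rest"]),
    ]
    for row in agreement_by_batch(batches):
        print(row)
```

Raw agreement is used here only because the training criterion is stated as a percentage; a chance-corrected statistic such as Cohen's Kappa is the more conservative choice for the ongoing drift checks.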

Protocol for Establishing Automated Scoring Reliability

- Robust Training and Validation: The automated model must be trained on a comprehensive and representative dataset. Its performance should be validated on a separate, held-out dataset—a critical step to ensure the model can generalize to new, unseen data [6].

- Stability and Replicability Analysis: For responsible AI data collection, the annotation task should be repeated under different conditions (e.g., different time intervals). Stability analysis (association of scores across repetitions) and replicability similarity (agreement between different rater pools) should be performed to ensure the model's reliability is not fragile [10].

- Continuous Performance Monitoring: An automated scoring system is not a "set it and forget it" tool. Its outputs must be periodically checked against a manual gold standard to detect and correct for performance decay over time.
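Two of the steps above lend themselves to a short sketch: setting aside a held-out set before any model development, and comparing later audit results against the agreement measured at validation. The helper names, the 0.05 tolerance, and the audit labels are hypothetical choices for illustration, not values from the cited sources.

```python
import random


def train_holdout_split(sample_ids, holdout_fraction=0.2, seed=0):
    """Shuffle once with a fixed seed and return (train_ids, holdout_ids)."""
    ids = list(sample_ids)
    if len(ids) < 2:
        raise ValueError("need at least two samples to split")
    random.Random(seed).shuffle(ids)
    holdout_size = max(1, round(len(ids) * holdout_fraction))
    return ids[holdout_size:], ids[:holdout_size]


def performance_decay(baseline_agreement, audits, tolerance=0.05):
    """List the audits whose agreement with the manual gold standard
    dropped more than `tolerance` below the validation baseline.

    `audits` maps an audit label to its measured agreement (0 to 1).
    """
    return [
        label
        for label, agreement in audits.items()
        if baseline_agreement - agreement > tolerance
    ]


if __name__ == "__main__":
    train_ids, holdout_ids = train_holdout_split(range(100))
    print(len(train_ids), len(holdout_ids))
    print(performance_decay(0.90, {"audit_1": 0.89, "audit_2": 0.82}))
```

Splitting by animal or by recording session rather than by individual frame is usually the safer design, because frames from the same session are highly correlated and can leak information into the held-out set.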

Visualizing the Pathways to Reliable Data

The following diagrams illustrate the core workflows and relationships that underpin reliable data scoring, highlighting critical decision points and potential failure modes.

Diagram 1: Manual Scoring Reliability Pathway. This workflow emphasizes the iterative nature of training and the critical role of ongoing Inter-Rater Reliability (IRR) checks to ensure consistent data generation.

Diagram 2: Automated Scoring Reliability Pathway. This chart outlines the development and deployment cycle for an automated scoring system, highlighting the essential feedback loop for validation and continuous monitoring.

Table 3: Essential Research Reagent Solutions for Scoring Reliability

| Tool or Resource | Function | Application Context |
|---|---|---|
| CLAN (Computerized Language ANalysis) | A set of programs for automatic analysis of language transcripts that have been transcribed in the CHAT format [14]. | Automated linguistic analysis, such as quantifying connected speech features in aphasia research [14]. |
| ReproSchema | A schema-driven ecosystem for standardizing survey-based data collection, featuring a library of reusable assessments and version control [11] [12]. | Ensuring consistency and interoperability in survey administration and scoring across longitudinal or multi-site studies. |
| TestRail | A test case management platform that helps organize test cases, manage test runs, and track results for manual QA processes [13]. | Managing and tracking the execution of manual scoring protocols to ensure adherence to defined methodologies. |
| Inter-Rater Reliability (IRR) Statistics (e.g., Cohen's Kappa) | A set of statistical measures used to quantify the degree of agreement among two or more raters [10] [14]. | Quantifying the consistency of manual scorers during training and throughout the primary data scoring phase. |
| REDCap (Research Electronic Data Capture) | A secure web platform for building and managing online surveys and databases. Often used as a benchmark or integration target for standardized data collection [11] [12]. | Capturing and managing structured research data, often integrated with other tools like ReproSchema for enhanced standardization. |

The consequences of low reliability in data scoring are severe, directly threatening data integrity and dooming studies to irreproducibility. There is no one-size-fits-all solution; the choice between manual and automated scoring must be a deliberate one, informed by the research question, available resources, and the nature of the data. Manual scoring offers nuanced judgment but is vulnerable to human inconsistency, while automated scoring provides unparalleled efficiency and consistency but risks systematic bias and lacks explainability. The path forward requires a commitment to rigorous methodology—whether through robust rater training and IRR checks for manual processes or through careful validation, stability analysis, and continuous monitoring for automated systems. By treating the reliability of our scoring methods with the same seriousness as our experimental designs, we can produce data worthy of trust and conclusions capable of withstanding the test of time.

In biomedical research, particularly in drug development, the accurate scoring of complex behaviors, physiological events, and morphological details is paramount. Manual scoring by trained human observers has long been considered the gold standard against which automated systems are validated. This guide objectively compares the reliability and performance of manual scoring with emerging automated alternatives across multiple scientific domains. While automated systems offer advantages in speed and throughput, understanding the principles and performance of manual scoring remains essential for evaluating new technologies and ensuring research validity. The continued relevance of manual scoring lies not only in its established reliability but also in its capacity to handle complex, context-dependent judgments that currently challenge automated algorithms.

Manual scoring involves human experts applying standardized criteria to classify events, behaviors, or morphological characteristics according to established protocols. This human-centric approach integrates contextual understanding, pattern recognition, and adaptive judgment capabilities that have proven difficult to fully replicate computationally. As research increasingly incorporates artificial intelligence and machine learning, the principles of manual scoring provide the foundational reference standard necessary for validating these new technologies. This comparison examines the empirical evidence regarding manual scoring performance across multiple domains, detailing specific experimental protocols and quantitative outcomes to inform researcher selection of appropriate scoring methodologies.

Foundational Principles of Manual Scoring

The validity of manual scoring rests upon several core principles developed through decades of methodological refinement across scientific disciplines. These principles ensure that human observations meet the rigorous standards required for scientific research and clinical applications.

Standardized Protocols: Manual scoring relies on precisely defined criteria and classification systems that are consistently applied across observers and sessions. For example, in sleep medicine, the American Academy of Sleep Medicine (AASM) Scoring Manual provides the definitive standard for visual scoring of polysomnography, requiring 1.5 to 2 hours of expert analysis per study [1].

Comprehensive Training: Human scorers undergo extensive training to achieve and maintain competency, typically involving review of reference materials, supervised scoring practice, and ongoing quality control measures. This training ensures scorers can properly identify nuanced patterns and edge cases that might challenge algorithmic approaches.

Contextual Interpretation: Human experts excel at incorporating contextual information and prior knowledge into scoring decisions, allowing for appropriate adjustment when encountering atypical patterns or ambiguous cases not fully addressed in standardized protocols.

Multi-dimensional Assessment: Manual scoring frequently integrates multiple data streams simultaneously, such as combining visual observation with physiological signals or temporal patterns, to arrive at more robust classifications than possible from isolated data sources.

Quantitative Comparisons Across Scientific Domains

Diagnostic Imaging and Medical Screening

Table 1: Performance Comparison in Diabetic Retinopathy Screening

| Metric | Manual Consensus Grading | Automated AI Grading (EyeArt) | Research Context |
|---|---|---|---|
| Sensitivity (Any DR) | Reference Standard | 94.0% | Cross-sectional study of 247 eyes [15] |
| Sensitivity (Referable DR) | Reference Standard | 89.7% | Oslo University Hospital screening [15] |
| Specificity (Any DR) | Reference Standard | 72.6% | Patients with diabetes (n=128) [15] |
| Specificity (Referable DR) | Reference Standard | 83.0% | Median age: 52.5 years [15] |
| Agreement (QWK) | Established benchmark | Moderate agreement with manual | Software version v2.1.0 [15] |
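Sensitivity and specificity in comparisons like this are computed by treating the manual consensus grade as the reference standard and counting where the automated grade matches it. A minimal sketch with hypothetical binary labels (1 = condition present, 0 = absent) follows; the function name and toy data are assumptions and do not reproduce the cited study's figures.

```python
def sensitivity_specificity(reference, predicted):
    """Return (sensitivity, specificity) against a binary reference.

    `reference` holds the manual consensus labels and `predicted` the
    automated labels, both coded 1 (present) or 0 (absent).
    """
    if len(reference) != len(predicted):
        raise ValueError("label lists must be aligned")
    pairs = list(zip(reference, predicted))
    true_pos = sum(1 for r, p in pairs if r == 1 and p == 1)
    false_neg = sum(1 for r, p in pairs if r == 1 and p == 0)
    true_neg = sum(1 for r, p in pairs if r == 0 and p == 0)
    false_pos = sum(1 for r, p in pairs if r == 0 and p == 1)
    if true_pos + false_neg == 0 or true_neg + false_pos == 0:
        raise ValueError("reference must contain both classes")
    sensitivity = true_pos / (true_pos + false_neg)
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


if __name__ == "__main__":
    manual = [1, 1, 1, 0, 0, 0, 0, 0]
    automated = [1, 1, 0, 1, 0, 0, 0, 0]
    sens, spec = sensitivity_specificity(manual, automated)
    print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Both figures inherit any error in the reference standard, so they describe agreement with manual grading rather than accuracy against ground truth.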

Table 2: Reliability in Orthopedic Morphological Measurements

| Measurement Type | Interobserver ICC (Manual) | Intermethod ICC (Manual vs. Auto) | Clinical Agreement |
|---|---|---|---|
| Lateral Center Edge Angle | 0.95 (95% CI 0.86-0.98) | 0.89 (95% CI 0.78-0.94) | High reliability [16] |
| Alpha Angle | 0.43 (95% CI 0.10-0.68) | 0.46 (95% CI 0.12-0.70) | Moderate reliability [16] |
| Triangular Index Ratio | 0.26 (95% CI 0-0.57) | Not reported | Low reliability [16] |
| Acetabular Dysplasia Diagnosis | 47%-100% agreement | 63%-96% agreement | Variable by condition [16] |
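
The intraclass correlation coefficients in Table 2 can be illustrated with a short sketch. The source does not state which ICC form was used in [16], so the example below assumes ICC(2,1) (two-way random effects, absolute agreement, single rater); the function name and toy ratings are hypothetical.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: list of rows, one per subject, each holding one value per rater.
    """
    n = len(ratings)      # number of subjects
    k = len(ratings[0])   # number of raters (or methods)
    if n < 2 or k < 2:
        raise ValueError("need at least two subjects and two raters")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when all ratings are identical")
    return (ms_rows - ms_error) / denominator


# Hypothetical angle measurements: the second rater reads 1 degree higher throughout.
offset_ratings = [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]
print(round(icc_2_1(offset_ratings), 3))
```

An absolute-agreement form is used here because it penalizes a systematic offset between raters or methods, which matters when an automated measurement is meant to replace a manual one rather than merely rank patients the same way.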

Sleep Medicine and Physiological Monitoring

In sleep medicine, manual scoring of polysomnography represents one of the most well-established applications of human expert evaluation. The AASM Scoring Manual defines the comprehensive standards that experienced technologists apply, typically requiring 1.5 to 2 hours per study [1]. This process involves classifying sleep stages, identifying arousal events, and scoring respiratory disturbances according to rigorously defined criteria.

Recent research indicates strong inter-scorer agreement between experienced manual scorers, with approximately 83% agreement previously reported by Rosenberg and confirmed in contemporary studies [1]. This high level of agreement demonstrates the reliability achievable through comprehensive training and standardized protocols. However, studies have revealed unexpectedly low agreement between manual scorers and automated systems, despite AASM certification of some software. This discrepancy highlights that even well-validated algorithms may perform differently when applied to real-world clinical datasets compared to controlled development environments [1].

Table 3: Manual vs. Automated Data Collection in Clinical Research

| Performance Aspect | Manual Data Collection | Automated Data Collection | Research Context |
|---|---|---|---|
| Patient Selection Accuracy | 40/44 true positives; 4 false positives | Identified 32 false negatives missed manually | Orthopedic surgery patients [17] |
| Data Element Completeness | Dependent on abstractor diligence | Limited to structured data fields | EBP project replication [17] |
| Error Types | Computational and transcription errors | Algorithmic mapping challenges | 44-patient validation study [17] |
| Resource Requirements | High personnel time commitment | Initial IT investment required | Nursing evidence-based practice [17] |

Experimental Protocols and Methodologies

Manual Consensus Grading for Diabetic Retinopathy

The manual grading protocol for diabetic retinopathy screening follows a rigorous methodology to ensure diagnostic accuracy [15]. A multidisciplinary team of healthcare professionals independently evaluates color fundus photographs using the International Clinical Disease Severity Scale for DR and diabetic macular edema. The process begins with pupil dilation and retinal imaging using standardized photographic equipment. Images are then de-identified and randomized to prevent grading bias.

Trained graders assess multiple morphological features, including microaneurysms, hemorrhages, exudates, cotton-wool spots, venous beading, and intraretinal microvascular abnormalities. Each lesion is documented according to standardized definitions, and overall disease severity is classified as no retinopathy, mild non-proliferative DR, moderate NPDR, severe NPDR, or proliferative DR. For consensus grading, discrepancies between initial graders are resolved through either adjudication by a senior grader or simultaneous review with discussion until consensus is achieved. This method provides the reference standard against which automated systems like the EyeArt software (v2.1.0) are validated [15].

Manual Polysomnography Scoring Protocol

The manual scoring of sleep studies follows the AASM Scoring Manual, which provides definitive criteria for sleep stage classification and event identification [1]. The process begins with the preparation of high-quality physiological signals, including electroencephalography (EEG), electrooculography (EOG), electromyography (EMG), electrocardiography (ECG), respiratory effort, airflow, and oxygen saturation. Technologists ensure proper signal calibration and impedance levels before commencing the scoring process.

Scoring proceeds in sequential 30-second epochs according to a standardized hierarchy. First, scorers identify sleep versus wakefulness based primarily on EEG patterns (alpha rhythm and low-voltage mixed-frequency activity for wakefulness). For sleep epochs, scorers then apply specific rules for stage classification: N1 (light sleep) is characterized by theta activity and slow eye movements; N2 features sleep spindles and K-complexes; N3 (deep sleep) contains at least 20% slow-wave activity; and REM sleep demonstrates rapid eye movements with low muscle tone. Simultaneously, scorers identify respiratory events (apneas, hypopneas), limb movements, and cardiac arrhythmias according to standardized definitions. The completed scoring provides comprehensive metrics including sleep efficiency, arousal index, apnea-hypopnea index, and sleep architecture percentages [1].
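
As an illustration of how epoch-level scores turn into the summary metrics named above, the sketch below derives sleep efficiency, the apnea-hypopnea index, and stage percentages from a list of 30-second epoch labels. The function name, the label strings, and the example night are assumptions for illustration; arousal scoring and the detailed AASM event rules are not modeled.

```python
from collections import Counter

EPOCH_SECONDS = 30  # epoch length used in the scoring protocol above


def sleep_summary(stages, n_respiratory_events):
    """Summarize one scored night.

    stages: one label per epoch, from 'W', 'N1', 'N2', 'N3', 'R'.
    n_respiratory_events: total apneas plus hypopneas scored during sleep.
    """
    counts = Counter(stages)
    total_epochs = len(stages)
    sleep_epochs = total_epochs - counts["W"]
    if sleep_epochs == 0:
        raise ValueError("no sleep epochs were scored")
    total_sleep_hours = sleep_epochs * EPOCH_SECONDS / 3600
    return {
        # Share of the recording period spent asleep.
        "sleep_efficiency_pct": 100 * sleep_epochs / total_epochs,
        # Respiratory events per hour of sleep.
        "ahi_per_hour": n_respiratory_events / total_sleep_hours,
        # Sleep architecture as a percentage of total sleep time.
        "stage_pct": {s: 100 * counts[s] / sleep_epochs for s in ("N1", "N2", "N3", "R")},
    }


# Hypothetical 8-hour recording (960 epochs), illustration only.
night = ["W"] * 120 + ["N1"] * 60 + ["N2"] * 480 + ["N3"] * 180 + ["R"] * 120
print(sleep_summary(night, n_respiratory_events=35))
```

Because every downstream metric is a simple function of the epoch labels, disagreement between scorers at the epoch level propagates directly into these clinical summaries.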

[Figure: Manual Sleep Study Scoring Workflow]

Orthopedic Radiograph Measurement Protocol

Manual morphological assessment of hip radiographs follows precise anatomical landmark identification and measurement protocols [16]. The process begins with standardized anterior-posterior pelvic radiographs obtained with specific patient positioning to ensure reproducible measurements. Trained observers then assess eight key parameters using specialized angle measurement tools within picture archiving and communication system (PACS) software.

For each measurement, observers identify specific anatomical landmarks: the lateral center edge angle (LCEA) measures hip coverage by drawing a line through the center of the femoral head perpendicular to the transverse pelvic axis and a second line from the center to the lateral acetabular edge; the alpha angle assesses cam morphology by measuring the head-neck offset on radial sequences; the acetabular index evaluates acetabular orientation; and the extrusion index quantifies superolateral uncovering of the femoral head. Each measurement is performed independently by at least two trained observers to establish inter-rater reliability, with discrepancies beyond predetermined thresholds resolved through consensus reading or third-observer adjudication. This method provides the reference standard for diagnosing conditions like acetabular dysplasia, femoroacetabular impingement, and hip osteoarthritis risk [16].

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 4: Essential Materials for Manual Scoring Methodologies

| Item | Specification | Research Function |
|---|---|---|
| AASM Scoring Manual | Current version standards | Definitive reference for sleep stage and event classification [1] |
| International Clinical DR Scale | Standardized severity criteria | Reference standard for diabetic retinopathy grading [15] |
| Digital Imaging Software | DICOM-compliant with measurement tools | Enables precise morphological assessments on radiographs [16] |
| Polysomnography System | AASM-compliant with full montage | Acquires EEG, EOG, EMG, respiratory, and cardiac signals [1] |
| Validated Data Collection Forms | Structured abstraction templates | Standardizes manual data collection across multiple observers [17] |
| Retinal Fundus Camera | Standardized field protocols | Captures high-quality images for DR screening programs [15] |
| Statistical Agreement Packages | ICC, Kappa, Bland-Altman analysis | Quantifies inter-rater reliability and method comparison [15] [16] |
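
Of the agreement statistics listed in Table 4, Bland-Altman analysis is the simplest to sketch: it reports the mean difference between two methods (the bias) and the 95% limits of agreement around it. The function name and the paired measurements below are hypothetical.

```python
from statistics import mean, stdev


def bland_altman(method_a, method_b):
    """Return (bias, lower_limit, upper_limit) for paired measurements.

    The limits of agreement are bias +/- 1.96 standard deviations of the
    paired differences, which assumes the differences are roughly normal.
    """
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)  # sample standard deviation (n - 1)
    return bias, bias - spread, bias + spread


# Hypothetical manual vs. automated angle measurements in degrees.
manual = [30.0, 25.0, 28.0, 35.0, 22.0]
automated = [29.0, 26.0, 27.0, 33.0, 23.0]

bias, lower, upper = bland_altman(manual, automated)
print(f"bias={bias:.2f}, limits of agreement=({lower:.2f}, {upper:.2f})")
```

Unlike a correlation or ICC, the limits of agreement are expressed in the measurement's own units, so they can be compared directly against a clinically acceptable difference.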

Methodological Validation and Quality Assurance

Manual Scoring Validation Framework

Quality assurance in manual scoring requires systematic approaches to maintain and verify scoring accuracy over time. Regular reliability testing is essential, typically performed through periodic inter-rater agreement assessments where multiple scorers independently evaluate the same samples. Scorers who demonstrate declining agreement rates receive remedial training to address identified discrepancies. For long-term studies, drift in scoring criteria represents a significant concern, addressed through regular recalibration sessions using reference standards and blinded duplicate scoring.

Documentation protocols represent another critical component, requiring detailed recording of scoring decisions, ambiguous cases, and protocol deviations. This documentation enables systematic analysis of scoring challenges and facilitates protocol refinements. Additionally, certification maintenance ensures ongoing competency, particularly in clinical applications like sleep medicine where AASM certification provides an important benchmark for quality and reliability [1]. These rigorous validation approaches establish manual scoring as the reference standard against which automated systems are evaluated.

Manual scoring by human observers remains the gold standard in multiple scientific domains due to its established reliability, contextual adaptability, and capacity for complex pattern recognition. The quantitative evidence demonstrates strong performance across diverse applications, from medical imaging to physiological monitoring. However, manual approaches face limitations in scalability, throughput, and potential inter-observer variability.

The emerging paradigm in behavioral scoring leverages the complementary strengths of both methodologies. Manual scoring provides the foundational reference standard and handles complex edge cases, while automated systems offer efficiency for high-volume screening and analysis. This integrated approach is particularly valuable in drug development, where both accuracy and throughput are essential. As automated systems continue to evolve, the principles and protocols of manual scoring will remain essential for their validation and appropriate implementation in research and clinical practice.

In the data-driven world of modern research, the process of behavior scoring—assigning quantitative values to observed behaviors—is a cornerstone for fields ranging from psychology and education to drug development. Traditionally, this has been a manual endeavor, reliant on human observers to record, classify, and analyze behavioral patterns. This manual process, however, is often fraught with challenges, including subjectivity, inconsistency, and significant time demands, which can compromise the reliability and scalability of research findings. The emergence of artificial intelligence (AI) and machine learning (ML) presents a powerful alternative: automated behavior scoring systems that promise enhanced precision, efficiency, and scalability.

This guide provides an objective comparison between manual and automated behavior scoring methodologies. Framed within the critical research context of reliability and validity, it examines how these approaches stack up against each other. For researchers and drug development professionals, the choice between manual and automated scoring is not merely a matter of convenience but one of scientific rigor. Reliability refers to the consistency of a measurement procedure, while validity is the extent to which a method measures what it intends to measure [18] [3]. These two pillars of measurement are paramount for ensuring that behavioral data yields trustworthy and actionable insights. This analysis synthesizes current experimental data and protocols to offer a clear-eyed view of the performance capabilities of both human-driven and AI-driven scoring systems.

Manual Behavior Scoring: Foundations and Workflows

Manual behavior scoring is characterized by direct human observation and interpretation of behaviors based on a predefined coding scheme or ethogram. The core principle is the application of human expertise to identify and categorize complex, and sometimes subtle, behavioral motifs.

Key Experimental Protocols

A rigorous manual scoring protocol typically involves several critical stages to maximize reliability and validity [19] [3].

- Operational Definition: Before any data collection begins, the target behavior must be defined in clear, observable, and measurable terms. For instance, rather than tracking a vague concept like "agitation," a researcher might define it as "rapid pacing covering more than two meters in a five-second interval."

- Observer Training: Multiple human coders undergo extensive training using practice videos or live sessions until they achieve a high level of agreement (typically ≥80% inter-rater reliability) on the operational definitions.

- Data Collection & Coding: Observers record data in real time or from video recordings, using the metrics specified in the coding scheme.

- Inter-Rater Reliability (IRR) Checks: Throughout the study, a portion of the data (e.g., 20-30%) is independently scored by all trained coders. The consistency of their scores is calculated using statistics like Cohen's κ for categorical data or Cronbach's α for quantitative ratings, providing a direct measure of the method's reliability [3].

- Validity Assessment: Researchers evaluate validity by comparing the manual scores to other established criteria (criterion validity) and ensuring the measurement covers all aspects of the construct being studied (content validity) [3].
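
The inter-rater reliability check described above can be sketched in a few lines. The example computes raw percent agreement and Cohen's κ for two coders; the function names and behavior labels are hypothetical, and the code handles only the two-rater, unweighted case.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Fraction of items on which the two raters assigned the same code."""
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters coding the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same non-empty set of items")
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's marginal frequencies.
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / n ** 2
    if expected == 1:
        return 1.0  # both raters used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten video segments.
coder_1 = ["groom", "groom", "groom", "groom", "rear", "rear", "rear", "rest", "rest", "rest"]
coder_2 = ["groom", "groom", "groom", "rear", "rear", "rear", "rest", "rest", "rest", "rest"]

print(percent_agreement(coder_1, coder_2), round(cohens_kappa(coder_1, coder_2), 2))
```

In this toy data the coders agree on 80% of segments, yet κ is only about 0.70 once chance agreement is removed, which is why a raw agreement threshold and a κ threshold are not interchangeable.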

The following workflow diagram summarizes the sequential and iterative nature of this manual process.

The AI Alternative: Automated Scoring with Machine Learning

Automated behavior scoring leverages ML models to identify and classify patterns in behavioral data, such as video, audio, or sensor feeds. This approach transforms raw, high-dimensional data into quantifiable metrics with minimal human intervention. The core distinction lies in its ability to learn complex patterns from data and apply this learning consistently at scale.

Key Experimental Protocols

The development and deployment of an AI-based scoring system follow a structured, data-centric pipeline, as evidenced by recent research in behavioral phenotyping and student classification [20] [21].

- Data Acquisition & Pre-processing: Large volumes of raw behavioral data (e.g., video recordings of animal models or human subjects) are collected. This data is then pre-processed, which may involve frame extraction, background subtraction, and normalization. Techniques like Singular Value Decomposition (SVD) can be used for outlier detection and dimensionality reduction, creating a cleaner dataset for training [20].

- Model Selection & Training: A machine learning model, such as a neural network, is selected. The model is "trained" on a labeled subset of the data, where the "correct" scores (often generated by human experts during the protocol phase) are provided. To avoid overfitting and find the optimal model parameters, advanced optimization techniques like Genetic Algorithms (GA) can be employed [20].

- Model Validation: The trained model's performance is tested on a separate, held-out dataset that it has never seen before. This critical step evaluates how well the model generalizes to new data. Performance is quantified using metrics like accuracy, precision, recall, and F1-score.

- Deployment & Scoring: The validated model is deployed to score new behavioral data automatically. The system outputs structured data (e.g., frequency counts, duration, classifications) for final analysis.

- Continuous Validation: Even after deployment, the model's outputs may be periodically checked against human scores to monitor for performance drift and ensure ongoing validity [22].
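
The validation step in the pipeline above relies on accuracy, precision, recall, and F1-score computed on held-out data. A minimal sketch of those four metrics for a single target behavior follows; the function name and labels are hypothetical, and human scores are treated as ground truth.

```python
def classification_metrics(human_labels, model_labels, positive):
    """Accuracy, precision, recall, and F1 for one target class.

    human_labels: expert scores for held-out samples (treated as ground truth).
    model_labels: model predictions for the same samples, in the same order.
    positive: the label of the behavior being evaluated.
    """
    pairs = list(zip(human_labels, model_labels))
    tp = sum(1 for h, m in pairs if h == positive and m == positive)
    fp = sum(1 for h, m in pairs if h != positive and m == positive)
    fn = sum(1 for h, m in pairs if h == positive and m != positive)
    correct = sum(1 for h, m in pairs if h == m)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical held-out video segments scored by an expert and by a model.
human = ["freeze"] * 4 + ["move"] * 6
model = ["freeze", "freeze", "freeze", "move", "move", "move", "move", "move", "freeze", "move"]
print(classification_metrics(human, model, positive="freeze"))
```

Reporting precision and recall alongside accuracy matters when the target behavior is rare, since a model that never predicts the behavior can still reach high accuracy.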

The workflow for an automated system is more linear after the initial training phase, though it requires a robust initial investment in data preparation.

Comparative Analysis: Quantitative Data and Performance

Direct comparisons between manual and automated methods reveal distinct performance trade-offs. The table below summarizes key quantitative metrics based on current research findings.

Table 1: Performance Comparison of Manual vs. Automated Behavior Scoring

| Metric | Manual Scoring | Automated Scoring | Key Findings & Context |
|---|---|---|---|
| Throughput/Time Efficiency | Limited by human speed; ~34% of time spent on actual tasks [23]. | Saves 2-3 hours daily per researcher; processes data continuously [23]. | Automation recovers time for analysis. Manual processes are a significant time drain [23]. |
| Consistency/Reliability | 60-70% consistency in follow-up tasks; inter-rater reliability (IRR) requires rigorous training to reach ~80% [23] [3]. | Up to 99% consistency in task execution; high internal consistency once validated [20] [23]. | Human judgment is inherently variable. AI systems perform repetitive tasks with near-perfect consistency [23]. |
| Accuracy & Validity | High potential validity when using expert coders, but can be compromised by subjective bias. | Can match or surpass human accuracy in classification tasks (e.g., superior accuracy reported for the behavior-based student classification system, SCS-B) [20]. | A study on AI-assisted systematic reviews found AI could not replace human reviewers entirely, highlighting potential validity gaps in complex judgments [22]. |
| Scalability | Poor; scaling requires training more personnel, leading to increased cost and variability. | Excellent; can analyze massive datasets with minimal additional marginal cost. | ML-driven systems like SCS-B handle extensive data with minimal processing time [20]. |
| Response Latency | Can be slow; average manual response times can be 42 hours [23]. | Near-instantaneous; can reduce response times from hours to minutes [23]. | Rapid automated scoring enables real-time feedback and intervention in experiments. |

The data show a clear trend: automation excels in efficiency, consistency, and scalability. However, the "Accuracy & Validity" row reveals a critical nuance. While one study found that a machine learning-based classifier yielded "superior classification accuracy" [20], another that directly compared AI with human reviewers concluded that a "complete replacement of human reviewers by AI tools is not yet possible," noting poor inter-rater reliability on complex tasks such as risk-of-bias assessments [22]. This suggests that any advantage of automated scoring is task-dependent.

The Scientist's Toolkit: Essential Reagents & Materials

Selecting the right tools is fundamental to implementing either scoring methodology. The following table details key solutions and their functions in the context of behavioral research.

Table 2: Essential Research Reagents and Solutions for Behavior Scoring

| Item Name | Function/Application | Relevance to Scoring Method |
|---|---|---|
| Structured Behavioral Ethogram | A predefined catalog that operationally defines all behaviors of interest. | Both (Foundation): Critical for ensuring human coders and AI models are trained to identify the same constructs. |
| High-Definition Video Recording System | Captures raw behavioral data for subsequent analysis. | Both (Foundation): Provides the primary data source for manual coding or for training and running computer vision models. |
| Inter-Rater Reliability (IRR) Software | Calculates agreement statistics (e.g., Cohen's κ, Cronbach's α) between coders. | Primarily Manual: The primary tool for quantifying and maintaining reliability in human-driven scoring [3]. |
| Data Annotation & Labeling Platform | Software that allows researchers to manually label video frames or data points for model training. | Primarily Automated: Creates the "ground truth" datasets required to supervise the training of machine learning models [20] [21]. |
| Machine Learning Model Architecture | The algorithm (e.g., Convolutional Neural Network) that learns to map raw data to behavioral scores. | Automated: The core "engine" of the automated scoring system. Genetic Algorithms can optimize these models [20]. |
| Singular Value Decomposition (SVD) Tool | A mathematical technique for data cleaning and dimensionality reduction. | Automated: Used in pre-processing to remove noise and simplify the data, improving model training efficiency and performance [20]. |

Integrated Discussion and Research Outlook

The evidence indicates that the choice between manual and automated behavior scoring is not a simple binary but a strategic decision. Automated systems offer transformative advantages in productivity, consistency, and the ability to manage large-scale datasets, making them ideal for high-throughput screening in drug development or analyzing extensive observational studies [20] [23]. Conversely, manual scoring retains its value in novel research areas where labeled datasets for training AI are scarce, or for complex, nuanced behaviors that currently challenge algorithmic interpretation [22].

The most promising path forward is a hybrid approach that leverages the strengths of both. In this model, human expertise is focused on the tasks where it is most irreplaceable: defining behavioral constructs, creating initial labeled datasets, and validating AI outputs. The automated system then handles the bulk of the repetitive scoring work, ensuring speed and reliability. This synergy is exemplified in modern research protocols that use human coders to establish ground truth, which then fuels an AI model that can consistently score the remaining data [20] [21].

Future directions in the field point toward increased integration of AI as a collaborative team member rather than a mere tool. Research is exploring ecological momentary assessment via mobile technology and the use of machine learning to identify subtle progress patterns that human observers might miss [19]. As these technologies mature and datasets grow, the reliability, validity, and scope of automated behavior scoring are poised to expand further, solidifying its role as an indispensable component of the researcher's toolkit.

In preclinical research, the accurate quantification of rodent behavior is foundational for studying neurological disorders and evaluating therapeutic efficacy. For decades, the field has relied on manual scoring systems like the Bederson and Garcia neurological deficit scores for stroke models, and the elevated plus maze, open field, and light-dark tests for anxiety research [24] [25]. These manual methods, while established, involve an observer directly rating an animal's behavior on defined ordinal scales, making the process susceptible to human subjectivity, time constraints, and inter-observer variability [24]. The emergence of automated, video-tracking systems like EthoVision XT represents a paradigm shift, offering a data-driven alternative that captures a vast array of behavioral parameters with high precision [24] [26] [27]. This guide objectively compares the performance of these manual versus automated approaches, providing researchers with experimental data to inform their methodological choices.

Quantitative Performance Comparison: Key Studies and Data

Direct comparisons in well-controlled experiments reveal critical differences in the sensitivity, reliability, and data output of manual versus automated scoring systems. The table below summarizes findings from key studies across different behavioral domains.

Table 1: Comparative Performance of Manual vs. Automated Behavioral Scoring

| Behavioral Domain & Test | Scoring Method | Key Performance Metrics | Study Findings | Reference |
|---|---|---|---|---|
| Stroke Model (MCAO Model) | Manual: Bederson Scale | Pre-stroke: 0; Post-stroke: 1.2 ± 0.8 | No statistically significant difference was found between pre- and post-stroke scores. | [24] |
| Stroke Model (MCAO Model) | Manual: Garcia Scale | Pre-stroke: 18 ± 1; Post-stroke: 14 ± 4 | No statistically significant difference was found between pre- and post-stroke scores. | [24] |
| Stroke Model (MCAO Model) | Automated: EthoVision XT | Parameters: Distance moved, velocity, rotation, zone frequency | Post-stroke data showed significant differences (p < 0.05) in multiple parameters. | [24] |
| Anxiety (Trait) | Single-Measure (SiM) | Correlation between different anxiety tests | Limited correlation between tests, poor capture of stable traits. | [26] |
| Anxiety (Trait) | Automated Summary Measure (SuM) | Correlation between different anxiety tests | Stronger inter-test correlations; better prediction of future stress responses. | [26] |
| Anxiety (Pharmacology) | Manual Behavioral Tests (EPM, OF, LD) | Sensitivity to detect anxiolytic drug effects | Only 2 out of 17 common test measures reliably detected effects. | [28] |
| Creative Cognition (Human) | Manual: AUT Scoring | Correlation with automated scoring (Elaboration) | Strong correlation (rho = 0.76, p < 0.001). | [29] |
| Creative Cognition (Human) | Automated: OCSAI System | Correlation with manual scoring (Originality) | Weaker but significant correlation (rho = 0.21, p < 0.001). | [29] |

Detailed Experimental Protocols and Methodologies

Protocol: Comparing Scoring Systems in a Rodent Stroke Model

This protocol is derived from a study directly comparing manual neurological scores with an automated open-field system [24].

- Animals: Male Sprague-Dawley rats.

- Stroke Model: Endothelin-1 (ET-1)-induced middle cerebral artery occlusion (MCAO).

- Behavioral Assessment Timeline: Assessments were performed pre-stroke and 24 hours post-stroke.

- Manual Scoring:

  - Bederson Scale: Scored from 0-3 based on forelimb flexion, resistance to lateral push, and circling behavior.

  - Garcia Scale: Scored from 3-18 based on spontaneous activity, symmetry of limb movement, forepaw outstretching, climbing, body proprioception, and response to vibrissae touch.

  - Procedure: Performed by trained lab personnel as part of their usual duties, blinded to the comparative nature of the study.

- Automated Scoring:

  - System: EthoVision XT video-tracking software.

  - Setup: Animals recorded in a Noldus Phenotyper cage for 2 hours.

  - Parameters: Distance moved (cm), velocity (cm/s), clockwise vs. counter-clockwise rotations, frequency of visits to defined zones (e.g., water spout, feeder), rearing frequency, and meander (degrees/cm).

- Infarct Validation: Infarct size was quantified via TTC staining to confirm stroke presence.
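
To show what parameters such as distance moved, velocity, and zone visit frequency mean computationally, the sketch below derives them from a sequence of tracked center-point coordinates. This is a generic illustration under simple assumptions (fixed frame rate, rectangular zone); it is not EthoVision XT's implementation or API, and all names are hypothetical.

```python
import math


def track_summary(xs, ys, frame_rate_hz):
    """Return (distance_cm, mean_velocity_cm_per_s) for one tracked animal.

    xs, ys: center-point coordinates in cm, one pair per video frame.
    frame_rate_hz: frames per second of the recording.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two tracked positions")
    # Sum the straight-line steps between consecutive frames.
    steps = [math.hypot(xs[i + 1] - xs[i], ys[i + 1] - ys[i]) for i in range(len(xs) - 1)]
    distance = sum(steps)
    duration_s = (len(xs) - 1) / frame_rate_hz
    return distance, distance / duration_s


def zone_entries(xs, ys, x_min, x_max, y_min, y_max):
    """Count transitions from outside to inside a rectangular zone.

    A track that starts inside the zone is counted as one entry.
    """
    entries = 0
    inside_previous = False
    for x, y in zip(xs, ys):
        inside = x_min <= x <= x_max and y_min <= y <= y_max
        if inside and not inside_previous:
            entries += 1
        inside_previous = inside
    return entries


# Hypothetical four-frame track sampled at 1 Hz.
xs, ys = [0.0, 3.0, 3.0, 0.0], [0.0, 0.0, 4.0, 4.0]
print(track_summary(xs, ys, frame_rate_hz=1.0))
print(zone_entries(xs, ys, x_min=2.0, x_max=5.0, y_min=-1.0, y_max=1.0))
```

Continuous measures like these are computed for every frame of a recording, which is one plausible reason they can separate groups that a 0-3 ordinal score cannot.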

This protocol outlines a novel approach to overcome the limitations of single-test anxiety assessment by using automated tracking and data synthesis [26].

- Animals: Rats and mice of both sexes (e.g., Wistar rats, C57BL/6J mice).

- Test Battery: A semi-randomized sequence of elevated plus-maze (EPM), open field (OF), and light-dark (LD) tests.

- Key Innovation: Each test is repeated three times over a 3-week period to capture behavior across multiple challenges.

- Automated Tracking: All tests are recorded and analyzed using automated software (e.g., EthoVision XT) to generate "Single Measures" (SiMs) like time spent in aversive zones.

- Data Synthesis:

  - Summary Measures (SuMs): Created by averaging the min-max scaled SiMs across the repeated trials of the same test type. This reduces situational noise.

  - Composite Measures (COMPs): Created by averaging SiMs or SuMs across different test types (e.g., combining EPM, OF, and LD data). This captures a common underlying trait.

- Validation: SuMs and COMPs were validated by their ability to better predict behavioral responses to subsequent acute stress and fear conditioning compared to single-measure approaches.
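
The data synthesis step above can be sketched as follows. The example assumes that min-max scaling is applied across animals within each trial before averaging, which the description does not specify; function names and values are hypothetical and not taken from [26].

```python
def min_max_scale(values):
    """Rescale values to the 0-1 range; a constant list maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0] * len(values)
    return [(v - low) / (high - low) for v in values]


def summary_measure(trials):
    """SuM per animal: mean of scaled SiMs across repeated trials of one test.

    trials: list of trials, each a list of SiM values in the same animal order.
    """
    scaled = [min_max_scale(trial) for trial in trials]
    n_animals = len(trials[0])
    return [sum(trial[i] for trial in scaled) / len(scaled) for i in range(n_animals)]


def composite_measure(summary_by_test):
    """COMP per animal: mean of SuMs across different test types."""
    per_test = list(summary_by_test.values())
    n_animals = len(per_test[0])
    return [sum(test[i] for test in per_test) / len(per_test) for i in range(n_animals)]


# Hypothetical time in the aversive zone (s) for three animals over three EPM trials.
epm_trials = [[10.0, 20.0, 30.0], [20.0, 20.0, 40.0], [0.0, 5.0, 10.0]]
epm_sum = summary_measure(epm_trials)
comp = composite_measure({"EPM": epm_sum, "OF": [0.2, 0.5, 0.6]})
print(epm_sum, comp)
```

Scaling before averaging keeps a test with a large numeric range from dominating the summary, so each trial contributes equally to the estimate of the underlying trait.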

Protocol: Unified Behavioral Scoring for Complex Phenotypes

This methodology integrates data from a battery of tests into a single score for a specific behavioral trait, maximizing data use and statistical power [27].

- Concept: Similar to clinical unified rating scales for diseases like Parkinson's.

- Procedure:

  - Test Battery Administration: Animals undergo a series of tests probing a specific trait (e.g., anxiety: elevated zero maze, light/dark box; sociability: 3-chamber test, social odor discrimination).

  - Automated Data Collection: All tests are video-recorded and analyzed with tracking software (EthoVision XT) to generate multiple outcome measures.

  - Data Normalization: Results for every outcome measure are normalized.

  - Score Generation: Normalized scores from tests related to a single trait (e.g., anxiety) are combined to give each animal a single "unified score" for that trait.

- Advantage: This method can reveal clear phenotypic differences between strains or sexes that may be ambiguous or non-significant when looking at individual test measures alone.
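
A unified score of this kind can be sketched as below. The description does not say how measures are normalized or combined, so this example assumes z-score normalization, a sign flip for measures where a lower value indicates more of the trait, and a simple mean; these choices and all names are assumptions, not the method of [27].

```python
from statistics import mean, stdev


def z_scores(values):
    """Standardize values to mean 0 and sample SD 1 (all zeros if constant)."""
    center, spread = mean(values), stdev(values)
    if spread == 0:
        return [0.0] * len(values)
    return [(v - center) / spread for v in values]


def unified_scores(measures, higher_means_more_trait):
    """Combine several outcome measures into one score per animal.

    measures: dict of measure name -> per-animal values (same animal order).
    higher_means_more_trait: dict of measure name -> bool; measures marked
        False are sign-flipped so every measure points in the same direction.
    """
    aligned = []
    for name, values in measures.items():
        sign = 1.0 if higher_means_more_trait[name] else -1.0
        aligned.append([sign * z for z in z_scores(values)])
    n_animals = len(aligned[0])
    return [mean(column[i] for column in aligned) for i in range(n_animals)]


# Hypothetical anxiety-related measures for three animals.
measures = {
    "open_area_time_s": [10.0, 20.0, 30.0],    # less time suggests more anxiety
    "latency_to_light_s": [30.0, 20.0, 10.0],  # longer latency suggests more anxiety
}
direction = {"open_area_time_s": False, "latency_to_light_s": True}
print(unified_scores(measures, direction))
```

Aligning the direction of each measure before averaging is essential; without it, measures that move in opposite directions for the same trait would cancel out.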

Workflow and Logical Diagrams

The following diagram illustrates the core conceptual shift from traditional single-measure assessment to the more powerful integrated scoring approaches.

The Scientist's Toolkit: Essential Research Reagents and Solutions

This table details key software, tools, and methodological approaches essential for implementing the scoring systems discussed in this guide.

Table 2: Key Research Reagents and Solutions for Behavioral Scoring

| Item Name | Type | Primary Function in Research | Application Context |
|---|---|---|---|
| EthoVision XT | Automated Video-Tracking Software | Records and analyzes animal movement and behavior in real-time; extracts parameters like distance, velocity, and zone visits. | Open field, elevated plus/zero maze, stroke model deficit quantification, social interaction tests [24] [26] [27]. |
| Bederson Scale | Manual Behavioral Scoring Protocol | Provides a quick, standardized ordinal score (0-3) for gross neurological deficits in rodent stroke models. | Primary outcome measure in MCAO and other cerebral ischemia models [24]. |
| Garcia Scale | Manual Behavioral Scoring Protocol | A multi-parameter score (3-18) for a more detailed assessment of sensory, motor, and reflex functions post-stroke. | Secondary detailed assessment in rodent stroke models [24]. |
| Summary Measures (SuMs) | Data Analysis Methodology | Averages scaled behavioral variables across repeated tests to reduce noise and better capture stable behavioral traits. | Measuring trait anxiety, longitudinal study designs, improving test reliability [26]. |
| Unified Behavioral Scoring | Data Analysis Methodology | Combines normalized outcome measures from a battery of tests into a single score for a specific behavioral trait. | Detecting subtle phenotypic differences in complex disorders, strain/sex comparisons [27]. |
| Somnolyzer 24x7 | Automated Polysomnography Scorer | Classifies sleep stages and identifies respiratory events using an AI classifier (bidirectional LSTM RNN). | Validation in sleep research (shown here as an example of validated automation) [30]. |

From Theory to Lab Bench: Implementing Manual and Automated Scoring Systems

In scientific research, particularly in fields like drug development and behavioral analysis, the reliability and validity of manually scored data are paramount. Manual scoring involves human raters using structured scales to measure behaviors, physiological signals, or therapeutic outcomes. While artificial intelligence (AI) and automated systems offer compelling alternatives, manual assessment remains the gold standard against which these technologies are validated [15]. The integrity of this manual reference standard directly influences the perceived accuracy and ultimate adoption of automated solutions. This guide examines best practices for developing robust scoring scales and training raters effectively, providing a foundational framework for research comparing manual versus automated scoring reliability.

Foundational Principles of Scale Development

The Three-Phase, Nine-Step Process

Developing a rigorous measurement scale is a methodical process that ensures the tool accurately captures the complex, latent constructs it is designed to measure. This process can be organized into three overarching phases encompassing nine specific steps [31]:

Phase 1: Item Development

This initial phase focuses on generating and conceptually refining the individual items that will constitute the scale.

- Step 1: Identification of the Domain(s) and Item Generation: Clearly articulate the concept, attribute, or unobserved behavior that is the target of measurement. A well-defined domain provides working knowledge of the phenomenon, specifies its boundaries, and eases subsequent steps. Generate items using both:

  - Deductive methods (e.g., literature review, analysis of existing scales)

  - Inductive methods (e.g., focus groups, interviews, direct observation) [31]

- Step 2: Consideration of Content Validity: Assess whether the items adequately cover the entire domain and are relevant to the target population. This often involves review by a panel of subject matter experts [31].

Phase 2: Scale Construction

This phase transforms the initial item pool into a coherent measurement instrument.

- Step 3: Pre-testing Questions: Administer the draft items to a small, representative sample to identify problems with understanding, wording, or response formats [31].

- Step 4: Sampling and Survey Administration: Administer the pre-tested scale to a larger, well-defined sample whose size is appropriate for the planned statistical analyses [31].

- Step 5: Item Reduction: Systematically reduce the number of items to create a parsimonious scale. The initial item pool should be at least twice as long as the desired final scale [31].

- Step 6: Extraction of Latent Factors: Use statistical techniques like Factor Analysis to identify the underlying dimensions (factors) that the scale captures [31].

Phase 3: Scale Evaluation

The final phase involves rigorously testing the scale's psychometric properties.

- Step 7: Tests of Dimensionality: Confirm the factor structure identified in Step 6 using confirmatory statistical methods [31].

- Step 8: Tests of Reliability: Evaluate the scale's consistency, including internal consistency (e.g., Cronbach's alpha) and inter-rater reliability (e.g., Intra-class Correlation Coefficient) [31].

- Step 9: Tests of Validity: Establish that the scale measures what it claims to measure by assessing construct validity, criterion validity, and other relevant forms of validity [31].
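
The internal-consistency check named in Step 8 can be sketched in a few lines. The example below is a minimal illustration of Cronbach's alpha using the standard formula based on item variances and total-score variance. The function name `cronbach_alpha` and the ratings matrix are hypothetical and are not taken from the cited work [31].

```python
import numpy as np

def cronbach_alpha(scores):
    """Cronbach's alpha for a (subjects x items) score matrix."""
    scores = np.asarray(scores, dtype=float)
    n_items = scores.shape[1]
    # Sample variance of each item across subjects
    item_variances = scores.var(axis=0, ddof=1)
    # Sample variance of each subject's summed score
    total_variance = scores.sum(axis=1).var(ddof=1)
    return (n_items / (n_items - 1)) * (1 - item_variances.sum() / total_variance)

# Hypothetical data: 5 subjects rated on 3 items of a draft scale
example_scores = np.array([
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [3, 3, 4],
    [5, 5, 5],
])
alpha = cronbach_alpha(example_scores)
print(f"Cronbach's alpha = {alpha:.3f}")
```

Values closer to 1 indicate that the items vary together. The threshold that counts as acceptable depends on the field and the purpose of the scale.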

Visualizing the Scale Development Workflow

[Figure: diagram of the scale development workflow, illustrating the sequential and iterative nature of the process.]

Best Practices in Rater Training Methodologies

Effective rater training is critical for minimizing subjective biases and ensuring consistent application of a scoring scale. Several evidence-based training paradigms have been developed.

Core Rater Training Paradigms

Rater Error Training (RET): This traditional approach trains raters to recognize and avoid common cognitive biases that decrease rating accuracy [32]. Key biases include:

- Distributional Errors: Severity (harsh ratings), leniency (generous ratings), and central tendency (clustering ratings in the middle) [32].

- Halo Effect: Allowing a strong impression in one dimension to influence ratings on other, unrelated dimensions [32].

- Similar-to-Me Effect: Judging those perceived as similar to the rater more favorably [32].

- Contrast Effect: Evaluating individuals relative to others rather than against job requirements or absolute standards [32].

- First-Impression Error: Allowing an initial judgment to distort subsequent information [32].

RET is most effective when participants engage actively, apply principles to real or simulated situations, and receive feedback on their performance [32].

Frame-of-Reference (FOR) Training: This more advanced method focuses on aligning raters' "mental models" with a common performance theory. Instead of just focusing on the rating process, FOR training provides a content-oriented approach by training raters to maintain specific standards of performance across job dimensions [32]. A typical FOR training protocol involves [32]:

- Informing participants that performance consists of multiple dimensions.

- Instructing them to evaluate performance on separate, specific dimensions.

- Reviewing performance dimensions using tools like Behaviorally Anchored Rating Scales (BARS).

- Having participants practice rating using video vignettes or standardized scenarios.

- Providing detailed feedback on practice ratings to align raters with expert standards.
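
The feedback step in the protocol above can be made concrete with a simple comparison of practice ratings against expert reference ratings. The sketch below is only one possible way to summarize such feedback. The function `rating_feedback`, the dimension names, and all scores are hypothetical and are not part of the cited protocol [32].

```python
from statistics import mean

def rating_feedback(rater_scores, expert_scores):
    """Per-dimension deviation of a trainee's ratings from expert ratings.

    Both arguments map a dimension name to a list of scores for the same
    practice vignettes, in the same order.
    """
    feedback = {}
    for dimension, expert in expert_scores.items():
        diffs = [r - e for r, e in zip(rater_scores[dimension], expert)]
        feedback[dimension] = {
            # Signed bias: positive means the trainee scores higher than the expert
            "bias": mean(diffs),
            # Mean absolute error: overall distance from the expert standard
            "mean_abs_error": mean(abs(d) for d in diffs),
        }
    return feedback

# Hypothetical practice ratings on four video vignettes
expert = {"grooming": [3, 4, 2, 5], "rearing": [1, 2, 3, 4]}
trainee = {"grooming": [4, 5, 3, 5], "rearing": [1, 2, 3, 4]}
report = rating_feedback(trainee, expert)
for dimension, stats in report.items():
    print(dimension, stats)
```

A consistently positive or negative bias on one dimension may point to a leniency or severity tendency on that dimension, which the trainer can then address directly.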

Behavioral Observation Training (BOT): This training focuses on improving the rater's observational skills and memory recall for specific behavioral incidents, ensuring that ratings are based on accurate observations rather than general impressions [32].

Visualizing the Rater Training Decision Process

Selecting the right training approach depends on the research context and the nature of the scale.

[Figure: flowchart of the rater training decision process.]

Experimental Protocols for Validation

Case Study: Validating a Manual Actigraphy Scoring Protocol

A 2024 study on scoring actigraphy (sleep-wake monitoring) data without sleep diaries provides an excellent template for a rigorous manual scoring validation protocol [33].

Objective: To develop a detailed actigraphy scoring protocol promoting internal consistency and replicability for cases without sleep diary data and to perform an inter-rater reliability analysis [33].

Methods:

- Sample: 159 nights of actigraphy data from a random subsample of 25 veterans with Gulf War Illness [33].

- Independent Scoring: Data were independently and manually scored by multiple raters using the standardized protocol [33].

- Parameters Measured:

  - Start and end of rest intervals

  - Derived sleep parameters: Time in Bed (TIB), Total Sleep Time (TST), Sleep Efficiency (SE) [33].

- Reliability Analysis: Inter-rater reliability was evaluated using Intra-class Correlation (ICC) for absolute agreement. Mean differences between scorers were also calculated [33].

Results:

- ICC demonstrated excellent agreement between manual scorers for:

  - Rest interval start (ICC = 0.98) and end times (ICC = 0.99)

  - TIB (ICC = 0.94), TST (ICC = 0.98), and SE (ICC = 0.97) [33].

- No clinically important differences (defined as greater than 15 minutes) were found between scorers for the start of rest (average difference: 6 ± 28 min) or the end of rest (average difference: 2 ± 23 min) [33].

Conclusion: The study demonstrated that a detailed, standardized scoring protocol could yield excellent inter-rater reliability, even without supplementary diary data. The protocol serves as a reproducible guideline for manual scoring, enhancing internal consistency for studies involving clinical populations [33].
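
To illustrate the kind of reliability analysis described in this case study, the sketch below computes an absolute-agreement ICC together with the mean difference between two scorers. The source does not state which ICC model was used, so the two-way, single-measure form (often written ICC(A,1)) is an assumption here. The function name and the rest-interval data are hypothetical and are not values from the study [33].

```python
import numpy as np

def icc_absolute_agreement(ratings):
    """ICC(A,1): two-way model, single rater, absolute agreement.

    ratings: array of shape (n_targets, k_raters).
    """
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand_mean = x.mean()
    # Sums of squares for targets (rows), raters (columns), and residual error
    ss_rows = k * ((x.mean(axis=1) - grand_mean) ** 2).sum()
    ss_cols = n * ((x.mean(axis=0) - grand_mean) ** 2).sum()
    ss_error = ((x - grand_mean) ** 2).sum() - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_error / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)

# Hypothetical rest-interval start times (minutes after 20:00) for six nights,
# each scored independently by two raters
starts = np.array([
    [125.0, 128.0],
    [190.0, 186.0],
    [95.0, 101.0],
    [240.0, 238.0],
    [160.0, 165.0],
    [210.0, 207.0],
])
icc = icc_absolute_agreement(starts)
differences = starts[:, 0] - starts[:, 1]
mean_difference = differences.mean()
sd_difference = differences.std(ddof=1)
print(f"ICC(A,1) = {icc:.3f}")
print(f"Mean difference = {mean_difference:.1f} ± {sd_difference:.1f} min")
```

Unlike a consistency ICC, the absolute-agreement form penalizes a systematic offset between raters, which is why a constant shift between two scorers lowers the coefficient even when their rankings match perfectly.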

Experimental Data: Manual vs. Automated Scoring Reliability

The table below summarizes key quantitative findings from recent studies comparing manual and automated scoring approaches, highlighting the performance metrics that establish manual scoring as a benchmark.

Table 1: Comparison of Manual and Automated Scoring Performance in Recent Studies

| Field of Application | Method | Key Performance Metrics | Agreement/Reliability Statistics | Reference |

|---|---|---|---|---|

| Diabetic Retinopathy Screening | Manual Consensus (MC) Grading | Reference Standard | Established benchmark for comparison | [15] |

| Diabetic Retinopathy Screening | Automated AI Grading (EyeArt) | Sensitivity: 94.0% (any DR), 89.7% (referable DR); Specificity: 72.6% (any DR), 83.0% (referable DR); Diagnostic Accuracy (AUC): 83.5% (any DR), 86.3% (referable DR) | Moderate agreement with manual (QWK) | [15] |

| Actigraphy Scoring (Sleep) | Detailed Manual Protocol | Reference Standard for rest intervals and sleep parameters | Excellent Inter-rater Reliability (ICC: 0.94 - 0.99) | [33] |

| Drug Target Identification | Traditional Computational Methods | Lower predictive accuracy, higher computational inefficiency | Suboptimal for novel chemical entities | [34] |

| Drug Target Identification | AI-Driven Framework (optSAE+HSAPSO) | Accuracy: 95.5%; Computational Complexity: 0.010 s/sample; Stability: ±0.003 | Superior predictive reliability vs. traditional methods | [34] |
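
Sensitivity and specificity figures such as those in the table are derived by comparing automated calls against the manual reference standard. The following sketch shows that calculation for binary labels. The function name and the example labels are hypothetical and do not reproduce any data from the cited studies.

```python
def sensitivity_specificity(reference, predicted):
    """Sensitivity and specificity of binary predictions against a reference.

    reference, predicted: sequences of 0/1 labels of equal length, where the
    reference is the manual (human) standard.
    """
    pairs = list(zip(reference, predicted))
    tp = sum(1 for r, p in pairs if r == 1 and p == 1)
    fn = sum(1 for r, p in pairs if r == 1 and p == 0)
    tn = sum(1 for r, p in pairs if r == 0 and p == 0)
    fp = sum(1 for r, p in pairs if r == 0 and p == 1)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("reference must contain both positive and negative cases")
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical example: manual consensus labels vs. automated labels
manual_labels = [1, 1, 1, 0, 0, 0, 0, 1]
automated_labels = [1, 1, 0, 0, 0, 1, 0, 1]
sensitivity, specificity = sensitivity_specificity(manual_labels, automated_labels)
print(f"Sensitivity = {sensitivity:.2f}, Specificity = {specificity:.2f}")
```

Because both quantities are defined relative to the reference labels, any error in the manual standard carries over directly into the reported performance of the automated method.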

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 2: Key Materials and Solutions for Scoring Scale Development and Validation Research

| Tool/Reagent | Primary Function | Application Context |

|---|---|---|

| Behaviorally Anchored Rating Scales (BARS) | Performance evaluation tool that uses specific, observable behaviors as anchors for different rating scale points. Enhances fairness and reduces subjectivity [35]. | Used across employee life cycle (hiring, performance reviews); adaptable for research subject behavior rating [35]. |

| Rater Training Modules (RET, FOR, BOT) | Standardized training packages to reduce rater biases, align rating standards, and improve observational skills [32]. | Critical for preparing research staff in multi-rater studies to ensure consistent data collection and high inter-rater reliability [32]. |

| Intra-class Correlation Coefficient (ICC) | Statistical measure used to quantify the degree of agreement or consistency among two or more raters for continuous data. | The gold standard statistic for reporting inter-rater reliability in manual scoring validation studies [33]. |

| Quadratic Weighted Kappa (QWK) | A metric for assessing agreement between two raters when the ratings are on an ordinal scale, and disagreements of different magnitudes are weighted differently. | Commonly used in medical imaging studies (e.g., diabetic retinopathy grading) to measure agreement against a reference standard [15]. |

| Standardized Patient Vignettes / Recorded Scenarios | Training and calibration tools featuring simulated or real patient interactions, performance examples, or data segments (e.g., video, actigraphy data). | Used in Frame-of-Reference training and for periodically recalibrating raters to prevent "rater drift" over the course of a study [32] [33]. |

| Statistical Software (R, with psychometric packages) | Open-source environment for conducting essential scale development analyses (Factor Analysis, ICC, Cronbach's Alpha, etc.) [36]. | Used throughout the scale development and evaluation phases for item reduction, dimensionality analysis, and reliability testing [36] [31]. |
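
As an illustration of the Quadratic Weighted Kappa listed in the table, the sketch below implements the usual definition: observed and chance-expected disagreement, each weighted by the squared distance between categories. The function name and the grades are hypothetical, and categories are assumed to be coded as integers from 0 to `n_categories - 1`.

```python
import numpy as np

def quadratic_weighted_kappa(rater_a, rater_b, n_categories):
    """Quadratic weighted kappa for two raters on an ordinal scale."""
    a = np.asarray(rater_a, dtype=int)
    b = np.asarray(rater_b, dtype=int)
    # Observed confusion matrix between the two raters
    observed = np.zeros((n_categories, n_categories))
    for i, j in zip(a, b):
        observed[i, j] += 1
    # Disagreement weights grow with the squared distance between categories
    idx = np.arange(n_categories)
    weights = (idx[:, None] - idx[None, :]) ** 2 / (n_categories - 1) ** 2
    # Expected matrix under independence of the two raters' marginals
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / observed.sum()
    return 1.0 - (weights * observed).sum() / (weights * expected).sum()

# Hypothetical severity grades (0-4) from a manual grader and an automated system
manual_grades = [0, 1, 2, 3, 4, 2, 1, 0]
automated_grades = [0, 1, 2, 3, 3, 2, 2, 0]
qwk = quadratic_weighted_kappa(manual_grades, automated_grades, n_categories=5)
print(f"QWK = {qwk:.3f}")
```

In practice, an established implementation such as scikit-learn's `cohen_kappa_score` with `weights="quadratic"` may be preferable to a hand-written version.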

Manual scoring, when supported by rigorously developed scales and comprehensive rater training, remains an indispensable and highly reliable methodology in scientific research. The disciplined application of the outlined best practices—from the structured phases of scale development to the implementation of evidence-based rater training programs like FOR and RET—establishes a robust foundation of data integrity.

This foundation is crucial not only for research relying on human judgment but also for the validation of emerging automated systems. As AI and automated grading tools evolve [15] [34] [37], their development and performance benchmarks are intrinsically linked to the quality of the manual reference standards against which they are measured. Therefore, investing in the refinement of manual scoring protocols is not a legacy practice but a critical enabler of technological progress, ensuring that automated solutions are built upon a bedrock of reliable and valid human assessment.

In the realm of scientific research, particularly in behavioral scoring for drug development, the methodology for evaluating complex traits profoundly impacts the validity, reproducibility, and translational relevance of findings. Unified scoring systems represent an advanced paradigm designed to synthesize multiple individual outcome measures into a single, composite score for each behavioral trait under investigation. This approach stands in stark contrast to traditional methods that often rely on single, isolated tests to represent complex, multifaceted systems [38]. The core premise of unified scoring is to maximize the utility of all generated data while simultaneously reducing the incidence of statistical errors that frequently plague research involving multiple comparisons [38].
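
The idea of a unified score can be sketched as follows. The source describes combining normalized outcome measures into a single score per trait, but the normalization method is not specified here. Z-scoring and simple averaging are therefore assumptions made for illustration, and the function name, measures, and values are hypothetical.

```python
import numpy as np

def unified_score(measures, directions):
    """Combine several outcome measures into one composite score per animal.

    measures: array of shape (n_animals, n_measures).
    directions: +1 if a higher value means more of the trait, -1 if less.
    """
    x = np.asarray(measures, dtype=float)
    d = np.asarray(directions, dtype=float)
    # Z-score each measure across animals so that units become comparable
    z = (x - x.mean(axis=0)) / x.std(axis=0, ddof=1)
    # Align directions, then average across measures
    return (z * d).mean(axis=1)

# Hypothetical anxiety-related battery for four animals:
# columns = open-arm time (s), latency to enter center (s), freezing bouts
battery = np.array([
    [120.0, 10.0, 2.0],
    [60.0, 30.0, 5.0],
    [90.0, 20.0, 3.0],
    [30.0, 40.0, 8.0],
])
# Less open-arm time is taken to indicate more of the trait, hence -1
scores = unified_score(battery, directions=[-1, 1, 1])
print(np.round(scores, 2))
```

Group comparisons can then be run once on the composite score rather than separately on each measure, which reduces the number of statistical tests performed per trait.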

The comparison between manual and automated behavior scoring reliability is not merely a technical consideration but a foundational aspect of rigorous scientific practice. Manual scoring, while allowing for nuanced human judgment, is inherently resource-intensive and prone to subjective bias, limiting its scalability [39]. Conversely, automated scoring, powered by advances in Large Language Models (LLMs) and artificial intelligence, offers consistency and efficiency but requires careful validation to ensure it captures the complexity of biological phenomena [39]. This guide provides an objective comparison of these methodologies within the context of preclinical behavioral research and drug development, supported by experimental data and detailed protocols to inform researchers, scientists, and professionals in the field.

Experimental Comparison: Manual vs. Automated Scoring Protocols

Quantitative Performance Metrics

Table 1: Comparative Performance of Manual vs. Automated Scoring Systems

| Performance Metric | Manual Scoring | Automated Scoring | Experimental Context |

|---|---|---|---|

| Time Efficiency | Representatives spend 20-30% of work hours on repetitive administrative tasks [23] | Saves 2-3 hours daily per representative; 15-20% productivity gain [23] | Sales automation analysis; comparable time savings projected for research scoring |

| Consistency Rate | 60-70% follow-up consistency [23] | 99% consistency in follow-up and data accuracy [23] | Quality assurance testing; directly applicable to behavioral observation consistency |

| Data Accuracy | Prone to subjective bias and human error [39] | Approaches 99% data accuracy [23] | Educational assessment scoring; LLMs achieved high performance replicating expert ratings [39] |

| Statistical Error Risk | Higher probability of Type I errors with multiple testing [38] | Reduced statistical errors through standardized application [38] | Preclinical behavioral research using unified scoring |

| Scalability | Limited by human resources; time-intensive [39] | Highly scalable; handles large datasets efficiently [39] | Educational research with LLM-based scoring |

| Inter-rater Reliability | Often requires reconciliation; Fleiss' kappa as low as 0.047 [40] | Standardized application improves agreement to Fleiss' kappa 0.176 [40] | Drug-drug interaction severity rating consistency study |