Optimizing Motion Index Thresholds for Accurate Rodent Freezing Detection in Behavioral Neuroscience

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on optimizing motion index thresholds for automated rodent freezing detection, a critical measure in fear conditioning and memory studies. It covers the foundational principles of Pavlovian fear conditioning and the necessity for automated scoring to replace tedious and potentially biased manual methods. The content details methodological approaches for establishing and applying optimal thresholds using video-based and inertial measurement systems, alongside practical troubleshooting for common scoring inaccuracies. Furthermore, it outlines rigorous validation protocols to ensure system reliability and offers a comparative analysis of different detection technologies. The synthesis of this information aims to empower scientists to generate more precise, reproducible, and high-throughput behavioral data for pharmacological and genetic screening.

The Critical Role of Freezing Detection in Behavioral Neuroscience and Drug Discovery

Pavlovian Fear Conditioning as a Leading Model for Learning, Memory, and Pathological Fear

FAQs: Pavlovian Fear Conditioning & Freezing Detection

Q1: What are the core reasons Pavlovian fear conditioning is a leading model in research?

Pavlovian fear conditioning is a leading model due to several key strengths [1]:

- Robustness and Efficiency: Conditioning is robust in rats and mice and can occur after a single training trial. Training sessions are typically short (3-10 minutes) [1].

- Well-Defined Neurobiology: The neural circuits, particularly the roles of the hippocampus in contextual fear and the amygdala in fear memory, have been extensively mapped [1] [2].

- Translational Utility: The paradigm is a primary model for studying mechanisms of exposure therapy and the biological underpinnings of anxiety and trauma-related disorders, such as PTSD [2].

- Tractable Memory Phases: The learning episode is punctate, and the memory is long-lasting, allowing for high-temporal-resolution study of different memory phases [1].

Q2: My motion index threshold does not seem to accurately detect freezing. How can I optimize it?

Optimizing the threshold is crucial and depends on your specific experimental setup [3]. The key is the relationship between motion sensitivity and the detection threshold [3]:

- Sensitivity can be thought of as the amount of change in the field of view that qualifies as potential motion.

- Threshold is the amount of that potential motion that must accumulate before the system registers movement. Periods that stay below it count toward freezing/immobility [3].

A practical approach is as follows [3]:

- Calibrate to Your Setup: There is no universal setting; configuration must be based on what your specific camera sees.

- Find a Balance: With very low sensitivity and a very high threshold, movement may never register, so nearly everything is scored as freezing. With very high sensitivity and a very low threshold, the slightest change, even noise, registers as movement.

- Test Systematically: Adjust settings incrementally and validate the automated scores against manual scoring for a subset of your data to ensure accuracy [1].
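The sensitivity/threshold interplay in Q2 can be illustrated as a two-stage frame-differencing rule. This is a minimal sketch that assumes grayscale frames stored as nested lists of 0-255 intensities. The names `sensitivity`, `threshold`, and `pixel_cutoff`, and the linear mapping from a 1-100 sensitivity to a per-pixel cutoff, are hypothetical illustrations rather than the parameters of any particular package. A frame pair whose changed-pixel count exceeds the threshold is classified as movement. Frame pairs at or below the threshold are candidate immobility.

```python
from typing import List

# Grayscale frame as rows of 0-255 intensities (assumed input format).
Frame = List[List[int]]


def pixel_cutoff(sensitivity: int) -> int:
    """Map a hypothetical 1-100 sensitivity to a per-pixel intensity cutoff.

    Higher sensitivity -> a smaller intensity change counts as potential motion.
    """
    if not 1 <= sensitivity <= 100:
        raise ValueError("sensitivity must be between 1 and 100")
    return 101 - sensitivity  # 100 -> cutoff 1, 1 -> cutoff 100


def changed_pixels(prev: Frame, curr: Frame, sensitivity: int) -> int:
    """Count pixels whose absolute change meets or exceeds the cutoff."""
    cutoff = pixel_cutoff(sensitivity)
    return sum(
        1
        for row_prev, row_curr in zip(prev, curr)
        for a, b in zip(row_prev, row_curr)
        if abs(a - b) >= cutoff
    )


def is_moving(prev: Frame, curr: Frame, sensitivity: int, threshold: int) -> bool:
    """Movement if changed pixels exceed the threshold; otherwise candidate immobility."""
    return changed_pixels(prev, curr, sensitivity) > threshold
```

Raising `sensitivity` lowers the per-pixel cutoff, so more pixels count as changed. Raising `threshold` requires more changed pixels before movement is registered. Each combination still needs validation against manual scores.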

Q3: What are common rodent models of impaired fear extinction used in drug development?

Deficient fear extinction is a robust clinical endophenotype for anxiety and trauma-related disorders, and several rodent models have been developed to study it [2]. These are built around:

- Experimentally Induced Neural Disruptions: Targeting extinction-related circuits like the amygdala and medial prefrontal cortex [2].

- Genetic Modifications: Using spontaneously arising or laboratory-induced genetic variants to understand susceptibilities [2].

- Environmental Insults: Exposing animals to stress, drugs of abuse, or unhealthy diets to model extinction deficits linked to lifestyle factors [2].

Q4: Are there ecological validity concerns with standard fear conditioning protocols?

Yes, recent research highlights important ecological considerations. One study found that rats foraging in a naturalistic arena failed to exhibit conditioned fear to a tone that had been paired with a shock. In contrast, animals that encountered a shock paired with a realistic predatory threat (a looming artificial owl) instantly fled to safety upon hearing the tone for the first time. This suggests that in ecologically relevant environments, survival may be guided more by nonassociative processes or learning about realistic threat agents than by the standard associative fear processes observed in small, artificial chambers [4].

Troubleshooting Guides

Problem: Inaccurate Freezing Detection

Issue: The automated system is over-scoring (detecting freezing when the animal is moving) or under-scoring (missing genuine freezing bouts) behavior.

| Troubleshooting Step | Action and Rationale |
|---|---|
| 1. Validate Against Manual Scoring | Manually score a subset of videos and compare results to the automated system. This is the essential first step to confirm a problem exists and quantify its severity [1]. |
| 2. Adjust Sensitivity & Threshold | If the system is over-scoring freezing (missing real movement), slightly increase the sensitivity or decrease the threshold so that small movements register. If it is under-scoring freezing, do the opposite. Change only one parameter at a time to isolate its effect [3]. |
| 3. Check Environmental Consistency | Ensure lighting (use consistent near-infrared lighting if applicable), camera position, and background are identical across all recordings, as changes can drastically affect motion detection [1]. |
| 4. Review Raw Video | Inspect the video files for periods of reported high and low freezing. Look for artifacts such as camera shake, sudden light changes, or reflections that could be misinterpreted as motion. |

Problem: High Variability in Freezing Data Between Subjects

Issue: Freezing scores show unusually high variance across animals within the same experimental group, complicating data interpretation.

| Troubleshooting Step | Action and Rationale |
|---|---|
| 1. Standardize Handling & Habituation | Ensure all animals receive identical handling and a consistent habituation period to the testing chamber before the experiment begins. |
| 2. Verify Stimulus Consistency | Check that all auditory (tone frequency, volume), visual (light), and shock (current, duration) stimuli are calibrated and delivered consistently across all chambers in a multi-chamber setup. |
| 3. Control for Baseline Activity | Analyze baseline activity levels prior to CS/US presentation. High variability in baseline activity can confound the measurement of conditioned freezing. |
| 4. Check Equipment Logs | Review system logs for any timing errors or dropped stimuli during presentations, as these can lead to failed conditioning and thus high variability [1]. |

Problem: Failure to Replicate Established Fear Conditioning or Extinction Protocol

Issue: Animals are not showing the expected levels of conditioned freezing or are failing to extinguish the fear memory.

| Troubleshooting Step | Action and Rationale |
|---|---|
| 1. Re-confirm Contingency | Double-check the experimental design to ensure the CS and US are paired with the correct temporal contiguity. A misplaced delay can prevent robust conditioning. |
| 2. Review Animal Model | If using a genetically modified model, reconfirm that its baseline fear and extinction phenotypes are as reported in the literature, as background strain and housing conditions can influence behavior [2]. |
| 3. Sanity Check Shock Reactivity | Ensure the US (footshock) is functioning correctly and elicits a clear startle or flinch response (UR). An insufficient US intensity will not support conditioning. |
| 4. Assess Extinction Protocol | For extinction studies, confirm that a sufficient number of CS-only trials are being presented in the correct context (e.g., distinct from the training context for renewal studies) [2]. |

Quantitative Data Tables

Table 1: Validation Metrics for an Automated Freezing Detection System (VideoFreeze) [1]

| Metric | Value/Outcome | Importance |
|---|---|---|
| Correlation with Human Scoring | Very high correlation reported. | Ensures the automated measure reflects the ground-truth behavior. |
| Linear Fit (Human vs. Automated) | Slope of ~1, intercept of ~0. | Critical for group mean scores to be nearly identical to human scores. |
| Detection Range | Accurate at very low and very high freezing levels. | Prevents floor or ceiling effects in data. |
| Stimulus Presentation | Millisecond precision timing. | Essential for accurate CS-US pairing and measuring reaction times. |

Table 2: Motion Detection Parameter Interplay [3]

| Sensitivity Setting | Threshold Setting | Likely Outcome |
|---|---|---|
| Low (e.g., 1) | High (e.g., 100) | Movement rarely registers; over-scores freezing because small movements are counted as immobility. |
| High (e.g., 100) | Low (e.g., 1) | The slightest change registers as movement; under-scores freezing because noise breaks up true freezing bouts. |
| Balanced Low | Balanced Low | May be susceptible to false motion detections from minor noise, fragmenting freezing bouts. |
| Balanced High | Balanced High | May miss shorter or less intense freezing bouts. |
| Recommended | Recommended | Requires empirical testing and validation for each specific lab setup and research question. |

The Scientist's Toolkit: Essential Research Reagents & Materials

| Item | Function in Fear Conditioning Research |
|---|---|
| E-Prime 3 Software | A suite for designing, running, and analyzing behavioral experiments with millisecond precision timing for stimulus presentation and response collection [5]. |
| Tobii Pro Lab | Advanced software for eye tracking studies; allows for synchronization of eye movement data with auditory/visual stimuli to study attention and cognitive load [6]. |
| VideoFreeze System | A validated, automated system for scoring conditioned freezing in rodents, reducing bias and time associated with manual scoring [1]. |
| Great Horned Owl (Artificial) | Used in ecological validity studies as a realistic, innate fear stimulus to simulate an aerial predator, eliciting robust escape behavior [4]. |
| Mouse Imaging Program (MIP) | Provides access to in vivo imaging technologies (MRI, PET-CT) for studying neurobiological correlates of fear memory and extinction [7]. |

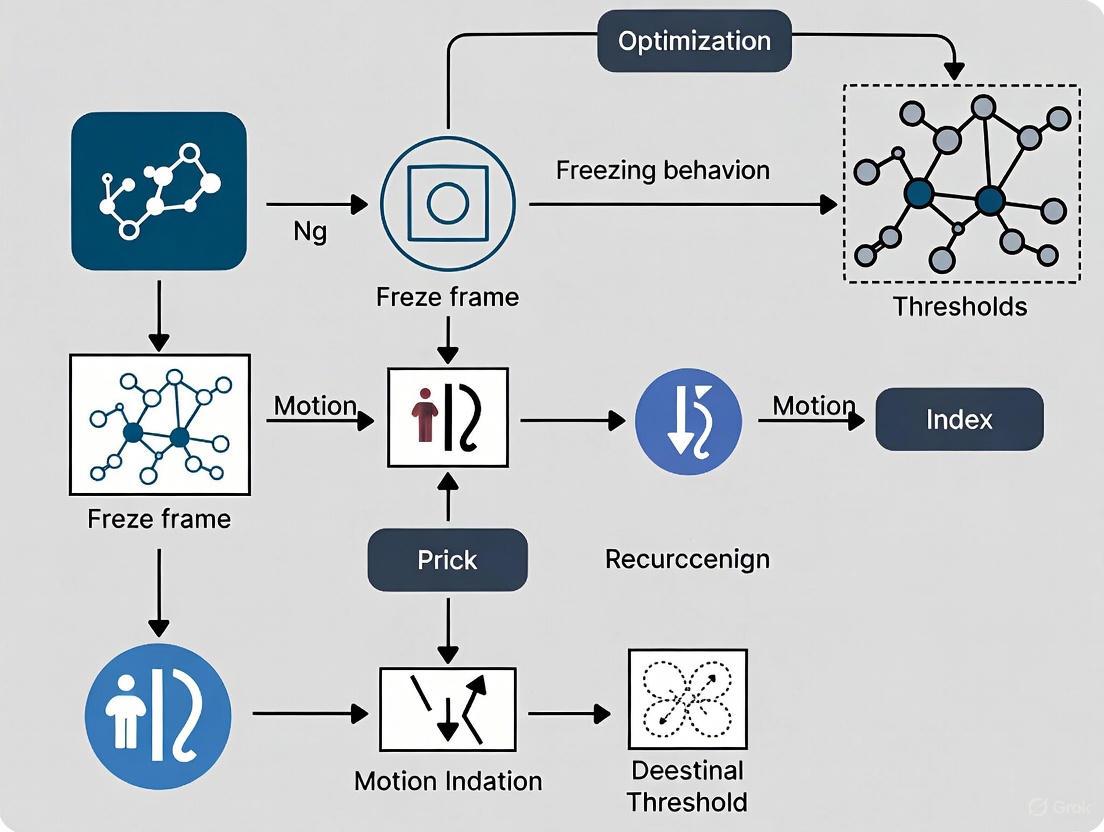

Experimental Workflow & Troubleshooting Diagrams

Core Concepts and Definitions

What is Freezing Behavior?

Freezing is a well-defined behavioral response in rodent fear conditioning studies, characterized by the "suppression of all movement except that required for respiration" [8] [9]. It represents a species-typical defensive behavior that serves as a key index of learned fear [10]. Operationally, it is defined as a period of immobility during which the animal ceases all bodily movement apart from that necessary for breathing [11].

How Do Automated Systems Detect Freezing?

Automated systems detect freezing by measuring the absence or reduction of movement. The underlying principle is that "since freezing is defined as absence of movement, the system should measure movement in some way" and equate near-zero movement with freezing [8]. Systems utilize various technological approaches:

- Video-based systems analyze frame-by-frame pixel changes to detect movement [8] [11]

- Photobeam-based systems measure latency between beam interruptions [9]

- Pose estimation-based systems track keypoints on the animal's body and calculate kinematic metrics [12]

Critical Parameters for Automated Freezing Detection

Motion Index Threshold

The motion threshold is an "arbitrary limit above which the subject is considered moving" [8]. This parameter determines the sensitivity of movement detection, where higher thresholds may miss subtle movements, and lower thresholds may classify minor movements as activity.

Minimum Freeze Duration

The minimum freeze duration defines the "duration of time that a subject's motion must be below the Motion Threshold for a Freeze Episode to be counted" [8]. This prevents brief pauses from being classified as freezing bouts.

Table 1: Key Parameters for Freezing Detection

| Parameter | Definition | Function | Common Values/Units |
|---|---|---|---|
| Motion Threshold | Arbitrary movement limit | Distinguishes movement from immobility | Varies by system (e.g., 18 AU in VideoFreeze) [8] |
| Minimum Freeze Duration | Time motion must be below threshold | Defines a freezing episode | Typically 1-2 seconds [8] |
| Freezing Episode | Continuous period below threshold | Discrete freezing event | Number per session [8] |
| Percent Freeze | Time immobile/total session time | Overall freezing measurement | Percentage [8] |
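The parameters in the table can be combined into a simple scoring routine. The sketch below is a generic illustration and not VideoFreeze's proprietary algorithm. It assumes that a per-frame motion index has already been computed. Frames below `motion_threshold` are treated as immobile, and a run of immobile frames counts as a Freeze Episode only if it lasts at least `min_freeze_frames` frames. Both parameter names are hypothetical.

```python
from typing import List, Sequence, Tuple


def freeze_episodes(
    motion: Sequence[float], motion_threshold: float, min_freeze_frames: int
) -> List[Tuple[int, int]]:
    """Return (start, end) frame indices (end exclusive) of freeze episodes.

    A frame is immobile when its motion index is below motion_threshold; a run
    of immobile frames is an episode only if it lasts >= min_freeze_frames.
    """
    episodes: List[Tuple[int, int]] = []
    start = None
    for i, value in enumerate(motion):
        if value < motion_threshold:
            if start is None:
                start = i  # a below-threshold run begins
        elif start is not None:
            if i - start >= min_freeze_frames:
                episodes.append((start, i))
            start = None  # movement ends the run
    # Close a run that lasts until the end of the session.
    if start is not None and len(motion) - start >= min_freeze_frames:
        episodes.append((start, len(motion)))
    return episodes


def percent_freeze(
    motion: Sequence[float], motion_threshold: float, min_freeze_frames: int
) -> float:
    """Percent of session frames that fall inside freeze episodes."""
    if not motion:
        return 0.0
    episodes = freeze_episodes(motion, motion_threshold, min_freeze_frames)
    frozen = sum(end - start for start, end in episodes)
    return 100.0 * frozen / len(motion)
```

For example, assuming video at 30 frames/s, a 30-frame minimum corresponds to 1 s, the lower end of the 1-2 s range above. Setting `motion_threshold=18` would mirror the VideoFreeze example value, but motion index units are not comparable across systems.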

Experimental Protocols for Validation

Protocol 1: Computer-Scoring versus Hand-Scoring Validation

Purpose: To ensure automated system accuracy compared to traditional manual scoring [8].

Procedure:

- Manual Scoring: Trained observers score freezing either by instantaneous time sampling every 5-10 seconds or by continuous duration recording with a stopwatch [8]

- Computer Scoring: Analyze the same videos with the automated system across multiple parameter combinations

- Statistical Comparison: Calculate correlation coefficients between manual and automated scores

- Parameter Optimization: Select parameters yielding highest correlation, slope closest to 1, and intercept closest to 0 [8]

Validation Metrics:

- Correlation coefficient (aim for >0.9)

- Slope of linear fit (target: ~1)

- Y-intercept (target: ~0) [8]
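The three validation metrics can be computed with ordinary least squares by regressing automated scores on manual scores. This is a minimal standard-library sketch. It assumes one percent-freezing value per session (or per scoring bin) from each method, and the function name is illustrative.

```python
from math import sqrt
from typing import Sequence, Tuple


def validation_metrics(
    manual: Sequence[float], automated: Sequence[float]
) -> Tuple[float, float, float]:
    """Return (pearson_r, slope, intercept) for automated = slope * manual + intercept."""
    n = len(manual)
    if n != len(automated) or n < 2:
        raise ValueError("need two equal-length score lists with at least 2 entries")
    mean_m = sum(manual) / n
    mean_a = sum(automated) / n
    sxx = sum((m - mean_m) ** 2 for m in manual)
    syy = sum((a - mean_a) ** 2 for a in automated)
    sxy = sum((m - mean_m) * (a - mean_a) for m, a in zip(manual, automated))
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary across sessions")
    r = sxy / sqrt(sxx * syy)
    slope = sxy / sxx  # least-squares slope with manual scores as the predictor
    intercept = mean_a - slope * mean_m
    return r, slope, intercept
```

For instance, `validation_metrics([0, 10, 20, 30, 40], [0, 20, 40, 60, 80])` gives r = 1.0 with a slope of 2.0 and an intercept of 0.0. The scores are perfectly correlated but on a different scale, which is why the slope and intercept targets matter alongside the correlation.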

Protocol 2: Extended Fear Conditioning with Overtraining

Purpose: To induce robust, long-lasting fear memory for studying fear incubation [10].

Procedure:

- Subject Preparation: Male adult Wistar rats housed under a 12-h light-dark cycle

- Apparatus Setup: Sound-attenuating chamber with grid floor and speaker; clean with 10% ethanol between subjects

- Training Session: Single session with 25 tone-shock pairings (overtraining)

- Testing: Context and cue tests at 48 hours (short-term) and 6 weeks (long-term) post-training [10]

Key Measures:

- Percent freezing during context test

- Percent freezing during cue test in altered context

- Comparison between short-term and long-term freezing

Research Reagent Solutions

Table 2: Essential Materials for Freezing Behavior Research

| Item | Function | Example/Notes |
|---|---|---|
| Sound-attenuating Cubicle | Isolates experimental chamber from external noise | Often lined with acoustic foam [8] |
| Near-Infrared Illumination System | Enables recording in darkness without affecting behavior | Minimizes video noise [8] |
| Low-noise Digital Video Camera | Captures high-quality video for analysis | Essential for reliable automated detection [8] |
| Conditioning Chamber with Grid Floor | Controlled environment for fear conditioning | Stainless steel rods for shock delivery [9] [10] |
| Aversive Stimulation System | Delivers precise footshocks | Shock generator/scrambler with calibrator [10] |
| Contextual Inserts | Alters chamber appearance for context discrimination | Changes geometry, odor, visual cues [8] |
| Open-Source Software | Automated behavior analysis | DeepLabCut, BehaviorDEPOT, Phobos, B-SOiD [13] [14] [12] |

Troubleshooting Common Experimental Issues

Problem: System Overestimates Freezing

Symptoms:

- High freezing scores during periods of observable movement

- Slope of the linear fit may or may not be near 1

- Relatively low correlation coefficient

- Y-intercept > 0 [8]

Solutions:

- Decrease Motion Threshold: The threshold may be too high, making the system insensitive to subtle movements [8]

- Increase Minimum Freeze Duration: The duration may be too short, so brief pauses are categorized as freezing [8]

- Check for Video Noise: Ensure proper illumination and camera calibration [8]

Problem: System Underestimates Freezing

Symptoms:

- Low freezing scores when animal appears immobile

- Slope may or may not be near 1

- Relatively low correlation coefficient

- Y-intercept < 0 [8]

Solutions:

- Increase Motion Threshold: The threshold may be too low, so slight movements or video noise are counted as activity [8]

- Decrease Minimum Freeze Duration: The duration may be too long, so legitimate freezing bouts are missed [8]

- Validate Against Manual Scoring: Ensure operational definition matches human observation [8]

Problem: Inconsistent Performance Across Different Experimental Setups

Solutions:

- Re-calibrate for Each Setup: Lighting, camera angle, and context changes require parameter adjustment [11]

- Use Self-Calibrating Software: Tools like Phobos use brief manual quantification to automatically adjust parameters [11]

- Verify Contrast and Resolution: Ensure adequate video quality (e.g., minimum 384 × 288 pixels) [11]

Experimental Workflow Visualization

Threshold Optimization Process
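The parameter-optimization step of Protocol 1 selects the parameters that yield the highest correlation, a slope closest to 1, and an intercept closest to 0. It can be sketched as a grid search. This illustration rests on several assumptions: the per-frame motion index input, the candidate grids, and, above all, the combined cost that merges the three criteria into one number are hypothetical choices, not a published procedure.

```python
from itertools import product
from math import sqrt
from typing import Dict, List, Sequence


def percent_freeze(motion: Sequence[float], threshold: float, min_frames: int) -> float:
    """Percent of frames inside below-threshold runs lasting >= min_frames."""
    frozen, run = 0, 0
    for value in list(motion) + [float("inf")]:  # sentinel closes the final run
        if value < threshold:
            run += 1
        else:
            if run >= min_frames:
                frozen += run
            run = 0
    return 100.0 * frozen / len(motion)


def fit(manual: Sequence[float], auto: Sequence[float]):
    """Pearson r, slope and intercept of auto regressed on manual."""
    n = len(manual)
    mx, my = sum(manual) / n, sum(auto) / n
    sxx = sum((x - mx) ** 2 for x in manual)
    syy = sum((y - my) ** 2 for y in auto)
    sxy = sum((x - mx) * (y - my) for x, y in zip(manual, auto))
    slope = sxy / sxx  # manual scores must vary across sessions
    r = sxy / sqrt(sxx * syy) if syy > 0 else 0.0  # constant output: treat as r = 0
    return r, slope, my - slope * mx


def grid_search(
    sessions: List[Sequence[float]],
    manual: Sequence[float],
    thresholds: Sequence[float],
    min_frames_options: Sequence[int],
) -> Dict[str, float]:
    """Return the (threshold, min_frames) pair with the lowest combined cost."""
    best: Dict[str, float] = {}
    for thr, mf in product(thresholds, min_frames_options):
        auto = [percent_freeze(s, thr, mf) for s in sessions]
        r, slope, intercept = fit(manual, auto)
        # Hypothetical cost: 0 for a perfect fit (r = 1, slope = 1, intercept = 0).
        cost = (1 - r) + abs(1 - slope) + abs(intercept) / 100.0
        if not best or cost < best["cost"]:
            best = {"threshold": thr, "min_frames": mf, "r": r,
                    "slope": slope, "intercept": intercept, "cost": cost}
    return best
```

When two settings tie, the search keeps the first candidate encountered. In practice, it is worth inspecting all near-optimal settings and confirming the choice on additional manually scored videos.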

Frequently Asked Questions

What are the key metrics for validating automated freezing detection?

The three key validation metrics are: (1) Correlation coefficient, which should be near 1, indicating strong agreement between automated and manual scores; (2) Slope, which should be close to 1, showing proportional agreement; and (3) Y-intercept, which should be near 0, indicating no systematic over- or under-estimation [8]. In the validation reported in [8], a motion threshold of 18 with a minimum freeze duration of 30 frames gave the best balance of these metrics; other setups may require different values.

How much manual scoring is needed to validate an automated system?

Studies suggest that a single 2-minute manual quantification can be sufficient for software calibration when using self-calibrating tools like Phobos [11]. However, for initial system validation, more extensive manual scoring (multiple raters, multiple videos) is recommended to establish reliability across different conditions and observers.

Can automated systems detect freezing in animals with head-mounted hardware?

Yes, newer pose estimation-based systems like BehaviorDEPOT can successfully detect freezing in animals wearing tethered head-mounts for optogenetics or imaging, overcoming a major limitation of commercial systems [12]. The keypoint tracking approach focuses on body part movements rather than overall centroid movement, making it more robust to experimental hardware.

What video specifications are required for reliable automated detection?

Minimum recommended specifications include native resolution of 384 × 288 pixels and frame rate of 5 frames/second [11]. Higher resolutions and frame rates generally improve accuracy. Consistent lighting, high contrast between animal and background, and minimal video noise are also critical factors.

How do I choose between different open-source tools?

Selection depends on your specific needs:

- For simplicity and predefined assays: BehaviorDEPOT offers hard-coded heuristics [12]

- For custom pose estimation: DeepLabCut provides flexible keypoint tracking [13] [14]

- For self-calibration: Phobos automatically adjusts parameters [11]

- For machine learning approaches: B-SOiD, MARS, or SimBA offer advanced classification [13] [14]

In rodent fear conditioning research, Pavlovian conditioned freezing has become a prominent model for studying learning, memory, and pathological fear. This paradigm has gained widespread adoption in large-scale genetic and pharmacological screens due to its efficiency, reproducibility, and well-defined neurobiology [1] [15]. However, a significant bottleneck in this research has traditionally been the measurement of freezing behavior itself.

For decades, researchers have relied on manual scoring by human observers, who measure freezing as the percent time an animal suppresses all movement except for respiration during a test period. While this method has proven reliable, it introduces substantial limitations that can compromise experimental integrity [1] [15]. The tedious nature of manual scoring makes it time-consuming for experimenters, while the subjective judgment involved can lead to unwanted variability and potential bias [1]. As the field moves toward larger-scale screens and requires more precise phenotypic characterization, these limitations have become increasingly problematic.

This technical guide addresses these challenges by providing troubleshooting guidance and validated methodologies for implementing automated freezing detection systems. By optimizing motion index thresholds and understanding the limitations of different scoring approaches, researchers can significantly enhance the reliability, efficiency, and objectivity of their behavioral assessments.

Troubleshooting Guides & FAQs

Frequently Asked Questions

Q: What are the primary limitations of manual freezing scoring that automated systems address?

A: Manual scoring suffers from three critical limitations: (1) Time consumption - it is tedious and labor-intensive, especially for large-scale studies; (2) Inter-rater variability - subjective judgment introduces inconsistency; and (3) Potential bias - experimenter expectations may unconsciously influence scoring [1]. Automated systems address these by providing objective, consistent, and high-throughput measurement.

Q: How do I validate that my automated system accurately detects freezing behavior?

A: Comprehensive validation requires demonstrating a very high correlation and excellent linear fit (with an intercept near 0 and slope near 1) between human and automated freezing scores [1]. The system must accurately score both very low freezing (detecting small movements) and very high freezing (detecting no movement). Correlation alone is insufficient, as high correlation can be achieved with scores on a completely different scale [1].

Q: Why does my photobeam-based system consistently overestimate freezing compared to manual scoring?

A: Photobeam systems with detectors placed 13 mm or more apart often lack the spatial resolution needed to detect very small movements (such as minor grooming or head sway) that human scorers would not classify as freezing [1]. As a result, some validations have reported intercepts of ~20% or automated scores roughly double the human scores [1].

Q: What are the advantages of video-based systems over other automated methods?

A: Video-based systems like VideoFreeze use digital video and near-infrared lighting to achieve outstanding performance in scoring both freezing and movement [1] [15]. They provide superior spatial resolution compared to photobeam systems and can distinguish between different behavioral states more effectively.

Q: Can automated systems detect defensive behaviors other than freezing?

A: Yes, advanced systems can identify behaviors like darting, flight, and jumping using measures such as the Peak Activity Ratio (PAR), which reflects the largest amplitude movement during a period of interest [16]. This is crucial as these active defenses may replace freezing under certain conditions and represent different emotional states.

Troubleshooting Common Technical Issues

Problem: Poor correlation between automated and manual scores across the entire freezing range

- Potential Cause: Suboptimal motion index threshold

- Solution: Systematically test multiple threshold values against manually scored reference videos. Ensure validation includes animals with very low (<10%) and very high (>80%) freezing levels [1].

- Verification: The optimal threshold should produce mean computer and human values for group data that are nearly identical [1].

Problem: Inconsistent scoring of brief freezing episodes

- Potential Cause: Inadequate temporal resolution in movement analysis

- Solution: For video-based systems, ensure frame rate is sufficient (typically ≥30 fps). For inertial measurement units (IMUs), sampling rates of 300 Hz provide excellent temporal resolution [17].

- Verification: Compare automated detection of short freezing bouts (2-3 seconds) with manual scoring.

Problem: System fails to detect certain freezing phenotypes

- Potential Cause: Over-reliance on a single detection modality

- Solution: Consider multi-modal approaches. IMU-based systems that measure head kinematics can automatically quantify behavioral freezing with superior precision and temporal resolution [17].

- Verification: Validate system performance across different rodent strains and experimental conditions.
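IMU-based freezing detection from head kinematics can be sketched as thresholding angular speed. This minimal sketch assumes a 3-axis gyroscope stream in deg/s sampled at 300 Hz, the rate cited above. The 5 deg/s speed threshold and 1 s minimum bout are hypothetical placeholders to calibrate against manual scoring. They are not values from the cited IMU work.

```python
from math import sqrt
from typing import List, Sequence, Tuple


def imu_freezing_mask(
    gyro: Sequence[Tuple[float, float, float]],
    fs_hz: float = 300.0,          # sampling rate cited for the IMU above
    speed_threshold: float = 5.0,  # hypothetical angular-speed cutoff, deg/s
    min_bout_s: float = 1.0,       # hypothetical minimum freezing bout, s
) -> List[bool]:
    """Mark samples inside sustained low-angular-speed bouts as freezing."""
    speed = [sqrt(x * x + y * y + z * z) for x, y, z in gyro]  # |omega| per sample
    min_samples = max(1, int(round(min_bout_s * fs_hz)))
    mask = [False] * len(speed)
    start = None
    for i, s in enumerate(speed + [float("inf")]):  # sentinel closes the last bout
        if s < speed_threshold:
            if start is None:
                start = i
        else:
            if start is not None and i - start >= min_samples:
                mask[start:i] = [True] * (i - start)
            start = None
    return mask
```

Percent freezing then follows as `100 * sum(mask) / len(mask)`. This sketch omits filtering of sensor noise and drift, which real head-mounted recordings would likely need before thresholding.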

Problem: Different results between commercial automated systems

- Potential Cause: Proprietary algorithms with different validation standards

- Solution: Request detailed validation data from manufacturers showing linear fit between human and automated scores. Prefer systems that demonstrate intercepts near 0 and slopes near 1 [1].

- Verification: Conduct pilot studies comparing new systems with your established manual or automated scoring methods.

Quantitative Comparison of Scoring Methodologies

Table 1: Comparison of Freezing Detection Methodologies

| Method Type | Key Advantages | Key Limitations | Optimal Use Cases |
|---|---|---|---|
| Manual Scoring | High face validity, adaptable to behavioral nuances | Time-consuming, subjective, prone to bias and variability [1] | Small-scale studies, method development |
| Video-Based Systems (VideoFreeze) | Excellent spatial resolution, well-validated, "turn-key" operation [1] [15] | Proprietary algorithms, may require validation for novel setups | Large-scale screens, standard fear conditioning |
| Photobeam-Based Systems | Lower cost, established technology | Poor spatial resolution, often overestimates freezing [1] | Budget-conscious labs with consistent behavioral profiles |
| Inertial Measurement Units (IMUs) | High precision kinematics, wireless capability, superior temporal resolution [17] | Requires animal attachment, more complex setup | High-precision studies, head movement analysis |

Table 2: Validation Metrics for Automated Freezing Detection Systems

| Validation Parameter | Target Performance | Importance |
|---|---|---|
| Correlation with Human Scoring | r > 0.9 | Essential but not sufficient alone [1] |
| Linear Fit (Slope) | As close to 1 as possible | Ensures proportional accuracy across freezing range [1] |
| Linear Fit (Intercept) | As close to 0 as possible | Prevents systematic over/underestimation [1] |
| Low Freezing Detection | Accurate for <10% freezing | Tests sensitivity to small movements [1] |
| High Freezing Detection | Accurate for >80% freezing | Tests specificity in absence of movement [1] |

Experimental Protocols & Methodologies

Protocol: Validation of Automated Freezing Scoring Systems

Purpose: To systematically validate any automated freezing detection system against manual scoring by trained observers.

Materials:

- Automated freezing detection system (video-based, photobeam, or IMU)

- Video recording system synchronized with automated scoring

- Appropriate animal subjects (typically 16-24 rodents representing diverse freezing levels)

- Statistical analysis software

Procedure:

- Record behavioral sessions that represent the full range of expected freezing values (0-100%).

- Have at least two trained observers manually score all videos using standardized criteria, resolving discrepancies through consensus.

- Run automated scoring on the same sessions.

- Calculate correlation coefficients between automated and manual scores.

- Perform linear regression analysis with manual scores as independent variable and automated scores as dependent variable.

- Verify system performance at extremes by separately analyzing subsets with <10% and >80% manual freezing scores.

Validation Criteria: A well-validated system should show correlation >0.9, linear regression slope approaching 1, and intercept approaching 0 across the entire freezing range [1].
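The extreme-range check in the procedure above can be sketched as a mean signed error computed separately for sessions with <10% and >80% manual freezing. In this minimal sketch, the function names and the choice of mean signed error (automated minus manual) as the summary are assumptions. The manual values are taken to be the consensus scores. A positive value in the low subset suggests that small movements are being missed.

```python
from typing import Dict, Iterable, Optional, Sequence, Tuple


def _mean_signed_error(pairs: Iterable[Tuple[float, float]]) -> Optional[float]:
    """Mean of (automated - manual); None if there are no pairs."""
    diffs = [auto - man for man, auto in pairs]
    return sum(diffs) / len(diffs) if diffs else None


def extreme_range_bias(
    manual: Sequence[float],
    automated: Sequence[float],
    low: float = 10.0,
    high: float = 80.0,
) -> Dict[str, Optional[float]]:
    """Signed bias overall and in the low- and high-freezing subsets.

    Positive values mean the automated system over-scores freezing;
    negative values mean it under-scores.
    """
    if len(manual) != len(automated):
        raise ValueError("score lists must have equal length")
    pairs = list(zip(manual, automated))
    return {
        "all": _mean_signed_error(pairs),
        "low": _mean_signed_error(p for p in pairs if p[0] < low),
        "high": _mean_signed_error(p for p in pairs if p[0] > high),
    }
```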

Protocol: Extended Fear Conditioning with Overtraining

Purpose: To induce robust fear conditioning that may elicit diverse defensive behaviors beyond freezing.

Materials:

- Standard fear conditioning apparatus with grid floor for footshock delivery

- Sound-attenuating chamber

- Video recording system with appropriate lighting (near-infrared for dark phase)

- Automated behavior scoring system

Procedure:

- Subject Preparation: House rats (e.g., male adult Wistar) under standard conditions. Maintain at 85% of free-feeding weight if combining with appetitive tasks.

- Apparatus Setup: Clean all internal surfaces with 10% ethanol between subjects. Calibrate shock intensity using a shock-intensity calibrator connected to grid bars [10].

- Training Phase: Expose subjects to a single session with 25 tone-shock pairings (overtraining) [10]. Each pairing consists of a tone (e.g., 30 s, 80 dB) coterminating with a footshock (e.g., 1 s, 0.7 mA).

- Testing Phase: Conduct context tests (exposure to original training context) and cue tests (exposure to modified context with tone presentation) at both short-term (48h) and long-term (6 weeks) intervals to examine fear incubation [10].

- Behavior Scoring: Analyze sessions using automated systems capable of detecting both freezing and active defenses (flight, darting).
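The training phase can be expressed as a stimulus schedule. This minimal sketch uses the example values above: 25 pairings, each a 30 s tone coterminating with a 1 s shock. The protocol does not specify a baseline or an inter-trial interval, so the 180 s baseline and the 60 s gap from tone offset to the next tone onset are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pairing:
    tone_on: float   # seconds from session start
    tone_off: float
    shock_on: float
    shock_off: float


def build_schedule(
    n_pairings: int = 25,
    baseline_s: float = 180.0,  # hypothetical pre-CS baseline
    tone_s: float = 30.0,
    shock_s: float = 1.0,
    iti_s: float = 60.0,        # hypothetical gap from tone offset to next tone onset
) -> List[Pairing]:
    """Tone-shock pairings in which the shock coterminates with the tone."""
    if shock_s > tone_s:
        raise ValueError("shock must fit inside the tone")
    schedule = []
    onset = baseline_s
    for _ in range(n_pairings):
        offset = onset + tone_s
        schedule.append(Pairing(onset, offset, offset - shock_s, offset))
        onset = offset + iti_s
    return schedule
```

With these placeholder values, the last shock ends at 2,370 s (39.5 min) after session start.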

Signaling Pathways & Experimental Workflows

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Automated Freezing Detection Research

| Item | Function/Application | Key Considerations |
|---|---|---|
| VideoFreeze System | Automated video-based freezing analysis | Provides validated "turn-key" solution; uses digital video and near-infrared lighting [1] [15] |
| Wireless IMU (Inertial Measurement Unit) | High-precision head kinematics measurement | Samples at 300 Hz for superior temporal resolution; enables real-time movement tracking [17] |
| Fear Conditioning Chamber | Standardized environment for Pavlovian conditioning | Should include grid floor for footshock, speaker for auditory CS, and appropriate contextual cues |
| Shock Intensity Calibrator | Precise calibration of aversive stimulus | Ensures consistent US intensity across experiments and apparatuses [10] |
| Near-Infrared Lighting System | Enables video recording in dark phases | Essential for circadian studies; largely invisible to rodents, so recording does not disturb behavior |
| Photobeam Activity System | Alternative motion detection method | Lower spatial resolution than video; may overestimate freezing [1] |

Why Automation? The Drive for Efficiency and Reproducibility in High-Throughput Screens

Frequently Asked Questions (FAQs)

1. How does automation specifically improve data reproducibility in high-throughput screens? Automation significantly enhances reproducibility by standardizing every step of the experimental process. Robotic systems perform tasks like sample preparation, liquid handling, and plate management with minimal human intervention, which reduces manual errors and variations [18]. This creates standardized, documented workflows that make replication and experimental validation easier [18]. For instance, automated cell and colony counting via digital image analysis provides more accurate and reproducible counts than manual methods [19].

2. What are the primary technical challenges when implementing an automated HTS workflow? The primary challenges include managing the massive data volumes generated, which can reach terabytes or petabytes, creating pressure on storage and computing resources [18]. Integration between different systems, such as robotic workstations, detectors, and data analysis software, can be complex [20]. Furthermore, maintaining quality control across thousands of samples and ensuring proper instrument calibration are critical to avoid artifacts like the "edge effect" in microplates [21].

3. In the context of rodent freezing detection, how can automation aid in behavioral annotation? Automated detection modules can analyze pose estimation data by calculating velocity between frame-to-frame movements [22]. Freezing behavior is then identified as periods where movement falls below a defined velocity threshold sustained over a specific time [22]. This automated analysis assists researchers by identifying periods of inactivity in animals in a high-throughput manner, which is crucial for consistent behavioral scoring.

4. How can research teams balance the high start-up costs of automation? Teams can balance costs through a staged implementation that first targets critical workflow bottlenecks [18]. Using cloud computing for flexible data analysis resources, leveraging open-source software tools, and sharing equipment costs through collaborations are effective strategies [18]. Despite the high initial investment, the cost can be recouped relatively quickly through increased productivity, lower per-sample costs, and reduced labor demands [18] [19].

5. What role does data management play in a successful HTS operation? Effective data management is crucial as HTS generates volumes of data that are impossible for humans to process alone [20]. A FAIR (Findable, Accessible, Interoperable, Reusable) data environment is recommended [20]. This often involves combining Electronic Lab Notebook (ELN) and Laboratory Information Management System (LIMS) environments to manage the complete workflow, from sample request and experimentation to analysis and reporting [20]. Proper data management ensures that the high volumes of data can be effectively mined for insights.

Troubleshooting Guide

This guide addresses common issues in automated high-throughput screening environments.

| Problem | Possible Causes | Recommended Solutions |
|---|---|---|
| High Data Variability (Poor Precision) | • Pipetting errors • Edge effect (evaporation from outer wells) • Instrument calibration drift | • Implement automated liquid handling to minimize human error [19] • Use plate-based QC controls to identify and correct for edge effects [21] • Adhere to a strict instrument calibration and maintenance schedule |
| Excessive Carryover Between Samples | • Inadequate needle wash • Incompatible wash solvent • Worn or contaminated sampling needle | • Check and unclog needle wash ports; ensure sufficient wash volume and time [23] • Optimize wash solvent composition for your specific analytes [23] • Regularly inspect and replace sampling needles as per manufacturer guidelines |
| Inconsistent Freezing Detection in Behavioral Assays | • Incorrect motion index threshold • Poor lighting or video quality • Variations in animal baseline activity | • Validate and calibrate velocity/duration thresholds for your specific setup and rodent strain [22] [10] • Ensure consistent, shadow-free illumination and camera positioning • Establish baseline movement levels for each subject and normalize data accordingly |
| Failed System Integration & Data Flow | • Incompatible software platforms • Lack of a centralized data system • Manual data transfer steps | • Invest in a platform that integrates ELN, LIMS, and analysis modules [20] • Use a centralized data management system to integrate all instruments and data streams [24] • Automate data transfer to eliminate manual entry errors and bottlenecks |
| Low Hit Confirmation Rate | • High false-positive/false-negative rates in primary screen • Single-concentration screening artifacts | • Adopt quantitative HTS (qHTS), testing each compound at multiple concentrations to generate reliable concentration-response curves [25] • Implement robust QC measures, including both plate-based and sample-based controls [21] |

The Scientist's Toolkit: Essential Research Reagents & Materials

| Item | Function in HTS |
|---|---|
| Microplates (96- to 3,456-well) | The standard platform for HTS, allowing for miniaturization of assays and parallel testing of thousands of samples [21]. |
| Liquid Handlers / Automated Pipettors | Robotic systems that accurately dispense reagents and samples in the microliter to nanoliter range, enabling speed and precision [21] [19]. |
| Plate Readers (Detectors) | Instruments that read assay outputs (e.g., fluorescence, luminescence, absorption) from microplates, providing the raw data for analysis [21]. |
| Automated Colony Counters | Systems that use digital imaging and edge detection to automatically count cell or microbial colonies, increasing throughput and accuracy over manual counts [19]. |
| Needle Wash Solvent | A crucial rinsing liquid used in autosamplers to clean the sampling needle and reduce carryover from the previous sample [23]. |
| Carrier Solvent | The liquid used to aspirate the sample into the sampling system; it must be compatible with both the sample and the mobile phase to avoid precipitation [23]. |

Experimental Workflow for an Automated HTS Campaign

The following diagram illustrates the key stages of a generalized, automated high-throughput screening workflow, from initial sample management to final data analysis and hit identification.

Core Components of an Automated Freezing Detection System

Automated freezing detection systems are essential tools in neuroscience and pharmacology for studying learned fear, memory, and the efficacy of new therapeutic compounds in rodent models. These systems provide objective, high-throughput alternatives to tedious and subjective manual scoring, enhancing the reproducibility and rigor of behavioral experiments [15] [12]. The core principle involves detecting the characteristic absence of movement, except for respiration, that defines freezing behavior—a prominent species-specific defense reaction [15].

Advancements in technology have shifted methodologies from basic photobeam interruption systems to more sophisticated video-based tracking and inertial measurement units (IMUs). Modern systems leverage machine learning for markerless pose estimation, allowing researchers to track specific body parts with high precision and define behaviors based on detailed kinematic and postural statistics [12]. This article details the core components of these systems, provides troubleshooting guidance, and discusses the critical process of optimizing motion index thresholds for research.

Core System Components & Workflow

An automated freezing detection system integrates several hardware and software components to capture and analyze animal behavior. The typical workflow moves from data acquisition to behavioral classification and data output.

System Workflow

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 1: Key materials and equipment for automated freezing detection experiments.

| Item | Function & Application in Research |
|---|---|
| Fear Conditioning Chamber | An enclosed arena where rodents are exposed to neutral conditioned stimuli (CS - e.g., tone, context) paired with an aversive unconditioned stimulus (US - e.g., mild foot shock) to elicit learned freezing behavior [15]. |
| Video Camera | Records rodent behavior for subsequent analysis. High-frame-rate cameras are used with video-based systems like FreezeScan [26] or BehaviorDEPOT [12] to capture subtle movements. |
| Inertial Measurement Units (IMUs) | Wearable sensors containing accelerometers and gyroscopes that capture motion data. Used in some paradigms, particularly for studying freezing of gait in Parkinson's disease models [27] [28] [29]. |
| Stimulus Control System | Hardware (e.g., Arduino) and software to precisely administer and time-lock stimuli (shock, tone, light) with behavioral recording, ensuring consistent experimental protocols [12]. |
| Pose Estimation Software | Machine learning-based tools (e.g., DeepLabCut, SLEAP) that identify and track specific animal body parts ("keypoints") frame-by-frame in video recordings, providing the raw data for movement analysis [12]. |
| Behavior Analysis Software | Programs (e.g., BehaviorDEPOT, FreezeScan, Video Freeze) that calculate movement metrics from tracking data and apply heuristics or classifiers to detect freezing bouts [26] [12]. |

Troubleshooting Guides & FAQs

FAQ 1: Why is my system failing to detect freezing bouts accurately?

Potential Causes and Solutions:

Incorrect Threshold Setting: A frequent cause. The velocity threshold for classifying a frame as "freezing" is set too high or too low.

- Solution: Use the Optimization Module in software like BehaviorDEPOT. Manually score a short video segment, then adjust the threshold until the automated detection matches the manual scoring. Re-validate with a new video segment [12].

Poor Keypoint Tracking Accuracy: The pose estimation model is not accurately tracking the animal's body parts, leading to erroneous velocity calculations.

- Solution: Retrain the pose estimation model (e.g., DeepLabCut) with more labeled frames from your specific experimental setup, ensuring coverage of various animal orientations and lighting conditions [12].

Environmental Interference: Changes in lighting, shadows, or reflections can confuse the tracking algorithm.

- Solution: Use consistent, diffuse lighting. Employ infrared lighting and cameras for experiments in dark conditions. Ensure the background is stable and non-reflective [26].

Hardware Interference: Tethered head-mounts for optogenetics or fiber photometry can be misidentified as animal movement.

- Solution: Use software like BehaviorDEPOT, which is explicitly validated to maintain high accuracy (>90%) with animals wearing head-mounted hardware [12].

FAQ 2: How do I optimize and validate the motion index threshold?

Optimizing the motion index threshold is a core requirement for generating reliable and reproducible data.

Detailed Validation Protocol:

Generate Ground Truth Data: Manually score a subset of your experimental videos (e.g., 5-10 minutes from different experimental groups). This should be done by a trained observer, with inter-rater reliability checks if multiple people are involved [15] [12].

Software Analysis: Run the same video subset through your automated detection system (e.g., BehaviorDEPOT, Video Freeze) [12].

Statistical Comparison: Compare the automated scores against your manual ground truth. A well-validated system requires more than a high correlation; it should also show a linear fit with a slope near 1 and an intercept near 0, meaning the automated scores match human scores in both value and scale across a wide range of freezing values [15].

Performance Metrics Calculation: Calculate standard diagnostic metrics to quantify performance [28]. The following table defines these key metrics.

Table 2: Key performance metrics for validating freezing detection algorithms.

| Metric | Definition | Interpretation in Validation |
|---|---|---|
| Accuracy | (True Positives + True Negatives) / Total Frames | The overall proportion of correct detections. Aim for >90% [12]. |
| Sensitivity | True Positives / (True Positives + False Negatives) | The system's ability to correctly identify true freezing bouts. Also known as recall. |
| Specificity | True Negatives / (True Negatives + False Positives) | The system's ability to correctly identify non-freezing movement. |
| Positive Predictive Value (PPV) | True Positives / (True Positives + False Positives) | The probability that a detected freeze is a true freeze. Also known as precision. |
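
The definitions in Table 2 translate directly into code. The sketch below assumes that manual and automated scores have already been aligned frame by frame as boolean arrays (True = freezing); the function name `frame_metrics` is hypothetical.

```python
import numpy as np


def frame_metrics(manual, automated):
    """Compare frame-by-frame freezing labels (True = freezing).

    manual: ground-truth labels from a trained observer.
    automated: labels produced by the detection system for the same frames.
    """
    manual = np.asarray(manual, dtype=bool)
    automated = np.asarray(automated, dtype=bool)
    if manual.shape != automated.shape:
        raise ValueError("label arrays must cover the same frames")

    tp = np.sum(manual & automated)    # freezing scored by both
    tn = np.sum(~manual & ~automated)  # movement scored by both
    fp = np.sum(~manual & automated)   # system freezes, observer does not
    fn = np.sum(manual & ~automated)   # observer freezes, system does not

    def ratio(num, den):
        # Return NaN instead of dividing by zero (e.g., no freezing in the segment).
        return float(num) / float(den) if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, manual.size),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
    }
```

For example, if an observer marks frames 0-3 of a 10-frame segment as freezing and the system marks frames 0-2 and 4, accuracy is 0.8, sensitivity and PPV are 0.75, and specificity is 5/6.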

The validation process and the relationship between manual and automated scoring can be visualized as a logical pathway to a reliable threshold.

Threshold Validation Logic

FAQ 3: What are the limitations of the Freeze Index (FI) and how can they be mitigated?

The Freeze Index (FI), defined as the ratio of power in the "freeze" band (3-8 Hz) to the "locomotion" band (0.5-3 Hz) in accelerometer data, is a common feature for detecting freezing of gait (FoG) in Parkinson's research [27] [29].
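
As an illustration of the band-power ratio, the sketch below computes the FI for a single window of one accelerometer axis using NumPy's FFT. The mean removal, Hann window, and half-open band edges are assumptions; published implementations differ in exactly these hyperparameters, so this is one possible choice and not the standardized algorithm of [27]. The function name is hypothetical.

```python
import numpy as np


def freeze_index(acc, fs, freeze_band=(3.0, 8.0), loco_band=(0.5, 3.0)):
    """Freeze Index for one window of accelerometer data.

    acc: 1-D acceleration signal (e.g., the vertical axis) for a single window.
    fs: sampling frequency in Hz.
    Windowing and detrending choices here are illustrative assumptions.
    """
    acc = np.asarray(acc, dtype=float)
    acc = acc - acc.mean()                       # remove the DC (gravity) offset
    spectrum = np.fft.rfft(acc * np.hanning(acc.size))
    power = np.abs(spectrum) ** 2
    freqs = np.fft.rfftfreq(acc.size, d=1.0 / fs)

    def band_power(lo, hi):
        # Half-open band [lo, hi) so 3 Hz is counted only in the freeze band.
        mask = (freqs >= lo) & (freqs < hi)
        return power[mask].sum()

    loco = band_power(*loco_band)
    if loco == 0:
        return float("inf")
    return float(band_power(*freeze_band) / loco)
```

A 1.5 Hz oscillation (gait-like) yields a small FI, while a 5 Hz oscillation (trembling-like) yields a large one; a detector would then compare the FI of each sliding window against a threshold.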

- Limitation 1: Inability to Distinguish Voluntary Stops. The FI increases during both involuntary freezing (trembling or akinesia) and voluntary stops, as both involve a reduction in power in the locomotion band. This leads to false positives [29].

- Mitigation Strategy: Combine motion data with heart rate monitoring. Studies show that heart rate changes during a FoG event are statistically different from those during a voluntary stop, providing a complementary signal of the patient's intention to move [29].

- Limitation 2: Lack of Standardization. The FI has been implemented with a broad range of hyperparameters (e.g., sampling frequency, time window, normalization), leading to inconsistent results across studies and hindering regulatory acceptance [27].

- Mitigation Strategy: Adopt a standardized, rigorously defined FI estimation algorithm. Recent research provides open-source code to formalize the FI's calculation, promoting reproducibility and reliability for clinical and regulatory evaluations [27].

Implementing Automated Detection: From System Setup to Threshold Calibration

Frequently Asked Questions (FAQs)

Q1: What are Motion Threshold and Minimum Freeze Duration in rodent freezing detection?

These are the two primary parameters in automated fear conditioning systems (like VideoFreeze) that work together to define and detect freezing behavior.

- Motion Threshold: A threshold, expressed in arbitrary units, that determines when the subject is considered "moving." The system calculates a "motion index" from pixel changes between video frames. If the motion index is above this threshold, the animal is classified as active; if it falls below the threshold, a potential freezing episode begins [8].

- Minimum Freeze Duration: This is the minimum length of time (e.g., in seconds or video frames) that the subject's motion must continuously remain below the Motion Threshold for the episode to be officially counted and recorded as a "freeze" [8].
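
The exact motion-index computation used by VideoFreeze is not described here, so the sketch below substitutes a generic frame-differencing stand-in: it counts pixels whose grayscale intensity changes by more than a noise level between consecutive frames, then applies a motion threshold and a minimum freeze duration in frames. The function names and the `noise_level` parameter are hypothetical, and because this index is on a different scale, published VideoFreeze threshold values would not transfer to it.

```python
import numpy as np


def motion_index(frames, noise_level=10):
    """Count changed pixels between consecutive grayscale frames.

    frames: array of shape (n_frames, height, width), dtype uint8.
    noise_level: intensity change (0-255) ignored as camera noise (hypothetical value).
    Returns an array of length n_frames - 1.
    """
    frames = np.asarray(frames, dtype=np.int16)  # avoid uint8 wrap-around on subtraction
    diff = np.abs(np.diff(frames, axis=0))
    return (diff > noise_level).reshape(diff.shape[0], -1).sum(axis=1)


def percent_freezing(mi, motion_threshold, min_freeze_frames):
    """Percent of frames inside freezes: runs where the motion index stays
    below motion_threshold for at least min_freeze_frames consecutive frames."""
    below = np.asarray(mi) < motion_threshold
    frozen = 0
    run = 0
    for flag in below:
        if flag:
            run += 1
        else:
            if run >= min_freeze_frames:
                frozen += run
            run = 0
    if run >= min_freeze_frames:  # close a run that lasts to the end of the session
        frozen += run
    return 100.0 * frozen / below.size if below.size else 0.0
```

With a 30 fps camera, `min_freeze_frames=30` corresponds to the 1-second minimum duration; a 40-frame still period followed by movement would then count as freezing, but not if the minimum were raised to 50 frames.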

The following workflow illustrates how these two parameters work in tandem to classify behavior.

Q2: What are the typical values for these parameters in mice and rat studies?

The optimal parameters are species-dependent and must be validated for your specific setup. The table below summarizes values reported in the literature for the VideoFreeze system.

Table 1: Reported Parameter Values for VideoFreeze Software

| Species | Motion Threshold | Minimum Freeze Duration | Key Reference / Context |
|---|---|---|---|
| Mice | 18 (arbitrary units) | 30 frames (1 second) | Anagnostaras et al. (2010) - Systematically validated settings [15] [30] |
| Rats | 50 (arbitrary units) | 30 frames (1 second) | Zelikowsky et al. (2012) - Used in contextual discrimination research [31] [30] |

Q3: How do I validate the parameters for my own experimental setup?

Relying on default parameters is not recommended. Proper validation is a trial-and-error process to ensure the automated scores align with human observation [31] [30]. The standard method involves:

- Record Experimental Sessions: Capture video during your fear conditioning tests.

- Manual Scoring by Human Observers: Have one or more trained researchers, blind to the software scores, manually score the videos for freezing. The gold standard is to define freezing as the "absence of movement of the body and whiskers with the exception of respiratory motion" [15]. Scoring can be done using instantaneous time sampling (e.g., every 8 seconds) or continuous measurement with a stopwatch [8] [15].

- Automated Scoring: Score the same videos using your automated system (e.g., VideoFreeze) with a range of different parameter settings.

- Statistical Comparison: Compare the manual and automated scores. A well-validated system should show [8] [15]:

- A high linear correlation coefficient (near 1).

- A slope of the linear fit near 1.

- A y-intercept near 0.

- A high Cohen's kappa statistic for agreement.
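
These criteria can be computed with NumPy alone. The sketch assumes per-session percent-freezing scores for the linear fit and paired binary samples (for example, the instantaneous observations described above) for Cohen's kappa; the function names are hypothetical.

```python
import numpy as np


def validation_stats(human_pct, auto_pct):
    """Linear-fit agreement between per-session percent-freezing scores."""
    human = np.asarray(human_pct, dtype=float)
    auto = np.asarray(auto_pct, dtype=float)
    slope, intercept = np.polyfit(human, auto, 1)  # automated = slope * human + intercept
    r = np.corrcoef(human, auto)[0, 1]
    return {"r": float(r), "slope": float(slope), "intercept": float(intercept)}


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two binary freeze/no-freeze label sequences
    (e.g., per-observation samples scored by a human and by the software)."""
    a = np.asarray(labels_a, dtype=bool)
    b = np.asarray(labels_b, dtype=bool)
    p_observed = np.mean(a == b)
    # Chance agreement from each rater's marginal freezing rate.
    p_a, p_b = a.mean(), b.mean()
    p_chance = p_a * p_b + (1 - p_a) * (1 - p_b)
    if p_chance == 1.0:
        return float("nan")  # both raters constant and identical: kappa undefined
    return float((p_observed - p_chance) / (1 - p_chance))
```

An automated series that tracks the human scores exactly but sits 2 percentage points higher gives r = 1 and slope = 1 with intercept = 2, which is why the intercept must be checked alongside the correlation.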

Q4: What are common troubleshooting issues related to these parameters?

Table 2: Troubleshooting Guide for Parameter Configuration

| Problem | Potential Cause | Solution |
|---|---|---|
| System over-estimates freezing (High intercept, counts slight movements as freezing) | Motion Threshold is too HIGH and/or Minimum Freeze Duration is too SHORT [8]. | Gradually decrease the Motion Threshold and/or increase the Minimum Freeze Duration. Re-validate. |
| System under-estimates freezing (Low intercept, fails to count true freezing) | Motion Threshold is too LOW and/or Minimum Freeze Duration is too LONG [8]. | Gradually increase the Motion Threshold and/or decrease the Minimum Freeze Duration. Re-validate. |
| Good agreement in one context but not another | Differences in lighting, contrast, or camera white balance between contexts can affect the motion index calculation, even with identical parameters [31] [30]. | Calibrate cameras meticulously in all context configurations. Ensure consistent lighting and image contrast. You may need to find a compromise setting or validate parameters separately for each context. |

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Key Materials for Automated Fear Conditioning Experiments

| Item | Function / Description |
|---|---|
| Video Fear Conditioning System | An integrated system (e.g., from Med Associates) including sound-attenuating cubicles, conditioning chambers, and near-infrared (NIR) illumination to enable recording in darkness [8]. |
| Near-Infrared (NIR) Camera | A low-noise digital video camera sensitive to NIR light. It allows for motion tracking without providing visual cues to the rodent, which is critical for unbiased context learning [8]. |
| Contextual Inserts | Modular inserts (e.g., A-frames, curved walls, different floor types) that change the geometry and cues within the conditioning chamber. These are essential for context discrimination and generalization studies [8] [31]. |
| VideoFreeze or Similar Software | Software that uses a digital video-based algorithm to calculate a motion index and automatically score freezing behavior based on the configured Motion Threshold and Minimum Freeze Duration [8] [15]. |
| Calibration Tools | Tools for standardizing camera settings (like white balance) and ensuring consistent video quality across different sessions and contexts, which is vital for reliable automated scoring [31] [30]. |

A Step-by-Step Guide to Initial System Calibration and Context Configuration

Technical Support Center

Troubleshooting Guides

Issue 1: Poor Correlation Between Automated and Manual Freezing Scores

- Problem: The system's automated freezing measurements do not align with scores from a trained human observer.

- Solution:

- Re-calibrate with a new reference video: Ensure the calibration video has freezing behavior representing 10%-90% of the total video time. Scores outside this range can compromise calibration accuracy [32] [11].

- Verify video quality: Confirm your video meets minimum requirements (e.g., 384 x 288 pixels, 5 frames per second). Poor resolution or low frame rate can hinder detection [32] [11].

- Check for environmental artifacts: Look for mirror reflections (artifacts) or poor contrast between the animal and the background, which can distort movement tracking. Re-record under optimized conditions if necessary [32].

Issue 2: High Inter-user or Intra-user Variability in Results

- Problem: Different users, or the same user at different times, get inconsistent results with the same video set.

- Solution:

- Standardize the manual calibration process: Use the software's built-in calibration interface, where a single 2-minute manual quantification is used to automatically adjust system parameters for a whole set of videos. This reduces the need for individual parameter adjustments by each researcher [32] [11].

- Use a shared calibration file: Once a satisfactory calibration is achieved for a specific setup, save the MAT file containing the best parameters. Other users can then apply this file to videos recorded under identical conditions, ensuring consistency [32].

Issue 3: System Fails to Detect Small Movements or Distinguishes Poorly Between High and Low Freezing

- Problem: The software categorizes slight movements as freezing, or its performance is unreliable at the extremes of the freezing spectrum.

- Solution:

- Optimize the freezing threshold and minimum freezing time: These are two critical parameters. The freezing threshold is the number of non-overlapping pixels between frames below which the animal is considered freezing. The minimum freezing time is the shortest duration a movement suppression must last to be counted as a freezing epoch. Automated calibration software like Phobos systematically tests combinations of these parameters to find the optimum values that best match human scoring [32].

- Validate the linear fit: A system is only valid if it shows a strong linear fit (slope near 1 and intercept near 0) with human scores across the entire range of freezing values, not just a high correlation. Systems that merely correlate well may still consistently over- or under-estimate freezing [1].

Frequently Asked Questions (FAQs)

Q1: What are the minimum technical specifications for my video recordings to ensure reliable analysis? A1: Phobos has been validated with videos meeting the following minimum specifications. Using videos below these standards is not recommended and may yield unreliable results [32] [11].

Table: Minimum Video Recording Specifications

| Parameter | Minimum Specification | Note |
|---|---|---|
| Resolution | 384 × 288 pixels | A larger crop area is recommended if close to this minimum. |
| Frame Rate | 5 frames per second | Higher frame rates (e.g., 24-30 fps) are commonly used in studies. |
| Format | .avi | Ensure compatibility with your analysis software. |
| Contrast | High contrast between animal and background | Poor contrast reduces tracking accuracy [32]. |

Q2: How long does the initial calibration process take, and what is required from the user? A2: The core calibration process is designed to be efficient. It requires the user to manually score a single 2-minute reference video by pressing a button to mark the start and end of each freezing episode. The software then uses this manual scoring to automatically calibrate its parameters for the entire video set, a process that typically completes in minutes [32] [11].

Q3: What are the key performance metrics I should check to validate my automated system? A3: When validating your system against manual scoring, do not rely on correlation alone. A comprehensive validation should report the following metrics [1]:

- Pearson's r: Measures the strength of the correlation.

- Slope of the linear fit: Should be as close as possible to 1.

- Intercept of the linear fit: Should be as close as possible to 0.

- Comparison of group means: The average freezing scores from automated and manual scoring should be nearly identical.

Q4: My research involves assessing skilled forelimb function, not just freezing. Are there advanced metrics for this? A4: Yes, for complex motor tasks like the single pellet reach task, summary metrics like success rate are insufficient. The Kinematic Deviation Index (KDI) is a unitless score developed to quantify the overall difference between an animal's movement during a task and its optimal performance. It uses principal component analysis (PCA) on spatiotemporal data to provide a sensitive measure of motor function, useful for assessing recovery and compensation in neurological disorder models [33].

Essential Research Reagent Solutions

The following table details key materials and software solutions used in rodent freezing and motion analysis research.

Table: Essential Research Reagents and Tools

| Item Name | Type | Function / Explanation |
|---|---|---|
| Phobos | Software | A freely available, self-calibrating software for automatic measurement of freezing behavior. It reduces inter-observer variability and labor time [32] [11]. |
| VideoFreeze | Software | A commercial, "turn-key" system for fear conditioning that uses digital video and near-infrared lighting to score freezing and movement. It has been extensively validated [1]. |
| Kinematic Deviation Index (KDI) | Analytical Metric | A unitless summary score that quantifies the deviation of an animal's movement from an optimal performance during a skilled task, bridging the gap between simple success/failure metrics and complex kinematic data [33]. |
| Rodent Research Hardware System | Hardware Platform (NASA) | Provides a standardized platform for long-duration rodent experiments, including on the International Space Station. It includes habitats and a video system for monitoring rodent health and behavior [34] [35]. |
| Single Pellet Reach Task | Behavioral Assay | A standardized task to assess skilled forelimb function and motor learning in rodents. It is often recorded with high-speed cameras for subsequent kinematic analysis [33]. |

Experimental Protocols and Workflows

Detailed Protocol: System Calibration with Phobos Software

- Video Acquisition: Record a set of videos under consistent conditions (lighting, camera angle, chamber setup). Ensure they meet the minimum technical specifications.

- Manual Calibration Scoring:

- Select one representative 2-minute video as your reference.

- Using the software interface, press a button to mark the beginning of a freezing episode and press it again to mark the end.

- The software will create an output file with timestamps for your manual scoring.

- A warning will appear if freezing is less than 10% or more than 90% of the video; select a different reference video if this occurs [32] [11].

- Automated Parameter Optimization:

- The software analyzes your manual scoring in 20-second bins.

- It then tests hundreds of combinations of freezing threshold (e.g., 100-6000 pixels) and minimum freezing time (e.g., 0-2 seconds).

- It selects the parameter set that yields the highest correlation (Pearson's r) and a linear fit closest to a slope of 1 and an intercept of 0 when compared to your manual scores [32].

- Application and Validation:

- The optimized parameters are saved in a MAT file.

- Apply this calibration file to analyze other videos recorded under the same conditions.

- It is good practice to validate the system's performance on a subset of videos by comparing automated scores with those from a blinded human observer.
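
The manual-scoring and binning steps above involve some data bookkeeping: converting button-press timestamps into frame labels, checking the 10%-90% rule, and summarizing freezing in 20-second bins. The sketch below shows one way to do this, assuming episodes are stored as (start, end) times in seconds. It is not Phobos's own implementation, and the function names are hypothetical.

```python
import numpy as np


def episodes_to_frames(episodes, n_frames, fps):
    """Convert manual (start_s, end_s) freezing episodes into per-frame labels."""
    labels = np.zeros(n_frames, dtype=bool)
    for start_s, end_s in episodes:
        start = max(0, int(round(start_s * fps)))
        end = min(n_frames, int(round(end_s * fps)))
        labels[start:end] = True
    return labels


def binned_percent_freezing(labels, fps, bin_s=20.0):
    """Percent freezing per consecutive bin; a trailing partial bin is dropped."""
    frames_per_bin = int(round(bin_s * fps))
    n_bins = labels.size // frames_per_bin
    trimmed = labels[: n_bins * frames_per_bin].reshape(n_bins, frames_per_bin)
    return 100.0 * trimmed.mean(axis=1)


def reference_is_usable(labels, low=0.10, high=0.90):
    """Mirror the 10%-90% rule for choosing a calibration reference video."""
    fraction = labels.mean()
    return low <= fraction <= high
```

For a 2-minute reference recorded at the 5 fps minimum (600 frames) with episodes at 10-30 s and 70-80 s, 25% of the video is freezing, so the reference passes the check, and the six 20-second bins read 50, 50, 0, 50, 0, and 0%.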

Workflow Diagram: Automated Freezing Analysis Pipeline

Detailed Protocol: Forelimb Function Assessment with KDI

- Animal Preparation: House mice (e.g., C57BL/6J strain) in a controlled environment. Use a restricted diet to motivate performance in food-rewarded tasks [33].

- Task Training: Train mice on the Single Pellet Reach Task to establish a stable baseline performance. The task involves the mouse reaching through a slot to grasp a single food pellet [33].

- Video Recording: Use high-speed cameras to record the mice performing the task. Record multiple trials per session [33].

- Kinematic Data Processing:

- Labeling: Use specialized software to label key points on the mouse's body (e.g., digits, pellet) in each video frame.

- Data Extraction: Extract the spatial coordinates (x, y) of these labels over time to create movement trajectories.

- Data Cleaning: Apply filters to smooth the data and remove noise caused by tracking errors. Standardize the length of all trials for comparison [33].

- KDI Calculation:

- Perform a Principal Component Analysis (PCA) on the kinematic data from healthy, baseline performance trials to create a reference model of "optimal" movement.

- For each subsequent trial, project its kinematic data onto this reference model.

- The KDI is a unitless score calculated as the standardized difference from the reference performance. A lower KDI indicates movement closer to the optimal pattern, while a higher KDI indicates greater deviation and poorer performance [33].
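
The exact KDI formula is defined in [33] and is not reproduced here. As a rough illustration only, the sketch below resamples each trial to a fixed length (the standardization step), builds a PCA reference from baseline trials via SVD, and scores a new trial as the length of its standardized principal-component score vector, a Mahalanobis-style distance. The class name, scoring rule, and number of components are assumptions, not the published KDI, and this score ignores any deviation outside the retained components.

```python
import numpy as np


def resample_trial(traj, n_points=100):
    """Linearly resample a (n_frames, n_coords) trajectory to a fixed length."""
    traj = np.asarray(traj, dtype=float)
    old_t = np.linspace(0.0, 1.0, traj.shape[0])
    new_t = np.linspace(0.0, 1.0, n_points)
    return np.column_stack(
        [np.interp(new_t, old_t, traj[:, j]) for j in range(traj.shape[1])]
    )


class DeviationModel:
    """PCA reference fitted on baseline trials (hypothetical KDI-style score)."""

    def __init__(self, baseline_trials, n_components=3, n_points=100):
        self.n_points = n_points
        X = np.array([resample_trial(t, n_points).ravel() for t in baseline_trials])
        self.mean = X.mean(axis=0)
        # Principal axes from the SVD of the mean-centred baseline data.
        _, _, vt = np.linalg.svd(X - self.mean, full_matrices=False)
        self.components = vt[:n_components]
        scores = (X - self.mean) @ self.components.T
        self.score_sd = scores.std(axis=0, ddof=1)
        if np.any(self.score_sd == 0):
            raise ValueError("need more varied baseline trials than components")

    def score(self, trial):
        """Unitless deviation: length of the trial's standardized PC-score vector."""
        x = resample_trial(trial, self.n_points).ravel()
        z = ((x - self.mean) @ self.components.T) / self.score_sd
        return float(np.linalg.norm(z))
```

As in the protocol, a lower score means movement closer to the baseline pattern and a higher score means greater deviation.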

Workflow Diagram: Kinematic Deviation Index (KDI) Calculation

This guide provides technical support for researchers troubleshooting the validation of automated freezing detection systems against human observation, a critical step in optimizing motion index thresholds for rodent freezing detection research.

Frequently Asked Questions (FAQs)

What are the essential requirements for an automated freezing detection system? A system must accurately distinguish immobility from small movements like grooming, be resilient to video noise, and generate scores that correlate highly with human observers. Validation requires a near-zero y-intercept, a slope near 1, and a high correlation coefficient when computer scores are plotted against human scores [1].

My automated system consistently over-estimates freezing. What is the likely cause? This is often due to a Motion Index Threshold that is set too high or a Minimum Freeze Duration that is too short [8]. This causes the system to misinterpret subtle, brief movements as freezing. To correct this, try lowering the motion threshold and increasing the minimum duration required to classify an event as a freeze [8].

My automated system under-estimates freezing. How can I fix this? Under-estimation typically results from a Motion Index Threshold that is set too low or a Minimum Freeze Duration that is too long [8]. In this case, the system is failing to recognize periods of low movement as freezing. Adjusting the motion threshold upward and potentially shortening the minimum freeze duration can improve accuracy [8].

Why is it insufficient to only report a correlation coefficient during validation? A high correlation coefficient alone can be misleading, as it can be achieved with scores on a completely different scale or only across a small range of values [1]. A system could consistently double the human scores and still have a high correlation. Therefore, a linear fit with an intercept near 0 and a slope near 1 is essential to prove the scores are identical in both value and scale [1].
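
The doubling case is easy to verify numerically. With hypothetical scores, a system that always reports twice the human value yields a perfect correlation while the fitted slope is 2:

```python
import numpy as np

human = np.array([5.0, 15.0, 25.0, 35.0, 45.0])  # hypothetical percent freezing from an observer
software = 2.0 * human                           # a system that always doubles the human score

r = np.corrcoef(human, software)[0, 1]
slope, intercept = np.polyfit(human, software, 1)
# r is (numerically) 1 even though every automated score is twice the human score.
print(f"r = {r:.3f}, slope = {slope:.2f}")
```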

What is the definition of "freezing" I should provide to human scorers? The standard definition used in validation studies is the "suppression of all movement except that required for respiration" [1] [8]. Human scorers typically use instantaneous time sampling (e.g., judging every 8 seconds whether the animal is freezing or not) or continuous monitoring with a stopwatch [8].
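
When human scores come from instantaneous time sampling but the software scores every frame, the two estimates can diverge for short freezing bouts. The sketch below compares them on per-frame labels. Taking the observation at the end of each 8-second interval is an assumption (protocols differ), and the function names are hypothetical.

```python
import numpy as np


def instantaneous_sampling(labels, fps, interval_s=8.0):
    """Percent freezing estimated by checking one frame every interval_s seconds,
    as a human observer using instantaneous time sampling would."""
    labels = np.asarray(labels, dtype=bool)
    step = int(round(interval_s * fps))
    samples = labels[step - 1 :: step]  # observation at the end of each interval
    return 100.0 * samples.mean() if samples.size else float("nan")


def continuous_percent(labels):
    """Percent freezing from every frame (the stopwatch-style measure)."""
    return 100.0 * np.asarray(labels, dtype=bool).mean()
```

For a long freezing bout the two measures agree closely, but a single 6-second freeze in an 80-second session is 7.5% freezing by continuous measurement and can be missed entirely by 8-second sampling.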

Troubleshooting Guides

Problem 1: Over-Estimation of Freezing

Symptoms: System scores are higher than human scores, especially at low movement levels. The linear fit of computer vs. human scores has a y-intercept greater than 0 [8].

Solutions:

- Lower the Motion Index Threshold: This makes the system more sensitive to movement, preventing small motions from being classified as freezing.

- Increase the Minimum Freeze Duration: Require a longer period of immobility before counting a freeze episode, which helps filter out brief pauses in movement [8].

Problem 2: Under-Estimation of Freezing

Symptoms: System scores are lower than human scores. The linear fit of computer vs. human scores has a y-intercept less than 0 [8].

Solutions:

- Raise the Motion Index Threshold: This makes the system less sensitive, ensuring that low-level movements associated with freezing are correctly identified.

- Decrease the Minimum Freeze Duration: Allow shorter periods of immobility to be counted as freezing, capturing brief freeze episodes a human would score [8].

Problem 3: Poor Correlation Across All Freezing Levels

Symptoms: The scatter plot of computer vs. human scores shows a poor fit, with a low correlation coefficient and a slope far from 1 [1] [8].

Solutions:

- Re-validate System Parameters: Systematically test combinations of motion thresholds and minimum freeze durations. The optimal setting is the one that simultaneously maximizes correlation and achieves a slope near 1 and an intercept near 0 [8].

- Ensure High-Quality Video: Use a low-noise camera and consistent near-infrared (NIR) illumination to minimize video noise that can interfere with motion detection [8].

- Verify Chamber Configuration: Ensure that contextual inserts or other modifications to the chamber do not create poor contrast or shadows that disrupt the tracking software [8].

Experimental Protocols for Validation

Core Validation Methodology

This protocol is designed to find the optimal motion index threshold and minimum freeze duration for your specific setup [8].

- Video Sample Collection: Record video footage of rodents during fear conditioning tests that represents the full spectrum of freezing behavior, from high mobility to complete immobility [8].

- Human Scoring: Have one or more trained observers, who are blind to the experimental conditions, score the videos for freezing. The standard method is instantaneous time sampling every 8-10 seconds [1] [8].

- Automated Scoring: Run the same set of video files through your automated system using a range of different Motion Index Thresholds and Minimum Freeze Durations.

- Data Analysis and Parameter Selection: For each combination of parameters, perform a linear regression analysis comparing the automated percent-freeze scores to the human scores for all video samples. The goal is to find the parameter set that produces a linear fit with a slope closest to 1, a y-intercept closest to 0, and the highest correlation coefficient (r) [8].
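The regression step can be sketched as follows, assuming you already have automated percent-freeze scores for every video under each parameter combination, listed in the same order as the human scores. `fit_stats`, `evaluate_grid`, and the example scores are hypothetical.

```python
import numpy as np

def fit_stats(human_pct, auto_pct):
    """Linear fit of automated (y) on human (x) percent-freeze scores."""
    human = np.asarray(human_pct, dtype=float)
    auto = np.asarray(auto_pct, dtype=float)
    slope, intercept = np.polyfit(human, auto, 1)
    r = np.corrcoef(human, auto)[0, 1]  # Pearson correlation coefficient
    return {"slope": float(slope), "intercept": float(intercept), "r": float(r)}

def evaluate_grid(human_pct, auto_by_params):
    """auto_by_params maps (threshold, min_freeze_frames) to automated scores
    for the same videos, in the same order as human_pct."""
    return {params: fit_stats(human_pct, scores)
            for params, scores in auto_by_params.items()}

# Hypothetical scores for five validation videos.
human = [5, 25, 50, 75, 95]
auto_by_params = {
    (18, 30): [6, 24, 51, 74, 96],
    (25, 30): [0.9 * h + 8 for h in human],
}
results = evaluate_grid(human, auto_by_params)
for params, stats in results.items():
    print(params, {k: round(v, 3) for k, v in stats.items()})
```

Note that the (25, 30) entry is perfectly correlated with the human scores yet still has a slope of 0.9 and an intercept of 8, which is why slope and intercept are checked alongside r.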


Detailed Protocol: Contextual and Cued Fear Conditioning

This is an example of a standard fear conditioning procedure that can be used to generate videos for validation [36] [10].

- Animals: Adult C57BL/6J mice (8-16 weeks old) are commonly used [36].

- Apparatus: Sound-attenuating behavioral cabinets equipped with a rodent conditioning chamber (e.g., PhenoTyper), a grid floor connected to a scrambled shock generator, an infrared camera, and a speaker for delivering an auditory cue [36] [8].

- Training Phase:

- Place the mouse in the conditioning chamber.

- After a brief acclimatization period (e.g., 2-3 minutes), deliver a conditioned stimulus (CS), such as a 30-second tone, that co-terminates with a mild, scrambled footshock (e.g., 2 seconds, 0.75 mA) as the unconditioned stimulus (US) [36] [10].

- Deliver 1-3 tone-shock pairings in total, separated by fixed intervals.

- Remove the mouse from the chamber after a total session time of 5-10 minutes [36].

- Testing Phase (Contextual): 24 hours after training, return the mouse to the exact same chamber for a 5-minute session without any tones or shocks. Freezing to the context is measured [36] [10].

- Testing Phase (Cued): Either after the context test or in a separate group of animals, place the mouse in a novel, modified context. After a pre-CS period (e.g., 2-3 minutes), present the tone cue for several minutes in the absence of shock. Freezing to the cue is measured [36] [10].

Data Presentation

Table 1: Example Parameter Validation Results

The following table, inspired by data from Anagnostaras et al. (2010) and Herrera (2015), shows how different parameter combinations affect the correlation between automated and human scoring. The optimal setting balances high correlation with a slope and intercept closest to the ideal values [8].

| Motion Threshold (a.u.) | Min Freeze Duration (Frames) | Correlation Coefficient (r) | Slope of Linear Fit | Y-Intercept (% freezing) |
|---|---|---|---|---|
| 18 | 10 | 0.95 | 0.85 | 5.5 |
| 18 | 20 | 0.97 | 0.92 | 2.1 |
| 18 | 30 | 0.99 | 0.99 | 0.5 |
| 25 | 30 | 0.98 | 0.90 | 8.0 |
| 12 | 30 | 0.96 | 1.10 | -7.5 |

Note: a.u. = arbitrary units. Values are illustrative; optimal parameters must be determined empirically for your specific setup and software [8].
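The text does not say how to trade correlation, slope, and intercept against one another, so any ranking needs an explicit rule. The sketch below applies one hypothetical rule, an equal-weight distance from the ideal (r = 1, slope = 1, intercept = 0), to the illustrative Table 1 values; the intercept is divided by 100 so that its percent-freeze units sit on a scale roughly comparable to the other two terms.

```python
# Illustrative rows from Table 1: (threshold_au, min_freeze_frames, r, slope, intercept)
TABLE_1 = [
    (18, 10, 0.95, 0.85, 5.5),
    (18, 20, 0.97, 0.92, 2.1),
    (18, 30, 0.99, 0.99, 0.5),
    (25, 30, 0.98, 0.90, 8.0),
    (12, 30, 0.96, 1.10, -7.5),
]

def deviation_from_ideal(r, slope, intercept):
    """Hypothetical equal-weight distance from r = 1, slope = 1, intercept = 0.
    The intercept (percent-freeze points) is divided by 100 to rescale it."""
    return (1 - r) + abs(1 - slope) + abs(intercept) / 100

ranked = sorted(TABLE_1, key=lambda row: deviation_from_ideal(*row[2:]))
for threshold, min_frames, r, slope, intercept in ranked:
    score = deviation_from_ideal(r, slope, intercept)
    print(f"threshold={threshold:>2} min_frames={min_frames} deviation={score:.3f}")
```

Under this rule the 18 a.u. / 30-frame setting ranks first; a different weighting could reorder the lower rows, so the rule itself should be fixed before looking at the validation results.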

Table 2: Key Research Reagent Solutions

This table lists essential materials and software for establishing a fear conditioning and automated freezing detection setup [36] [1] [8].

| Item | Function in the Experiment |
|---|---|
| Rodent Conditioning Chamber | An enclosed arena (e.g., PhenoTyper) that holds the subject and is fitted with a grid floor and speaker for delivering controlled stimuli [36]. |
| Scrambled Footshock Generator | Delivers a mild footshock through the grid floor as the aversive unconditioned stimulus (US); scrambling the current across the bars prevents the rodent from avoiding the shock by its footing [36]. |
| Near-Infrared (NIR) Camera & Illumination | Provides consistent, non-visible lighting and video capture for reliable motion tracking across different visual contexts and during dark phases [8]. |
| Sound-Attenuating Cubicle | Isolates the experimental chamber from external noise and prevents interference between multiple simultaneous experiments [8]. |
| Automated Tracking Software | Software (e.g., VideoFreeze, EthoVision) that analyzes video to quantify animal movement and calculate freezing based on user-defined thresholds [36] [1] [8]. |
| Contextual Inserts | Modular walls and floor covers that alter the geometry, texture, and smell of the conditioning chamber to create distinct environments for context discrimination tests [8]. |


Contextual vs. Cued Fear Conditioning Paradigms

FAQs: Resolving Common Experimental Challenges

1. Our automated freezing scores do not match human observer ratings. What should we check?

Inconsistent results between automated and manual scoring are often due to incorrect motion index thresholds. To troubleshoot:

- Calibrate Your Threshold: Systematically adjust the pixel-change threshold in your software to achieve the best fit with scores from an experienced human observer. The threshold should be set so that only the complete absence of movement, apart from respiration, is scored as freezing [1].

- Validate the System: Ensure your automated system shows a high correlation and a linear fit with a slope near 1 and an intercept near 0 when compared to human scores across a wide range of freezing values. Correlation alone is insufficient [1].

- Check Environmental Factors: Ensure consistent lighting and that the rodent contrasts sharply with the background (e.g., a dark rodent on a white floor). Clean the apparatus between trials to prevent olfactory cues from confounding results [37].
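The warning that correlation alone is insufficient can be made concrete with hypothetical numbers: a system whose scores are a perfect linear function of the human scores, but compressed toward the middle of the range, still achieves r = 1.

```python
import numpy as np

human = np.array([0, 20, 40, 60, 80, 100], dtype=float)
auto = 0.5 * human + 30  # hypothetical system that compresses the freezing range

r = np.corrcoef(human, auto)[0, 1]
slope, intercept = np.polyfit(human, auto, 1)
print(f"r = {r:.3f}, slope = {slope:.2f}, intercept = {intercept:.1f}")
# r = 1.000, slope = 0.50, intercept = 30.0
```

Despite the perfect correlation, this system would report 30% freezing for an animal the observer scored at 0%, and 80% for one scored at 100%.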

2. Our negative control group (unpaired) shows high contextual freezing. What does this mean?

High contextual freezing in the unpaired group is an expected finding that validates your paradigm, as it demonstrates contextual conditioning. In an unpredictable preparation (unpaired group), the aversive US occurs without a predictive discrete cue. Consequently, the background context becomes the best predictor of danger, and animals will gradually learn to associate the context with the shock, showing a trial-by-trial increase in US-expectancy and freezing to the context [38].

3. Our experimental group shows poor cued freezing but strong contextual freezing. Is this a failed experiment?

Not necessarily. This dissociation can reveal important biological or methodological insights.

- Check the Appropriateness of Freezing: Freezing is not the only fear response. Its expression depends on the context and the distance to the feared cue. If escape is possible, other behaviors like flight may be more appropriate [39].

- Assess Cue Salience: The discrete CS (e.g., tone) may not be salient enough for your specific animal strain or experimental condition.

- Consider Neural Substrates: A selective deficit in cued fear with intact contextual fear can indicate specific neurological impacts, as these two types of memory rely on partially distinct neural circuits (e.g., involving the amygdala and hippocampus) [1].

4. We observe high variability in freezing behavior within the same experimental group. What factors should we investigate?

Rodent behavior is influenced by numerous factors beyond the experimental manipulation. Key sources of variability include:

- Handling and Habituation: Inadequate handling can increase stress, leading to generalized fear and elevated baseline freezing. Gentle techniques like "cupping" are preferred over "tail pickups" to reduce anxiety [39].

- Animal-Specific Factors: The individual "personality," sex, strain, and estrous cycle phase of the animals can significantly affect motivation and anxiety-like behavior [40] [39].

- Environmental Consistency: Test animals at the same time of day to control for circadian effects. Be aware that sounds inaudible to humans (ultrasonic or low-frequency) or subtle smells can distract animals and alter performance [39].

- Experimenter Effects: The sex of the experimenter can influence rodent stress levels and behavior. Where possible, standardize and blind the experimenter across groups [40].

Troubleshooting Guide: Motion Index Threshold Optimization

This guide addresses specific issues related to setting the motion index threshold, a critical parameter in automated freezing detection.

Problem: Failure to Distinguish Freezing from Immobility

- Symptoms: The system classifies quiet but active behaviors (e.g., sniffing, slight postural adjustments) as "freezing," leading to overestimation.

- Solution:

- Refine the Threshold: Lower the motion index threshold to make the system more sensitive to small movements. The threshold should be calibrated so that only the complete absence of movement, apart from respiration, is scored as freezing [1].

- Implement a Duration Filter: Configure the software to only count an episode as "freezing" if the immobility lasts for a minimum duration (e.g., 1-2 seconds), which helps filter out brief pauses in movement [37].

Problem: Inconsistent Performance Across Different Testing Contexts

- Symptoms: A threshold calibrated in the conditioning context performs poorly in a novel context during the cued test, or vice versa.

- Solution:

- Context-Specific Calibration: Perform separate threshold calibration and validation for each distinct testing apparatus (e.g., the square conditioning chamber and the triangular altered context chamber) [37].

- Standardize Visual Cues: Ensure the visual contrast between the animal and the background is identical and optimal across all chambers used in the experiment [37].
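One way to enforce context-specific calibration in an analysis pipeline is to store validated parameters per chamber and refuse to score a context that has none. This is a sketch under stated assumptions: the context names, `FreezeParams`, `params_for`, and the threshold for the altered context are hypothetical placeholders that must come from each chamber's own validation run.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FreezeParams:
    motion_threshold: float   # arbitrary units; scale depends on the software
    min_freeze_frames: int

# Hypothetical values: replace each entry with that chamber's validated result.
CALIBRATED = {
    "conditioning_square": FreezeParams(motion_threshold=18, min_freeze_frames=30),
    "altered_triangle": FreezeParams(motion_threshold=20, min_freeze_frames=30),
}

def params_for(context: str) -> FreezeParams:
    """Return validated parameters, or fail loudly for an uncalibrated context."""
    try:
        return CALIBRATED[context]
    except KeyError:
        raise ValueError(
            f"No validated parameters for context {context!r}; "
            "calibrate against human scores first"
        ) from None

print(params_for("conditioning_square"))
```

Failing loudly prevents a threshold tuned in the conditioning chamber from being silently reused in the altered context.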

Problem: System Fails to Detect a Wide Range of Freezing Intensities

- Symptoms: The system scores low-freezing animals well but fails to accurately score high-freezing animals, or vice versa.

- Solution:

- Demand High Validation Standards: Use a system that has been validated to produce a linear fit with human scores across the full range of freezing values (from 0% to 100%), with a slope near 1 and an intercept near 0 [1].

- Avoid Photobeam Systems with Low Resolution: Some older automated systems space their photobeams too far apart (more than 13 mm) and therefore lack the spatial resolution to detect subtle movements. Prefer modern video-based systems for higher precision [1].

Experimental Protocols & Data

Standardized Protocol for Contextual and Cued Fear Conditioning

The following protocol, adapted for use with an automated video analysis system, is designed to support reproducible and reliable scoring [37].

Day 1: Conditioning

- Setup: Use a square chamber with metal grid floors connected to a shock generator. Ensure even, bright lighting and a white background for optimal video tracking of dark-furred rodents.

- Habituation: Transfer the home cages to a soundproof waiting room at least 30 minutes before the experiment.

- Session:

- Place a mouse in the conditioning chamber.

- Allow a 120-second exploration period without stimuli.

- Present the Conditional Stimulus (CS), a 30-second white noise (e.g., 55 dB).

- During the last 2 seconds of the CS, deliver the Unconditional Stimulus (US), a mild footshock (e.g., 0.3 mA).

- Repeat this CS-US pairing three times, with intervals between pairings.

- Leave the mouse in the chamber for an additional 90 seconds after the final pairing to strengthen the context-shock association.

- Return the mouse to its home cage.

- Cleaning: Clean the grid floors and chamber walls thoroughly with 70% ethanol between animals to maintain electrical conductivity and remove olfactory cues.
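For programming stimulus hardware or aligning video with events, the Day 1 session can be expressed as a timeline. This is a minimal sketch: `conditioning_schedule` is hypothetical, and the 60-second interval between pairings (`iti_s`, measured from CS offset to the next CS onset) is an assumption, because the protocol does not specify it.

```python
def conditioning_schedule(baseline_s=120, cs_s=30, us_s=2, n_pairings=3,
                          iti_s=60, post_s=90):
    """Return ([(event, onset_s, offset_s), ...], total_session_s) for Day 1.

    iti_s (CS offset to next CS onset) is a hypothetical value; the
    protocol does not specify the interval between pairings.
    """
    events = []
    t = baseline_s  # CS onset of the current pairing
    for i in range(n_pairings):
        events.append(("CS", t, t + cs_s))
        events.append(("US", t + cs_s - us_s, t + cs_s))  # shock fills the last us_s of the CS
        t += cs_s
        if i < n_pairings - 1:
            t += iti_s
    return events, t + post_s

events, total = conditioning_schedule()
for name, onset, offset in events:
    print(f"{name}: {onset}-{offset} s")
print(f"session length: {total} s")
```

With the assumed 60-second interval, the session lasts 420 seconds (7 minutes), including the 90-second post-shock period.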

Day 2: Context Test (Hippocampus-Dependent Memory)

- Setup: Use the exact same conditioning chamber.

- Session:

- Place the mouse back into the conditioning chamber.

- Record freezing behavior for 5 minutes without any tone or shock presentation.

- The percentage of time spent freezing is the measure of contextual fear memory.

Day 2 or 3: Cued Test (Amygdala-Dependent Memory)

- Setup: Use a novel chamber with different visual, tactile, and olfactory cues (e.g., a triangular chamber with a flat acrylic floor, different lighting, and a different scent). This creates an "altered context."

- Session:

- Place the mouse in the novel chamber.

- Allow a 3-minute exploration period without the cue to establish a baseline freezing level in the new context.

- Present the same CS (white noise) for 3 minutes.

- The increase in freezing during the tone presentation, compared to the pre-tone baseline, is the measure of cued fear memory.
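The cued-memory measure can be computed from per-frame freezing labels, however they were produced. This sketch assumes the recording starts when the mouse enters the chamber and that the tone begins exactly at the end of the 3-minute baseline; `cued_freezing` and the example labels are hypothetical.

```python
import numpy as np

def cued_freezing(frozen, fps, baseline_s=180, tone_s=180):
    """Percent freezing in the pre-tone baseline and tone windows.

    frozen: per-frame booleans (True = freezing) starting at chamber entry.
    Returns (baseline_pct, tone_pct, tone_minus_baseline).
    """
    frozen = np.asarray(frozen, dtype=bool)
    n_base = int(round(baseline_s * fps))
    n_tone = int(round(tone_s * fps))
    if frozen.size < n_base + n_tone:
        raise ValueError("recording is shorter than the baseline and tone windows")
    base_pct = 100.0 * frozen[:n_base].mean()
    tone_pct = 100.0 * frozen[n_base:n_base + n_tone].mean()
    return base_pct, tone_pct, tone_pct - base_pct

fps = 30
baseline = np.zeros(180 * fps, dtype=bool)
baseline[:540] = True  # hypothetical: 18 s of freezing before the tone
tone = np.zeros(180 * fps, dtype=bool)
tone[:3240] = True     # hypothetical: 108 s of freezing during the tone
result = cued_freezing(np.concatenate([baseline, tone]), fps)
print(result)
```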

Quantitative Data from Validation Studies

Table 1. Comparison of Automated Freezing Detection Systems in Mice [1]