Optimizing User Experience in VR Neuropsychological Batteries: Strategies for Enhanced Ecological Validity and Clinical Adoption

Abstract

This article synthesizes current research and best practices for optimizing user experience (UX) in virtual reality neuropsychological batteries, targeting researchers and drug development professionals. It explores the critical role of UX in enhancing ecological validity, participant engagement, and data reliability. The content covers foundational principles of immersive VR assessment, methodological approaches for development and implementation, troubleshooting for common technical and physiological challenges, and validation strategies against traditional measures. By addressing these interconnected areas, the article provides a comprehensive framework for creating VR neuropsychological tools that effectively bridge laboratory assessment and real-world cognitive functioning, ultimately supporting more sensitive measurement in clinical trials and therapeutic development.

The Foundation of UX in VR Assessment: Bridging Ecological Validity and User Engagement

This technical support center provides guidance for researchers and professionals working with Virtual Reality (VR) neuropsychological batteries. A core challenge in this field is bridging the gap between highly controlled laboratory assessments and the complex, dynamic nature of real-world cognitive functioning. Ecological validity refers to the extent to which research findings and assessment results can be generalized to real-world settings, conditions, and populations [1]. For neuropsychological testing, this involves two key requirements: veridicality (where performance on a test predicts day-to-day functioning) and verisimilitude (where the test's requirements and conditions resemble those of daily life) [2]. The emergence of immersive VR tools, such as the Virtual Reality Everyday Assessment Lab (VR-EAL), offers new pathways to enhance ecological validity while maintaining experimental control [3] [4] [2]. This guide addresses specific issues you might encounter when integrating these tools into your research on user experience optimization.

Frequently Asked Questions (FAQs)

1. What is the difference between ecological validity and general external validity?

While both concern generalizability, ecological validity is a specific type of external validity that focuses exclusively on how well research findings translate to real-world, everyday scenarios [1]. External validity considers generalizability to different settings, populations, or times more broadly. Ecological validity zeroes in on the accuracy of replicating real-life conditions in research.

2. Our lab is considering a shift from traditional paper-and-pencil tests to a VR battery like the VR-EAL. What are the key benefits we can demonstrate in our research proposal?

Immersive VR neuropsychological batteries offer several evidence-based advantages over traditional methods:

- Enhanced Ecological Validity: They simulate real-life situations, leading to better generalization of performance outcomes to everyday life [3] [2].

- Shorter Administration Time: Studies have shown that batteries like the VR-EAL can have a shorter administration time compared to extensive paper-and-pencil batteries [3].

- Improved Participant Engagement: VR tasks are consistently rated as more pleasant and engaging by participants, which can reduce attrition and improve data quality [3] [4].

3. A common criticism we face is that VR assessments induce cybersickness, potentially confounding results. How can we address this?

Select tools that have been specifically validated for minimal cybersickness. For instance, the VR-EAL has been documented as not inducing cybersickness and offering a pleasant testing experience [3] [4]. Furthermore, you can implement standardized questionnaires, such as the iUXVR (index of User Experience in immersive Virtual Reality), to quantitatively monitor and control for VR sickness symptoms in your study sample [5].

4. How can we convincingly argue that our VR-based measures are valid for assessing cognitive constructs?

It is essential to use and reference VR tools that have undergone formal validation studies. The core argument is supported by convergent and construct validity. For example, the VR-EAL's scores have been shown to be significantly correlated with equivalent scores on established paper-and-pencil neuropsychological tests [3]. Presenting these correlation data helps establish that the VR tool is measuring the intended cognitive constructs (e.g., prospective memory, executive function).
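The convergent-validity argument above rests on correlating paired scores. The sketch below shows the basic calculation under stated assumptions: the score lists are hypothetical illustration data, and the function computes a plain Pearson coefficient rather than the Bayesian Pearson's correlation used in the VR-EAL validation work.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length lists with at least 3 scores")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary within each list")
    return sxy / sqrt(sxx * syy)


# Hypothetical paired scores, one pair per participant (not real study data).
vr_scores = [12, 15, 9, 18, 14, 11, 16, 13]
paper_scores = [10, 14, 8, 17, 12, 11, 15, 12]

r = pearson_r(vr_scores, paper_scores)
print(f"Convergent validity estimate: r = {r:.2f}")
```

In a real study the coefficient would be reported with an interval or Bayes factor, and each VR task would be paired with the traditional test that targets the same construct.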

Troubleshooting Guides

Issue 1: Low Ecological Validity in Assessments

Problem: Traditional, construct-driven tests (e.g., Wisconsin Card Sorting Test, Stroop Test) show poor correlation with real-world functional performance, limiting the practical application of your findings [2].

Solution: Adopt a function-led testing approach using immersive VR.

- Recommended Protocol:

  - Identify Target Behavior: Define the real-world cognitive function you wish to assess (e.g., planning a shopping trip, following a new route).

  - Select/Develop a VR Environment: Choose a validated VR environment that requires the same sequence of cognitive actions as the real-world behavior. The VR-EAL is one such battery designed for this purpose [3] [4].

  - Benchmark Against Traditional Tests: In your methodology, include correlations with traditional paper-and-pencil tests to demonstrate convergent validity [3].

  - Measure User Experience: Use standardized tools like the iUXVR questionnaire to assess key components of the VR experience, including usability, sense of presence, and aesthetics, which are crucial for ecological validity [5].

Issue 2: Participant Cybersickness and Poor User Experience

Problem: Participants report symptoms of cybersickness (headaches, nausea) or find the VR interface unintuitive, leading to poor data quality and high dropout rates.

Solution: Proactively optimize the user experience (UX) and monitor participant responses.

- Recommended Protocol:

  - Pre-Study Hardware Selection: Opt for headsets that use inside-out tracking (e.g., Meta Quest series). This technology eliminates complex external sensor setups, reduces costs, and enhances portability, making the environment more adaptable and user-friendly [6].

  - UX Assessment: Integrate the iUXVR questionnaire or similar instruments into your study design. This allows you to measure and optimize five key components: usability, sense of presence, aesthetics, VR sickness, and emotions [5].

  - Content and Interaction Optimization: Research suggests that leveraging large language models (LLMs) can further optimize UX by improving the accuracy of voice interactions, enabling personalized content recommendations, and creating more naturalistic dialogues within the VR environment [7].

Issue 3: Validating VR Assessments for Occupational Safety and Health (OSH)

Problem: When using VR for workstation design or safety training, it is difficult to establish that operator behavior and risk perception in the virtual environment accurately reflect what would occur in the real world [8].

Solution: Systematically evaluate the ecological validity of your VR simulation against real-world benchmarks.

- Recommended Protocol:

  - Break Down by Behavioral Theme: Do not treat behavior as monolithic. Assess validity separately for specific components [8]:

    - Spatial Perception: Can users accurately judge distances and dimensions?

    - Movement: Does motion in VR closely mimic real-world biomechanics?

    - Cognition: Are decision-making processes and cognitive load similar?

    - Stress & Risk Perception: Does the scenario elicit a comparable level of physiological and subjective stress?

  - Conduct Correlation Studies: Compare performance metrics and physiological data (e.g., heart rate for stress) collected in the VR simulation with data from the real-world task or a high-fidelity simulator [8].

  - Acknowledge and Document Limitations: Be transparent about technological factors (e.g., display resolution, haptic feedback fidelity) that may currently limit perfect validity, and frame your VR tool as effective for comparative design analysis even if absolute measures may differ [8].

Data & Experimental Protocols

Quantitative Validation of a VR Neuropsychological Battery

The table below summarizes key quantitative findings from a validation study of the VR-EAL, illustrating the type of data you should collect or reference when justifying the use of a VR tool [3].

Table 1: Key Comparative Metrics for the VR-EAL vs. Paper-and-Pencil Battery

| Metric | VR-EAL Performance | Traditional Paper-and-Pencil Battery | Statistical Significance |
|---|---|---|---|
| Correlation with traditional tests | Significant correlations with equivalent scores | Benchmark | Yes (via Bayesian Pearson's correlation) |
| Ecological Validity (similarity to real-life tasks) | Significantly higher | Lower | Yes (via Bayesian t-tests) |
| Administration Time | Shorter | Longer | Yes |
| Pleasantness | Significantly higher | Lower | Yes |
| Cybersickness Induction | No induction reported | Not applicable | Confirmed |

Experimental Workflow for Validating a VR Simulation

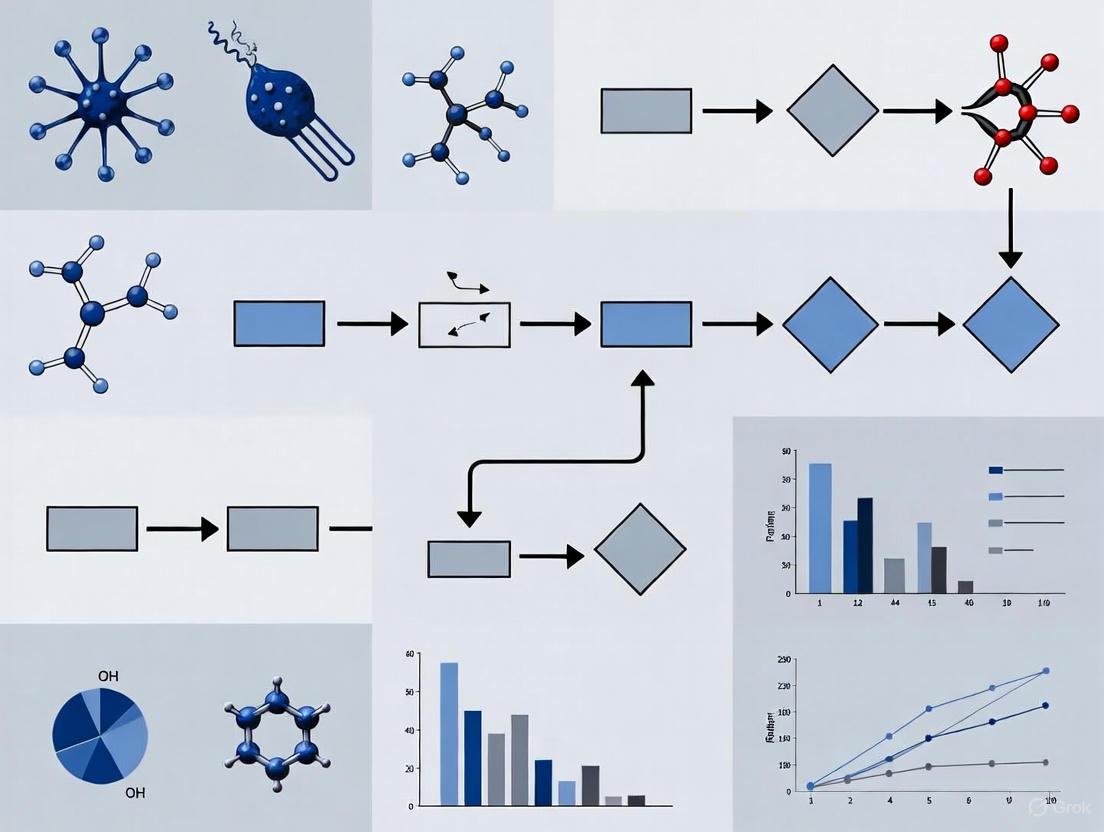

The following diagram outlines a general workflow for establishing the ecological validity of a VR simulation in a research context, synthesizing methodologies from the provided literature.

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key Components for VR Neuropsychological Research

| Item | Function in Research |
|---|---|
| Immersive VR Headset (with inside-out tracking) | Provides the visual and auditory immersion necessary for presence. Inside-out tracking (e.g., Meta Quest, Microsoft HoloLens) simplifies setup, increases portability, and reduces costs, facilitating research in diverse settings [6]. |
| Validated Neuropsychological VR Battery (e.g., VR-EAL) | A standardized software suite designed to assess everyday cognitive functions (prospective memory, executive function) with enhanced ecological validity and built-in metrics [3] [4]. |
| UX Assessment Questionnaire (e.g., iUXVR) | A standardized instrument to measure key components of the user experience, including usability, sense of presence, aesthetics, VR sickness, and emotions. Critical for optimizing and controlling the subjective participant experience [5]. |
| Physiological Data Acquisition System | Devices to measure physiological signals (e.g., heart rate, galvanic skin response) provide objective data for comparing stress and affective responses between virtual and real-world settings, strengthening validity arguments [5] [9]. |
| Traditional Neuropsychological Test Battery | Established paper-and-pencil or computerized tests (e.g., Wisconsin Card Sorting Test, Stroop) are necessary for establishing the convergent validity of the new VR tool [3] [2]. |

The Critical Link Between UX and Data Reliability in Cognitive Assessment

Troubleshooting Guide: Common UX Issues and Solutions

Low User Motivation and Engagement

Problem: Participants lack the motivation to complete the VR assessment, leading to incomplete data or poor effort.

- Solution: Implement game-like elements and narrative structures. The VR-CAT system increased engagement by framing assessment tasks within a "rescue mission" storyline in which children saved an animated character named "Lubdub" from a castle [10]. Participants in both the clinical and control groups reported high levels of enjoyment and motivation.

Cybersickness and Adverse Effects

Problem: Users experience nausea, dizziness, disorientation, or postural instability during or after VR sessions.

- Solution:

  - Always administer a Simulator Sickness Questionnaire (SSQ) before and after sessions to quantify symptoms [10].

  - Optimize session length: the VR-CAT assessment completes in approximately 30 minutes [10].

  - Ensure stable frame rates and minimize latency through technical optimization.

  - Provide adequate breaks between tasks to reduce sensory conflict.

  - Consider individual susceptibility: patients with acquired brain injury (ABI) may be more vulnerable to cybersickness [11].

Non-Intuitive Controls and Interaction

Problem: Participants struggle with the VR interface, creating frustration and introducing measurement error.

- Solution: For non-gaming populations, prefer immersive VR with naturalistic interactions over controller-based systems. Studies show natural interactions facilitate comparable performance between gamers and non-gamers, while non-immersive VR with controllers can be challenging for older adults and clinical populations [11]. The CAVIRE system successfully used hand tracking and voice commands for more intuitive interaction [12].

Ecological Validity Concerns

Problem: VR assessment scores don't correlate well with real-world functional performance.

- Solution: Design environments and tasks that mirror Activities of Daily Living (ADLs). The CAVIRE-2 system demonstrated improved ecological validity through 13 virtual scenes simulating both basic and instrumental ADLs in familiar community settings [13]. This verisimilitude approach creates cognitive demands that better mirror real-world challenges.

Frequently Asked Questions (FAQs)

Q: How does user experience directly impact data reliability in VR cognitive assessment? A: UX factors directly influence multiple psychometric properties. Poor UX can lead to:

- Increased participant dropout, creating selection bias

- Inconsistent effort levels, adding measurement error

- Cybersickness symptoms that artificially depress performance scores

- Practice effects that vary based on individual technology comfort levels

Well-designed VR systems can achieve good psychometric properties when UX issues are properly addressed: the CAVIRE-2 validation, for example, reported good test-retest reliability (ICC = 0.89) and internal consistency (Cronbach's α = 0.87) [13].
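For readers who want to check internal consistency on their own task scores, the following is a minimal sketch of Cronbach's α using the standard formula α = k/(k-1) · (1 - Σ item variances / variance of total score). The data layout (one row per participant, one column per task) and the example scores are assumptions for illustration, not data from the cited studies.

```python
from statistics import variance


def cronbach_alpha(score_rows):
    """Cronbach's alpha for a list of participants, each a list of k task scores."""
    if len(score_rows) < 2:
        raise ValueError("need at least two participants")
    k = len(score_rows[0])
    if k < 2 or any(len(row) != k for row in score_rows):
        raise ValueError("every participant needs the same k >= 2 task scores")
    # Sample variance of each task across participants.
    item_variances = [variance([row[i] for row in score_rows]) for i in range(k)]
    # Sample variance of each participant's total score.
    total_variance = variance([sum(row) for row in score_rows])
    if total_variance == 0:
        raise ValueError("total scores must vary across participants")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical scores: 5 participants x 4 VR tasks.
example_scores = [
    [7, 8, 6, 7],
    [5, 5, 4, 6],
    [9, 9, 8, 9],
    [4, 5, 3, 4],
    [6, 7, 6, 6],
]
print(f"Cronbach's alpha = {cronbach_alpha(example_scores):.2f}")
```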

Q: What specific UX metrics should we track during VR cognitive assessments? A: Implement a multi-metric approach:

Table: Essential UX Metrics for VR Cognitive Assessment

| Metric Category | Specific Measures | Target Values |
|---|---|---|
| Usability | System Usability Scale (SUS) | >68 (above average) |
| User Experience | User Experience Questionnaire (UEQ) | Positive ratings across 6 dimensions |
| Adverse Effects | Simulator Sickness Questionnaire (SSQ) | Minimal score increases post-session |
| Engagement | Session completion rates, task persistence | >90% completion |
| Efficiency | Time to complete assessment, error rates | Benchmark against norms |

One study found SUS scores of 52.3-55.1 in VR cognitive training, below the 68-point benchmark in the table above, indicating room for improvement even in implemented systems [14].
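Because the SUS target above is expressed on the 0-100 scale, raw questionnaire responses have to be converted first. The sketch below applies the standard SUS scoring rule (odd-numbered items contribute rating minus 1, even-numbered items contribute 5 minus rating, and the sum is multiplied by 2.5). The function name and input format are illustrative assumptions.

```python
def sus_score(ratings):
    """Convert ten SUS item ratings (1-5, in questionnaire order) to a 0-100 score."""
    if len(ratings) != 10 or any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("SUS needs exactly ten ratings, each an integer from 1 to 5")
    total = 0
    for item_number, rating in enumerate(ratings, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # positively worded items
        else:
            total += 5 - rating   # negatively worded items
    return total * 2.5


# Hypothetical participant responses.
participant = [4, 2, 4, 2, 3, 2, 4, 3, 4, 2]
score = sus_score(participant)
print(score, "above benchmark" if score > 68 else "at or below benchmark")
```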

Q: How can we balance ecological validity with experimental control? A: This fundamental challenge can be addressed through:

- Structured realism: Create virtual environments that simulate real-world scenarios (e.g., supermarkets, streets) while maintaining standardized conditions [11]. The VR-EAL system demonstrates how to achieve this balance while meeting National Academy of Neuropsychology criteria [4].

- Systematic variation: Introduce realistic distractions and multi-tasking demands gradually while preserving core measurement constructs.

- Iterative design: Test environments with both healthy controls and target populations to refine the balance between realism and controllability.

Q: What technical specifications are crucial for reliable VR cognitive assessment? A: Based on validated systems:

Table: Technical Specifications from Validated VR Assessment Systems

| Component | VR-CAT System [10] | CAVIRE System [12] |
|---|---|---|
| Display | HTC VIVE VR viewer | HTC Vive Pro HMD |
| Tracking | Position tracking | Lighthouse sensors + Leap Motion for hand tracking |
| Interaction | Standard controllers | Natural hand movements, head tracking, and voice commands |
| Software | Windows-based VR application | Unity game engine with API for voice recognition |
| Assessment Time | ~30 minutes | ~10 minutes for CAVIRE-2 [13] |
| Cognitive Domains | Executive functions (3 domains) | All six DSM-5 cognitive domains |

Q: How can we minimize cybersickness without compromising assessment validity? A: Implement these evidence-based strategies:

- Technical optimization: Maintain high, stable frame rates (90 FPS or higher) and minimize latency [11]

- Interaction design: Avoid artificial locomotion when possible; use teleportation or fixed viewpoints

- Progressive exposure: Begin with shorter sessions and gradually increase duration

- Environmental stability: Include stationary reference points in the visual field

- Participant screening: Identify susceptible individuals early and implement accommodations

Studies demonstrate that properly implemented VR can be well-tolerated, with one study reporting no participant dropouts due to VR-induced symptoms [12].

Experimental Protocols for UX Validation

Protocol 1: Usability Testing for VR Cognitive Assessment Tools

Purpose: To systematically evaluate the user experience of a VR cognitive assessment system before full validation studies.

Materials:

- VR hardware system (HMD, sensors, input devices)

- Target population participants (appropriate sample size)

- Standardized usability measures (SUS, UEQ, SSQ)

- Video recording equipment for session documentation

- Think-aloud protocol instructions

Procedure:

- Pre-session assessment: Administer SSQ and collect baseline data

- Orientation: Provide standardized introduction to VR controls

- Task completion: Participants complete VR cognitive tasks with think-aloud commentary

- Observation: Researchers note interaction difficulties, confusion points, and behavioral indicators of frustration or engagement

- Post-session measures: Administer SUS, UEQ, and SSQ again

- Structured interview: Gather qualitative feedback on specific system aspects

Success Criteria:

- SUS scores above 68 (above average)

- No significant increase in SSQ scores

- High task completion rates (>90%)

- Positive qualitative feedback on engagement and intuitiveness
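The quantitative success criteria above can be checked automatically once sessions are scored. This is a sketch under assumptions: `SessionResult` and `evaluate_usability` are hypothetical names, and the `ssq_increase_tolerance` default is a placeholder, because the protocol does not define what counts as a "significant" SSQ increase. A real study would replace it with a pre-registered statistical test.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SessionResult:
    sus: float        # SUS score on the 0-100 scale
    ssq_pre: float    # SSQ total score before the session
    ssq_post: float   # SSQ total score after the session
    completed: bool   # whether the participant finished all tasks


def evaluate_usability(sessions, sus_cutoff=68.0, completion_cutoff=0.90,
                       ssq_increase_tolerance=5.0):
    """Summarize sessions against the protocol's quantitative success criteria."""
    if not sessions:
        raise ValueError("no sessions to evaluate")
    mean_sus = mean(s.sus for s in sessions)
    completion_rate = sum(s.completed for s in sessions) / len(sessions)
    mean_ssq_change = mean(s.ssq_post - s.ssq_pre for s in sessions)
    return {
        "mean_sus": mean_sus,
        "completion_rate": completion_rate,
        "mean_ssq_change": mean_ssq_change,
        "sus_ok": mean_sus > sus_cutoff,
        "completion_ok": completion_rate > completion_cutoff,
        # Assumed tolerance, not a validated threshold.
        "ssq_ok": mean_ssq_change <= ssq_increase_tolerance,
    }


# Hypothetical pilot data.
pilot = [
    SessionResult(sus=75.0, ssq_pre=3.74, ssq_post=7.48, completed=True),
    SessionResult(sus=70.0, ssq_pre=0.0, ssq_post=3.74, completed=True),
    SessionResult(sus=62.5, ssq_pre=7.48, ssq_post=7.48, completed=False),
]
report = evaluate_usability(pilot)
print(report)
```

Qualitative feedback on engagement and intuitiveness still has to be reviewed by the research team; it is deliberately left out of the automated check.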

Protocol 2: Establishing Test-Retest Reliability

Purpose: To determine whether UX improvements contribute to more consistent performance across sessions.

Materials:

- Fully implemented VR cognitive assessment system

- Participant sample representing target population

- Controlled testing environment

- Data recording and scoring system

Procedure:

- Session 1: Administer full VR cognitive assessment with UX measures

- Retest interval: Allow 1-4 weeks between sessions (long enough to reduce practice effects, short enough that the underlying cognitive ability is unlikely to have changed)

- Session 2: Repeat identical assessment procedure

- Data analysis: Calculate intraclass correlation coefficients (ICC) for composite scores and individual tasks

Interpretation:

- ICC > 0.90 indicates excellent reliability

- ICC 0.75-0.90 indicates good reliability

- ICC < 0.75 suggests need for UX improvements to reduce measurement error

The VR-CAT study demonstrated modest test-retest reliability across two independent assessments [10], while CAVIRE-2 achieved ICC of 0.89 [13].
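The ICC calculation and the interpretation bands above can be sketched as follows. The choice of ICC form is an assumption: this example uses the two-way random-effects, absolute-agreement, single-measurement form, often written ICC(2,1), and the cited studies may have used a different form. The scores are hypothetical.

```python
def icc_2_1(scores):
    """ICC(2,1) for a list of participants, each a list of k session scores."""
    n = len(scores)
    k = len(scores[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need >= 2 participants with the same >= 2 sessions each")
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    # Two-way ANOVA sums of squares: participants, sessions, residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("scores have no variance")
    return (ms_rows - ms_error) / denominator


def interpret_icc(icc):
    """Map an ICC value onto the interpretation bands used in this protocol."""
    if icc > 0.90:
        return "excellent"
    if icc >= 0.75:
        return "good"
    return "below 0.75: review UX sources of measurement error"


# Hypothetical composite scores: [session 1, session 2] per participant.
retest = [[10, 12], [12, 14], [15, 17]]
value = icc_2_1(retest)
print(f"ICC(2,1) = {value:.2f} ({interpret_icc(value)})")
```

In this example every participant improves by the same amount between sessions, so rank order is perfectly preserved but absolute agreement is not. An absolute-agreement ICC penalizes this kind of systematic practice effect.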

Research Reagent Solutions

Table: Essential Components for VR Cognitive Assessment Research

| Component | Function | Examples from Literature |
|---|---|---|
| Immersive HMD | Creates sense of presence and reduces external distractions | HTC Vive Pro [12], Meta Quest 2 [14] |
| Natural Interaction Interfaces | Enables intuitive control for non-gaming populations | Leap Motion for hand tracking [12], voice command systems |
| Ecologically Valid Environments | Provides verisimilitude for real-world cognitive demands | Virtual supermarkets [11], streets [11], ADL simulations [13] |
| Standardized UX Measures | Quantifies user experience dimensions | System Usability Scale (SUS) [14], User Experience Questionnaire (UEQ) [14] |
| Adverse Effects Measures | Monitors cybersickness and other side effects | Simulator Sickness Questionnaire (SSQ) [10], Cybersickness in VR Questionnaire (CSQ-VR) [14] |
| Automated Scoring Algorithms | Reduces administrator variability and bias | CAVIRE's automated scoring [12], VR-CAT's composite scores [10] |

Visualizing the UX-Reliability Relationship

Traditional pen-and-paper neuropsychological tests have long served as the standard for cognitive assessment, but they face significant limitations in ecological validity—the ability to predict real-world functioning based on test performance. These conventional methods often assess cognitive functions in isolation, lacking the complexity and multi-sensory nature of everyday cognitive challenges. Virtual reality (VR) neuropsychological assessment directly addresses these shortcomings by creating immersive, ecologically valid testing environments that simulate real-world scenarios while maintaining experimental control [15] [4].

The Virtual Reality Everyday Assessment Lab (VR-EAL) represents a pioneering approach in this field, demonstrating how immersive VR head-mounted displays can serve as effective research tools that overcome the ecological validity problem while avoiding the pitfalls of VR-induced symptoms and effects (VRISE) that have historically hindered widespread adoption [15]. By embedding cognitive tasks within realistic environments, VR-based assessment captures the complexity of daily cognitive challenges more effectively than traditional methods, offering researchers and clinicians a more accurate prediction of how individuals will function in their actual environments [4].

Technical Support Center

Hardware Troubleshooting Guides

Table 1: Common VR Hardware Issues and Solutions

| Problem Category | Specific Issue | Troubleshooting Steps | Prevention Tips |
|---|---|---|---|
| Headset Issues | Blurry Image | 1. Instruct user to move headset up/down until vision is clear; 2. Tighten headset dial; 3. Adjust headset strap [16] | Ensure proper fit during initial setup |
| Headset Issues | Headset Not Detected | 1. Verify link box is ON; 2. Unplug/reconnect all link box connections; 3. Reset headset in SteamVR [16] | Check connections before starting experiments |
| Headset Issues | Image Not Centered | 1. Instruct user to look straight ahead; 2. Press 'C' button on keyboard to recalibrate [16] | Recalibrate at start of each session |
| Tracking Problems | Base Station Not Detected | 1. Ensure power connected (green light on); 2. Remove protective plastic; 3. Verify clear line of sight; 4. Run automatic channel configuration [16] | Maintain clear line of sight between base stations and headset |
| Tracking Problems | Lagging Image/Tracking Issues | 1. Check frame rate (press 'F' key, target ≥90 fps); 2. Restart computer if fps low; 3. Verify base station setup; 4. Perform SteamVR room setup [16] | Close unnecessary applications before VR sessions |
| Controller Issues | Controller Not Detected | 1. Ensure controller is turned on and charged; 2. Re-pair via SteamVR; 3. Right-click controller icon → "Pair Controller" [16] | Maintain regular charging schedule for controllers |

Software & Performance FAQs

Q: What measures can reduce cybersickness in participants during neuropsychological testing? A: Implement several strategies: use high frame rates (maintain ≥90 FPS), employ virtual horizon frameworks that provide stable visual references, limit field of view during movement sequences, and gradually increase exposure duration. The VR-EAL demonstrated minimal cybersickness symptoms during 60-minute sessions through these optimizations [15] [17] [18].

Q: How can I ensure consistent performance across different VR hardware setups? A: Standardize these key parameters: frame rate (maintain ≥90 FPS), display resolution, tracking precision, and refresh rate. Conduct regular performance checks using the frame rate display (F key in SteamVR) [16]. The VR-EAL validation studies utilized consistent hardware specifications to ensure reliable results [15].
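One way to make the frame-rate check repeatable across setups is to log frame presentation timestamps during a session and summarize them afterwards. The sketch below assumes such timestamps are available from your own application. `frame_rate_report` is a hypothetical helper, and treating a frame as "slow" when it exceeds 1.5 times the frame budget is an arbitrary illustrative threshold.

```python
def frame_rate_report(frame_times_s, target_fps=90.0, slow_factor=1.5):
    """Summarize frame pacing from presentation timestamps given in seconds."""
    if len(frame_times_s) < 2:
        raise ValueError("need at least two frame timestamps")
    intervals = [b - a for a, b in zip(frame_times_s, frame_times_s[1:])]
    if any(dt <= 0 for dt in intervals):
        raise ValueError("timestamps must be strictly increasing")
    duration = frame_times_s[-1] - frame_times_s[0]
    budget = 1.0 / target_fps  # time available per frame at the target rate
    slow_frames = sum(1 for dt in intervals if dt > budget * slow_factor)
    mean_fps = len(intervals) / duration
    return {
        "mean_fps": mean_fps,
        "worst_frame_ms": max(intervals) * 1000.0,
        "slow_frame_fraction": slow_frames / len(intervals),
        "meets_target": mean_fps >= target_fps * 0.99 and slow_frames == 0,
    }


# Hypothetical log: one second of steady 90 FPS, then a single 50 ms hitch.
steady = [i / 90 for i in range(91)]
with_hitch = steady + [steady[-1] + 0.05]
print(frame_rate_report(steady))
print(frame_rate_report(with_hitch))
```

Reporting the worst frame alongside the mean matters because a session can average close to 90 FPS while still containing hitches that participants notice.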

Q: What software solutions help maintain data integrity in VR neuropsychological assessments? A: Implement automated data logging that captures response times, movement patterns, and behavioral metrics. Use version control for software updates, and ensure encrypted data transmission where required. The VR-EAL development team emphasized data security and integrity throughout their implementation [4].
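As an illustration of automated logging, the sketch below writes one row per trial to CSV and computes a SHA-256 checksum so that later modification of the file can be detected. The field names and the `TrialRecord` type are hypothetical, and a checksum only addresses integrity. It is not a substitute for the encrypted transmission mentioned above.

```python
import csv
import hashlib
import io
from dataclasses import asdict, dataclass, fields


@dataclass(frozen=True)
class TrialRecord:
    participant_id: str
    task: str
    trial: int
    response_time_ms: float
    correct: bool
    software_version: str  # ties each row to the exact build that produced it


def write_trials(records, stream):
    """Write trial records as CSV with a header row."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(TrialRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


def sha256_of(text):
    """Checksum to store alongside the log for later integrity checks."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# Hypothetical session data written to an in-memory buffer.
buffer = io.StringIO()
write_trials(
    [
        TrialRecord("P001", "prospective_memory", 1, 812.5, True, "1.4.2"),
        TrialRecord("P001", "prospective_memory", 2, 1043.0, False, "1.4.2"),
    ],
    buffer,
)
log_text = buffer.getvalue()
print(log_text)
print("sha256:", sha256_of(log_text))
```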

Q: How can I optimize the visual quality without compromising performance? A: Employ several techniques: use optimized texture compression, implement Level of Detail (LOD) systems that adjust complexity based on distance, leverage foveated rendering if eye-tracking is available, and maintain consistent lighting calculations. These approaches helped VR-EAL achieve high visual quality without inducing cybersickness [15] [18].

Experimental Protocols & Methodologies

VR-EAL Development and Validation Protocol

The Virtual Reality Everyday Assessment Lab (VR-EAL) was developed following comprehensive guidelines for immersive VR software in cognitive neuroscience, with rigorous attention to both technical implementation and neuropsychological standards [15].

Table 2: VR-EAL Development Workflow

| Development Phase | Key Activities | Quality Assurance Measures | Outcome Metrics |
|---|---|---|---|
| Conceptual Design | Define target cognitive domains; create realistic scenarios; storyboard narrative flow | Expert neuropsychologist review; ecological validity assessment | Scenario blueprint approved by clinical panel |
| Technical Implementation | Unity engine development; SDK integration; asset optimization | Frame rate monitoring; cross-platform testing | Stable performance ≥90 FPS across all scenarios |
| VRISE Mitigation | Implement virtual horizon frames; optimize movement mechanics; reduce visual conflicts | Simulator Sickness Questionnaire (SSQ); continuous user feedback | SSQ scores below cybersickness threshold |
| Validation Testing | Participant trials (n=25, ages 20-45); comparative assessment with traditional tests; psychometric evaluation | VR Neuroscience Questionnaire (VRNQ); standard neuropsychological measures | High VRNQ scores across all domains [15] |

The validation study involved 25 participants aged 20-45 years with 12-16 years of education who evaluated various versions of VR-EAL. The final implementation achieved high scores on the VR Neuroscience Questionnaire (VRNQ), exceeding parsimonious cut-offs across all domains including user experience, game mechanics, in-game assistance, and minimal VRISE [15].

Dot-Probe Task Adaptation to VR Environment

The dot-probe paradigm, originally developed by MacLeod, Mathews and Tata (1986) to assess attentional bias to threat stimuli, can be effectively adapted to VR environments to enhance ecological validity [19].

Protocol Implementation:

- Setup: Participants begin with a fixation point in the VR environment to ensure central attention focus

- Stimulus Presentation: Two different stimuli (emotional and neutral) appear simultaneously in different spatial locations within the virtual environment

- Target Detection: After a brief presentation (typically 500 ms), a target (dot/probe) appears at the location of one stimulus

- Response: Participants indicate target location via VR controllers

- Trial Types:

  - Congruent trials: Target appears at emotional stimulus location

  - Incongruent trials: Target appears at neutral stimulus location

Data Collection Metrics:

- Response time differences between congruent and incongruent trials

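- Attentional Bias Score (ABS): mean RT on incongruent trials minus mean RT on congruent trials (RT_incongruent - RT_congruent); positive values indicate faster responses when the probe replaces the emotional stimulus, i.e. attention biased toward it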

- Accuracy rates and error patterns

- Gaze tracking data through built-in eye tracking

- Physiological measures (when integrated) [19]

This paradigm adaptation leverages VR's capacity to present stimuli in more naturalistic, three-dimensional environments compared to traditional two-dimensional computer displays, potentially increasing the ecological validity of attentional bias assessment [19].
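The attentional bias score defined in the metrics list can be computed from logged trials as sketched below. The trial format is an assumption, and so are the exclusion rules (dropping incorrect responses and response times outside 200-2000 ms). These are common preprocessing conventions, not requirements stated in the protocol.

```python
from statistics import mean


def attentional_bias_score(trials, min_rt_ms=200, max_rt_ms=2000):
    """ABS = mean RT(incongruent) - mean RT(congruent), in milliseconds.

    trials: iterable of (trial_type, rt_ms, correct) tuples, where trial_type
    is "congruent" (probe at the emotional stimulus location) or
    "incongruent" (probe at the neutral stimulus location).
    """
    rts = {"congruent": [], "incongruent": []}
    for trial_type, rt_ms, correct in trials:
        if trial_type not in rts:
            raise ValueError(f"unknown trial type: {trial_type!r}")
        # Assumed exclusion rules: keep correct responses within the RT window.
        if correct and min_rt_ms <= rt_ms <= max_rt_ms:
            rts[trial_type].append(rt_ms)
    if not rts["congruent"] or not rts["incongruent"]:
        raise ValueError("need at least one valid trial of each type")
    return mean(rts["incongruent"]) - mean(rts["congruent"])


# Hypothetical trials for one participant.
example_trials = [
    ("congruent", 480, True),
    ("congruent", 520, True),
    ("congruent", 400, False),     # excluded: incorrect response
    ("incongruent", 540, True),
    ("incongruent", 560, True),
    ("incongruent", 3000, True),   # excluded: outside RT window
]
abs_ms = attentional_bias_score(example_trials)
print(f"Attentional bias score: {abs_ms} ms")
```

A positive score here means the participant responded faster when the probe replaced the emotional stimulus, consistent with attention having been drawn toward it.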

Visualization of VR Neuropsychology Workflows

[Figure: VR Neuropsychological Assessment Development Pipeline (VR Assessment Development Workflow)]

[Figure: VR Testing Session Flow]

Research Reagent Solutions: Essential Materials for VR Neuropsychology

Table 3: Essential Research Materials for VR Neuropsychological Assessment

| Component Category | Specific Items | Function & Purpose | Implementation Example |
|---|---|---|---|
| Hardware Platforms | VR Head-Mounted Displays (HMD) | Create immersive environments for ecological assessment | VR-EAL used HMDs to present realistic scenarios [15] |
| Hardware Platforms | Base Stations & Tracking Systems | Enable precise movement and interaction tracking | SteamVR tracking for positional accuracy [16] |
| Hardware Platforms | VR Controllers & Input Devices | Capture participant responses and interactions | Controller-based response recording in dot-probe tasks [19] |
| Software Tools | Game Engines (Unity, Unreal) | Develop and render interactive virtual environments | VR-EAL built using Unity engine [15] |
| Software Tools | VR Development SDKs | Interface with hardware and enable VR-specific features | SteamVR integration for headset management [16] |
| Software Tools | Specialized Assessment Software | Implement standardized testing protocols | VR-EAL battery for everyday cognitive functions [4] |
| Validation Instruments | VR Neuroscience Questionnaire (VRNQ) | Assess software quality, UX, and VRISE | Used in VR-EAL validation [15] |
| Validation Instruments | Simulator Sickness Questionnaire (SSQ) | Quantify cybersickness symptoms | Pre/post-test assessment [17] |
| Validation Instruments | Traditional Neuropsychological Tests | Establish convergent validity | Comparison with paper-and-pencil measures [4] |
| Data Collection Tools | Integrated Performance Logging | Automatically record responses and timing | Built-in data capture in VR-EAL [15] |
| Data Collection Tools | Physiological Monitoring | Capture complementary psychophysiological data | EEG, eye-tracking integration possibilities [20] |

The integration of immersive Virtual Reality (VR) in neuropsychology represents a paradigm shift in cognitive assessment and rehabilitation. By simulating realistic, ecologically valid environments, VR offers unparalleled opportunities for evaluating and treating executive functions within activities of daily living (ADL) [21]. The core user experience (UX) components—presence (the subjective feeling of "being there"), immersion (the objective level of sensory fidelity delivered by the technology), and usability (the effectiveness, efficiency, and satisfaction of the interaction)—are critical determinants of the success, validity, and adoption of these tools in research and clinical practice [5]. This technical support center provides targeted guidance for researchers and professionals optimizing these components in VR-based neuropsychological batteries.

Troubleshooting Guide: Common UX Issues in VR Neuropsychological Research

Q1: How can we mitigate cybersickness to prevent data contamination in our studies? A: Cybersickness, a form of motion sickness induced by VR, can skew cognitive performance data and increase dropout rates [22]. To mitigate it:

- Use Teleport Movement: Avoid smooth locomotion in initial assessments; use teleportation to move between points to reduce sensory conflict [22].

- Optimize Technical Settings: Ensure a high, stable refresh rate (90 Hz or above) for smoother visual motion [22].

- Environmental Controls: Use a fan directed at the participant to provide an external sensory anchor and ensure the play area is well-ventilated [22].

- Session Management: Keep initial exposure sessions short to help users build tolerance gradually [22].

- Monitor Symptoms: Employ standardized tools like the Simulator Sickness Questionnaire (SSQ) to quantitatively track symptoms and intervene when necessary [10].
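Pre/post SSQ monitoring of this kind can be scored in a few lines. The sketch below is illustrative only. It assumes the raw subscale sums have already been computed from the questionnaire items, and it uses the subscale weights commonly cited from the original SSQ publication (9.54, 7.58, 13.92, and 3.74 for the total), which should be verified against your scoring manual. The `total_threshold` argument is a hypothetical, lab-defined cut-off, not a published norm.

```python
# Commonly cited SSQ weights; an assumption to verify against the scoring manual.
SSQ_WEIGHTS = {"nausea": 9.54, "oculomotor": 7.58, "disorientation": 13.92}
SSQ_TOTAL_WEIGHT = 3.74


def ssq_scores(raw):
    """Convert raw subscale sums (item ratings already summed) to weighted scores."""
    scores = {name: raw[name] * weight for name, weight in SSQ_WEIGHTS.items()}
    scores["total"] = sum(raw[name] for name in SSQ_WEIGHTS) * SSQ_TOTAL_WEIGHT
    return scores


def ssq_change(pre_raw, post_raw):
    """Post-exposure minus pre-exposure score for each subscale and the total."""
    pre, post = ssq_scores(pre_raw), ssq_scores(post_raw)
    return {name: round(post[name] - pre[name], 2) for name in pre}


def needs_intervention(change, total_threshold):
    """Flag a participant whose total score rose by at least the lab-defined threshold."""
    return change["total"] >= total_threshold


if __name__ == "__main__":
    # Hypothetical raw subscale sums for one participant.
    pre = {"nausea": 1, "oculomotor": 2, "disorientation": 0}
    post = {"nausea": 3, "oculomotor": 4, "disorientation": 2}
    change = ssq_change(pre, post)
    print(change, needs_intervention(change, total_threshold=20.0))
```

Reporting the change score rather than the post-test score alone helps separate VR-induced symptoms from symptoms a participant brought into the session.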

Q2: Our VR cognitive tasks suffer from inconsistent controller tracking. How can we improve reliability? A: Tracking loss disrupts task performance and compromises data integrity.

- Optimize Lighting: Ensure the testing environment is evenly and brightly lit. Avoid both darkness and direct sunlight, which can blind the sensors [22].

- Remove Reflective Surfaces: Cover or remove mirrors, glossy TVs, and shiny monitors that can confuse the headset's tracking cameras [22].

- Maintain Controller Power: Low battery is a common cause of mid-session tracking failure. Use a charging dock to ensure controllers are fully powered before every session [22].

Q3: Participants often report blurry visuals, which may affect performance on visuospatial tasks. What are the solutions? A: Blurriness can hinder the assessment of functions like visuospatial abilities and attention.

- Adjust Interpupillary Distance (IPD): Correctly set the IPD using the headset's physical slider or software setting. This is often the primary fix for blurry visuals [22].

- Achieve the "Sweet Spot": Carefully adjust the headset's position on the user's face to find the lens area with the sharpest vision and ensure the head strap is secure to prevent slippage during movement [22].

- Lens Care: Clean the headset lenses before each use with a dry microfiber cloth. Avoid chemical wipes that can damage optical coatings [22].

Q4: How can we enhance the sense of presence to improve ecological validity? A: A strong sense of presence is crucial for eliciting real-world cognitive behaviors.

- Leverage Embodiment: Design virtual environments that incorporate a virtual body (avatar) to enhance the illusion of presence and body ownership [21].

- Prioritize Aesthetic Design: The visual appeal of the virtual environment is not merely cosmetic; it is essential for shaping users' emotions and overall experience, thereby supporting a stronger sense of presence [5].

- Use a Comprehensive UX Framework: Adopt evaluation tools like the iUXVR questionnaire, which measures key components of the VR experience, including presence, usability, aesthetics, and emotions, providing a holistic view of the user's experience [5].

Experimental Protocols & Validation Methods

The following table summarizes key methodological aspects from validated VR neuropsychological tools, providing a blueprint for research design.

Table 1: Experimental Protocols from Validated VR Neuropsychological Studies

| Study / Tool | Primary Objective | Target Population & Sample Size | VR Hardware & Core Tasks | Key UX & Outcome Measures |

|---|---|---|---|---|

| VR-CAT (VR Cognitive Assessment Tool) [10] | Assess executive functions (EFs: inhibitory control, working memory, cognitive flexibility). | Children with Traumatic Brain Injury (TBI) vs. Orthopedic Injury (OI); Total N=54. | HTC VIVE; "Rescue Lubdub" narrative with 3 EF tasks (e.g., directing sentinels, memory sequences). | Usability: High enjoyment/motivation. Reliability: Modest test-retest. Validity: Modest concurrent validity with standard EF tools; able to distinguish the TBI and OI groups. |

| VESPA 2.0 [23] | Cognitive rehabilitation for Activities of Daily Living (ADL). | Patients with Mild Cognitive Impairment (MCI); N=50. | Fully immersive VR system; Simulations of ADL. | Usability: Valuable, ecologically valid tool. Efficacy: Significant post-treatment improvements in global cognition, visuospatial skills, and executive functions. |

| VR-EAL (VR Everyday Assessment Lab) [4] | Neuropsychological battery for everyday cognitive functions. | Not specified; designed for clinical assessment. | Immersive VR; Software meets professional body criteria. | UX Focus: Pleasant testing experience without inducing cybersickness. Validation: Meets NAN and AACN criteria for neuropsychological assessment devices. |

Research Workflow and UX Evaluation Framework

The diagram below illustrates the integrated workflow for developing and validating a VR neuropsychological tool, with a focus on the continuous assessment of core UX components.

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Essential Hardware, Software, and Assessment Tools for VR Neuropsychological Research

| Item Name | Category | Function & Application in Research |

|---|---|---|

| Standalone/PC-VR Headset (e.g., HTC VIVE) [10] | Hardware | Provides the immersive visual and auditory experience. Choice depends on the required balance between mobility (standalone) and graphical fidelity (PC-tethered). |

| VR-CAT Software [10] | Software | An example of a validated, game-based VR application designed specifically to assess core executive functions (inhibitory control, working memory, cognitive flexibility) in a pediatric clinical population. |

| VR-EAL Software [4] | Software | The first immersive VR neuropsychological battery focusing on everyday cognitive functions. It is explicitly designed to meet professional criteria (NAN, AACN) and offers high ecological validity. |

| iUXVR Questionnaire [5] | Assessment Tool | A standardized questionnaire to measure the key components of User Experience in immersive VR, including usability, sense of presence, aesthetics, VR sickness, and emotions. |

| Simulator Sickness Questionnaire (SSQ) [10] | Assessment Tool | A standardized metric to quantify physical side effects (e.g., nausea, oculomotor strain) caused by VR exposure, crucial for ensuring participant safety and data quality. |

| Traditional Neuropsychological Tests (e.g., Stroop, Trail-Making) [21] | Assessment Tool | Standard pen-and-paper or computerized tests used to establish concurrent validity for new VR tools by correlating performance between the traditional and novel measures. |

| Prescription Lens Inserts [22] | Accessory | Custom lenses that slot into the VR headset, allowing participants who wear glasses to experience clear vision without discomfort or risk of scratching the headset lenses. |
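Concurrent validity against traditional tests is typically summarized as a correlation between paired scores on the VR task and the established measure. The following is a minimal sketch, assuming one pair of scores per participant and a Pearson coefficient (a rank-based coefficient may suit skewed data better). The function name and the example scores are illustrative, not taken from any of the cited studies.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of paired scores."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired observations")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary in both measures")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical completion times (seconds) for the same five participants.
    vr_task = [41.2, 55.0, 38.7, 62.3, 47.9]
    paper_task = [39.0, 58.1, 35.5, 66.0, 50.2]
    print(f"r = {pearson_r(vr_task, paper_task):.2f}")
```

A coefficient alone says little with small samples, so it is usually reported together with the sample size and a confidence interval.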

The successful implementation of VR in neuropsychological research hinges on a meticulous, user-centered approach that prioritizes the core UX components of presence, immersion, and usability. By systematically addressing technical pitfalls through targeted troubleshooting, adhering to rigorous validation protocols, and continuously evaluating the user experience, researchers can develop robust, ecologically valid tools. This foundation is essential for advancing the field, ultimately leading to more precise cognitive assessment and more effective rehabilitation strategies for patients with neurological conditions.

Frequently Asked Questions & Troubleshooting Guides

This technical support center provides evidence-based guidance for researchers conducting VR neuropsychological assessments. The FAQs and troubleshooting guides address common challenges across different clinical populations, helping optimize user experience and data quality.

FAQ: General VR Assessment

Q1: What are the key ethical and safety standards for VR neuropsychological assessment? The American Academy of Clinical Neuropsychology (AACN) and National Academy of Neuropsychology (NAN) have established 8 key criteria for computerized neuropsychological assessment devices covering: (1) safety and effectivity; (2) identity of the end-user; (3) technical hardware and software features; (4) privacy and data security; (5) psychometric properties; (6) examinee issues; (7) use of reporting services; and (8) reliability of responses and results [4]. The VR-EAL (Virtual Reality Everyday Assessment Lab) has been validated against these criteria and demonstrates compliance with these standards [4].

Q2: How can I minimize cybersickness (VRISE) in clinical populations? Modern VR systems have significantly reduced VR-induced symptoms and effects (VRISE) through technical improvements [24]. To further minimize risks: use commercial HMDs such as the HTC Vive or Oculus Rift with a high refresh rate (90 Hz or higher) and high resolution; ensure smooth, stable frame rates; implement comfortable movement mechanics (teleportation instead of smooth locomotion); provide adequate rest breaks during extended sessions; and gradually acclimatize sensitive participants through brief initial exposures [25] [24]. The VR Neuroscience Questionnaire (VRNQ) can quantitatively evaluate software quality and VRISE intensity [24].

Q3: What technical specifications are most important for clinical VR assessments? Prioritize hardware and software that deliver high user experience scores across VRNQ domains: user experience, game mechanics, in-game assistance, and low VRISE [24]. Key technical factors include: high display resolution and refresh rate; precise tracking systems; intuitive interaction modes (eye-tracking shows particular promise for accuracy); ergonomic software design; and robust performance without lag or stuttering [25] [24].

Q4: How does ecological validity in VR assessment translate to real-world functioning? VR enhances ecological validity through both verisimilitude (resemblance to real-life activities) and veridicality (correlation with real-world performance) [25]. Studies show that VR assessment results correlate more strongly with the ability to perform activities of daily living than results from traditional neuropsychological tests do [26]. For example, the VR-EAL creates realistic scenarios that mimic everyday cognitive challenges, making assessments more predictive of actual functioning [4] [24].

FAQ: Population-Specific Considerations

Q5: What UX adaptations are needed for ADHD populations? Adults with ADHD may exhibit greater performance differences in VR environments, suggesting VR more effectively captures their real-world cognitive challenges [25]. Recommended adaptations include: using eye-tracking interaction modes (shown to be more accurate for ADHD populations); minimizing extraneous distractions in the virtual environment; providing clear, structured task instructions; and implementing engaging, game-like mechanics to maintain motivation [25]. The TMT-VR (Trail Making Test in VR) has demonstrated high ecological validity for assessing ADHD [25].

Q6: How should VR assessments be adapted for psychosis spectrum disorders? Patients with schizophrenia and related disorders benefit from: highly controllable environments that minimize unpredictable stimuli; gradual exposure to potentially triggering scenarios; careful monitoring for paranoid ideation (as some VR content may trigger paranoid beliefs); and integration of metacognitive strategies alongside cognitive training [26]. VR interventions have shown good acceptability in this population, with patients reporting enjoyment and preference over conventional training [26].

Q7: What considerations are important for mood disorder populations? Individuals with mood disorders often experience cognitive impairments, and assessments for this group benefit from: environments that balance stimulation to maintain engagement without causing fatigue; positive reinforcement mechanisms; tasks that gradually increase in complexity to build confidence; and pleasant, non-stressful virtual scenarios [26]. Studies show that patients with mood disorders tolerate VR well, with mild or no adverse effects [26].

Troubleshooting Guide: Common Technical Issues

| Issue | Possible Causes | Solutions |

|---|---|---|

| High dropout rates | Cybersickness, lack of engagement, task frustration | Implement pre-exposure acclimatization; enhance game mechanics; provide clear instructions and in-task assistance; monitor comfort regularly [26] [24] |

| Poor data quality | Unintuitive interaction methods, inadequate training | Use eye-tracking or head movement instead of controllers; provide comprehensive tutorials; ensure tasks match cognitive abilities [25] |

| Performance variability | Individual differences in VR familiarity, technological anxiety | Assess prior gaming experience; implement consistent practice trials; use standardized instructions; consider technological background in analysis [25] |

| Equipment discomfort | Improper HMD fit, session duration too long | Ensure proper fitting; schedule regular breaks; monitor for physical discomfort; use lighter HMD models when available [24] |

Experimental Protocols & Methodologies

Standardized VR Assessment Protocol

VR Assessment Workflow

Comprehensive Protocol Details:

Participant Screening

- Assess for contraindications to VR (e.g., epilepsy, severe vertigo)

- Evaluate prior VR/gaming experience

- Document clinical characteristics and medication status

- Obtain informed consent with specific VR risk disclosure

VR Acclimatization Phase (5-10 minutes)

- Introduce HMD fitting and comfort adjustments

- Begin with static, low-stimulus environments

- Gradually introduce movement and interaction mechanics

- Monitor closely for any VRISE symptoms using standardized checklists

Task Tutorial Implementation

- Provide interactive, guided practice of each task

- Offer multiple explanation modalities (visual, auditory, text)

- Include competency checks before proceeding to assessment

- Allow repetition of tutorials based on participant needs

Standardized Assessment Administration

- Maintain consistent environmental conditions across participants

- Implement fixed order of tasks or counterbalanced design as appropriate

- Monitor performance in real-time for task comprehension

- Record both performance metrics and behavioral data
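Where a counterbalanced design is chosen over a fixed task order, a cyclic Latin square is one simple way to generate the orders. The sketch below is illustrative: the task names are hypothetical, and a cyclic square balances the position of each task but not which task precedes which, so studies concerned with carry-over effects may need a different scheme.

```python
def latin_square_orders(tasks):
    """Return len(tasks) orders in which each task appears once in every position."""
    n = len(tasks)
    return [[tasks[(row + col) % n] for col in range(n)] for row in range(n)]


def order_for_participant(participant_index, tasks):
    """Assign orders in rotation so they are used equally often across the sample."""
    orders = latin_square_orders(tasks)
    return orders[participant_index % len(orders)]


if __name__ == "__main__":
    # Hypothetical task labels for illustration.
    tasks = ["prospective_memory", "visual_attention", "planning"]
    for participant in range(6):
        print(participant, order_for_participant(participant, tasks))
```

Recruiting a multiple of the number of tasks keeps the orders evenly represented in the final sample.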

Data Collection & Quality Assurance

- Automate data capture for accuracy and objectivity

- Include timestamps for all interactions and responses

- Record both primary metrics and process measures

- Implement data validation checks during collection
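The data collection steps above (automated capture, timestamps on every event, validation during collection) can be sketched as a small session logger. This is an illustrative Python sketch rather than the logging code of any cited tool. In practice, logging usually lives inside the VR application itself, and all class, field, and event names here are assumptions.

```python
import csv
import io
import time
from dataclasses import asdict, dataclass, fields


@dataclass
class Event:
    participant_id: str
    task: str
    event_type: str      # e.g. "stimulus_onset" or "response" (hypothetical labels)
    t_ms: float          # milliseconds since the session started
    value: str = ""


class SessionLog:
    def __init__(self, participant_id, clock=time.perf_counter):
        self._participant_id = participant_id
        self._clock = clock          # monotonic clock, injectable for testing
        self._t0 = clock()
        self.events = []

    def record(self, task, event_type, value=""):
        """Timestamp and store one event, validating it as it is collected."""
        if not task or not event_type:
            raise ValueError("task and event_type are required")
        t_ms = (self._clock() - self._t0) * 1000.0
        if self.events and t_ms < self.events[-1].t_ms:
            raise ValueError("timestamp earlier than the previous event")
        event = Event(self._participant_id, task, event_type, t_ms, value)
        self.events.append(event)
        return event

    def to_csv(self):
        """Serialize all events with a header row for later analysis."""
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(Event)])
        writer.writeheader()
        for event in self.events:
            writer.writerow(asdict(event))
        return buffer.getvalue()
```

Storing both stimulus onsets and responses, rather than precomputed reaction times, keeps the process measures available for later reanalysis.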

Post-Assessment Debriefing

- Systematically assess VRISE using standardized instruments

- Gather qualitative feedback on user experience

- Provide information about potential after-effects

- Document any technical issues or anomalies

Population-Specific Methodological Adaptations

Clinical Group UX Adaptations

Evidence-Based Adaptation Guidelines:

ADHD Populations:

- Implement eye-tracking interaction modes, which demonstrate superior accuracy compared to controllers [25]

- Design environments with minimal extraneous distractions while maintaining ecological validity

- Provide immediate feedback and engaging reward structures to sustain motivation

- Break complex tasks into manageable segments with clear progression indicators

Psychosis Spectrum Populations:

- Create highly controlled, predictable environments that minimize unexpected events

- Carefully monitor for paranoid ideation triggered by specific VR content [26]

- Integrate metacognitive strategy training directly into task execution [26]

- Use familiar, non-threatening scenarios that reduce anxiety and suspicion

Mood Disorder Populations:

- Balance stimulation levels to maintain engagement without causing cognitive fatigue [26]

- Implement positive reinforcement mechanisms that build confidence gradually

- Design pleasant, aesthetically appealing environments that promote calm engagement

- Provide clear success indicators and progress tracking to counter negative cognitive biases

Elderly and Cognitively Impaired Populations:

- Extend tutorial phases with additional practice opportunities

- Simplify interaction mechanics to reduce cognitive load

- Prioritize physical comfort through appropriate seating, session duration limits, and HMD comfort

- Use familiar, contextually appropriate scenarios that match lived experiences

Research Reagent Solutions: Essential Materials

| Category | Specific Tools/Frameworks | Purpose & Function | Evidence Base |

|---|---|---|---|

| VR Assessment Platforms | VR-EAL (Virtual Reality Everyday Assessment Lab) | Comprehensive neuropsychological battery assessing everyday cognitive functions with enhanced ecological validity [4] [24] | Validated against NAN/AACN criteria; demonstrates high ecological validity [4] |

| | TMT-VR (Trail Making Test in VR) | VR adaptation of traditional Trail Making Test for assessing attention, processing speed, cognitive flexibility [25] | Shows significant correlation with traditional TMT and ADHD symptomatology [25] |

| Evaluation Instruments | VR Neuroscience Questionnaire (VRNQ) | Assesses software quality, user experience, game mechanics, in-game assistance, and VRISE intensity [24] | Validated tool with established cut-offs for software quality assessment [24] |

| | Cybersickness Assessment Scales | Standardized measures of VR-induced symptoms and effects during and after exposure | Critical for safety monitoring and protocol refinement [24] |

| Interaction Modalities | Eye-tracking Systems | Enables interaction through gaze control; particularly beneficial for ADHD and motor-impaired populations [25] | Demonstrates superior accuracy compared to controller-based interaction [25] |

| | Head Movement Tracking | Alternative interaction method that may facilitate faster task completion | Shows efficiency advantages for certain populations and tasks [25] |

| Technical Frameworks | Unity Development Platform | Flexible environment for creating customized VR assessment scenarios with research-grade data collection | Widely used in research including VR-EAL development [24] |

VR Efficacy Across Clinical Populations

| Population | Assessment Tool | Key Efficacy Metrics | Effect Size/Outcomes |

|---|---|---|---|

| ADHD Adults | TMT-VR | Ecological validity, usability, user experience | Significant correlation with traditional TMT; high usability scores; better capture of real-world challenges [25] |

| Mixed Mood/Psychosis | CAVIR (Cognition Assessment in Virtual Reality) | Global cognitive skills, processing speed | Significant improvement in global cognition and processing speed post-VR intervention [26] |

| General Clinical | VR-EAL | Ecological validity, user experience, VRISE | Meets NAN/AACN criteria; high user experience; minimal VRISE in 60-min sessions [4] [24] |

| Elderly/MCI | Various VR Assessments | Diagnostic sensitivity, ecological validity | Superior to traditional tests like MMSE in detecting mild cognitive impairment; better prediction of daily functioning [27] |

Technical Implementation Specifications

| Parameter | Optimal Specification | Rationale & Evidence |

|---|---|---|

| Session Duration | 45-60 minutes maximum | VR-EAL demonstrated minimal VRISE during 60-minute sessions; longer sessions increase discomfort risk [24] |

| Interaction Mode | Eye-tracking preferred | Superior accuracy, especially for non-gamers and ADHD populations; more intuitive for clinical use [25] |

| Frame Rate | 90 Hz or higher | Reduces cybersickness caused by latency and dropped frames; critical for user comfort and data reliability [24] |

| Display Resolution | Higher preferred (varies by device) | Enhances visual fidelity and presence; reduces eye strain; supports more complex environments [24] |

| Movement Mechanics | Teleportation over smooth locomotion | Significantly reduces cybersickness while maintaining task engagement [25] [24] |

Methodological Framework: Designing and Implementing UX-Optimized VR Neuropsychological Batteries

FAQs and Troubleshooting Guides

FAQ 1: What are the key hardware specifications to look for in a VR system for neuropsychological research?

When selecting VR hardware for research, you should prioritize specifications that ensure high-fidelity performance, minimize cybersickness, and support accessible design. The table below summarizes the core criteria.

| Category | Key Specification | Importance for Research |

|---|---|---|

| Performance | High Display Refresh Rate (90Hz+) [24] | Reduces latency and lag, which are primary contributors to VR-induced symptoms and effects (VRISE) [24]. |

| Performance | High Sampling Rate (e.g., 256kHz inputs) [28] | Ensures precise data capture for behavioral metrics, crucial for detailed performance analysis [28]. |

| Tracking | Accurate 6-Degrees-of-Freedom (6DoF) Tracking | Enables naturalistic movement and interaction within the virtual environment, enhancing ecological validity [29]. |

| Tracking | Support for Multiple Interaction Modes (Eye-tracking, Controllers) [29] | Allows for the study of different interaction paradigms; eye-tracking can reduce bias from prior gaming experience [29]. |

| Comfort & Safety | Ergonomic Headset Design [24] | Mitigates physical discomfort during extended testing sessions, which is critical for patient populations [24]. |

| Comfort & Safety | "Green Mode" or Power-saving Features [28] | Reduces operational costs and system noise, which is beneficial for labs with multiple units or extended testing schedules [28]. |

| Accessibility | Software Support for Display Adjustments (Contrast, Saturation) [30] | Essential for accommodating participants with low vision or color perception deficiencies [30] [31]. |

| Accessibility | Double-Isolated Chassis [28] | Improves signal integrity by reducing ground loop noise and external interference, leading to cleaner data [28]. |

FAQ 2: A participant reports nausea and dizziness (cybersickness). How can we mitigate this in our hardware setup and protocol?

Cybersickness, or VR-induced symptoms and effects (VRISE), poses a significant risk to data quality and participant safety [24] [29]. Mitigation is a multi-faceted effort involving both hardware selection and protocol design.

Hardware and Software Checks:

- Ensure High Frame Rates: Use a computer with a sufficiently powerful GPU to maintain a consistent and high frame rate (e.g., 90Hz or higher). Dropping frames is a primary trigger for discomfort [24].

- Minimize Latency: Verify that the entire system—from PC to headset—is optimized for low latency. This includes using high-quality connection cables and updated drivers.

- Calibrate Correctly: Always ensure the headset's Interpupillary Distance (IPD) is correctly calibrated for each participant. An incorrect IPD setting can cause eye strain and disorientation.
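Whether a system actually holds its frame rate can be checked from recorded frame timestamps. The following is a minimal sketch, assuming the VR application or a profiler can export per-frame timestamps in milliseconds. The `tolerance` factor used to call a frame "dropped" is a hypothetical choice, not a published criterion.

```python
def frame_stats(timestamps_ms, target_hz=90.0, tolerance=1.5):
    """Summarize frame pacing from a list of per-frame timestamps in milliseconds."""
    intervals = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    if not intervals:
        raise ValueError("need at least two timestamps")
    budget_ms = 1000.0 / target_hz                     # about 11.1 ms at 90 Hz
    # A frame counts as dropped when it took much longer than its budget.
    dropped = sum(1 for dt in intervals if dt > budget_ms * tolerance)
    mean_interval = sum(intervals) / len(intervals)
    return {
        "budget_ms": budget_ms,
        "mean_fps": 1000.0 / mean_interval,
        "dropped": dropped,
        "dropped_fraction": dropped / len(intervals),
    }


if __name__ == "__main__":
    # Hypothetical trace with one long frame between 22 ms and 44 ms.
    print(frame_stats([0.0, 11.0, 22.0, 44.0, 55.0]))
```

Logging this summary per session makes it possible to exclude or flag data collected while the system was underperforming.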

Experimental Protocol Adjustments:

- Limit Initial Session Duration: For new participants, especially those naive to VR, begin with shorter sessions (e.g., 5-10 minutes) and gradually increase the time as they acclimatize [24].

- Incorporate Regular Breaks: Design your protocol with scheduled breaks to allow participants to rest their eyes and re-orient themselves.

- Avoid Artificial Locomotion: When possible, use teleportation-based movement instead of continuous joystick-based locomotion, as the latter is more likely to induce vection and sickness [32].

FAQ 3: Our VR headset is not being tracked or displays a black screen. What are the first steps to troubleshoot this?

This is a common issue that often has a simple solution. Follow this systematic protocol to resolve the problem.

Troubleshooting Protocol:

- Verify Physical Connections: Follow the headset cord from the headset to the link box and then to the computer and power outlet. Ensure all connections are secure [32].

- Check the Link Box: The link box is a small black box with a blue button. If the headset is not connecting, power cycle the link box by pressing the blue button to turn it off, waiting 3 seconds, and then pressing it again to turn it on. A green light should indicate it is on [32].

- Inspect Software Status: Open your VR software (e.g., SteamVR). Check the status icons for the headset and controllers. If the headset icon is not green, it indicates a tracking or connection issue [32].

- Reboot the Headset: Use the software menu (e.g., in SteamVR) to navigate to settings and perform a headset reset [32].

- Restart the Software and Computer: If the above steps fail, fully close the VR software, shut down the computer, and then restart everything.

FAQ 4: How can we ensure our VR hardware and tests are accessible for participants with visual impairments?

Accessibility is a critical component of ethical and inclusive research. Implement the following hardware and software strategies.

Leverage Built-in Display Settings:

- High Contrast Mode: Both VR software and operating systems (e.g., Windows High Contrast Mode) can simplify color palettes and improve legibility. The Web Content Accessibility Guidelines (WCAG) recommend a contrast ratio of at least 4.5:1 for standard text [33] [31].

- Color Adjustment Tools: Provide in-app settings that allow users to adjust contrast, saturation, hue shift, and tint. This helps accommodate various visual needs, such as color blindness (e.g., protanopia, deuteranopia) and low vision [30].

- Saturation Control: Allowing saturation to be lowered to -100 (grayscale) enables researchers to verify that information is not conveyed by color alone, a key accessibility guideline [30] [33].
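The 4.5:1 threshold can be checked programmatically for flat UI colors. The sketch below follows the WCAG 2.x definitions of relative luminance and contrast ratio for 8-bit sRGB values. It does not account for scene lighting, shaders, or headset optics, which can change the contrast a participant actually sees, and the function names are illustrative.

```python
def _linearize(channel_8bit):
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(rgb_a, rgb_b):
    """Contrast ratio between two colors, from 1.0 (identical) to 21.0 (black on white)."""
    lum_a, lum_b = relative_luminance(rgb_a), relative_luminance(rgb_b)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_standard_text(rgb_text, rgb_background, minimum=4.5):
    return contrast_ratio(rgb_text, rgb_background) >= minimum


if __name__ == "__main__":
    white = (255, 255, 255)
    for gray in [(118, 118, 118), (119, 119, 119)]:
        print(gray, round(contrast_ratio(gray, white), 2), passes_standard_text(gray, white))
```

Running a check like this over the palette used for instructions and response options catches low-contrast text before pilot testing.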

Research and Testing Considerations:

- Avoid Color-Only Cues: Never use color as the sole means of conveying information. Supplement with patterns, textures, or symbols [33].

- Test in Grayscale: Periodically view your virtual environment in grayscale to identify potential accessibility barriers for colorblind participants [30] [31].

Experimental Protocols for Hardware Validation

Protocol: Validating a New VR System for Neuropsychological Testing

Before deploying a new VR system for research, it is essential to validate its technical performance and user tolerance. The following workflow outlines a standard validation procedure.

Detailed Methodology:

Technical Specification Audit:

- Confirm that the VR Head-Mounted Display (HMD) and host computer meet or exceed the minimum specifications for the intended software, paying particular attention to display resolution and refresh rate [24].

System Calibration and Tracking Accuracy Test:

- Calibrate the headset's IPD for a reference user.

- Define the tracking play area and verify that both headset and controller movements are tracked smoothly and without jitter or loss of signal across the entire volume [32].

Cybersickness and User Experience (UX) Assessment:

- Recruit a small pilot group (n=5-10) representative of your target population.

- Participants complete a 15-20 minute standardized VR exposure, such as the VIVE Origin Tutorial [32] or a demo of your test environment.

- Immediately following the exposure, administer the Virtual Reality Neuroscience Questionnaire (VRNQ) [24]. This tool quantitatively assesses the intensity of VRISE and the quality of the user experience, game mechanics, and in-game assistance. The software should exceed the parsimonious cut-off scores defined by the VRNQ to be deemed suitable [24].
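The cut-off comparison in the step above can be automated once the questionnaire has been scored. This sketch assumes that each of the four VRNQ domains has already been summed and that all domains are scored so that higher values are more favorable. The cut-off values are deliberately left as arguments and must be taken from the VRNQ publication [24]; the numbers in the usage example are placeholders.

```python
VRNQ_DOMAINS = ("user_experience", "game_mechanics", "in_game_assistance", "vrise")


def meets_cutoffs(domain_scores, domain_cutoff, total_cutoff):
    """Compare summed domain scores against per-domain and total cut-offs."""
    missing = [d for d in VRNQ_DOMAINS if d not in domain_scores]
    if missing:
        raise ValueError(f"missing domain scores: {missing}")
    failing = [d for d in VRNQ_DOMAINS if domain_scores[d] < domain_cutoff]
    total = sum(domain_scores[d] for d in VRNQ_DOMAINS)
    return {
        "total": total,
        "failing_domains": failing,
        "suitable": not failing and total >= total_cutoff,
    }


if __name__ == "__main__":
    # Placeholder scores and cut-offs for illustration only.
    pilot = {"user_experience": 32, "game_mechanics": 31,
             "in_game_assistance": 28, "vrise": 33}
    print(meets_cutoffs(pilot, domain_cutoff=30, total_cutoff=120))
```

Listing the failing domains, rather than returning only a pass or fail, points directly at what to revise before the next pilot round.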

Usability and Acceptability Testing:

- Administer standardized usability scales, such as the System Usability Scale (SUS), to gather subjective feedback on how easy the system is to use.

- Assess acceptability by asking participants about their willingness to use the system again.
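SUS responses are converted to a 0-100 score using the scale's standard scoring rule: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. A minimal sketch, assuming the ten responses are supplied in item order on the usual 1-5 scale:

```python
def sus_score(responses):
    """Compute the System Usability Scale score (0-100) from ten 1-5 responses."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected ten responses, each an integer from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1      # positively worded items
        else:
            total += 5 - response      # negatively worded items
    return total * 2.5


if __name__ == "__main__":
    # Hypothetical responses from one pilot participant.
    print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))
```

The resulting score is not a percentage, so it is best interpreted against published SUS benchmarks or against other systems tested in the same lab.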

Data Integrity Check:

- Run a mock experiment and verify that all planned data (e.g., completion time, accuracy, head position, interaction logs) is being recorded accurately and completely, with millisecond-level precision where required [29].

The Scientist's Toolkit: Essential Research Reagents and Materials

The following table details key hardware and software solutions essential for setting up a VR lab for neuropsychological research.

| Item Category | Specific Examples | Function in Research |

|---|---|---|

| Primary VR Hardware | HTC Vive, Oculus Rift, Meta Quest Pro [24] | Provides the immersive display and tracking core of the system. Modern commercial HMDs are critical for mitigating VRISE [24]. |

| VR Software Development Kits (SDK) | XR Interaction Toolkit (Unity), Oculus Integration [30] | Provides the essential Application Programming Interfaces (APIs) and assets to develop and build VR applications within game engines. |

| Vibration Control System | VR9700 Vibration Controller [28] | Advanced hardware for supporting high sampling rates (256kHz) and reducing system noise/ground loops, ensuring clean signal data for studies incorporating motion or physiological monitoring [28]. |

| Data Analysis Software | ObserVIEW, Custom Python/MATLAB scripts [28] | Software used for analyzing recorded behavioral and physiological data. Supports various file types (e.g., .csv, .rpc, .uff) for flexibility [28]. |

| Validation & Assessment Tools | Virtual Reality Neuroscience Questionnaire (VRNQ) [24] | A standardized tool to quantitatively evaluate the quality of VR software and the intensity of cybersickness symptoms in participants, ensuring data reliability [24]. |

| Accessibility Checking Tools | WebAIM Color Contrast Checker [33] | An online tool that allows researchers to verify that the color contrast ratios used in their virtual environments meet WCAG accessibility guidelines [33] [31]. |

Troubleshooting Guides and FAQs

This technical support resource addresses common challenges faced by researchers and developers creating VR neuropsychological assessment software. The guidance is framed within the context of optimizing user experience for rigorous scientific and clinical applications.

Frequently Asked Questions (FAQs)

Q1: How can we mitigate VR-induced symptoms and effects (VRISE) to ensure data reliability in our studies? VRISE (e.g., nausea, dizziness) can compromise cognitive performance and physiological data [24]. Mitigation is multi-faceted:

- Software Quality: Use high-quality, ergonomic software with high frame rates (90 FPS for VR) and low latency to reduce sensory conflict [24] [34].

- Hardware Selection: Employ modern Head-Mounted Displays (HMDs) with sufficient resolution and tracking capabilities [24].

- User Experience Design: Implement a dark theme to reduce eye strain, avoid sudden acceleration cues, and ensure comfortable interaction mechanics [35]. The final version of the VR-EAL battery, for instance, was able to almost eradicate VRISE during 60-minute sessions [24].

Q2: What are the key performance benchmarks we must hit for a comfortable VR experience? Consistent performance is non-negotiable for immersion and user comfort. The following table summarizes key quantitative targets:

Table 1: Key VR Performance Benchmarks

| Component | Target Benchmark | Explanation |

|---|---|---|

| Frame Rate | 90 FPS (VR), 60 FPS (AR) | Essential for preventing disorientation and motion sickness [34] [36]. |

| Draw Calls | 500 - 1,000 per frame | Reducing draw calls is critical for CPU performance [34]. |

| Vertices/Triangles | 1 - 2 million per frame | Limits GPU workload per frame [34]. |

| Script Latency | < 3 ms | Keep logic execution, like Unity's Update(), minimal to avoid frame drops [34]. |
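These targets translate into a per-frame time budget: at 90 FPS each frame has 1000 / 90, or roughly 11.1 ms, so a 3 ms script allowance leaves about 8 ms for rendering and everything else. The sketch below checks one profiler sample against the upper bounds in the table. The field names of the sample are hypothetical and would need to be mapped to whatever your profiler exports.

```python
# Upper bounds taken from the benchmark table above.
BENCHMARKS = {
    "min_fps": 90,
    "max_draw_calls": 1000,
    "max_triangles": 2_000_000,
    "max_script_ms": 3.0,
}


def frame_budget_ms(fps):
    """Time available to produce one frame at the given frame rate."""
    return 1000.0 / fps


def check_frame(sample):
    """Return the names of the metrics in a profiler sample that miss their target."""
    violations = []
    if sample["fps"] < BENCHMARKS["min_fps"]:
        violations.append("fps")
    if sample["draw_calls"] > BENCHMARKS["max_draw_calls"]:
        violations.append("draw_calls")
    if sample["triangles"] > BENCHMARKS["max_triangles"]:
        violations.append("triangles")
    if sample["script_ms"] >= BENCHMARKS["max_script_ms"]:
        violations.append("script_ms")
    return violations


if __name__ == "__main__":
    # Hypothetical profiler sample.
    sample = {"fps": 72, "draw_calls": 1200, "triangles": 1_500_000, "script_ms": 2.0}
    print(round(frame_budget_ms(90), 2), check_frame(sample))
```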

Q3: Our VR assessments lack ecological validity. How can we better simulate real-world cognitive demands? Traditional tests use simple, static stimuli, whereas immersive VR can create dynamic, realistic scenarios [24]. To enhance ecological validity:

- Implement Realistic Storylines: Develop scenarios that mimic Activities of Daily Living (ADL) and Instrumental ADL (IADL), such as performing errands in a virtual city [24] [21].

- Assess Complex Functions: Design tasks that tap into prospective memory, multitasking, and executive functions within a realistic context [24].

- In-Game Assistance: Provide seamless tutorials and prompts integrated into the environment to guide participants without breaking immersion [24] [37].

Q4: What are the unique considerations for designing user interfaces (UI) in VR? VR UI operates in 3D space, requiring a different approach from 2D screens [35].

- Anchor Points: Place UI elements on a curved cylinder in front of the user, attached to the controller, or as a diegetic part of the environment [35].

- Scale and Readability: Size UI elements in angular units, such as the distance-independent millimeter (dmm), so that they keep a consistent apparent size and legibility at different distances. Avoid thin fonts due to limited headset resolution [35].

- Interaction: Make buttons and interactive elements larger than on desktop to accommodate controller inaccuracy, following Fitts's Law [35].
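
The scale and interaction points above can be made concrete with a short calculation. The sketch below assumes the usual definition of the distance-independent millimeter (1 dmm is 1 mm viewed from 1 m) and the Shannon formulation of Fitts's index of difficulty, ID = log2(D/W + 1). The function names and the example sizes are hypothetical and are not recommended values.

```python
import math

# Sketch of angular scaling (dmm) and Fitts's index of difficulty.
# Assumes 1 dmm = 1 mm at a viewing distance of 1 m.

def dmm_to_world_size_m(size_dmm, distance_m):
    """World-space size (meters) that subtends `size_dmm` at `distance_m`."""
    return size_dmm * distance_m / 1000.0


def visual_angle_deg(size_m, distance_m):
    """Visual angle subtended by an object of `size_m` at `distance_m`."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


def fitts_index_of_difficulty(distance, width):
    """Shannon formulation: ID = log2(D / W + 1), in bits."""
    return math.log2(distance / width + 1.0)


# A hypothetical 64 dmm button placed at 1 m and at 3 m.
near = dmm_to_world_size_m(64, 1.0)  # 0.064 m
far = dmm_to_world_size_m(64, 3.0)   # 0.192 m

# Both placements subtend the same visual angle, so legibility is preserved.
print(math.isclose(visual_angle_deg(near, 1.0), visual_angle_deg(far, 3.0)))  # True

# Doubling the target width lowers the index of difficulty.
print(fitts_index_of_difficulty(0.4, 0.05) > fitts_index_of_difficulty(0.4, 0.10))  # True
```

Specifying sizes in dmm keeps the angular size constant as a panel moves, while the Fitts calculation shows why wider targets are easier to hit with an imprecise controller.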

Common Performance Issues & Troubleshooting

This guide helps diagnose and resolve typical performance bottlenecks.

Table 2: Troubleshooting Common VR Performance Problems

| Problem Symptom | Likely Cause | Solution |
|---|---|---|
| Consistently low frame rate, jitter | High GPU Load: Too many polygons, complex shaders, or overdraw. | Implement Level of Detail (LOD), use texture atlasing, simplify lighting and shadows, and employ foveated rendering if available [34] [38]. |
| Frame-time spikes, "hitching" | High CPU Load: Too many draw calls, complex physics, or garbage collection. | Reduce draw calls by batching meshes. Use multithreading for physics/AI (e.g., Unity's Job System). Avoid garbage-generating code in loops [34] [38]. |
| Visual "popping" or stuttering | Memory Management: Assets loading mid-frame. | Use asynchronous asset streaming to load objects in the background before they are needed [38]. |
| User reports of discomfort/eyestrain | VRISE Triggers: Latency, low frame rate, or conflicting sensory cues. | Verify performance against benchmarks in Table 1. Use a profiler to identify bottlenecks. Ensure a stable, high frame rate above all else [24] [34]. |
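
To separate a consistently low frame rate from intermittent hitching, it can help to inspect a log of per-frame durations exported from a profiler. The sketch below is one simple way to do this, assuming a plain list of frame times in milliseconds. The function names, the 2x-median threshold, and the sample log are hypothetical choices for illustration.

```python
from statistics import median

# Sketch: classify problems from a list of frame durations (ms).
# The 2x-median hitch threshold is an arbitrary illustrative choice.

def find_hitches(frame_times_ms, factor=2.0):
    """Indices of frames lasting longer than `factor` times the median."""
    typical = median(frame_times_ms)
    return [i for i, t in enumerate(frame_times_ms) if t > factor * typical]


def fraction_over_budget(frame_times_ms, target_fps=90.0):
    """Share of frames that missed the per-frame budget for `target_fps`."""
    budget = 1000.0 / target_fps
    return sum(t > budget for t in frame_times_ms) / len(frame_times_ms)


# Hypothetical log: mostly ~11 ms frames with two isolated spikes.
log = [11.0, 11.1, 10.9, 11.0, 35.2, 11.0, 11.2, 10.8, 24.0, 11.0]

print(find_hitches(log))          # [4, 8]
print(fraction_over_budget(log))  # 0.3
```

A few isolated indices from `find_hitches` point toward the CPU-side causes in the second row of Table 2, whereas a high `fraction_over_budget` with no outliers is more consistent with sustained GPU load.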

Experimental Protocols & Methodologies

Protocol: Validating a VR Neuropsychological Battery Using the VRNQ

This methodology outlines the process for quantitatively evaluating the usability and safety of a VR research application, based on the validation of the VR-EAL [24].

1. Objective: To appraise the quality of VR software in terms of user experience, game mechanics, in-game assistance, and the intensity of VRISE.

2. Materials:

- VR Software (Alpha, Beta, or Final versions)

- Modern HMD (e.g., HTC Vive, Oculus Rift)

- VR Neuroscience Questionnaire (VRNQ) [24]

- Standardized computing hardware that meets recommended specifications

3. Procedure:

- Participant Recruitment: Recruit a representative sample (e.g., n=25) within the target age and education range for the assessment [24].

- VR Session: Participants engage with the VR software for a standardized duration (e.g., 60 minutes).

- Post-Session Assessment: Immediately following the session, participants complete the VRNQ.

- Data Analysis: Calculate sub-scores for User Experience, Game Mechanics, In-Game Assistance, and VRISE from the VRNQ. Compare scores against the questionnaire's parsimonious cut-offs to determine suitability for research use [24].

4. Outcome Measures: The VRNQ provides quantitative scores that allow developers to iterate on software design, with the goal of achieving high user experience scores while minimizing VRISE.
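
The data-analysis step can be sketched as a small scoring routine. The sketch assumes that each of the four VRNQ domains is a list of Likert item responses and that every sub-score is coded so that higher is better. The domain keys, the example responses, and the cut-off values passed in (30 per sub-score, 120 in total) are placeholders to be checked against the published questionnaire [24], not values taken from this article.

```python
# Sketch of VRNQ-style scoring. Domain keys, responses, and cut-offs are
# illustrative assumptions; verify them against the published VRNQ.

DOMAINS = ("user_experience", "game_mechanics", "in_game_assistance", "vrise")


def score_vrnq(responses, subscore_cutoff, total_cutoff):
    """Sum item responses per domain and compare against the cut-offs."""
    subscores = {domain: sum(responses[domain]) for domain in DOMAINS}
    total = sum(subscores.values())
    meets = total >= total_cutoff and all(
        score >= subscore_cutoff for score in subscores.values()
    )
    return {"subscores": subscores, "total": total, "meets_cutoffs": meets}


# Hypothetical responses from one participant (five items per domain).
participant = {
    "user_experience": [6, 7, 6, 6, 7],
    "game_mechanics": [6, 6, 6, 7, 6],
    "in_game_assistance": [7, 6, 6, 6, 6],
    "vrise": [7, 7, 6, 7, 7],
}

result = score_vrnq(participant, subscore_cutoff=30, total_cutoff=120)
print(result["total"])          # 128
print(result["meets_cutoffs"])  # True
```

Keeping the cut-offs as parameters makes it easy to rerun the same analysis on Alpha, Beta, and Final builds and to compare how each iteration fares.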

Development Workflow for a VR Neuropsychological Test

The following diagram visualizes the key stages in developing a validated VR neuropsychological tool.

The Scientist's Toolkit: Essential Research Reagents & Materials

This table details key "reagents" and tools required for the development and deployment of VR neuropsychological batteries.

Table 3: Essential Toolkit for VR Neuropsychology Research

| Item / Solution | Function / Rationale | Examples / Specifications |
|---|---|---|
| Immersive HMD | Presents the virtual environment. Higher resolution and refresh rate reduce VRISE and increase presence. | HTC Vive, Oculus Rift, Meta Quest Pro [24] [21]. |
| Game Engine | Core software environment for building and rendering the 3D virtual world, handling physics, and scripting logic. | Unity, Unreal Engine [24] [38]. |
| Performance Profiling Tools | Critical for identifying CPU/GPU bottlenecks to ensure consistent frame rates and minimize latency. | NVIDIA Nsight, AMD Radeon GPU Profiler, built-in engine profilers [34] [38]. |
| VR Neuroscience Questionnaire (VRNQ) | A validated instrument to quantitatively assess user experience, game mechanics, and VRISE intensity for research purposes [24]. | |
| 3D Asset Optimization Tools | Reduces polygon counts and texture sizes to meet performance benchmarks without sacrificing visual quality. | MeshLab, Simplygon [38]. |
| Diegetic UI Framework | A design system for integrating user interfaces into the virtual world to maintain immersion (diegesis) [35]. | |
| Data Protection & Security Protocol | Ensures patient/participant data collected in VR is stored and processed in compliance with regulations (e.g., HIPAA). | Encryption, anonymization techniques [37]. |

FAQs and Troubleshooting for Multisensory VR Research

Q: How can I ensure temporal coherence between visual, auditory, and tactile stimuli in my experiments? A: Temporal coherence is a key factor in cross-modal integration. Use a single, custom software platform to manage all stimuli and their synchronization [39]. The platform should control stimulus delivery (e.g., visual LEDs, tactile electric stimulators) via serial communication and use a motion tracking system to update the virtual environment in real time, so that stimuli stay tightly aligned [39].

Q: Participants do not feel a strong sense of embodiment with the virtual avatar. What can I do? A: Embodiment can be enhanced by:

- First-Person Perspective: Present the avatar from a first-person point of view [39].

- Accurate Motion Mapping: Real-time tracking of the participant's body (e.g., using infrared cameras with reflective markers) to control the virtual avatar's movements improves vividness and embodiment [39] [4].

- Avatar Customization: Adjust the avatar's gender and height to match the participant's physical characteristics [39].

Q: What are the best practices for integrating tactile/haptic feedback into a VR environment? A: For upper limb rehabilitation, research shows that even partial tactile feedback delivered via wearable haptic devices can significantly improve engagement and motor learning [40]. Ensure the tactile stimuli (e.g., vibrations) are precisely timed with the participant's interactions with virtual objects to provide coherent multisensory feedback [40]. For other modalities like thermal stimuli, use an Arduino-based control module embedded into the VR platform (e.g., Unity) for automated operation [41].

Q: How can I measure the effectiveness of multisensory integration in a VR paradigm? A: Common quantitative measures include:

- Reaction Time (RT): Measure the speed of response to a tactile stimulus, which can decrease when a simultaneous visual or auditory stimulus is presented near the hand, indicating integration in peripersonal space [39].

- Motion Tracking: Use infrared cameras to record hand and stimulus positions in real-time, allowing you to analyze parameters like hand-distance from a stimulus [39].

- Psychophysical Assessments: Employ standardized neuropsychological batteries designed for VR that assess everyday cognitive functions and meet criteria set by major neuropsychological organizations [4].
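
For the reaction-time measure, a common summary is the difference between the mean RT to tactile-only trials and the mean RT to visuo-tactile trials. The sketch below assumes trials are stored as dictionaries with a `condition` label ("T", "V", or "VT") and an `rt_ms` value that is `None` when no response was given. This data layout, the function names, and the sample values are hypothetical.

```python
from statistics import mean

# Sketch: summarize reaction times by condition. The trial format and the
# sample values are illustrative assumptions.

def mean_rt(trials, condition):
    """Mean RT (ms) over responded trials of one condition."""
    return mean(
        t["rt_ms"]
        for t in trials
        if t["condition"] == condition and t["rt_ms"] is not None
    )


def multisensory_facilitation_ms(trials):
    """Positive values mean faster responses when a visual stimulus co-occurs."""
    return mean_rt(trials, "T") - mean_rt(trials, "VT")


def false_alarm_rate(trials):
    """Share of visual-only trials that (wrongly) received a response."""
    v_trials = [t for t in trials if t["condition"] == "V"]
    return sum(t["rt_ms"] is not None for t in v_trials) / len(v_trials)


trials = [
    {"condition": "T", "rt_ms": 420.0},
    {"condition": "T", "rt_ms": 440.0},
    {"condition": "T", "rt_ms": 430.0},
    {"condition": "VT", "rt_ms": 390.0},
    {"condition": "VT", "rt_ms": 400.0},
    {"condition": "VT", "rt_ms": 410.0},
    {"condition": "V", "rt_ms": None},
    {"condition": "V", "rt_ms": None},
    {"condition": "V", "rt_ms": 350.0},
]

print(multisensory_facilitation_ms(trials))  # 30.0
```

A high false-alarm rate on visual-only trials would suggest that participants are responding to the visual stimulus itself, which would undermine the interpretation of the facilitation value.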

Q: Our setup induces cybersickness in participants. How can this be mitigated? A: Choose a VR platform that has been validated for a pleasant testing experience without inducing cybersickness [4]. To minimize latency—a common cause of cybersickness—ensure your motion tracking and avatar updating have high refresh rates and that all sensory stimuli are delivered with minimal delay [39] [4].

Troubleshooting Guides

Problem: Unsynchronized Sensory Stimuli

Solution: Implement a centralized control system.

- Root Cause: Independent control of stimulus devices leads to temporal drift.

- Actionable Steps:

- Develop or use a custom software application that manages the virtual environment, motion tracking, and stimulus devices (like electric stimulators) simultaneously [39].

- This master software should send triggers via reliable low-latency communication (e.g., serial port) to all peripheral devices [39].

- Verification: Run a validation protocol where a single trigger simultaneously activates a visual LED and a tactile stimulator; verify synchronization with high-speed recording.
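
The verification step can be quantified once stimulus onsets have been extracted from the high-speed recording. The sketch below assumes two equally long lists of onset times in milliseconds, one per device, with matching trial order. The function names, the sample onsets, and the 5 ms tolerance are hypothetical and should be replaced with values appropriate to the paradigm.

```python
from statistics import mean, pstdev

# Sketch: quantify visual-tactile asynchrony from paired onset times (ms).
# Sample data and the tolerance are illustrative assumptions.

def asynchrony_stats(led_onsets_ms, tactile_onsets_ms):
    """Offset = tactile onset minus LED onset, summarized across trials."""
    offsets = [t - v for v, t in zip(led_onsets_ms, tactile_onsets_ms)]
    return {
        "mean_offset_ms": mean(offsets),           # constant lag between devices
        "jitter_ms": pstdev(offsets),              # trial-to-trial variability
        "max_abs_ms": max(abs(o) for o in offsets),
    }


def within_tolerance(stats, tolerance_ms):
    """True when even the worst trial stays inside the tolerance."""
    return stats["max_abs_ms"] <= tolerance_ms


led = [0.0, 1000.0, 2000.0, 3000.0]
tactile = [2.0, 1003.0, 2001.0, 3002.0]

stats = asynchrony_stats(led, tactile)
print(stats["mean_offset_ms"])       # 2.0
print(within_tolerance(stats, 5.0))  # True
```

A stable mean offset can usually be compensated in software by delaying the earlier device, whereas large jitter indicates that the trigger path itself needs attention.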

Problem: Inaccurate Motion Tracking of Participant's Body

Solution: Recalibrate the motion capture system and kinematic model.

- Root Cause: Misalignment between the real-world and virtual coordinate systems, or drift in the orientation calculations of body segments.

- Actionable Steps:

- Perform an initial alignment between the experimental reference frame and the virtual coordinate system [39].

- Use a known initial pose (e.g., a "T-pose") to define the baseline orientation of the arm, forearm, and hand links. Compute subsequent orientations as quaternion transformations relative to this initial pose to maintain accuracy [39].

- Verification: Have the participant move their arm to predefined physical locations and confirm the virtual avatar's hand reaches the corresponding virtual locations.
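
The idea of expressing each segment's orientation relative to a reference pose can be sketched with plain quaternion arithmetic. The sketch assumes unit quaternions in (w, x, y, z) order and defines the relative rotation as the current orientation multiplied by the conjugate of the reference orientation. The helper names and the single-axis example are hypothetical; a real pipeline would take the quaternions from the motion capture system.

```python
import math

# Sketch: orientation of a body segment relative to its T-pose orientation.
# Quaternions are unit length, ordered (w, x, y, z).

def q_mul(a, b):
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_conj(q):
    """Conjugate, which equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def relative_to_reference(q_current, q_reference):
    """Rotation that takes the reference orientation to the current one."""
    return q_mul(q_current, q_conj(q_reference))


def rotation_angle_deg(q):
    """Total rotation angle encoded by a unit quaternion."""
    w = max(-1.0, min(1.0, abs(q[0])))  # clamp against rounding error
    return math.degrees(2.0 * math.acos(w))


def q_about_z(angle_deg):
    """Helper for the example: rotation about the z axis."""
    half = math.radians(angle_deg) / 2.0
    return (math.cos(half), 0.0, 0.0, math.sin(half))


t_pose = q_about_z(30.0)   # orientation captured in the reference pose
current = q_about_z(75.0)  # orientation captured later in the session

print(round(rotation_angle_deg(relative_to_reference(current, t_pose)), 6))  # 45.0
```

Because every frame is referenced to the same captured pose rather than to the previous frame, small per-frame errors do not accumulate into drift.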

Problem: Weak or Inconsistent Cross-Modal Effects (e.g., in PPS modulation)

Solution: Optimize stimulus parameters and participant posture.

- Root Cause: Stimuli may not be perceived as spatially or temporally coherent, or the experimental design may not adequately engage multisensory neurons.

- Actionable Steps:

- Spatial Coherence: Ensure visual and tactile stimuli are presented in close spatial proximity, as the cross-modal effect on reaction time is strongest near the hand [39].

- Experimental Design: Implement a protocol that includes a high proportion of simultaneous visuo-tactile (VT) trials interspersed with tactile-only (T) and visual-only (V) control conditions [39].

- Hand Position: Investigate the peripersonal space by having participants place their hand in multiple static positions, as the effect is modulated by hand-stimulus distance [39].

Experimental Protocol: Visuo-Tactile Integration for Peripersonal Space (PPS) Investigation

This protocol, adapted from a validated VR platform, investigates how visual stimuli near the hand influence reaction to a tactile stimulus [39].

1. Objective: To measure the cross-modal congruency effect by testing if a visual stimulus presented near a participant's hand speeds up the reaction time (RT) to a simultaneous tactile stimulus on the hand, thereby mapping the peripersonal space (PPS).

2. Methodology

- Setup: A VR headset displays a first-person view of a virtual avatar arm on a virtual table. Four infrared cameras track motion via markers on the participant's right arm [39].

- Stimuli:

- Tactile (T): An electric stimulator attached to the participant's right index finger.

- Visual (V): A red LED-like semi-sphere that appears on the virtual table for 100 ms [39].

- Trial Structure: Each trial presents one of three conditions, randomly interleaved:

- V-only: Visual stimulus only.

- T-only: Tactile stimulus only.

- VT: Simultaneous visual and tactile stimuli [39].

- Task: The participant is instructed to react as quickly as possible to the tactile stimulus (by pressing a keypad) while ignoring any visual stimuli [39]. The V-only trials act as catch trials, confirming that the participant is not responding to the visual stimulus alone, while the T-only trials provide the unimodal baseline RT.

- Conditions:

- Single Pose: The participant's hand remains in a fixed position.

- Multiple Poses: The participant moves their hand to different locations in the right hemispace, with stimuli delivered when the hand is static. This allows investigation of how PPS representation changes with arm posture [39].

- Data Analysis: The primary dependent variable is the reaction time to the tactile stimulus. A significant positive correlation between the hand-to-visual-stimulus distance and the RT in VT conditions (that is, faster responses when the visual stimulus appears closer to the hand) indicates a cross-modal effect within the PPS [39].
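
The distance-RT relationship described in the data-analysis step can be computed with a plain Pearson correlation. The sketch below uses only the standard library and made-up values; it does not reproduce the published data. A positive r means RT grows with distance, so responses are faster when the visual stimulus is near the hand. A significance test would additionally require a statistics package (for example, SciPy's `scipy.stats.pearsonr`).

```python
import math

# Sketch: Pearson correlation between hand-stimulus distance and RT on VT
# trials. The sample values are invented for illustration only.

def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


distance_cm = [5.0, 10.0, 20.0, 30.0, 40.0]  # hand to visual stimulus
rt_ms = [380.0, 385.0, 400.0, 410.0, 425.0]  # RT to the tactile stimulus

r = pearson_r(distance_cm, rt_ms)
print(r > 0)  # True: RT increases as the visual stimulus moves away
```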

3. Quantitative Data Summary

| Parameter/Measure | Value / Description | Notes |
|---|---|---|
| Visual Stimulus Duration | 100 milliseconds | [39] |
| Tactile Stimulus Control | Serial communication | From main software to electric stimulator [39] |
| Motion Tracking System | 4 Infrared Cameras | Tracks reflective passive markers [39] |
| Trials per Session | 50 | Typically composed of 8T, 8V, 34VT [39] |
| Visual Stimulus Position (α) | -45 to 225 degrees | Angle from hand, preventing appearance on arm [39] |
| Key Result (Pilot) | Significant correlation (p=0.013) | Between hand-stimulus distance and reaction time [39] |
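
The session parameters in the table can be turned into a randomized trial list. The sketch below builds 50 interleaved trials (8 T, 8 V, 34 VT) and draws a visual stimulus angle α between -45 and 225 degrees for each trial that includes a visual stimulus. The function name, the dictionary layout, and the uniform sampling of α are assumptions made for illustration; the original platform may select positions differently.

```python
import random

# Sketch: build one randomized session from the parameters in the table.
# Uniform sampling of the angle is an assumption, not the published method.

def build_trials(n_t=8, n_v=8, n_vt=34, angle_range=(-45.0, 225.0), seed=None):
    """Return a shuffled list of trial dictionaries for one session."""
    rng = random.Random(seed)
    conditions = ["T"] * n_t + ["V"] * n_v + ["VT"] * n_vt
    rng.shuffle(conditions)

    trials = []
    for condition in conditions:
        has_visual = condition in ("V", "VT")
        trials.append({
            "condition": condition,
            "tactile": condition in ("T", "VT"),
            "visual_ms": 100 if has_visual else 0,
            "angle_deg": rng.uniform(*angle_range) if has_visual else None,
        })
    return trials


session = build_trials(seed=1)
print(len(session))  # 50
```

Passing a fixed `seed` makes a session reproducible, which is useful when the same sequence must be replayed during debugging or synchronization checks.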

The Scientist's Toolkit: Essential Research Reagents & Materials

| Item | Function in Multisensory VR Research |

|---|---|

| VR Headset | Provides visual immersion and head tracking; crucial for first-person perspective and inducing presence [39] [4]. |

| Infrared Motion Capture System | Tracks body segment (e.g., arm, hand) position and orientation in real-time with high precision for avatar control and quantitative movement analysis [39]. |

| Haptic/Tactile Actuator | Delivers controlled tactile stimuli (e.g., vibration, electrical pulse) to the skin; essential for studying touch and body ownership [39] [40]. |