Parameter Optimization for Automated Behavior Assessment: A 2025 Guide for Biomedical Researchers


Abstract

This article provides a comprehensive guide for researchers and drug development professionals on optimizing parameters in automated behavior assessment software. It covers the foundational principles of why parameter tuning is critical for data accuracy and reliability, moving to practical methodologies for implementing optimization techniques like AI and autotuning. The content addresses common troubleshooting challenges and presents a rigorous framework for validating optimized models against human scorers and commercial solutions. The goal is to empower scientists to enhance the precision, efficiency, and translational value of their preclinical behavioral data.

Why Parameter Optimization is Critical in Modern Behavioral Neuroscience

In the realm of automated behavior assessment, default parameters present researchers with a dangerous paradox: they offer immediate accessibility while potentially compromising scientific validity. Reliance on untailored defaults affects a wide audience, from new data analysts to seasoned data scientists and business leaders who rely on data-driven decisions [1]. In practice, statistical models and automated behavior analysis tools run with default settings often produce deceptively promising initial results that fail to survive real-world validation. This application note examines how suboptimal parameter configuration can lead to misinterpreted findings and provides structured protocols for parameter optimization tailored to behavioral researchers and drug development professionals.

The core challenge lies in the fundamental mismatch between generalized software defaults and context-specific research needs. As Brown explains regarding performance measurement systems, effective measures must be "flexible and adaptable to an ever-changing business environment" [2]. This principle applies equally to behavioral research software, where default settings often fail to account for crucial variables such as species-specific kinematics, experimental apparatus design, or hardware configurations like tethered head-mounts for neural recording [3]. The consequence is what statisticians identify as a fundamental validation gap: approximately 67% of models show at least one significant pitfall when re-evaluated on fresh data [1].

Key Pitfalls and Quantitative Impacts

Documented Consequences of Default Parameter Usage

Table 1: Statistical Pitfalls from Inadequate Parameter Validation

| Pitfall | Primary Symptoms | Prevalence | Performance Impact |
|---|---|---|---|
| Data Leakage | Overly optimistic test accuracy; contamination between training/test sets | ~30% of models in time-series data [1] | Unquantified in exact figures, but creates invalid performance estimates |
| Overfitting | High training accuracy, low test accuracy | Common with complex models on small datasets [1] | Can inflate perceived performance by >35% without cross-validation [1] |
| Model/Calibration Drift | Performance decay over time; misaligned probability estimates | ~26% of deployed models [1] | Progressive accuracy loss requiring recalibration |
| Misinterpreted p-values | Significant results without proper context | ~42% in published regression analyses [1] | Leads to false positive findings and theoretical errors |

Behavioral Detection Specific Failures

In automated behavior analysis, the pitfalls extend beyond statistical measures to direct observational errors. BehaviorDEPOT developers note that commercially available behavior detectors are often "prone to failure when animals are wearing head-mounted hardware for manipulating or recording brain activity" [3]. This specific failure mode demonstrates how default parameters optimized for standard animal configurations become invalid under common experimental conditions. Parameter optimization for automated behavior assessment requires careful fine-tuning to obtain reliable software scores in each context configuration [4]. Research indicates that subtle behavioral effects, such as those in generalization or genetic research, are particularly vulnerable to divergence between automated and manual scoring when parameters are suboptimal [4].

Experimental Protocols for Parameter Validation

Protocol 1: Cross-Validation Framework for Behavioral Software

Purpose: To establish a systematic methodology for validating and optimizing parameters in automated behavior assessment tools.

Materials:

- Behavioral annotation software (e.g., BehaviorDEPOT [3])

- Video recordings representing full experimental diversity

- Manual scoring protocols with operational definitions

- Computing resources for parallel processing

Procedure:

- Stratified Data Partitioning: Segment video data into training, validation, and testing sets that maintain representation of all experimental conditions, including variations in animal characteristics, lighting conditions, and behavioral states.

- Baseline Manual Annotation: Have multiple trained raters manually score a minimum of 20% of videos using explicit operational definitions. Calculate inter-rater reliability (Cohen's kappa > 0.8 required).

- Iterative Parameter Testing: Systematically vary key parameters (velocity thresholds, spatial criteria, temporal windows) while holding others constant across the validation set.

- Performance Mapping: For each parameter combination, calculate accuracy metrics against manual scoring standards.

- Optimal Parameter Identification: Select the parameter set that best balances precision and recall for the target behaviors (for example, the highest F1 score), so that gains in sensitivity are not bought with excess false positives.

Validation Requirements:

- Performance must be consistent across all experimental conditions

- Verification on held-out test set completely separate from optimization process

- Documentation of all parameter choices and their performance characteristics
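
The iterative parameter testing and performance mapping steps of this protocol can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the BehaviorDEPOT implementation: the function names (`detect_freezing`, `sweep`), the toy speed trace, and the candidate threshold values are all hypothetical, and freezing is reduced to "speed below a threshold for at least a minimum number of frames".

```python
from itertools import product

def detect_freezing(speeds, velocity_threshold, min_frames):
    """Mark frames as freezing when speed stays below the threshold
    for at least `min_frames` consecutive frames (illustrative rule)."""
    labels = [False] * len(speeds)
    start = None
    # A trailing sentinel (False) closes a bout that runs to the last frame.
    for i, below in enumerate([s < velocity_threshold for s in speeds] + [False]):
        if below and start is None:
            start = i
        elif not below and start is not None:
            if i - start >= min_frames:
                for j in range(start, i):
                    labels[j] = True
            start = None
    return labels

def precision_recall_f1(predicted, manual):
    """Framewise precision, recall, and F1 against manual scoring."""
    tp = sum(p and m for p, m in zip(predicted, manual))
    fp = sum(p and not m for p, m in zip(predicted, manual))
    fn = sum((not p) and m for p, m in zip(predicted, manual))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def sweep(speeds, manual, velocity_thresholds, min_frame_options):
    """Score every parameter combination on the validation data."""
    results = []
    for vt, mf in product(velocity_thresholds, min_frame_options):
        p, r, f1 = precision_recall_f1(detect_freezing(speeds, vt, mf), manual)
        results.append({"velocity_threshold": vt, "min_frames": mf,
                        "precision": p, "recall": r, "f1": f1})
    return max(results, key=lambda row: row["f1"]), results

# Hypothetical validation clip: per-frame speed (cm/s) and manual freezing labels.
speeds = [5.0, 4.0, 0.2, 0.1, 0.3, 0.2, 6.0, 0.4, 5.0, 0.1, 0.2, 0.1, 0.3, 7.0]
manual = [False, False, True, True, True, True, False,
          False, False, True, True, True, True, False]

best, table = sweep(speeds, manual, [0.25, 0.5, 1.0], [1, 2, 3])
print(best)
```

The combination chosen this way is selected on the validation set only; the held-out test set should be scored once, afterwards, with the chosen values.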

Protocol 2: Heuristic Development for Novel Behaviors

Purpose: To create customized behavioral detection rules that address specific research questions beyond default capabilities.

Materials:

- Keypoint tracking data (e.g., from DeepLabCut [3])

- BehaviorDEPOT software or equivalent platform

- Data exploration and visualization tools

Procedure:

- Metric Calculation: From keypoint tracking data, compute kinematic and postural statistics including velocity, angular velocity, body length, and spatial relationships between body parts [3].

- Feature Expansion: Create derived metrics that capture relevant behavioral features (e.g., approach vectors, orientation angles).

- Threshold Optimization: Using the Optimization Module in BehaviorDEPOT, systematically test detection thresholds against manual scoring.

- Spatial Mapping: For location-dependent behaviors, define zones of interest with precise boundaries based on experimental apparatus.

- Temporal Criteria: Establish minimum and maximum duration thresholds to filter false positives and ensure behavioral bout validity.

Integration:

- Incorporate validated heuristics into graphical interface for ongoing use

- Establish framewise output data for alignment with neural recordings

- Create validation reports documenting performance characteristics
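
As a rough illustration of the metric calculation and spatial mapping steps, the sketch below derives speed, body length, heading, angular velocity, and zone occupancy from two tracked keypoints. The keypoint names (nose, tail base), the pixel-to-centimeter scale, and the function names are assumptions made for the example; they are not taken from DeepLabCut or BehaviorDEPOT.

```python
import math

def frame_speeds(xy, fps, px_per_cm):
    """Speed (cm/s) of one keypoint between consecutive frames; frame 0 gets 0.0."""
    speeds = [0.0]
    for (x0, y0), (x1, y1) in zip(xy, xy[1:]):
        speeds.append(math.hypot(x1 - x0, y1 - y0) / px_per_cm * fps)
    return speeds

def body_lengths(nose_xy, tail_xy, px_per_cm):
    """Nose-to-tail-base distance (cm) per frame."""
    return [math.hypot(nx - tx, ny - ty) / px_per_cm
            for (nx, ny), (tx, ty) in zip(nose_xy, tail_xy)]

def headings_deg(nose_xy, tail_xy):
    """Body-axis orientation (degrees) from tail base to nose."""
    return [math.degrees(math.atan2(ny - ty, nx - tx))
            for (nx, ny), (tx, ty) in zip(nose_xy, tail_xy)]

def angular_velocity(headings, fps):
    """Heading change (deg/s), with differences wrapped into [-180, 180)."""
    out = [0.0]
    for a0, a1 in zip(headings, headings[1:]):
        out.append(((a1 - a0 + 180.0) % 360.0 - 180.0) * fps)
    return out

def in_zone(xy, zone):
    """Framewise occupancy of a rectangular zone (x_min, y_min, x_max, y_max) in pixels."""
    x_min, y_min, x_max, y_max = zone
    return [x_min <= x <= x_max and y_min <= y <= y_max for x, y in xy]

# Hypothetical three-frame track at 30 fps, 10 px per cm.
nose = [(10.0, 10.0), (13.0, 14.0), (13.0, 14.0)]
tail = [(0.0, 10.0), (3.0, 14.0), (13.0, 4.0)]

speed = frame_speeds(nose, fps=30, px_per_cm=10.0)
length = body_lengths(nose, tail, px_per_cm=10.0)
turn = angular_velocity(headings_deg(nose, tail), fps=30)
occupancy = in_zone(nose, zone=(12.0, 12.0, 20.0, 20.0))
```

Framewise outputs like these line up naturally with neural recordings sampled on the same clock, which is why the heuristics are kept per frame rather than per bout.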

The Researcher's Toolkit: Essential Solutions

Table 2: Research Reagent Solutions for Parameter Optimization

| Tool/Category | Specific Examples | Function/Purpose | Implementation Considerations |
|---|---|---|---|
| Pose Estimation Systems | DeepLabCut, SLEAP, MARBLE | Provides foundational keypoint tracking for behavioral quantification | Requires training datasets specific to experimental conditions; performance varies by species and setup [3] |
| Behavior Detection Software | BehaviorDEPOT, MARS, SimBA | Converts tracking data into behavioral classifications | Balance between heuristic-based (transparent) vs. machine learning (complex behavior) approaches [3] |
| Validation Frameworks | Cross-validation modules, Inter-rater reliability tools | Quantifies detection accuracy and reliability | Must include diverse data representations; requires manual scoring benchmarks [1] [3] |
| Statistical Diagnostic Tools | Residual analysis, VIF calculation, calibration curves | Identifies model assumptions violations and overfitting | Critical for interpreting automated outputs; detects multicollinearity and drift [1] |
| Data Provenance Tracking | Experimental metadata capture, version control | Documents data origins and processing history | Essential for reproducibility; captures potential sources of contamination [1] |

Visualization Frameworks

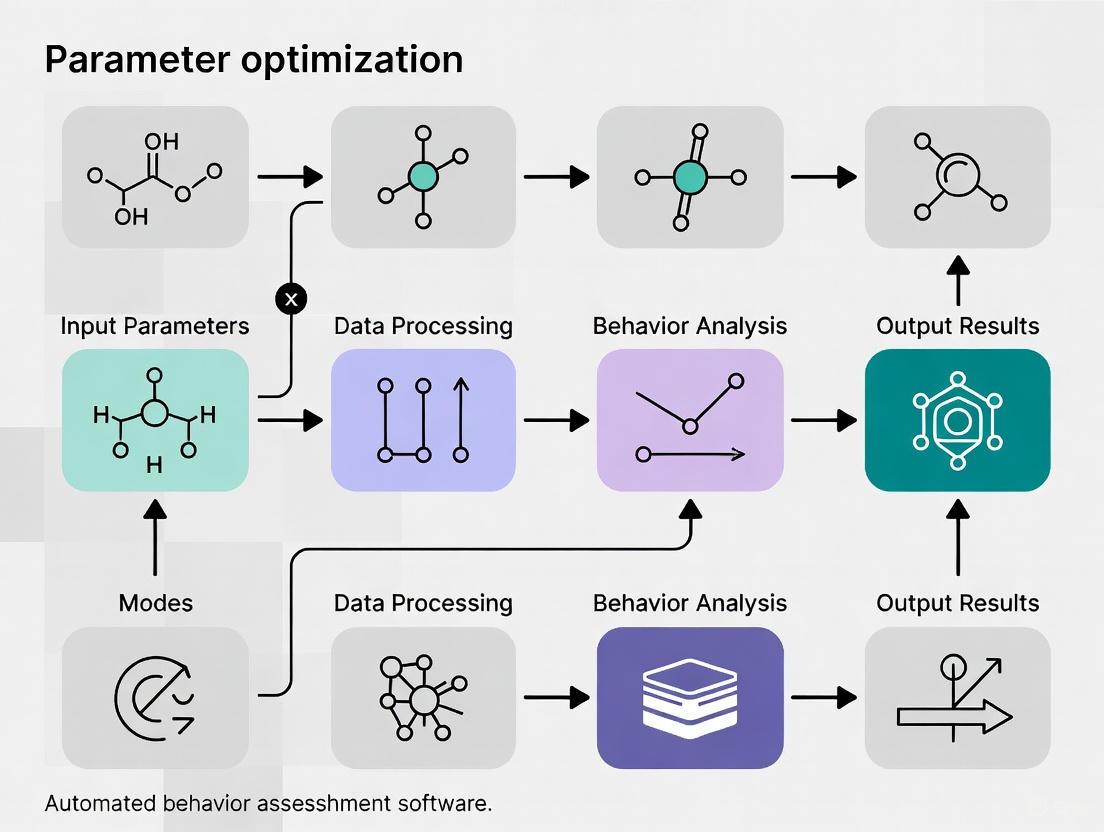

Parameter Optimization Workflow

Statistical Pitfalls Identification System

Implementation Framework and Best Practices

Successful navigation of default setting pitfalls requires both technical solutions and methodological rigor. Researchers should implement a comprehensive validation strategy that includes:

Proactive Validation Planning: Schedule validation milestones as regularly as code reviews, not as afterthoughts [1]. This includes establishing minimum validation standards before model deployment and creating living validation dashboards with drift alerts and recalibration reminders.

Context-Aware Parameterization: "Linking performance to strategy" is equally crucial in research settings [2]. Parameters must reflect specific experimental contexts, including animal strain, testing apparatus, hardware configurations, and environmental conditions. BehaviorDEPOT exemplifies this approach with heuristics adaptable to various experimental designs [3].

Multidimensional Performance Assessment: Move beyond single metrics like accuracy to comprehensive evaluation including calibration, temporal stability, and robustness across conditions. As noted in performance measurement literature, effective systems must "measure the effectiveness of all processes including products and/or services that have reached the final customer" [2].

The evidence-based practice framework emphasizes "the integration of the best available evidence with client values/context and clinical expertise" [5]. Translated to behavioral research, this means combining algorithmic capabilities with deep domain knowledge to develop detection parameters that are both statistically sound and biologically meaningful.

The integration of automated scoring systems into high-stakes fields like pharmaceutical research and educational assessment represents a paradigm shift towards efficiency and standardization. However, a significant challenge persists: ensuring that these automated systems reliably reproduce the nuanced judgments of human expert raters, the established "gold standard." Automated systems, while consistent, can operate as black boxes, making their scores difficult to interpret and trust for critical decisions. This document outlines application notes and experimental protocols for optimizing the parameters of automated behavior assessment software. The core thesis is that deliberate, evidence-based parameter optimization is not merely a technical step but a fundamental requirement for bridging the gap between algorithmic output and human expert judgment, thereby ensuring the validity, fairness, and practical utility of automated scores in scientific and clinical contexts.

Quantitative Performance Landscape of Automated Scoring Systems

The following tables summarize empirical data on the performance of various automated scoring systems compared to human raters, highlighting the critical role of optimization techniques.

Table 1: Performance of Optimized AI Frameworks in Pharmaceutical Research

| AI Framework / Model | Application Domain | Key Optimization Technique | Performance Metric & Result | Comparison to Pre-Optimization or Other Methods |
|---|---|---|---|---|
| optSAE + HSAPSO [6] | Drug classification & target identification | Hierarchically Self-Adaptive Particle Swarm Optimization for hyperparameter tuning | Accuracy: 95.52% [6]; Computational Speed: 0.010 s/sample [6]; Stability: ± 0.003 [6] | Outperformed traditional models (e.g., SVM, XGBoost) in accuracy, speed, and stability [6]. |
| Generative Adversarial Networks (GANs) [7] | Molecular property prediction & drug design | Dual-network system (generator & discriminator) | (Specific quantitative results not provided in the source; noted for versatility and performance) [7] | Introduces new possibilities in drug design [7]. |
| Random Forest [7] | Toxicity profile classification & biomarker identification | Ensemble method combining multiple decision trees | (Specific quantitative results not provided in the source; noted for effectiveness in minimizing overfitting) [7] | Effective in classifying toxicity profiles and identifying biomarkers [7]. |

Table 2: Performance of Automated Systems in Language and Writing Assessment

| Automated System | Subject / Task | Optimization / Prompting Strategy | Performance Metric & Result | Alignment with Human Raters |
|---|---|---|---|---|
| ChatGPT [8] | Automated Writing Scoring (EFL essays) | Few-Shot Prompting | Severity (MFRM): 0.10 logits [8] | Closest to human rater severity [8]. |
| ChatGPT [8] | Automated Writing Scoring (EFL essays) | Zero-Shot Prompting | Severity (MFRM): 0.31 logits [8] | More severe than humans [8]. |
| Claude [8] | Automated Writing Scoring (EFL essays) | Few-Shot Prompting | Severity (MFRM): 0.38 logits [8] | More severe than humans [8]. |
| Claude [8] | Automated Writing Scoring (EFL essays) | Zero-Shot Prompting | Severity (MFRM): 0.46 logits [8] | Most severe compared to humans [8]. |
| Feature-Based AES [9] | Essay Scoring (Year 5 persuasive writing) | Feature-based difficulty prediction with LightGBM | Overall QWK: 0.861 [9]; Human IRR QWK: 0.745 [9] | Exceeded human inter-rater agreement [9]. |
| Chinese AES (e.g., AI Speaking Master) [10] | Spoken English Proficiency | (System-specific calibration) | Strong agreement with human ratings [10] | Deemed a valuable complement to human assessment [10]. |
| Chinese AES (Unspecified, 3rd system) [10] | Spoken English Proficiency | (Lacked proper calibration) | Systematic score inflation [10] | Poor alignment due to algorithmic discrepancies [10]. |

Experimental Protocols for Optimization and Validation

Protocol: Hyperparameter Optimization for a Stacked Autoencoder (SAE) using Hierarchically Self-Adaptive PSO (HSAPSO)

Application Note: This protocol is designed for high-dimensional pharmaceutical data (e.g., from DrugBank, Swiss-Prot) to achieve maximal classification accuracy for tasks like druggable target identification. The HSAPSO algorithm adaptively balances exploration and exploitation, overcoming the limitations of static optimization methods [6].

Materials:

- Curated pharmaceutical dataset (e.g., molecular descriptors, protein features).

- High-performance computing cluster.

- Programming environment (e.g., Python) with deep learning libraries (e.g., TensorFlow, PyTorch).

Procedure:

- Data Preprocessing: Clean, normalize, and partition the dataset into training, validation, and holdout test sets. Ensure the holdout set remains completely unseen until the final evaluation stage [6].

- SAE Architecture Initialization: Define the initial SAE architecture, including the number of layers, nodes per layer, and initial learning rate. The SAE will be responsible for unsupervised feature learning from the input data [6].

- HSAPSO Parameter Setup:

  - Initialize a swarm of particles, where each particle's position vector represents a potential set of SAE hyperparameters (e.g., learning rate, batch size, regularization parameters).

  - Define the search boundaries for each hyperparameter.

  - Set the HSAPSO's hierarchical adaptation parameters for inertia and acceleration coefficients [6].

- Fitness Evaluation: For each particle's hyperparameter set: a. Configure the SAE with the proposed hyperparameters. b. Train the SAE on the training set for a predefined number of epochs. c. Evaluate the partially trained SAE on the validation set, using classification accuracy as the fitness score.

- Swarm Update: Update the velocity and position of each particle based on its personal best performance, the global best performance of the swarm, and the hierarchically self-adaptive parameters. This step allows the swarm to dynamically focus on promising areas of the hyperparameter space [6].

- Termination Check: Repeat the Fitness Evaluation and Swarm Update steps until a convergence criterion is met (e.g., a maximum number of iterations is reached or the improvement in global best fitness falls below a threshold).

- Final Model Training & Evaluation: Train a final SAE model using the global best hyperparameters found by HSAPSO on the combined training and validation set. Report the final performance metrics (e.g., accuracy, stability) on the protected holdout test set [6].
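
The swarm mechanics in this protocol can be illustrated with a small, self-contained example. Note the substitutions: this is plain particle swarm optimization with a linearly decreasing inertia weight, not the hierarchically self-adaptive HSAPSO variant of [6], and `mock_validation_accuracy` is a hypothetical stand-in for "train the SAE and score it on the validation set". The two hyperparameters (log10 learning rate and an L2 penalty) and their bounds are likewise assumptions.

```python
import random

def pso(fitness, bounds, n_particles=20, n_iter=60, seed=0,
        w_start=0.9, w_end=0.4, c1=1.5, c2=1.5):
    """Maximize `fitness` over box `bounds` with basic PSO.
    Inertia decays linearly from w_start to w_end (a simple schedule,
    not the hierarchical self-adaptation used by HSAPSO)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(p) for p in pos]
    g = max(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    for it in range(n_iter):
        w = w_start - (w_start - w_end) * it / max(1, n_iter - 1)
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # pull toward personal best
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # pull toward global best
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))  # clamp to search bounds
            f = fitness(pos[i])
            if f > pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], f
                if f > gbest_fit:
                    gbest, gbest_fit = pos[i][:], f
    return gbest, gbest_fit

def mock_validation_accuracy(params):
    """Hypothetical smooth surrogate peaking at log10(lr) = -3, l2 = 0.01."""
    log_lr, l2 = params
    return 0.95 - 0.05 * (log_lr + 3.0) ** 2 - 2.0 * (l2 - 0.01) ** 2

best_params, best_score = pso(mock_validation_accuracy,
                              bounds=[(-5.0, -1.0), (0.0, 0.1)])
print(best_params, best_score)
```

In a real run each fitness call is a full training job, so the particle count and iteration budget are usually chosen from the available compute rather than from defaults like the ones shown here.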

Protocol: Many-Facet Rasch Model (MFRM) for Validating LLM-based Automated Scoring

Application Note: This protocol provides a robust statistical framework for comparing the severity, consistency, and bias of Large Language Model (LLM) raters against human raters. It moves beyond simple correlation coefficients to deliver a nuanced understanding of alignment [8].

Materials:

- A sample of student essays or other scored responses (N ≥ 100 recommended).

- Scores from at least two trained human raters.

- Access to LLM APIs (e.g., OpenAI's ChatGPT, Anthropic's Claude) and MFRM software (e.g., FACETS, jMetrik).

Procedure:

- Data Collection & Preparation:

  - Collect a representative sample of essays.

  - Have each essay scored by multiple human raters blind to each other's scores.

  - For each LLM to be evaluated, generate scores using different prompting strategies (e.g., Zero-Shot, Few-Shot with example essays) [8].

- Data Structuring: Structure the data for MFRM analysis. Each data point is a single score, linked to the specific essay, the rater (human or LLM/prompt combination), and potentially other facets like the scoring criterion (trait).

- Model Specification: Run the MFRM analysis. A typical model would specify:

Log-odds of a higher score category = Essay Ability - Rater Severity - Criterion Difficulty (plus any interaction terms included in the model).

- Interpretation and Validation:

  - Severity Analysis: Examine the "Rater Severity" measures in logits. LLM raters with measures close to 0 are aligned with average human severity. Positive values indicate greater severity, negative values indicate leniency [8].

  - Consistency: Analyze the model fit statistics (e.g., Infit/Outfit mean squares) for each LLM rater. Values near 1.0 indicate good fit and high consistency [8].

  - Bias Analysis: Conduct bias/interaction analysis to check if any LLM rater exhibits systematic bias towards specific subgroups (e.g., based on gender) or essay topics [8].

- Optimization Feedback: Use the results to select the best-performing LLM and prompting strategy. If an LLM is consistently too severe, instructional fine-tuning or prompt engineering (e.g., "be more lenient") can be applied, and the protocol can be repeated for validation.
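
For reference, the model specification in this protocol is commonly written in the rating-scale form of the many-facet Rasch model. The notation below follows the usual textbook convention and is not taken from [8]; the symbol names are assumptions for this note.

```latex
\ln\!\left(\frac{P_{nijk}}{P_{nij(k-1)}}\right) = B_n - C_j - D_i - F_k
```

Here $P_{nijk}$ is the probability that essay $n$ receives category $k$ from rater $j$ on criterion $i$, $B_n$ is the essay's ability measure, $C_j$ is the severity of rater $j$ (human or LLM/prompt combination), $D_i$ is the difficulty of criterion $i$, and $F_k$ is the threshold between categories $k-1$ and $k$. Because severity enters with a negative sign, a larger $C_j$ lowers the expected score, which is why positive logit values are read as greater severity.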

Protocol: Feature-Based Difficulty Prediction for Trait-Level Uncertainty Quantification

Application Note: This protocol addresses the "black box" problem in Automated Essay Scoring (AES) by predicting which essays or traits are difficult for the model to score accurately. This allows for a hybrid human-AI workflow where only uncertain cases are deferred to human raters, optimizing resource use [9].

Materials:

- A dataset of expert-marked essays, with scores available for individual traits (e.g., Ideas, Spelling, Sentence Structure).

- The feature set extracted from each essay (e.g., lexical diversity, syntactic complexity, paragraph statistics).

- A machine learning environment (e.g., Python with LightGBM).

Procedure:

- Feature Engineering: Extract a set of interpretable, curriculum-aligned features from the essay texts. This contrasts with using opaque, high-dimensional representations like n-gram models [9].

- Error Calculation: For each essay and each trait, calculate the absolute difference between the automated system's initial score and the human expert's score. This is the "scoring error."

- Model Training: Train a secondary machine learning model (e.g., a LightGBM regressor) to predict the scoring error. The input features are the same interpretable features from Step 1, and the target variable is the scoring error from Step 2 [9].

- Difficulty Prediction: Use the trained model to predict the "difficulty" (expected scoring error) for new, unseen essays.

- Deferral Rule Implementation: Establish a threshold for the predicted difficulty. Essays or traits with a predicted error above this threshold are flagged for human review. This creates a semi-automated, robust scoring pipeline [9].

- Validation: Evaluate the effectiveness of the system by comparing the final agreement (QWK) on the retained (non-deferred) essays against pre-defined deployment thresholds for each trait [9].
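
The deferral rule and its validation can be sketched as follows. The example assumes the predicted scoring errors are already available (in the protocol they would come from the secondary LightGBM regressor, which is not reproduced here); the scores, predicted errors, and the threshold of 1.0 are made-up values, and quadratic weighted kappa is implemented directly so the example has no dependencies.

```python
def quadratic_weighted_kappa(a, b, min_rating, max_rating):
    """Quadratic weighted kappa (QWK) between two integer score lists."""
    n = max_rating - min_rating + 1
    observed = [[0] * n for _ in range(n)]
    for x, y in zip(a, b):
        observed[x - min_rating][y - min_rating] += 1
    total = len(a)
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n)) for j in range(n)]
    num = den = 0.0
    for i in range(n):
        for j in range(n):
            weight = (i - j) ** 2 / (n - 1) ** 2
            num += weight * observed[i][j]                    # observed disagreement
            den += weight * hist_a[i] * hist_b[j] / total     # chance disagreement
    return 1.0 - num / den if den else 1.0

def apply_deferral(auto, human, predicted_error, threshold):
    """Keep essays whose predicted error is at or below the threshold;
    everything else is deferred to human review."""
    kept = [(s, h) for s, h, e in zip(auto, human, predicted_error) if e <= threshold]
    return [s for s, _ in kept], [h for _, h in kept], len(auto) - len(kept)

# Hypothetical trait scores on a 1-4 scale.
auto_scores     = [1, 2, 3, 4, 2, 3, 1, 4]
human_scores    = [1, 2, 3, 4, 4, 1, 1, 4]
predicted_error = [0.1, 0.2, 0.1, 0.3, 1.8, 1.6, 0.2, 0.1]

qwk_all = quadratic_weighted_kappa(auto_scores, human_scores, 1, 4)
kept_auto, kept_human, n_deferred = apply_deferral(
    auto_scores, human_scores, predicted_error, threshold=1.0)
qwk_kept = quadratic_weighted_kappa(kept_auto, kept_human, 1, 4)
print(qwk_all, qwk_kept, n_deferred)
```

The threshold trades coverage against agreement: lowering it defers more essays to human raters and tends to raise QWK on the retained set, so it is normally set per trait against the deployment target.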

Visualization of Optimization Workflows

The following diagrams, generated using Graphviz DOT language, illustrate the logical flow of the key optimization protocols described above.

[Diagrams not reproduced: SAE-PSO Optimization; MFRM Validation; Trait-Level Uncertainty]

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Tools for Automated Scoring Optimization Research

| Item / Tool Name | Function / Application Note |
|---|---|
| VideoFreeze Software [11] | Widely used automated system for scoring rodent freezing behavior. Serves as a platform for studying context-dependent parameter optimization and divergence from manual scoring. |
| Med Associates Fear Conditioning System [11] | Standardized hardware (chambers, grids) for behavioral experiments, providing a controlled environment for testing automated scoring software. |
| Design of Experiment (DoE) Software | Statistical tool for optimizing complex bioprocess parameters (e.g., in bioreactors) [12]. Its principles are directly applicable to designing efficient experiments for scoring system parameter tuning. |
| Many-Facet Rasch Model (MFRM) Software [8] | (e.g., FACETS, jMetrik) Provides a robust statistical framework for decomposing scores into facets (essay ability, rater severity, trait difficulty), essential for validating automated raters against humans. |
| LightGBM [9] | A fast, distributed, high-performance gradient boosting framework. Ideal for building secondary "difficulty predictor" models due to its efficiency and handling of tabular data. |
| Pre-trained LLMs (e.g., ChatGPT, Claude) [8] | Serve as the core scoring engine in modern AES. Different models and prompting strategies (Zero/Few-Shot) are key variables in the optimization process. |
| Stacked Autoencoder (SAE) | A deep learning model used for unsupervised feature learning from high-dimensional data, such as pharmaceutical compounds [6]. |
| Particle Swarm Optimization (PSO) Libraries | Computational libraries that implement the PSO algorithm, enabling efficient hyperparameter search for models like SAEs [6]. The HSAPSO variant offers adaptive improvements. |
| Stratified Data Splits | A methodological "tool" to ensure training, validation, and holdout datasets maintain the same distribution of score classes, which is critical for realistic performance evaluation [9]. |
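
A stratified split of the kind listed in the last row can be sketched with the standard library alone. The example stratifies whole videos by experimental condition; the record layout, the condition labels, and the 60/20/20 proportions are assumptions for illustration. Splitting at the level of whole videos (rather than frames) is a deliberate choice here, since frames from one video in both training and test sets would leak information.

```python
import random
from collections import defaultdict

def stratified_split(items, key, fractions=(0.6, 0.2, 0.2), seed=0):
    """Split items into train/validation/test so that each stratum
    (as returned by `key`) is represented in the same proportions."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for item in items:
        groups[key(item)].append(item)

    train, val, test = [], [], []
    for label in sorted(groups):          # sorted for reproducible ordering
        members = groups[label][:]
        rng.shuffle(members)
        n_train = round(fractions[0] * len(members))
        n_val = round(fractions[1] * len(members))
        train += members[:n_train]
        val += members[n_train:n_train + n_val]
        test += members[n_train + n_val:]  # remainder is the holdout set
    return train, val, test

# Hypothetical dataset: 20 videos, half recorded with a tethered head-mount.
videos = [{"id": f"v{i:02d}", "condition": "tethered" if i % 2 else "untethered"}
          for i in range(20)]

train, val, test = stratified_split(videos, key=lambda v: v["condition"])
print(len(train), len(val), len(test))
```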

In the field of automated behavior assessment, the reliability of software outputs is highly dependent on the careful selection and tuning of operational parameters. Automated systems offer significant advantages in objectivity and throughput over manual human scoring, but these benefits can only be realized through rigorous parameter optimization [4]. This application note establishes a standardized framework for defining core parameters, establishing performance thresholds, and implementing robust optimization workflows. The protocols detailed herein are designed to support researchers in neuroscience and pharmacology who require consistent, reproducible behavioral assessment in contexts such as fear conditioning research and genetic studies where detecting subtle behavioral effects is paramount [4].

Core Definitions and Quantitative Standards

Fundamental Parameter Types

In automated behavior assessment systems, parameters are configurable values that control how the software interprets raw data. These typically fall into three primary categories:

- Detection Parameters: Govern the identification of behavioral events from sensor data

- Classification Parameters: Define boundaries between distinct behavioral categories

- Temporal Parameters: Control the time-based aggregation and segmentation of behavioral data

These parameters collectively form a configuration space that must be optimized for each specific experimental context and hardware setup.
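
One way to make this configuration space explicit is to record the three parameter categories in a small typed structure that can be saved alongside the results. Every field name and default value below is hypothetical and chosen only to illustrate the grouping; real software exposes its own parameter names.

```python
from dataclasses import dataclass, field, asdict

@dataclass(frozen=True)
class DetectionParams:
    motion_threshold: float = 18.0          # hypothetical value, arbitrary units
    min_contrast: float = 0.2               # hypothetical value

@dataclass(frozen=True)
class ClassificationParams:
    freezing_max_speed_cm_s: float = 0.5    # boundary between "freezing" and "moving"
    rearing_min_body_length_cm: float = 9.0

@dataclass(frozen=True)
class TemporalParams:
    min_bout_s: float = 1.0                 # shortest event counted as a bout
    smoothing_window_frames: int = 5

@dataclass(frozen=True)
class AssessmentConfig:
    context: str                            # experimental context the values were tuned for
    detection: DetectionParams = field(default_factory=DetectionParams)
    classification: ClassificationParams = field(default_factory=ClassificationParams)
    temporal: TemporalParams = field(default_factory=TemporalParams)

    def validate(self):
        """Reject values that cannot be meaningful before any video is processed."""
        if self.temporal.min_bout_s <= 0:
            raise ValueError("min_bout_s must be positive")
        if self.temporal.smoothing_window_frames < 1:
            raise ValueError("smoothing_window_frames must be at least 1")
        if self.classification.freezing_max_speed_cm_s < 0:
            raise ValueError("freezing_max_speed_cm_s cannot be negative")

config = AssessmentConfig(context="fear_conditioning_box_A",
                          temporal=TemporalParams(min_bout_s=2.0))
config.validate()
record = asdict(config)   # plain dict, ready to be written to a results log
```

Keeping one such record per context and hardware setup makes it straightforward to see which parameter set produced which scores.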

Threshold Definitions and Performance Metrics

Performance thresholds are predefined criteria that determine whether a parameter set produces acceptable results. These thresholds should be established prior to optimization and may include:

- Minimum Agreement Levels: Statistical measures of concordance with manual scoring

- Performance Stability: Consistency across multiple experimental sessions

- Sensitivity/Specificity Boundaries: Balanced accuracy in detecting target behaviors

Optimization Workflow Protocol

The following structured protocol provides a methodological approach to parameter optimization for automated behavior assessment systems.

Workflow Visualization

Figure 1. Parameter optimization workflow for automated behavior assessment. This iterative process ensures systematic refinement until performance thresholds are achieved.

Detailed Procedural Steps

Pre-Optimization Requirements

- Manual Scoring Dataset: Establish a ground truth dataset of 50-100 behavioral sessions scored by trained human observers

- Performance Baseline: Determine current system performance against manual scoring using Cohen's kappa or intraclass correlation coefficients

- Threshold Definition: Set minimum acceptable agreement levels based on experimental requirements (e.g., κ ≥ 0.7 for high-stakes studies)
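
The performance baseline can be computed directly from paired framewise or binwise labels. The sketch below implements Cohen's kappa for two label sequences and compares it with a predefined threshold; the label sequences are invented for the example, and 0.7 is simply the example threshold mentioned above.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two label sequences, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in set(count_a) | set(count_b)) / n ** 2
    if expected == 1.0:        # both raters used a single identical label throughout
        return 1.0
    return (observed - expected) / (1.0 - expected)

# Hypothetical labels (1 = freezing, 0 = not freezing) for ten scoring bins.
manual_scores   = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
software_scores = [1, 1, 0, 0, 1, 0, 0, 1, 0, 1]

kappa = cohens_kappa(manual_scores, software_scores)
meets_threshold = kappa >= 0.7
print(round(kappa, 3), meets_threshold)
```

In this toy case raw agreement is 80%, but kappa is about 0.6 because half of that agreement would be expected by chance, so the baseline falls short of the example threshold.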

Optimization Execution

- Initial Parameter Selection: Choose starting values based on published recommendations or pilot data

- Behavioral Assessment: Process the validation dataset using current parameters

- Performance Comparison: Calculate agreement metrics between software and manual scores

- Parameter Refinement: Employ selected optimization algorithm to adjust parameters

- Iteration: Repeat the Behavioral Assessment, Performance Comparison, and Parameter Refinement steps until performance thresholds are met or diminishing returns are observed
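
The execution loop, including both stopping conditions, can be sketched as a simple coordinate search. This is one possible refinement strategy chosen for brevity, not a recommendation of the source; `mock_agreement` is a hypothetical stand-in for "score the validation set with these parameters and compute agreement with manual scoring", and the parameter names, step sizes, and target are assumptions.

```python
def refine_parameters(start, steps, score_fn, target, min_gain=1e-3, max_rounds=50):
    """Adjust one parameter at a time, keeping any change that improves the score.
    Stops when the target is met, when a full round gains less than `min_gain`
    (diminishing returns), or after `max_rounds`."""
    params = dict(start)
    best = score_fn(params)
    history = [best]
    for _ in range(max_rounds):
        if best >= target:
            break
        round_start = best
        for name, step in steps.items():
            for delta in (step, -step):
                trial = dict(params)
                trial[name] = params[name] + delta
                score = score_fn(trial)
                if score > best:
                    params, best = trial, score
        history.append(best)
        if best < target and best - round_start < min_gain:
            return params, best, history, "diminishing returns"
    reason = "threshold met" if best >= target else "max_rounds reached"
    return params, best, history, reason

def mock_agreement(params):
    """Hypothetical agreement surface peaking at threshold 0.5 cm/s, bout 1.0 s."""
    return (0.9
            - 0.4 * (params["velocity_threshold"] - 0.5) ** 2
            - 0.1 * (params["min_bout_s"] - 1.0) ** 2)

final_params, final_score, history, reason = refine_parameters(
    start={"velocity_threshold": 1.5, "min_bout_s": 2.0},
    steps={"velocity_threshold": 0.25, "min_bout_s": 0.25},
    score_fn=mock_agreement,
    target=0.85,
)
print(final_params, round(final_score, 4), reason)
```

Recording `history` alongside the final values gives the optimization trace that the documentation step asks for.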

Validation and Implementation

- Cross-Validation: Test optimized parameters on held-out behavioral data

- Robustness Testing: Verify performance across different subjects, timepoints, and conditions

- Documentation: Record final parameters, optimization history, and validation results

Optimization Algorithms and Performance

Algorithm Comparison and Selection Criteria

Different optimization approaches offer distinct advantages depending on the parameter space complexity and available computational resources.

Figure 2. Optimization algorithm categories with respective advantages and limitations.

Quantitative Performance Comparison

Table 1. Optimization algorithm performance characteristics for behavior assessment parameter tuning.

| Algorithm Type | Convergence Speed | Global Optimum Probability | Implementation Complexity | Ideal Use Case |
|---|---|---|---|---|
| Local Search | Fast | Low | Low | Fine-tuning with good initial values |
| Particle Swarm | Moderate | High | Moderate | Complex parameter spaces |
| Grid Search | Very Slow | High | Low | Small parameter sets |
| Bayesian Optimization | Moderate-High | High | High | Limited evaluation budgets |

Advanced Methodological Approaches

Hybrid Optimization Framework

For challenging optimization scenarios, a hybrid approach combining particle swarm optimization (PSO) with local search algorithms (LSA) has demonstrated superior performance in overcoming local optima while maintaining computational efficiency [13]. This method is particularly valuable when initial parameter values are unknown or when dealing with complex, non-convex parameter spaces.

The PSO-LSA hybrid protocol:

- Exploration Phase: Use PSO to identify promising regions of the parameter space

- Exploitation Phase: Apply LSA to refine solutions within these regions

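- Validation: Check that the solution is unlikely to be a local optimum, for example by repeating the search from several different initializations and confirming that the runs converge to the same region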
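
A compact version of the two-phase idea is sketched below: a basic particle swarm explores a deliberately bumpy objective, and a coordinate search with step halving then refines the swarm's best point. This is an illustration of the general exploration-then-exploitation pattern under stated assumptions, not the specific PSO-LSA method of [13]; `bumpy_agreement`, the bounds, and all tuning constants are hypothetical.

```python
import math
import random

def pso_explore(f, bounds, n_particles=15, n_iter=40, seed=1, w=0.7, c1=1.5, c2=1.5):
    """Exploration phase: basic PSO with fixed inertia, maximizing f."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * len(bounds) for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pfit = [f(p) for p in pos]
    g = max(range(n_particles), key=pfit.__getitem__)
    gbest, gfit = pbest[g][:], pfit[g]
    for _ in range(n_iter):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                vel[i][d] = (w * vel[i][d]
                             + c1 * rng.random() * (pbest[i][d] - pos[i][d])
                             + c2 * rng.random() * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            s = f(pos[i])
            if s > pfit[i]:
                pbest[i], pfit[i] = pos[i][:], s
                if s > gfit:
                    gbest, gfit = pos[i][:], s
    return gbest, gfit

def local_search(f, x, bounds, step=0.1, tol=1e-4):
    """Exploitation phase: coordinate search, halving the step when stuck."""
    x, best = x[:], f(x)
    while step > tol:
        improved = False
        for d, (lo, hi) in enumerate(bounds):
            for delta in (step, -step):
                trial = x[:]
                trial[d] = min(hi, max(lo, x[d] + delta))
                s = f(trial)
                if s > best:
                    x, best, improved = trial, s, True
        if not improved:
            step /= 2.0
    return x, best

def bumpy_agreement(p):
    """Hypothetical multimodal objective: a broad peak with local ripples."""
    x, y = p
    return -((x - 1.0) ** 2 + (y + 0.5) ** 2) + 0.3 * math.cos(5 * x) * math.cos(5 * y)

bounds = [(-3.0, 3.0), (-3.0, 3.0)]
coarse_point, coarse_score = pso_explore(bumpy_agreement, bounds)
fine_point, fine_score = local_search(bumpy_agreement, coarse_point, bounds)
print(coarse_score, fine_score)
```

Rerunning `pso_explore` with different `seed` values and comparing the refined points is a cheap way to carry out the multi-start check on the final solution.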

Special Considerations for Behavioral Research

Specific challenges in behavioral neuroscience require specialized optimization approaches:

- Subtle Behavioral Effects: Genetic and generalization studies often produce small effect sizes that demand highly sensitive parameter tuning [4]

- Individual Variability: Parameters may require adjustment across different subjects or strains

- Temporal Dynamics: Behavioral baselines and responses can shift across experimental sessions

The Scientist's Toolkit: Essential Research Materials

Table 2. Key research reagents and computational tools for automated behavior assessment optimization.

| Item | Function | Application Notes |
|---|---|---|
| VideoFreeze Software | Automated assessment of conditioned freezing behavior | Requires parameter calibration for each lab environment [4] |
| Manual Scoring Dataset | Ground truth for optimization and validation | Should include diverse behaviors and multiple expert scorers |
| Statistical Analysis Package | Compute agreement metrics (e.g., Cohen's kappa, ICC) | R or Python with specialized reliability libraries |
| Parameter Optimization Framework | Implement and compare optimization algorithms | Custom code or platforms like MATLAB Optimization Toolbox |
| High-Quality Video Recording System | Capture behavioral data for analysis | Consistent lighting and positioning critical for reliability |

Troubleshooting and Quality Control

Common Optimization Challenges

- Local Optima Trapping: Solution: Implement hybrid approaches or multiple restarts

- Overfitting: Solution: Use cross-validation and independent test datasets

- Parameter Interdependence: Solution: Employ algorithms that handle correlated parameters

Quality Assurance Protocol

- Regular Recalibration: Schedule parameter re-optimization when introducing new equipment or animal strains

- Performance Monitoring: Continuously track agreement between automated and manual scoring

- Documentation Standards: Maintain detailed records of all optimization procedures and results
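
Performance monitoring can be as simple as tracking agreement on periodically re-scored sessions and flagging a sustained drop. The sketch below uses a rolling mean of per-session kappa values; the window length, the 0.7 threshold, and the example values are assumptions, and a single low session is intentionally not enough to trigger the flag.

```python
def flag_recalibration(session_kappas, threshold=0.7, window=3):
    """Return the index of the first session at which the rolling mean of the
    last `window` kappa values falls below `threshold`, or None if it never does."""
    for end in range(window, len(session_kappas) + 1):
        recent = session_kappas[end - window:end]
        if sum(recent) / window < threshold:
            return end - 1
    return None

# Hypothetical agreement values from spot-checked sessions over time.
drifting = [0.82, 0.80, 0.79, 0.74, 0.66, 0.61]
stable = [0.82, 0.80, 0.81, 0.79, 0.83, 0.80]

drift_index = flag_recalibration(drifting)
stable_index = flag_recalibration(stable)
print(drift_index, stable_index)
```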

Systematic parameter optimization is not merely a technical prerequisite but a fundamental methodological component of reliable automated behavior assessment. The frameworks and protocols presented herein provide researchers with standardized approaches for establishing robust, validated parameters that ensure scientific rigor and reproducibility. As automated behavioral analysis continues to evolve, these optimization workflows will remain essential for generating trustworthy, publication-quality data in neuroscience and pharmacological research.

The field of automated behavior assessment, particularly within pharmaceutical research and preclinical studies, is undergoing a profound transformation. This shift is characterized by a transition from reliance on classic commercial software packages to the adoption of flexible, powerful deep learning platforms. This evolution represents more than a mere change in tools—it constitutes a fundamental reimagining of how researchers approach parameter optimization to extract meaningful, quantitative insights from complex behavioral data.

The limitations of traditional software often include closed architectures, fixed analytical pipelines, and predefined parameters that constrain scientific inquiry. In contrast, modern deep learning frameworks offer open, customizable environments where researchers can design, train, and validate bespoke models tailored to specific research questions. This expanded toolbox enables unprecedented precision in measuring subtle behavioral phenotypes, accelerating the development of more effective and targeted therapeutics [14]. The integration of these artificial intelligence technologies represents not just a technological advancement but a paradigm shift toward intelligent, data-driven models capable of improving therapeutic outcomes while reducing development costs [14].

The Evolution: From Commercial Packages to Deep Learning Frameworks

The historical progression in computational tools for behavioral analysis reveals a clear trajectory toward greater flexibility, power, and precision. Classic commercial software packages provided valuable standardized assays but often operated as "black boxes" with limited transparency into their underlying algorithms and parameter optimization processes. These systems typically offered fixed feature extraction methods and predetermined analytical pathways that constrained innovation and adaptation to novel research questions.

The advent of machine learning introduced greater adaptability, but the recent rise of deep learning frameworks has fundamentally transformed the landscape. These platforms provide researchers with complete control over the entire analytical pipeline, from raw data preprocessing to complex model architecture design and optimization. This shift has been particularly transformative for behavior analysis, where subtle, high-dimensional patterns often elude predefined algorithms [15]. Deep learning excels at automatically learning relevant features directly from raw data, identifying complex nonlinear relationships that traditional methods might miss [16] [17].

This evolution has been driven by several key factors: the exponential growth in computational power, the availability of large-scale behavioral datasets for training, and the development of more accessible programming interfaces that lower the barrier to entry for researchers without extensive computer science backgrounds. The resulting ecosystem empowers scientists to build specialized models that can detect nuanced behavioral signatures with human-level accuracy or greater, while providing the transparency and customization necessary for rigorous scientific validation [18].

The Deep Learning Framework Landscape

The current ecosystem of deep learning frameworks offers researchers a diverse range of tools, each with distinct strengths, architectures, and optimization capabilities. Understanding the characteristics of these platforms is essential for selecting the appropriate foundation for automated behavior assessment systems.

Table 1: Comparison of Major Deep Learning Frameworks for Behavioral Research

| Framework | Primary Language | Key Strengths | Optimization Features | Ideal Use Cases in Behavior Analysis |

|---|---|---|---|---|

| TensorFlow | Python, C++ | Production-ready deployment, Excellent visualization with TensorBoard [15] | Distributed training across GPUs/TPUs [15] | Large-scale video analysis, Multi-animal tracking |

| PyTorch | Python | Dynamic computational graphs, Pythonic syntax [15] | Rapid prototyping, Strong GPU acceleration [15] | Research prototyping, Novel behavior detection |

| Keras | Python | User-friendly API, Fast experimentation [15] | Multi-GPU support, Multiple backend support [15] | Rapid model iteration, Transfer learning |

| Deeplearning4j | Java, Scala | JVM ecosystem integration, Hadoop/Spark support [15] | Distributed training on CPUs/GPUs [15] | Enterprise-scale data processing, Integration with existing Java systems |

| Microsoft CNTK | Python, C++ | Efficient multi-machine scaling [15] | Optimized for multiple servers [15] | Large-scale distributed training, Speech recognition |

This diverse toolbox enables researchers to select platforms based on their specific requirements for scalability, development speed, deployment environment, and analytical complexity. The frameworks share common capabilities for automating feature discovery from raw input data—a crucial advantage for behavior analysis where manually engineering features for complex behaviors like social interactions or subtle gait abnormalities proves challenging [16].

Experimental Protocols for Deep Learning-Enabled Behavior Analysis

Implementing deep learning approaches for automated behavior assessment requires carefully designed experimental protocols that ensure scientific rigor while leveraging the unique capabilities of these platforms. The following sections provide detailed methodologies for key applications in pharmaceutical research.

Protocol: Implementation of Sequential Behavior Classification Using LSTM Networks

Objective: To classify temporal sequences of behavior in video recordings of animal models, enabling quantitative assessment of behavioral states and transitions relevant to drug efficacy studies.

Materials and Reagents:

- Video Recording System: High-resolution cameras with appropriate frame rates (≥30fps) and lighting conditions sufficient for automated tracking [18]

- Computing Hardware: GPU-accelerated workstations (NVIDIA RTX series or equivalent) with sufficient VRAM (≥8GB) for deep learning model training

- Deep Learning Framework: TensorFlow with Keras API for model implementation [15]

- Data Annotation Tool: Custom, open-source, or commercial software for generating ground truth labels (e.g., the open-source tools BORIS and DeepLabCut)

Procedure:

- Data Acquisition and Preprocessing:

  - Record video sessions under consistent lighting and environmental conditions

  - Extract frames and normalize pixel values to the [0, 1] range

  - Apply data augmentation techniques (rotation, translation, brightness adjustment) to improve model generalization

- Model Architecture Design:

  - Implement a sequential model combining convolutional and recurrent layers:

    - Feature Extraction: 2D convolutional layers with an increasing number of filters (32, 64, 128) and 3×3 kernels

    - Temporal Modeling: Bidirectional LSTM layers with 128 units to capture temporal dependencies in both forward and backward directions

    - Classification: Fully connected layers with softmax activation for behavior state probability output

- Training Configuration:

  - Loss Function: Categorical cross-entropy for multi-class behavior classification

  - Optimizer: Adam with learning rate 0.001 and decay rate 0.9

  - Validation: 80/20 training/validation split with stratified sampling to maintain behavior class distributions

- Model Evaluation:

  - Assess performance using precision, recall, and F1-score for each behavior class

  - Implement confusion matrix analysis to identify specific misclassification patterns

  - Calculate inter-rater reliability (e.g., Cohen's kappa) between model predictions and human expert annotations
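
The evaluation step can be sketched in plain Python. The example below builds a confusion matrix and derives per-class precision, recall, and F1 from it. The behavior labels and the two label sequences are invented for illustration; in practice `y_true` would hold human annotations and `y_pred` the model's output for the same frames or clips.

```python
from collections import Counter

def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]

def per_class_metrics(y_true, y_pred, labels):
    """Precision, recall, and F1 for each class, computed from the matrix."""
    matrix = confusion_matrix(y_true, y_pred, labels)
    metrics = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fp = sum(row[i] for row in matrix) - tp   # predicted as label, but wrong
        fn = sum(matrix[i]) - tp                  # true label, but missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics

# Hypothetical annotations for eight clips.
labels = ["freeze", "groom", "walk"]
y_true = ["freeze", "freeze", "freeze", "groom", "groom", "walk", "walk", "walk"]
y_pred = ["freeze", "freeze", "groom", "groom", "walk", "walk", "walk", "freeze"]

matrix = confusion_matrix(y_true, y_pred, labels)
metrics = per_class_metrics(y_true, y_pred, labels)
for label in labels:
    print(label, {name: round(value, 2) for name, value in metrics[label].items()})
```

Reading the matrix row by row shows which behaviors are confused with which; here one "freeze" clip is scored as "groom" and one "walk" clip as "freeze".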

This approach enables the capture of complex temporal patterns in behavior that traditional threshold-based methods cannot detect, providing more nuanced assessment of drug effects on behavioral sequences and transitions [18].

Protocol: 3D Pose Estimation for Quantitative Gait Analysis

Objective: To implement markerless 3D pose estimation for quantitative assessment of motor function and gait parameters in neurodegenerative disease models.

Materials and Reagents:

- Multi-view Camera System: Synchronized cameras (≥3) positioned at different angles to enable 3D reconstruction

- Calibration Target: Custom or commercial calibration object for camera alignment and 3D coordinate system establishment

- Deep Learning Model: Pre-trained pose estimation framework (e.g., DeepLabCut, SLEAP) with custom fine-tuning

- Analysis Software: Custom Python scripts for extracting kinematic parameters from 3D keypoint data

Procedure:

- System Calibration:

  - Record calibration object from multiple camera views

  - Perform camera calibration to obtain intrinsic and extrinsic parameters

  - Compute stereo rectification parameters for 3D reconstruction

- Data Preparation and Annotation:

  - Collect multi-view video recordings of subjects in appropriate testing environments

  - Manually annotate body parts (limbs, joints, etc.) across a representative frame subset

  - Generate training dataset with consistent labeling across all camera views

- Model Training and Optimization:

  - Employ transfer learning using pre-trained ResNet-50 or EfficientNet backbone

  - Fine-tune network on labeled behavior data with progressive resizing

  - Implement consistency loss across multiple views to improve 3D accuracy

- 3D Reconstruction and Analysis:

  - Triangulate 2D predictions from multiple views to obtain 3D coordinates

  - Apply temporal filtering to smooth trajectories and reduce jitter

  - Extract gait parameters (stride length, velocity, stance/swing phase timing) from 3D joint trajectories
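
The final analysis step can be sketched once 3D keypoints are available. The example assumes the triangulated coordinates are plain `(x, y, z)` tuples in consistent units and that foot-strike events have already been detected. It uses a centered moving average as one simple choice of temporal filter; the protocol does not prescribe a specific filter, and all function names are illustrative.

```python
import math

def moving_average(values, window=3):
    """Centered moving average; samples near the edges use a shorter window."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed

def stride_lengths(foot_strikes):
    """Distance between consecutive ground contacts of the same paw."""
    return [math.dist(a, b) for a, b in zip(foot_strikes, foot_strikes[1:])]

def mean_velocity(positions, fps):
    """Path length of a keypoint divided by elapsed time in seconds."""
    path = sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))
    duration = (len(positions) - 1) / fps
    return path / duration

# Hypothetical data: a jittery coordinate trace and three foot strikes (cm).
jittery_x = [0, 3, 0, 3, 0]
strikes = [(0, 0, 0), (3, 4, 0), (6, 8, 0)]
body_centre = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]

smoothed_x = moving_average(jittery_x, window=3)
strides = stride_lengths(strikes)
velocity = mean_velocity(body_centre, fps=2)
print(smoothed_x, strides, velocity)
```

The filter window is itself a tunable parameter: a wider window suppresses more jitter but also blurs fast limb movements, so it should be validated against the gait events of interest.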

This markerless approach enables more naturalistic assessment of motor function without the confounding effects of attached markers, providing higher-throughput and more objective quantification of therapeutic interventions for movement disorders [18].

Visualization: Workflow Architectures for Deep Learning-Enabled Behavior Analysis

The integration of deep learning into behavior analysis requires structured workflows that ensure reproducible and validated results. The following diagrams illustrate key experimental and computational pipelines.

[Figure: Deep Learning Behavior Analysis Workflow]

[Figure: Neural Network Architecture for Behavior Classification]

The Scientist's Toolkit: Essential Research Reagents and Materials

Successful implementation of deep learning approaches for automated behavior assessment requires both computational resources and specialized experimental materials. The following table details essential components of the modern behavioral neuroscience toolkit.

Table 2: Essential Research Reagents and Materials for Deep Learning-Enabled Behavior Analysis

| Item | Specifications | Function/Role in Research |

|---|---|---|

| GPU-Accelerated Workstations | NVIDIA RTX 4090 (24GB VRAM) or A100 (40GB VRAM) | Enables rapid training of complex deep learning models on large video datasets [15] |

| Multi-camera Behavioral Recording Systems | Synchronized high-speed cameras (≥4MP, ≥60fps) with IR capability | Captures comprehensive behavioral data from multiple angles for 3D reconstruction |

| Deep Learning Frameworks | TensorFlow, PyTorch, or Keras with specialized behavior analysis extensions | Provides building blocks for designing, training, and validating custom neural networks [15] |

| Data Annotation Platforms | BORIS, DeepLabCut, or custom web-based annotation tools | Generates ground truth labels for supervised learning approaches |

| Behavioral Testing Apparatus | Standardized mazes, open fields, and operant chambers with consistent lighting | Provides controlled environments for reproducible behavioral data collection |

The expansion from classic commercial software to deep learning platforms represents more than a technological upgrade—it constitutes a fundamental shift in how researchers approach quantitative behavior assessment. This transition enables unprecedented precision in measuring subtle behavioral phenotypes, accelerating the development of more effective therapeutics for neurological and psychiatric disorders. The parameter optimization capabilities of these platforms allow researchers to move beyond predefined analytical pathways toward customized, validated solutions for specific research questions.

As these technologies continue to evolve, we anticipate further integration with other emerging technologies such as the Internet of Medical Things (IoMT) for real-time monitoring [14], advanced visualization tools for model interpretability, and federated learning approaches that enable collaborative model development while preserving data privacy. The expanding toolbox empowers researchers to ask more complex questions about behavior and its modification by pharmacological interventions, ultimately advancing both basic neuroscience and drug development. By embracing these powerful new platforms while maintaining rigorous validation standards, the research community can unlock deeper insights into the complex relationship between neural function and behavior.

Implementing Optimization Techniques: From Autotuning to Deep Learning

Leveraging Autotuning Frameworks for Static and Dynamic Parameter Adjustment

Autotuning represents a transformative methodology for automating the optimization of internal software parameters, enabling systems to self-adapt to specific execution environments, datasets, and operational requirements [19]. In the specialized field of automated behavior assessment, where consistent and accurate measurement of subtle behavioral phenotypes is critical for both basic research and drug development, autotuning moves beyond traditional trial-and-error parameter adjustment to provide systematic, data-driven optimization [20] [11]. This approach is particularly valuable when assessing genetically modified models or detecting nuanced behavioral changes in response to pharmacological interventions, where measurement precision directly impacts experimental validity and translational potential [11].

The fundamental architecture of autotuning systems typically comprises four interconnected components: expectations (defining how the system should perform under specific conditions), measurement (gathering behavioral data), analysis (determining whether expectations are met), and actions (dynamically reconfiguring parameters) [21]. This framework supports two primary operational modes: static (offline) autotuning, which occurs at compile-time using heuristics and pre-collected profiling data, and dynamic (online) autotuning, which leverages runtime profiling and adaptive models to adjust parameters during program execution [19]. The choice between these approaches involves careful consideration of the trade-offs between optimization completeness and computational overhead, with hybrid models increasingly emerging to balance these competing demands [19].
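
The four components can be mapped onto a small control loop. The sketch below is a toy illustration under stated assumptions: `measure` is a hypothetical function returning an agreement score for a given parameter value, the expectation is a minimum acceptable score, and the action is a simple move to the better-scoring neighbouring value. It is not the design of any particular autotuning framework.

```python
def autotune(measure, value, expectation, step=5.0, max_rounds=20):
    """Adjust `value` until measure(value) meets the expectation."""
    history = []
    score = measure(value)                      # measurement
    for _ in range(max_rounds):
        history.append((value, score))
        if score >= expectation:                # analysis: expectation met?
            break
        # action: try both neighbouring settings and move to the better one
        neighbour = max((value - step, value + step), key=measure)
        neighbour_score = measure(neighbour)
        if neighbour_score > score:
            value, score = neighbour, neighbour_score
        else:
            step /= 2                           # no gain: search more finely
    return value, score, history

def toy_agreement(threshold):
    """Hypothetical agreement with manual scoring, best at threshold 50."""
    return max(0.0, 1 - abs(threshold - 50) / 40)

threshold, score, history = autotune(toy_agreement, value=20, expectation=0.6)
print(threshold, score, history)
```

Run offline against pre-collected recordings, this loop corresponds to static autotuning; calling the same loop periodically during an ongoing session, with `measure` fed by fresh data, would correspond to the dynamic mode.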

Core Autotuning Concepts and Techniques

Classification of Autotuning Approaches

Table 1: Classification of Autotuning Approaches for Behavior Assessment

| Approach | Execution Timing | Key Characteristics | Best-Suited Applications |

|---|---|---|---|

| Static Autotuning | Compile-time/Before execution | Uses heuristics, compiler analysis, and historical profiling data; generates multiple code versions; minimal runtime overhead [19] | Batch processing of stable behavioral datasets; environments with consistent hardware; standardized behavioral paradigms [19] |

| Dynamic Autotuning | Runtime | Leverages real-time profiling and model refinement; enables sophisticated adaptivity schemes; incurs runtime overhead [19] | Real-time behavior analysis; changing environmental conditions; adaptive experimental designs; unpredictable behavioral responses [19] [22] |

| Hybrid Autotuning | Both compile-time and runtime | Combines static analysis with dynamic refinement; uses offline models updated with online data [19] | Long-term behavioral monitoring; studies requiring both stability and adaptability; resource-constrained environments [19] |

Search and Optimization Strategies

The parameter optimization process employs diverse strategies to navigate complex configuration spaces. Exhaustive search methods guarantee optimal results by evaluating all possible design points but incur significant computational costs that often prove prohibitive for complex behavioral assessment systems [19]. Sequentially decoupled search strategies reduce the number of evaluations but may converge on local rather than global optima [19].

Heuristic methods and search space pruning techniques address the challenge of exponentially large parameter spaces, with evolutionary algorithms (such as genetic algorithms) generating solutions through processes mimicking natural selection and evolution [19]. These metaheuristics have parameters of their own that require careful tuning, and their results need thorough validation to prevent overfitting to specific behavioral patterns or experimental conditions [19].
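
A genetic algorithm of the kind described here can be sketched in a few lines. The example below is a minimal illustration that assumes two real-valued parameters (a motion threshold and a minimum duration) and a made-up fitness function, `toy_fitness`, in place of a real agreement measure. Population size, number of generations, and mutation width are arbitrary illustrative settings, and they are exactly the kind of meta-parameters that would need tuning in practice.

```python
import random

def evolve(fitness, bounds, pop_size=20, generations=60, sigma=2.0, seed=0):
    """Minimal genetic algorithm: truncation selection, uniform crossover,
    Gaussian mutation. The fitter half survives unchanged each generation."""
    rng = random.Random(seed)
    population = [tuple(rng.uniform(lo, hi) for lo, hi in bounds)
                  for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]        # selection
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = tuple(
                # crossover: take each gene from one parent, then mutate and clip
                min(hi, max(lo, rng.choice(pair) + rng.gauss(0, sigma)))
                for pair, (lo, hi) in zip(zip(a, b), bounds)
            )
            children.append(child)
        population = parents + children
    return max(population, key=fitness)

def toy_fitness(params):
    """Hypothetical fitness, highest at threshold 50 and duration 30."""
    threshold, duration = params
    return -((threshold - 50) ** 2 + (duration - 30) ** 2) / 100

best = evolve(toy_fitness, bounds=[(0, 100), (0, 60)])
print(best, toy_fitness(best))
```

Because the fitness is evaluated on whatever data it is given, the selected parameters should be re-scored on recordings that were not used during the search.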

Machine learning-based approaches incrementally build performance models using offline static analysis and profiling, with continuous refinement possible through runtime data integration [19]. More recently, Bayesian optimization has emerged as a powerful technique for navigating expensive-to-evaluate hyperparameter spaces, using surrogate models (Gaussian processes or random forests) with acquisition functions to guide the search toward optimal configurations [19] [23]. Reinforcement learning frameworks further extend this capability by enabling systems to learn tuning policies through direct interaction with the behavioral assessment environment [22].

Quantitative Comparison of Autotuning Performance

Performance Metrics Across Applications

Table 2: Performance Metrics of Autotuning Frameworks Across Domains

| Application Domain | Framework/Tool | Key Parameters Tuned | Performance Improvement | Quantitative Results |

|---|---|---|---|---|

| High-Performance Computing | Intel Autotuning Tools | Loop blocking sizes, domain decomposition, prefetching flags [19] | Execution time reduction, energy efficiency [19] | Up to 6x improvement on Intel Xeon E5-2697 v2 processors; nearly 30x on Intel Xeon Phi coprocessors [19] |

| Machine Learning (SVM) | Mixed-Kernel SVM Autotuner | Regularization (C), coef0, kernel parameters [23] | Classification accuracy [23] | Accuracy increased to 94.6% for HEP applications; 97.2% for heterojunction transistors [23] |

| Behavioral Neuroscience | VideoFreeze | Motion index threshold, minimum freeze duration [20] [11] | Agreement with manual scoring (Cohen's kappa) [20] [11] | Poor agreement in context A (κ=0.05) vs substantial agreement in context B (κ=0.71) with identical settings [11] |

| Dynamic ML Training | LiveTune | Learning rate, momentum, regularization, batch size [22] | Time and energy savings during hyperparameter changes [22] | Savings of 60 seconds and 5.4 kJ per hyperparameter change; 5x improvement over baseline [22] |

| Compiler Optimization | ML-based Compiler Autotuning | Optimization sequences, phase ordering [19] | Execution time, energy consumption [19] | Standard loop optimizations can reduce energy consumption by up to 40% [19] |

Experimental Protocols for Autotuning in Behavior Assessment

Protocol 1: Static Autotuning for Rodent Freezing Behavior Analysis

Objective: To establish optimized static parameters for automated freezing detection across varying experimental contexts using offline calibration [20] [11].

Materials and Equipment:

- Video recording system with consistent lighting conditions

- Rodent housing and testing apparatus (e.g., Med Associates fear conditioning chamber)

- VideoFreeze software or equivalent automated behavior assessment platform

- Validation dataset with manually scored behavioral annotations [20] [11]

Procedure:

- Initial Parameter Setup: Begin with literature-derived baseline parameters (motion threshold = 50 for rats; minimum freeze duration = 30 frames, i.e., 1 second at 30 fps) [11].

- Calibration Data Collection: Record video sequences across all experimental contexts (e.g., Context A with standard grid floor and black triangular insert; Context B with staggered grid floor and white curved back wall) [11].

- Manual Annotation: Have multiple trained observers manually score freezing behavior (defined as "absence of movement of the body and whiskers with the exception of respiratory motion") with continuous measurement using stopwatches from video recordings [11].

- Inter-rater Reliability Assessment: Calculate Cohen's kappa between observers to establish ground truth reliability (target: substantial agreement, κ > 0.6) [11].

- Parameter Space Exploration: Systematically vary motion threshold (±10 units) and minimum duration (±15 frames) while measuring agreement with manual scores.

- Performance Validation: Compare software scores with manual scores using correlation analysis and Cohen's kappa statistics across different experimental contexts [11].

- Context-Specific Optimization: Repeat process for each distinct experimental context, as optimal parameters may vary significantly between contexts despite identical software settings [11].
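
The parameter space exploration and validation steps above can be sketched as a small grid search. The detection rule used here (a frame counts as freezing when it lies in a run of sub-threshold frames at least as long as the minimum duration) is a simplified assumption for illustration and is not taken from the VideoFreeze implementation. The motion trace and the manual labels are synthetic.

```python
from itertools import product

def detect_freezing(motion, threshold, min_frames):
    """Mark frames inside runs of >= min_frames consecutive sub-threshold frames."""
    labels = [0] * len(motion)
    run_start = None
    for i, value in enumerate(motion + [float("inf")]):  # sentinel ends the last run
        if value < threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if i - run_start >= min_frames:
                labels[run_start:i] = [1] * (i - run_start)
            run_start = None
    return labels

def cohens_kappa(a, b):
    """Chance-corrected agreement between two label sequences."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    expected = sum((a.count(c) / n) * (b.count(c) / n) for c in set(a) | set(b))
    return 1.0 if expected == 1 else (observed - expected) / (1 - expected)

def grid_search(motion, manual, thresholds, durations):
    """Return (kappa, threshold, min_frames) for the best-agreeing combination."""
    return max((cohens_kappa(manual, detect_freezing(motion, t, d)), t, d)
               for t, d in product(thresholds, durations))

# Synthetic trace: true freezing (index 20), slow movement (45), a brief pause.
motion = [80] * 10 + [20] * 40 + [80] * 10 + [45] * 40 + [80] * 10 + [20] * 5 + [80] * 5
manual = [0] * 10 + [1] * 40 + [0] * 70

baseline = cohens_kappa(manual, detect_freezing(motion, 50, 30))
best = grid_search(motion, manual, thresholds=[40, 50, 60], durations=[15, 30, 45])
print(f"baseline kappa = {baseline:.2f}, best (kappa, threshold, frames) = {best}")
```

In this toy case the baseline threshold of 50 scores the slow-movement episode as freezing, while a threshold of 40 does not. When several settings tie, `max` returns the one with the largest threshold and duration, so a real workflow should state its tie-breaking rule explicitly and repeat the search separately for each context.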

Troubleshooting Notes:

- Poor agreement between software and manual scores in specific contexts may require camera recalibration or white balance adjustment [11].

- If context-dependent performance variance persists, consider implementing context-specific parameter sets rather than universal parameters [11].

- Validation should include assessment of both group-level differences and individual animal tracking accuracy [20].

Protocol 2: Dynamic Autotuning with LiveTune Framework

Objective: To implement real-time hyperparameter adjustment during machine learning model training for behavioral classification tasks [22].

Materials and Equipment:

- Computing system with LiveTune API installed

- Behavioral dataset with annotated examples

- Target machine learning model (e.g., neural network, reinforcement learning agent)

- Performance monitoring dashboard [22]

Procedure:

- LiveVariable Initialization: Replace standard hyperparameters with LiveVariables (tag, initial value, designated port) for learning rate, momentum, regularization coefficients, and batch size [22].

- Baseline Performance Establishment: Execute training process with initial hyperparameter values while monitoring loss curves and accuracy metrics.

- Real-Time Adjustment: Without stopping the process, dynamically adjust LiveVariables through designated ports based on observed training behavior [22].

- LiveTrigger Implementation: Embed boolean flags to activate or halt specific procedures (e.g., learning rate decay, early stopping) based on developer commands without program termination [22].

- Continuous Monitoring: Track time and energy consumption savings compared to traditional stop-restart tuning approaches.

- Reward Structure Adaptation: For reinforcement learning applications, modify reward dynamics in real-time based on agent learning progress [22].

- Performance Benchmarking: Compare final model accuracy and training time against fixed-parameter baselines and grid search approaches.

Validation Metrics:

- Quantitative time and energy savings per hyperparameter change (target: 60 seconds and 5.4 kJ savings based on LiveTune benchmarks) [22]

- Training stability and convergence rates

- Final model accuracy on held-out test datasets

- Resource utilization efficiency during tuning process [22]

Signaling Pathways and Workflow Architecture

[Figure: Autotuning System Architecture]

[Figure: Static vs Dynamic Autotuning Workflow]

Research Reagent Solutions for Behavioral Autotuning

Table 3: Essential Research Tools for Behavioral Analysis Autotuning

| Tool/Category | Specific Examples | Primary Function | Application Context |

|---|---|---|---|

| Open-Source Behavioral Analysis Software | DeepLabCut, Simple Behavioral Analysis (SimBA), JAABA [24] | Provides pose estimation, tracking, and behavior classification capabilities with modifiable algorithms | Rodent behavioral analysis with custom experimental paradigms; enables algorithm customization for specific research needs [24] |

| Autotuning Frameworks | LiveTune, fastText Autotune, ytopt [22] [25] [23] | Automated hyperparameter optimization for machine learning models and behavioral classification systems | Dynamic parameter adjustment during model training; optimization of classification thresholds for behavior detection [22] [25] |

| Commercial Behavioral Systems | Med Associates VideoFreeze, Noldus EthoVision [20] [11] | Standardized automated behavior assessment with validated parameters | High-throughput drug screening; standardized behavioral phenotyping with established validation protocols [20] [11] |

| Search Algorithm Libraries | Bayesian optimization, Genetic algorithms, Random search [19] [23] | Efficient navigation of complex parameter spaces to find optimal configurations | Optimization of multiple interdependent parameters in behavioral classification systems [19] [23] |

| Performance Monitoring Tools | Custom validation scripts, Inter-rater reliability assessment [11] | Quantifying agreement between automated and manual behavioral scoring | Validation of automated behavior assessment systems; establishing ground truth for tuning processes [11] |

Implementation Considerations for Behavioral Research

Successful implementation of autotuning frameworks in automated behavior assessment requires careful attention to several domain-specific challenges. The sensitivity of behavioral measurements to environmental factors necessitates robust validation across varying conditions, as identical parameter settings may yield significantly different performance across experimental contexts [11]. This is particularly critical when studying subtle behavioral effects, such as those in generalization research or genetic modification studies, where measurement precision directly impacts experimental conclusions [11].

The trade-offs between automation and accuracy must be carefully balanced, with systematic validation against manual scoring remaining essential even in highly automated workflows [20]. Researchers should implement continuous monitoring of system performance with a mechanism for manual override when automated scoring deviates from established benchmarks [11]. Furthermore, the computational costs of sophisticated autotuning approaches must be justified by the specific research context, with simpler heuristic-based methods sometimes providing sufficient accuracy for well-established behavioral paradigms with minimal computational overhead [24].

As behavioral neuroscience incorporates more complex machine learning approaches, autotuning frameworks will become essential for maintaining methodological rigor while exploiting the analytical power of modern computational methods. By implementing structured autotuning protocols and maintaining critical validation checkpoints, researchers can leverage the efficiency of automated parameter optimization while ensuring the reliability and interpretability of behavioral measurements.

In the field of behavioral neuroscience, the reliance on automated behavior assessment software has grown significantly due to its potential for increased objectivity and time-efficiency compared to manual human scoring [4]. The core challenge, however, lies in the parameter optimization for these software tools, which often requires careful fine-tuning through a trial-and-error process to achieve reliable results [4]. The efficacy of the entire research pipeline—from raw data collection to final, validated insights—is dependent on a robust workflow encompassing diligent data profiling, systematic search strategies, and rigorous data validation. This guide details the application notes and protocols for establishing such a workflow, specifically framed within research involving automated behavior assessment software.

Workflow Design and Best Practices

A well-defined workflow is the structural backbone of any successful parameter optimization project. It keeps processes reproducible and efficient, and it minimizes errors.

Foundational Workflow Principles

Effective workflow management brings clarity to daily activities and sets the stage for success at both individual and team levels, which results in greater collaboration, productivity, and engagement [26]. The following principles are crucial:

- Think Non-Linearly: Design workflows as non-linear processes rather than straight-line charts. This allows for going back to previous steps to correct mistakes or make improvements, offering necessary flexibility during iterative optimization [27].

- Visual Representation: During the building stage, visually represent the workflow using flowcharts or diagrams. This enhances understanding and communication among all team members, regardless of their technical expertise [27].

- Define Roles and Responsibilities: Clearly outline who is accountable for each task. This reduces conflict, increases accountability, and speeds up decision-making. Align roles with individual team members' skills and strengths [26].

- Address Bottlenecks Proactively: Actively manage workflows by identifying and fixing bottlenecks, such as a missed review cycle, which can cause major delays and decrease morale. Foster a culture of transparency where team members feel empowered to voice concerns [26].

Selecting a Workflow Model

Choosing the appropriate model is key to handling the specific nature of parameter optimization tasks. The two primary models are:

- Sequential Workflow Model: Best suited for shorter, less complicated processes where speed is critical. It moves from one step to the next in a predefined, linear order [27].

- State Machine Model: Ideal for tracking complex processes where an item or task can exist in multiple states. This model is particularly useful in contexts like agile software development or tracking the status of different optimization runs [27].
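
The state machine model can be sketched for the case of tracking optimization runs. The states and the allowed transitions below are hypothetical and would be adapted to a laboratory's own process; the point is that each run is always in exactly one state and can only move along explicitly permitted paths, including backward ones.

```python
from enum import Enum

class RunState(Enum):
    QUEUED = "queued"
    RUNNING = "running"
    VALIDATING = "validating"
    ACCEPTED = "accepted"
    REJECTED = "rejected"

# Permitted transitions; validation may send a run back for re-optimization.
ALLOWED = {
    RunState.QUEUED: {RunState.RUNNING},
    RunState.RUNNING: {RunState.VALIDATING, RunState.REJECTED},
    RunState.VALIDATING: {RunState.ACCEPTED, RunState.REJECTED, RunState.RUNNING},
    RunState.ACCEPTED: set(),
    RunState.REJECTED: {RunState.QUEUED},
}

class OptimizationRun:
    """Tracks one parameter optimization run and its state history."""

    def __init__(self, name):
        self.name = name
        self.state = RunState.QUEUED
        self.history = [RunState.QUEUED]

    def transition(self, new_state):
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                f"{self.name}: cannot go from {self.state.value} to {new_state.value}"
            )
        self.state = new_state
        self.history.append(new_state)

run = OptimizationRun("threshold-sweep-01")
for step in (RunState.RUNNING, RunState.VALIDATING, RunState.ACCEPTED):
    run.transition(step)
print([state.value for state in run.history])
```

The stored history doubles as an audit trail, which supports the documentation practices recommended elsewhere in this guide.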

Data Profiling and Quantitative Presentation

Before optimization can begin, a comprehensive understanding of the input data is essential. Data profiling techniques provide insights into the general health of your data, highlighting inconsistencies, errors, and missing instances [28].

Structuring Quantitative Data

For quantitative data generated from behavioral experiments, proper presentation is the first step toward analysis. Tabulation and visualization are fundamental.

Tabulation: A frequency table is the foundational step for organizing quantitative data. The table below outlines the principles for creating effective tables [29].

Table 1: Principles for Effective Tabulation of Quantitative Data

| Principle | Description |

|---|---|

| Numbering | Tables should be numbered (e.g., Table 1, Table 2). |

| Title | Each table must have a brief, self-explanatory title. |

| Headings | Column and row headings should be clear and concise. |

| Data Order | Data should be presented in a logical order (e.g., ascending/descending). |

| Unit Specification | The units of data (e.g., percent, milliseconds) must be mentioned. |

When dealing with a large number of data values, it is common to group data into class intervals [30]. The general rules are:

- Each interval should be equal in size.

- The number of classes should typically be between 5 and 20, depending on the data volume [30].

- To create intervals: 1) Calculate the range (Highest value - Lowest value). 2) Divide this range into the desired number of equal subranges [29].
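
The interval rules above translate directly into code. The sketch below computes the range, divides it into equal-width classes, and counts the observations in each. The latency values are invented, and the convention that the highest value falls into the last class is an implementation choice made for this example.

```python
def frequency_table(values, n_classes):
    """Group values into n_classes equal-width intervals.

    Returns a list of (lower_bound, upper_bound, count) tuples.
    """
    lowest, highest = min(values), max(values)
    if highest == lowest:
        raise ValueError("all values are identical; no range to divide")
    width = (highest - lowest) / n_classes           # range / number of classes
    counts = [0] * n_classes
    for value in values:
        # The highest value would index one past the end, so clamp it.
        index = min(int((value - lowest) / width), n_classes - 1)
        counts[index] += 1
    return [(lowest + i * width, lowest + (i + 1) * width, counts[i])
            for i in range(n_classes)]

# Hypothetical escape latencies in seconds.
latencies = [2, 4, 5, 7, 8, 9, 11, 12, 14, 15, 17, 22]
table = frequency_table(latencies, n_classes=5)
for lower, upper, count in table:
    print(f"{lower:5.1f} to {upper:5.1f} s : {count}")
```

The resulting counts are the column heights of the corresponding histogram, so the same table serves both tabulation and plotting.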

Visualizing Data Distributions

Graphical presentations convey the essence of statistical data quickly and with a striking visual impact [29]. For quantitative data from behavioral experiments, the following visualizations are critical:

- Histogram: A pictorial diagram of the frequency distribution. It consists of a series of rectangular, contiguous blocks, with the class intervals on the horizontal axis and frequency on the vertical axis. The area of each column represents the frequency [29] [30].

- Frequency Polygon: This is derived by joining the mid-points of the blocks in a histogram. It is particularly useful for comparing the frequency distributions of different datasets (e.g., control group vs. experimental group) on the same diagram [29] [30].

- Frequency Curve: When the number of observations is very large and the class intervals are made very narrow, the frequency polygon loses its angularity and becomes a smooth line known as a frequency curve [29].

- Line Diagram: Primarily used to demonstrate the time trend of an event (e.g., learning curve across trials) [29].

- Scatter Diagram: Used to visualize the correlation between two quantitative variables (e.g., the relationship between a specific behavioral parameter and a physiological measure) [29].

Data Validation Protocols

Data validation is a linchpin in quality assurance, guaranteeing the correctness, completeness, and reliability of datasets, which in turn drives accurate business insights and decision-making [28]. In a research context, it ensures the integrity of findings.

Essential Validation Practices

1. Define Clear Validation Rules: Establish unambiguous rules for what constitutes valid data. This includes field-level checks, consistent data types, permissible value ranges, and adherence to defined patterns [28].

2. Implement Automated Validation: Leverage software to automate repetitive validation checks. This increases productivity, reduces manual errors, and frees up researchers to focus on critical analysis and interpretation tasks [26] [28].

3. Conduct Regular Monitoring and Auditing: Data validation is not a one-time event. Continuous monitoring and systematic auditing are required to retain data accuracy, identify unusual patterns, and mitigate risks associated with erroneous information [28].

4. Leverage Statistical Analysis: Use statistical methods to validate data. Techniques like regression analysis or chi-square testing can help verify data consistency and identify discrepancies that rule-based checks might miss [28].
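As a rough illustration of practices 1 and 2, the sketch below encodes a few validation rules as a function that can be run automatically over every scored trial. The field names (`animal_id`, `freezing_s`), the session length, and the example records are all hypothetical; real rules would come from the study's own data dictionary.

```python
def validate_record(record, session_length_s):
    """Return a list of rule violations for one scored trial (empty list = valid)."""
    errors = []
    # Completeness rule: required fields must be present and non-empty.
    for field in ("animal_id", "freezing_s"):
        if record.get(field) in (None, ""):
            errors.append(f"missing {field}")
    freezing = record.get("freezing_s")
    if freezing not in (None, ""):
        # Type rule: freezing time must be a number (booleans are rejected).
        if isinstance(freezing, bool) or not isinstance(freezing, (int, float)):
            errors.append("freezing_s must be numeric")
        # Range rule: freezing time cannot be negative or exceed the session.
        elif not 0 <= freezing <= session_length_s:
            errors.append("freezing_s outside permissible range")
    return errors

# Hypothetical records for a 180-second session.
records = [
    {"animal_id": "M01", "freezing_s": 42.5},
    {"animal_id": "M02", "freezing_s": 190.0},
    {"animal_id": "", "freezing_s": None},
    {"animal_id": "M04", "freezing_s": "12"},
]
report = [validate_record(r, session_length_s=180) for r in records]
for rec, errs in zip(records, report):
    print(rec.get("animal_id") or "<no id>", "->", errs or "ok")
```

Returning a list of violations, rather than stopping at the first one, makes it easier to audit a whole dataset in a single pass.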

Addressing Common Validation Challenges

- Missing or Incomplete Data: Strategies like data imputation or the use of advanced machine learning algorithms can be deployed to manage this issue. Regular data auditing is crucial for prevention [28].

- Ambiguous or Inconsistent Data: Mitigate this by implementing a comprehensive data quality management policy with standards for every data input. Seek clarification at the source to prevent the propagation of errors [28].

- Data Security and Privacy: Protect sensitive experimental data during validation using authentication, encryption, and the principle of least privilege. Adherence to data handling regulations is paramount [28].

Experimental Protocol: Parameter Optimization for Behavioral Software

This protocol provides a detailed methodology for optimizing parameters in automated behavior assessment software, as referenced in foundational literature [4].

Objective

To systematically calibrate and optimize the critical parameters of automated behavior assessment software (e.g., VideoFreeze) to achieve a high level of agreement with manual human scoring, especially when dealing with subtle behavioral effects.

Pre-Experiment Requirements

- Ethical Approval: Secure all necessary institutional animal care and use committee (IACUC) or ethics board approvals.

- Behavioral Setup: Configure the experimental apparatus (e.g., fear conditioning chamber) and ensure the video recording system is properly calibrated for lighting, focus, and field of view.

- Manual Scoring Protocol: Establish a rigorous protocol for manual scoring by human observers, including operational definitions of the target behavior (e.g., "freezing") and inter-rater reliability standards.

Step-by-Step Procedure

- Pilot Data Collection: Conduct a small-scale experiment to collect a representative set of behavioral video data. This dataset should encompass the expected range of behavioral responses.

- Initial Parameter Setting: Define the key software parameters to be optimized (e.g., motion threshold, minimum bout duration, pixel change sensitivity). Set initial values based on software documentation or previous literature.

- Manual Scoring of Pilot Data: Have trained observers, blinded to the software's output, manually score the pilot data. This serves as the "ground truth" for optimization.

- Iterative Optimization Loop:

  a. Software Analysis: Run the automated software with the current parameter set on the pilot data.

  b. Comparison and Discrepancy Analysis: Compare the software's output against the manual scoring. Use statistical measures (e.g., Cohen's Kappa, Pearson correlation) to quantify agreement.

  c. Parameter Adjustment: Systematically adjust parameters based on the discrepancy analysis. For example, where freezing is scored whenever motion stays below the threshold (as in VideoFreeze) and the software underestimates freezing, the motion threshold may need to be raised or the minimum bout duration shortened.

  d. Re-run and Re-evaluate: Repeat steps a-c until the agreement metric meets a pre-defined success criterion (e.g., Kappa > 0.8).

- Validation Run: Using the optimized parameter set from the pilot data, analyze a new, independent validation dataset. Compare these results to manual scores of the same dataset to confirm the robustness and generalizability of the parameters.

- Documentation: Meticulously document the final optimized parameters, the entire optimization process, and the achieved agreement statistics.
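The iterative loop in this procedure can be approximated by an exhaustive grid search over candidate parameter values. The sketch below is a toy illustration under stated assumptions: `score_freezing` is a simplified stand-in that labels a frame as freezing when a per-frame motion value stays below a threshold for a minimum number of consecutive frames, and it is not the algorithm used by VideoFreeze or any other package. The motion trace, manual labels, and parameter grid are invented for the example.

```python
from itertools import product

def cohen_kappa(a, b):
    """Cohen's kappa for two equal-length binary (0/1) label sequences."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    p_a, p_b = sum(a) / n, sum(b) / n
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)  # chance agreement
    return 1.0 if expected == 1 else (observed - expected) / (1 - expected)

def score_freezing(motion, threshold, min_bout):
    """Toy scorer: freezing = motion below threshold for >= min_bout frames."""
    labels = [0] * len(motion)
    start = None
    # A sentinel value at the end closes any run still open at the last frame.
    for i, m in enumerate(list(motion) + [float("inf")]):
        if m < threshold:
            if start is None:
                start = i
        elif start is not None:
            if i - start >= min_bout:
                labels[start:i] = [1] * (i - start)
            start = None
    return labels

def grid_search(motion, manual, thresholds, min_bouts):
    """Return the (threshold, min_bout) pair with the highest kappa."""
    best_params, best_kappa = None, float("-inf")
    for threshold, min_bout in product(thresholds, min_bouts):
        kappa = cohen_kappa(score_freezing(motion, threshold, min_bout), manual)
        if kappa > best_kappa:
            best_params, best_kappa = (threshold, min_bout), kappa
    return best_params, best_kappa

# Hypothetical pilot data: per-frame motion values and blinded manual scores.
motion = [50, 12, 8, 9, 11, 60, 70, 14, 10, 9, 8, 55, 13, 65, 7, 6, 9, 10, 80, 75]
manual = [0, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1, 1, 0, 0]
best_params, best_kappa = grid_search(motion, manual, [5, 10, 15, 20], [1, 2, 3])
print(best_params, round(best_kappa, 3))
```

Because the parameters are selected to maximize agreement on the pilot data, the resulting kappa is optimistic; the independent validation run described in the procedure is what confirms that the chosen values generalize.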

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials and Tools for Automated Behavior Assessment Research

| Item/Tool | Function/Description |
|---|---|
| Automated Behavior Software | Software (e.g., VideoFreeze, EthoVision) for automated tracking and quantification of animal behavior [4]. |
| High-Quality Video Recording System | Provides the raw input data; requires consistent lighting and resolution for accurate software analysis. |
| Data Observability Platform | Provides visibility across data pipelines, helping to identify anomalies and ensuring data quality from collection to analysis [28]. |
| Statistical Analysis Software | Used for calculating agreement statistics (e.g., Kappa), data validation, and generating final results (e.g., R, Python with SciPy/StatsModels). |
| Workflow Automation Tool | Platforms (e.g., Nintex, Kissflow) can help streamline and document the multi-step parameter optimization process, ensuring reproducibility [31]. |
| Data Governance Platform | Facilitates accurate validation by establishing a framework for data standards and handling procedures across the research project [28]. |

Visualization Standards and Diagrammatic Representation

Clear visualizations of workflows and data relationships are essential for communication and reproducibility. The following standards must be adhered to.

Color Palette and Contrast Rules

The following color palette is mandated for all diagrams. To ensure accessibility, all foreground elements (text, arrows, symbols) must have sufficient contrast against their background. For any node containing text, the fontcolor attribute must be explicitly set to achieve high contrast against the node's fillcolor. The algorithm from the font-color-contrast JavaScript module can be used as a guide, where a background brightness over 50% requires black text and under 50% requires white text [32].

- Primary Palette: #4285F4 (Blue), #EA4335 (Red), #FBBC05 (Yellow), #34A853 (Green)

- Neutral Palette: #FFFFFF (White), #F1F3F4 (Light Gray), #5F6368 (Medium Gray), #202124 (Dark Gray)
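The 50% brightness rule can be sketched as a small helper that picks a fontcolor for any fill in the palette. This is an assumption-laden illustration: the weighted brightness formula below is the common 0.299/0.587/0.114 luma approximation and is not necessarily what the font-color-contrast module computes, and the helper names (`font_color_for`, `dot_node`) are hypothetical.

```python
def hex_to_rgb(color):
    """Convert '#RRGGBB' to an (r, g, b) tuple of integers in 0-255."""
    color = color.lstrip("#")
    return tuple(int(color[i:i + 2], 16) for i in (0, 2, 4))

def font_color_for(fill):
    """Black text on bright fills (brightness > 50%), white text otherwise."""
    r, g, b = hex_to_rgb(fill)
    # Assumed brightness estimate: weighted sum of channels, scaled to 0-1.
    brightness = (0.299 * r + 0.587 * g + 0.114 * b) / 255
    return "#000000" if brightness > 0.5 else "#FFFFFF"

def dot_node(name, label, fill):
    """Build one Graphviz node statement with an explicit fontcolor."""
    return (
        f'{name} [label="{label}", style=filled, '
        f'fillcolor="{fill}", fontcolor="{font_color_for(fill)}"];'
    )

palette = ["#4285F4", "#EA4335", "#FBBC05", "#34A853",
           "#FFFFFF", "#F1F3F4", "#5F6368", "#202124"]
for fill in palette:
    print(fill, "->", font_color_for(fill))
print(dot_node("adjust", "Adjust parameters", "#FBBC05"))
```

Generating the fontcolor attribute from the fill, instead of typing it by hand for each node, keeps diagrams consistent when the palette changes.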

Workflow and Logical Relationship Diagrams

The following Graphviz (DOT) script generates a flowchart that encapsulates the core parameter optimization protocol detailed in Section 5.

Diagram 1: Parameter Optimization Workflow

This diagram illustrates the non-linear, iterative nature of the optimization process, highlighting the critical feedback loop for parameter adjustment.

The following diagram provides a high-level overview of the entire data management and validation workflow, connecting data profiling to the final analytical output.

Diagram 2: Data Management Pipeline

Harnessing DeepLabCut (DLC) for Markerless Pose Estimation and Feature Extraction

DeepLabCut (DLC) is an open-source, deep learning-based software toolkit that enables markerless pose estimation of user-defined body parts across various species and behavioral contexts. By leveraging state-of-the-art human pose estimation algorithms and transfer learning, DLC allows researchers to train customized deep neural networks with limited training data (typically 50-200 frames) to achieve human-level labeling accuracy [33] [34]. This capability is transformative for experimental neuroscience, biomechanics, ethology, and drug development, where non-invasive behavioral tracking provides critical insights into neural mechanisms, disease progression, and treatment efficacy. Within the framework of parameter optimization for automated behavior assessment, DLC serves as a foundational technology that enables high-throughput, quantitative analysis of behavioral phenotypes with minimal experimenter bias, addressing a critical need for standardized behavioral quantification across laboratories [33] [35].

The versatility of the DeepLabCut framework has been demonstrated in numerous applications, from tracking mouse reaching and open-field behaviors to analyzing Drosophila egg-laying and even human movements. Its animal- and object-agnostic design means that any visible point of interest can be tracked, making it equally valuable for studying laboratory animals, wildlife, and human clinical populations [36] [34]. Furthermore, DLC supports both 2D and 3D pose estimation, with 3D reconstruction possible using either a single network and camera or multiple cameras with standard triangulation methods [33]. This flexibility makes it particularly valuable for comprehensive behavioral assessment in pharmaceutical research and development, where precise quantification of motor behaviors, social interactions, and stereotypic patterns can reveal subtle treatment effects that might be missed by conventional observational methods.

Core Technical Specifications and Performance Benchmarks

Architectural Foundations and Model Variants

DeepLabCut builds upon deep neural network architectures, initially adapting the feature detectors from DeeperCut, a state-of-the-art human pose estimation algorithm [36]. The framework has evolved significantly since its inception, incorporating various backbone architectures that offer different trade-offs between speed, accuracy, and computational requirements. Early versions primarily utilized ResNet architectures, but current implementations also support more efficient networks such as MobileNetV2 and EfficientNets, as well as DLCRNet, an architecture developed within the DeepLabCut project, giving users options tailored to their specific hardware constraints and accuracy requirements [36] [37].

The recent introduction of foundation models within the "SuperAnimal" series represents a significant advancement for parameter optimization in behavioral research. These pretrained models, including SuperAnimal-Quadruped (trained on over 40,000 images of various quadrupedal species) and SuperAnimal-TopViewMouse (trained on over 5,000 mice across diverse lab settings), enable researchers to perform pose estimation without any model training, dramatically reducing the initial setup time and computational resources required for behavioral analysis [36] [34]. For specialized applications requiring custom models, DLC's transfer learning approach fine-tunes these pretrained networks on user-specific labeled data, achieving robust performance with remarkably small training sets through its sophisticated data augmentation pipelines and optimization methods.

Quantitative Performance Metrics

Table 1: Performance Comparison of DeepLabCut 3.0 Pose Estimation Models

| Model Name | Type | mAP SA-Q on AP-10K | mAP SA-TVM on DLC-OpenField |
|---|---|---|---|
| top_down_resnet_50 | Top-Down | 54.9 | 93.5 |
| top_down_resnet_101 | Top-Down | 55.9 | 94.1 |
| top_down_hrnet_w32 | Top-Down | 52.5 | 92.4 |
| top_down_hrnet_w48 | Top-Down | 55.3 | 93.8 |
| rtmpose_s | Top-Down | 52.9 | 92.9 |
| rtmpose_m | Top-Down | 55.4 | 94.8 |
| rtmpose_x | Top-Down | 57.6 | 94.5 |

Note: mAP = mean Average Precision; SA-Q = SuperAnimal-Quadruped; SA-TVM = SuperAnimal-TopViewMouse. Higher values indicate better performance. Source: [36]

Table 2: Validation Against Manual Scoring in Behavioral Quantification

| Analysis Method | Grooming Duration Accuracy | Grooming Bout Count Accuracy | Throughput Capacity |
|---|---|---|---|
| DeepLabCut/SimBA | No significant difference from manual scoring | Significant difference from manual scoring (varies by condition) | High |
| HomeCageScan (HCS) | Significantly elevated relative to manual scoring | Significant difference from manual scoring (varies by condition) | Medium |
| Manual Scoring | Reference standard | Reference standard | Low |

Source: Adapted from [35]