The Essential PreQual Pipeline: A Complete Guide to Automated DTI Preprocessing and Quality Assurance for Neuroimaging Research

This comprehensive guide explores the PreQual (Preprocessing and Quality Assurance) pipeline, an open-source, containerized tool for standardized and automated Diffusion Tensor Imaging (DTI) preprocessing.


Abstract

This comprehensive guide explores the PreQual (Preprocessing and Quality Assurance) pipeline, an open-source, containerized tool for standardized and automated Diffusion Tensor Imaging (DTI) preprocessing. Tailored for researchers and drug development professionals, the article covers the pipeline's foundational principles, step-by-step methodological application, strategies for troubleshooting and optimization, and comparative validation against other tools like QSIPrep and TractSeg. We detail how PreQual enhances reproducibility, ensures data quality for clinical trials, and accelerates neuroimaging analysis in biomedical research.

What is PreQual? Understanding the Foundational Need for Automated DTI QA

Application Notes

The reproducibility crisis in neuroimaging, particularly in Diffusion Tensor Imaging (DTI), stems from inconsistent preprocessing methodologies. Variability in artifact correction, registration, and tensor estimation leads to irreconcilable findings across studies. Implementing a standardized, quality-assured pipeline like PreQual is essential for generating reliable, comparable data for both basic research and clinical drug development.

Quantitative Impact of Preprocessing Variability

Table 1: Sources of Variability in DTI Preprocessing and Their Quantitative Impact on Key Metrics

| Preprocessing Step | Common Variants | Reported Impact on FA (Fractional Anisotropy) | Impact on MD (Mean Diffusivity) | Key Reference (Year) |

|---|---|---|---|---|

| Eddy Current & Motion Correction | FSL eddy vs. SPM-based vs. in-house methods | FA differences up to 8-12% in high-motion subjects | MD differences up to 5-7% | Andersson & Sotiropoulos (2016) |

| Outlier Slice Replacement | None vs. FSL eddy's slice-to-volume vs. RESTORE | Reduces outlier-driven FA bias by up to 15% | Stabilizes MD estimates in 20% of clinical scans | Bastiani et al. (2019) |

| Tensor Fitting Algorithm | Linear Least Squares vs. RESTORE (Robust) vs. NLLS | FA variability up to 10% in regions with high CSF partial voluming | MD variability up to 8% | Chang et al. (2012) |

| Spatial Normalization | Different target templates (ICBM152 vs. MNI) & warping algorithms | Inter-template FA differences of 3-5% in white matter tracts | Affects group-level statistical power (effect size ∆~0.2) | Fox et al. (2021) Review |

| Smoothing (FWHM) | 0mm vs 2mm vs 4mm kernel | Increases cluster size by ~30% (4mm), reduces peak FA sensitivity | Can artificially increase correlation strengths in tractography | Jones et al. (2020) |

Table 2: PreQual Pipeline Output Metrics for Quality Assurance (QA) Thresholds

| QA Metric | Acceptable Range | Warning Range | Failure Range | Rationale |

|---|---|---|---|---|

| Mean Head Motion (relative) | < 1.0 mm | 1.0 - 2.0 mm | > 2.0 mm | Excessive motion uncorrectable by registration. |

| Signal-to-Noise Ratio (SNR) | > 20 | 15 - 20 | < 15 | Poor SNR biases tensor estimates nonlinearly. |

| Slice-wise Intensity Outliers | < 5% of slices | 5-10% of slices | > 10% of slices | Indicates scanner artifacts or severe motion. |

| Tensor Fit Residual (Mean) | < 5% | 5-7% | > 7% | High residual suggests poor model fit or artifacts. |

| Brain Mask Alignment Error | < 2 voxels | 2-3 voxels | > 3 voxels | Misalignment introduces CSF contamination. |
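The three-tier thresholds in Table 2 can be applied mechanically. The following is a minimal sketch, not PreQual's own implementation: the metric keys (`mean_motion_mm`, `snr`, and so on) are hypothetical names chosen for illustration, and only the numeric limits come from the table.

```python
# Limits transcribed from Table 2. The dictionary keys are hypothetical names.
# Each entry: (acceptable_limit, failure_limit, higher_is_better)
QA_THRESHOLDS = {
    "mean_motion_mm": (1.0, 2.0, False),
    "snr": (20.0, 15.0, True),
    "outlier_slice_pct": (5.0, 10.0, False),
    "tensor_residual_pct": (5.0, 7.0, False),
    "mask_error_voxels": (2.0, 3.0, False),
}

# Ordered from best to worst so the worst label can be picked with max().
SEVERITY = ["acceptable", "warning", "failure"]


def classify_metric(name, value):
    """Return 'acceptable', 'warning', or 'failure' for one QA metric."""
    ok_limit, fail_limit, higher_is_better = QA_THRESHOLDS[name]
    if higher_is_better:
        if value > ok_limit:
            return "acceptable"
        if value < fail_limit:
            return "failure"
        return "warning"
    if value < ok_limit:
        return "acceptable"
    if value > fail_limit:
        return "failure"
    return "warning"


def classify_scan(metrics):
    """Classify every metric and report the worst label as the overall status."""
    labels = {name: classify_metric(name, value) for name, value in metrics.items()}
    overall = max(labels.values(), key=SEVERITY.index, default="acceptable")
    return labels, overall


if __name__ == "__main__":
    print(classify_scan({"mean_motion_mm": 0.6, "snr": 17.5, "outlier_slice_pct": 3.0}))
```

Values that fall exactly on a boundary (for example, 1.0 mm of motion) are treated as warnings, matching the inclusive warning ranges in the table.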

Experimental Protocols

Protocol 1: PreQual DTI Preprocessing and QA Execution

Objective: To consistently preprocess raw DTI data (DICOM or NIfTI) through the standardized PreQual pipeline and generate a comprehensive QA report.

Materials:

- Raw multi-directional DWI data (e.g., b=0 s/mm² and b=1000 s/mm², 64+ directions).

- High-resolution T1-weighted anatomical scan.

- Computing system with Singularity/Docker and MATLAB/Runtime.

- PreQual pipeline v2.0+ (https://github.com/).

Procedure:

- Data Preparation: Convert DICOM to NIfTI using `dcm2niix`. Organize files in BIDS (Brain Imaging Data Structure) format.

- Pipeline Initialization: Pull the PreQual Singularity container: `singularity pull docker://[PreQual_image]`.

- Run Preprocessing: Execute the main pipeline.

- QA Review: Navigate to the `/path/to/output/qa` folder. Inspect the generated HTML report. Pay specific attention to the metrics in Table 2.

- Data Inclusion/Exclusion: Based on QA thresholds, flag datasets in the warning or failure range for potential exclusion or sensitivity analysis.

Protocol 2: Cross-Validation Experiment for Preprocessing Variability

Objective: To quantify the impact of preprocessing choices on downstream tractography and group statistics.

Materials:

- 50 control DTI datasets from a public repository (e.g., HCP, ADNI).

- Three preprocessing pipelines: 1) PreQual, 2) FSL's `fsl_dtifit` default, 3) TORTOISE.

- Tractography software (e.g., MRtrix3).

- Statistical software (e.g., FSL Randomise, SPSS).

Procedure:

- Parallel Preprocessing: Process each of the 50 datasets through the three distinct pipelines independently.

- Tractography: For each processed output, generate whole-brain streamlines using identical algorithms (e.g., iFOD2 in MRtrix3) and seed regions.

- Extract Metrics: For a pre-defined tract (e.g., Genu of Corpus Callosum), extract mean FA and streamline count from each pipeline's output.

- Statistical Comparison: Perform a repeated-measures ANOVA with Pipeline (PreQual, FSL, TORTOISE) as the within-subjects factor for both FA and streamline count.

- Analysis: Calculate the intra-class correlation coefficient (ICC) across pipelines for FA in the target tract. An ICC < 0.75 indicates high pipeline-dependent variability.
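The ICC in the final step can be computed directly from a subjects-by-pipelines matrix of tract FA values. The sketch below assumes the two-way random-effects, absolute-agreement, single-measure form, ICC(2,1). The protocol does not say which ICC variant is intended, so that choice is an assumption, and the function name is illustrative.

```python
def icc_2_1(data):
    """ICC(2,1) for a matrix with one row per subject and one column per pipeline.

    Uses the standard two-way ANOVA decomposition into between-subject,
    between-pipeline, and residual mean squares.
    """
    n = len(data)        # number of subjects
    k = len(data[0])     # number of pipelines
    grand = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(row[j] for row in data) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_err = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


if __name__ == "__main__":
    # Illustrative FA values only: rows are subjects, columns are
    # PreQual, FSL, and TORTOISE.
    fa = [
        [0.52, 0.53, 0.51],
        [0.47, 0.49, 0.46],
        [0.55, 0.56, 0.55],
        [0.44, 0.45, 0.43],
    ]
    print(f"ICC(2,1) = {icc_2_1(fa):.3f}")
```

Because ICC(2,1) penalizes systematic offsets between pipelines, a pipeline that shifts every subject's FA by a constant lowers the score even when subject rankings are preserved.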

Visualizations

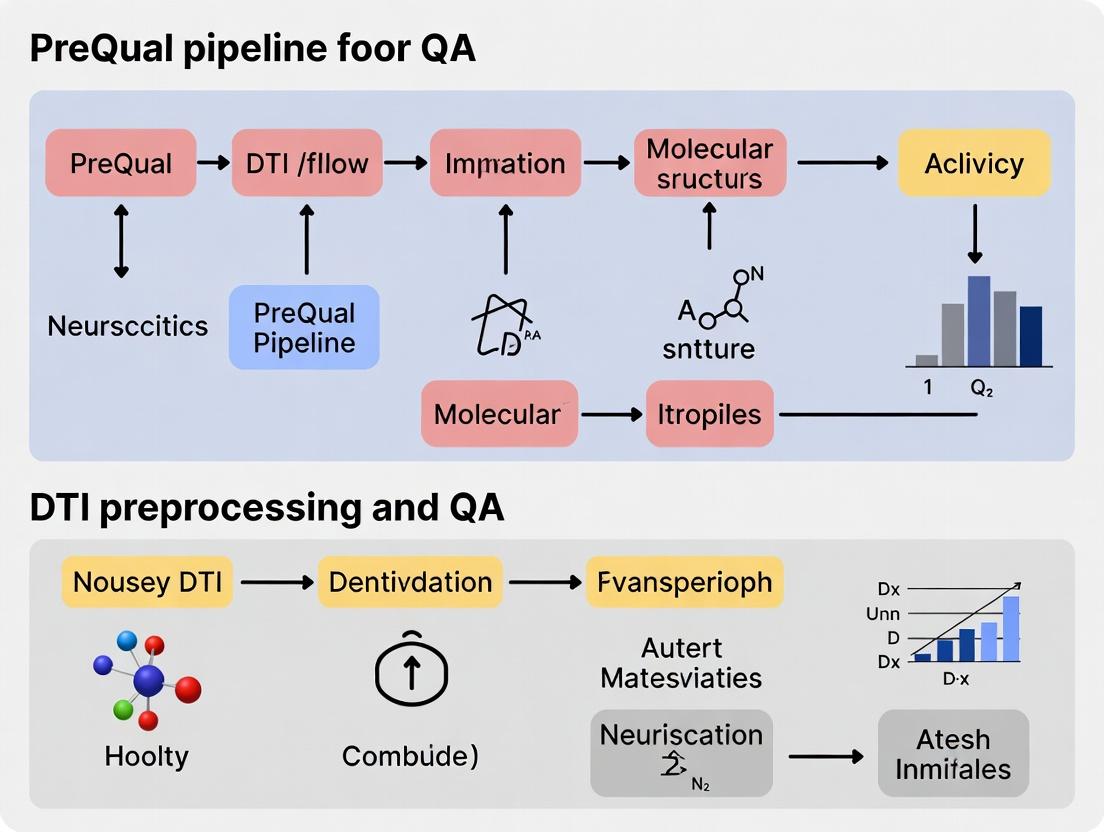

DTI Preprocessing & QA Workflow

Crisis, Cause, and Solution Logic

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Reproducible DTI Research

| Item/Category | Specific Example(s) | Function in DTI Research |

|---|---|---|

| Standardized Pipeline Software | PreQual, QSIPrep, FSL fsl_dtifit (with strict protocols) | Provides an all-in-one, version-controlled framework for consistent preprocessing, reducing lab-specific variability. |

| Data Format Standard | Brain Imaging Data Structure (BIDS) | Organizes raw and processed data in a universal format, ensuring metadata completeness and facilitating sharing/re-analysis. |

| Containerization Platform | Docker, Singularity, Apptainer | Encapsulates the entire software environment (OS, libraries, pipeline code), guaranteeing identical execution across different computing systems. |

| Quality Assurance Dashboard | MRIQC, PreQual's HTML reports, dmriqcpy | Generates visual and quantitative summaries of data quality, enabling objective inclusion/exclusion decisions. |

| Public Data Repository | OpenNeuro, ADNI, HCP, UK Biobank | Provides access to reference datasets for method validation, benchmarking, and enhancing statistical power through pooled analysis. |

| Version Control System | Git (GitHub, GitLab, Bitbucket) | Tracks every change to analysis scripts and protocols, enabling precise replication of any published result. |

| Computational Resource | High-Performance Cluster (HPC) with sufficient RAM (>16GB/node) & storage | Handles the intensive computational load of nonlinear registration and tractography in large cohorts. |

PreQual is an open-source, automated preprocessing pipeline for Diffusion Tensor Imaging (DTI) data, designed to address the critical need for standardized, transparent, and quality-controlled data preparation in neuroimaging research. Its development is framed within a thesis that robust, reproducible preprocessing is the foundational step for valid scientific inference, particularly in sensitive contexts like drug development and multi-site clinical trials. The core philosophy of PreQual rests on three pillars: Automation (for consistency), Transparency (with clear logging and visual reports), and Comprehensive Quality Assurance (QA) (embedding checks at every processing stage).

Design Principles & Key Features

PreQual’s design translates its philosophy into concrete software architecture.

| Design Principle | Technical Implementation in PreQual | Benefit for Research |

|---|---|---|

| Modularity | Self-contained stages (e.g., denoising, eddy-current correction, tensor fitting) can be run independently or as a pipeline. | Facilitates debugging, method comparison, and incremental improvement. |

| Comprehensive QA | Integrates tools like fslquad and generates visual reports for raw data, intermediate steps, and final outputs. | Enables data-driven exclusion/inclusion decisions, critical for trial integrity. |

| Containerization | Distributed as a Singularity/Apptainer container. | Ensures version stability and eliminates dependency conflicts across computing environments. |

| Transparent Logging | Detailed .log and .json files document every command, parameter, and software version used. | Provides essential provenance for publication and regulatory review. |

| Standardized Outputs | Produces organized directory structures with consistently named files (NIfTI, BVAL/BVEC, etc.). | Enables seamless integration with downstream analysis tools (e.g., FSL, AFNI, custom scripts). |

Experimental Protocols for DTI QA Using PreQual

Protocol 1: Baseline Assessment of Raw Diffusion-Weighted Image (DWI) Quality

Objective: To identify acquisition artifacts or scanner-related issues before computational preprocessing.

Methodology:

- Run PreQual's Initial QA Module: Execute the first stage of the PreQual pipeline, which performs a minimal data load and header check.

- Generate Visual Report: Inspect the automatically generated `raw_qc` report.

- Key Metrics to Tabulate (see Table 1):

  - Signal-to-Noise Ratio (SNR): Calculated in a homogeneous region of a non-diffusion-weighted (b=0) volume.

  - Signal Dropout: Percentage of slices with intensity below a threshold in any b>0 volume.

  - Ghosting Artifact Level: Assessed via the `fslquad` tool integrated into PreQual.

- Checklist Completion: Verify all required files (NIfTI, bvec, bval) are present and correctly formatted.
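The checklist step can be partly automated. The sketch below checks that FSL-style gradient files are consistent with the number of DWI volumes. It assumes the usual FSL layout (one row of b-values, three rows of gradient components). The function names and the b=0 cutoff of 50 s/mm² are illustrative assumptions, and `n_volumes` would come from the NIfTI header, which is not read here.

```python
def parse_bval(text):
    """Parse an FSL-style .bval file: whitespace-separated b-values."""
    return [float(token) for token in text.split()]


def parse_bvec(text):
    """Parse an FSL-style .bvec file: three rows (x, y, z), one column per volume."""
    return [
        [float(token) for token in line.split()]
        for line in text.strip().splitlines()
        if line.strip()
    ]


def check_gradients(bval_text, bvec_text, n_volumes, b0_cutoff=50.0):
    """Return a list of human-readable problems; an empty list means consistent.

    b0_cutoff is an assumed tolerance for treating a volume as b=0.
    """
    problems = []
    bvals = parse_bval(bval_text)
    bvecs = parse_bvec(bvec_text)

    if len(bvals) != n_volumes:
        problems.append(f"bval has {len(bvals)} entries, expected {n_volumes}")
    if len(bvecs) != 3:
        problems.append(f"bvec has {len(bvecs)} rows, expected 3")
    elif any(len(row) != n_volumes for row in bvecs):
        problems.append(f"bvec rows do not all have {n_volumes} entries")
    if not any(b <= b0_cutoff for b in bvals):
        problems.append("no b=0 volume found")
    return problems


if __name__ == "__main__":
    bval = "0 1000 1000\n"
    bvec = "0 1 0\n0 0 1\n0 0 0\n"
    print(check_gradients(bval, bvec, n_volumes=3))
```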

Protocol 2: Evaluating Preprocessing Efficacy

Objective: To quantitatively confirm that preprocessing steps (e.g., denoising, eddy-current correction) improve data quality without introducing biases.

Methodology:

- Run Full PreQual Pipeline: Process the DWI data through all PreQual stages: denoising (MP-PCA), Gibbs ringing removal, eddy-current and motion correction, and tensor fitting.

- Compare QA Metrics Pre- and Post-Processing:

  - Extract quantitative measures from PreQual's intermediate and final QA reports.

  - Focus on metrics sensitive to specific corrections (see Table 2).

Protocol 3: Multi-Site Data Harmonization Check

Objective: To assess the suitability of PreQual-processed data from multiple scanners/sites for pooled analysis.

Methodology:

- Process All Site Data Identically: Run the identical PreQual container with the same parameter file on DWI data from all participating sites.

- Analyze Aggregate QA Outputs: Compile key final output metrics (see Table 3) into a single table for cross-site comparison.

- Statistical Comparison: Perform ANOVA or similar tests on derived scalar maps (e.g., mean FA in a standard white matter ROI) across sites to identify residual site effects not addressed by preprocessing.
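The cross-site comparison in the last step reduces to a one-way ANOVA on a per-subject scalar such as mean FA in one ROI. The following sketch computes only the F statistic and its degrees of freedom with the standard library. Converting F to a p-value requires an F-distribution CDF, for which a statistics package (for example, `scipy.stats.f_oneway`) would normally be used. The site values are illustrative, not real data.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of per-site value lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of site means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of subjects around their own site mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Illustrative mean-FA values per subject for three sites.
    site_a = [0.45, 0.47, 0.44, 0.46]
    site_b = [0.43, 0.42, 0.45, 0.44]
    site_c = [0.46, 0.47, 0.45, 0.48]
    f_stat, df_b, df_w = one_way_anova_f([site_a, site_b, site_c])
    print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F relative to the critical value for the given degrees of freedom would indicate a residual site effect that preprocessing alone did not remove.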

Data Presentation: QA Metrics Tables

Table 1: Raw DWI QA Metrics (Protocol 1)

| Metric | Calculation Method | Acceptance Threshold | Tool/Source |

|---|---|---|---|

| Mean b=0 SNR | mean(ROI_signal) / std(ROI_background) | > 20 | PreQual/fslquad |

| Volume-to-Volume Motion | Mean relative displacement (mm) from initial volume | < 2 mm (mean) | PreQual/eddy_qc |

| Signal Dropout (%) | (Slices with intensity < 10% max) / total slices * 100 | < 5% | PreQual custom script |

| B-Value/B-Vector Consistency | Check length, orientation, and ordering match DWI dimensions | Perfect Match Required | PreQual header check |
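The two formulas in the "Calculation Method" column can be written out as a short worked example. This is a sketch of the formulas as stated in the table, not of PreQual's internal code. The inputs are plain lists of intensities, and extracting the ROI voxels or per-slice means from the image is assumed to happen elsewhere.

```python
from statistics import mean, stdev


def b0_snr(roi_signal, roi_background):
    """SNR as in Table 1: mean(ROI_signal) / std(ROI_background)."""
    return mean(roi_signal) / stdev(roi_background)


def signal_dropout_pct(slice_intensities, fraction=0.10):
    """Percentage of slices whose intensity is below `fraction` of the maximum."""
    threshold = fraction * max(slice_intensities)
    n_low = sum(1 for value in slice_intensities if value < threshold)
    return 100.0 * n_low / len(slice_intensities)


if __name__ == "__main__":
    # Illustrative numbers only.
    print(f"SNR: {b0_snr([100, 102, 98], [1, 3]):.1f}")
    print(f"Dropout: {signal_dropout_pct([100, 50, 5, 8]):.0f}%")
```

Note that `stdev` is the sample standard deviation. Whether the sample or population form is intended is not stated in the table.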

Table 2: Preprocessing Efficacy Metrics (Protocol 2)

| Processing Stage | Input Metric (Pre) | Output Metric (Post) | Expected Change |

|---|---|---|---|

| Denoising & Gibbs Removal | Temporal SNR (tSNR) | tSNR in white matter | Increase |

| Eddy-Current & Motion Correction | Sum of squared differences between volumes | Normalized correlation between volumes | Increase |

| Eddy-Current & Motion Correction | Mean outlier slice count (from eddy) | Mean outlier slice count | Decrease |

| Tensor Fitting | Goodness of fit of the tensor model (R^2) | R^2 in white matter voxels | Increase |

Table 3: Multi-Site Harmonization Metrics (Protocol 3)

| Site ID | Mean FA (Corticospinal Tract) | Mean MD (Whole Brain WM) | Fraction of Rejected Slices | Final SNR |

|---|---|---|---|---|

| Site A | 0.45 ± 0.03 | 0.72 ± 0.05 x10^-3 mm²/s | 1.2% | 24.5 |

| Site B | 0.43 ± 0.04 | 0.75 ± 0.06 x10^-3 mm²/s | 2.1% | 22.8 |

| Site C | 0.46 ± 0.03 | 0.71 ± 0.04 x10^-3 mm²/s | 0.8% | 25.1 |

| p-value (ANOVA) | > 0.05 (n.s.) | > 0.05 (n.s.) | < 0.05 | < 0.05 |

Visualization of the PreQual Workflow

Title: PreQual Automated DTI Preprocessing and QA Workflow

The Scientist's Toolkit: Essential Research Reagents & Solutions

| Item | Function in DTI Analysis with PreQual | Example/Note |

|---|---|---|

| PreQual Singularity Container | Provides the complete, version-controlled software environment for the pipeline. | Downloaded from Sylabs Cloud or GitHub. Essential for reproducibility. |

| Parameter Configuration File (JSON) | Defines all processing options (e.g., denoising strength, eddy model). | The primary user interface for customizing pipeline behavior. |

| Quality Assessment Tools Suite | Integrated tools for quantitative and visual QA at multiple stages. | Includes fslquad, eddy_qc, and custom PreQual plotting scripts. |

| Standardized White Matter Atlas | Reference region definitions for extracting summary scalar metrics (e.g., mean FA). | e.g., JHU ICBM-DTI-81 or HCP-MMP parcellation in standard space. |

| Data Provenance Log (JSON) | Machine-readable record of all processing steps, parameters, and software versions. | Critical for regulatory documentation and publication methodology sections. |

| Visual QA Report (HTML/PDF) | Human-interpretable summary of images, graphs, and pass/fail flags. | Enables rapid expert review of dataset quality before downstream analysis. |

The PreQual (Preprocessing and Quality Assessment) pipeline represents a standardized, automated framework for the critical preprocessing of Diffusion Tensor Imaging (DTI) data. Within the broader thesis of enhancing reproducibility and efficiency in neuroimaging research and drug development, PreQual serves as the foundational data curation engine. Its value is defined by the data it ingests and the rigorously vetted outputs it produces, enabling downstream tractography and connectome analysis for studies in neurodegeneration, psychiatric disorders, and therapeutic trial monitoring.

Input Data: What PreQual Processes

PreQual requires raw or minimally processed magnetic resonance imaging (MRI) data. The primary inputs are structured within a Brain Imaging Data Structure (BIDS)-compatible directory.

Table 1: Primary Input Data for PreQual

| Input Data Type | Description | Format & Key Metadata |

|---|---|---|

| Diffusion-Weighted Images (DWI) | Volumes acquired with varying diffusion-sensitizing gradients (b>0) and at least one non-diffusion-weighted volume (b=0). | 4D NIfTI (e.g., *_dwi.nii.gz). Requires associated *_dwi.bval and *_dwi.bvec files. |

| Anatomical Reference (T1-weighted) | High-resolution structural image for co-registration and tissue segmentation. | 3D NIfTI (e.g., *_T1w.nii.gz). |

| (Optional) Field Map Data | For advanced distortion correction. Can be a phase-difference map and magnitude image, or reverse phase-encoded spin-echo echo-planar imaging (EPI) data (for topup). | NIfTI files with appropriate metadata in *_fmap.json. |

Output Data: What PreQual Generates

PreQual generates a comprehensive suite of processed data and diagnostic quality assessment (QA) artifacts. Outputs are organized into logical directories.

Table 2: Core Outputs Generated by PreQual

| Output Category | Specific Files/Data | Purpose & Significance |

|---|---|---|

| Processed DWI Data | *_denoised.mif, *_degibbs.mif, *_preproc.mif | Denoised, Gibbs-ringing corrected, and fully preprocessed (eddy-current/motion/distortion corrected) diffusion data ready for modeling. |

| Brain Mask | *_mask.mif | Binary mask of the brain in diffusion space. |

| Processed Anatomical | *_T1w_coreg.mif | T1-weighted image co-registered to the preprocessed DWI space. |

| Quality Assessment Reports | *_QA.html (Interactive report), *_qc.json (Machine-readable metrics). | Centralized summary of processing stages, visual checks (e.g., eddy residuals), and quantitative metrics (e.g., CNR, outlier slice counts). |

| Intermediate Files | Eddy-corrected *_eddy.mif, *_topup.mif, transformation matrices. | For expert-level debugging and method refinement. |

Experimental Protocols: Detailed Methodologies

Protocol 1: Full PreQual Execution for DTI Preprocessing

Objective: To generate fully preprocessed, QA-verified DTI data from raw inputs.

- Data Organization: Place raw DICOM files into a BIDS-compliant directory structure using `dcm2bids`.

- Pipeline Initialization: Run `python PreQual.py --bids_dir <BIDS_path> --output_dir <output_path> --participant_label <sub-ID>`.

- Automated Pipeline Stages:

a. Denoising: MRtrix3 `dwidenoise` with Marchenko-Pastur PCA thresholding.

b. Gibbs Deringing: MRtrix3 `mrdegibbs` using local subvoxel-shifts.

c. Distortion Correction: FSL `topup` (if field maps exist) estimates the susceptibility-induced off-resonance field.

d. Motion/Eddy Correction: FSL `eddy` simultaneously corrects for eddy-current distortions, subject motion, and slice-wise outliers. Uses `--slm=linear` for the second-level eddy-current model.

e. Bias Field Correction: ANTs `N4BiasFieldCorrection` on the mean b=0 image.

f. Brain Masking: FSL `bet2` on the mean b=0 image with a fractional intensity threshold of 0.3.

g. Co-registration: FSL `flirt` with the boundary-based registration (BBR) cost function aligns T1w to diffusion space.

- QA Report Generation: The pipeline automatically compiles visualizations and metrics into HTML and JSON.

Protocol 2: Manual QA Metric Interpretation

Objective: To evaluate the success of preprocessing using the generated QA artifacts.

- Open the HTML Report: Load `*_QA.html` in a web browser.

- Review Visualizations:

a. Eddy Residuals: Inspect the `eddy_residuals.png` plot. Random, low-magnitude noise indicates successful correction. Structured patterns suggest residual artifacts.

b. CNR Plot: Check `cnr.png`. The contrast-to-noise ratio should be relatively stable across b-value shells.

c. Outlier Slices: Review `eddy_outlier_report.txt`. Total outlier slices > 5-10% of total slices may indicate problematic data.

- Quantitative Thresholds: Use `*_qc.json`. Flag data if `mean_fd` (mean framewise displacement) > 0.5 mm or `max_fd` > 3 mm.
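The quantitative threshold check lends itself to scripting across many subjects. The sketch below assumes the `*_qc.json` file is a flat JSON object containing the `mean_fd` and `max_fd` keys named above. The actual file layout is not specified in this guide, so the structure and function names are assumptions.

```python
import json


def flag_motion(qc, mean_fd_limit=0.5, max_fd_limit=3.0):
    """Return a list of flag messages for one subject's QC metrics (in mm)."""
    flags = []
    if qc["mean_fd"] > mean_fd_limit:
        flags.append(f"mean_fd {qc['mean_fd']:.2f} mm exceeds {mean_fd_limit} mm")
    if qc["max_fd"] > max_fd_limit:
        flags.append(f"max_fd {qc['max_fd']:.2f} mm exceeds {max_fd_limit} mm")
    return flags


def flag_motion_from_file(path):
    """Load a QC JSON file (assumed flat layout) and apply the thresholds."""
    with open(path) as handle:
        return flag_motion(json.load(handle))


if __name__ == "__main__":
    example = json.loads('{"mean_fd": 0.7, "max_fd": 2.1}')
    for message in flag_motion(example):
        print("FLAG:", message)
```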

Visualization of the PreQual Workflow

Title: PreQual Pipeline Data Flow Diagram

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Software & Computational Resources for PreQual Execution

| Item | Function & Relevance |

|---|---|

| PreQual Pipeline | The core, containerized software (Docker/Singularity) ensuring version-controlled, reproducible processing environments. |

| BIDS Validator | Critical tool to verify input data structure compliance before pipeline execution, preventing runtime errors. |

| High-Performance Computing (HPC) Cluster or Cloud Instance | PreQual is computationally intensive (esp. eddy/topup). Requires multi-core CPUs, >16GB RAM, and significant temporary storage. |

| MRtrix3 | Provides core algorithms for denoising (dwidenoise), Gibbs deringing (mrdegibbs), and data handling/manipulation. |

| FSL (FMRIB Software Library) | Supplies the industry-standard eddy and topup tools for motion/distortion correction, and FLIRT/BET for registration/masking. |

| ANTs (Advanced Normalization Tools) | Used for advanced bias field correction (N4BiasFieldCorrection) to improve intensity uniformity. |

| Visualization Software (e.g., FSLeyes, MRtrix3 mrview) | For in-depth, manual inspection of intermediate and final outputs beyond the automated QA report. |

The Critical Role of Quality Assurance (QA) in Drug Development and Clinical Neuroscience

Quality Assurance (QA) is a systematic process that ensures the reliability, integrity, and reproducibility of data generated throughout drug development and clinical neuroscience research. In the context of neuroimaging-based biomarkers—such as Diffusion Tensor Imaging (DTI) metrics used in neurological drug trials—robust QA is non-negotiable. Failures in QA can lead to inaccurate conclusions about a drug's efficacy or safety, resulting in costly late-phase trial failures or, worse, approval of ineffective therapies.

This document frames QA protocols within the PreQual pipeline research thesis, which establishes a standardized, open-source framework for the preprocessing and quality assessment of DTI data. Implementing such pipelines is critical for producing analyzable, high-fidelity data that can reliably inform go/no-go decisions in drug development.

Application Notes: QA Impact on Data Integrity & Trial Outcomes

Note 1: Quantifying the Cost of Poor QA

Lapses in data quality directly impact pharmaceutical R&D economics and patient safety.

Table 1: Impact of Data Quality Issues on Clinical Development

| Metric | Industry Benchmark (Poor QA) | Benchmark with Rigorous QA | Data Source |

|---|---|---|---|

| Phase III Trial Failure Rate (Neurology) | ~50% (Approx. 30% due to biomarker/endpoint issues) | Potential reduction by 10-15% | Analysis of public trial data (2015-2023) |

| Estimated Cost of a Failed Phase III Trial | $20 - $50 million (direct costs) | Investment in QA mitigates risk | Industry financial reports |

| MRI Data Exclusion Rate (Multi-site trial) | 15-30% (without prospective QA) | Reduced to <5-10% | PreQual validation studies |

| Inter-site DTI Metric Variability (FA in WM tracts) | Coefficient of Variation (CV): 10-25% | CV: <5-8% (with harmonized QA) | Committee for Human MRI Studies |
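The inter-site coefficient of variation in the last row is the standard deviation of the per-site means divided by their mean, expressed as a percentage. A minimal worked sketch follows. The three site values are illustrative, and the use of the sample standard deviation is an assumption.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Coefficient of variation in percent: 100 * sample SD / mean."""
    return 100.0 * stdev(values) / mean(values)


if __name__ == "__main__":
    # Illustrative per-site mean FA values for one white matter tract.
    site_means = [0.45, 0.43, 0.46]
    print(f"Inter-site CV: {coefficient_of_variation(site_means):.2f}%")
```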

Note 2: QA in the PreQual Pipeline Context

The PreQual pipeline automates critical QA steps for DTI preprocessing (denoising, eddy-current/distortion correction, tensor fitting). Its integrated QA modules flag issues like excessive motion, artifact contamination, and poor signal-to-noise ratio before group-level analysis, ensuring only high-quality data proceeds to statistical modeling for drug effect detection.

Experimental Protocols for Key QA Assessments

Protocol 1: Prospective QA for Multi-Site DTI Acquisition in a Clinical Trial

Objective: Ensure consistent, high-quality DTI data collection across all trial sites to minimize site-induced variance.

Materials: Phantom for scanner calibration; Standardized acquisition protocol; Automated data transfer & QA platform (e.g., based on PreQual).

Procedure:

1. Site Qualification: Prior to patient enrollment, each MRI scanner acquires DTI data on a standardized isotropic diffusion phantom.

2. Analysis: Central QA team processes phantom data using PreQual-derived metrics (e.g., signal-to-noise ratio, gradient deviation analysis). Sites must pass predefined thresholds.

3. Ongoing Monitoring: For every subject scan, the following is automatically executed upon transfer:

a. Visual QC: Generation of mosaic views for immediate artifact detection.

b. Quantitative QC: Calculation of metrics: Mean framewise displacement (motion), outlier slice percentage (using fsl_motion_outliers), and signal dropout analysis.

c. Flagging: Scans failing thresholds (e.g., motion > 2mm, outliers > 10%) are flagged for potential repeat acquisition.

4. Weekly QA Reports: Generated per site to track drift and prompt corrective action.

Protocol 2: Retrospective QA and Data Curation for Analysis Readiness

Objective: Curate a final analyzable dataset from all acquired scans, justifying inclusion/exclusion.

Materials: Raw DTI data from all subjects/sites; PreQual pipeline; Statistical analysis software.

Procedure:

1. Run PreQual Pipeline: Execute full preprocessing (denoising, eddy, etc.) with the -report flag to generate comprehensive HTML QA reports for each subject.

2. Compile Group Metrics: Extract key quantitative QA measures into a database:

- Post-eddy residual motion

- CNR (Contrast-to-Noise Ratio) in corpus callosum vs. CSF

- Tensor fitting goodness-of-fit (R-squared)

3. Apply Inclusion Thresholds: Define and apply criteria (e.g., exclude subjects with CNR < 10, R-squared < 0.8). Document all exclusions.

4. Assess Site Effects: Perform ANOVA on primary DTI metrics (e.g., FA in Genu of Corpus Callosum) with "site" as a factor before and after QA-based exclusions. The goal is non-significant site effect post-QA.

Visualization: Workflows and Relationships

Diagram Title: End-to-End QA Workflow in a Multi-Site Neuroimaging Trial

Diagram Title: PreQual Pipeline with Integrated QA Checkpoints

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Tools for DTI QA in Clinical Neuroscience Research

| Tool/Reagent | Category | Primary Function in QA | Example/Supplier |

|---|---|---|---|

| Geometric Isotropic Diffusion Phantom | Physical Standard | Provides a ground truth for scanner calibration, gradient performance, and signal stability across sites. | High precision polycarbonate phantom with known diffusivity (e.g., from High Precision Devices). |

| PreQual Pipeline | Software Pipeline | Open-source, containerized tool for automated DTI preprocessing with embedded, report-generating QA at each step. | https://github.com/MASILab/PreQual |

| FSL (FMRIB Software Library) | Software Library | Provides core algorithms for motion correction (eddy), tensor fitting, and quantitative outlier detection. | Oxford Centre for Functional MRI of the Brain (FMRIB). |

| dMRI QC Visual Report Generator | Software Script | Automates creation of standardized visual PDF/HTML reports for rapid human review of many subjects. | In-house scripts or extensions of qsiprep/dmriprep visual reports. |

| Data Transfer & Management Platform | Infrastructure | Secure, automated transfer of imaging data from sites to central analysis server with audit trails. | Custom solutions using AWS/Azure, or commercial platforms (e.g., Box, SiteVault). |

| Statistical QC Dashboard | Software Tool | Aggregates quantitative QA metrics from all subjects/sites into a live dashboard for monitoring trends. | Built with R Shiny, Python Dash, or Tableau. |

In the context of the broader PreQual pipeline (Preprocessing and Quality Assessment for diffusion MRI) research, ensuring consistent, reproducible environments across high-performance computing (HPC) clusters, local workstations, and cloud platforms is a fundamental challenge. The PreQual pipeline itself is a state-of-the-art, automated pipeline for Diffusion Tensor Imaging (DTI) data that integrates preprocessing, signal drift correction, and comprehensive quality assessment. Our thesis work involves extending and validating this pipeline for multi-site neuroimaging studies in drug development. Discrepancies in operating system libraries, software versions (e.g., FSL, ANTs, MRtrix3), and dependency conflicts can lead to irreproducible results, directly impacting the validity of longitudinal treatment efficacy studies. Containerization technologies, namely Docker and Singularity (now Apptainer), provide a solution by encapsulating the entire software stack—including the operating system, all dependencies, and the PreQual pipeline code—into a single, portable, and immutable unit.

Table: Container Technology Comparison (current as of 2024)

| Container Technology | Primary Use Case | Key Advantage for Research | HPC Compatibility | Root Privileges Required? |

|---|---|---|---|---|

| Docker | Development, CI/CD, Cloud Deployment | Rich ecosystem, ease of build, layer caching | Limited (requires root) | Yes, for daemon and build |

| Singularity/Apptainer | High-Performance Computing (HPC) | Security-first, no root on execution, direct GPU/host IO | Native | No, for execution |

| Podman | Docker-alternative for rootless containers | Daemonless and rootless, OCI-compliant | Growing | No |

Application Notes: Docker vs. Singularity for the PreQual Pipeline

Docker for Development and Prototyping

Docker is ideal for the development and testing phase of the PreQual pipeline modifications. Its streamlined build process allows for rapid iteration.

Key Reagent Solution: Dockerfile for PreQual

Singularity for Production and HPC Deployment

Singularity is the de facto standard for container execution on shared HPC resources, where users lack root privileges. A Singularity container can be built directly from a Docker image, facilitating a "build once, run anywhere" workflow.

Protocol 2.2.1: Building a Singularity Image from a Docker Hub Repository

- Prerequisite: Install Singularity/Apptainer on a system where you have root access (e.g., a personal Linux machine or a dedicated build node).

- Build Definition File (PreQual.def): Create a definition file specifying the Docker image as the base.

- Build Command: Execute `sudo singularity build PreQual.sif PreQual.def`. This creates the portable `.sif` (Singularity Image Format) file.

- Transfer & Execute: The `.sif` file can be copied to any HPC cluster and run directly: `singularity run PreQual.sif --bids_dir /path/to/data`.
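When the run command is launched from a batch script, assembling it as an argument list avoids shell-quoting mistakes. The sketch below builds the `singularity run` invocation shown in the last step, with optional `-B` bind mounts. The helper name and the bind paths are hypothetical, and the pipeline arguments are passed through unchanged.

```python
import shlex


def singularity_run_cmd(image, pipeline_args, binds=None):
    """Build the argv list for `singularity run [-B host:container ...] image args`."""
    cmd = ["singularity", "run"]
    for host_path, container_path in (binds or {}).items():
        cmd += ["-B", f"{host_path}:{container_path}"]
    cmd.append(image)
    cmd += list(pipeline_args)
    return cmd


if __name__ == "__main__":
    cmd = singularity_run_cmd(
        "PreQual.sif",
        ["--bids_dir", "/data"],
        binds={"/project/DTI_study": "/data"},  # hypothetical host path
    )
    # Print a copy-pasteable form; nothing is executed here.
    print(" ".join(shlex.quote(part) for part in cmd))
```

The resulting list can be handed to `subprocess.run(cmd, check=True)` on a machine where Singularity is installed.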

Experimental Protocols for Validation

Protocol 3.1: Validating Container Consistency Across Platforms

Objective: To empirically demonstrate that the PreQual pipeline produces bitwise-identical outputs when run from the same container on different computing environments.

Materials: 1) Test dataset (e.g., one subject from the Human Connectome Project). 2) Docker image of PreQual. 3) Singularity SIF file built from the Docker image. 4) Three execution environments: a) Local Ubuntu workstation, b) Cloud instance (AWS/GCP), c) University HPC cluster (Slurm).

Method:

- Baseline Output: Run the PreQual pipeline natively (without containers) on the Local Workstation, recording all output files (e.g., `*_FA.nii.gz`, `*.json` QA files) and their MD5 checksums.

- Docker Execution: On the Local Workstation and Cloud Instance, run the pipeline using the Docker container: `docker run -v /path/to/data:/data yourimage /data/bids /data/out`. Compute MD5 checksums for all outputs.

- Singularity Execution: On the HPC cluster, run the pipeline using the Singularity container: `singularity exec -B /path/to/data:/data PreQual.sif python3 /opt/PreQual/run_prequal.py /data/bids /data/out`. Compute MD5 checksums.

- Comparison: Use a script to compare the MD5 checksums of all corresponding output files across the four runs (Native, Docker-Local, Docker-Cloud, Singularity-HPC).

Expected Result: All outputs from the three containerized runs (the Docker and Singularity executions) should be bitwise-identical. The native baseline run may produce minor floating-point differences due to library variations, highlighting the container's role in ensuring consistency.
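The comparison script mentioned in the method can be quite small. The following sketch assumes each run's outputs have been copied into its own directory with matching relative file names. The function names and run labels are illustrative.

```python
import hashlib
import tempfile
from pathlib import Path


def md5sum(path, chunk_size=1 << 20):
    """MD5 hex digest of a file, read in chunks to handle large images."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def checksum_tree(root):
    """Map each file's path relative to `root` to its MD5 digest."""
    root = Path(root)
    return {
        str(path.relative_to(root)): md5sum(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare_runs(runs):
    """Given {label: output_dir}, return {relative_path: True if all digests match}.

    A file missing from any run counts as inconsistent.
    """
    sums = {label: checksum_tree(directory) for label, directory in runs.items()}
    all_files = set().union(*[s.keys() for s in sums.values()])
    return {
        name: len({s.get(name) for s in sums.values()}) == 1
        for name in sorted(all_files)
    }


if __name__ == "__main__":
    # Tiny self-contained demo with two temporary "runs".
    with tempfile.TemporaryDirectory() as run_a, tempfile.TemporaryDirectory() as run_b:
        Path(run_a, "sub-01_QA.json").write_text('{"snr": 24.5}')
        Path(run_b, "sub-01_QA.json").write_text('{"snr": 24.5}')
        print(compare_runs({"docker-local": run_a, "singularity-hpc": run_b}))
```

One caveat when applying this to `.nii.gz` outputs: gzip headers can carry a modification time, so comparing checksums of the decompressed content may be more robust than hashing the compressed files directly.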

Table: Validation Results Schematic

| Output File | Native (MD5) | Docker-Local (MD5) | Docker-Cloud (MD5) | Singularity-HPC (MD5) | Consistent? |

|---|---|---|---|---|---|

| sub-01_FAskel.nii.gz | a1b2... | c3d4... | c3d4... | c3d4... | Yes (Containerized) |

| sub-01_QA.json | e5f6... | g7h8... | g7h8... | g7h8... | Yes (Containerized) |

| ... | ... | ... | ... | ... | ... |

Visualization: Containerization Workflow for PreQual Research

Diagram Title: Containerization Pipeline from Development to HPC/Cloud Execution

The Scientist's Toolkit: Essential Research Reagents

Table: Key Containerization Reagents for PreQual/DTI Research

| Reagent / Tool | Function / Purpose | Example in PreQual Context |

|---|---|---|

| Docker / Podman | Container engine for building, sharing, and running containers during development. | Building an image containing FSL 6.0.7, ANTs 2.5.3, and the specific git commit of PreQual. |

| Singularity / Apptainer | Container platform designed for secure, rootless execution on shared HPC systems. | Running the PreQual pipeline on a Slurm cluster without administrative privileges. |

| Dockerfile | Text document with all commands to assemble a Docker image. | Defines the exact OS, library installations, and environment variables for the pipeline. |

| Singularity Definition File | Recipe for building a Singularity image, often from a Docker image. | Creates a final SIF file optimized for HPC, potentially adding bind paths for cluster filesystems. |

| Container Registry (Docker Hub, GHCR) | Cloud repository for storing and versioning container images. | Hosting lab/prequal:1.1-dti and lab/prequal:1.2-dti for different stages of the thesis. |

| Data Binding Flag (-v or -B) | Mounts host directories into the container at runtime. | -B /project/DTI_study:/data allows the container to access BIDS data on the HPC filesystem. |

| Singularity SIF File | Immutable, signed container image file for distribution. | prequal_v1.1.sif is downloaded by collaborators to replicate the analysis environment exactly. |

Step-by-Step: Implementing the PreQual Pipeline for Robust DTI Preprocessing

The PreQual pipeline is a robust, automated tool for preprocessing and quality assessment (QA) of diffusion MRI (dMRI) data, specifically diffusion tensor imaging (DTI). This protocol is designed as a foundational chapter for a thesis focused on advancing DTI preprocessing methodologies and establishing standardized QA benchmarks for research and drug development applications. Correct installation and data preparation are critical for reproducible results.

System Prerequisites

Before installation, ensure your computing environment meets the following requirements.

Table 1: System and Software Prerequisites

| Component | Minimum Requirement | Recommended | Purpose/Notes |

|---|---|---|---|

| Operating System | Linux/macOS | Linux (Ubuntu 20.04/22.04 LTS) | Windows support via WSL2 or Docker. |

| Package Manager | Conda (Miniconda/Anaconda) | Miniconda3 | For managing Python environments and dependencies. |

| Python Version | 3.7 | 3.9 - 3.10 | Legacy Python 2 is not supported. |

| Memory (RAM) | 8 GB | 16 GB or higher | For processing standard dMRI datasets. |

| Storage | 10 GB free space | 50 GB+ free SSD | For software, temporary files, and data. |

| Core Dependencies | FSL 6.0+, MRtrix3, ANTs | Latest stable versions | Essential neuroimaging tools. |

| Container Engine | (Optional) Docker or Singularity | Docker 20.10+ | For reproducible containerized execution. |

Installation Protocol

Follow this step-by-step protocol to install PreQual and its dependencies.

Protocol 3.1: Core Installation via Conda

- Download Miniconda: From the official repository, install Miniconda3 for your OS.

- Create a Dedicated Conda Environment:

- Install Core Neuroimaging Tools:

  - FSL: Install following the official FSL documentation. Ensure $FSLDIR is set.
  - MRtrix3: Install via conda: conda install -c mrtrix3 mrtrix3
  - ANTs: Available via conda: conda install -c ants ants

- Install PreQual:

- Verify Installation: Run prequal --help to confirm successful installation.

Protocol 3.2: Installation via Docker (Recommended for Reproducibility)

Pull the PreQual Docker Image:

Test Run:

Data Preparation Protocol

Proper organization of input data is essential. PreQual accepts data in the BIDS (Brain Imaging Data Structure) format or a simple directory structure.

Protocol 4.1: Organizing DICOM to NIfTI Conversion

- Source Data: Acquired multi-shell dMRI DICOMs and corresponding b-value/b-vector files.

- Conversion Tool: Use dcm2niix.

- Procedure: Run dcm2niix with the following options:

  - -b y: Writes a BIDS JSON sidecar. The .bval and .bvec files are written automatically for diffusion series.
  - -z y: Compresses output to .nii.gz.

- Output Check: Ensure you have:

  - sub-01_dwi.nii.gz (4D diffusion-weighted images)
  - sub-01_dwi.bval (b-values)
  - sub-01_dwi.bvec (b-vectors, FSL format)

Table 2: Required NIfTI Data Structure

| File Type | Naming Convention | Mandatory? | Description |
|---|---|---|---|
| Diffusion Images | *_dwi.nii.gz | Yes | 4D volume file. |
| b-values | *_dwi.bval | Yes | Text file, one row. |
| b-vectors | *_dwi.bvec | Yes | Text file, 3 rows (FSL format). |
| Anatomical (T1w) | *_T1w.nii.gz | No, but recommended | For improved registration and tissue segmentation. |
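Before running the pipeline, the mandatory files in Table 2 can be checked with a short script. The sketch below is an illustration only; the function names are invented for this example, and it assumes the FSL text layout described above (one row of b-values, three rows of b-vector components).

```python
from pathlib import Path


def read_bvals(path):
    """Read an FSL-style .bval file: whitespace-separated b-values."""
    return [float(v) for v in Path(path).read_text().split()]


def read_bvecs(path):
    """Read an FSL-style .bvec file: three rows (x, y, z), one column per volume."""
    lines = Path(path).read_text().splitlines()
    return [[float(v) for v in line.split()] for line in lines if line.strip()]


def check_dwi_inputs(folder, prefix):
    """Return a list of problems for <prefix>_dwi.{nii.gz,bval,bvec}; empty if none."""
    folder = Path(folder)
    paths = {ext: folder / f"{prefix}_dwi.{ext}" for ext in ("nii.gz", "bval", "bvec")}
    problems = [f"missing {p.name}" for p in paths.values() if not p.is_file()]
    if problems:
        return problems
    bvals = read_bvals(paths["bval"])
    bvecs = read_bvecs(paths["bvec"])
    if len(bvecs) != 3:
        problems.append(f"bvec has {len(bvecs)} rows, expected 3")
    elif any(len(row) != len(bvals) for row in bvecs):
        problems.append("bvec columns do not match the number of b-values")
    return problems
```

For example, check_dwi_inputs("/path/to/data/sub-01/dwi", "sub-01") returns an empty list when all three files exist and the gradient tables agree in length. It does not open the NIfTI file, so it cannot confirm that the number of volumes matches the number of b-values.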

Protocol 4.2: Preparing a BIDS Dataset

- Directory Structure:

- Validate Dataset: Use the BIDS Validator (bids-validator) to ensure compliance.

Execution and Basic QA Workflow

Protocol 5.1: Running PreQual on a Sample Dataset

- Navigate to Data Directory.

Basic Command (Non-BIDS):

Interpret Output: Key QA metrics are generated in the PreQual output folder, including visual reports (*.html/*.png) and quantitative tables (*.csv).

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software & Data "Reagents"

| Item | Category | Function in Experiment |

|---|---|---|

| PreQual Pipeline | Software Tool | Primary application for automated dMRI preprocessing and QA. |

| FSL (FMRIB Software Library) | Dependency | Provides eddy for eddy current correction and bet for brain extraction. |

| MRtrix3 | Dependency | Used for advanced diffusion image processing and denoising. |

| ANTs (Advanced Normalization Tools) | Dependency | Provides superior image registration capabilities. |

| dcm2niix | Data Conversion Tool | Converts raw DICOM data to the required NIfTI format. |

| BIDS Validator | Data Standardization Tool | Ensures input data adheres to the BIDS standard for interoperability. |

| Docker/Singularity | Containerization Platform | Ensures computational reproducibility across different laboratory environments. |

| Human Phantom Data | Reference Standard | Used for validating pipeline performance and establishing QA baselines. |

Visual Workflow

PreQual Installation and Data Setup Workflow

PreQual Software Installation Steps

This document provides detailed application notes for executing the PreQual pipeline, a robust tool for automated preprocessing and quality assessment (QA) of Diffusion Tensor Imaging (DTI) data. Within the broader thesis on optimizing neuroimaging workflows for pharmaceutical research, these protocols ensure reproducible, high-quality DTI data preparation, which is critical for downstream analysis in clinical trials and biomarker discovery.

The Scientist's Toolkit: Essential Research Reagents & Materials

| Item | Function in PreQual/DTI Research |

|---|---|

| PreQual Software Suite | Core pipeline for automated DTI preprocessing (denoising, eddy-current/motion correction, tensor fitting) and QA. |

| FSL (FMRIB Software Library) | Provides underlying tools (e.g., eddy, bet) for core image registration, correction, and brain extraction. |

| MRtrix3 | Used for advanced denoising (MP-PCA) and Gibbs ringing artifact removal within the pipeline. |

| DTI Diffusion Phantoms | Physical calibration objects with known diffusion properties to validate scanner performance and pipeline accuracy. |

| High-Angular Resolution Diffusion Imaging (HARDI) Dataset | A standard, publicly available dataset (e.g., from HCP) for protocol validation and benchmarking. |

| BIDS (Brain Imaging Data Structure) Validator | Ensures input data is organized according to the community standard, facilitating interoperability. |

| Compute Canada/Cloud HPC Account | Access to high-performance computing resources for processing large, multi-site clinical trial datasets. |

Command-Line Execution: A Step-by-Step Protocol

Prerequisite Environment Setup

Objective: Establish a consistent software environment. Protocol:

- Install PreQual via Docker or Singularity for containerized, reproducible execution.

- Verify installation of key dependencies through the container.

Basic Execution with a Configuration File

Objective: Run the full PreQual pipeline on a single subject. Protocol:

- Organize input data in BIDS format.

- Create a configuration file (config.json). See Section 4 for details.

- Execute the pipeline from the terminal.

Batch Processing for Multi-Subject Studies

Objective: Efficiently process a cohort from a clinical trial. Protocol:

- Prepare a participant list (participant_list.txt).

- Utilize a shell loop or a job array on an HPC scheduler (e.g., SLURM).
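The batch step can be sketched in Python as below. The helper names are invented for this illustration, the participant list is assumed to hold one subject ID per line, and the command template is supplied by the caller because the exact per-subject PreQual invocation is not specified here. SLURM_ARRAY_TASK_ID is the environment variable that SLURM sets for each task of a job array.

```python
import os
import shlex
from pathlib import Path


def read_participants(list_path):
    """Return subject IDs from a text file, one per line; blanks and '#' lines skipped."""
    lines = Path(list_path).read_text().splitlines()
    return [ln.strip() for ln in lines if ln.strip() and not ln.lstrip().startswith("#")]


def build_commands(participants, template):
    """Fill a command template containing '{subject}' once per participant."""
    return [template.format(subject=shlex.quote(s)) for s in participants]


def subject_for_task(participants, task_id=None):
    """Pick the subject for one job-array task (0-based index).

    When task_id is omitted, the index is read from SLURM_ARRAY_TASK_ID.
    """
    if task_id is None:
        task_id = int(os.environ["SLURM_ARRAY_TASK_ID"])
    return participants[task_id]
```

For a simple loop, build_commands(read_participants("participant_list.txt"), template) yields one shell command per subject, where template might look like "singularity run PreQual.sif --bids_dir /path/to/data --participant_label {subject}"; the --participant_label flag is a hypothetical placeholder, not a confirmed option. For a job array submitted with indices 0 to N-1, each task calls subject_for_task to find its own subject.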

Configuration File Parameters and Optimization

The config.json file controls pipeline behavior. Key parameters for researchers are summarized below.

Table 1: Core PreQual Configuration Parameters for DTI QA Research

| Parameter Group | Key Option | Default Value | Recommended Research Setting | Purpose & Impact on QA |
|---|---|---|---|---|
| Input/Output | "bids_dir" | N/A (CLI arg) | N/A | Path to BIDS dataset. Must be validated. |
| Preprocessing | "do_denoising" | true | true | Enables MP-PCA denoising via MRtrix3. Critical for SNR improvement. |
| Preprocessing | "do_degibbs" | true | true | Removes Gibbs ringing artifacts. Reduces spurious anisotropy. |
| Preprocessing | "do_eddy" | true | true | Enables FSL eddy for motion/eddy correction. Essential for clinical data. |
| Quality Assessment | "calc_metrics" | true | true | Generates key QA metrics (CNR, SNR, motion). Do not disable. |
| Quality Assessment | "generate_reports" | true | true | Creates HTML/PDF visual reports for manual inspection. |
| Performance | "n_threads" | All available | 8 (adjust per node) | Number of CPU threads. Optimizes processing time for large studies. |
| Advanced | "bet_f_value" | 0.3 | 0.2 (for pediatric/atrophied brain) | Brain extraction threshold. Adjust based on population. |
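A configuration file with the options from Table 1 can be generated programmatically, which helps keep settings identical across a study. The sketch below is illustrative: the option names and values are copied from the table, have not been checked against any released PreQual version, and the helper names are invented for this example.

```python
import json

# Option names and values are taken from Table 1 of this guide; treat them as
# assumptions until confirmed against the pipeline's own documentation.
RESEARCH_CONFIG = {
    "do_denoising": True,
    "do_degibbs": True,
    "do_eddy": True,
    "calc_metrics": True,
    "generate_reports": True,
    "n_threads": 8,
    "bet_f_value": 0.3,
}


def make_config(overrides=None):
    """Return a copy of the base settings with overrides applied.

    Unknown option names raise KeyError so that typos are caught early.
    """
    overrides = dict(overrides or {})
    unknown = sorted(set(overrides) - set(RESEARCH_CONFIG))
    if unknown:
        raise KeyError(f"unknown option(s): {', '.join(unknown)}")
    config = dict(RESEARCH_CONFIG)
    config.update(overrides)
    return config


def write_config(path, config):
    """Write the settings as indented, key-sorted JSON for stable diffs."""
    with open(path, "w") as handle:
        json.dump(config, handle, indent=2, sort_keys=True)
        handle.write("\n")
```

For a pediatric cohort, write_config("config.json", make_config({"bet_f_value": 0.2})) would apply the adjusted brain extraction threshold while leaving every other setting at the value listed in the table.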

Experimental Protocol: Validating Pipeline Output for a Drug Trial

Title: Protocol for Benchmarking PreQual Output Against a Gold-Standard Manual QA Process.

Objective: To quantify the sensitivity and specificity of PreQual's automated QA flags compared to expert manual rating, establishing its validity for pivotal trial data screening.

Materials:

- PreQual software (vX.Y.Z)

- DTI dataset from a Phase II neurodegenerative disease trial (n=100 subjects, 2 timepoints).

- Expert neuroradiologist's manual QA ratings (binary Pass/Fail per scan).

Methodology:

- Processing: Run all trial scans through PreQual using the optimized config.json (Table 1).

- Automated Flag Extraction: Extract the pipeline's final "qc_score" and "exclusion_reason" from the generated *_prequal_results.json file for each scan.

- Blinded Comparison: A statistician, blinded to the manual ratings, codes PreQual output as "Auto-Pass" (qc_score == 'pass') or "Auto-Fail" (qc_score == 'fail').

- Statistical Analysis:

- Create a 2x2 contingency table comparing Auto-Pass/Fail vs. Manual-Pass/Fail.

- Calculate Sensitivity: Proportion of manually failed scans correctly flagged by PreQual.

- Calculate Specificity: Proportion of manually passed scans correctly passed by PreQual.

- Calculate Cohen's Kappa (κ) statistic to measure agreement beyond chance.

Table 2: Example Results of PreQual vs. Manual QA Validation (Hypothetical Data)

| QA Method | Manual Fail | Manual Pass | Total |
|---|---|---|---|
| PreQual Fail | 18 (True Positive) | 7 (False Positive) | 25 |
| PreQual Pass | 2 (False Negative) | 73 (True Negative) | 75 |
| Total | 20 | 80 | 100 |

| Metric | Formula | Result | Interpretation |
|---|---|---|---|
| Sensitivity | TP/(TP+FN) | 18/20 = 0.90 | Excellent catch rate for flawed data. |
| Specificity | TN/(TN+FP) | 73/80 = 0.91 | Low false-positive rate preserves statistical power. |
| Cohen's Kappa (κ) | (Observed - Expected)/(1 - Expected) | (0.91 - 0.65)/(1 - 0.65) = 0.74 | Substantial agreement with experts. |
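The agreement statistics follow directly from the four cells of the contingency table. The sketch below (function name chosen for this example) computes them from the counts; expected agreement is the chance agreement implied by the row and column totals.

```python
def agreement_metrics(tp, fp, fn, tn):
    """Sensitivity, specificity and Cohen's kappa from a 2x2 contingency table.

    tp: failed by both; fp: failed by PreQual only;
    fn: failed by the manual rater only; tn: passed by both.
    """
    total = tp + fp + fn + tn
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    observed = (tp + tn) / total
    # Chance agreement: product of marginal "fail" rates plus product of "pass" rates.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total**2
    kappa = (observed - expected) / (1 - expected)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "observed": observed,
        "expected": expected,
        "kappa": kappa,
    }
```

With the hypothetical counts above, agreement_metrics(18, 7, 2, 73) gives an observed agreement of 0.91, an expected agreement of 0.65, and κ ≈ 0.74.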

Visual Workflows

Diagram 1: PreQual Pipeline Core Workflow

Diagram 2: Pipeline Software Execution Logic

Within the thesis research on the PreQual pipeline for DTI preprocessing and Quality Assurance (QA), the anatomical processing stream forms the critical foundation for all subsequent diffusion tensor imaging analysis. Robust brain extraction, precise tissue segmentation, and accurate alignment to standard space are prerequisites for deriving valid quantitative diffusion metrics (e.g., FA, MD) and for performing tractography. This protocol details the application notes for these three core anatomical steps as implemented and validated within the PreQual framework, which emphasizes automated, containerized processing with integrated QA.

Application Notes & Protocols

Brain Extraction (Skull Stripping)

Objective: To remove non-brain tissue (skull, scalp, meninges) from T1-weighted anatomical images, creating a binary brain mask.

Protocol (Using ANTs antsBrainExtraction.sh within PreQual):

- Input: High-resolution 3D T1-weighted anatomical scan (e.g., MPRAGE, SPGR) in NIfTI format.

- Template Preparation: The protocol uses the OASIS template (MNI152NLin2009cAsym from ants_scripts_data) as a prior. The template consists of a T1 image (T_template0.nii.gz) and a corresponding brain probability mask (T_template0_BrainCerebellumProbabilityMask.nii.gz).

- Execution Command:

- Outputs:

  - output_prefix_BrainExtractionBrain.nii.gz: Extracted brain image.
  - output_prefix_BrainExtractionMask.nii.gz: Binary brain mask.
  - output_prefix_BrainExtractionPrior0GenericAffine.mat: Initial transform to template.

- QA in PreQual: The pipeline automatically generates a montage overlay of the original T1 with the extracted brain mask boundary, allowing for visual inspection of stripping accuracy at the crown and cerebellum.

Tissue Segmentation

Objective: To classify voxels of the skull-stripped brain into Cerebrospinal Fluid (CSF), Gray Matter (GM), and White Matter (WM) probabilistic tissues.

Protocol (Using FSL FAST within PreQual):

- Input: The brain-extracted T1 image from Section 2.1.

- Preprocessing: The input image is bias-field corrected (using N4BiasFieldCorrection from ANTs) to address intensity inhomogeneities that would impair segmentation.

- Segmentation Execution:

- Outputs:

  - output_prefix_prob_0.nii.gz: CSF probability map.
  - output_prefix_prob_1.nii.gz: GM probability map.
  - output_prefix_prob_2.nii.gz: WM probability map.
  - output_prefix_seg.nii.gz: Hard segmentation (voxel labeled as class with highest probability).

- QA in PreQual: Generates a composite figure showing the original brain image alongside the three probability maps and the hard segmentation. Quantitative summary statistics (total volume per tissue class) are logged for cohort-level review.

Alignment (Spatial Normalization)

Objective: To non-linearly warp the individual's native T1 image to a standard template space (e.g., MNI152), enabling inter-subject analysis and use of atlases.

Protocol (Using ANTs antsRegistrationSyN.sh within PreQual):

- Input:

  - Moving Image: The native, brain-extracted T1 image.
  - Fixed Image: The standard template (e.g., MNI152NLin2009cAsym_T1_1mm.nii.gz).

- Execution Command:

- Transform Application: To warp the subject's DTI data (e.g., FA map) to template space:

- Outputs:

  - output_prefixWarped.nii.gz: The subject's T1 warped to template space.
  - output_prefix0GenericAffine.mat: Affine transformation matrix.
  - output_prefix1Warp.nii.gz: Non-linear deformation field.
  - output_prefix1InverseWarp.nii.gz: Inverse deformation field.

- QA in PreQual: Generates a "checkerboard" overlay between the warped subject brain and the template, and calculates normalized mutual information (NMI) and Dice overlap of major brain regions (using a template atlas) to quantify registration success.

Table 1: Typical Performance Metrics for Anatomical Processing Steps

| Processing Step | Tool/Method | Key Metric | Typical Target Value (in healthy adult brains) | QA Output in PreQual |

|---|---|---|---|---|

| Brain Extraction | ANTs antsBrainExtraction.sh | Dice Similarity vs. Manual Mask | >0.97 | Visual boundary overlay; extraction failure flag if brain volume is more than 3 SD from the cohort mean. |

| Tissue Segmentation | FSL FAST | Total Intra-Cranial Volume (TIV) | Cohort-specific | Tissue volume summary (CSF, GM, WM in cm³); Probability map overlays. |

| Alignment | ANTs SyN Registration | Normalized Mutual Info (NMI) | >0.80 | Checkerboard overlay; Dice of template ROIs (e.g., >0.85 for ventricles, >0.7 for cortical structures). |
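Two of the quantitative checks in Table 1, Dice overlap and the cohort-based volume flag, are simple enough to illustrate directly. The sketch below uses plain Python on flattened binary masks to stay dependency-free; a real implementation would more likely operate on image arrays. The function names are invented for this example.

```python
from statistics import mean, stdev


def dice(mask_a, mask_b):
    """Dice similarity of two equal-length flat sequences of 0/1 voxel labels."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    overlap = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    size = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    # Two empty masks are treated as a perfect match.
    return 2.0 * overlap / size if size else 1.0


def volume_outliers(volumes, n_sd=3.0):
    """Return subject IDs whose volume lies more than n_sd SDs from the cohort mean.

    volumes maps subject ID -> brain volume; at least two subjects are required.
    """
    values = list(volumes.values())
    centre, spread = mean(values), stdev(values)
    if spread == 0:
        return []
    return [sid for sid, vol in volumes.items() if abs(vol - centre) > n_sd * spread]
```

Note that with the sample standard deviation a 3 SD rule cannot flag anything in very small cohorts (fewer than about a dozen subjects), because a single extreme value inflates the SD itself. A robust alternative such as the median absolute deviation may be preferable there.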

Visualizations

Workflow Diagram

- Diagram Title: Anatomical Processing Stream with Integrated QA

Logical Relationship to PreQual DTI Pipeline

- Diagram Title: Role of Anatomical Stream in PreQual DTI Pipeline

The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions for Anatomical Processing

| Item | Function in Protocol | Example/Note |

|---|---|---|

| High-Quality T1-Weighted MRI Data | Primary input for all anatomical processing. | 3D MPRAGE/SPGR, 1mm isotropic resolution recommended. Stored in NIfTI format. |

| Standard Template & Atlas | Target space for alignment; provides spatial priors for extraction and segmentation. | MNI152 (2009c non-linear asymmetric) from ANTs or FSL. Includes T1 image and tissue probability maps. |

| Brain Extraction Algorithm | Removes non-brain tissue to isolate the region of interest. | ANTs antsBrainExtraction.sh (used here), FSL BET, or HD-BET for deep learning. |

| Tissue Segmentation Tool | Classifies brain voxels into tissue types (CSF, GM, WM). | FSL FAST (used here), SPM12 Unified Segmentation, or ANTs Atropos. |

| Non-linear Registration Suite | Computes high-dimensional warp to align individual brains to a common template. | ANTs SyN (used here) or FNIRT (FSL). Critical for group analysis. |

| Containerization Platform | Ensures reproducibility and dependency management across compute environments. | Docker or Singularity container encapsulating PreQual with all tools (ANTs, FSL). |

| Quality Assessment (QA) Visualizer | Generates standardized visual reports for each processing step. | Custom PreQual module generating PNG montages (e.g., boundary overlays, checkerboards). |

| Quantitative Metrics Calculator | Computes objective scores (Dice, NMI, volumes) to flag potential failures. | Integrated Python/fslmaths scripts within PreQual pipeline. |

Application Notes

Within the PreQual pipeline for Diffusion Tensor Imaging (DTI) preprocessing and Quality Assurance (QA) research, establishing a robust and automated diffusion processing stream is paramount for ensuring data integrity in longitudinal and multi-site studies, particularly in clinical drug development. This stream addresses key artifacts that confound accurate estimation of diffusion-derived biomarkers. Denoising improves the signal-to-noise ratio (SNR), enabling more reliable tensor fitting. Eddy-current and motion correction compensates for distortions and subject movement, which are major sources of variance and misalignment. B1 field unwarping corrects intensity inhomogeneities caused by non-uniform radiofrequency excitation, ensuring quantitative accuracy across the brain. Implementing this stream as part of PreQual's standardized QA framework allows researchers to generate consistent, high-fidelity DTI data essential for detecting subtle treatment effects.

Protocols & Methodologies

Denoising Protocol: Patch-Based Principal Component Analysis (PCA)

Objective: To remove random noise from diffusion-weighted images (DWIs) while preserving anatomical detail.

Workflow:

- Input: Raw DWI series (N volumes, including b=0 s/mm² images).

- Patch Extraction: For each voxel, extract a small 3D patch (e.g., 5x5x5). Build a matrix from similar patches across the image.

- PCA Thresholding: Perform PCA on the patch matrix. Separate signal (represented by principal components with large eigenvalues) from noise (components with small eigenvalues). Apply a hard or soft threshold to the eigenvalues associated with noise.

- Patch Reconstruction: Reconstruct the denoised patches from the thresholded PCA components and aggregate them back to form the denoised image, using a non-local means approach to handle overlapping patches.

- Output: Denoised DWI series.

Common Tool: dwidenoise from MRtrix3 or Dipy's patch-based denoising.

Key Parameters:

- Patch size.

- Thresholding method (e.g., Marchenko-Pastur).

- Number of principal components.

Eddy-Current & Motion Correction Protocol

Objective: To correct for distortions from eddy currents induced by diffusion gradients and for subject head motion.

Workflow:

- Input: Denoised DWI series.

- Reference Image: Select a high-quality b=0 volume as the target for registration.

- Simultaneous Correction: Employ a dual transformation model. A rigid-body transformation accounts for subject motion. A quadratic or affine transformation models the eddy-current-induced distortions, which are often slice- and axis-specific.

- Registration: Register all DWIs to the reference b=0 using a cost function (e.g., mutual information) that is robust to contrast changes caused by diffusion weighting.

- Output: Corrected DWI series aligned in the subject's anatomical space.

Common Tool: FSL's eddy (recommended), which also models and replaces outliers.

Key Parameters:

- Registration model (e.g., eddy_currents_and_movement in eddy).

- Interpolation method.

- Outlier replacement thresholds.

B1 Field Unwarping (Bias Field Correction) Protocol

Objective: To correct smooth, low-frequency intensity inhomogeneities across the image (bias field).

Workflow:

- Input: Motion- and eddy-corrected DWI series. A corresponding anatomical T1-weighted image is highly beneficial.

- Bias Field Estimation:

- Use the averaged b=0 images or all DWIs to estimate the bias field.

- Employ a method like N4ITK, which models the bias field as a smooth multiplicative field and iteratively optimizes its parameters.

- Application: Apply the multiplicative correction field to all DWIs to produce uniformly intensity-scaled images.

- Output: Bias-corrected DWI series.

- QA Step: Generate a report showing the bias field and intensity histograms before/after correction.

Common Tool: N4BiasFieldCorrection (from ANTs) or dwibiascorrect in MRtrix3 (which uses ANTs or FSL's fast).

Key Parameters:

- Convergence thresholds.

- Spline distance for field modeling.

- Number of iterations.

Table 1: Impact of Preprocessing Steps on Key DTI Metrics (Hypothetical Cohort Data)

| Processing Stage | Mean FA (ROI: Corpus Callosum) | Standard Deviation of FA | Mean MD (x10⁻³ mm²/s) | SNR (in WM) | Visual QA Rating (1-5) |

|---|---|---|---|---|---|

| Raw Data | 0.68 | 0.12 | 0.78 | 18 | 2 |

| After Denoising | 0.69 | 0.08 | 0.77 | 28 | 3 |

| + Eddy/Motion Corr. | 0.71 | 0.05 | 0.76 | 28 | 4 |

| + B1 Unwarping | 0.71 | 0.04 | 0.75 | 29 | 5 |

Table 2: Recommended Software Tools & Key Parameters for PreQual Integration

| Step | Primary Tool (Version) | Critical Parameters for PreQual Defaults | Expected Runtime per Subject |
|---|---|---|---|
| Denoising | MRtrix3 dwidenoise | -noise noise_map.nii.gz | ~5 minutes |
| Eddy/Motion Corr. | FSL eddy (v10.0+) | --repol (outlier replacement), --data_is_shelled | ~15-30 minutes |
| B1 Unwarping | ANTs N4BiasFieldCorrection | -s 3 (shrink factor), -c [200x200x200] (convergence) | ~10 minutes |

Diagrams

Title: DTI Preprocessing Stream in PreQual Pipeline

Title: Eddy Correction with Outlier Rejection

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software & Data Resources for DTI Preprocessing Research

| Item | Function in Research | Example/Note |

|---|---|---|

| PreQual Pipeline | Centralized framework for orchestrating and QA-checking all preprocessing steps. | Integrates calls to tools below; generates holistic HTML reports. |

| FSL (FMRIB Software Library) | Provides eddy, the industry-standard tool for combined eddy-current and motion correction. | Critical for modeling and replacing outlier slices (--repol). |

| MRtrix3 | Offers state-of-the-art dwidenoise (MP-PCA) and dwibiascorrect utilities. | Denoising is computationally efficient and preserves edges. |

| ANTs (Advanced Normalization Tools) | Contains the N4 algorithm for B1 bias field correction. | Often used via MRtrix3 wrapper; superior for strong field inhomogeneity. |

| Dipy (Diffusion Imaging in Python) | Python library offering alternative denoising and correction methods; ideal for prototyping. | Useful for implementing custom QA metric calculations. |

| Human Phantom DTI Data | Standardized dataset with known ground-truth properties for pipeline validation. | Essential for benchmarking PreQual's performance across sites/scanners. |

| Synthetic Lesion/Disease Models | Digital phantoms simulating pathology to test robustness of preprocessing streams. | Validates that corrections do not artificially alter lesion contrast. |

Within the PreQual pipeline for Diffusion Tensor Imaging (DTI) preprocessing and Quality Assurance (QA) research, the accurate derivation of tensor-based scalar metrics is a critical step for downstream neuroimaging analysis. PreQual ensures robust preprocessing—correcting for artifacts, eddy currents, and motion—to yield a clean diffusion-weighted dataset. This Application Note details the subsequent, essential procedures of tensor model fitting and the generation of Fractional Anisotropy (FA), Mean Diffusivity (MD), Axial Diffusivity (AD), and Radial Diffusivity (RD) maps. These metrics are indispensable for researchers, scientists, and drug development professionals studying white matter microstructure in health, disease, and treatment response.

Theoretical Foundation & Tensor Fitting

The diffusion tensor model, D, is a 3x3 symmetric, positive-definite matrix that describes the magnitude and directionality of water diffusion in each voxel. It is fitted from multi-directional diffusion-weighted images (DWIs) using a linear least-squares approach, solving the Stejskal-Tanner equation:

$S_k = S_0 \exp\left(-b\, g_k^{T} D\, g_k\right)$

Where:

- $S_k$: Signal intensity for diffusion direction $k$.

- $S_0$: Non-diffusion-weighted (b=0) signal.

- $b$: The b-value (diffusion weighting factor).

- $g_k$: Unit vector of the diffusion-sensitizing gradient for direction $k$.

- $D$: The diffusion tensor.

Table 1: Core Scalar Metrics Derived from the Diffusion Tensor

| Metric | Full Name | Mathematical Definition (from eigenvalues λ1≥λ2≥λ3) | Biological Interpretation | Typical Value Range in Healthy White Matter |
|---|---|---|---|---|
| FA | Fractional Anisotropy | $\sqrt{\frac{3}{2}} \cdot \frac{\sqrt{(\lambda_1-\hat{\lambda})^2+(\lambda_2-\hat{\lambda})^2+(\lambda_3-\hat{\lambda})^2}}{\sqrt{\lambda_1^2+\lambda_2^2+\lambda_3^2}}$, where $\hat{\lambda}$ is the mean of the three eigenvalues (MD) | Degree of directional restriction; white matter integrity. | 0.2 - 0.9 |
| MD | Mean Diffusivity | $(\lambda_1 + \lambda_2 + \lambda_3) / 3$ | Average magnitude of water diffusion; cellular density/edema. | ~0.7 x 10⁻³ mm²/s |
| AD | Axial Diffusivity | $\lambda_1$ | Diffusion parallel to primary axon direction; axonal integrity. | ~1.5 x 10⁻³ mm²/s |
| RD | Radial Diffusivity | $(\lambda_2 + \lambda_3) / 2$ | Diffusion perpendicular to axons; myelination status. | ~0.5 x 10⁻³ mm²/s |
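The definitions in Table 1 translate into a few lines of code. The sketch below (function name chosen for this example) computes all four metrics for a single voxel from its three eigenvalues; it is a worked illustration of the formulas rather than the routine used by any particular package.

```python
import math


def tensor_scalars(l1, l2, l3):
    """Return FA, MD, AD and RD from the three tensor eigenvalues (any order)."""
    # Sort so that l1 >= l2 >= l3, matching the convention in the table.
    l1, l2, l3 = sorted((l1, l2, l3), reverse=True)
    md = (l1 + l2 + l3) / 3.0
    numerator = (l1 - md) ** 2 + (l2 - md) ** 2 + (l3 - md) ** 2
    denominator = l1 ** 2 + l2 ** 2 + l3 ** 2
    # An all-zero tensor (e.g., background) is assigned FA = 0.
    fa = math.sqrt(1.5 * numerator / denominator) if denominator > 0 else 0.0
    return {"FA": fa, "MD": md, "AD": l1, "RD": (l2 + l3) / 2.0}
```

For eigenvalues of 1.5, 0.5 and 0.5 (x 10⁻³ mm²/s), this gives MD ≈ 0.83, AD = 1.5, RD = 0.5 (same units) and FA ≈ 0.60. Equal eigenvalues give FA = 0, and a tensor with a single non-zero eigenvalue gives FA = 1, which are the two limits of the index.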

Experimental Protocols

Protocol A: DTI Data Acquisition for Tensor Fitting

- Objective: Acquire diffusion-weighted data suitable for robust tensor estimation.

- Prerequisite: Data preprocessed through PreQual pipeline (denoising, Gibbs-ringing removal, eddy-current/motion correction, B1 field inhomogeneity correction, and robust brain masking).

- Materials: 3T MRI Scanner, 32-channel head coil, DTI sequence.

- Procedure:

- Acquire at least one b=0 s/mm² (non-diffusion-weighted) volume.

- Acquire diffusion-weighted volumes with a b-value of 700-1000 s/mm² (clinical) or 1000-3000 s/mm² (research).

- Use a minimum of 30 non-collinear diffusion encoding directions to ensure robust tensor estimation. 60+ directions are preferred for higher accuracy.

- Recommended sequence: Single-shot spin-echo echo-planar imaging (SS-SE-EPI).

- Key parameters: TR/TE ~8000/80ms, matrix=128x128, slice thickness=2-2.5mm, FOV=256mm.

- Total scan time: Typically 8-12 minutes.

Protocol B: Tensor Fitting and Metric Calculation (FSL DTIFIT)

- Objective: Fit the diffusion tensor and compute FA, MD, AD, RD maps.

- Input: PreQual-processed DWI data (data.nii.gz), corresponding b-vectors and b-values (bvecs, bvals), and binary brain mask (nodif_brain_mask.nii.gz).

- Software: FSL (FMRIB Software Library v6.0+).

- Procedure:

  - Data Check: Ensure b-vectors are rotated appropriately if using PreQual's eddy for motion correction.
  - Command Execution:
  - Output Files (for the output prefix dti):
    - dti_FA.nii.gz: Fractional Anisotropy map.
    - dti_MD.nii.gz: Mean Diffusivity map.
    - dti_L1.nii.gz: Axial Diffusivity map (FSL names the first eigenvalue L1).
    - dti_L2.nii.gz, dti_L3.nii.gz: Second and third eigenvalues. dtifit does not write a Radial Diffusivity map itself; RD is computed afterwards as (L2+L3)/2.
    - dti_V1.nii.gz: Primary eigenvector (color-coded direction map).
    - dti_tensor.nii.gz: The full tensor elements (written only when the --save_tensor option is given).
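As a sketch of the fitting step, the helper functions below assemble the dtifit call and the follow-up fslmaths call that derives RD. They only build argument lists; running them requires an FSL installation. The -k, -o, -m, -r and -b options are the standard dtifit inputs, while the function names and the default prefix are choices made for this example.

```python
def dtifit_command(data, mask, bvecs, bvals, out_prefix="dti"):
    """Build the argument list for FSL dtifit.

    Pass the result to subprocess.run(cmd, check=True) on a system with FSL.
    """
    return [
        "dtifit",
        "-k", str(data),        # 4D diffusion-weighted data
        "-o", str(out_prefix),  # prefix for all output maps
        "-m", str(mask),        # binary brain mask
        "-r", str(bvecs),       # b-vectors (rotated if eddy was applied)
        "-b", str(bvals),       # b-values
    ]


def rd_command(out_prefix="dti"):
    """Build the fslmaths call that averages L2 and L3 into a radial diffusivity map."""
    return [
        "fslmaths", f"{out_prefix}_L2",
        "-add", f"{out_prefix}_L3",
        "-div", "2",
        f"{out_prefix}_RD",
    ]
```

With the inputs named in this protocol, dtifit_command("data.nii.gz", "nodif_brain_mask.nii.gz", "bvecs", "bvals") corresponds to the shell command dtifit -k data.nii.gz -o dti -m nodif_brain_mask.nii.gz -r bvecs -b bvals, and rd_command() then produces dti_RD from the eigenvalue maps.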

Protocol C: Quality Assessment of Derived Metric Maps

- Objective: Visually and quantitatively inspect FA/MD/AD/RD maps for artifacts and plausibility.

- Procedure:

  - Visual Inspection (FSLeyes):

    - Load FA map overlaid on T1 or b=0 image.
    - Check for: Geometric distortion mismatches, "patchy" noise in white matter (indicates poor tensor fit), and anatomically plausible values (e.g., high FA in corpus callosum).

  - Histogram Analysis:

    - Generate whole-brain histograms for each metric within the brain mask.
    - Check for: Unimodal distribution for MD, AD, RD; expected positive skew for FA.

  - Summary Statistics:

    - Calculate mean, standard deviation, and range within major white matter tracts (using an atlas) to compare against normative database values.

Visual Workflow

Diagram Title: Workflow for DTI Tensor Fitting and Metric Calculation

The Scientist's Toolkit

Table 2: Essential Research Reagent Solutions for DTI Analysis

| Item | Function/Description | Example Tools/Software |

|---|---|---|

| PreQual Pipeline | Automated, robust preprocessing for DTI data. Handles denoising, artifact correction, and QA. | https://github.com/MASILab/PreQual |

| Tensor Fitting Engine | Core software library to fit the diffusion tensor model to DWI data. | FSL's dtifit, DTI-TK, Dipy (Python) |

| Metric Calculation Library | Computes scalar indices (FA, MD, AD, RD) from tensor eigenvalues. | FSL, MRtrix3 tensor2metric, ANTs |

| Visualization Suite | For visual inspection and validation of derived metric maps. | FSLeyes, ITK-SNAP, MRtrix3 mrview |

| Statistical Analysis Package | For voxel-wise or tract-based analysis of metric maps. | FSL's Randomise, SPM, AFNI, R, Python (nilearn) |

| Normative Atlas Database | Reference values for comparison in healthy and disease populations. | UK Biobank, Human Connectome Project, ENIGMA-DTI |

The PreQual pipeline is a widely adopted, automated framework for the preprocessing and quality assessment (QA) of Diffusion Tensor Imaging (DTI) data. A core thesis in neuroimaging research posits that robust, automated QA is fundamental to ensuring the validity of downstream analyses, such as tractography and connectivity mapping, which are critical in both neuroscience research and clinical drug development for neurological disorders. This document details the application notes and protocols for interpreting the automated Quality Control (QC) reports generated by such pipelines, specifically focusing on their HTML and visual outputs. Mastery of these outputs allows researchers to efficiently identify systematic artifacts, subject-specific anomalies, and processing failures, thereby safeguarding data integrity.

Structure of the Automated QC Report

A typical PreQual-derived QC report is generated as an HTML document with embedded visualizations and quantitative summaries. The report is organized into logical sections.

Diagram: PreQual QC Report Dataflow

Key HTML Report Sections & Interpretation Protocols

The first page provides an at-a-glance overview of the processing batch.

Protocol for Interpretation:

- Check Overall Status Flags: Look for red "FAIL" or yellow "WARN" indicators next to subject IDs.

- Review Summary Table: Quickly scan key metrics against expected ranges (see Table 1).

Table 1: Key Dashboard Metrics and Interpretation

| Metric | Typical Range (Adult Human Brain) | Flag Condition | Potential Issue |

|---|---|---|---|

| Mean Relative Motion (mm) | < 1.5 mm | > 2.0 mm | Excessive subject movement; consider exclusion. |

| Max B-value Deviation | < 5% of nominal | > 10% | Gradient calibration error or severe distortion. |

| Signal-to-Noise Ratio (SNR) | > 20 | < 15 | Poor image quality; insufficient signal. |

| Number of Outlier Slices | < 5% of total slices | > 10% | Severe motion or artifact in specific volumes. |

| Brain Mask Coverage (%) | 98-100% of skull-stripped brain | < 95% | Inaccurate brain extraction impacting tensor fit. |
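The flag conditions in the table above can be applied programmatically. The following is a minimal sketch, not part of PreQual itself: the metric key names (`mean_motion_mm`, `snr`, and so on) and the function `flagged_metrics` are hypothetical, and only the thresholds are taken from the table. How a flagged metric maps to WARN versus FAIL is not specified here, so the sketch only reports which metrics met a flag condition.

```python
# Flag conditions transcribed from the dashboard table above.
# Key names are illustrative assumptions, not PreQual output fields.
FLAG_RULES = {
    "mean_motion_mm": (">", 2.0),
    "bvalue_deviation_pct": (">", 10.0),
    "snr": ("<", 15.0),
    "outlier_slices_pct": (">", 10.0),
    "mask_coverage_pct": ("<", 95.0),
}


def flagged_metrics(metrics):
    """Return the names of metrics that meet a flag condition.

    `metrics` maps metric names to numeric values; metrics that are
    absent from the mapping are skipped rather than flagged.
    """
    flagged = []
    for name, (op, threshold) in FLAG_RULES.items():
        if name not in metrics:
            continue
        value = metrics[name]
        if (op == ">" and value > threshold) or (op == "<" and value < threshold):
            flagged.append(name)
    return flagged
```

A subject with an empty result can be treated as passing the dashboard check; any non-empty result is a prompt to open that subject's visual diagnostics.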

Section 3.2: Per-Subject Visual Diagnostics

This section contains core visualization panels. The protocol for systematic review is critical.

Experimental Protocol for Visual QA:

- Anatomical Overlays: Inspect the Eddy-Corrected Mean B0 image overlaid with the brain mask. Action: Ensure the mask tightly follows brain contours without including skull or dura.

- Tensor-Derived Maps: Review Fractional Anisotropy (FA) and Mean Diffusivity (MD) maps. Action: Look for anatomically plausible contrast (white matter: high FA, low MD). Check for dark, speckled noise patterns or geometric distortions.

- Residual Artifact Plots: Examine the post-eddy residual plots. Action: Identify systematic patterns (stripes, rings) indicating incomplete correction, versus random noise indicating successful correction.

- Outlier Detection Images: Review slices marked as "outliers" by algorithms like fsl_motion_outliers. Action: Confirm the highlighted slice shows clear signal dropout or displacement compared to the reference.

Diagram: Visual QA Decision Pathway

Quantitative Data Tables and Trend Analysis

Reports often aggregate metrics across a study cohort in tabular form (e.g., CSV). The protocol involves importing this data into statistical or graphing software (e.g., R, Python) to identify population trends and outliers.

Protocol for Cohort-Level QA Analysis:

- Generate Descriptive Statistics: Calculate mean, standard deviation, and range for all key metrics in Table 1.

- Create Visualization: Plot distributions (histograms, boxplots) for each metric. Example: A boxplot of mean relative motion can reveal if one scanning site has systematically higher motion.

- Correlate Metrics: Assess correlations between QA metrics (e.g., motion vs. outlier count) using Pearson's r. This can confirm expected relationships and identify atypical subjects.
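The cohort-level steps above can be sketched with the Python standard library alone. This is an illustrative example under stated assumptions: the CSV column names (`mean_motion_mm`, `outlier_slices_pct`) and the helper names are hypothetical, and the three sample rows are simulated values, not real data. In practice pandas or R would typically be used for the same calculations and for plotting.

```python
import csv
import io
import math
import statistics


def load_column(csv_text, column):
    """Read one numeric column from CSV text into a list of floats."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [float(row[column]) for row in reader]


def summarize(values):
    """Descriptive statistics: mean, sample SD, and range."""
    return {
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
    }


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0.0 or sy == 0.0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


# Simulated cohort rows; column names are assumptions for illustration.
SAMPLE_CSV = """subject_id,mean_motion_mm,outlier_slices_pct
sub-001,0.8,1.2
sub-002,2.3,12.5
sub-003,1.1,2.8
"""

motion = load_column(SAMPLE_CSV, "mean_motion_mm")
outliers = load_column(SAMPLE_CSV, "outlier_slices_pct")
motion_summary = summarize(motion)
motion_vs_outliers = pearson_r(motion, outliers)
```

A strongly positive `motion_vs_outliers` is the expected relationship; a subject with many outlier slices but little motion would stand out as atypical and merit a closer look.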

Table 2: Example Cohort QA Summary (Simulated Data, n=50)

| Subject ID | Mean Motion (mm) | SNR | Outlier Slices (%) | Mask Coverage (%) | Status |

|---|---|---|---|---|---|

| MEAN (SD) | 1.2 (0.6) | 24.5 (4.2) | 3.1 (2.8) | 99.1 (0.7) | — |

| sub-001 | 0.8 | 28.1 | 1.2 | 99.5 | PASS |

| sub-002 | 2.3 | 19.8 | 12.5 | 98.9 | FLAG |

| sub-003 | 1.1 | 22.4 | 2.8 | 94.1 | FAIL |

| ... | ... | ... | ... | ... | ... |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for DTI QA & Preprocessing

| Item | Function/Description | Example Solution/Software |

|---|---|---|

| Preprocessing Pipeline | Automated framework for core DTI steps: eddy-current/motion correction, skull-stripping, tensor fitting. | PreQual, FSL's eddy and dtifit, QSIPrep, TORTOISE. |

| Quality Assessment Toolkit | Generates visual and quantitative metrics from processed data. | Fslqc (from PreQual), DTI-TK's dti_qc_tool, in-house Python/R scripts. |

| Visualization Suite | Software for rendering 2D slices, overlays, and 3D tractography. | FSLeyes, MRtrix3's mrview, 3D Slicer. |

| Statistical Environment | For aggregating cohort metrics, performing statistical tests, and creating publication-quality plots. | R (tidyverse, ggplot2), Python (pandas, seaborn, matplotlib). |

| Data Format Library | Tools to read/write neuroimaging-specific file formats. | NiBabel (Python), RNifti (R), FSL's fslhd and fslchfiletype utilities. |

| High-Performance Compute (HPC) Scheduler | Enables batch processing of large datasets on cluster infrastructure. | SLURM, Sun Grid Engine (SGE). |

Solving Common PreQual Pipeline Errors and Optimizing Performance for Large Datasets

Top 5 Common Runtime Errors and How to Resolve Them

Within the context of developing and implementing the PreQual pipeline for Diffusion Tensor Imaging (DTI) preprocessing and Quality Assurance (QA), runtime errors are frequent obstacles that disrupt automated analysis workflows. These errors can introduce significant delays in research timelines and compromise the reproducibility of results in neuroscience and drug development studies. This document details the five most common runtime errors encountered, their underlying causes within neuroimaging computation, and precise protocols for resolution.

Error 1: Memory Allocation Failure (Out-of-Memory)

This error occurs when a process requests more RAM than is available on the system. In DTI preprocessing, it is common during tensor fitting, tractography, or large batch processing of high-resolution datasets.

Table 1: Common Memory-Intensive Steps in PreQual/DTI Pipelines

| Pipeline Step | Typical Memory Demand | Primary Cause |

|---|---|---|

| Eddy Current Correction | 4-8 GB per subject | Simultaneous loading of all DWIs and b-matrices. |

| Tensor Fitting (OLS) | 2-4 GB per subject | Inversion of large design matrices for full brain voxels. |

| Probabilistic Tractography | 8-16+ GB per subject | Generation and storage of thousands of streamlines. |

| Population Averaging | Scales with cohort size | Loading multiple subject volumes into memory. |

Resolution Protocol:

- Diagnosis: Use system monitoring tools (top, htop, System Monitor) to confirm memory exhaustion.

- Immediate Action: Implement data chunking. Modify the script to process data in smaller spatial blocks (e.g., slices or parcels) or temporal batches.

- Code Optimization: Convert data types from 64-bit float (float64) to 32-bit float (float32) where precision loss is acceptable.

- Hardware/Configuration Solution: Increase system swap space temporarily. For long-term solutions, consider adding RAM or using high-performance computing (HPC) clusters with distributed memory.
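To make the chunking and data-type advice concrete, the sketch below estimates the in-memory size of a 4D DWI array at a given precision and yields slice ranges for block-wise processing. The function names and the example array shape are assumptions chosen for illustration; real memory use will also include working copies made by the processing tools.

```python
def estimate_memory_gb(shape, bytes_per_value):
    """Estimate the size in GiB of a dense array with the given shape.

    Use bytes_per_value=8 for float64 and 4 for float32.
    """
    n_values = 1
    for dim in shape:
        n_values *= dim
    return n_values * bytes_per_value / 1024 ** 3


def iter_slice_chunks(n_slices, chunk_size):
    """Yield (start, stop) index pairs covering n_slices in blocks."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    for start in range(0, n_slices, chunk_size):
        yield start, min(start + chunk_size, n_slices)


# Hypothetical acquisition: 128 x 128 x 64 voxels, 100 volumes.
example_shape = (128, 128, 64, 100)
size_float64 = estimate_memory_gb(example_shape, 8)
size_float32 = estimate_memory_gb(example_shape, 4)
```

For this assumed shape, switching from float64 to float32 halves the footprint, and processing 16 slices at a time would hold only a quarter of the volume in memory at once.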

Error 2: File Not Found or Incorrect Path

This pervasive error is caused by incorrect file paths, missing data, or inconsistent naming conventions between pipeline stages. It is especially critical in QA, where downstream steps expect specific outputs.

Resolution Protocol:

- Structured Input/Output (I/O) Schema: Implement a BIDS (Brain Imaging Data Structure) compliant directory structure, which enforces predictable file locations.

- Pre-flight Check Script: Develop and run a script at pipeline start to verify the existence and integrity of all required input files (e.g., NIfTI headers, bval, and bvec files).

- Use Absolute or Pipeline-Relative Paths: Define a single root directory variable at the start of the workflow. All subsequent paths are built relative to this root.

- Exception Handling: Wrap file I/O operations in try-except blocks (Python) or equivalent, logging the precise missing file and skipping the subject for manual review.
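A pre-flight check along these lines might look like the following sketch. It assumes a BIDS-like layout of `<root>/<subject>/dwi/<subject>_dwi.{nii.gz,bval,bvec}`; that naming pattern and the function `missing_inputs` are illustrative assumptions and should be adapted to the dataset at hand. The check covers existence and non-zero size only; it does not validate NIfTI headers.

```python
from pathlib import Path

# File suffixes every subject is assumed to need before processing.
REQUIRED_SUFFIXES = (".nii.gz", ".bval", ".bvec")


def missing_inputs(root, subject, stem="dwi"):
    """Return the required input paths that are absent or empty.

    All paths are built from a single root directory so that the
    same check works regardless of the current working directory.
    """
    dwi_dir = Path(root) / subject / "dwi"
    missing = []
    for suffix in REQUIRED_SUFFIXES:
        candidate = dwi_dir / f"{subject}_{stem}{suffix}"
        if not candidate.is_file() or candidate.stat().st_size == 0:
            missing.append(candidate)
    return missing
```

Running this for every subject before launching the pipeline lets incomplete subjects be logged and skipped for manual review instead of failing partway through a batch.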

Error 3: Library or Dependency Version Conflict

This error occurs when software packages (e.g., FSL, ANTs, MRtrix3, Python libraries) require specific, incompatible versions of shared libraries or dependencies.

Resolution Protocol:

- Environment Isolation: Use containerization (Docker, Singularity/Apptainer) to package the entire PreQual pipeline with all correct dependencies. This is the gold standard for reproducibility.

- Environment Management: If containers are not feasible, use virtual environments (conda, venv) to create isolated, project-specific software stacks.

- Dependency Specification: Maintain a version-locked requirements file (e.g., environment.yml for conda, requirements.txt for pip) that is rigorously tested.
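When a container is not in use, a quick runtime check can confirm that the active Python environment matches the pinned versions. The sketch below uses the standard-library `importlib.metadata` module; the function name `check_pins` and the idea of passing pins as a dictionary are assumptions for illustration. It covers Python packages only, not external tools such as FSL or MRtrix3.

```python
from importlib import metadata


def check_pins(pins):
    """Compare installed package versions against exact pins.

    `pins` maps distribution names to the expected version string.
    Returns a dict of package -> problem description; an empty dict
    means every pinned package is installed at the expected version.
    """
    problems = {}
    for package, wanted in pins.items():
        try:
            installed = metadata.version(package)
        except metadata.PackageNotFoundError:
            problems[package] = "not installed"
            continue
        if installed != wanted:
            problems[package] = f"installed {installed}, expected {wanted}"
    return problems
```

Calling this at pipeline start with the versions from the locked requirements file turns a subtle downstream failure into an immediate, readable message.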

Error 4: Permission Denied

The process lacks necessary read, write, or execute permissions on critical directories, files, or temporary spaces.

Resolution Protocol:

- Pre-Run Permission Audit: Prior to execution, script a check for write permissions in the designated output and temporary directories.

- Principle of Least Privilege: Do not run pipelines as root. Instead, ensure the user account has explicit ownership or group membership with appropriate permissions (chmod, chgrp) on the data and output directories.

- Temporary Directory Management: Explicitly set and control the location of temp files (via the TMPDIR environment variable) to a location with guaranteed write access.
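The pre-run permission audit can be sketched as below. Rather than inspecting permission bits, this version tries to create and remove a temporary file in each directory, which also catches read-only mounts and full quotas. The function name `can_write` is a hypothetical helper, not part of any pipeline.

```python
import os
import tempfile


def can_write(directory):
    """Return True if a file can actually be created in `directory`."""
    if not os.path.isdir(directory):
        return False
    try:
        # The temporary file is deleted automatically on close.
        with tempfile.TemporaryFile(dir=directory):
            return True
    except OSError:
        return False


# tempfile.gettempdir() honors the TMPDIR environment variable,
# so this reflects where temporary files would be written.
temp_dir_writable = can_write(tempfile.gettempdir())
```

The same call can be made on the output directory before any subject is processed, so that a permissions problem stops the run before compute time is spent.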

Error 5: Numerical Instability (NaN or Inf Values)

This error is the generation of Not-a-Number (NaN) or infinite (Inf) values during mathematical operations, such as division by zero in the fractional anisotropy calculation or the log-transform of non-positive values.

Resolution Protocol:

- Proactive Masking: Apply a robust brain mask to all operations to exclude zero-valued background voxels from computations.

- Data Sanitization Check: Insert a preprocessing step that scans the raw DWI data for negative or zero values (which are non-physical) and replaces them with a small positive epsilon or flags the dataset.

- Stable Algorithm Selection: Use numerically stable algorithms. For example, prefer Log-Euclidean or RESTORE methods for tensor fitting over standard linear least squares if the data is noisy.

- Post-Processing NaN Cleanup: Implement a final check that identifies and interpolates NaN/Inf voxels from neighboring healthy voxels in derived maps (FA, MD).
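As an illustration of where the division by zero arises, the sketch below computes FA for a single voxel from its three tensor eigenvalues using the standard FA definition, with explicit guards. Returning 0.0 for an all-zero (background) voxel and `None` for non-finite input are conventions assumed here for illustration; a production pipeline would apply this per voxel inside a brain mask using array operations.

```python
import math


def fractional_anisotropy(l1, l2, l3):
    """FA from three eigenvalues, guarded against NaN/Inf results.

    Returns None when any eigenvalue is non-finite so the voxel can
    be flagged, and 0.0 when all eigenvalues are zero (the case that
    would otherwise divide by zero).
    """
    values = (l1, l2, l3)
    if not all(math.isfinite(v) for v in values):
        return None
    norm_sq = sum(v * v for v in values)
    if norm_sq == 0.0:
        return 0.0
    mean = sum(values) / 3.0
    dev_sq = sum((v - mean) ** 2 for v in values)
    return math.sqrt(1.5 * dev_sq / norm_sq)
```

Perfectly isotropic eigenvalues give an FA of 0, and a single non-zero eigenvalue gives an FA of 1, which makes the function easy to sanity-check before applying it across a volume.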

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Computational Tools for DTI Pipeline Stability

| Tool / Reagent | Function in Pipeline Stability | Example/Version |

|---|---|---|

| Docker/Singularity | Dependency & environment isolation; eliminates "works on my machine" errors. | apptainer/stable |

| BIDS Validator | Ensures input data adheres to a standardized structure, preventing path errors. | v1.15.0 |

| FSL (FMRIB Software Library) | Provides core algorithms for Eddy correction, brain extraction, and registration. | FSL 6.0 |

| MRtrix3 | Advanced tools for constrained spherical deconvolution and tractography. | MRtrix3 3.0.4 |

| dcm2niix | Reliable DICOM to NIFTI conversion, the critical first step in data ingestion. | v1.0.20240202 |

| Python NumPy/SciPy | Core numerical computing with options for memory-mapped arrays (numpy.memmap). | NumPy >=1.21 |

| Nipype | Python framework for creating reproducible, portable neuroimaging workflows. | Nipype 1.8.6 |

| JSON Configuration Files | Human- and machine-readable files to store all pipeline parameters and paths. | Custom |

Visualizations

Diagram 1: PreQual Pipeline Error Checkpoints

Diagram 2: Resolution Strategy for Out-of-Memory Error

Within the development and validation of the PreQual pipeline for Diffusion Tensor Imaging (DTI) preprocessing and quality assurance (QA), managing data artifacts is paramount. This document details application notes and protocols for addressing three pervasive challenges: excessive participant motion, low signal-to-noise ratio (SNR), and non-standard acquisition schemes. Effective handling of these issues is critical for generating reliable, reproducible biomarkers in neuroscience research and clinical drug development.

Table 1: Impact of Artifacts on DTI Metric Reliability

| Artifact Type | Primary Effect | Typical Magnitude of Bias | Affected DTI Metrics |

|---|---|---|---|