Troubleshooting Camera Calibration: A Guide for Consistent Behavioral Measurement in Biomedical Research



Abstract

This guide provides a comprehensive framework for researchers and scientists in drug development to achieve and maintain precise camera calibration, a critical prerequisite for accurate behavioral phenotyping and measurement. It covers foundational calibration principles, establishes robust methodological procedures, details systematic troubleshooting for common pitfalls, and outlines rigorous validation protocols. By ensuring the geometric accuracy of imaging systems, this resource supports the generation of reliable, reproducible data essential for preclinical and clinical studies.

Why Calibration is Non-Negotiable for Accurate Behavioral Data

Troubleshooting Guides

Guide 1: High Reprojection Error

Problem: The overall reprojection error after calibration is unacceptably high (e.g., ≥ 1 pixel), which can lead to inaccurate measurements in your research data [1] [2].

Solutions:

- Identify and Remove Outlier Images: Use your calibration software's tools to visualize the reprojection error for each image. Exclude images with the highest errors and recalibrate [3] [2].

- Inspect Feature Detection: Manually check calibration images for false or inaccurate detections of pattern features (like checkerboard corners). Remove or retake images where detection has failed [3].

- Increase Data Variety: Ensure your image set includes the pattern at various angles, distances, and positions, covering the entire field of view, especially the edges. Avoid overfitting by using non-repetitive perspectives [3] [1].

- Use a More Complex Distortion Model: For wide-angle or fisheye lenses, try calibrating with a higher-order distortion model (e.g., 3 radial distortion coefficients instead of 2) or a specialized fisheye model [3] [4].

- Verify Pattern Flatness and Rigidity: Ensure your calibration target is mounted on a perfectly flat, rigid surface to prevent deformations that introduce errors [1] [4].
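To make the outlier step concrete, here is a minimal sketch that flags images whose reprojection error sits far above the rest of the set. The function name `flag_outlier_images`, the cutoff of twice the median, and the error values are illustrative assumptions rather than anything prescribed by a calibration package; most tools expose per-image errors that could be fed into a check like this.

```python
from statistics import median


def flag_outlier_images(per_image_error_px, factor=2.0):
    """Return indices of images whose error exceeds factor * median error.

    The factor of 2.0 is an assumed rule of thumb, not a standard.
    """
    cutoff = factor * median(per_image_error_px)
    return [i for i, err in enumerate(per_image_error_px) if err > cutoff]


# Hypothetical per-image mean reprojection errors, in pixels.
errors = [0.31, 0.28, 0.35, 1.42, 0.30, 0.33, 0.95, 0.29]
outliers = flag_outlier_images(errors)
print(outliers)  # [3, 6]
```

After removing the flagged images, recalibrate and confirm that the overall error actually drops. If the removal leaves gaps in field-of-view coverage, retake those views rather than simply discarding them.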

Guide 2: Calibration Failure or Non-Convergence

Problem: The calibration algorithm fails to compute camera parameters or returns a "linear calibration failed" error [4].

Solutions:

- Check Pattern Parameters: Verify that the correct number of inner corners (rows and columns) and the exact physical measurement of the squares/dots are provided to the software. For checkerboards, count black-to-black inner corners [3] [5] [4].

- Ensure Sufficient Pattern Tilt: Capture many images with the pattern tilted at significant angles (up to ±45° or ±60° relative to the camera plane). Avoid having all images be fronto-parallel [3] [1] [4].

- Improve Pattern Visibility: Confirm the entire calibration pattern is visible in all images used and is not partially blocked or at an overly acute angle to the camera [5] [4].

- Review Image Quality: Avoid motion blur, uneven lighting, glare, or reflections on the pattern. Use diffuse, controlled lighting and a stable setup [3] [4].

- Use More Images: Capture a larger set of images (e.g., 20-50) to give the algorithm sufficient data to converge on a solution [3] [1].
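A frequent cause of the pattern-parameter mistake above is entering the number of squares instead of the number of inner corners. The short sketch below shows the conversion; `inner_corners` is a hypothetical helper name, and the 10 x 7 board is only an example.

```python
def inner_corners(squares_across, squares_down):
    """Convert a checkerboard's square counts to inner-corner counts.

    Inner corners are the points where four squares meet, so each
    dimension has one fewer corner than it has squares.
    """
    if squares_across < 2 or squares_down < 2:
        raise ValueError("a checkerboard needs at least 2 squares per side")
    return (squares_across - 1, squares_down - 1)


# A board with 10 x 7 squares has 9 x 6 inner corners.
print(inner_corners(10, 7))  # (9, 6)
```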

Guide 3: Inaccurate 3D Measurements After Calibration

Problem: Even with a low reprojection error, the 3D world measurements derived from the calibrated camera are inaccurate.

Solutions:

- Validate with Extrinsics Visualization: Use the software's feature to plot the locations of the camera and the calibration pattern. Look for impossible geometries, such as the pattern appearing behind the camera [3] [2].

- Check Calibration Coverage: Visually confirm that your calibration images provide even coverage of the camera's entire field of view. If there are noticeable gaps, capture additional images to fill them [3] [1].

- Re-calibrate at Application Resolution and Focus: The camera must be calibrated at the exact resolution and focus settings used during data collection. Any change in zoom or focus invalidates the calibration [3] [1].

- Verify Ground Plane Alignment: For motion capture systems, ensure the calibration board was perpendicular to the floor during the calibration process, as an incorrect ground plane can skew kinematics [5].
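If your software does not plot extrinsics, a rough numeric version of the same check is possible. The sketch below assumes per-image translation vectors expressed in the camera frame with the camera looking along +Z (the convention OpenCV uses); the function name, the distance limit, and the sample values are hypothetical.

```python
import math


def implausible_poses(tvecs, max_distance):
    """Return indices of poses that place the pattern behind the camera
    (z <= 0) or farther away than max_distance (same units as tvecs)."""
    bad = []
    for i, (tx, ty, tz) in enumerate(tvecs):
        distance = math.sqrt(tx * tx + ty * ty + tz * tz)
        if tz <= 0 or distance > max_distance:
            bad.append(i)
    return bad


# Hypothetical pattern positions in millimetres, one per calibration image.
tvecs_mm = [(12.0, -40.0, 650.0), (5.0, 10.0, -300.0), (0.0, 0.0, 9000.0)]
suspect = implausible_poses(tvecs_mm, max_distance=2000.0)
print(suspect)  # [1, 2]
```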

Frequently Asked Questions (FAQs)

Q1: What is an acceptable reprojection error for behavior measurement research? A: A reprojection error of less than 0.5 pixels is generally considered good for precise measurement applications. A value greater than 1 pixel is often unacceptable and indicates a need for recalibration [1] [2]. However, a low error is necessary but not sufficient; always validate your results visually and through other metrics [1].

Q2: How many calibration images do I need, and how should I capture them? A: While 10-20 images are a common starting point, using around 50 different images is a robust rule of thumb for high-precision research [3] [1]. Capture these by moving the pattern throughout the intended "calibration volume"—at different 3D orientations, distances, and angles—ensuring coverage of the entire field of view, especially the edges and corners [3] [1] [2].

Q3: My camera has auto-focus. Can I use it during calibration? A: No. You must disable auto-focus and auto-exposure. Auto-focus changes the camera's intrinsic parameters (such as the effective focal length) during capture, and auto-exposure changes image brightness from frame to frame, which makes feature detection less consistent. Either one undermines the consistency required for a stable calibration. Use fixed, manual settings for focus, exposure, and zoom [3] [1].

Q4: Why does my stereo or multi-camera system have poor 3D tracking accuracy? A: This can stem from several calibration-related issues:

- Poor Camera Synchronization: Ensure all cameras are hardware-synchronized or use a post-hoc synchronization method (e.g., a distinct motion like a hand punch) [5].

- Insufficient Overlapping Field of View: Reposition cameras so that the subject is visible by at least two cameras at all times to avoid occlusions [5].

- High Lens Distortion at the Edges: Wide-angle lenses may have significant distortion at the image edges. Ensure your calibration images cover these areas to model the distortion correctly [3] [6].

Q5: What are the best practices for creating or selecting a calibration target? A: The quality of the target is critical [1].

- Material: It should be printed on a flat, rigid, and non-reflective material. Avoid flimsy paper [3] [1].

- Accuracy: Use a professional printing service to ensure the pattern's geometry (e.g., square size) is accurate and without scaling [3] [1].

- Size and Coverage: The pattern should be large enough to cover a significant portion of the image (ideally 80% of the frame when parallel to the camera) [3].

- Border: Include a white border around the pattern to make it easier for the software to detect [3].

Calibration Error Metrics and Standards

The table below summarizes key quantitative metrics used to evaluate calibration accuracy, helping you determine if your system meets the required standards for measurement validity.

| Metric | Description | Acceptable Threshold for Precision Research |

|---|---|---|

| Mean Reprojection Error [3] [1] [2] | The root-mean-square (RMS) distance (in pixels) between detected pattern points and their projected locations using the calibrated model. | < 0.5 pixels (Good) [3] [1] |

| Standard Error of Parameters [2] | The standard error (uncertainty, σ) of each estimated parameter (e.g., focal length, distortion coefficients). | The 95% confidence interval spans ±1.96σ around the estimated value; the narrower the interval, the better [2]. |

| Pattern Distance [5] | The physical distance between the calibration target and the cameras during image capture. | Should be close to the working distance. For some systems, keep it < 5 meters for reliable detection [5]. |
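As a worked example of the standard-error row, the sketch below turns an estimate and its standard error into a 95% confidence interval using the ±1.96σ rule from the table. The focal length and standard error are made-up values, and `confidence_interval_95` is a hypothetical helper name.

```python
def confidence_interval_95(estimate, standard_error):
    """Return the (low, high) bounds of estimate ± 1.96 * standard_error."""
    half_width = 1.96 * standard_error
    return (estimate - half_width, estimate + half_width)


# Hypothetical focal length estimate of 1200 px with a standard error of 2.5 px.
low, high = confidence_interval_95(1200.0, 2.5)
print(f"{low:.1f} to {high:.1f} px")  # 1195.1 to 1204.9 px
```

An interval that is wide relative to the estimate suggests the image set does not constrain that parameter well.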

Experimental Protocol: Achieving a High-Accuracy Calibration

This detailed methodology ensures reliable and repeatable camera calibration for scientific measurement.

Pre-calibration Setup

- Camera Locking: Mechanically secure the camera on a stable tripod or mount. Disable auto-focus, auto-exposure, and image stabilization. Set the resolution to the value that will be used in your experiments and do not change it [3] [1].

- Target Preparation: Obtain a high-quality calibration target (e.g., a checkerboard) printed on a rigid, flat, non-reflective surface by a professional print shop. Precisely measure the size of one square using calipers and note the number of inner corners (rows x columns) [3] [1].

- Lighting Control: Set up diffuse, even lighting to minimize shadows, glare, and reflections on the target [3] [4].

Image Acquisition

- Capture Volume: Move the target throughout the entire calibration volume, the cone-shaped space that extends outward from the camera's lens. Capture images at different distances, and tilt the pattern up to ±45 degrees to introduce foreshortening [3] [1].

- Frame Coverage: Ensure the pattern appears in all areas of the frame, including the four corners and edges. Capture at least one image where the pattern fills the entire frame [3] [1].

- Image Set: Capture at least 20 images, and up to 50 for high-precision work, from diverse and non-repetitive viewpoints. Save images in a lossless format (e.g., PNG) [3] [1].
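Frame coverage can be checked numerically as well as by eye. The sketch below bins detected corner coordinates into a coarse grid and lists the cells that received no points. The grid size, the function names, and the sample points are assumptions chosen for illustration.

```python
def coverage_grid(points, width, height, cols=4, rows=3):
    """Count detected corner points in each cell of a rows x cols grid
    laid over an image of the given pixel width and height."""
    counts = [[0] * cols for _ in range(rows)]
    for x, y in points:
        if 0 <= x < width and 0 <= y < height:
            col = int(x * cols / width)
            row = int(y * rows / height)
            counts[row][col] += 1
    return counts


def empty_cells(counts):
    """Return (row, col) indices of grid cells that contain no points."""
    return [(r, c) for r, row in enumerate(counts)
            for c, n in enumerate(row) if n == 0]


# Hypothetical corner detections pooled from all images of a 1920 x 1080 camera.
points = [(100, 100), (960, 540), (1800, 1000), (500, 900)]
counts = coverage_grid(points, width=1920, height=1080)
gaps = empty_cells(counts)
print(len(gaps))  # 8 of the 12 cells still need coverage
```

Empty cells near the image border are the ones to prioritize, since that is where lens distortion is usually strongest.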

Software Calibration and Validation

- Parameter Input: Load the images into your calibration software (e.g., OpenCV, MATLAB). Input the correct physical square size and the number of inner corners [3] [2].

- Model Selection: For standard lenses, start with a model that includes 2 radial distortion coefficients. For wide-angle or fisheye lenses, use a specialized model with more coefficients [3] [4].

- Error Analysis: After calibration, inspect the mean reprojection error. If it is high (>0.5 px), use the software's tools to visualize errors per image and remove outliers. Recalibrate [3] [2].

- Extrinsics Check: Plot the estimated positions of the camera and the pattern to identify impossible geometries, which indicate a flawed calibration [3] [2].

The Scientist's Toolkit: Essential Research Reagents and Materials

| Item | Function / Explanation |

|---|---|

| High-Quality Calibration Target [3] [1] | A geometrically precise pattern (e.g., checkerboard or dot grid) on a rigid, flat substrate. It provides known physical reference points for the calibration algorithm. |

| Precision Measuring Tool [3] | Calipers or a micrometer to accurately measure the physical dimensions of the pattern's features, which is crucial for converting pixel measurements to real-world units. |

| Stable Camera Mount [3] [1] | A heavy-duty tripod or rig to prevent camera shake and blur, ensuring sharp images and a consistent camera position during calibration and data collection. |

| Controlled Lighting Source [3] [4] | Diffuse lights (e.g., softboxes) that provide even illumination, minimizing shadows, glare, and reflections that can corrupt feature detection on the target. |

| Calibration & Analysis Software [3] [2] | Computer vision tools (e.g., OpenCV, MATLAB Computer Vision Toolbox) that implement algorithms to detect the pattern and calculate camera parameters and errors. |

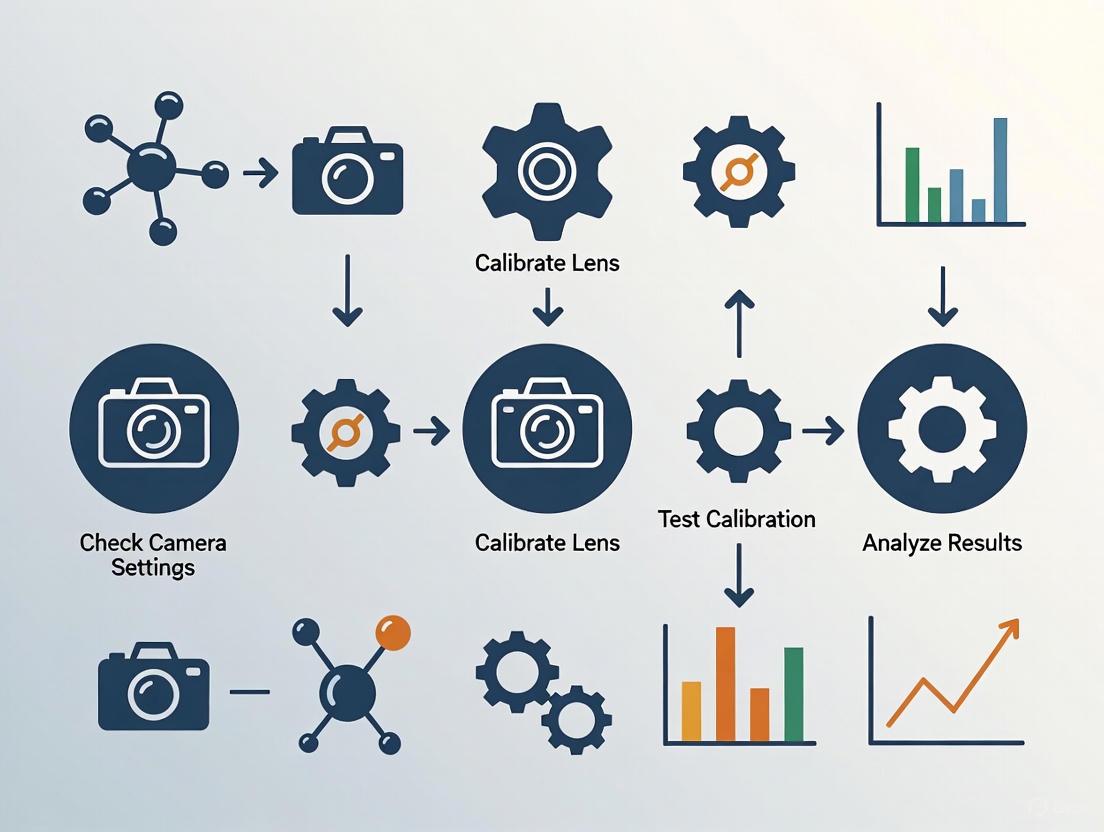

Camera Calibration and Validation Workflow

The diagram below outlines the critical steps for a reliable camera calibration process and key validation checkpoints to ensure measurement validity.

FAQs on Lens Distortion and Calibration

Q1: What are the main types of lens distortion and how do they affect my tracking data? Lens distortion is an optical aberration that causes straight lines in the real world to appear curved in an image. The primary types you will encounter are barrel distortion and pincushion distortion [7] [8] [9]. A third, more complex type is mustache distortion, which is a hybrid of the first two [7] [9].

- Barrel Distortion: This causes straight lines to bulge outward from the center of the image, creating a "barrel" effect. It is common with wide-angle lenses [7] [8].

- Pincushion Distortion: This causes straight lines to bend inward, creating a pinched effect toward the center. It is often seen in telephoto or zoom lenses [7] [9].

- Mustache Distortion: This is a combination of both barrel and pincushion distortion, where the image curves outward near the center and inward toward the edges, creating a wavy warping [7] [9].

In animal tracking, these distortions introduce spatial inaccuracies. The position of an animal's joint, for example, will be measured incorrectly in the image, especially if it is near the edge of the frame. This can lead to significant errors in calculating gait, distance traveled, or posture [3] [7].

Q2: Why is camera calibration crucial for consistent behavior measurement? Camera calibration is the foundational first step for any quantitative image analysis [3]. A calibrated camera allows you to correct for lens distortion and obtain precise measurements of objects in the real world from your 2D images [3]. Without it, the inherent distortions of your lens will cause your data to be systematically biased, compromising the validity and repeatability of your research. For multi-camera setups used for 3D reconstruction, calibration is even more critical to ensure all cameras are working from a single, accurate geometric model [10].

Q3: What is a reprojection error and what is an acceptable value? The reprojection error is the difference between where a known point in the real world (from your calibration pattern) is detected in your image and where the camera's mathematical model "projects" it should appear. It is the primary metric for assessing the quality of your calibration [3]. This error is measured in pixels. Ideally, the mean reprojection error should be less than 0.5 pixels for a reliable calibration [3].
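The definition above can be sketched in a few lines. The example computes the per-point pixel distance between detected and projected locations and then summarizes it two ways, because some tools report the mean distance while others (OpenCV's `cv2.calibrateCamera` return value, for instance) report a root-mean-square figure. The coordinates are invented for illustration.

```python
import math


def point_errors(detected, projected):
    """Euclidean pixel distance between each detected/projected pair."""
    return [math.hypot(dx - px, dy - py)
            for (dx, dy), (px, py) in zip(detected, projected)]


def mean_error(errors):
    return sum(errors) / len(errors)


def rms_error(errors):
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Hypothetical corner locations in pixels.
detected = [(100.0, 200.0), (150.0, 200.0), (200.0, 200.0)]
projected = [(100.3, 200.4), (150.0, 200.0), (199.4, 200.8)]

errors = point_errors(detected, projected)
print(round(mean_error(errors), 3))  # 0.5
print(round(rms_error(errors), 3))   # 0.645
```

The RMS value is never smaller than the mean, so check which one your software reports before comparing it against the 0.5 pixel guideline.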

Q4: My calibration has a high reprojection error. What should I do? A high error indicates a problem with your calibration image set or setup. You can:

- Identify and remove outliers: Use your calibration software (e.g., MATLAB) to visualize the errors per image and exclude the images with the highest errors before recalibrating [3].

- Inspect feature detection: Manually check all calibration images to ensure the software correctly identified all the pattern features (like corners) without any false detections [3].

- Improve your image set: Ensure you have a sufficient number of images (10-20) with a wide variety of angles, distances, and coverage of the field of view. Avoid overfitting, which results from using many similar perspectives [3].

Troubleshooting Guides

Guide 1: Solving Common Camera Calibration Failures

| Problem Area | Specific Issue | Recommended Solution |

|---|---|---|

| Calibration Target | Charuco board not detected [10]. | Use a large, rigid, flat board. Ensure lighting is even and minimizes glare. Use the exact, predefined board layout required by your software [3] [10]. |

| Calibration Target | Pattern only covers the center of the frame [3]. | The pattern should cover at least 50%, ideally 80%, of the image when parallel to the camera. Capture images where the pattern is close to all edges and corners [3]. |

| Image Set | "Not enough values to unpack" or similar error [10]. | This means cameras lack shared views. Ensure each camera sees the board simultaneously with at least one other camera. Check for mirrored images from front-facing phone cameras and disable this feature [10]. |

| Image Set | High reprojection error due to overfitting [3]. | Capture a diverse set of images: fronto-parallel, tilted up to ±45 degrees, and with the target near all edges. Avoid taking all images from the same distance and angle [3]. |

| Camera Setup | Changing focus or zoom after calibration [3]. | Use fixed focus and a fixed zoom setting. Do not change these settings during or after the calibration process, as they alter the lens's intrinsic properties [3]. |

| Camera Setup | Auto-exposure causing varying image brightness [3]. | Use a fixed exposure setting to ensure consistent images. Have controlled, diffuse lighting to minimize shadows and glare [3] [10]. |

Guide 2: Choosing and Using a Calibration Target

The calibration target is a physical object with a known geometry. The software uses it to model your camera's distortion. Here are the key specifications and choices:

Table: Calibration Target Specifications

| Feature | Requirement | Why It Matters |

|---|---|---|

| Flatness | Must be perfectly flat and rigid [10]. | A warped target will introduce errors in the 3D-to-2D mapping. Mount printed patterns on stiff cardboard or poster board [10]. |

| Print Quality | High contrast; no scaling applied during printing [3]. | Ensures the software can detect the pattern's features accurately. Use high-quality vector formats (PDF, SVG) where possible [3]. |

| Material | Non-reflective, matte surface [3]. | Prevents glare and hotspots from obscuring the pattern, which would lead to failed feature detection [3] [10]. |

| Pattern Size | Cover 50-80% of the image at working distance [3]. | A larger board is easier for cameras to detect. For small boards, hold them closer, but ensure they remain visible to multiple cameras simultaneously [10]. |

Table: Common Types of Calibration Patterns

| Pattern Type | Description | Best For |

|---|---|---|

| Checkerboard | A grid of alternating black and white squares [7]. | A common, versatile pattern suitable for most camera calibration tasks in computer vision [7]. |

| Charuco Board | A grid of black and white squares with unique ArUco markers in each alternate square [10]. | Enhanced pose estimation and robustness against occlusion. Recommended for tools like FreeMoCap [10]. |

| Dot Pattern | A grid of circles or dots [9]. | Used in specific standardized measurements (CPIQ compliance) [9]. |

Experimental Protocols

Protocol 1: Reliable Multi-Camera Calibration for 3D Tracking

This protocol details the steps for calibrating a multi-camera system, such as those used in motion capture setups like FreeMoCap, to ensure accurate 3D reconstruction of animal movement [10].

Methodology:

- Preparation:

- Recording:

  - Slowly move and tilt the calibration board through the shared volume where the animal will be tracked. Show the board to each pair of cameras for 5-10 seconds to ensure you capture at least 200 shared frames per pair [10].

  - Capture the board in many orientations: fronto-parallel to the camera, tilted up to ±45 degrees, and positioned so its edges are close to the sides of the frame [3].

  - Ensure the board is well-lit and free of glare throughout the recording [10].

- Processing:

  - Load the recorded videos or images into your calibration software (e.g., FreeMoCap, OpenCV, MATLAB).

  - Input the physical dimensions of the pattern squares accurately [3].

  - Run the calibration algorithm to compute the intrinsic parameters (for each camera) and extrinsic parameters (the positions of cameras relative to each other).

- Validation:
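One way to verify the 200-shared-frames target from the recording step is to count, for every camera pair, the frames in which both cameras detected the board. The sketch below assumes you already have, per camera, the set of frame indices with a successful detection; the camera names, frame ranges, and helper name are hypothetical.

```python
from itertools import combinations


def shared_frames_per_pair(detections):
    """Map each camera pair to the number of frames where both saw the board.

    detections: dict of camera name -> set of frame indices with a detection.
    """
    return {
        (a, b): len(detections[a] & detections[b])
        for a, b in combinations(sorted(detections), 2)
    }


# Hypothetical detection results from a synchronized three-camera recording.
detections = {
    "cam0": set(range(0, 300)),
    "cam1": set(range(100, 400)),
    "cam2": set(range(350, 500)),
}
shared = shared_frames_per_pair(detections)
under_covered = [pair for pair, n in shared.items() if n < 200]
print(under_covered)  # [('cam0', 'cam2'), ('cam1', 'cam2')]
```

Pairs that fall short need additional recording time with the board held where both cameras can see it.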

The workflow for this multi-camera calibration process is summarized in the following diagram:

Protocol 2: Quantifying Lens Distortion with a Checkerboard

This protocol uses a standard checkerboard to measure the specific distortion coefficients of a lens, which can be used to correct images for precise 2D analysis [3] [9].

Methodology:

- Image Acquisition:

- Place the checkerboard in the camera's field of view.

- Capture a set of 10-20 images with the pattern at different orientations (parallel, tilted) and positions (center, edges, corners). The pattern should cover most of the frame in several images [3].

- Use lossless (e.g., PNG) and unmodified images for calibration [3].

- Software Analysis:

- Model Fitting:

- The software fits a distortion model to the data. A common starting point is the radial distortion model, expressed as:

`r_u = r_d + k1 * r_d^3 + k2 * r_d^5` [9]

where `r_d` is the distorted radius, `r_u` is the undistorted radius, and `k1`, `k2` are the distortion coefficients [9].

- For complex "mustache" distortion, higher-order models (5th or 7th order polynomials) may be required [9].

- Correction:

- The calculated coefficients are used to create a correction map (e.g., an STMap in VFX) that can transform distorted images into undistorted ones, or vice-versa [7].
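The radial model in the Model Fitting step can be evaluated directly. The sketch below applies `r_u = r_d + k1 * r_d^3 + k2 * r_d^5` to a single point, assuming coordinates are measured relative to the distortion center in normalized units. The coefficient values are arbitrary placeholders, not measurements from any real lens, and the function names are hypothetical.

```python
import math


def undistorted_radius(r_d, k1, k2):
    """Radial polynomial from the protocol: r_u = r_d + k1*r_d^3 + k2*r_d^5."""
    return r_d + k1 * r_d**3 + k2 * r_d**5


def undistort_point(x_d, y_d, k1, k2):
    """Scale a distorted point along its radius so it lies at radius r_u."""
    r_d = math.hypot(x_d, y_d)
    if r_d == 0.0:
        return (0.0, 0.0)  # the distortion center does not move
    scale = undistorted_radius(r_d, k1, k2) / r_d
    return (x_d * scale, y_d * scale)


# Placeholder coefficients for illustration only.
k1, k2 = 0.1, 0.01
print(undistorted_radius(0.5, k1, k2))  # close to 0.5128
print(undistorted_radius(1.0, k1, k2))  # close to 1.11
```

The correction grows quickly with radius, which is why points near the frame edge are affected far more than points near the center.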

The Scientist's Toolkit

Table: Essential Research Reagent Solutions for Camera Calibration

| Item | Function | Technical Notes |

|---|---|---|

| Charuco Board | Provides a known geometric pattern for high-accuracy calibration and robust pose estimation [10]. | Use the exact definition required by your software. Must be printed at high contrast and mounted rigidly [10]. |

| Checkerboard Chart | A standard pattern for estimating camera parameters and distortion coefficients [7] [9]. | Ensure known physical dimensions of squares are input into the software. Vector formats (PDF/SVG) prevent scaling errors [3]. |

| Software: OpenCV | Open-source library with comprehensive functions for camera calibration, corner detection, and image correction [3]. | Uses the pinhole camera model. Can estimate intrinsic parameters, distortion coefficients, and reprojection error [3]. |

| Software: Imatest | Commercial solution providing detailed analysis of distortion, modulation transfer function (MTF), and other image quality factors [9]. | Offers multiple distortion models (3rd order, 5th order, tangent) and is highly accurate with checkerboard patterns [9]. |

| Software: MATLAB | Computing environment with a Computer Vision Toolbox for calibration and visualization of errors and extrinsic parameters [3]. | Useful for identifying and removing outlier images that contribute to high reprojection errors [3]. |

The logical process of troubleshooting a failed calibration, from symptom to solution, is visualized below:

Your Troubleshooting Guide to Camera Calibration

This guide provides clear answers to common challenges in camera calibration for behavior measurement research, helping you ensure the geometric accuracy essential for your data.

Frequently Asked Questions

Q1: What are intrinsic and extrinsic parameters, and why do I need both for 3D measurements?

- A: Intrinsic parameters describe the internal, optical properties of the camera itself, such as its focal length and lens distortion. Extrinsic parameters describe the camera's position and orientation in the 3D world. You need both to accurately relate the 2D coordinates of a point in your image to its actual 3D location in your research environment [11] [12]. The intrinsic parameters project the 3D world onto the 2D sensor, while the extrinsics define the camera's viewpoint.
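To illustrate how the two parameter sets work together, here is a minimal pinhole projection sketch. It first applies the extrinsics (rotation `R` and translation `t`) to move a world point into the camera frame, then applies the intrinsics (`fx`, `fy`, `cx`, `cy`) to obtain pixel coordinates. Lens distortion is deliberately ignored, and all numeric values are hypothetical.

```python
def project_point(point_world, R, t, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates (no lens distortion)."""
    # Extrinsics: world frame -> camera frame, X_c = R * X_w + t.
    xc = [sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
          for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    # Intrinsics: perspective division, then scale and shift into pixels.
    u = fx * xc[0] / xc[2] + cx
    v = fy * xc[1] / xc[2] + cy
    return (u, v)


# Hypothetical setup: camera aligned with the world axes, 1000 mm from the origin.
R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
t = [0.0, 0.0, 1000.0]
u, v = project_point((100.0, 50.0, 0.0), R, t,
                     fx=1200.0, fy=1200.0, cx=960.0, cy=540.0)
print(u, v)  # 1080.0 600.0
```

Recovering a 3D location from pixels runs this relationship in reverse, which is why at least two calibrated views (or a known plane) are needed to resolve depth.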

Q2: My calibration reprojection error is high. What are the most common causes?

- A: A high reprojection error indicates that the calibrated model does not accurately predict where known 3D points will appear in your 2D images. The most common causes are:

- Poor Quality Images: Blurry images, poor lighting, or calibration patterns that are not in full focus.

- Insufficient View Variety: Not capturing the calibration pattern from a wide enough range of angles and positions relative to the camera. A minimum of 10-15 images from different viewpoints is often recommended [12].

- Inaccurate Feature Detection: The algorithm incorrectly identifying the key points (like chessboard corners) in your images. Always visually verify that the corners are detected correctly [12].

- Inadequate Pattern Coverage: The calibration pattern does not fill the entire field of view, especially the edges where lens distortion is most pronounced.

Q3: How can I check if my lens distortion correction is working properly?

- A: After applying your distortion coefficients, capture an image of a scene with many straight lines, like a building or a dedicated grid pattern. If the correction is successful, lines that are straight in the real world should appear straight in your corrected image, with no visible curving, especially at the edges [12].
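The straight-line check can also be quantified. The sketch below measures how far points sampled along a nominally straight edge deviate from the line joining its endpoints; a value near zero after correction suggests the distortion model is doing its job. The sample coordinates and the function name are illustrative assumptions.

```python
import math


def max_deviation_from_line(points):
    """Largest perpendicular distance (pixels) from the line through the
    first and last points to any point in the sequence."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    length = math.hypot(x1 - x0, y1 - y0)
    return max(
        abs((x1 - x0) * (y0 - y) - (x0 - x) * (y1 - y0)) / length
        for x, y in points
    )


# Hypothetical points traced along the same physical edge.
before = [(0, 0), (50, 3), (100, 4), (150, 3), (200, 0)]
after = [(0, 0), (50, 0.2), (100, 0.3), (150, 0.1), (200, 0)]
print(max_deviation_from_line(before))  # 4.0
print(max_deviation_from_line(after))   # about 0.3
```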

Q4: What is the difference between calibration and profiling, and do I need both?

- A: In the context of camera calibration for geometric measurement:

- Calibration is the process of determining your camera's intrinsic and extrinsic parameters to correct geometric distortions [11] [12].

- Profiling (more common in color science) characterizes the color reproduction of a monitor or camera to ensure accurate color representation [13].

For consistent 3D behavior measurement, geometric calibration is the essential first step. Profiling is crucial for photometric accuracy but is a separate process.

Core Parameter Reference Tables

Table 1: Intrinsic Camera Parameters

These parameters are internal to the camera and define how it projects the 3D world onto a 2D image sensor [11].

| Parameter | Description | Mathematical Representation | Role in Imaging |

|---|---|---|---|

| Focal Length (f_x, f_y) | Distance between the lens and image sensor, determining the field of view. | Pixels (e.g., `f_x = F/p_x`) | Controls magnification and perspective. |

| Principal Point (c_x, c_y) | The optical center of the image, where the optical axis intersects the sensor. | Pixels | Defines the image center for the projection. |

| Radial Distortion (k_1, k_2, k_3) | Corrects for light rays bending more at the lens edges than center ("barrel" or "pincushion" distortion) [11]. | `x_distorted = x(1 + k_1*r^2 + k_2*r^4 + k_3*r^6)` | Makes straight lines appear straight in the image. |

| Tangential Distortion (p_1, p_2) | Corrects for distortion when the lens and image sensor are not perfectly parallel [11]. | `x_distorted = x + [2*p_1*x*y + p_2*(r^2+2*x^2)]` | Corrects for decentering of the lens elements. |
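The radial and tangential rows of Table 1 are usually applied together. The sketch below combines them in the form used by OpenCV's distortion model, operating on normalized image coordinates. Note that the table only lists the x equations; the y equation here follows the standard symmetric form and is supplied as an assumption, as are the coefficient values.

```python
def distort_normalized(x, y, k1, k2, k3, p1, p2):
    """Apply radial (k1, k2, k3) and tangential (p1, p2) distortion
    to a point given in normalized image coordinates."""
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return (x_d, y_d)


# Radial only: a negative k1 pulls the point toward the center.
print(distort_normalized(0.5, 0.0, k1=-0.2, k2=0.0, k3=0.0, p1=0.0, p2=0.0))

# Tangential only: the point shifts even though the radial factor is 1.
print(distort_normalized(0.5, 0.5, k1=0.0, k2=0.0, k3=0.0, p1=0.01, p2=0.0))
```

Undistorting an image means inverting this mapping, which calibration software typically does numerically or through a precomputed lookup map.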

Table 2: Extrinsic Camera Parameters

These parameters describe the camera's position and orientation in the 3D world [11].

| Parameter | Description | Mathematical Representation | Role in 3D Reconstruction |

|---|---|---|---|

| Rotation | The 3D orientation of the camera relative to the world coordinate system. | 3x3 Matrix (R) or Rotation Vector | Determines the viewing direction of the camera. |

| Translation | The 3D position of the camera relative to the world coordinate system origin. | 3x1 Vector (t) | Locates the camera in space. |
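A common point of confusion is that the translation vector `t` is not, in general, the camera's position in world coordinates. Under the widely used convention `X_camera = R * X_world + t` (an assumption here; check which convention your software follows), the camera center in the world frame is `C = -R^T * t`. A minimal sketch with made-up values:

```python
def camera_position(R, t):
    """Camera center in world coordinates, C = -R^T * t,
    assuming the convention X_camera = R * X_world + t."""
    return tuple(-sum(R[j][i] * t[j] for j in range(3)) for i in range(3))


# Hypothetical extrinsics: 90 degree rotation about Z, plus a translation.
R = [[0, -1, 0],
     [1, 0, 0],
     [0, 0, 1]]
t = (10, 20, 30)
C = camera_position(R, t)
print(C)  # (-20, 10, -30)
```

Substituting `C` back into `R * C + t` gives the zero vector, confirming that the camera center maps to the origin of the camera frame.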

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Essential Materials for Camera Calibration

| Item | Function in Experiment |

|---|---|

| Checkerboard or ChArUco Board | A known geometric pattern with high-contrast features (e.g., squares) used as a calibration target. Its well-defined 3D geometry provides the reference points for calculating camera parameters [12]. |

| OpenCV Library | An open-source computer vision library that provides robust, ready-to-use functions for corner detection, parameter calculation, and image correction, streamlining the calibration workflow [12]. |

| Rigid Flat Surface | A stable, non-flexible platform (e.g., an acrylic sheet) to mount the calibration target. This ensures the known geometry of the pattern remains consistent during data collection. |

| Controlled Lighting Setup | Consistent and even illumination is critical for reducing noise and ensuring the calibration pattern features are detected accurately and consistently across all images. |

| Active Target (High-Precision) | A high-resolution flat screen that displays a sequence of coded patterns. It can generate dense, high-accuracy 3D-2D correspondences, often outperforming static patterns [14]. |

Experimental Protocols for Reliable Calibration

Detailed Methodology: Checkerboard Calibration with OpenCV

This is a standard protocol for calibrating a single camera using a checkerboard pattern [12].

- Prepare Calibration Target: Print a checkerboard pattern on a rigid, flat surface. Note the number of inner corners (e.g., 9x6) and the real-world size of each square.

- Capture Calibration Images:

  - Use the camera to take at least 15-20 images of the pattern.

  - Vary the pattern's position and orientation significantly: move it left/right, up/down, tilt it forward/backward, and rotate it.

  - Ensure the pattern is in focus and the entire image is well-lit without glare or shadows.

  - Ensure the pattern appears in different parts of the image, especially near the edges and corners, to characterize distortion across the entire field of view.

- Detect Corner Points: For each image, use an algorithm (like `cv2.findChessboardCorners` in OpenCV) to automatically detect the 2D pixel coordinates of the inner corners of the checkerboard.

- Define Object Points: For each image, define the 3D world coordinates of the checkerboard corners. Assume the board is flat on the XY-plane (Z=0). The coordinates are typically defined based on the square size (e.g., 2 cm).

- Calibrate the Camera: Pass the collected 2D image points and 3D object points to a calibration function (like `cv2.calibrateCamera`). This function uses an optimization algorithm to find the intrinsic parameters (camera matrix and distortion coefficients) and extrinsic parameters (rotation and translation vectors for each image) that best map the 3D points to the 2D pixels [12].

- Validate Results: Calculate the reprojection error. This is the average distance in pixels between the detected corner points in your images and the points projected back into the image using the calibrated camera parameters. A lower error (typically < 0.5 pixels) indicates a better calibration.
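The "Define Object Points" step is the one most often written incorrectly, so here is a small dependency-free sketch of it. It generates the Z=0 grid of inner-corner coordinates for a 9x6 board with 20 mm squares, matching the example sizes in the protocol. The function name is hypothetical; in an OpenCV workflow the resulting list would typically be converted to a float32 array and repeated once per image before being passed to `cv2.calibrateCamera`.

```python
def checkerboard_object_points(cols, rows, square_size):
    """3D coordinates of the inner corners, row by row, on the Z=0 plane.

    cols, rows: number of inner corners across and down.
    square_size: physical edge length of one square (sets the output units).
    """
    return [(c * square_size, r * square_size, 0.0)
            for r in range(rows)
            for c in range(cols)]


# 9 x 6 inner corners with 20 mm squares.
object_points = checkerboard_object_points(9, 6, 20.0)
print(len(object_points))  # 54
print(object_points[1])    # (20.0, 0.0, 0.0)
print(object_points[-1])   # (160.0, 100.0, 0.0)
```

The corner ordering must match the order in which the detector returns corners; a mismatch between the two is a typical source of very large reprojection errors.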

Workflow and Relationship Diagrams

Camera Calibration Workflow

Intrinsic vs Extrinsic Parameters

FAQs: Camera Calibration in Behavior Measurement Research

Q1: What are the concrete signs that my camera system is poorly calibrated? A poorly calibrated camera system exhibits clear physical and data-level symptoms. Physically, straight lines in your capture volume appear curved (barrel or pincushion distortion), and objects seem stretched or misaligned [15]. In your data, you may observe inconsistent tracking of subjects across different camera views, inaccurate depth estimation for 3D reconstruction, and a high reprojection error (typically above 0.5 pixels) when you validate your calibration [3] [16]. These inaccuracies directly compromise the validity of kinematic or behavioral measurements.

Q2: How does poor calibration lead to compromised study outcomes? The primary consequence is the introduction of systematic measurement error. This bias means that the spatial data you collect (such as distance traveled, velocity, or posture angles) does not accurately reflect the subject's true behavior [15]. For example, in a rodent open field test, poor calibration could cause an overestimation of travel distance, falsely indicating higher activity levels. In pharmaceutical development, such data flaws threaten the reliability of efficacy and safety assessments, leading to incorrect conclusions and potentially jeopardizing regulatory compliance [17] [18].

Q3: Our multi-camera calibration keeps failing. What are we doing wrong? Failed multi-camera calibration is often due to insufficient shared views. Every camera in your system must see the calibration pattern at the same time as at least one other camera to establish a common 3D reference [10] [5]. Other common pitfalls include:

- Non-rigid calibration target: A bent or flexible board makes feature detection unreliable [10].

- Inadequate pattern coverage: The pattern should cover a significant portion of the image (ideally 80%) from multiple angles and distances [3].

- Glare and poor lighting: Glare can obscure the pattern, while low contrast prevents the software from detecting key points [10] [5].

Q4: How often should we recalibrate our camera system? The need for recalibration is triggered by any change to your setup or evidence of measurement drift. Recalibrate if you change the camera's focus, zoom, or resolution [3], physically bump or move a camera, or observe a sudden shift in your control data. As a best practice, even for a stable setup, perform a validation check before a critical experiment series to detect and correct for subtle drift.

Troubleshooting Guide: Solving Common Calibration Problems

| Problem | Symptom | Likely Cause | Solution |

|---|---|---|---|

| High Reprojection Error | High mean error (>0.5 px) during/after calibration [3]. | Poor quality image set, incorrect pattern definition, or moving calibration target [3]. | Capture new images with stable camera; ensure target is rigid [3] [10]. |

| Failed Calibration | Software error (e.g., "not enough values to unpack") [10]. | Lack of shared pattern views between cameras or mirrored video feed [10]. | Reposition cameras/target; disable phone camera mirroring [10]. |

| Distorted 3D Reconstructions | Subject's shape appears warped; inconsistent limb lengths [5]. | Incorrect lens distortion coefficients or poor coverage of calibration images [15] [3]. | Capture calibration images across entire field of view, especially edges [3]. |

| Inaccurate Depth Measurement | Systematic error in distance/position measurements along the Z-axis [15]. | Miscalibrated translation matrix (extrinsic parameter) or camera angles too narrow [15] [5]. | Recalibrate with target at different depths; reposition cameras for better angular separation (40-90°) [5]. |
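
The angular separation mentioned in the last row can be estimated from approximate camera positions. The following is a minimal sketch under stated assumptions: positions are given in any consistent unit in a shared room coordinate frame, the "target" is the center of the capture volume, and the function names are illustrative rather than taken from a library.

```python
import math


def separation_angle_deg(cam_a_pos, cam_b_pos, target_pos):
    """Angle in degrees, measured at the target, between the lines of sight to two cameras."""
    ray_a = [c - t for c, t in zip(cam_a_pos, target_pos)]
    ray_b = [c - t for c, t in zip(cam_b_pos, target_pos)]
    norm_a = math.hypot(*ray_a)
    norm_b = math.hypot(*ray_b)
    if norm_a == 0 or norm_b == 0:
        raise ValueError("camera position coincides with the target")
    cos_angle = sum(a * b for a, b in zip(ray_a, ray_b)) / (norm_a * norm_b)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def in_recommended_range(angle_deg, low=40.0, high=90.0):
    """True when the angle falls inside the 40-90 degree range quoted in the table."""
    return low <= angle_deg <= high


# Illustrative positions in meters; the capture-volume center is the origin.
wide = separation_angle_deg((2.0, 0.0, 1.5), (0.0, 2.0, 1.5), (0.0, 0.0, 0.0))
narrow = separation_angle_deg((2.0, 0.0, 1.5), (2.0, 0.5, 1.5), (0.0, 0.0, 0.0))
print(round(wide, 1), in_recommended_range(wide))
print(round(narrow, 1), in_recommended_range(narrow))
```

A pair that falls below the range views the subject from nearly the same direction, which is the "camera angles too narrow" case in the table.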

Calibration Validation Metrics and Benchmarks

For reliable research, quantitative validation is non-negotiable. The table below outlines key metrics to assess your calibration quality.

| Metric | Target Value | Description & Impact on Data |

|---|---|---|

| Mean Reprojection Error [3] [16] | < 0.5 pixels | Average difference between detected and projected points. Higher values indicate poor parameter estimation and general measurement inaccuracy. |

| Parameter Standard Deviation | < 1-2% of mean value | Variation in estimated parameters (e.g., focal length) across calibration runs. High deviation indicates an unstable or noisy calibration. |

| Distortion Coefficient Stability | Low fluctuation | Consistency of k1, k2 (radial) and p1, p2 (tangential) coefficients. Instability suggests insufficient image coverage or pattern detection issues. |
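
The parameter standard deviation metric can be computed from repeated calibration runs. This sketch assumes you have recorded the same parameter (here a focal length in pixels, with made-up values) from several independent runs. The function name is illustrative.

```python
import statistics


def relative_std_percent(values):
    """Sample standard deviation of a parameter as a percentage of its mean."""
    if len(values) < 2:
        raise ValueError("need at least two calibration runs")
    mean = statistics.fmean(values)
    if mean == 0:
        raise ValueError("relative deviation is undefined for a zero mean")
    return 100.0 * statistics.stdev(values) / abs(mean)


# Illustrative focal lengths (pixels) from five repeated calibration runs.
focal_lengths_px = [714.2, 716.0, 712.9, 715.1, 713.8]
print(round(relative_std_percent(focal_lengths_px), 3))
```

A relative measure suits parameters such as focal length. For distortion coefficients, which can be close to zero, comparing the absolute spread across runs is likely the more meaningful check.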

Experimental Protocol: Validating Calibration for a Longitudinal Study

Objective: To verify that camera calibration remains stable over the duration of a multi-week study, ensuring no significant measurement drift has occurred.

Materials: The original calibration target (e.g., Charuco board), a fixed validation fixture with known dimensions placed within the capture volume.

Methodology:

1. Pre-Study Calibration: Perform a full, multi-view camera calibration following best practices [3]. Save the calibration parameters (intrinsics, extrinsics, distortion coefficients).

2. Validation Fixture Scan: Record a 30-second session of the fixed validation fixture placed in a standard orientation within the volume.

3. Weekly Check: Before each week's data collection, repeat step 2 without performing a new calibration.

4. Data Analysis:

   - Reconstruct the 3D position of the fixture's key points using the original calibration parameters.

   - Calculate the root mean square (RMS) error between the reconstructed fixture dimensions and its known, physical dimensions.

   - Track this RMS error over time. A significant upward trend indicates measurement drift.

Interpretation: A stable, low RMS error confirms calibration integrity. A rising error necessitates investigation and likely recalibration to maintain data validity.
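
The data analysis step of this protocol can be sketched as follows. The example assumes the 3D key points have already been reconstructed with your own pipeline. The point names, fixture lengths, and weekly values are hypothetical, and the drift indicator is a simple least-squares slope rather than a formal statistical test.

```python
import math


def fixture_rms_error(reconstructed_points, known_lengths):
    """RMS difference between reconstructed and known distances on the fixture.

    reconstructed_points: dict of key-point name -> (x, y, z)
    known_lengths: dict of (name_a, name_b) -> physically measured distance
    """
    squared = []
    for (name_a, name_b), true_length in known_lengths.items():
        measured = math.dist(reconstructed_points[name_a], reconstructed_points[name_b])
        squared.append((measured - true_length) ** 2)
    return math.sqrt(sum(squared) / len(squared))


def slope_per_week(weekly_errors):
    """Least-squares slope of RMS error against week index (0, 1, 2, ...)."""
    n = len(weekly_errors)
    if n < 2:
        raise ValueError("need at least two weekly checks")
    mean_x = (n - 1) / 2
    mean_y = sum(weekly_errors) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(weekly_errors))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


# Illustrative values in millimeters.
points = {"A": (0.0, 0.0, 0.0), "B": (100.3, 0.0, 0.0), "C": (0.0, 50.0, 0.0)}
known = {("A", "B"): 100.0, ("A", "C"): 50.0}
print(round(fixture_rms_error(points, known), 3))
print(round(slope_per_week([0.2, 0.3, 0.4, 0.5]), 3))
```

A slope near zero is consistent with a stable calibration. What counts as a significant upward trend depends on the measurement tolerance of your study and should be defined before data collection begins.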

| Item | Function & Importance | Specification Notes |

|---|---|---|

| Calibration Target | Provides known geometry for estimating camera parameters. Planar targets (checkerboard/Charuco) are most common [3] [16]. | Must be flat and rigid [10]. Square size must be known and consistent. High-contrast, non-reflective surface is critical [3]. |

| Software Library (OpenCV) | Industry-standard library for computer vision. Contains full implementations for target detection, parameter calculation, and undistortion [3]. | Use functions like findChessboardCorners, calibrateCamera, and undistort. |

| Validation Fixture | An object of known, stable dimensions used to check calibration accuracy independently of the original target. | Should be different from the calibration target to avoid bias. Ideal for detecting measurement drift over time. |

| Reprojection Error Script | A custom script to calculate the mean reprojection error, providing a key quantitative metric of calibration quality [16]. | The core calculation involves the Euclidean distance between observed image points and points projected using the calibrated parameters [16]. |
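
The core calculation of such a reprojection error script can be sketched in plain Python. This is a simplified illustration, not the implementation used by any particular tool. It assumes the 3D points are already expressed in the camera coordinate frame (extrinsics applied), uses a pinhole model with two radial distortion terms (`k1`, `k2`), and omits tangential distortion.

```python
import math


def project_point(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point in camera coordinates to pixel coordinates."""
    x_cam, y_cam, z_cam = point_cam
    if z_cam <= 0:
        raise ValueError("point must be in front of the camera")
    x, y = x_cam / z_cam, y_cam / z_cam          # normalized image coordinates
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2        # radial distortion factor
    return (fx * x * radial + cx, fy * y * radial + cy)


def mean_reprojection_error(points_cam, observed_px, **intrinsics):
    """Mean Euclidean distance (pixels) between observed and projected points."""
    errors = [
        math.dist(project_point(point, **intrinsics), observed)
        for point, observed in zip(points_cam, observed_px)
    ]
    return sum(errors) / len(errors)


# Illustrative intrinsics and points; values are made up for the example.
intrinsics = dict(fx=800.0, fy=800.0, cx=320.0, cy=240.0)
points_cam = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0)]
observed_px = [(320.3, 240.4), (400.0, 240.0)]
print(round(mean_reprojection_error(points_cam, observed_px, **intrinsics), 3))
```

Note that some tools report a root-mean-square error rather than a mean, so compare values only between runs that use the same definition.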

Workflow and Impact Diagrams

The following diagram illustrates the logical chain of how poor calibration leads to compromised research outcomes.

Logical Chain from Poor Calibration to Compromised Outcomes

The diagram below outlines a robust calibration and validation workflow to prevent the issues detailed above.

Camera Calibration and Validation Workflow

Building Your Calibration Protocol: From Checkerboards to Fisheye Lenses

In scientific research, particularly in fields requiring consistent behavior measurement such as drug development, the accuracy of your camera system is paramount. This accuracy is established through a process called camera calibration, which corrects for lens distortion and sensor imperfections to ensure that measurements made from images are reliable and metrologically valid. The choice of calibration target is the first and one of the most critical steps in this process, directly influencing the precision of your entire vision system [19]. This guide provides a detailed, technical comparison of the three primary calibration target patterns—Checkerboard, Charuco, and Circular Grids—to help you select the optimal tool for your research and troubleshoot common implementation challenges.

Comparative Analysis of Calibration Patterns

The table below provides a quantitative and qualitative comparison of the three main calibration target types to inform your selection.

Table 1: Comparative Analysis of Calibration Target Patterns

| Feature | Checkerboard | Charuco (Checkerboard + Aruco) | Circular Grid |

|---|---|---|---|

| Core Principle | Detection of corner (saddle) points where four squares meet [19] | Checkerboard corners with unique Aruco markers for identification [19] | Detection of circle centers or ellipses [19] [20] |

| Detection Workflow | Image binarization → quadrilateral detection → grid structure matching [19] | Aruco marker identification → interpolation of saddle points between markers [19] | Blob detection → filtering by area, circularity → grid structure identification [19] |

| Key Advantage | High subpixel accuracy due to infinitesimal, unbiased saddle points [19] [20] | Partial visibility support; resistant to occlusions and uneven lighting [19] [10] | Noise resilience through circle fitting using all perimeter pixels [19] |

| Primary Limitation | Entire board must be visible in all images, limiting data from image edges [19] [20] | Higher algorithm complexity; requires specialized libraries (e.g., OpenCV 3.0+) [19] | Small perspective bias; circles project as ellipses, introducing minor fitting errors [19] [20] |

| Ideal Use Case | Single-camera calibration in controlled conditions with full-board visibility [19] | Multi-camera systems, high-distortion lenses, confined spaces, or variable lighting [19] [10] | Backlit applications or environments with variable lighting [19] |

| Subpixel Refinement | Yes [19] [20] | Yes [19] | Yes (performance varies by software implementation) [20] |

| Occlusion Tolerance | Low | High | Medium (individual circles can be partially occluded) [19] |

Visual Guide for Target Selection

The following diagram outlines the decision-making workflow for selecting the most appropriate calibration pattern based on your experimental conditions.

Experimental Protocols and Implementation

Target Design and Sizing Specifications

Proper physical target specification is critical for constraining the camera model and achieving high accuracy [19] [20].

Table 2: Calibration Target Design and Sizing Specifications

| Parameter | Specification | Technical Rationale |

|---|---|---|

| Field of View (FOV) Coverage | Target should occupy >50% of image pixels when viewed frontally [19] [21]. | A small target allows multiple camera parameter combinations to explain observations, degrading model constraints [19]. |

| Working Distance & Focus | Calibrate at the application's intended working distance. Maintain consistent focus and aperture after calibration [19] [21]. | Changing focus or aperture alters the principal distance and introduces optical aberrations, invalidating the calibration [19]. |

| Feature Resolution | Aim for at least 5 pixels per feature (e.g., checkerboard square) [21]. | Prevents aliasing and provides a smooth gradient for accurate sub-pixel fitting. |

| Checkerboard Symmetry | Use an even number of rows and an odd number of columns, or vice-versa [19] [20]. | Avoids 180-degree rotational ambiguity, which is critical for stereo calibration and target geometry optimization [20]. |

| Material & Flatness | Use laser-printed or etched targets on non-reflective, rigid substrates (e.g., aluminum composite). Deformation tolerance should be <0.1 mm/m² [19] [21]. | Ensures geometric stability. Warped targets introduce errors in 3D point localization. A rigid board is essential for proper detection [10]. |
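
Several rows of Table 2 can be turned into a quick pre-print check. The sketch below is a rough planning aid under stated assumptions: the board's apparent size in pixels is estimated for a frontal view, the thresholds are the ones quoted in the table, and the function and its parameter names are hypothetical.

```python
def design_warnings(image_size_px, board_size_px, squares_across, squares_down,
                    min_coverage=0.5, min_px_per_square=5.0):
    """Return a list of warnings for a planned checkerboard target.

    image_size_px: (width, height) of the camera image
    board_size_px: (width, height) the board occupies in a frontal view
    """
    warnings = []
    image_w, image_h = image_size_px
    board_w, board_h = board_size_px

    coverage = (board_w * board_h) / (image_w * image_h)
    if coverage <= min_coverage:
        warnings.append(f"frame coverage {coverage:.0%} is not above {min_coverage:.0%}")

    px_per_square = board_w / squares_across
    if px_per_square < min_px_per_square:
        warnings.append(f"only {px_per_square:.1f} px per square")

    # Table 2 recommends one even and one odd dimension.
    if squares_across % 2 == squares_down % 2:
        warnings.append("rows and columns share parity: possible 180-degree ambiguity")
    return warnings


print(design_warnings((1920, 1080), (1400, 900), squares_across=9, squares_down=6))
print(design_warnings((1920, 1080), (400, 300), squares_across=8, squares_down=6))
```

An empty list means none of the three checks fired. It does not replace measuring the printed target or inspecting it for flatness.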

Image Acquisition Protocol

A robust data acquisition methodology is required for a reliable calibration. The following steps outline a standard protocol:

- Image Count: Acquire 15-30 image pairs (for stereo) or images (for mono) [19].

- Target Poses: Position the target at varying orientations and depths within the working volume. Ensure the target appears in different sectors of the image, including the edges and corners, to better constrain the lens distortion model [19] [22].

- Lighting: Maintain consistent, diffuse illumination to avoid specular reflections and high-contrast shadows that can disrupt feature detection [19] [10].

- Validation: Manually inspect acquired images to ensure the pattern is clearly visible and in focus. Blurry or poorly lit images should be discarded [22].

Troubleshooting Guide: Frequently Asked Questions (FAQs)

Q1: My calibration software fails to detect the pattern. What are the most common causes?

- Insufficient Contrast or Lighting: Ensure high contrast between light and dark pattern elements. Avoid narrow aperture settings or incorrect exposure that reduce contrast. Turn off auto-gamma correction and set gain to unity to minimize noise [22].

- Glare or Reflections: Specular reflections can obscure the pattern. Tilt the target slightly to avoid direct reflections from light sources [10].

- Pattern Too Small: If the target occupies a small portion of the FOV, features will have low resolution, making them hard to detect. Use a larger target or move it closer to the camera [19] [21].

- Non-Rigid or Warped Target: The algorithm expects a flat plane. Mount paper targets on a rigid surface like cardboard or poster board to ensure flatness [10].

Q2: My calibration completes, but the 3D measurements are inaccurate. What could be wrong?

- Insufficient Data from Image Periphery: The lens distortion model is poorly constrained if the target was only shown in the image center. Use a Charuco board, which allows for partial views, to gather data from the very edges of the image [19] [20].

- Focus Shift: The calibration was invalidated by changing the lens focus or aperture after the fact. Always calibrate at your application's working distance and maintain fixed lens settings [19] [21].

- Poor Reprojection Error: During the calibration process, inspect the median reprojection error reported by your software. A value significantly above 0.1 pixels indicates a poor calibration likely due to bad image quality, an unfocused lens, or a poorly constrained target [22].

Q3: For a multi-camera setup, why is it critical to use asymmetric patterns (like asymmetric circular grids or Charuco)?

Symmetric patterns (like a standard circle grid or an even/even checkerboard) have a 180-degree rotational ambiguity [20]. Without a unique origin, the calibration software cannot consistently identify the same physical point from different camera viewpoints. This confusion leads to failures in correctly estimating the relative position and orientation (extrinsic parameters) between cameras. Asymmetric or coded patterns uniquely identify points, resolving this ambiguity [19].
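
The rotational ambiguity can be illustrated with point geometry alone. The sketch below compares a regular grid of circle centers with a staggered grid in which every other row is shifted by half a pitch (an assumption modeled on common asymmetric circle grids, here with 11 rows of 4 circles). It only tests whether the set of centers maps onto itself under a 180-degree rotation. How a specific detector behaves also depends on its implementation.

```python
def symmetric_grid(rows, cols):
    """Circle centers on a regular grid, in units of the grid pitch."""
    return {(col, row) for row in range(rows) for col in range(cols)}


def staggered_grid(rows, cols):
    """Circle centers with odd rows shifted sideways (half-pitch units on x)."""
    return {(2 * col + row % 2, row) for row in range(rows) for col in range(cols)}


def rotate_180(points):
    """Rotate the point set by 180 degrees about the center of its bounding box."""
    max_x = max(x for x, _ in points)
    max_y = max(y for _, y in points)
    return {(max_x - x, max_y - y) for x, y in points}


def is_180_symmetric(points):
    """True when the rotated pattern is indistinguishable from the original."""
    return rotate_180(points) == points


print(is_180_symmetric(symmetric_grid(4, 5)))    # regular grid
print(is_180_symmetric(staggered_grid(11, 4)))   # staggered grid with 11 rows
```

In this geometric sense the regular grid looks identical after being turned upside down, while the staggered layout with an odd number of rows does not, which is what allows a consistent origin to be assigned across cameras.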

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Essential Materials for Camera Calibration Experiments

| Item | Function / Application | Technical Notes |

|---|---|---|

| Charuco Board | Recommended robust target for multi-camera systems and high-distortion lenses [19]. | Ensure unique marker IDs and correct board dimensions (e.g., 5x7 grid of squares) as defined by your software library [10]. |

| High-Quality Target Print | Provides the physical pattern for feature detection. | Use laser printing or lithography on non-reflective, rigid substrates (e.g., aluminum composite) to ensure flatness and dimensional accuracy [19] [21]. |

| OpenCV Library | Open-source computer vision library with extensive calibration tools [19]. | Supports checkerboard, circular grid, and Charuco detection. Required for implementing Charuco-based calibration [19]. |

| Calibration Software (e.g., calib.io, MATLAB) | Software tools to compute intrinsic and extrinsic camera parameters from acquired images [22] [20]. | Offers advanced features like accounting for perspective bias in circular targets and robust optimization [20]. |

| Stable Mounting System | For securing cameras and the calibration target during data acquisition. | Eliminates motion blur and ensures the target plane remains consistent, improving calibration accuracy. |

This guide provides a proven workflow for image capture and camera calibration, a critical process for ensuring measurement accuracy in behavior measurement research. Proper calibration corrects lens distortions and determines intrinsic and extrinsic camera parameters, which is foundational for reliable data in studies involving animal behavior tracking, movement analysis, and other consistent behavior measurements [15]. This support center article details the necessary materials, a step-by-step protocol, troubleshooting for common issues, and a list of frequently asked questions to support researchers, scientists, and drug development professionals in achieving consistent and reproducible results.

The following diagram illustrates the comprehensive, iterative workflow for camera calibration, from initial setup to final validation. Adherence to each step is crucial for obtaining accurate parameters.

The Scientist's Toolkit: Research Reagent Solutions

The table below details the essential materials and software required for a successful camera calibration procedure.

| Item Name | Function/Application | Specification Guidelines |

|---|---|---|

| Calibration Target | A known physical pattern used to establish 2D-3D correspondences for parameter calculation [3]. | Use a high-contrast, flat checkerboard or grid pattern. For highest accuracy, use a vector format (PDF/SVG) and ensure no scaling is applied during printing [3]. The material should be rigid and non-reflective [3]. |

| Precision Measuring Tool | To measure the physical dimensions of the calibration target's features [3]. | Use calipers for precise measurement of squares or markers. Precision is crucial for accurate results [3]. |

| Stable Mounting | To prevent motion blur and ensure consistent focus and framing during image capture [3]. | Use a stable tripod for the camera or the target. Avoid touching the camera during capture to prevent blurry images [3]. |

| Controlled Lighting Source | To provide consistent, diffuse illumination and minimize shadows that can interfere with pattern detection [3]. | Use a diffuse light source to minimize harsh shadows and ensure even illumination of the calibration target [3]. |

| Calibration Software | Computer vision tools that detect pattern features and calculate the camera's intrinsic and extrinsic parameters [15] [3]. | Common tools include OpenCV, MATLAB, and ROS. These tools analyze images to estimate parameters and correct for lens distortion [3]. |

Experimental Protocols: Detailed Methodology

Step 1: Pre-Capture Setup and Preparation

- Camera Configuration: Set your camera to a fixed focus and a fixed exposure setting. Do not change the zoom, as this invalidates the calibration [3].

- Resolution Setting: Calibrate the camera at the resolution you intend to use for your actual experiments, as intrinsic parameters are resolution-dependent [3].

- Target Preparation: Print your calibration target (e.g., a checkerboard) using a high-quality, non-reflective material. Precisely measure the size of one square using calipers [3]. Ensure the target has a white border around it, ideally as large as one grid element, to minimize false feature detections [3].

Step 2: Systematic Image Capture

- Capture Volume: Capture a minimum of 10-20 images of the calibration pattern from different angles, distances, and orientations [3].

- Frame Coverage: The pattern should cover a significant portion of the frame—aim for at least 50%, ideally 80%, when the target is parallel to the camera at your working distance [3].

- Pose Variety:

- Include one fronto-parallel image (target parallel to the camera sensor) that fills the entire frame [3].

- For about 50% of your images, tilt the target at angles up to ±45 degrees to introduce foreshortening [3].

- Capture images with the edges of the target close to all four sides and corners of the camera frame to ensure even lens coverage [3].

- Image Quality: Use uncompressed (e.g., RAW) or lossless compression formats (e.g., PNG). Do not crop or modify the images before calibration [3].

Step 3: Parameter Calculation with Software

- Input Data: Load the captured images into your chosen calibration software (e.g., OpenCV, MATLAB). Input the known physical dimensions of the target pattern [3].

- Software Processing: The software will automatically detect key points (e.g., checkerboard corners) in the images and establish correspondences between the 2D image points and 3D world points [15] [3].

- Model Fitting: The calibration algorithm will compute the camera projection matrix by estimating the optimal intrinsic parameters (focal length, principal point, distortion coefficients) and extrinsic parameters (rotation and translation vectors for each image) that minimize the reprojection error [3].
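
Step 3 can be sketched with OpenCV's Python bindings. This is a minimal outline under stated assumptions, not a complete calibration tool: it assumes a standard checkerboard with 9 x 6 inner corners and 25 mm squares (placeholders for your own target), images of identical resolution, and that `opencv-python` and `numpy` are installed. The helper names are illustrative.

```python
def board_object_points(cols, rows, square_size_mm):
    """3D coordinates of the inner corners on the flat board (Z = 0), row by row."""
    return [(col * square_size_mm, row * square_size_mm, 0.0)
            for row in range(rows) for col in range(cols)]


def calibrate_from_images(image_paths, cols=9, rows=6, square_size_mm=25.0):
    """Detect checkerboard corners in each image and run cv2.calibrateCamera."""
    # Imported here so the helper above stays usable without OpenCV installed.
    import cv2
    import numpy as np

    template = np.array(board_object_points(cols, rows, square_size_mm), dtype=np.float32)
    criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
    object_points, image_points, image_size = [], [], None

    for path in image_paths:
        gray = cv2.imread(path, cv2.IMREAD_GRAYSCALE)
        if gray is None:
            continue  # unreadable file
        found, corners = cv2.findChessboardCorners(gray, (cols, rows))
        if not found:
            continue  # skip images where detection failed
        corners = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
        object_points.append(template)
        image_points.append(corners)
        image_size = gray.shape[::-1]  # (width, height)

    if len(image_points) < 3:
        raise ValueError("too few images with a detected pattern")

    rms, camera_matrix, dist_coeffs, rvecs, tvecs = cv2.calibrateCamera(
        object_points, image_points, image_size, None, None)
    return rms, camera_matrix, dist_coeffs


print(board_object_points(3, 2, 10.0))
```

`cv2.calibrateCamera` returns an overall RMS reprojection error as its first value, which feeds directly into the quantitative check in Step 4.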

Step 4: Validation of Results

- Quantitative Check: The primary metric is the reprojection error, which is the difference between the detected feature locations and their projected positions using the calculated camera model. Aim for a reprojection error of less than 0.5 pixels [3].

- Visual Inspection: Undistort a sample image using the estimated distortion coefficients. Check that straight lines in the real world appear straight in the corrected image, especially near the edges [15] [3].

- Coverage Check: Use software tools (e.g., mrcal or MATLAB's visualization) to plot the positions of the calibration target in the camera's field of view. This helps identify any gaps in coverage that could make the calibration unreliable for certain areas of the image [3].
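
If no visualization tool is at hand, a coarse coverage check can be approximated by counting detected pattern points per image region. The sketch below is a simple stand-in for the plots mentioned above, not a reproduction of them. It assumes you can export the detected corner coordinates from all calibration images as `(x, y)` pixel pairs; the grid size is arbitrary.

```python
def coverage_grid(points_px, image_size, grid=(4, 4)):
    """Count detected pattern points in each cell of a grid laid over the image.

    points_px: iterable of (x, y) pixel coordinates from all calibration images
    image_size: (width, height) in pixels
    grid: (columns, rows) of the coverage grid
    """
    width, height = image_size
    cols, rows = grid
    counts = [[0] * cols for _ in range(rows)]
    for x, y in points_px:
        if 0 <= x < width and 0 <= y < height:
            counts[int(y * rows / height)][int(x * cols / width)] += 1
    return counts


def empty_cells(counts):
    """Return (row, column) indices of grid cells that contain no detections."""
    return [(row, col)
            for row, line in enumerate(counts)
            for col, n in enumerate(line) if n == 0]


# Illustrative detections: only two opposite corners of the frame are covered.
counts = coverage_grid([(10, 10), (630, 470)], image_size=(640, 480), grid=(2, 2))
print(counts)
print(empty_cells(counts))
```

Empty cells, particularly along the borders, indicate regions where additional target poses should be captured before the calibration is trusted there.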

Troubleshooting Guides

Common Error Messages and Solutions

| Error Message / Symptom | Possible Cause | Solution |

|---|---|---|

| High Reprojection Error | (1) Poor image quality (blur, motion) [3]. (2) Incorrect target dimension input [3]. (3) Poor variety of target poses (overfitting) [3]. (4) False feature detections (outliers) [3]. | (1) Recapture images with a stable mount and sharp focus [3]. (2) Verify physical measurements of the target [3]. (3) Add more images with diverse tilts and positions. (4) Inspect and remove images where feature detection failed. |

| Poor Performance Despite Low Error | Overfitting to a narrow set of viewpoints [3]. | The calibration is accurate only for a specific viewpoint. Capture a new, more diverse set of images that cover the entire field of view as specified in the protocol [3]. |

| Incorrect Undistortion | (1) Using an incorrect lens model (e.g., standard model for a fisheye lens) [3]. (2) Insufficient pattern coverage at image edges [3]. | (1) For wide-angle or fisheye lenses, use a specialized calibration model (e.g., OpenCV's fisheye module) [3]. (2) Ensure your image set includes views where the pattern is visible near all edges of the frame. |

Frequently Asked Questions (FAQs)

Q1: Why is my reprojection error low in calibration, but my 3D measurements in the real experiment are still inaccurate? A low reprojection error indicates a good fit to your calibration data, but it does not guarantee accuracy across the entire field of view. This is often caused by an unrepresentative set of calibration images that do not cover the same areas where your experiment is taking place. Ensure your calibration images have even coverage of the entire frame, especially the edges and corners [3].

Q2: How often should I recalibrate my camera? Recalibrate whenever the physical configuration of your camera changes. This includes changes to focus, zoom, or aperture. It is also good practice to periodically recalibrate (e.g., at the start of a new experimental series) to account for any subtle mechanical shifts or to verify that the existing calibration is still valid [15] [3].

Q3: What should I do if my calibration software fails to detect the pattern in some images? First, visually inspect the failed images. Common reasons include poor lighting, shadows falling across the pattern, the pattern being out of focus, or the pattern not being fully visible in the frame. Remove these images from your calibration set and recapture them under better conditions. The software typically requires a clear, high-contrast view of the pattern to function correctly [3].

Q4: My application uses a wide-angle lens. Are there any special considerations? Yes. Wide-angle lenses often exhibit strong radial distortion (e.g., barrel distortion). When using calibration software, ensure you are using a model that can account for this, such as a fisheye model if applicable. Furthermore, it is critical that your calibration images have strong coverage at the edges of the frame, where this distortion is most pronounced [15] [3].

A guide to achieving precise and consistent camera calibration for rigorous scientific research.

Camera calibration is a foundational step in quantitative image analysis, enabling researchers to extract accurate and reliable measurements from video data. Inconsistent calibration can compromise data integrity, leading to unreliable results in behavior measurement studies. This guide provides detailed protocols and troubleshooting advice to ensure your camera calibration supports the highest standards of scientific rigor.

Calibration Fundamentals and Setup

Proper calibration estimates your camera's internal parameters (like focal length and lens distortion) to correct image imperfections, ensuring that measurements in the 2D image plane accurately represent the 3D world [3].

Essential Research Reagents and Materials

Gathering the right tools is the first critical step for a successful calibration.

| Item | Specification | Function in Experiment |

|---|---|---|

| Calibration Target | Checkerboard or Charuco board; high-contrast, flat, and rigid [3] [10]. | Serves as the known geometric reference for the calibration algorithm. |

| Precision Measuring Tool | Calipers or a high-quality ruler [3]. | Provides the ground-truth measurement for the calibration target's features. |

| Stable Platform | Tripod or fixed camera mount [3]. | Eliminates motion blur and ensures consistency across captured images. |

| Controlled Lighting | Diffuse, uniform light source to minimize shadows and glare [3] [10]. | Ensures the calibration pattern is clearly visible and detectable by software. |

Workflow for a Successful Calibration

The following diagram outlines the core steps and decision points in a robust camera calibration protocol.

Workflow Stages Explained:

- Prepare Calibration Target: Use a high-quality print of a checkerboard or Charuco board. For rigidity, mount it on a flat, solid surface like poster board [10]. Measure the physical size of the squares with calipers for precise input into calibration software [3].

- Configure Camera and Environment: Before capturing, lock your camera's settings. Use a fixed focus, fixed exposure, and the desired resolution for your research. Changing these later will invalidate the calibration. Illuminate the target with diffuse, even lighting to prevent shadows and glare that can interfere with pattern detection [3].

- Capture a Comprehensive Image Set: Move the target through the camera's field of view. Capture 15-20 images from a variety of angles and positions, ensuring the pattern is visible across the entire frame, especially the edges where lens distortion is most pronounced [3].

Troubleshooting Common Calibration Errors

Problem: High Reprojection Error

The reprojection error quantifies the difference between where the calibration algorithm predicts a pattern point should be and where it was actually detected in the image. An error of less than 0.5 pixels is ideal [3].

- Potential Cause 1: Poor Quality or Insufficient Image Set

- Solution: Ensure your image set has sufficient variety. The target should be tilted up to ±45 degrees and cover all areas of the frame, including the corners. Avoid overfitting by not using multiple nearly identical images [3].

- Potential Cause 2: Incorrect Pattern Detection

- Solution: Manually inspect the images in your calibration software to verify it correctly identified every corner of the pattern. Look for and remove any images with false detections or outliers [3].
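
Outlier images can be shortlisted automatically before manual inspection. The sketch below assumes your software can export a mean reprojection error per image. The file names and values are made up, and the rule (median plus a multiple of the median absolute deviation, with a small minimum margin) is one reasonable heuristic rather than a standard prescribed by the cited sources.

```python
import statistics


def flag_outlier_images(per_image_error_px, k=3.0, min_margin_px=0.05):
    """Return image names whose error exceeds median + max(k * MAD, min_margin_px)."""
    errors = list(per_image_error_px.values())
    median = statistics.median(errors)
    mad = statistics.median(abs(e - median) for e in errors)
    threshold = median + max(k * mad, min_margin_px)
    return sorted(name for name, e in per_image_error_px.items() if e > threshold)


# Illustrative per-image mean reprojection errors in pixels.
per_image_error_px = {
    "img01.png": 0.21,
    "img02.png": 0.25,
    "img03.png": 0.19,
    "img04.png": 1.40,
    "img05.png": 0.23,
}
print(flag_outlier_images(per_image_error_px))
```

Flagged images are candidates for inspection, not automatic deletion: an image near the frame edge may carry a higher error yet still provide coverage that the calibration needs.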

Problem: Calibration Software Fails to Detect Pattern

- Potential Cause 1: Glare or Poor Lighting

- Solution: Glare can obscure the pattern. Tilt the board slightly up and down under your lights to find an angle that eliminates reflections [10].

- Potential Cause 2: Target is Too Small or Blurry

- Solution: The calibration pattern should cover a significant portion of the frame. For a more reliable detection, especially with high-resolution cameras, consider using a larger Charuco board [10].

- Potential Cause 3: Mirrored or Reversed Images

- Solution: Some front-facing smartphone cameras apply mirroring by default. This will prevent pattern recognition. Disable mirroring in the camera settings or use the rear camera [10].

Problem: Inconsistent Measurements After Calibration

- Potential Cause: Calibration Conditions Mismatch with Experimental Setup

- Solution: Calibrate under the same conditions as your experiment. This includes using the same camera resolution, lens focus, and zoom settings. A calibration is only valid for the specific setup and resolution for which it was performed [3].

Frequently Asked Questions (FAQs)

What is the minimum number of images required for a good calibration?

While you can get results with 10 images, capturing 15 to 20 images is recommended for a stable and accurate calibration [3]. The key is variety in angles and positions, not just quantity.

How do I validate my calibration results beyond the reprojection error?

A low reprojection error is necessary but not always sufficient [3].

- Visual Inspection: Apply the distortion coefficients to "undistort" your images. Straight lines in the real world should appear straight in the corrected images [3].

- Extrinsic Visualization: Use tools like MATLAB or the open-source mrcal to visualize the positions of the target during calibration. This helps confirm you achieved full coverage of the field of view [3].

Why is my stereo camera calibration inaccurate?

For multi-camera setups, ensure that during capture, the calibration target is fully visible in both cameras simultaneously for a range of positions [3]. Each camera pair must share multiple views of the target for the software to accurately calculate their relative positions.

My camera has a fisheye lens. Does the standard process work?

No. Fisheye lenses require a specialized calibration model. Standard pinhole models will not suffice. Use dedicated fisheye calibration modules, such as those available in OpenCV or MATLAB [3].

Troubleshooting Guides

Q1: My images do not align after calibration, and my point cloud appears delaminated. What could be wrong?

This is a common issue in 3D reconstruction, often stemming from misaligned camera transforms. The root causes and solutions are multifaceted [23].

Potential Causes and Diagnostic Steps:

- Inaccurate Camera Calibration: Even slight errors in your camera's intrinsic parameters (focal length, principal point) or extrinsic parameters (camera pose) can cause significant misalignments. Begin by verifying your calibration results [23].

- Incorrect Coordinate System Conventions: Different libraries (e.g., OpenCV vs. OpenGL) use different coordinate conventions. A mismatch will introduce errors. Double-check that your transformations between the camera and world coordinate systems are consistent and correctly implemented [23].

- Noisy Depth Data: The quality of your depth data directly impacts backprojection. Noisy or incomplete depth maps will result in a misaligned point cloud [23].

- Faulty Relative Camera Poses: Errors in the relative poses between cameras in a multi-view system will prevent proper alignment [23].

Solutions:

- Verify Camera Calibration: Re-calibrate your camera using a robust method. Use a checkerboard pattern and ensure your calibration images cover the entire field of view, especially the edges, to accurately capture lens distortion. OpenCV's cv2.calibrateCamera function is a standard tool for this [24].

- Double-Check Coordinate Transformations: Ensure your rotation matrices and translation vectors align with your primary library's convention. In OpenCV's camera coordinate system, the camera looks along the positive Z-axis (with Y pointing down), whereas OpenGL-style conventions have the camera looking along the negative Z-axis (with Y pointing up). You may need to construct a custom camera-to-world transform to ensure correctness [23].

- Filter Depth Data: Before backprojecting, apply filtering techniques like median filtering or statistical outlier removal to your depth maps to smooth noise and eliminate erroneous values [23].

- Optimize Relative Poses: For multi-camera systems, use techniques like bundle adjustment to jointly optimize camera poses and 3D point positions, minimizing reprojection errors for a more consistent reconstruction [23].
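
A coordinate convention mismatch can be handled with a single axis flip. The sketch below assumes the commonly documented conventions: an OpenCV-style camera frame with X right, Y down, and Z forward, and an OpenGL-style frame with X right, Y up, and the camera looking along negative Z. It operates on 4x4 camera-to-world matrices stored as nested lists, and the function names are illustrative.

```python
# Flips the camera's Y and Z axes; applying it twice restores the original matrix.
FLIP_YZ = [
    [1, 0, 0, 0],
    [0, -1, 0, 0],
    [0, 0, -1, 0],
    [0, 0, 0, 1],
]


def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def opencv_to_opengl_c2w(c2w_opencv):
    """Convert a camera-to-world pose from the OpenCV to the OpenGL camera convention."""
    return matmul4(c2w_opencv, FLIP_YZ)


def viewing_direction_world(c2w, convention):
    """World-space direction the camera looks along, for the given convention."""
    z_axis = [c2w[i][2] for i in range(3)]  # camera Z axis expressed in world coordinates
    if convention == "opencv":
        return z_axis
    if convention == "opengl":
        return [-v for v in z_axis]
    raise ValueError("convention must be 'opencv' or 'opengl'")


identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
converted = opencv_to_opengl_c2w(identity)
print(viewing_direction_world(identity, "opencv"))
print(viewing_direction_world(converted, "opengl"))
```

Both calls describe the same physical viewing direction, which is the property to verify after any conversion: the camera has not moved, only the labeling of its axes has changed.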

Q2: How can I evaluate the accuracy of my single-camera calibration to ensure it's reliable?

Evaluating calibration accuracy is crucial before proceeding with experiments. You can assess it using several methods [2].

- Examine Reprojection Errors: The reprojection error is the distance between a detected pattern keypoint in the image and its corresponding world point projected back into the image using the calibrated camera model. A high error indicates poor calibration. You can visualize the mean reprojection error for each calibration image to identify and remove outliers [2].

- Analyze Estimation Errors: The standard error of each estimated parameter (e.g., focal length, distortion coefficients) represents its uncertainty. A larger standard error for a parameter suggests less confidence in its estimated value. These errors can be used to calculate confidence intervals for your parameters [2].

- Plot Extrinsics: Visually inspect the relative locations of the camera and the calibration pattern for all the images used. This can reveal obvious errors, such as a pattern being behind the camera or a camera being behind the pattern, which would indicate a serious calibration problem [2].

Table: Standard Calibration Accuracy Metrics

| Metric | Description | Interpretation |
|---|---|---|
| Mean Reprojection Error | Average distance (in pixels) between detected image points and reprojected world points. | A lower value is better. An overall mean error below 0.5 pixels is often considered good, but this depends on application requirements [2]. |
| Parameter Standard Error | Standard deviation (uncertainty) for each estimated camera parameter (e.g., focal length). | Smaller values indicate higher confidence in the parameter estimate. For example, a focal length of 714.19 ± 3.32 pixels [2]. |
| Extrinsics Visualization | A 3D plot of camera and pattern positions for all calibration images. | Helps identify obvious pose estimation errors visually [2]. |
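The first two metrics can be computed directly once you have detected and reprojected points. The sketch below is one possible way to do this in plain Python: it computes the mean reprojection error per image, flags images above a threshold, and turns a standard error into an approximate 95% interval. The function names, the sample points, and the 1-pixel threshold are assumptions chosen for illustration, and the interval assumes the estimate is approximately normally distributed.

```python
import math


def mean_reprojection_error(detected, reprojected):
    """Mean Euclidean distance (pixels) between paired 2D points."""
    if len(detected) != len(reprojected) or not detected:
        raise ValueError("point lists must be non-empty and equal in length")
    distances = [math.hypot(dx - rx, dy - ry)
                 for (dx, dy), (rx, ry) in zip(detected, reprojected)]
    return sum(distances) / len(distances)


def flag_outlier_images(per_image_errors, threshold_px=1.0):
    """Return indices of images whose mean error exceeds the threshold."""
    return [i for i, err in enumerate(per_image_errors) if err > threshold_px]


def confidence_interval(estimate, std_error, z=1.96):
    """Approximate interval estimate +/- z * std_error (z=1.96 ~ 95%)."""
    half_width = z * std_error
    return estimate - half_width, estimate + half_width


# Made-up data for three calibration images (two points each, in pixels).
detected = [
    [(100.0, 100.0), (200.0, 100.0)],
    [(150.0, 120.0), (250.0, 120.0)],
    [(300.0, 300.0), (400.0, 300.0)],
]
reprojected = [
    [(100.3, 100.4), (200.0, 100.5)],
    [(150.0, 120.2), (250.1, 120.0)],
    [(303.0, 304.0), (400.0, 302.0)],
]

errors = [mean_reprojection_error(d, r) for d, r in zip(detected, reprojected)]
outliers = flag_outlier_images(errors, threshold_px=1.0)

# Interval for the focal-length example quoted in the table.
low, high = confidence_interval(714.19, 3.32)
```

In this made-up data set only the third image exceeds the threshold, so it would be the candidate to inspect, remove, or retake before recalibrating.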

Q3: My stereo camera calibration is complete, but 3D reconstruction is inaccurate. How can I improve it?

Inaccurate depth estimation in stereo vision often originates from the calibration process itself.

Troubleshooting Steps:

- Verify Individual Camera Calibration: First, ensure that the intrinsic calibration (camera matrix and distortion coefficients) for each camera in the stereo pair is accurate. Errors in individual camera parameters will propagate to the stereo calibration [25].

- Check Stereo Calibration Parameters: The rotation matrix (R) and translation vector (T) that define the relative position and orientation of the two cameras are critical. An error here will misalign the epipolar geometry. Visually inspect the extrinsics if your calibration tool allows it [25] [26].

- Rectify Images: After calibration, you must use the stereo parameters to rectify the images. Rectification transforms the images so that corresponding points are on the same horizontal row, which is a prerequisite for efficient stereo matching. Use functions like cv2.stereoRectify and cv2.initUndistortRectifyMap in OpenCV [25] [26].

- Review Calibration Images: The quality of your calibration images is paramount. Ensure you have a sufficient number of image pairs (10-20 is recommended) where the calibration pattern is fully visible in both cameras and placed at different orientations and distances, covering the entire field of view [27] [26].
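To see why calibration errors propagate into depth, it can help to work through the standard relation for a rectified stereo pair, Z = f · B / d (focal length in pixels, baseline in metres, disparity in pixels). The sketch below uses a made-up rig; the function name and all numbers are assumptions for illustration only.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth (metres) of a point in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Made-up rig: 800 px focal length, 10 cm baseline.
focal_px = 800.0
baseline_m = 0.10

true_depth = depth_from_disparity(focal_px, baseline_m, disparity_px=40.0)

# A 0.5 px disparity error (for example, from residual calibration error)
# changes the recovered depth.
biased_depth = depth_from_disparity(focal_px, baseline_m, disparity_px=39.5)

# A 2% error in the calibrated focal length scales every depth by 2%.
scaled_depth = depth_from_disparity(focal_px * 1.02, baseline_m, disparity_px=40.0)

print(f"true depth:             {true_depth:.3f} m")
print(f"0.5 px disparity error: {biased_depth:.3f} m")
print(f"2% focal error:         {scaled_depth:.3f} m")
```

Because depth is inversely proportional to disparity, the same sub-pixel error matters more for distant points, where disparities are small.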

Frequently Asked Questions (FAQs)

Q4: What are the best practices for capturing images for camera calibration?

Following a rigorous protocol for image capture is the foundation of an accurate calibration.

Protocol Checklist:

- Fixed Focus and Zoom: Disable autofocus and auto-zoom. Changing focus or zoom between images changes the intrinsic parameters, so no single set of parameters can describe the whole image set and the calibration becomes invalid [27].

- Number of Images: Use a minimum of 10-20 images of the calibration pattern. The calibrator requires at least three, but more images lead to a more robust and accurate solution [27] [24].

- Pattern Placement: The pattern must not be coplanar across all images. Capture the pattern at a variety of orientations and positions relative to the camera. Tilt it and move it to cover the entire image frame, ensuring keypoints are present near the edges and corners [27] [24].

- Pattern Coverage: The calibration pattern should cover at least 20% of the captured image [27].

- Image Quality: Use uncompressed images (e.g., PNG) or images with lossless compression. Do not crop or modify the images after capture. Ensure the pattern is well-lit and not blurry [27].
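Parts of this checklist can be screened automatically before running a calibration. The sketch below checks the image count and the 20% coverage guideline, approximating the pattern's area by the bounding box of its detected corners. That approximation, the function names, and the sample corner coordinates are assumptions; a bounding box of inner corners slightly underestimates the true board area and overestimates it for strongly tilted boards.

```python
def pattern_coverage_fraction(corners, image_width, image_height):
    """Fraction of the image area covered by the corners' bounding box."""
    if not corners:
        raise ValueError("no corners supplied")
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    box_area = (max(xs) - min(xs)) * (max(ys) - min(ys))
    return box_area / (image_width * image_height)


def check_capture_set(corner_sets, image_width, image_height,
                      min_images=10, min_coverage=0.20):
    """Return a list of human-readable problems; empty means all checks pass."""
    problems = []
    if len(corner_sets) < min_images:
        problems.append(f"only {len(corner_sets)} images; need at least {min_images}")
    for index, corners in enumerate(corner_sets):
        coverage = pattern_coverage_fraction(corners, image_width, image_height)
        if coverage < min_coverage:
            problems.append(f"image {index}: pattern covers {coverage:.0%} of frame")
    return problems


# Made-up example: corner extents for two 640x480 images.
large_pattern = [(100.0, 80.0), (500.0, 80.0), (100.0, 400.0), (500.0, 400.0)]
small_pattern = [(300.0, 200.0), (360.0, 200.0), (300.0, 250.0), (360.0, 250.0)]

problems = check_capture_set([large_pattern, small_pattern], 640, 480)
```

Here the check reports both that the set is too small and that the second image shows the pattern at too small a scale.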

Q5: When should I consider using deep learning for camera calibration instead of traditional methods?

Deep learning-based calibration offers a flexible, target-free alternative to traditional methods, which is particularly useful in specific scenarios [28].

Table: Traditional vs. Deep Learning-Based Calibration

| Feature | Traditional Methods (e.g., Checkerboard) | Deep Learning-Based Methods |
|---|---|---|
| Requirements | Requires a physical calibration target and controlled capture process [28]. | Can be trained to work from a single image without a physical target [28]. |
| Automation | Requires manual intervention for capturing and processing pattern images. | Can enable full self-calibration "in the wild" after the model is trained [28]. |
| Ideal Use Case | Controlled laboratory environments, static cameras, and when a high-precision physical target can be used [24] [28]. | Dynamic environments (e.g., autonomous vehicles, drones), large-scale deployments where manual calibration is infeasible, or calibration from internet images [15] [29] [28]. |
| Primary Challenge | Cumbersome manual process; impractical for frequent recalibration or wild images [28]. | Requires large, diverse datasets for training; accuracy can be affected by domain shift [29] [28]. |

There are two primary learning paradigms in deep learning-based calibration [28]:

- Regression-based Calibration: The network directly estimates a vector of camera parameters (e.g., focal length, distortion coefficients) from an input image.

- Reconstruction-based Calibration: The network learns a pixel-level mapping to directly output a corrected image, without explicitly predicting camera parameters.

Q6: What are the essential materials needed for a standard checkerboard-based calibration experiment?

For a traditional calibration experiment, you will need the following materials and tools.

Table: Research Reagent Solutions for Checkerboard Calibration

| Item | Function | Key Specifications |
|---|---|---|
| Checkerboard Pattern | A physical calibration target with known dimensions. Provides known 3D points (corners) and their corresponding 2D image projections. | Must be printed on a flat, rigid surface. The number of inner corners (e.g., 9x6, 7x6) must be specified. Square size must be known and consistent [27] [24]. |
| Fixed-Focus Camera | The imaging device to be calibrated. | Autofocus and auto-zoom must be disabled. The camera settings (focus, zoom) must remain constant throughout image capture [27]. |
| Stable Mounting Setup | A tripod or rig to hold the camera steady. | Minimizes motion blur and ensures a consistent camera position during image capture. |
| Adequate Lighting | A well-lit, uniform illumination source. | Ensures clear pattern detection with sharp corners and minimizes shadows and glare on the pattern [27]. |

Workflow Visualization

The following diagram illustrates the logical workflow and decision process for troubleshooting camera calibration in a scientific research context, integrating both traditional and deep learning approaches.

Figure: Calibration Troubleshooting Workflow

Frequently Asked Questions (FAQs)

Q1: Why can't I use a standard pinhole camera model to calibrate my fisheye lens?

The extreme distortion produced by a fisheye lens, which enables a field of view (FOV) of 180 degrees or more, cannot be accurately modeled by the standard pinhole model. The pinhole model is generally accurate only for FOVs up to about 95°. For fisheye lenses, you must use a specialized fisheye camera model, such as the Scaramuzza model (for FOVs up to 195°) or the Kannala-Brandt model (for FOVs up to 115°), which use different mathematical projections to map the wide-angle view [30].
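A short numerical illustration of why the pinhole model breaks down: under perspective projection the image radius grows as f · tan(θ), which diverges as the ray approaches 90° off-axis (a 180° full FOV), whereas an ideal equidistant fisheye projection grows only as f · θ. The equidistant model is used here purely as a simple stand-in for a fisheye projection; it is not the Scaramuzza or Kannala-Brandt model, and the focal length is a made-up value.

```python
import math


def pinhole_radius(focal_px, theta_rad):
    """Image radius under the pinhole (perspective) model: r = f * tan(theta)."""
    return focal_px * math.tan(theta_rad)


def equidistant_radius(focal_px, theta_rad):
    """Image radius under the ideal equidistant fisheye model: r = f * theta."""
    return focal_px * theta_rad


focal_px = 300.0  # made-up focal length in pixels

for half_fov_deg in (30, 60, 85, 89):
    theta = math.radians(half_fov_deg)
    print(f"{half_fov_deg:>2} deg off-axis: "
          f"pinhole r = {pinhole_radius(focal_px, theta):9.1f} px, "
          f"equidistant r = {equidistant_radius(focal_px, theta):6.1f} px")
```

Running the loop shows the pinhole radius growing without bound near 90° while the equidistant radius stays within a few hundred pixels, which is why wide-angle rays cannot be placed on a finite sensor by a perspective model.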

Q2: What is the best pattern to use for calibrating a fisheye camera?

While checkerboard and dot patterns are common, a line-pattern is often recommended for fisheye calibration, particularly when distortion is severe. Because the lines become strongly curved in the image, they provide rich information for grouping points and calculating distortion parameters. This can make the process of grouping detected points into lines more robust compared to other patterns [31].

Q3: My multi-camera system doesn't have hardware sync. Can I still synchronize the videos?

Yes, software-based synchronization methods exist that do not require hardware triggers. One novel approach involves recording a "time-calibrated video" featuring specific markers and a uniformly moving ball before capturing your target scene. This video establishes a global time reference, allowing you to extract the temporal relationship between the local time systems of different cameras and align the sequences to a unified time reference, achieving synchronization at the subframe level [32].

Q4: After calibration, the edges of my undistorted image are still curved. What went wrong?

This is a common challenge and often indicates that the calibration model needs adjustment. You can try:

- Increasing the number of coefficients in your distortion model (e.g., in a rational model, adding denominator coefficients) so the model can correct more complex distortions [33].

- Using multiple calibration images where the pattern is placed at different locations and orientations, especially covering the edges and corners of the field of view. This provides more data for the calibration algorithm [30] [33].

- Ensuring the calibration pattern is rigid and flat. A bent pattern will introduce errors [33].
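The effect of adding distortion coefficients can be seen with the common radial distortion forms: a polynomial factor 1 + k1·r² + k2·r⁴ + k3·r⁶, and a rational factor that divides one such polynomial by another. The sketch below is illustrative only; the coefficient values are made up, and the exact parameterization used by your calibration tool may differ.

```python
def polynomial_radial_factor(r, k1, k2, k3=0.0):
    """Radial scale factor 1 + k1*r^2 + k2*r^4 + k3*r^6."""
    r2 = r * r
    return 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3


def rational_radial_factor(r, k1, k2, k3, k4, k5, k6):
    """Rational scale factor: a polynomial numerator over a polynomial denominator."""
    r2 = r * r
    numerator = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    denominator = 1.0 + k4 * r2 + k5 * r2 ** 2 + k6 * r2 ** 3
    return numerator / denominator


def distort_point(x, y, factor_fn, **coefficients):
    """Apply radial distortion to a normalized image point (x, y)."""
    r = (x * x + y * y) ** 0.5
    scale = factor_fn(r, **coefficients)
    return x * scale, y * scale


# Made-up coefficients; real values come from your calibration output.
centre_pt = (0.1, 0.0)
edge_pt = (1.0, 0.0)

two_term_edge = distort_point(*edge_pt, polynomial_radial_factor, k1=-0.30, k2=0.10)
three_term_edge = distort_point(*edge_pt, polynomial_radial_factor,
                                k1=-0.30, k2=0.10, k3=-0.05)
two_term_centre = distort_point(*centre_pt, polynomial_radial_factor, k1=-0.30, k2=0.10)
three_term_centre = distort_point(*centre_pt, polynomial_radial_factor,
                                  k1=-0.30, k2=0.10, k3=-0.05)
```

With these values the extra k3 term moves the edge point noticeably while leaving the near-centre point almost unchanged, which is why residual curvature at the image edges often points to a model with too few coefficients.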

Troubleshooting Guides

Issue: Poor 3D Reconstruction from Multi-Camera Fisheye System

Possible Causes and Solutions:

Cause 1: Incorrect Camera Model

- Solution: Verify that you are using a fisheye-specific camera model (e.g., Scaramuzza, Kannala-Brandt) during the calibration process, not the standard pinhole model [30].

Cause 2: Inaccurate or Insufficient Calibration Data

- Solution: Ensure your calibration pattern covers the entire field of view of each camera, from the center to the very edges. Use many images (e.g., 80-100) with the pattern in different orientations and positions. The pattern must be flat and well-focused so that the detected points are accurate [30] [34] [33].

Cause 3: Improperly Accounted-for Perspective Distortion

- Solution: In complex setups, perspective distortion (from the calibration board not being perfectly parallel to the image plane) can interfere with calculating radial distortion parameters. Use methods that can independently characterize radial and perspective distortion, or take care to minimize perspective effects when capturing calibration images [31].

Issue: Lack of Temporal Synchronization in Multi-Camera Setup

Possible Causes and Solutions:

Cause 1: No Common Time Reference

- Solution: Implement a synchronization method. If hardware sync is unavailable, use a software-based approach. The "time-calibrated video" method provides a robust global time reference by displaying known time markers recorded by all cameras simultaneously [32].

Cause 2: Frame-Level Alignment is Not Sufficient

- Solution: Advance to subframe-level synchronization. After establishing a frame-level alignment, use interpolation algorithms to calculate the timing between frames. This is crucial for accurately analyzing fast movements, such as those in animal behavior, where even a one-frame difference can cause misalignment [32].
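To make the idea of subframe alignment concrete, the sketch below estimates the time offset between two cameras from their observations of a ball moving at a known constant speed. It is a simplified illustration under stated assumptions (known speed, positions already measured in metres along the track, identical frame rates), not the algorithm from [32]; all function names and numbers are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit ys ~ intercept + slope * xs."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def frame_zero_time(frame_indices, ball_positions_m, ball_speed_m_s):
    """Global time (s) at which a camera captured its frame 0.

    Assumes the ball moves at constant, known speed from position 0 at
    global time 0, so global_time = position / speed.
    """
    times = [p / ball_speed_m_s for p in ball_positions_m]
    intercept, _seconds_per_frame = fit_line(frame_indices, times)
    return intercept


# Made-up observations: ball at 0.5 m/s, both cameras at 100 fps,
# camera B started 3.4 ms after camera A.
speed = 0.5
frames = [0, 1, 2, 3, 4]
cam_a_positions = [speed * (0.0200 + k / 100.0) for k in frames]
cam_b_positions = [speed * (0.0234 + k / 100.0) for k in frames]

offset_s = (frame_zero_time(frames, cam_b_positions, speed)
            - frame_zero_time(frames, cam_a_positions, speed))
offset_frames = offset_s * 100.0  # offset expressed in frames at 100 fps
```

The recovered offset is a fraction of a frame, which is the kind of residual that frame-level alignment alone cannot remove.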

Experimental Protocols

Protocol 1: Calibrating a Fisheye Lens Using a Checkerboard Pattern

This protocol is based on common procedures in tools like the MATLAB Computer Vision Toolbox [30].

1. Acquire Calibration Images:

- Use a high-contrast, rigid, flat checkerboard pattern.

- Capture enough images (e.g., 20-50) where the pattern is visible across the entire field of view of the fisheye camera—center, edges, and corners.

- Vary the tilt, rotation, and distance of the pattern relative to the camera.

2. Detect Checkerboard Points:

- Use a function like detectCheckerboardPoints, which can detect the corners of the checkerboard squares even under high distortion. Ensure the function is set to handle 'HighDistortion' [30].

3. Generate World Coordinates:

- Define the world coordinates of the checkerboard corners based on the known physical size of the squares (e.g., 20 mm) [30].

4. Estimate Camera Parameters:

- Input the detected image points and world points into a function like estimateFisheyeParameters, along with the image size. This function will compute the intrinsic and extrinsic parameters of the camera using a fisheye model [30].

5. Evaluate and Undistort:

- Check the calibration errors using the output from the estimation function.

- Use the undistortFisheyeImage function with the obtained parameters to remove lens distortion from your images. You can adjust the 'OutputView' and 'ScaleFactor' to control the composition of the resulting image [30].
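For readers who script their pipeline in Python rather than MATLAB, step 3 of this protocol (generating world coordinates) can be sketched as follows. The function name is hypothetical, and the row-by-row ordering is an assumption: the world points must be listed in the same order in which your corner detector returns the image points, so check that against your tool before use.

```python
def checkerboard_world_points(inner_cols, inner_rows, square_size_mm):
    """World coordinates (x, y, z) of inner corners on a flat board, z = 0.

    Points are listed row by row, starting at the board's origin corner.
    """
    return [(col * square_size_mm, row * square_size_mm, 0.0)
            for row in range(inner_rows)
            for col in range(inner_cols)]


# 9x6 inner corners with 20 mm squares, matching the examples in the text.
world_points = checkerboard_world_points(9, 6, 20.0)
```

Because the board is planar, every point has z = 0, and the physical square size sets the scale (here millimetres) of the estimated extrinsic translations.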

Protocol 2: Synchronizing a Multi-Camera System Without Hardware Triggers

This protocol is based on the subframe-level synchronization method described in recent research [32].

1. Record a Time-Calibrated Video:

- Before the main experiment, have all cameras record a common "time-calibrated video."

- This video should contain easily detectable temporal markers (e.g., a counter) and a uniformly moving object (e.g., a ball on a ramp) to establish a global time reference.

2. Unify Time Coordinate Systems:

- For each camera, detect the time-calibration information (markers, ball position) in its recorded video.